Acknowledgements:

I would like to thank Apart Research for organizing the Alignment Jam hackathon where I wrote this. Thanks to Javier Prieto for the systemic inefficiency discussion we had at an EAGx which led to this.

Background and Epistemic Status:

This report was written over a weekend at an AI Governance hackathon for the problem statement on proposing governance ideas to minimize the risks of large training runs. A lot of the specifics are arbitrary and just proposal ideas.

I think the most valuable thing here, and a deliberate aim on my part, is a system that satisfies each party's incentives individually but, once all the parts are put together, produces an inefficient ecosystem that acts as a counter to sudden capability jumps.

Introduction

We propose an administrative body, the National Artificial Intelligence Regulatory Authority (NAIRA), to regulate and monitor AI development and to engage stakeholders. It would make safety a competitive game between labs, leading to the development of better safety standards.

Prompt= “Epic AI regulation council with bureaucrats sitting all around a table”

Salient considerations:

For this policy brief, we will stick to existential risks from AGI and keep the discussion of AGI misuse to a minimum. We propose recommendations from the standpoint of the US national government (primarily because the US is seen as the leader in the AI space today).

To better understand the feasibility, let us discuss the incentive structures of the two most crucial stakeholders here: the US Government and the tech companies involved.

From a US Government standpoint, what are the outcomes it would want to avoid?

- A foreign power developing more capable AI systems and/or becoming the centre of innovation

- Non-state actors developing AI in particularly harmful ways

- Any sort of organisation (including US labs/ tech companies) accidentally stepping on an irreversible tripwire, causing a major threat to humanity’s existence (a unilateralist's curse scenario)

Naturally, any mandate it takes up would be a balancing act between these outcomes. Broadly speaking, all countries are racing to get the most advanced AI systems first. Speaking about AI in 2017, Russian President Vladimir Putin said: “Whoever becomes the leader in this sphere will become the ruler of the world.”

What do companies want, then?

- To be the first to deploy advanced AI systems to market, because they want to maximise profit on a unit-economics basis

- To keep products generally safe enough that they can be sold repeatedly in the same market

PROPOSAL

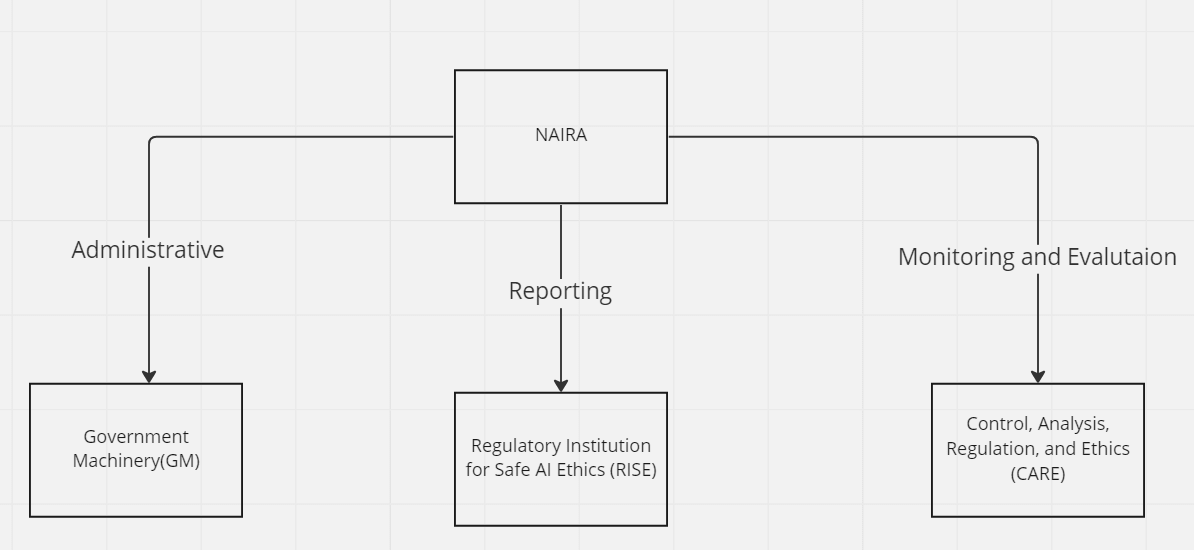

We propose a government body called NAIRA (National Artificial Intelligence Regulatory Authority) consisting of three sub-bodies:

1. Government Machinery (GM):

To manage the incentivisation of alignment research, check the compliance of models with current safety standards, handle paperwork, collaborate with foreign governments and bodies, and formulate AI and AI-adjacent policies (such as the CHIPS Act). This is the administrative, enforcing and monitoring part of the body.

2. Regulatory Institution for Safe AI Ethics (RISE) committee:

A committee comprising stakeholders from the government, policy teams of leading and smaller budding AI labs, and public interest groups. This is the reporting and formal voting part of the body.

3. Control, Analysis, Regulation, and Ethics (CARE) committee:

A committee that determines whether a model complies with safety benchmarks. It consists of international safety inspectors (such as from the International Telecommunication Union), major alignment labs (MIRI, ARC) and government appointees. This is the monitoring, evaluation and compliance part of NAIRA.

NAIRA is a national-level governing body, operating under Administrative Procedure Act processes, that oversees the regulation and development of artificial intelligence technologies within the United States so that it can respond dynamically to the development of AGI. It aims to promote the responsible use of AI, mitigate existential risks, and ensure the ethical use of AI for the betterment of society within the country.

NAIRA would rank each model it evaluates and assign it a safety score, which must be displayed on the product.

Mandates and Scope of this Body:

- Protect consumers against dangerous goods, services and other malpractices, in line with the code of the Bureau of Consumer Protection under the Federal Trade Commission

- Promote AI safety and alignment research through a granting program funded by a collective security trust fund

- Formulate and advance national AI policy and strategy

- Run a risk office that maintains a log of AI safety incidents

- Establish technical safety standards and benchmarks by acting as a third-party auditing mechanism

- Through the GM and CARE, monitor, inspect and enforce safety guidelines on AI systems

- Enforce penalties on non-compliant actors

- Develop better ways to assess progress

- Track the progress and assess the maturity of capabilities in specific domains

- Identify and mitigate adverse effects through task-oriented regulatory craftsmanship that focuses on specific harms and targets policies at the behaviours that cause them

Funding:

As a new department, it should be given a starting budget benchmarked at 10% above the current Bureau of Consumer Protection budget, subject to review.

Timeline:

(for first-year establishment)

| Time | Goals |
| --- | --- |
| First 2 Quarters | Identification of the safety standards to be developed, a base set of principles to be included, a timeframe for resolution, and the industry and public members of the standards development group. |
| 3rd Quarter | Put the above out for public comment; determine criteria for Leading AI Labs and the constitution of the CARE committee |
| 4th Quarter | Incorporate feedback and develop enforcement standards |

Pathway to implementation:

a) Formed through an act of Congress as a separate federal department, or

b) Housed within an existing agency, such as the FCC or the FTC

c) Parts of this can also be constituted via an executive order signed by the President

How would this work?

Basic idea:

The problem right now is that safety isn’t taken seriously. There are clear economic incentives to develop AI systems in a fast and dirty way. Moreover, the field is anti-democratic by nature because it is capital-expenditure (CAPEX) intensive, so only a few well-funded actors can take part. We are essentially trying to implement a model where safety becomes a point of competition between labs. To maximise effectiveness, NAIRA should embrace, as a new regulatory model, the kind of standards-setting activities that the digital industry itself utilises to define technical codes. NAIRA would also run a granting program to incentivise research on AI alignment at top universities.

Working:

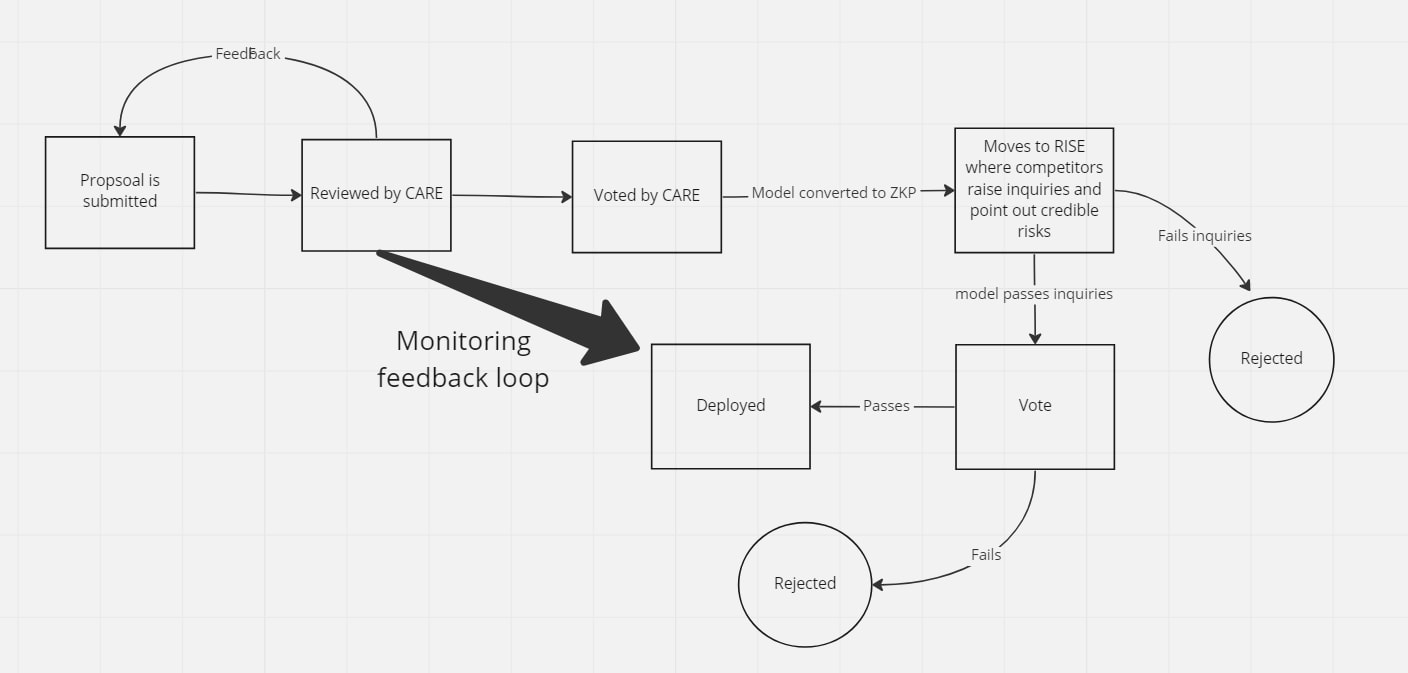

Suppose a leading AI lab wants to release a new model. It would first need the approval of the CARE Committee (bypassing this process would result in heavy fines and penalties for the lab, akin to those for releasing a drug without FDA approval). The CARE Committee would vote on the safety standards of the model (and the data set) and propose recommendations. After this, the CARE Committee would vote, by a ⅔ majority, to pass the model on to the RISE Committee.

The RISE Committee would, in consultation with the CARE Committee, then deliberate on this proposal. The exciting part here is that, to prevent any models from being shown to competitors, all models will be forwarded to RISE in the form of zero-knowledge proofs (or other encrypted information-querying mechanisms) along with a basic idea of the architecture. Competitors can query trial prompts and raise inquiries and concerns to probe the model indirectly. The competitors would then be incentivised to poke holes and develop higher safety bars for the model (to keep their competitor’s model off the market, while knowing they would be subject to the same standards in the future).

This sets up a competitive safety and alignment standards game where you can stifle your competitor without having the best model. If the vote in the RISE passes, the CARE committee would be tasked with setting up a monitoring and evaluation feedback loop to keep an eye on the model’s behaviour. NAIRA would also incentivise alignment work by giving out grants, as mentioned in its mandate.
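
The approval sequence described above (CARE supermajority, then RISE vote, then monitoring) can be sketched as a simple gate function. This is only an illustration: the function and enum names are hypothetical, and reading "⅔" as "at least two thirds of votes cast" is an assumption, since the proposal does not say how abstentions or exact thresholds are handled.

```python
from enum import Enum
from fractions import Fraction


class Outcome(Enum):
    REJECTED_BY_CARE = "rejected by CARE"
    REJECTED_BY_RISE = "rejected by RISE"
    APPROVED_WITH_MONITORING = "approved, CARE monitoring begins"


# The two-thirds figure comes from the proposal; treating it as
# "at least 2/3 of votes cast" is an assumption of this sketch.
CARE_THRESHOLD = Fraction(2, 3)


def care_passes(votes_for: int, votes_cast: int) -> bool:
    """Return True when the CARE vote reaches the two-thirds threshold."""
    if votes_cast <= 0:
        raise ValueError("at least one vote must be cast")
    # Fractions avoid floating-point rounding right at the threshold.
    return Fraction(votes_for, votes_cast) >= CARE_THRESHOLD


def review_release(care_for: int, care_cast: int, rise_approves: bool) -> Outcome:
    """Walk a proposed model release through the CARE and RISE gates."""
    if not care_passes(care_for, care_cast):
        return Outcome.REJECTED_BY_CARE
    if not rise_approves:
        return Outcome.REJECTED_BY_RISE
    # A release that clears both votes still goes under CARE monitoring.
    return Outcome.APPROVED_WITH_MONITORING
```

For example, `review_release(6, 9, True)` clears both gates, while `review_release(5, 9, True)` stops at CARE because five of nine votes is below two thirds.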

In case of a perceived potential threat, labs that are members can ask for an evaluation, resources and assistance from the other council members, with some degree of shared accountability among members.

A final aspect of RISE is that each voting member will be required to submit a risk assessment report of the field to the government body, which the GM would use to publish its yearly public risk assessment report.

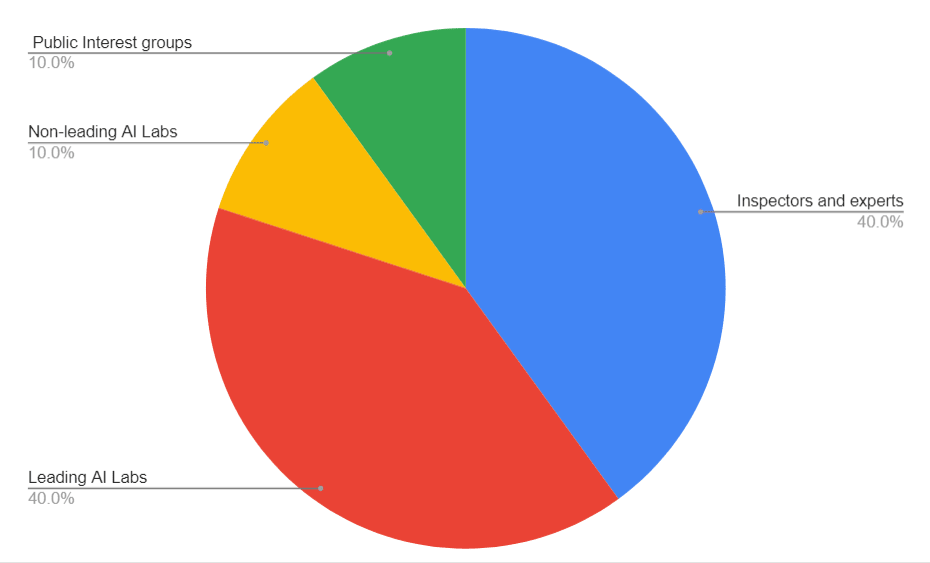

RISE Voting Structure:

| Party | Vote Share |
| --- | --- |
| Government-appointed inspectors and experts | 40% |
| Leading AI Labs (defined using market share, size and complexity of models; standards decided by GM) | 40% (to be split proportionately) |
| Non-leading AI Labs | 10% |
| Representatives from Public Interest groups | 10% |
| Tie-breaker | International Inspector from the UN who sits on CARE |
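
To make the vote shares concrete, here is a minimal sketch of how a weighted RISE tally could be computed. Several details are assumptions not specified in the proposal: that a motion passes with more than half of the total weighted vote, that each bloc's share is split by a relative weight set by the GM, that abstentions count as "no", and that the tie-breaker is consulted only at exactly 50%. All member names are hypothetical.

```python
from fractions import Fraction

# Bloc shares taken from the voting table above.
BLOC_SHARE = {
    "government": Fraction(40, 100),
    "leading_labs": Fraction(40, 100),
    "non_leading_labs": Fraction(10, 100),
    "public_interest": Fraction(10, 100),
}


def member_weights(members):
    """Map each member to their share of the total RISE vote.

    `members` maps bloc name -> {member name: relative integer weight}.
    Splitting a bloc by relative weight is an assumption of this sketch.
    """
    weights = {}
    for bloc, roster in members.items():
        total = sum(roster.values())
        if total <= 0:
            raise ValueError(f"bloc {bloc!r} has no voting weight")
        for name, relative in roster.items():
            weights[name] = BLOC_SHARE[bloc] * Fraction(relative, total)
    return weights


def tally(weights, yes_voters, tie_breaker_yes):
    """Return True if the motion passes (assumed: simple weighted majority)."""
    yes = sum(weights[name] for name in yes_voters)
    half = Fraction(1, 2)
    if yes == half:
        # Exact tie: defer to the international inspector's vote.
        return tie_breaker_yes
    return yes > half


# Hypothetical membership, for illustration only.
weights = member_weights({
    "government": {"gov_a": 1, "gov_b": 1},
    "leading_labs": {"lab_x": 3, "lab_y": 1},
    "non_leading_labs": {"lab_z": 1},
    "public_interest": {"ngo": 1},
})
```

With this membership, `lab_x` holds 30% of the total vote and each government appointee holds 20%, so `gov_a` and `lab_x` voting yes together produce an exact tie that the inspector decides.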

Why would RISE work?

It is essentially a game-theoretic model in which individual actors are incentivised to sign up to a system that promotes net inefficiency (think strategic voting) in the AI development pipeline, while also promulgating basic safety checks and making safety standards a competitive game between labs. The main objection here is why these companies would sign up for RISE.

Why would companies sign up for RISE?

Here are the reasons why:

- It gives them access to government resources, which helps especially if something goes wrong

- It allows them to keep a check on their competitors, especially through the reporting and voting mechanism

- It allows them to influence government policy (such as a future CHIPS Act)

- It would be a good PR move

- It offers tax credits that depend on the level of transparency demonstrated by the lab (as determined by the GM)

REFERENCES:

- Wheeler, T. (2022, March 9). A focused federal agency is necessary to oversee Big Tech. Brookings. Retrieved March 25, 2023

- Ho, L., Barnhart, J., Trager, R., Bengio, Y., Brundage, M., Carnegie, A., Chowdhury, R., Dafoe, A., Hadfield, G., Levi, M., & Snidal, D. (2023, July 10). International Institutions for Advanced AI. arXiv.org. https://arxiv.org/abs/2307.04699

- Carlsmith, J. (2022, June 16). Is Power-Seeking AI an Existential Risk? arXiv.org. https://arxiv.org/abs/2206.13353

- Whittlestone, J., & Clark, J. (2021, August 28). Why and How Governments Should Monitor AI Development. arXiv.org. https://arxiv.org/abs/2108.12427
