Total vs average utilitarianism

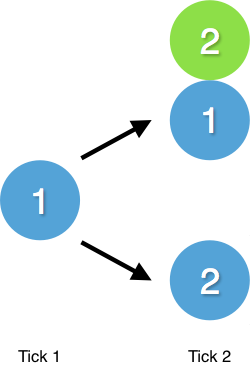

Say you have one utility (think of utility as happiness points) and you have to choose between creating another person who has two utility and increasing your own utility to two.

A total utilitarian would choose the first option, since it generates the most total utility in the next tick (the next moment in time): 1 + 2 = 3 versus 2. An average utilitarian would choose the second option, since it generates the highest average utility in the next tick: 2 versus (1 + 2) ÷ 2 = 1.5.
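To make the arithmetic concrete, here is a minimal Python sketch. It assumes a tick can be represented as a plain list of the utilities of everyone alive at that moment. The function names `moment_total` and `moment_average` are hypothetical labels, not established terminology.

```python
from statistics import mean

def moment_total(utilities):
    """Total (moment) utilitarianism: add up everyone's utility in one tick."""
    return sum(utilities)

def moment_average(utilities):
    """Average (moment) utilitarianism: mean utility of everyone alive in one tick."""
    return mean(utilities)

# The next tick under each option: you keep 1 utility and a new person has 2,
# or you alone rise to 2 utility.
options = {
    "create_person": [1, 2],
    "raise_own": [2],
}

best_total = max(options, key=lambda name: moment_total(options[name]))
best_average = max(options, key=lambda name: moment_average(options[name]))
print(best_total, best_average)  # create_person raise_own
```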

Here the two theories disagree about how we should aggregate utility within a single moment in time. But how should we aggregate utility over a period of time?

Introducing timeline utilitarianism

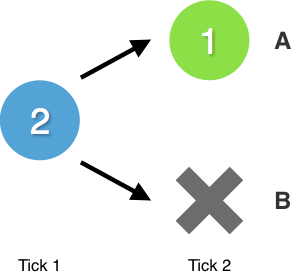

Say you are about to die and you have two utility. You have to choose between just dying and dying while creating another person who has one utility.

Let's look at how we can aggregate the total amount of utility in a timeline.

Timeline A has two ticks. You could aggregate the utility of this timeline by simply adding the two ticks together (2 + 1 = 3). Let's call this method of aggregating "total timeline utilitarianism". By this measure timeline A is a better choice than timeline B, since timeline B has only one tick and therefore only two utility.

You could also aggregate the utility of timeline A by taking the average of the two ticks ((2 + 1) ÷ 2 = 1.5). Let's call this method of aggregating "average timeline utilitarianism". By this measure timeline B is a better choice than timeline A, since timeline B's single tick gives it an average of two utility.
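The same comparison as a minimal Python sketch, assuming each timeline is reduced to a list with one utility value per tick (each tick here contains a single person, so there is nothing to aggregate within a moment). `timeline_total` and `timeline_average` are hypothetical names:

```python
from statistics import mean

def timeline_total(tick_values):
    """Total timeline utilitarianism: add up the utility of every tick."""
    return sum(tick_values)

def timeline_average(tick_values):
    """Average timeline utilitarianism: mean utility per tick."""
    return mean(tick_values)

# Utility per tick: in A you (2) are followed by the new person (1);
# in B you (2) simply die and the timeline ends.
timeline_a = [2, 1]
timeline_b = [2]

print(timeline_total(timeline_a), timeline_total(timeline_b))      # 3 2
print(timeline_average(timeline_a), timeline_average(timeline_b))  # 1.5 2
```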

Combining moment and timeline utilitarianism

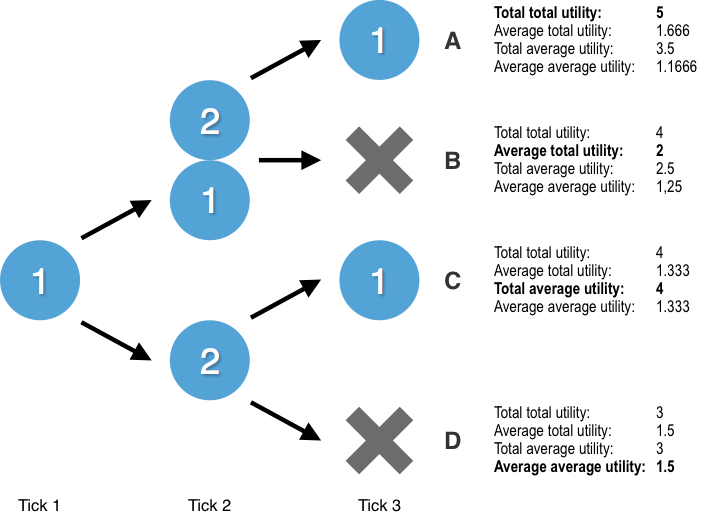

So we have "moment utilitarianism" to look at moments in time and "timeline utilitarianism" to look at the entire timeline. What happens if we combine them? Let's introduce some terms. In "total average utilitarianism" the "total" refers to how we should aggregate the entire timeline. The "average" refers to how we should aggregate the individual moments. I will always mention the timeline aggregation first and the moment aggregation second. There are four different combinations that all make different claims about how we should act.

If we want to maximize total total utility we should choose timeline A. If we want to maximize average total utility we should choose timeline B. If we want to maximize total average utility we should choose timeline C. If we want to maximize average average utility we should choose timeline D.
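The four combinations can be sketched in Python by nesting one aggregation inside the other: first collapse each tick with the moment aggregation, then collapse the resulting per-tick values with the timeline aggregation. The utilities in the figure aren't given in the text, so the timelines below are hypothetical numbers chosen so that each combination picks a different winner. `score`, `MOMENT` and `TIMELINE` are illustrative names.

```python
from statistics import mean

# Moment aggregations: collapse one tick (a list of individual utilities) to a number.
MOMENT = {"total": sum, "average": mean}
# Timeline aggregations: collapse the per-tick numbers to a single score.
TIMELINE = {"total": sum, "average": mean}

def score(timeline, timeline_agg, moment_agg):
    """Score a timeline (a list of ticks) under '<timeline_agg> <moment_agg> utilitarianism'."""
    per_tick = [MOMENT[moment_agg](tick) for tick in timeline]
    return TIMELINE[timeline_agg](per_tick)

# Hypothetical timelines (NOT the ones in the figure): each tick lists everyone alive.
timelines = {
    "A": [[1, 1, 1, 1]] * 3,  # long and crowded, everyone barely happy
    "B": [[2, 2, 2, 2, 2]],   # a single crowded tick
    "C": [[3]] * 3,           # long, one moderately happy person per tick
    "D": [[6]],               # a single tick with one very happy person
}

for t_agg in TIMELINE:
    for m_agg in MOMENT:
        best = max(timelines, key=lambda name: score(timelines[name], t_agg, m_agg))
        print(f"{t_agg} {m_agg}: {best}")
```

Run as written, this should print A for total total, C for total average, B for average total and D for average average. Note that `statistics.mean` raises an error on an empty list, so this sketch assumes every tick contains at least one person.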

Usually when people talk about different types of utilitarianism they automatically presuppose "total timeline utilitarianism". In fact, the current debate between total and average utilitarianism is really a debate between "total total utilitarianism" and "total average utilitarianism". I hope this post has shown that this assumption isn't the only option.

Wrapping up

In reality we have many more options to choose from and we will have to do complicated probability calculations under uncertainty instead of following a simple decision tree. Some might argue that non-existence should count as zero utility. Some might argue for more exotic forms of utilitarianism like median or mode utilitarianism (I hope you don't spend too much time fretting over which of these options is the "correct" form of utilitarianism and adopt something like meta-preference utilitarianism instead). This is just a simplified model to introduce the concept of timeline utilitarianism. In future posts I will expand on this concept and explore how it interacts with things like hingeyness and choice under uncertainty.

Interesting idea!

In light of the relativity of simultaneity (whether A happens before or after B can depend on your reference frame), you might have to just choose a reference frame or somehow aggregate over multiple reference frames, and there may be no principled way to do so. If you just choose your own reference frame, your theory becomes agent-relative, and it may lead to disagreement about what's right between people who share the same moral and empirical beliefs and differ only in the reference frames they choose.

Maybe the point was mostly illustrative, but I'd lean against using any kind of average (including mean, median, etc.) without special care for negative cases. If the average is negative, you can improve it by adding negative lives, as long as they're better than the average.
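A minimal numerical illustration of this point, with made-up utilities: the population's average is negative, and adding a life that is itself negative but less bad than the average raises the mean while lowering the total.

```python
from statistics import mean

# Hypothetical population whose average utility is negative.
population = [-5, -5]
# A new life that is itself bad, but less bad than the current average.
newcomer = -1

before = mean(population)
after = mean(population + [newcomer])

print("average:", before, "->", round(after, 2))  # average rises from -5 to about -3.67
print("total:", sum(population), "->", sum(population + [newcomer]))  # total falls from -10 to -11
```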

Thanks for the post. Coincidentally, I had just been thinking about my strong moral preference for a longer timeline when I saw it.

I feel attracted to total total utilitarianism, but suppose we have N individuals, each living 80 years with the same constant utility U. These individuals can either live more concentrated in time (say, within 100 years) or more scattered (say, across 10,000 years); I strongly prefer the latter (I'd pay some utility for it), even though this runs against any notion of (pure) temporal discounting. My intuition (though I don't trust it) is that, from the "point of view of nowhere", at some point length may trump population; but maybe it's just an ad hoc influence of a strong bias against extinction.
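Under the post's definitions this can be checked with a rough Python sketch. The assumptions are all hypothetical: one tick per year, U = 1, N = 10, lives starting at evenly spaced years, and years in which nobody is alive counted as ticks with zero utility. Total total utility comes out the same for both spreads, so it is indifferent to this preference, while average total utility favors the concentrated timeline, the opposite of the preference.

```python
U = 1  # hypothetical constant utility per person per year

def build_timeline(n, lifespan, span):
    """Return `span` yearly ticks; n lives of `lifespan` years start at evenly spaced years."""
    ticks = [[] for _ in range(span)]
    step = (span - lifespan) / (n - 1) if n > 1 else 0
    for i in range(n):
        start = round(i * step)  # the last life ends exactly at the end of the span
        for year in range(start, start + lifespan):
            ticks[year].append(U)
    return ticks

def total_total(ticks):
    """Sum every tick's total utility."""
    return sum(sum(tick) for tick in ticks)

def average_total(ticks):
    """Mean of the per-tick totals; empty years count as 0 (a contested assumption)."""
    return total_total(ticks) / len(ticks)

for span in (100, 10_000):
    ticks = build_timeline(n=10, lifespan=80, span=span)
    print(span, total_total(ticks), average_total(ticks))
```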

Please let me know of any sources discussing this (I admit I didn't search much for them).

If by "this" you mean timeline utilitarianism, then unfortunately there isn't one (I haven't published this idea anywhere else yet). Once I've finished university I hope some EA institution will hire me to research descriptive population ethics, so hopefully in a couple of years I can provide you with some data on our intuitions about timelines.

I suspect that people more concerned with quality of life will tend to favor average timeline utilitarianism, and that the many people in this community who are so focused on x-risk and life extension, with their stronger preference for quantity of life, might be a minority (anti-deathism is the natural consequence of being a strong total timeline utilitarian).

If you want to read something similar to this then you could always check out the wider literature surrounding population ethics in general.

Personally, I've always understood total utilitarianism to already be across both time and space, as it is often contrasted not just with average utilitarianism but with person-affecting/prior existence views.

Yes, (total) total utilitarianism aggregates across both time and space, but you can aggregate across time and space in many different ways. E.g., median total utilitarianism also aggregates across both time and space, but it does so very differently.
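A small hypothetical illustration of how the timeline aggregation changes the verdict even when the moment aggregation (total) is held fixed. The timeline is invented for the example, and `statistics.median` takes the middle value of the sorted per-tick totals:

```python
from statistics import mean, median

# Hypothetical timeline: one excellent tick followed by two mediocre ones.
# Each inner list holds the utilities of everyone alive in that tick.
timeline = [[5, 5], [1], [1]]

# Moment aggregation: total utility within each tick.
tick_totals = [sum(tick) for tick in timeline]

print("total total:", sum(tick_totals))       # 12
print("average total:", mean(tick_totals))    # 4
print("median total:", median(tick_totals))   # 1
```

The median ignores how good the single excellent tick is, so that tick could become far better without the timeline's median total changing at all.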

Right, I guess what I mean is that in an EA context, I've historically understood total utilitarianism to be total (an integral) across both time and space, rather than total in one dimension but not the other.

I think so too, because you can't really talk about ethics without a timeframe. I wasn't trying to argue that people don't use timeframes, but rather that people automatically use total timeline utilitarianism without realizing that other options are even possible. This was what I was trying to get at by saying:

Got it, I must have just misread your post then! :) Thanks for your patience in the clarification!

The question of how to aggregate over time may even have important consequences for population ethics paradoxes. You might be interested in reading Vanessa Kosoy's theory here, in which she sums an individual's utility over time with an increasing penalty over life-span. Although I'm not clear on the justification for these choices, the consequences may appeal to many: Vanessa herself emphasizes the consequences for evaluating astronomical waste and factory farming.

Hey Bob, good post. I've had the same thought (i.e., that the unit of moral analysis is timelines, or probability distributions over timelines), though with a different formalism.

(In light/practice of advice I've read to just go ahead and comment without always trying to write something super substantive/eloquent, I'll say that) I'm definitely interested in this idea and in evaluating it further, especially since I'm not sure I really thought about this in an explicit way before (since I generally just think "average per each person/entity's aggregate [over time] vs. sum aggregate of all entities," without focusing that much on a distinction between an entity's aggregate over time and that same entity's average over time). Such an approach might have particular relevance under models that take a less unitary/consistent view of human consciousness. I'll have to leave this open and come back to it with a fresh/rested mind, but for now I think it's worth an upvote for at least making me recognize that I may not have considered a question like this before.