Nuclear winter is a topic that many have heard about, but where significant confusion can occur. I certainly knew little about the topic beyond the superficial prior to working on nuclear issues, and getting up to speed with the recent research is not trivial. Even then there are very large uncertainties around the topic, and different teams have come to different conclusions, which are unlikely to be resolved soon.

However, this does not mean that we cannot draw some conclusions on nuclear winter. I worry that the existence of uncertainties has been taken to the extreme of claiming that nuclear winters are impossible, a position I do not believe can be supported. Instead, I would argue that the balance of evidence points to a very serious risk that a large nuclear exchange could lead to catastrophic climate impacts. Given that the mortality from such winters could exceed the direct deaths, the evidence is worth discussing in detail, especially as it seems that we could prepare for and respond to them.

This is a controversial and complex topic, and I hope I have done it justice with my overview. I should, however, stress that the words throughout this article are my own, as are any mistakes.

EDIT OCTOBER 2024

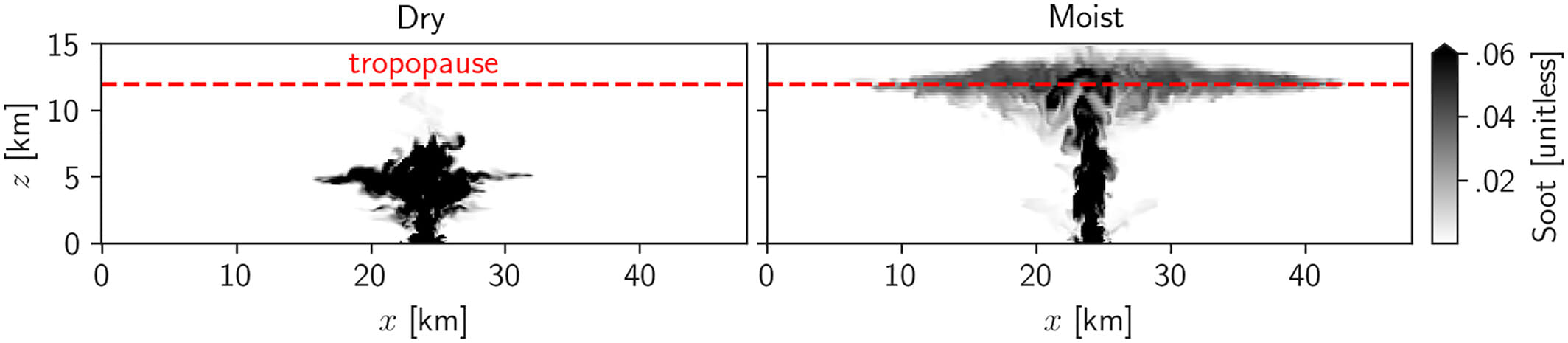

Around one year after this document was published, another study was flagged to me: "Latent Heating Is Required for Firestorm Plumes to Reach the Stratosphere". It raises another very important firestorm dynamic: a dry firestorm plume lofts significantly less than a wet one, because of the latent heat released as water condenses from vapor to liquid, which is the primary process for generating large lofting storm cells. If significant moisture can be assumed in the plume (and this seems likely given the conditions at its inception), lofting is therefore much higher.

The Los Alamos analysis only assesses a dry plume - and this may be why they found so little risk of a nuclear winter. In the words of the authors of the study on dry/wet lofting: "Our findings indicate that dry simulations should not be used to investigate firestorm plume lofting and cast doubt on the applicability of past research (e.g., Reisner et al., 2018) that neglected latent heating". This is a pretty serious blow to the models that suggest that there is a low risk of a winter - if firestorms form.

Lofting with and without moisture included - it's not a subtle difference.

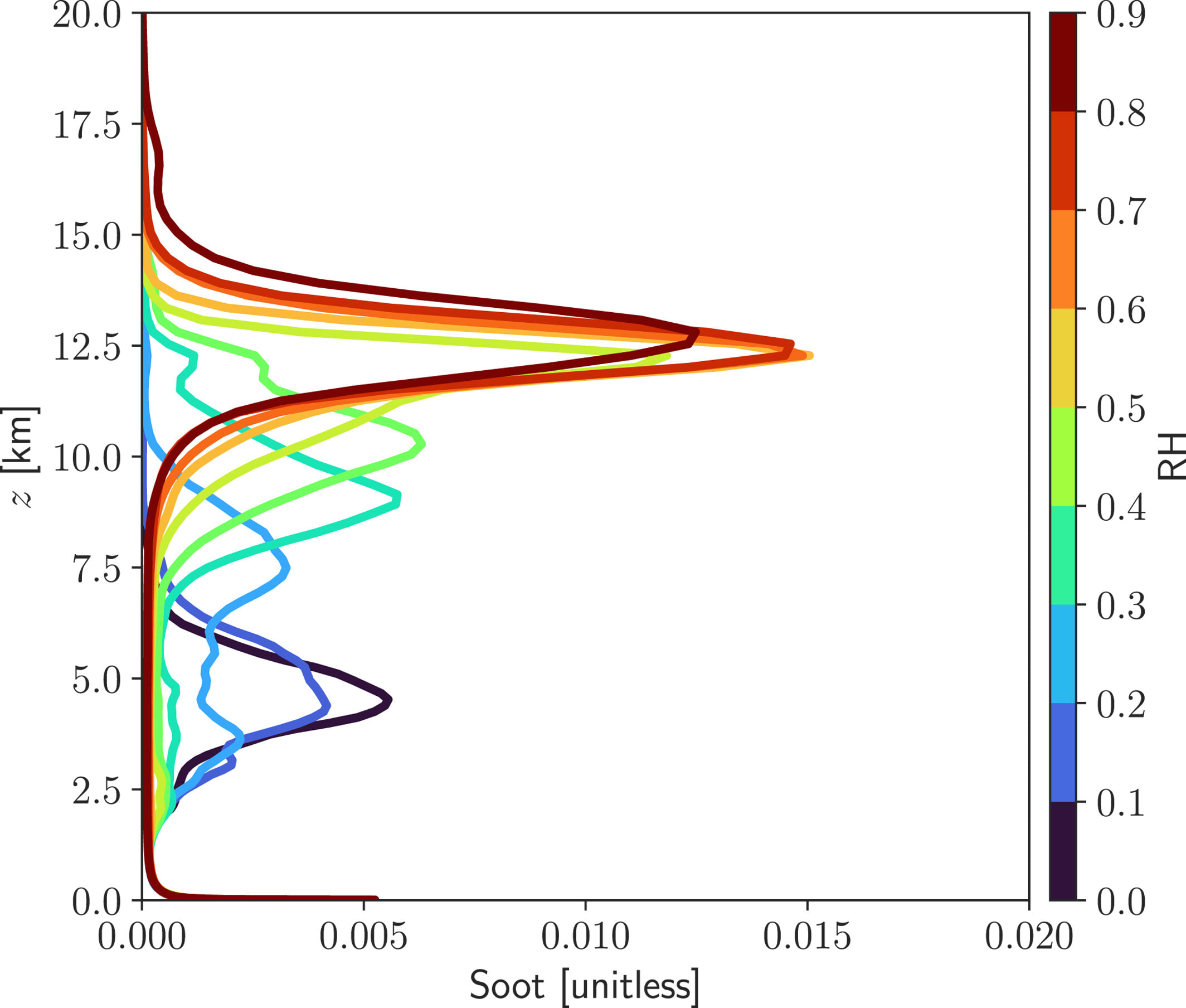

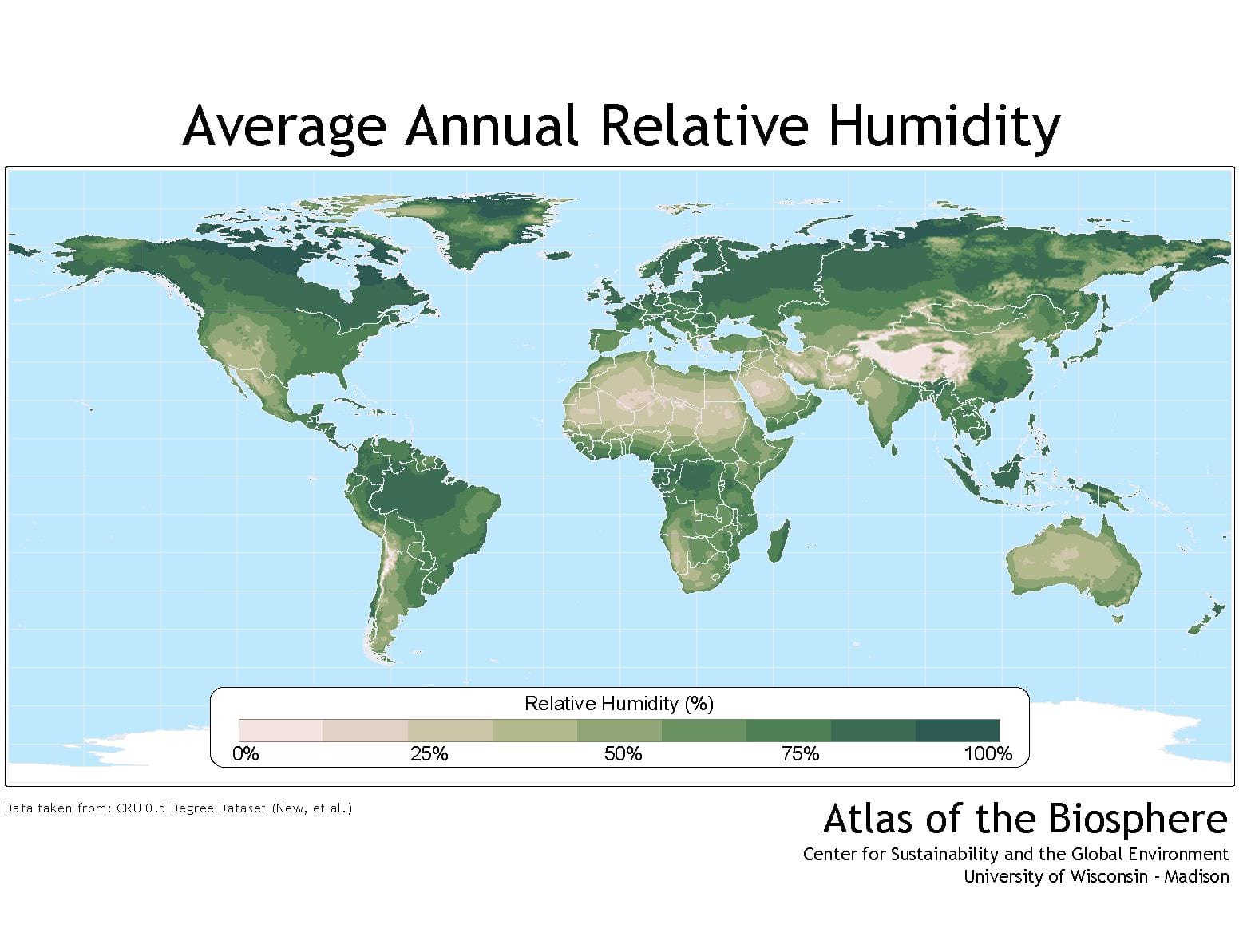

Per the paper, you only need a relative humidity of ~50% or more to inject soot into the stratosphere.

Unfortunately, most of the world has this for most of the year.

This has pushed me further towards being concerned about nuclear winter as an issue. The finding should also be considered in the context of other analyses that rely upon the Reisner et al. studies originating at Los Alamos (at least until these dynamics can be added to their models), such as:

I highlighted in this article how complex the dynamics of nuclear winter are, and this is another example of that complexity in effect: excluding a phase transition from your fluid dynamics can produce an entirely different conclusion for the full system. It also seems to be a case where additional model complexity resulted in poorer accuracy than other efforts, as the model included some but not all of the relevant fluid dynamics and seemingly ended up further from the truth. I have updated this post in a few places as a result, and I may also publish a second, follow-up post with more details, as it is an important consideration.

Headline results, for those short on time

This is a very long post, and for those short on time the thrust of my argument is summarized here. I’ve also included the relevant section(s) in the post that back up each point for those who wish to check my working on a specific piece of analysis.

- Firestorms can inject soot effectively into the stratosphere via their intense plumes. Once there, soot could persist for years, disrupting the climate (see “The case for concern: Firestorms, plumes and soot”).

- Not every nuclear detonation would create a firestorm, but large nuclear detonations over dense cities have a high risk of causing them (see “The case for concern: Firestorms, plumes and soot”).

- Not every weapon in arsenals will be used, and not all will hit cities. However, exchanges between Russia and NATO have the potential to result in hundreds or thousands of individual detonations over cities. This has the potential to result in a large enough soot injection that catastrophic cooling could occur (see “Complexities and Disagreements: Point one”).

- The impacts of soot injections on the climate are nonlinear, and even if the highest thresholds in the literature are not met, there could still be serious cooling. My personal estimate is that around 20-50 Tg of soot could be emitted in the event of a full-scale exchange between Russia and NATO with extensive targeting of cities. This is less than the upper threshold of 150 Tg from other sources; however, it would still cause catastrophic crop losses without an immediate and effective response (see “Synthesis”).

- There are disagreements between the teams on the dynamics of nuclear winters, which are complex. However, to date these disagreements center on a regional exchange of 100 weapons of 15 kt each, much smaller than Russian and NATO strategic weaponry. Some of the points raised in the debate are therefore far less relevant to larger exchanges, where fuel loads would be higher, weapons much more powerful and firestorms more likely (see “Complexities and Disagreements: The anatomy of the disagreements”, followed by “Synthesis”).

- This means that nuclear winter is not discredited as a concept, and is very relevant for the projected mortality of nuclear conflict. There are uncertainties, and more research is needed (and possibly underway), however it remains a clear threat for larger conflicts in particular (see “Synthesis”).

Overall, although highly uncertain, I would expect something like 2-6°C of average global cooling around 2-3 years after a full-scale nuclear exchange. This is based on my best guesses of the fuel loading and combustion, and of the percentage of detonations resulting in firestorms. I would expect such a degree of cooling to have the potential to cause many more deaths (by starvation) than the fire, blast and radiation from the detonations.

This is a similar conclusion to others who have looked at the issue, such as Luisa Rodriguez and David Denkenberger.

It differs from Bean, who appears to believe that the work of the Rutgers team must be discarded, and that only limited soot would reach the stratosphere in a future war. I believe some of their points may be valid, particularly in the context of exchanges of 100 detonations of 15 kt weapons, and in highlighting that the dynamics of a future conflict must be considered. However, catastrophic soot injections seem highly likely if 100+ firestorms form, which is a significant risk in larger exchanges with higher-yield weapons. This conclusion holds even if you are skeptical of the work of the Rutgers team.

Introduction

The Second World War resulted in somewhere between 70-85 million deaths, or around 3% of the world’s population at the time. The rate of these deaths peaked towards the end of the war, but averaged in the range of 30,000-40,000 lives lost per day over the six years of conflict. It is hard to really grasp the scale of this: Neil Halloran has a really moving video that comes closest to laying it out in a way that humanizes the statistics, but I would argue that so many deaths will always defy comprehension in some ways.

It is unknown how many would die in a full nuclear exchange between Russia and NATO, but plausible estimates reach into the hundreds of millions within the first three weeks. The majority of these deaths would occur in the opening hours due to fires and blast damage, followed by the deaths of those injured by the searing heat or trapped in the rubble, unable to be saved by a rescue that would likely never come. Fallout deaths would follow after around a week, particularly where nuclear weapons were detonated at ground level. Countries with many detonations on their territory would likely struggle to restore even basic services, further raising the tally. Again, it is hard to visualize what a nuclear detonation could do to a dense city, but Neil Halloran made two more videos on the topic: the first models the impact of a single detonation on a city, and the second projects the impact of a full exchange. Both are very much worth watching.

What would likely follow could be an order of magnitude worse. Nuclear winter refers to the hypothesis that soot and other material generated by cities burning after nuclear detonations would reach the upper atmosphere, absorbing part of the sunlight that would otherwise have reached the surface. Depending on the size of the conflict, the weapon targeting and the model in question, this soot could cause cooling ranging from a few degrees Celsius to a double-digit decline in average surface temperatures. We are rightly very concerned about warming on the order of single degrees caused by anthropogenic climate change over decades; a nuclear winter of those magnitudes over just a few years would have catastrophic implications for the climate and threaten the survival of many ecosystems.

However, questions have been raised about whether nuclear winters of the magnitudes shown below are possible. The nuclear winter hypothesis is based on models and estimates: fortunately, we do not have direct observations, and some who have looked at the issue have come to different conclusions. To return to the example of Neil Halloran, he later issued a video partly retracting his earlier work on the impacts of nuclear winters, as he felt his earlier conclusions were too strong.

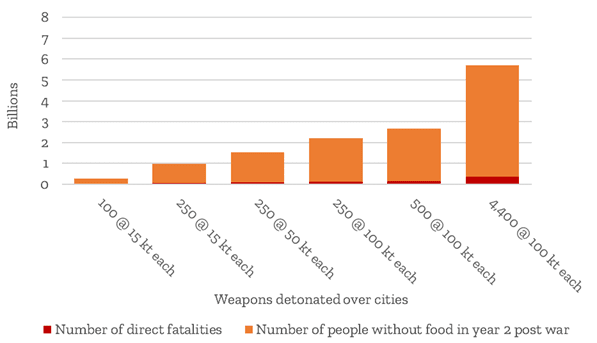

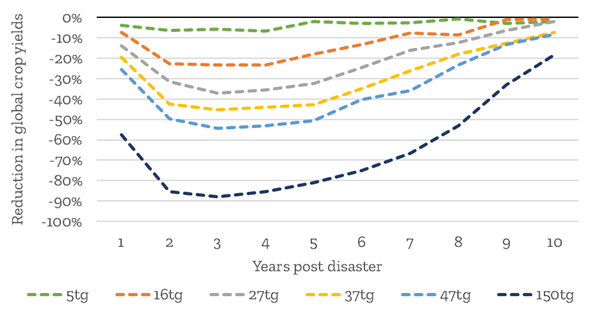

Values taken from Xia et al. 2022[1]: potential mortality in a nuclear conflict assuming no effective food system response and a nuclear winter in line with the projections of the Rutgers team’s analysis (more on this later in the “Complexities and disagreements” section). It should also be stressed that the dire projections for starvation assume no effective food system response, and are not destiny. In fact, a number of effective steps that could greatly reduce deaths by starvation have already been identified, both in advance and in response.

The initial 1983 projections for the severity of nuclear winters, which estimated cooling of close to 20°C with a rapid onset and comparatively short duration[2], were certainly too pessimistic in some regards, and the picture has evolved over time. However, this does not mean that nuclear winter is discredited entirely. There are significant uncertainties, but the statement that nuclear winter has been disproven is far too strong, and even a “mid” range nuclear winter would result in the largest shock our modern food system has ever seen.

This article will seek to discuss these elements in detail, where analysis agrees and disagrees, and where that leaves us in terms of the threat of nuclear winter.

The core argument for a nuclear winter reduces to the following:

- Nuclear detonations over cities will start firestorms and serious conflagrations, generating large amounts of soot.

- The size of these fires would inject the soot into the stratosphere (~12 km and above).

- Once into the stratosphere and above, this soot would only be cleared slowly by natural processes, and there is no feasible technology we could deploy to speed up the process, especially following a destructive war with little to no notice.

- Soot in the stratosphere would absorb part of the sunlight that would otherwise reach the earth’s surface.

- Nuclear conflicts may involve hundreds or thousands of weapons detonated over cities. At this scale, the soot emitted would reduce temperatures and average rainfall for years to come, severely disrupting agriculture and ecosystems.

As background, nuclear winter is an example of an abrupt sunlight reduction scenario (or ASRS[3]): an event that absorbs or scatters incoming sunlight to a significant degree. There are other reasons to prepare for ASRSs, including the ongoing risks of volcanic eruptions, asteroids and comets. However, if nuclear winter remains a risk, that makes the task far more pressing, given the very serious probability[4] of a nuclear exchange and the number of lives that would be threatened by disruptions to their food supplies. It matters if a nuclear conflict places billions of lives at risk of starvation: even if agricultural interventions cannot save the lives lost in the initial conflict, they could prevent a huge amount of starvation and the terrible downstream impacts that would follow in the rest of the world.

The probability of nuclear winter really matters, and so it is worth thinking about in detail. I hope the following is of some use to readers in that regard.

The case for concern: Firestorms, plumes and soot

Before we talk about nuclear winter, we are going to need to discuss fires and firestorms.

Firestorms have a key distinction from conventional fires or conflagrations: they create their own self-reinforcing wind system. All fires draw air to a degree: hot air rises, which creates an updraft and lower pressure below, pulling oxygen in. In a firestorm, however, this process is self-reinforcing: oxygen is drawn in at such a rate that combustion intensifies, which in turn draws even more air in from all sides, until the winds reach hurricane speeds.

Once formed, firestorms are close to unstoppable until the conditions that caused them abate or they consume their available fuel; human firefighting is helpless against them. Anecdotes from past firestorms include people trying to escape being pulled off their feet into the blaze by the force of the wind, bodies of water within the firestorm's radius such as reservoirs and canals boiling, and the buildings themselves twisting and melting. There are also worse stories.

Aftermath of “Operation Gomorrah”, the Allied bombing of Hamburg in WW2, which was planned to create a firestorm over the city and succeeded. However, other efforts, most notably over Berlin and Tokyo, failed: specific conditions are needed for one to form.

Several conditions must be met for a firestorm to form: primarily a large area on fire simultaneously, in a fuel-dense area, with favorable climatic conditions. For example, from empirical data we know the following conditions are at least sufficient[5]. First, a huge fire, spread over an area of 1.3 square km, of which half is ablaze at any single point in time[6]. Second, a high density of fuel in that area, of at least 40 kg per square meter (or 4 g/cm², a more common unit in modeling). This may seem like a lot of fuel, but most cities are estimated to provide this easily, the less dense cities of the 1940s certainly did so, and some forests could potentially do so too. Finally, low ambient winds help, as the fire needs to be drawing air from all sides, not rolling[7]. Other factors, such as the shape of the surrounding land and other weather conditions, also play a part, but are much harder to pin down neatly[8].
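To make the units concrete, here is a minimal sketch that encodes the empirical sufficient conditions above and converts the fuel loading between kg/m² and g/cm². The function names are hypothetical, and wind is deliberately a simple yes/no flag because the text gives no numeric wind threshold. Failing the check does not mean a firestorm is impossible, since these are sufficient rather than necessary conditions.

```python
# Empirical *sufficient* firestorm conditions as quoted in the text (not necessary ones).
MIN_AREA_KM2 = 1.3         # total fire area
MIN_FRACTION_ABLAZE = 0.5  # share of that area burning at any one time
MIN_FUEL_KG_M2 = 40.0      # fuel loading


def kg_m2_to_g_cm2(load_kg_m2: float) -> float:
    """Convert a fuel loading from kg/m^2 to g/cm^2 (1 kg = 1,000 g; 1 m^2 = 10,000 cm^2)."""
    return load_kg_m2 * 1_000 / 10_000


def fuel_mass_tg(area_km2: float, load_kg_m2: float) -> float:
    """Total fuel in an area, in teragrams (1 km^2 = 1e6 m^2; 1 Tg = 1e9 kg)."""
    return area_km2 * 1e6 * load_kg_m2 / 1e9


def meets_sufficient_conditions(area_km2: float, fraction_ablaze: float,
                                load_kg_m2: float, low_wind: bool) -> bool:
    """True if all quoted sufficient conditions hold.

    False does NOT rule out a firestorm: terrain and other weather factors also matter.
    """
    return (area_km2 >= MIN_AREA_KM2
            and fraction_ablaze >= MIN_FRACTION_ABLAZE
            and load_kg_m2 >= MIN_FUEL_KG_M2
            and low_wind)


print(kg_m2_to_g_cm2(40))                              # 4.0
print(fuel_mass_tg(1.3, 40))                           # 0.052
print(meets_sufficient_conditions(1.3, 0.5, 40, True))  # True
```

The fuel-mass helper shows that even the minimum qualifying fire holds about 0.052 Tg (52,000 tonnes) of fuel. Only a fraction of that would become soot, and only a fraction of the soot would be lofted.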

John Martin - Sodom and Gomorrah – A painting of the biblical event that inspired the name of the Allied firebombing operation over Hamburg (Operation Gomorrah, see the photo above). We now have the power to do much worse than depicted in this painting: even the largest ancient city would be a small district of one of our modern capitals.

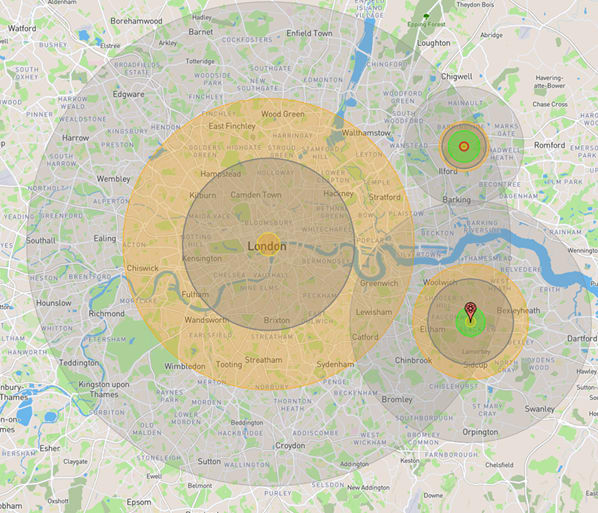

Nuclear weapons certainly meet the criteria above for the size of the initial fires, many times over in the case of thermonuclear (hydrogen) weapons[9]. Again, this is hard to grasp with mere words, so here is a comparison of an 800 kt Russian SS-25 warhead and a 100 kt American W-76 with the 15 kt “Little Boy” used at Hiroshima.

*Source:* https://nuclearsecrecy.com/nukemap/. Estimates of the damage from a Russian 800 kt SS-25 airburst on the left and a US/UK 100 kt W-76 airburst on the bottom right (marked with the red pin), compared to the 15 kt “Little Boy” used at Hiroshima at the top right. The W-76 is of course smaller than the SS-25, but its smaller size means that many warheads can be carried on a single missile, striking multiple targets independently. Of particular note is the relative size of the areas of thermal radiation impact marked in orange (the larger circle; the smaller one shows the size of the detonation itself). This area is 384 km² for the SS-25 and 60 km² for the W-76, versus 11 km² for “Little Boy”. Within this zone, exposed flesh will receive third-degree burns and fires will be widespread. When talking about nuclear winters and comparing the possible fires to those of the two Japanese nuclear bombings, the differences in weapon yield and number of weapons deployed must be remembered: we are talking about many more, much larger explosions over almost certainly more fuel-dense cities.
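As a quick check on how these areas scale, the sketch below converts the three NUKEMAP thermal areas from the caption into equivalent circular radii and fits the exponent n in area ∝ yield^n between pairs of weapons. The exponent is only an empirical fit to these three numbers, not a physical law stated in the source, and the helper names are hypothetical.

```python
import math

# Thermal-radiation (third-degree burn) areas and yields quoted in the caption above.
WEAPONS = {
    "SS-25": {"yield_kt": 800.0, "thermal_km2": 384.0},
    "W-76": {"yield_kt": 100.0, "thermal_km2": 60.0},
    "Little Boy": {"yield_kt": 15.0, "thermal_km2": 11.0},
}


def equivalent_radius_km(area_km2: float) -> float:
    """Radius of a circle with the given area."""
    return math.sqrt(area_km2 / math.pi)


def implied_exponent(a: dict, b: dict) -> float:
    """Exponent n such that area_a / area_b == (yield_a / yield_b) ** n."""
    return (math.log(a["thermal_km2"] / b["thermal_km2"])
            / math.log(a["yield_kt"] / b["yield_kt"]))


for name, w in WEAPONS.items():
    print(f"{name}: thermal radius ~{equivalent_radius_km(w['thermal_km2']):.1f} km")

base = WEAPONS["Little Boy"]
for name in ("SS-25", "W-76"):
    print(f"{name} vs Little Boy: n ~ {implied_exponent(WEAPONS[name], base):.2f}")
```

Both pairs give n of roughly 0.89, so thermal area grows somewhat less than linearly with yield. This is consistent with the caption's point about smaller warheads: assuming no overlap, eight 100 kt W-76 warheads (800 kt in total) would cover 8 × 60 = 480 km², more than the 384 km² of a single 800 kt SS-25.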

A firestorm occurred at Hiroshima, and one was avoided at Nagasaki (most likely because the weapon missed its target and the area of highest fuel loading, and because of the hilly topography). However, the far greater yields of modern nuclear weapons mean there is a chance firestorms would occur even under much more challenging climatic conditions than those listed above. We’ll discuss this more in the section below on the modeling.

The direct impact of these firestorms would be catastrophic for all those nearby, but it is the soot they generate that matters for the nuclear winter argument. Their sheer energy and heat create a far stronger plume of smoke, potentially orders of magnitude stronger than those of wildfires and other rolling conflagrations. These plumes loft soot and other aerosols high into the sky and up into the stratosphere[10], where direct sunlight and other factors could loft the black carbon even further[11]. Once in the stratosphere, all this material would be far above the clouds and rain that typically clear aerosols fairly quickly, meaning that it may persist on the order of years rather than weeks. Some soot is also lofted to these levels by conflagrations and fires that do not reach the threshold of a true firestorm, but a lower percentage of their carbon is likely to reach the stratosphere.
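The difference between "weeks" and "years" compounds quickly. As a rough illustration only, the sketch below assumes simple first-order (exponential) removal with a fixed e-folding lifetime. Real soot removal is not this simple, and the 7-day and 365-day lifetimes are hypothetical stand-ins for "weeks" and "years", not values from any of the models discussed here.

```python
import math


def fraction_remaining(t_days: float, e_folding_days: float) -> float:
    """Fraction of an aerosol load left after t_days, assuming first-order removal
    with the given e-folding lifetime (an illustrative assumption)."""
    if e_folding_days <= 0:
        raise ValueError("e-folding lifetime must be positive")
    return math.exp(-t_days / e_folding_days)


# HYPOTHETICAL lifetimes: "weeks-scale" (lower atmosphere) vs "year-scale" (stratosphere).
for label, tau in [("weeks-scale (7 d)", 7.0), ("year-scale (365 d)", 365.0)]:
    left = [round(fraction_remaining(t, tau), 3) for t in (30, 90, 365)]
    print(label, "fraction left after 30/90/365 days:", left)
```

Under these assumed lifetimes, essentially nothing of a weeks-scale load is left after three months, while roughly three quarters of a year-scale load remains, and over a third is still present after a full year.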

While this may look like the mushroom cloud from Hiroshima’s nuclear detonation (and has been misidentified as such), the actual mushroom cloud had long passed by the time this photo was taken. Instead, this is a photograph of the soot plume rising from the firestorm over the city, extending well above the clouds and at least into the upper troposphere. It is also an image that instinctively makes me shiver. Again, it is vital to stress that the firestorm shown would almost certainly be many times smaller than one triggered by a strategic nuclear weapon over a large city.

Aerosols absorb and reflect sunlight before it reaches the Earth’s surface, and at high volumes such material could seriously disrupt the climate, and therefore agriculture. We know from past asteroid impacts and volcanic eruptions that aerosols can cause significant climate impacts, and soot at the volumes projected in some models[12] could lower temperatures to a catastrophic degree following a serious nuclear conflict, as well as sharply reducing rainfall for its duration. Hence the concern about a nuclear winter.

Complexities and disagreements

Based on the above, there seems to be a fairly clear argument for nuclear winters. We know from direct observations that both conventional and nuclear weapons can create firestorms when detonated in fuel rich environments. We also know that at least part of the soot and black carbon generated can rise to stratospheric levels, and that aerosols certainly can disrupt sunlight. Modern weapons are vastly more powerful than those used in WW2, and deployed in far greater numbers, meaning that there could be hundreds of firestorms simultaneously in a nuclear exchange. Put together, that means nuclear winters could reach thresholds where the climate would be disrupted.

This seems to suggest there is a high risk of at least some climate disruption from nuclear conflict, and these core elements certainly seem to have merit. However, devils lie in every detail with such complex systems, and these are worth discussing.

Despite the importance of the question, only a few teams have carried out detailed public nuclear winter modeling, and only one has recently modeled a full-scale Russia/NATO conflict (the most damaging scenario). Here’s a quick summary of the teams that have worked on the issue in the past few years:

- A team currently centered around Rutgers University – which includes names who have been working on nuclear winter right from the start, as well as a number of new researchers. They have certainly published and written the most on the topic, and have the most concerns about nuclear winter overall. They have modeled a number of scenarios, ranging from regional exchanges with Hiroshima-sized weapons right up to full-scale global conflicts[13].

- A team at the Los Alamos National Laboratory – the famous US nuclear research laboratory of Oppenheimer fame, established to build the first nuclear bomb, which now carries out a variety of research projects concerning nuclear issues. They modeled a regional nuclear exchange between India and Pakistan, based on 100 bombs of 15 kt each being dropped on cities[14], and came to very different conclusions from the Rutgers team above. This has led to a spirited back and forth, which we’ll get to in a bit. 2024 EDIT - Los Alamos' work also only considers dry convection, which is a serious limitation on its applicability.

- A team at Lawrence Livermore National Laboratory – another federally funded US research laboratory with a focus on nuclear issues. They also modeled a soot injection following a regional nuclear exchange between India and Pakistan[15], weighing in on the debate between the two teams above. 2024 EDIT - This work models both dry and wet convection.

Let’s start with some of the estimates from the Rutgers nuclear winter research team[16], whose models present the starkest warnings. The table below lays out their most recent estimates of how a given number of detonations over cities translates into stratospheric soot (primarily black carbon, measured in teragrams; one teragram is one million metric tonnes).

| Number of weapons detonated over cities | Yield per weapon (kt)[17] | Number of direct fatalities | Soot lofted into stratosphere (Tg) | Approximate decline in average surface temperature - min/max monthly disruption in year 2 |
|---|---|---|---|---|
| 100 | 15 | 27,000,000 | 5 | 1-2 °C, 2-4 °F |
| 250 | 15 | 52,000,000 | 16 | 3-4 °C, 5-7 °F |
| 250 | 50 | 97,000,000 | 27 | 4-6 °C, 7-11 °F |
| 250 | 100 | 127,000,000 | 37 | 6-7 °C, 11-13 °F |
| 500 | 100 | 164,000,000 | 47 | 6-8 °C, 11-14 °F |
| 4,400 | 100 | 360,000,000 | 150 | 12-16 °C, 22-29 °F |
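One way to read the table is to divide the soot by the number of weapons, and to check the Fahrenheit column. Because these are temperature changes rather than temperatures, they convert by scaling by 9/5 with no +32 offset. The sketch below does both for the rows as transcribed; the helper names are hypothetical and nothing beyond the table's own numbers is assumed.

```python
# Rows transcribed from the table above:
# (weapons over cities, yield per weapon kt, direct fatalities, stratospheric soot Tg,
#  min cooling °C, max cooling °C)
ROWS = [
    (100, 15, 27_000_000, 5, 1, 2),
    (250, 15, 52_000_000, 16, 3, 4),
    (250, 50, 97_000_000, 27, 4, 6),
    (250, 100, 127_000_000, 37, 6, 7),
    (500, 100, 164_000_000, 47, 6, 8),
    (4_400, 100, 360_000_000, 150, 12, 16),
]


def delta_c_to_delta_f(delta_c: float) -> float:
    """Convert a temperature *change* from °C to °F (scale by 9/5; no +32 offset)."""
    return delta_c * 9 / 5


def soot_per_weapon_tg(row: tuple) -> float:
    """Stratospheric soot per detonation, in Tg."""
    weapons, _yield_kt, _deaths, soot_tg, _lo_c, _hi_c = row
    return soot_tg / weapons


for row in ROWS:
    weapons, yield_kt, _deaths, soot_tg, lo_c, hi_c = row
    lo_f = round(delta_c_to_delta_f(lo_c))
    hi_f = round(delta_c_to_delta_f(hi_c))
    print(f"{weapons:>5} x {yield_kt:>3} kt: {soot_per_weapon_tg(row):.3f} Tg per weapon, "
          f"{lo_c}-{hi_c} °C ~ {lo_f}-{hi_f} °F")
```

At a fixed 100 kt yield, the implied soot per weapon falls from about 0.148 Tg (250 weapons) to 0.094 Tg (500 weapons) and 0.034 Tg (4,400 weapons), so in these projections soot does not scale linearly with the number of detonations. Scaling by 9/5 also shows that a 1-2 °C decline corresponds to roughly 2-4 °F.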

These are terrifying projections in terms of the climate shock alone, and there is little dispute that wars involving hundreds of nuclear detonations at 100 kt or above over cities would lead to many millions of direct fatalities. Beyond that however, significant disputes remain about the projections above, as well as the downstream conclusions presented, which are worth discussing in detail.

Values taken from Xia et al. 2022[1]: expected losses to average crop yields following injections of different volumes of stratospheric carbon. These figures are based on modeling of a few key staples (rice, wheat, maize and soybeans), and assume nothing is done to respond after a catastrophe in order to offset the losses. This need not be the case: a number of proposed interventions could potentially allow everyone to be fed even in a 150 Tg scenario, but this is the scale of the potential impact, and it demonstrates how serious the crop losses could be. For comparison, the recent market disruptions from the Russian invasion of Ukraine were on the order of 2-3% of global calories, and a 10%+ loss of crop output could push millions into starvation without an effective response. It should be noted that these figures are from the Rutgers team; the Lawrence Livermore team calculated that the climate shock would be shorter, but there is little disagreement that if double-digit Tg levels of soot reach the stratosphere, there would be a serious climate shock and crop losses.

Point one – How many detonations can we expect?

Let’s start with the numbers of detonations over cities shown above from the Rutgers analysis. Specifically, in their model this figure is taken to be strikes that do not overlap, such that each detonation starts a new fire and as such they do not compete with each other for fuel. How credible is this in a future nuclear war?

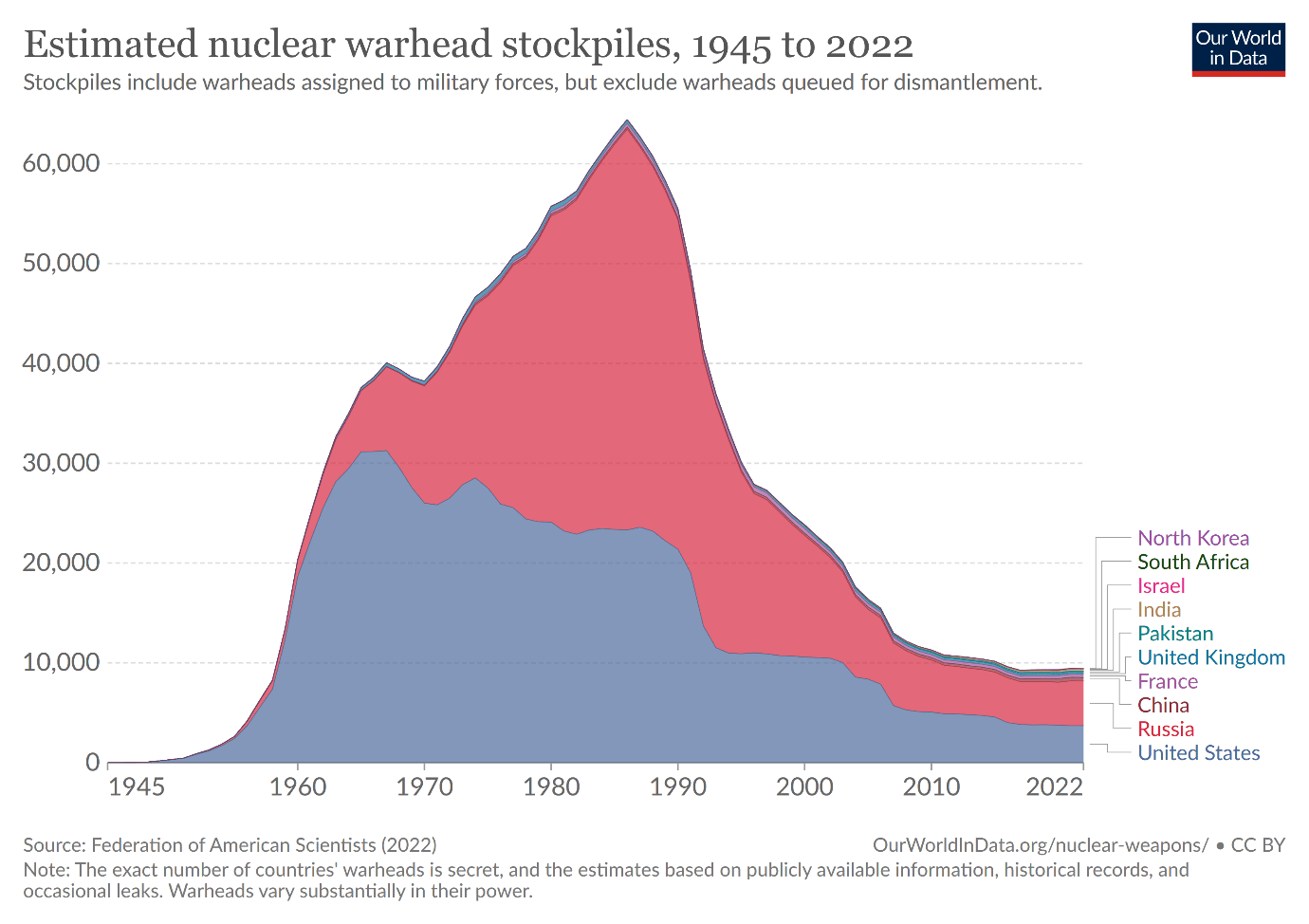

Parts of the logic of nuclear deterrence, targeting and weaponry have stayed the same since the cold war, but there have also been some very significant changes. When the first nuclear winter projections were published in 1983, we were in a very different world to today, both in terms of weapon numbers and the yields of those weapons.

I was going to write that weapon totals had become insane, but that’s not correct. Nuclear weapons, the structures of deterrence and the game theory that shapes everyone in the chain right up to premiers and presidents have a logic, and that is worse than insanity. Insecurity between the great powers bred fear of a disabling first strike, and the desire to preserve your own forces in order to respond credibly created the need for more weapons, as well as fundamentally dangerous levels of automation and hair triggers. Systems that “fail deadly”, such as launch-on-warning and “Dead hand” (officially called “Perimeter” in Russia and the Soviet Union, and possibly active today as we speak due to the standoff with NATO), were created, further raising the stakes.

In a world with 60,000-plus weapons, it was plausible that 4,000 detonations could occur over cities or fuel-rich zones. Tensions were high, and even though neither side wished to start a nuclear war, the chance of a miscalculation meant it was perfectly possible that one could have been caused by accident. In particular, NATO’s Able Archer exercise in 1983[18] combined with a Soviet nuclear computer system failure[19] nearly triggered an accidental exchange, and there were other close calls. At some point, so many weapons with so many points of failure would cause an accident: either the weapons would need to go, or they would take modern civilization with them.

Fortunately, these weapon numbers were so clearly risky and expensive that both sides took a step back once tensions eased following the fall of the Soviet Union and into the early 2000s[20]. Arms control treaties were signed to rein in these totals, and should be credited with serious and meaningful progress in reducing the risk and severity of nuclear wars. New START[21] is the latest of these, and limits Russia and the United States to 1,550 deployed strategic weapons each. Seeing as the treaty contains (or contained) provisions for inspections, and both parties keep each other’s forces under close surveillance, it is actually reasonably likely that these totals are being adhered to.

This gives us approximately 3,000+ strategic weapons deployed across Russia and the United States in total, with a few hundred apiece also in the arsenals of China, France and the United Kingdom. You also need to add some smaller tactical weapons into the mix (START only covers strategic weapons), as well as bombers (which count for one bomb under START definitions, but can carry several), which pushes us up to a total of maybe 4,000 deployed nuclear weapons ready to fire in a future Russia/NATO exchange.

This is clearly enough to utterly devastate all parties involved, and you might think the reductions make little difference, just that the rubble will bounce a few less times. Fortunately, the reductions do likely matter, both from the perspective of direct damage, the risk of a nuclear accident and from the standpoint of a nuclear winter. Vitally for winters, the reductions mean that the more severe soot scenarios (based on thousands of unique detonations over cities) would be less likely to be reached, for a number of reasons:

- Many of these weapons (most tactical and a portion of strategic weapons) will be targeted at key military installations and infrastructure outside of cities. In a nuclear war there is no clean line between military and civilian, and these could still generate fires and kill, but they would create far less soot.

- Some weapons will be destroyed on the ground or intercepted prior to detonation. Bombers, submarines and land-based systems are all vulnerable to a greater or lesser degree[22], and any first strike would aim to locate and disable as much of the opponent’s nuclear arsenal as possible to minimize the return fire. It is vanishingly unlikely that any first strike would be able to catch all of an opponent’s arsenal, but it is also unlikely that the arsenal would be unscathed, which removes more weapons from our total.

- Nuclear warfare plans must be set beforehand to a high degree, with a few set options on the menu of responses[23]. An unknown number of weapons will be out of touch or destroyed when you give the go order, and some launched will be intercepted. Because you must guarantee key targets will be destroyed, this results in redundancy in targeting. At its most extreme, this logic meant that some key Soviet air defense radars close to Moscow or military sites were each assigned double digit nuclear weapons in declassified US planning to make completely sure that they were gone early in any exchange. This means that weapons that do strike cities will certainly have duplication and overlap.

Put together, this means that while the larger scenarios for detonations over cities are not impossible, even a full-scale nuclear war with 4,000 weapons deployed does not translate into the same number of unique detonations spread out over cities. Instead, numbers ranging from the low hundreds to a few thousand are more plausible, and this range is probably the best we can do for the purposes of estimating nuclear winter in the rest of this article.
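The arithmetic behind this reasoning can be sketched as a simple funnel: start from the New START cap on both sides plus other arsenals, then remove weapons lost before detonation and weapons aimed outside cities, and finally divide by the average number of weapons assigned to each aim point. Every fraction and the "few hundred apiece" stand-in below are HYPOTHETICAL placeholders chosen only for illustration, not estimates from this article or any other source, and the function names are also hypothetical.

```python
NEW_START_CAP = 1_550  # deployed strategic weapons allowed per side (Russia, US) under New START


def deployed_total(other_arsenals: dict[str, int], extra: int = 0) -> int:
    """Two New START-capped arsenals, plus other arsenals, plus any extra weapons
    (e.g. tactical weapons, or bomber weapons beyond START's one-per-bomber counting)."""
    return 2 * NEW_START_CAP + sum(other_arsenals.values()) + extra


def unique_city_detonations(deployed: float, frac_lost: float, frac_non_city: float,
                            weapons_per_aim_point: float) -> float:
    """Deployed weapons -> unique, non-overlapping detonations over cities."""
    if not (0.0 <= frac_lost <= 1.0 and 0.0 <= frac_non_city <= 1.0):
        raise ValueError("fractions must lie in [0, 1]")
    if weapons_per_aim_point < 1.0:
        raise ValueError("weapons_per_aim_point must be >= 1")
    arriving = deployed * (1.0 - frac_lost)              # destroyed, intercepted or out of contact
    aimed_at_cities = arriving * (1.0 - frac_non_city)   # minus military targets outside cities
    return aimed_at_cities / weapons_per_aim_point        # redundant targeting overlaps


# HYPOTHETICAL inputs: 250 apiece stands in for "a few hundred apiece", extra=150 for tactical/bombers.
total = deployed_total({"China": 250, "France": 250, "UK": 250}, extra=150)
print(total)                                             # 4000
print(unique_city_detonations(total, 0.25, 0.5, 2.0))   # 750.0
```

The point of the structure is that each step is multiplicative, so modest changes to any one hypothetical fraction move the result across the "low hundreds to a few thousand" range.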

It is still vital to stress and reiterate that even with these caveats such a war would still be the worst thing to happen to humanity, and hundreds or thousands of weapons could still kill many millions and put billions more at grave risk.

I also worry that some take the logic too far, and assume the factors above mean that cities wouldn’t see significant devastation beyond a few key targets. I have seen estimates that place nuclear weapon use in the low tens or maximum hundreds even in a supposedly pessimistic scenario for a Russia/NATO exchange. This does not appear to be supported by the information publicly available, and it should be stressed that there are strong factors that push both sides to launch weapons at cities in a future war: even if you wish to only target military targets and war supporting industries/infrastructure you’re going to hit cities and civilians[24]. Also, in the event of a first strike, the responding country may well think that there is little point in striking empty silos, and be unsure about the scale of the attack they have received, and so go for a full response plan aimed at crippling the attacker with maximum force with all weapons available.

Finally, it is worth stressing that the progress we have made so far is not guaranteed to continue, and even the treaties we have in place cannot be taken for granted. The Cold War alternated between phases of negotiation and phases of hostility, and similarly we are in a phase of high great power tension at the time of writing. New START currently faces an uncertain future, with Russia suspending its cooperation in order to try and leverage concessions in Ukraine. For the moment there does not seem to have been a significant increase in deployed strategic weapons, and the terms of the treaty are still being adhered to (possibly because putting resources into more strategic weapons does nothing to shore up Russia’s position on the front lines), but that may not last. Both Russia and the US have far more than 1,550 strategic weapons in storage, as well as the potential to make more, and the threat of nuclear use by Russia to back up a conventional war of aggression is a serious escalation. If a weapon is ever used, no matter its yield, all bets are off.

Point two – How do detonations translate into stratospheric soot?

Beyond the number of expected detonations, there are also important uncertainties about the fires and resulting soot dynamics that would follow, with each team working on the issue having a different estimate.

This is to be expected. While we have data from nuclear tests, no one has ever (thankfully) detonated a hydrogen bomb over a city, so we do not have direct empirical data. We have observations from the first two atomic bombings, but it is hard to know how far these examples can be projected forward to modern cities or modern weapon sizes. We also have observations of firestorms, but again, fortunately, only a few, and never on the scale we would expect to see in these exchanges.

Instead, teams must model the scenarios themselves, based on assumptions about the cities and weapons in question. This means disagreements are unlikely to be fully resolved soon. Firstly, at least some of the models used are not open source, either to preserve control of intellectual property or for national security reasons. Secondly, there is no objective standard: there are no clean general formulas or easy linear relationships for such complex systems, and all models have to round off some of the corners of reality in order to function. All models are wrong, but some are useful. Improved climate modeling and computing power have allowed some refinement of estimates over time[25], but in the absence of empirical results there are simply too many unknowns for all teams to agree on a number even within, say, a factor of two.

Take a step back and think about how you actually calculate the soot that ends up in the stratosphere from a given nuclear conflict, and the number of steps that are required.

First, you need to model your hundreds or thousands of targets, how much fuel is in them, and the climate at each (in all cases run so far this has been highly simplified, and factors like topography excluded). Then you need to model the detonation of a nuclear weapon over the target, how the blast deforms buildings, if that makes fuel more or less available, and how much of it would combust. After this you have to model the fire itself, how much area would be ignited, would it spread, would a firestorm form, etc. This is not solvable by a clean formula, and once again we do not have direct observations on the scale of our largest weapons.

But you’re still not close to done, as we still need to model the dynamics of the soot. This is also not defined by a clean formula, especially as you’re estimating it from complex fluid dynamics and need to include things like the variable temperature and humidity as the smoke rises, and the impact of sunlight on the plume. Accurately modeling smoke in a closed environment like a room is already highly complex; doing so in the open air, then adding firestorms and local weather conditions, makes the challenge orders of magnitude harder. Soot also interacts with water in a process called “scavenging”, where soot sticks to water droplets and is rained out, which means you have to include water dynamics in your model. Some water may be naturally present over some cities as clouds, and water will also be emitted as vapor from the fire you’ve just created, which means your model now has to handle solid, liquid and gas phases to a greater or lesser degree.

2024 EDIT -

As it turns out, moisture is likely to be incredibly important in that lofting process, as raised in "Latent Heating Is Required for Firestorm Plumes to Reach the Stratosphere". A dry firestorm plume lofts significantly less than a wet one, because of the latent heat released as water moves from vapor to liquid, which is the primary process for generating large lofting storm cells. If significant moisture can be assumed in the plume (and this seems likely given the conditions at its inception), lofting is therefore much higher.

The Los Alamos analysis only assesses a dry plume - and this may be why they found so little risk of a nuclear winter - and in the words of the study authors on the moisture dynamics: "Our findings indicate that dry simulations should not be used to investigate firestorm plume lofting and cast doubt on the applicability of past research (e.g., Reisner et al., 2018) that neglected latent heating".

This has pushed me further towards being concerned about nuclear winter as an issue, and it should also be considered in the context of other analyses that rely upon the Reisner et al studies originating at Los Alamos (at least until they can add these dynamics to their models).

We’re still not done, however, as we still need to consider how long the soot would remain in the stratosphere. How high would it settle? Would it last for years, being above almost all atmospheric water at this point, or would it be cleared reasonably quickly? Again, we have some observations here from similar materials, but nothing conclusive, and a one-month half-life for the material versus a five-year one leads to radically different climate results.
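To see why the half-life matters so much, here is a minimal sketch assuming soot is removed by simple exponential decay. That is my simplification for illustration; the climate models treat removal in far more detail. It compares the one-month and five-year half-lives mentioned above, and converts the roughly 3-year e-folding time Lawrence Livermore reports into a half-life using t½ = τ ln 2.

```python
import math


def remaining_fraction(t_years: float, half_life_years: float) -> float:
    """Fraction of stratospheric soot left after t_years.

    Assumes simple exponential (first-order) removal, a simplification of
    what the climate models actually simulate.
    """
    return 0.5 ** (t_years / half_life_years)


def half_life_from_e_folding(e_folding_years: float) -> float:
    """Convert an e-folding time (decay to 1/e, about 37%) into a half-life."""
    return e_folding_years * math.log(2)


if __name__ == "__main__":
    one_month = 1.0 / 12.0  # half-life expressed in years
    five_years = 5.0
    for t in (0.5, 1.0, 2.0, 4.0):
        fast_decay = remaining_fraction(t, one_month)
        slow_decay = remaining_fraction(t, five_years)
        print(f"after {t} yr: {fast_decay:.1e} left (1-month) vs {slow_decay:.2f} left (5-year)")
    # The ~3-year e-folding time reported by Lawrence Livermore
    print(f"half-life: {half_life_from_e_folding(3.0):.2f} years")
```

After a single year, the one-month case leaves well under a thousandth of the soot aloft, while the five-year case still retains most of it.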

Then, all you need to do is model how your predicted volume of soot will impact every aspect of the global climate, including wind speeds, rainfall, humidity and air pressure, for the duration you’re modeling. Interestingly, this seems to be the step with the fewest current disagreements, partly because both Los Alamos and Rutgers use the same climate model (the NCAR Community Earth System Model, CESM1). For example, there seems to be no dispute that 50 Tg of soot persisting in the stratosphere would cause sharp cooling; instead, the debate is whether 50 Tg of stratospheric carbon is possible.

The anatomy of the disagreements

Given that there are so many places for disagreement, we are going to have to focus on the key points. The only direct comparison between different models running the same scenario happened when both Rutgers and Los Alamos looked at a regional nuclear exchange between India and Pakistan – 100 weapons of 15 kt each (the scale of the Hiroshima bomb), with Lawrence Livermore later sharing some results from their own modeling.

This back and forth[26] started with the publication of papers by the Rutgers team (Toon et al 2007 and Mills et al 2014), which estimated worst case stratospheric soot from such a war at around 5 Tg (5 million tonnes). This was followed by the Los Alamos team (Reisner et al 2018), who ran the same scenario and came to a worst case figure of around 0.2 Tg of stratospheric soot. Rutgers published a critique, highlighting in particular where they felt the Los Alamos laboratory had underestimated the fuel available for the fires. Los Alamos responded, accepting some of the points but still estimating that even with unlimited fuel levels, stratospheric soot levels would not exceed 1.3 Tg.

Finally, Lawrence Livermore touched on the debate in 2020 (Wagman et al 2020). They did not estimate the fuel present or its combustion directly; instead, they modeled how different levels of average combustion mapped to stratospheric soot and cooling. At 1 g combusted/cm², the soot would be cleared quickly and little would reach the stratosphere, while at over 16 g/cm² there would be a lasting climate shock. However, unlike Rutgers, they estimated that the climate shock would last around 4 years[27], rather than 8-14 years, with implications for the potential response and mortality (although it would still be deeply catastrophic in the absence of an effective response, as we don’t have anything like 4 years of food storage). They also model intermediate scenarios, and note that significant soot injections into the stratosphere occur at 5 g/cm² or above[28].

The key differences between the teams were as follows:

Fuel loading. Rutgers estimated that India/Pakistan average 28-35 g/cm² of fuel in the target zones (based upon the fuel loading of Hamburg and other cities in WW2), while Los Alamos initially estimated that fuel was as low as 1 g/cm². However, Los Alamos partially conceded that their initial totals could be too low (they had picked a suburb of Atlanta in the USA for the estimate, which included big gardens and a golf course), and raised their model run to around 1-5 g/cm². This did not substantially alter their results.

Fuel combustion. Rutgers estimated that fuel combustion over the duration of the fire was linear (twice the fuel, twice the combustion, twice the soot). Los Alamos estimated from their model that there would be strong nonlinearities: higher fuel loads would be choked under rubble caused by the bomb, or partially oxygen-starved for other reasons, and would not fully combust as you added fuel. To make their point, they raised the model fuel density to 72 g/cm² and ran that, with a resulting combustion well below the Rutgers estimate (with or without rubble included). However, the Los Alamos estimates excluded secondary ignitions, such as those from gas line breaks, which, as they note, may have made a significant contribution to past firestorms such as Hiroshima.

The reduced fuel combustion combined with a lower fuel load means that Los Alamos predicted that only 3.2 Tg of black carbon would be produced in total following the 100 detonations, vs around 6 Tg for the Rutgers team, a factor of 1.9 difference.

Firestorms. Rutgers expects that a firestorm would start in every detonation zone, as they believe the blasts meet all the necessary preconditions, effectively lofting soot. Los Alamos excluded that possibility in their model run, estimating that either fuel loads would be too low, or the dense concrete buildings needed for higher fuel loads would form too much rubble for a firestorm to develop, or fires could not draw enough oxygen even without rubble. This radically changes the dynamics of the plume and soot lofting. Lawrence Livermore does not commit definitively to a probability of firestorms occurring, instead stating that if combustion is high enough to create firestorms (around 5 g/cm² or above in their modeling), far more lofting and climate impacts will occur.

Stratospheric soot. Due to the firestorm and other factors, Rutgers assumed that the majority of the soot would reach 12 km or higher, penetrating the stratosphere, with just 20% being cleared via scavenging. Los Alamos estimates that the vast majority of soot would be lost before it reaches these altitudes (mostly due to the lack of a firestorm), with 93.5% of soot being scavenged before reaching 12 km. Lawrence Livermore notes the nonlinearities in the soot dynamics, and estimates that a large amount of soot would reach the stratosphere with high combustion and firestorms, and limited amounts at lower combustion rates. EDIT 2024 - This disagreement is also due to the dry lofting assumptions made by the Los Alamos team, which exclude the latent heat released by condensation. Rutgers avoids this by assuming a simple rule of thumb, while Lawrence Livermore models the plume under both dry and wet conditions.

In effect, the presence of firestorms means that around 12.9 times more of the generated soot[29] reaches the stratosphere in the Rutgers model compared to the Los Alamos projections, where soot lacks the same lofting plume and is dry. The probability of firestorms really matters here, and is a key driver of the differing perspectives between the teams.
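The two adjustment factors in this comparison (about 1.9 for fuel/combustion and about 12.9 for lofting) can be recomputed from the India/Pakistan figures quoted in this article and its footnotes. This is a minimal sketch: the constant names are my own, and the 6 Tg Rutgers total is the approximate figure given in the text, while the Los Alamos totals are the unrounded values from the footnote.

```python
# Figures for the 100 x 15 kt India/Pakistan scenario, as quoted in this article.
# Tg = teragrams (million tonnes) of black carbon.
RUTGERS_TOTAL_SOOT_TG = 6.0       # approximate total soot produced (Rutgers)
RUTGERS_STRAT_FRACTION = 0.8      # 20% scavenged, so ~80% reaches the stratosphere
LOS_ALAMOS_TOTAL_SOOT_TG = 3.158  # total soot produced (Reisner et al 2018)
LOS_ALAMOS_STRAT_SOOT_TG = 0.196  # soot reaching the stratosphere (Reisner et al 2018)


def fuel_combustion_factor() -> float:
    """How many times more soot the Rutgers fuel/combustion assumptions produce."""
    return RUTGERS_TOTAL_SOOT_TG / LOS_ALAMOS_TOTAL_SOOT_TG


def lofting_factor() -> float:
    """How many times larger a share of generated soot Rutgers lofts to the stratosphere."""
    los_alamos_fraction = LOS_ALAMOS_STRAT_SOOT_TG / LOS_ALAMOS_TOTAL_SOOT_TG
    return RUTGERS_STRAT_FRACTION / los_alamos_fraction


if __name__ == "__main__":
    fuel = fuel_combustion_factor()
    lofting = lofting_factor()
    print(f"fuel/combustion factor: {fuel:.2f}")
    print(f"lofting factor: {lofting:.2f}")
    # Combined, they reproduce the ratio of stratospheric soot between the teams
    # (6 x 0.8 Tg for Rutgers vs 0.196 Tg for Los Alamos).
    print(f"combined: {fuel * lofting:.1f}")
```

The combined factor of roughly 25 is consistent with the gap between the roughly 5 Tg and 0.2 Tg worst cases the two teams reported.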

Synthesis

I have no experience running these models, and as such have no particular expertise or intuitions to draw upon to make strong judgements about the details of the arguments above. It may be the case that firestorms cannot form in an India/Pakistan exchange, and as a result there would not be a serious nuclear winter for such a conflict. Similarly, it may be that Los Alamos is wrong about fuel loads, or wrong that firestorms are highly improbable (EDIT 2024: or that wet plume dynamics are important), raising the soot that reaches the stratosphere.

However, I would argue this uncertainty is less relevant when discussing a Russia/NATO exchange, for a number of reasons. Firstly, the fuel loads of the cities in question are much higher. Hiroshima, with its low-rise buildings, averaged 10 g/cm² in WW2[30], while estimates for the areas burnt across Hamburg range between 16 and 47 g/cm², depending on the source[31]. Cities like New York, Paris, London and Moscow are now much denser, which suggests at least as much fuel as Hamburg, far higher than Los Alamos’ 1-5 g/cm² projections for the outskirts of an Indian/Pakistani city. The exact burn rates are complex, but it is hard to argue that the fuel isn’t there in sufficient quantities for a firestorm (at least 4 g/cm², and many materials burn in the heat of a nuclear fire).

In addition, a greater area will almost certainly be ignited simultaneously, given that more than 100 detonations are possible and many strategic weapons are over an order of magnitude larger than 15 kt[32]. Los Alamos’ statement that 15 kt weapons are highly unlikely to cause a firestorm over cities in India/Pakistan seems optimistic, but much larger weapons may also change their conclusions should they analyze their effects.

It may be a stretch to state that every large weapon detonated over or near a city will cause a firestorm. However, we know firestorms have formed after 50% of past nuclear detonations over cities (one of the two), even with comparatively low-yield weapons, as well as following conventional/incendiary bombing of cities (where much rubble was present, and conventional high-explosive bomb blasts were used deliberately to make fuel more accessible for the following incendiaries). As a result, I would say the possibility of firestorms following a full-scale nuclear exchange certainly cannot be discounted without far stronger evidence.

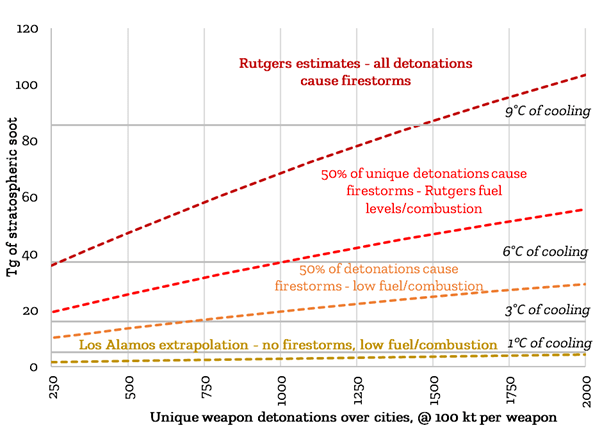

Collectively, this pushes us towards being concerned when looking at larger exchanges, although maybe not quite to the upper limits of modeling: not every weapon will have a unique detonation over a city, or cause a firestorm. However, even so, a full-scale exchange between Russia and NATO could still produce something on the order of 20-50 Tg (3-7°C of cooling, from ~200 to 750 firestorms), and a smaller-scale one 5-20 Tg (1-3°C of cooling). That’s plenty to devastate agriculture worldwide unless we’re prepared, but as I stressed before, we can and should prepare.

We’re now over 8,000 words in, but if you’ll stay with me, we’ll go over the horizon into one more bit of extrapolation. This is certainly not suitable for peer review, but let’s compare Los Alamos to Rutgers one last time, this time applying their different factors to different conflict scenarios, displayed on the diagram below. As above, using Los Alamos’ fuel loads/combustion reduces the soot produced in the fires by a factor of 1.9, while assuming no firestorms form reduces soot lofting to the stratosphere by a huge factor of 12.9. These factors are, of course, certain to be nonlinear, and would therefore differ if Los Alamos modeled a full-scale exchange, but they at least let us look at how much the differing opinions alter our conclusions in principle.

Overall, if all detonations cause firestorms and cities burn well (the top red line), we should be seriously concerned about nuclear conflict causing nuclear winters in almost any large exchange: even 250 unique detonations could cause 6 degrees of cooling at the peak of the climate shock (expected to occur something like 18 months post war).

Meanwhile, if roughly half as much smoke is produced as Rutgers estimates, and no detonation leads to a firestorm so lofting is weak (the bottom yellow line), we might only see a maximum of 1 degree of cooling, and nuclear winter is less of a concern.

However, if firestorms do occur in any serious numbers, for example in half of cases as with the historical atomic bombings, a nuclear winter is still a real threat. Even assuming lower fuel loads and combustion, you might get 3°C of cooling from 750 detonations; you do not need to assume every weapon leads to a firestorm to be seriously concerned.

Extrapolated soot and climate impact from 250 detonations of 100 kt:

| | All unique detonations cause firestorms | Half of unique detonations cause firestorms | No firestorms |
|---|---|---|---|
| Rutgers fuel load | 37 Tg, ~6°C of cooling | 19.9 Tg, ~3°C of cooling | 2.9 Tg, ~1°C of cooling |
| Reduced fuel/combustion | 19.5 Tg, ~3°C of cooling | 10.5 Tg, ~2°C of cooling | 1.5 Tg, <1°C of cooling |
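The soot figures in this table can be reproduced with simple arithmetic from the two factors discussed above: divide by 1.9 for reduced fuel/combustion, divide by 12.9 when no firestorms form, and take a straight average of those two cases for "half of detonations". The sketch below assumes this linear blend is how the table was built (it matches every cell). It deliberately leaves out the temperatures, which come from climate model results rather than a formula.

```python
FUEL_FACTOR = 1.9                 # reduced fuel/combustion cuts soot produced by ~1.9x
LOFTING_FACTOR = 12.9             # no firestorms cuts stratospheric lofting by ~12.9x
RUTGERS_ALL_FIRESTORMS_TG = 37.0  # 250 x 100 kt, every detonation a firestorm


def stratospheric_soot(all_firestorms_tg: float, firestorm_share: float) -> float:
    """Linearly blend the firestorm and no-firestorm cases by the share of firestorms."""
    no_firestorms_tg = all_firestorms_tg / LOFTING_FACTOR
    return firestorm_share * all_firestorms_tg + (1.0 - firestorm_share) * no_firestorms_tg


def extrapolation_table() -> dict[str, list[float]]:
    """Rows: fuel assumption; columns: all, half, or no detonations cause firestorms."""
    scenarios = {
        "Rutgers fuel load": RUTGERS_ALL_FIRESTORMS_TG,
        "Reduced fuel/combustion": RUTGERS_ALL_FIRESTORMS_TG / FUEL_FACTOR,
    }
    return {
        label: [round(stratospheric_soot(base, share), 1) for share in (1.0, 0.5, 0.0)]
        for label, base in scenarios.items()
    }


if __name__ == "__main__":
    for label, row in extrapolation_table().items():
        print(f"{label}: " + ", ".join(f"{tg} Tg" for tg in row))
```

A linear blend is the simplest possible choice; as noted above, the real relationships are almost certainly nonlinear, so this only shows how the table's numbers follow from its stated inputs.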

To stress, this argument isn’t just drawing two lines at the high/low estimates, drawing one between them and saying that is the reasonable answer. This is an argument that any significant targeting of cities (for example 250+ detonations) with high yield strategic weaponry presents a serious risk of a climate shock, if at least some of them cause firestorms.

This is a view held most strongly by the Rutgers team, but Lawrence Livermore National Laboratory also estimated that if firestorms formed they would have a strong soot-lofting effect, and in high numbers would create a climate shock. They also estimated that the fuel combustion needed for this is not impossible in the context of modern cities: something like 5 g/cm² or above is sufficient in their India/Pakistan scenario. Lawrence Livermore is not an organization biased towards nuclear disarmament and looking for excuses; it is a federal laboratory that designed the 1.2 Mt B-83 nuclear weapon, the largest currently deployed in America’s arsenal. Their conclusions are more guarded (they do not commit to an estimate of the number of firestorms that would actually form in the India/Pakistan scenario, for example), but unless we can be confident that firestorms will not form following thermonuclear detonations over cities, their conclusions also suggest a risk remains.

Overall, although highly uncertain, I would expect something like 2-6°C of average global cooling around 2-3 years after a full-scale nuclear exchange. This is based on my best guess of the fuel loading and combustion, and of the percentage of detonations resulting in firestorms. I would expect such a degree of cooling to have the potential to cause many more deaths (by starvation) than the fire, blast and radiation from the detonations.

This is a similar conclusion to others who have looked at the issue, such as Luisa Rodriguez and David Denkenberger.

It differs from Bean, who appears to believe that the work of the Rutgers team must be discarded, and that only limited soot would reach the stratosphere in a future war. I believe some of their points may be valid, particularly in the context of exchanges with 100 detonations of 15 kt weaponry, and in highlighting that the dynamics of a future conflict must be considered. However, catastrophic soot injections seem highly likely in the event of 100+ firestorms forming, which is a significant risk in larger exchanges with higher-yield weapons. This conclusion holds even if you are skeptical of the work of the Rutgers team.

I hope that there is further modeling and work on nuclear winters, particularly for the largest exchanges; the question really does matter for humanity. If the probability of a nuclear winter falls, either from reduced weapon numbers or from updated credible data, I certainly will breathe a very deep sigh of relief. Until that happens, I think the arguments above suggest that nuclear winters do present a serious and credible threat, and must be considered in the context of preventing and responding to nuclear conflicts.

We can and should reduce their probabilities with our actions, we can and should build resilience and prepare for their occurrence. Given the risks, doing anything else would be extremely reckless.

- Xia, L., Robock, A., Scherrer, K. *et al.* Global food insecurity and famine from reduced crop, marine fishery and livestock production due to climate disruption from nuclear war soot injection. *Nat Food* **3**, 586–596 (2022). https://doi.org/10.1038/s43016-022-00573-0 ↩︎ ↩︎

- R. P. Turco et al., Nuclear Winter: Global Consequences of Multiple Nuclear Explosions. *Science* **222**, 1283-1292 (1983). DOI:[10.1126/science.222.4630.1283](https://doi.org/10.1126/science.222.4630.1283) ↩︎

There are three primary triggers for an ASRS currently identified:

- Nuclear winters: caused by black soot lofted into the stratosphere by firestorms following nuclear conflict and the topic of this article.

- Volcanic winters, caused by material such as sulfur dioxide from high magnitude eruptions being launched high enough into the stratosphere to persist for years, or from an ongoing eruption that continually generates emissions.

- Asteroid winters, caused by a large bolide or comet impact that launches material into the stratosphere or causes mass fires.

Of the three, thanks to recent efforts in mapping the solar system, we can have reasonable confidence that no large asteroids capable of causing an ASRS will impact over the coming centuries. However, there is still risk from comets, particularly dark ones for which we would have little warning. Meanwhile, VEI 7 eruptions sufficient to cause an ASRS, like Tambora in 1815 (which led to the “Year Without a Summer” in 1816), occur with a probability of around 15-20% per century.

ASRS are not binary, and vary considerably in form and scale. However, any ASRS would present a clear catastrophic threat to food supplies for its duration, and they will happen at some point in our future, from one cause or another. ↩︎

- It is very difficult to accurately determine the probability of a nuclear exchange, which has fortunately not yet occurred. A truly accurate forecast would also rely on information not available to any single forecaster (even those with high-level clearances in a given government), and would change over time based upon technologies, doctrine and global tensions. However, to take [Metaculus](https://www.metaculus.com/questions/4779/at-least-1-nuclear-detonation-in-war-by-2050/) as an example, the community gives a 33% probability of at least one offensive detonation by 2050 at the time of writing. Given that one detonation has a [significant probability of being followed by many others](https://www.metaculus.com/questions/11602/number-of-nuclear-detonations-by-2050/) due to the logic of nuclear warfare, this presents a clear and present danger to civilization, significantly more so if nuclear winters are credible. ↩︎

- *Glasstone, Samuel and Philip J. Dolan. “The Effects of Nuclear Weapons. Third edition.” (1977).* ↩︎

- This can come from multiple smaller fires that converge; indeed, excluding Hiroshima, all have done so. ↩︎

- Firestorms can almost certainly still form under higher wind speeds, but this may need a larger fire/a high fuel loading to offset the higher winds. The exact dynamics here are fiendishly complicated fluid dynamics, and the exact conditions for a firestorm are disputed. ↩︎

We know these factors in part due to the effort given to creating them. While some firestorms have (possibly) been observed in nature or after earthquakes in cities, the largest and most destructive have been man-made and no accident. All major combatants in WW2 used fire as a weapon against civilians, but it was the sheer industrial base of the United Kingdom and United States, and the number of aircraft they could deploy, that allowed firestorms to be created deliberately. This started with the bombing of Germany, with rolling coordinated attacks that mixed high explosives to break apart buildings and create the conditions for fires to spread, followed by napalm and magnesium incendiary bombs deployed in grids. These raids started uncontrollable fires across wide areas simultaneously, with Hamburg, Dresden and other cities experiencing firestorms that killed [many tens of thousands](https://en.wikipedia.org/wiki/Firestorm) each.

However, the largest fires and firestorms occurred over Japan, where the collapse of air defenses and the high combustibility of cities combined to create the perfect environment for strikes. Once in range, bombers could be stripped of all defenses in order to pack in more bombs, and could change their formations thanks to the limited anti-aircraft fire and fighter attacks. This meant more bombs could be dropped in more optimal grids and more accurately, the perfect conditions for firestorms and conflagrations given the combustible cities below. The atomic bombings of August 1945 are rightly remembered, but the firebombing of Tokyo in March 1945 killed a number of people of a similar magnitude to the bombing of Hiroshima (although with far more aircraft employed in the attack). ↩︎

- Owen B. Toon, Alan Robock, Richard P. Turco; Environmental consequences of nuclear war. *Physics Today* 1 December 2008; 61 (12): 37–42. https://doi.org/10.1063/1.3047679 ↩︎

- Owen B. Toon, Alan Robock, Richard P. Turco; Environmental consequences of nuclear war. *Physics Today* 1 December 2008; 61 (12): 37–42. https://doi.org/10.1063/1.3047679 ↩︎

- There is some evidence that firestorms in WW2 launched at least some of their material to sufficient altitudes in the stratosphere for it to persist, although not in sufficient volumes to disrupt the climate (Mills, M. J., Toon, O. B., Lee-Taylor, J., and Robock, A. (2014), Multidecadal global cooling and unprecedented ozone loss following a regional nuclear conflict, Earth's Future, 2, doi:[10.1002/2013EF000205](https://doi.org/10.1002/2013EF000205)). However, there is some debate on the exact volumes here: sensing equipment was far simpler than what we have today, and the climate is noisy. ↩︎

- Coupe, J., Bardeen, C. G., Robock, A., & Toon, O. B. (2019). Nuclear winter responses to nuclear war between the United States and Russia in the Whole Atmosphere Community Climate Model Version 4 and the Goddard Institute for Space Studies Model E. *Journal of Geophysical Research: Atmospheres*, 124, 8522–8543. https://doi.org/10.1029/2019JD030509 ↩︎

- Coupe, J., Bardeen, C. G., Robock, A., & Toon, O. B. (2019). Nuclear winter responses to nuclear war between the United States and Russia in the Whole Atmosphere Community Climate Model Version 4 and the Goddard Institute for Space Studies Model E. *Journal of Geophysical Research: Atmospheres*, 124, 8522–8543. https://doi.org/10.1029/2019JD030509 ↩︎

- Reisner, J., D'Angelo, G., Koo, E., Even, W., Hecht, M., Hunke, E., et al. (2018). Climate impact of a regional nuclear weapons exchange: An improved assessment based on detailed source calculations. *Journal of Geophysical Research: Atmospheres*, 123, 2752–2772. https://doi.org/10.1002/2017JD027331 ↩︎

- Wagman, B. M., Lundquist, K. A., Tang, Q., Glascoe, L. G., & Bader, D. C. (2020). Examining the climate effects of a regional nuclear weapons exchange using a multiscale atmospheric modeling approach. *Journal of Geophysical Research: Atmospheres*, 125, e2020JD033056. https://doi.org/10.1029/2020JD033056 ↩︎

- Xia, L., Robock, A., Scherrer, K. *et al.* Global food insecurity and famine from reduced crop, marine fishery and livestock production due to climate disruption from nuclear war soot injection. *Nat Food* **3**, 586–596 (2022). https://doi.org/10.1038/s43016-022-00573-0 ↩︎

- Smaller exchanges are assumed to occur between countries with lower yielding weaponry ↩︎

- Able Archer was a massive joint exercise across NATO to demonstrate readiness. However, Soviet doctrine called for doing such fake exercises as a pretext for mobilization before a surprise invasion, and the exercise created serious panic within the Soviet Union and even calls for pre-emptive nuclear attacks. Russia tried a similar strategy of fake exercises prior to their invasion of Ukraine, which failed miserably to achieve much deception outside of their own troops and George Galloway. ↩︎

- Including the 1983 false alarm that Stanislav Petrov prevented from turning from a system bug into a full nuclear war. He is a man who should be canonized for doing the right thing, even at the cost of his career and against all orders. ↩︎

- Fred Kaplan has a great summary of these dynamics in his [Asterisk article](https://asteriskmag.com/issues/01/the-illogic-of-nuclear-escalation), which again is very readable. ↩︎

- This is currently under threat due to rising tensions surrounding the Russian invasion of Ukraine, which is really not great. ↩︎

- Even launched missiles are now vulnerable due to the missile defense systems in play, and while it is unknown how the strategic systems will perform, the Patriot PAC-3 missile has achieved several interceptions of the Russian Kinzhal hypersonic missile over Ukraine. The Russians and Chinese stated this was impossible prior to it occurring, which must have come as a shock when it did. The Chinese also seem to be stating it is impossible afterwards, and declined to view the wreckage when offered, possibly because they have pirated the missile technology from Russia. Many missiles will get through, but rising levels of missile defense shift the balance of deterrence, which is not certain to be a good thing. ↩︎

- For a long time in the early Cold War, the United States had only a single plan within the SIOP (Single Integrated Operational Plan), because of the expectation that any nuclear war would be all-out, because the more you fire at them the less comes back at you, and because it was computationally difficult to prepare multiple plans every year. This plan called for the utter elimination of the Soviet Union, all of the Warsaw Pact states under Soviet control and all of communist China as well, and had far more than enough weapons assigned to do so on the “go” command. See “The Doomsday Machine” by Daniel Ellsberg for more details. ↩︎

- What are commonly thought of as counterforce targets are often located within cities themselves, or close by, and there is no clear division between counterforce and countervalue in much military planning, contrary to what seems to be the understanding in some circles. Instead, the United States at least specifies that all nuclear weapons would be targeted at military sites (conventional and nuclear) as well as command and control infrastructure and “war supporting industries”. However, these are often in and around key cities, literally in the middle of them in some cases, guaranteeing huge numbers of civilian casualties. Jeffrey Lewis discussed this in his [80,000 Hours interview](https://80000hours.org/podcast/episodes/jeffrey-lewis-common-misconceptions-about-nuclear-weapons/), and it was one thing he highlighted as a failure of understanding in the EA community, which I have been guilty of too. Meanwhile, it is not known exactly how Russia would target their weapons, or whether they would show any restraint. ↩︎

- Better models were a key driver behind the revising down of the initial 1983 estimates that suggested that large sections of land across the northern hemisphere could physically freeze. These reasonable revisions, however, are sometimes taken as disproving the theory itself, which is not the case. ↩︎

- If you want a more detailed 3rd party summary, see: G. D. Hess (2021) The Impact of a Regional Nuclear Conflict between India and Pakistan: Two Views, Journal for Peace and Nuclear Disarmament, 4:sup1, 163-175, DOI: 10.1080/25751654.2021.1882772 ↩︎

- They calculate an e-folding time of around 3 years for soot that is injected into the stratosphere and above. That’s the time taken for the material to fall to 1/e of its initial amount, or around 37%. In turn, that suggests a half-life of around 2 years. ↩︎

- The relevant quote from the [paper summary](https://agupubs.onlinelibrary.wiley.com/doi/10.1029/2020JD033056): “If the fuel loading is 5 g cm<sup>−2</sup>, our simulations show that fires would generate sufficient surface heat flux to inject BC into the upper troposphere, where it self-lofts into the stratosphere. If fuel loading exceeds 10 g cm<sup>−2</sup>, a fraction of BC is directly injected into the stratosphere. Using spatially varying instantaneous meteorology for individual detonation sites and 16 g cm<sup>−2</sup> fuel loading, almost 40% of the BC is injected directly into the stratosphere. However, we show that direct injection of BC into the stratosphere makes little difference to the climate impact relative to upper tropospheric BC injections.” ↩︎

- 0.8 (the Rutgers estimate of soot injection) / (0.196 (the Los Alamos stratospheric soot) / 3.158 (the Los Alamos total soot)) ↩︎

- Rodden, R. M., John, F. I., & Laurino, R. (1965). Exploratory analysis of fire storms, OCD Subtask NC 2536D. ↩︎

- Toon, O. B., Turco, R. P., Robock, A., Bardeen, C., Oman, L., and Stenchikov, G. L.: Atmospheric effects and societal consequences of regional scale nuclear conflicts and acts of individual nuclear terrorism, Atmos. Chem. Phys., 7, 1973–2002, https://doi.org/10.5194/acp-7-1973-2007, 2007. ↩︎

- The smallest currently deployed US strategic weapon is the [W-76](https://en.wikipedia.org/wiki/W76), at around 100 kt. We showed that on the map above. The largest is the [B-83](https://en.wikipedia.org/wiki/B83_nuclear_bomb), at around 1.2 megatonnes, nearly 100 times as powerful as the Hiroshima bomb. ↩︎
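The arithmetic in the footnotes on soot lifetime and on the Rutgers/Los Alamos comparison can be checked with a short sketch. It assumes simple exponential (first-order) removal of stratospheric soot, which is what an e-folding time implies, and it reads the Rutgers value of 0.8 as the fraction of emitted soot reaching the stratosphere; the function and variable names are illustrative only.

```python
import math

def half_life(e_folding_years: float) -> float:
    """Half-life of a quantity decaying exponentially with the given e-folding time."""
    # exp(-t / tau) = 1/2  =>  t = tau * ln 2
    return e_folding_years * math.log(2)

def fraction_remaining(t_years: float, e_folding_years: float) -> float:
    """Fraction of the injected soot still aloft after t years (pure exponential removal)."""
    return math.exp(-t_years / e_folding_years)

# e-folding time of stratospheric soot quoted in the footnote (~3 years)
TAU = 3.0
print(f"half-life: {half_life(TAU):.2f} years")
print(f"left after one e-folding time: {fraction_remaining(TAU, TAU):.0%}")

# Ratio of stratospheric soot fractions, using the footnote's figures
rutgers_fraction = 0.8               # assumption: fraction of emitted soot reaching the stratosphere
los_alamos_fraction = 0.196 / 3.158  # Los Alamos stratospheric soot / total soot
ratio = rutgers_fraction / los_alamos_fraction
print(f"Los Alamos stratospheric fraction: {los_alamos_fraction:.1%}")
print(f"Rutgers / Los Alamos: {ratio:.1f}x")
```

With these inputs the half-life comes out at about 2.1 years and the ratio at roughly 13; both numbers depend entirely on the footnotes' figures.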

I'm curating this post. I also highly recommend reading the post that I interpret this post as being in conversation with, by @bean.

These posts, along with the conversation in the comments, are what actually trying on one of the key cruxes of nuclear security cause prioritization looks like (at least from my non-expert vantage point).

Agreed, JP! For reference, following up on Bean's and Mike's posts, I have also done my own in-depth analysis of nuclear winter (with more conversation in the comments too!).

I want to second the skepticism towards modelling. I have myself gone pretty deep into including atmospheric physics in Computational Fluid Dynamics models and have seen how finicky these models are. Without statistically significant ground truth data, I have not seen anyone beat the simplest non-advanced models (and I have seen many failed attempts in my time deploying these models commercially in wind energy). There are so many parameters to tweak, and so much uncertainty about how the atmosphere actually works on scales smaller than around 1 km, that I would be very careful about relying on modelling. Even small-scale tests are unlikely to be useful, as they do not capture the intricate dynamics of the actual, full-scale and temporally dynamic atmosphere.

Hi Ulrik,

I would agree with you there in large part, but I don't think that should necessarily reduce our estimate of the impact away from what I estimated above.

For example, the Los Alamos team did far more detailed fire modelling than Rutgers, but the end result is a model that seems unable to replicate real fire conditions in situations like Hiroshima, Dresden and Hamburg, so more detailed modeling isn't in itself a guarantee of accuracy.

However, the models we have are basing their estimates at least in part on empirical observations, which potentially give us enough cause for concern:

- Soot can be lofted in firestorm plumes, for example at Hiroshima.

- Materials like SO2 in the atmosphere from volcanoes can be observed to disrupt the climate, and there is no reason to expect that this is different for soot.

- Materials in the atmosphere can persist for years, though the impact takes time to arrive due to inertia and will diminish over time.

The complexities of modeling you highlight raise the uncertainties around everything above, but they do not disprove nuclear winter. The complexities also seem to raise more uncertainty for Los Alamos and the more skeptical side, who rely heavily on modeling, than for Rutgers, who use modeling only where they cannot use an empirical heuristic like the conditions of past firestorms.

FWIW, Los Alamos claims they replicated the Hiroshima and Berkeley Hills fire smoke plumes with their fire models to within 1 km of plume height. It's pretty far into the presentation, though, and most of their sessions are not public, so I can hardly blame anyone for not encountering this.

I have never worked with fire plume models nor looked at that presentation, but I have done some of the most advanced work on understanding wind conditions at the 2-100 km scale. What I know from that (probably quite similar) work is that these types of models have so many parameters that can be tweaked to change the output, and that, unfortunately, the practice is often to keep re-running models while tweaking until the output looks like the experimental results. I am not saying this happened here; I am just encouraging anyone looking into this to pay close attention to it, especially if making important decisions. If they do not explicitly and clearly say that the model results come from a first-try, "no tweak" run, I would assume they have done tweaking and would consider the results not of sufficient quality to support conclusions.

What we did in the wind speed work I was involved in was to look at performance on statistically significant numbers of never-seen-before cases, really sitting on our hands and avoiding the temptation to re-run the simulations with more "realistic" model settings.
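The practice described here, tuning once and then scoring a model only on cases it has never seen, can be sketched as below. The one-parameter model, the synthetic data and all names are hypothetical stand-ins for a real simulation and real observations; the point is only that the reported error comes from held-out cases, and the parameter is not re-tuned after looking at them.

```python
import random
import statistics

def model(x: float, k: float) -> float:
    """Hypothetical one-parameter model standing in for a tunable simulation."""
    return k * x

def tune(cases: list[tuple[float, float]]) -> float:
    """Least-squares fit of k on the calibration cases only: k = sum(x*y) / sum(x*x)."""
    return sum(x * y for x, y in cases) / sum(x * x for x, _ in cases)

def mean_abs_error(cases: list[tuple[float, float]], k: float) -> float:
    return statistics.mean(abs(model(x, k) - y) for x, y in cases)

# Synthetic "observations": true slope 2.0 plus Gaussian noise (illustrative only).
rng = random.Random(0)
xs = [rng.uniform(1, 10) for _ in range(40)]
cases = [(x, 2.0 * x + rng.gauss(0, 1)) for x in xs]
calibration, held_out = cases[:20], cases[20:]

k = tune(calibration)  # tuned once on calibration data, then frozen
print(f"k = {k:.2f}")
print(f"calibration error: {mean_abs_error(calibration, k):.2f}")
print(f"held-out error:    {mean_abs_error(held_out, k):.2f}  <- the number to report")
```

Re-running `tune` after seeing the held-out error would leak those cases into the calibration and make the reported error optimistic, which is the temptation the comment warns against.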

Hi Mike, in similar fashion to my other comment, I think in my pursuit of brevity I failed to underline how important I think it is to guard against nuclear war.

I absolutely do not think models' shortcomings disprove nuclear winter. Instead, as you say, the lack of trust in modeling just increases the uncertainty, including of something much worse than what modelling shows. Thanks for letting me clarify!

(And the mantra of "more detailed models mean better accuracy" is one I have seen touted first-hand, but with really little to show for it. In the models we dealt with, which covered about 30 km x 30 km x 5 km at a resolution of 20-200 m, it was the choice of which details to include that drove most of the impact.)

Great post, Mike!

It sounds like the above argues convincingly for firestorms, but not necessarily for significant soot ejections into the stratosphere given firestorms? Relatedly, from the Key Points of Robock 2019:

Hi Vasco

I'm not sure I follow this argument: almost all of the above were serious fires but not firestorms, meaning that they would not be expected to inject soot effectively. We did not see 100+ firestorms in WW2, and the firestorms we did see would not have been expected to generate a signal strong enough to be clearly distinguished from background climate noise. That section was simply discussing firestorms, and that they seem to present a channel to stratospheric soot?

Later on in the article I do discuss this, with both Rutgers and Lawrence Livermore highlighting that firestorms would inject a LOT more soot into the stratosphere as a percentage of total emitted.

Thanks for the reply!

Agreed. I pointed to Robock 2019 not to offer a counterargument, but to illustrate that validating the models based on historical evidence is hard.

I think the estimates for the soot ejected into the stratosphere per emitted soot are:

I am not sure Lawrence Livermore estimates the soot ejected into the stratosphere per emitted soot. From Wagman 2020, "BC [black carbon, i.e. soot] removal is not modeled in WRF, so 5 Tg BC is emitted from the fire in WRF and remains in the atmosphere throughout the simulation". It seems it only models the concentration of soot as a function of various types of injection (described in Table 2). I may be missing something.

So basically, no matter how much fuel Los Alamos puts in, they cannot reproduce the firestorms that were observed in World War II. I think this is a red flag for their model (but in fairness, combustion is really difficult to model; I've only done computational fluid dynamics modeling, and combustion is orders of magnitude more complex).

Thanks for commenting, David!

For the high fuel load of 72.62 g/cm^2, Reisner 2019 obtains a firestorm:

So, at least according to Reisner, firestorms are not sufficient to result in a significant soot ejection into the stratosphere. Based on this, as I commented above:

For reference, this is what Reisner 2018 says about modelling combustion (emphasis mine):

So my understanding is that they:

Minor correction to my last comment. I meant:

I feel there are a few things here:

My point from the article is that:

Thanks for clarifying!

Which observations do we have? I did not find the source for the 0.02 Tg emitted in Hiroshima you mentioned. I suspect it was estimated assuming a certain fuel load and burned area, which would arguably not count as an observation.

I cannot read how much soot is in the photo, so I do not know whether it is evidence for/against the 72.62 g/cm^2 simulation of Reisner 2019.

I very much agree it is a threat in expectation. On the other hand, I think it is plausible that Los Alamos is roughly correct in all of their modeling, and it all roughly holds for larger exchanges, so I would not be surprised if the climate shock was quite small even then. I welcome further research.

Thanks for the correction. Unfortunately, there is no scale on their figure, but I'm pretty sure the smoke would be going into the upper troposphere (like Livermore finds). Los Alamos only simulates for a few hours, so it makes sense that hardly any would have gotten to the stratosphere. Typically it takes days to loft to the stratosphere. So I think that would resolve the order of magnitude disagreement on the percentage of soot making it into the stratosphere for a firestorm.

I think the short run time could also explain the strange behavior of only a small percent of the material burning at high loading (only ~10%). This was because the oxygen could only penetrate to the outer ring, but if they had run the model longer, most of the fuel would have eventually been consumed. Furthermore, I think a lot of smoke could be produced even without oxygen via pyrolysis because of the high temperatures, but I don't think they model that.

Los Alamos 2019: "We contend that these concrete buildings will not burn readily during a fire and are easily destroyed by the blast wave—significantly reducing the probability of a firestorm."

According to this, 20 psi blast is required to destroy heavily built concrete buildings, and that does not even occur on the surface for an airburst detonation of 1 Mt (if optimized to destroy residential buildings). The 5 psi destroys residential buildings that are typically wood framed. And it is true that the 5 psi radius is similar to the burn radius for a 15 kt weapon. But knocking down wooden buildings doesn't prevent them from burning. So I don't think Los Alamos' logic is correct even for 15 kt, let alone 400 kt where the burn radius would be much larger than even the residential blast destruction radius (Mike's diagram above).
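A rough sketch of why the gap between burn radius and blast radius widens with yield. It assumes standard cube-root scaling for the radius at a fixed overpressure (same scaled burst height) and, for thermal effects, an idealized inverse-square fall-off of thermal fluence with no atmospheric attenuation, which overstates the thermal exponent somewhat. Only ratios relative to the 15 kt case are computed, so no specific radii are assumed; the function names are illustrative.

```python
def blast_radius_ratio(yield_kt: float, reference_kt: float) -> float:
    """Radius to a fixed overpressure (e.g. 5 psi) scales as yield**(1/3)."""
    return (yield_kt / reference_kt) ** (1 / 3)

def thermal_radius_ratio(yield_kt: float, reference_kt: float) -> float:
    """Idealized: fluence ~ yield / r**2, so the radius to a fixed fluence scales as yield**(1/2).
    Ignores atmospheric attenuation, which lowers the real exponent."""
    return (yield_kt / reference_kt) ** 0.5

for y in (15, 100, 400):
    print(f"{y:>4} kt: blast radius x{blast_radius_ratio(y, 15):.2f}, "
          f"thermal radius x{thermal_radius_ratio(y, 15):.2f}")
```

Under these assumptions, going from 15 kt to 400 kt roughly triples the 5 psi radius while the idealized thermal radius grows about fivefold, in line with the comment's point that the burn radius outgrows the residential blast destruction radius at higher yields.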

Los Alamos 2018: "Fire propagation in the model occurs primarily via convective heat transfer and spotting ignition due to firebrands, and the spotting ignition model employs relatively high ignition probabilities as another worst case condition."

I think they ignore secondary ignition, e.g. from broken natural gas lines or the spread of existing heating and cooking fires. The latter was all that was required for the San Francisco earthquake firestorm, so I don't think this could be described as "worst case."

Actually, I think they only simulate the fires, and therefore soot production, for 40 min:

So you may well have a good point. I do not know whether it would be a difference by a factor of 10. Figure 6 of Reisner 2018 may be helpful to figure that out, as it contains soot concentration as a function of height after 20 and 40 min of simulation:

Do the green and orange curves look like they are closely approaching stationary state?

In that slower burn case, would the fuel continue to be consumed in a firestorm regime (which is relevant for the climatic impact)? It looks like the answer is no for the simulation of Reisner 2018:

For the oxygen to penetrate, I assume the inner and outer radii describing the region on fire would have to be closer, but that would decrease the chance of the firestorm continuing. From Reisner 2019:

Reisner 2019 also argues most soot is produced in a short time (emphasis mine):

I do not think they model pyrolysis. Do you have a sense of how large the area with sufficiently high temperature and low oxygen for pyrolysis to occur would be, and whether pyrolysis is an efficient way of producing soot?

Good point! It is not an absolute worst case. On the other hand, they have more worst case conditions (emphasis mine):