Content warning: this post is mostly vibes. It was largely inspired by Otherness & Control in the age of AGI. Thanks to Toby Jolly and Noah Siegel for feedback on drafts.

The spiritual goal of AI safety is intent alignment: building systems that have the same goals as their human operators. Most safety-branded work these days is not directly trying to solve this,[1] but a commonly stated motivation is to ensure that the first AGI is intent-aligned. Below I’ll use “aligned” to mean intent-aligned.

I think alignment isn’t the best spiritual goal for AI safety, because while it arguably solves some potential problems with AGI (e.g. preventing paperclip doom), it could exacerbate others (e.g. turbocharging power concentration).

I’d like to suggest a better spiritual goal: restrained AGI. I say an agent is restrained if it does not pursue scope-sensitive goals[2] (terminal or instrumental). Of course, this may involve hard technical problems,[3] but it’s not clear to me whether these are more or less difficult than alignment. And you may shout, “Restrained AIs won’t be competitive!” But is restraint a bigger hindrance than the alignment tax?[4]

Let me flesh out my claim a bit more. Here are some bad things that can happen because of AGI:

- AI takeover

- AI-powered human takeover or power concentration

- AI-powered malevolence and fanaticism

- Value lock-in preventing the emergence of better value systems in the future

- Instability from 100 years of technological progress happening in 5

- The Waluigi effect

While alignment might prevent some of these, it could exacerbate others. In contrast, I claim that restraint reduces the probability & severity of all of them.

My central argument: I’m scared of a single set of values dominating the lightcone,[5] regardless of what those values are. Since value is fragile, these values probably won’t be even partially what you want. And given the complexity of the future, it is unlikely that your values will be the ones to fill the lightcone. And even if you get lucky, how confident are you that these values deserve to dominate the future? Restraint aims to prevent such domination.

Two worlds

To illustrate why I’m more excited about restraint than alignment, let’s imagine two cartoonishly simple worlds. In the “aligned world”, we have solved intent alignment, but we allow AGI to have arbitrarily scope-sensitive goals. In the “restrained world”, we haven’t solved intent alignment, but no scope-sensitive AGI is allowed to exist. I’ll discuss some possible problems in the two worlds, and you can make up your own mind which world you would prefer.

I’m going to make a whole bunch of simplifications;[6] I hope you can extrapolate these stories to a more realistic future of your choice. I’m also going to use the language of the traditional Yudkowsky/Bostrom-esque framing of AI risk.[7] I don’t claim that this framing describes well what will actually happen, but it sure makes my claim easier to present, and I think it’s fair to use this frame given that alignment has largely been motivated within it.

The aligned world

One set of values will dominate the lightcone

Because of instrumental convergence, a singleton will likely form. A single set of values dominates the lightcone. Systems whose goals are more scope-sensitive are more likely to take over, so the singleton will most likely have scope-sensitive goals.

You won’t like those values unless they overlap heavily with yours. Since the singleton is scope-sensitive, it will optimise hard. The hard optimisation will cause these ambitions to decorrelate from other goals that may naively seem to overlap. Your values might be fragile, so if the singleton is misaligned with even a tiny component of your values, the future will be garbage to you. You’ll either win the lightcone or you’ll lose it.

They won’t be your values

I think it’s unlikely that the dominant values will overlap with yours enough to avoid decorrelating from them. Let’s brainstorm some vignettes of how things go:

Vignette 1: Nerd Values

The first AGI (which forms a singleton) is given goals that are hastily decided by a small team of engineers and executives at DeepMindSeek. Perhaps they tune the AGI’s goals to their personal gain, or they try to implement their favourite moral theory or their best guess at the aggregate of human values.

How do you feel about this one? What are the chances that your preferences get included in the engineers’ considerations? Perhaps their goals overlap largely with yours, but will they overlap enough?

Joe Carlsmith points out that the story of “tails coming apart” may apply just as much to the comparison between humans and AIs as to the comparison between humans and humans. If the nerd values are optimised hard enough, they may decorrelate from yours. DeepMindSeek may be “paperclippers with respect to you”.

Vignette 2: Narrow Human Values

AGI empowers a person or group to grab the world and organise it to meet their own selfish needs.

I assume you wouldn’t be happy with this.

Vignette 3: Inclusive Human Values

A coordinated international effort results in some massive democratic process (for example) that builds an aggregated set of values shared by all humans. This aggregate is voluntarily loaded into the first AGI, which forms a singleton.

First of all, this seems unlikely to me. Secondly, are human values actually a thing? A static thing that you can align something to? Seems unclear to me. Can we really find a single set of norms that all humans are happy with?

Vignette 4: Ruthless Values

For-profit companies build a number of AGI systems with goals aligned to the will of their customers. The systems that gain the most influence will be those with the broadest scope of goals that are ruthless enough not to let moral, legal or societal side constraints get in their way. In the limit, an amoral set of goals captures the lightcone.

Perhaps you get extremely lucky and have control of the ruthless AGI that captures the lightcone. Yay for you, you have won the singularity lottery.[8]

The singularity lottery is an infinite brittle gamble. There is an infinite payoff but a minuscule chance of winning. You will almost certainly lose, whether to other humans or to unaligned AI. In my view, the more robust strategy is to not buy a lottery ticket at all, and instead to prevent any single agent from grabbing the lightcone.

But let’s say you’re a risk-taker. Let’s say you’re willing to buy a ticket for the possibility of tiling the future with your preferences. Now try to imagine that actually happens, and introspect on it. Is this actually what you want?

Do you even want your values to fill the lightcone?

Hanson and Carlsmith have both expressed discomfort with being overly controlling of the future. One way Hanson cashes out this discomfort is:

“Aren’t we glad that the ancient Greeks didn’t try to divert the future to replace us with people more like them?”

How much steering of the long-term future do we have a right to do? This question is a whole can of worms, and I feel pretty confused about how we should balance our yang (steering the future to prevent horrible outcomes by our lights) with our yin (letting things play out to allow future beings and civilisations to choose their own path).[9]

I think it’s reasonable to say that an extreme amount of moral caution is needed if you were to decide today how to spend the cosmic endowment. I think this is still true if you planned to give the AGI more abstract values, such as second-order values that could keep it open to some degree of moral evolution[10] or allow it to work out for itself what is right.[11] How flexible should these values be? What, if anything, should we lock in? Are we anywhere near ready to make these kinds of decisions?

How confident are you that the decisions you make would have results that are both morally good by your own lights and morally good from some more impartial, moral-realism-esque vantage point? I’m confused enough about all this that there’s no way you’d catch me vying to tile the universe with my favourite computation. I don’t think anyone, today, should be confident enough to do that.

Enshrining your values could lead to the opposite of your values

There is a concerning pattern that shows up when thinking about agency: if an agent exists with a particular goal x, this increases the probability of -x being fulfilled.

The first (silly) example is sign flips: a cosmic ray flips the bit storing the sign of an AI’s utility function, and the AI goes on to maximise the opposite of its intended utility function. A more realistic example is the Waluigi effect: LLMs have a tendency to behave opposite to how they are intended, via a number of related mechanisms.

If you win the singularity lottery, you’ll have a super powerful agent with your desires enshrined into it. Probably you’ll get what you want, but you might just get the opposite of what you want. This makes buying a lottery ticket even more risky. You’re not just risking death to get heaven, you’re risking hell to get heaven.

The restrained world

In this world, we have not solved alignment (or maybe it’s solved to some degree), but a concerted effort has successfully prevented the deployment of AGI systems with scope-sensitive terminal and instrumental goals.

In this world, takeover, singletons and lock-in are less likely. AGIs have less incentive to achieve a decisive strategic advantage, because taking over would not help them much in achieving their goals.

A multipolar world is more likely, constituting a more pluralistic future. Values are less likely to lock in. Values can evolve in the same way that values have always evolved. We don’t need to solve any difficult philosophical problems in deciding the right way for the future to go. As Hanson argues, moral progress so far has been driven less by human reasoning and more by a complex process of cultural evolution.

“Me, I see reason as having had only a modest influence on past changes in human values and styles, and expect that situation to continue into the future. Even without future AI, I expect change to accelerate, and humans values and styles to eventually change greatly, without being much limited by some unmodifiable “human core”. And I see law and competition, not human partiality, as the main forces keeping peace among humans and super-intelligent orgs.”

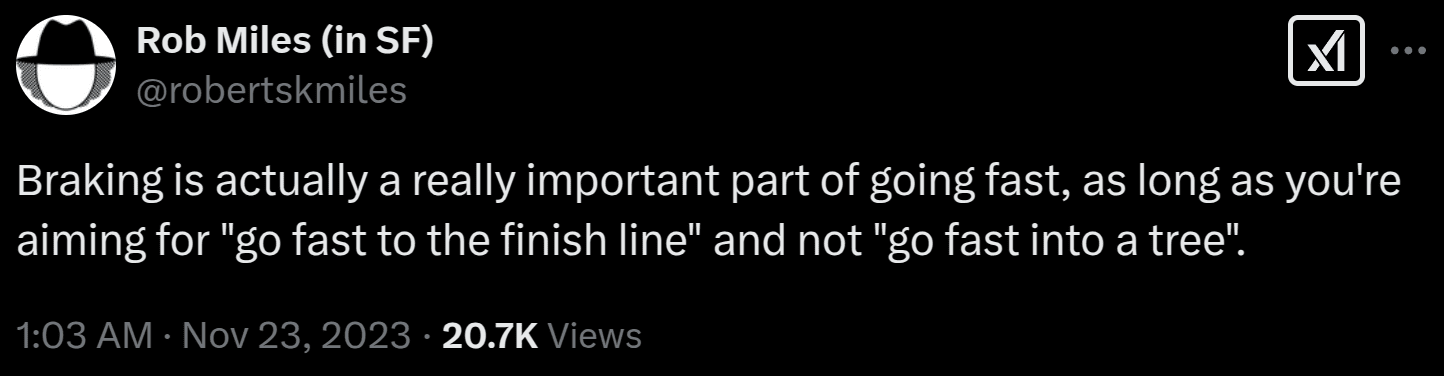

Technological advancement will probably move slower than in the aligned world because AI systems optimize less sweepingly.[12] But, as many in the AI safety movement (and especially PauseAI) would agree, a slow AI revolution seems safer than a fast one.

Could restrained AI defend against unrestrained AI?

A world with only restrained AGI seems better to me than a world with only aligned AGI. But what if only some AGI systems are restrained? Would a restrained world be vulnerable to a careless actor creating an unrestrained agent that grabs a decisive strategic advantage?

The aligned world has a similar problem (or rather, getting to the aligned world has a similar problem). Even if you made sure the first AGI is aligned, would it be able to stop a new unaligned AGI from taking over? A common response is something like, “If the first n AGIs are aligned, they will be collectively powerful enough to prevent any new unaligned AGI from grabbing a decisive strategic advantage”.

We could use a similar argument for the restrained world: “If the first m AGIs are restrained, and disvalue being dominated by an unrestrained AGI enough, they could prevent an unrestrained AGI from grabbing a decisive strategic advantage”.[13]

Conclusion

These have been two cartoonishly simple worlds, but I think they get across a more abstract point.

Yudkowsky views AI risk as a winner-takes-all fight between values. On this view, something is going to rule the whole universe, and you need to make sure it’s your values that are loaded into it. My view is that we should not play this game, because it is almost impossible to win (given this framing, Yudkowsky seems to agree!). To opt out of the game, we need a different framing of AI risk. I want a future with values not decided by any single agent, but instead collectively discovered.

[1] For example, mechanistic interpretability or AI governance.

[2] I use the phrase “scope-sensitive” as a generalisation of scope-sensitive ethics to whatever goals or motivations an agent can have. Examples of scope-sensitive goals include: maximising paperclips, maximising profit, achieving moral good according to classical utilitarianism, or spreading your values across the stars. Examples of scope-insensitive goals include: finding a cancer cure, being virtuous, preventing wars, solving the strawberry problem.

[3] The main problem is avoiding instrumental convergence. Work so far in this direction includes satisficers, soft optimisation, corrigibility and low-impact agents. Approaches like AI control could also help towards restrained AGI, since they could prevent the AI from pursuing scope-sensitive goals.

[4] Not to mention that most humans don’t have scope-sensitive goals either (at least not sensitive at the “taking over the world” scope), so you’ll still have plenty of people to sell your AI system to.

[5] I’m using “lightcone” as shorthand for “the foreseeable future”. I don’t think any actions 21st-century humans take will have an effect on the entire lightcone.

[6] The two worlds are two extremes; the real way things go will be some complicated combination of them. I’ll assume AI agents have well-defined and static goals. I’ll also conflate goals and values. I’m also going to take a number of core rationalist beliefs for granted, since the argument for AI alignment is largely contingent on these beliefs.

[7] Singularity, singletons, instrumental convergence, decisive strategic advantage, etc.

[8] Lottery isn’t an excellent analogy, since if you don’t win, you die.

[9] See Otherness & Control in the Age of AGI for an exploration of this.

[10] E.g. the right level of liberalism.

[11] Using e.g. coherent extrapolated volition.

[12] Both because intent alignment is less solved and because AI systems are limited in the scope of their goals.

[13] Probably m > n, making this reassuring story weaker than its analogue in the aligned world. How much bigger m is than n hinges on how take-overable the world is.

Very interesting!

I'd be interested to hear a bit more about what a restrained system would be able to do.

For example, could I make two restrained AGIs, one which has the goal:

A) "create a detailed plan, plan.txt, for maximising profit"

And another which has the goal:

B) "execute the plan written in plan.txt"?

If not, I'm not clear on why "make a cure for cancer" is scope-insensitive but "write a detailed plan for [maximising goal]" is scope-sensitive.

Some more test case goals to probe the definition:

C) "make a maximal success rate cure for cancer"

D) "write a detailed plan for generating exactly $10^100 USD profit for my company"
