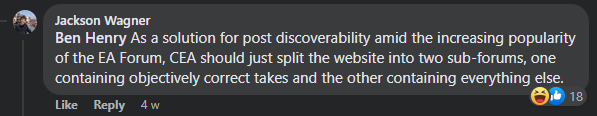

The EA Forum Team is working on building subforums. Our vision is to build more specific spaces for discussion of topics related to EA, while still allowing content to bubble up to the Frontpage.

The user problem we are thinking of targeting is: As someone who cares about X, I find it hard to find posts I’m interested in, and I don’t know if the EA Forum is a useful place for me to post ideas or keep up with X.

Would solving this be useful for you? If so, what spaces, topics, or causes would you be interested in seeing such discussion spaces for?

Note: if you've seen our current bioethics and software engineering subforums, these are not the final state of the feature; we are still designing and iterating.

(There are more arguments for why subforums would be cool, but I shouldn't be spending time elucidating them right now unless anyone's curious >.<)