Emrik

Bio

“In the day I would be reminded of those men and women,

Brave, setting up signals across vast distances,

Considering a nameless way of living, of almost unimagined values.”

How others can help me

I would greatly appreciate anonymous feedback, or just feedback in general. Doesn't have to be anonymous.

Posts 19

Comments 303

Thank you for appreciating! 🕊️

Alas, I'm unlikely to prioritize writing except when I lose control of my motivations and I can't help it.[1] But there's nothing stopping someone else from extracting what they learn from my other comments¹ ² ³ re deference and making post(s) from it, no attribution required.

(Arguably it's often more educational to learn something from somebody who's freshly gone through the process of learning it. Knowledge-of-transition can supplement knowledge-of-target-state.)

Haphazardly selected additional points on deference:

- Succinctly, the difference between Equal-Weight deference and Bayes

- "They say X. | Then I can infer that they updated from W to X by multiplying with a likelihood ratio of L. And because C and D, I can update on that likelihood ratio in order to end up with a posterior of Y. | The equal weight view would have me adjust down, whereas Bayes tells me to adjust up."

- Paradox of Expert Opinion

- "Ask the experts. They're likely the most informed on the issue. Unfortunately, they're also among the groups most heavily selected for belief in the hypothesis."
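The Equal-Weight vs Bayes contrast can be made concrete with made-up numbers. This is a minimal sketch, assuming (as stand-ins for "C and D") that I can infer their prior and that the evidence they updated on is independent of mine; the numbers and function names are hypothetical.

```python
from fractions import Fraction

def odds(p):
    """Convert a probability to odds in favour."""
    return p / (1 - p)

def prob(o):
    """Convert odds in favour back to a probability."""
    return o / (1 + o)

def equal_weight(mine, theirs):
    """Equal-weight view: split the difference with a peer."""
    return (mine + theirs) / 2

def bayes_on_their_update(mine, their_prior, their_posterior):
    """Recover the likelihood ratio they updated on, then apply it to my prior.

    Assumes (hypothetically) that their prior is known to me and that their
    evidence is independent of what I have already conditioned on.
    """
    likelihood_ratio = odds(their_posterior) / odds(their_prior)
    return prob(odds(mine) * likelihood_ratio), likelihood_ratio

mine = Fraction(8, 10)                            # my credence
their_prior, theirs = Fraction(2, 10), Fraction(6, 10)

print("equal weight:", equal_weight(mine, theirs))
posterior, lr = bayes_on_their_update(mine, their_prior, theirs)
print("likelihood ratio:", lr, "| Bayes posterior:", posterior)
```

Here their stated 0.6 is below my 0.8, so splitting the difference moves me down to 7/10. But they got to 0.6 by moving up from 0.2 (a likelihood ratio of 6), and applying that same ratio to my prior moves me up to 24/25.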

- ^

It's sort of paradoxical. As a result of my investigations into social epistemology 2 years ago, I came away with the conclusion that I ought to focus ~all my learning-efforts on trying to (recursively) improve my own cognition, with ~no consideration for my ability to teach anyone anything of what I learn. My motivation to share my ideas is an impurity that I've been trying hard to extinguish. Writing is not useless, but progress toward my goal is much faster when I automatically think in the language I construct purely to communicate with myself.

(Publishing a comment draft that's been sitting here for two years, since I thought it was good (even if super-unfinished…), and I may wish to link to it in future discussions. As always, feel free to not engage and just be awesome. Also feel free to not be awesome, since awesomeness can only be achieved by choice (thus, awesomeness may be proportional to how free you feel to not be it).)

Yes! This relates to what I call costs of compromise.

Costs of compromise

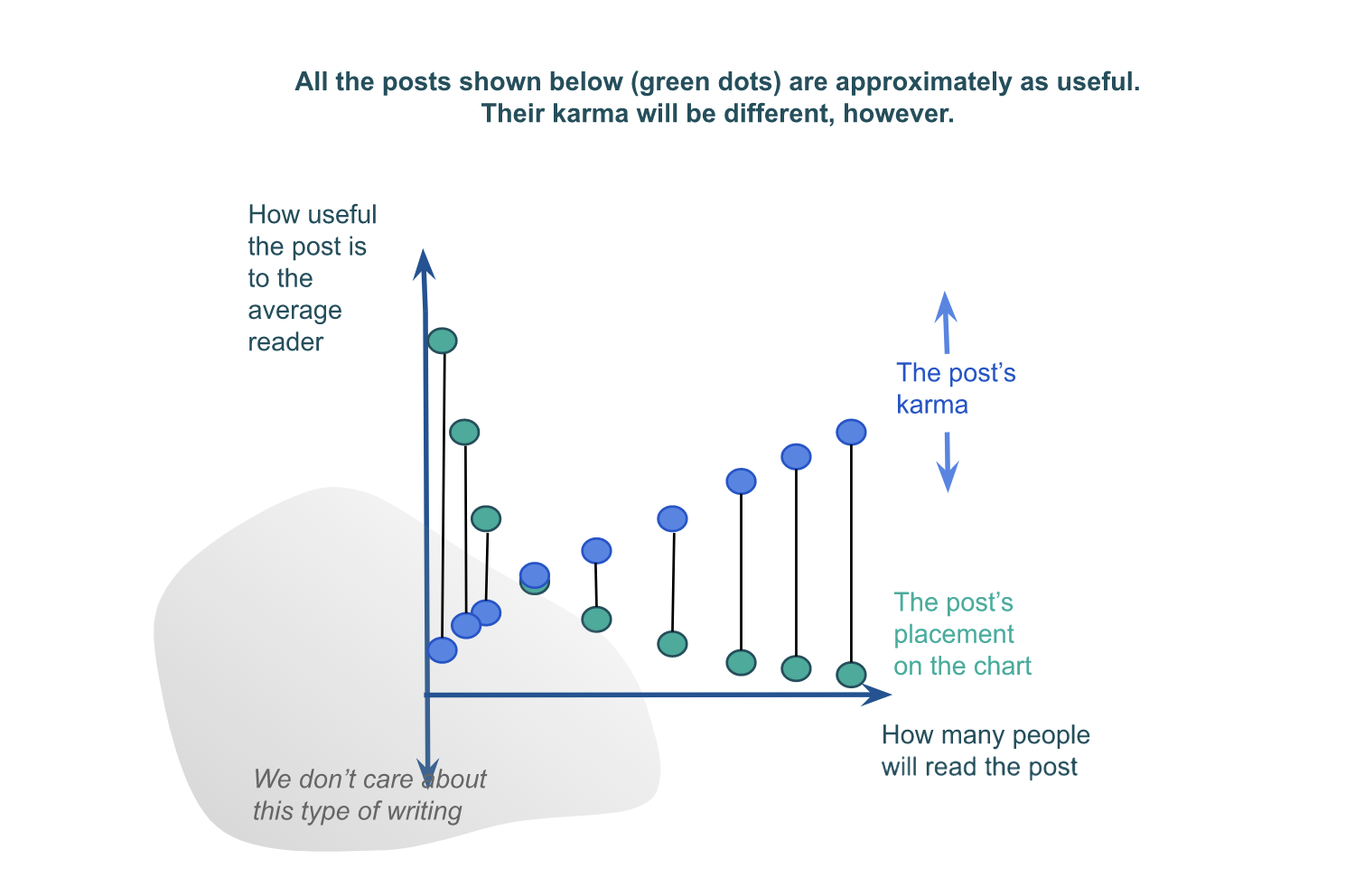

As you allude to with the exponential decay of the green dots in your last graph, there are exponential costs to compromising what you are optimizing for in order to appeal to a wider variety of interests. On the flip side, how usefwl you can expect to be to a subgroup grows exponentially with how purely you optimize for that particular subset of people (depending on how independent the optimization criteria are). This strategy is also known as "horizontal segmentation".[1]

The benefits of segmentation ought to be compared against what is plausibly an exponential decay in the number of people who fit a marginally smaller subset of optimization criteria. So it's not obvious in general whether you should on the margin try to aim more purely for a subset, or aim for broader appeal.
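The trade-off can be sketched as a toy model in which both effects are exponential in the number of criteria. The parameters below (how much of the audience survives each extra criterion, and how much more usefwl you become to those who remain) are hypothetical assumptions, not measurements.

```python
def total_value(k, population, keep_fraction, gain_per_criterion):
    """Hypothetical toy model of optimizing for k independent criteria at once.

    audience:         population * keep_fraction**k  (fewer people fit every criterion)
    value per person: gain_per_criterion**k          (more usefwl to each who does fit)
    """
    audience = population * keep_fraction ** k
    value_per_person = gain_per_criterion ** k
    return audience * value_per_person

# Made-up parameters: each extra criterion keeps half of the audience.
for gain in (1.5, 3.0):
    row = [round(total_value(k, 10_000, 0.5, gain)) for k in range(5)]
    print(f"gain {gain}: {row}")
```

Since the total is population * (keep_fraction * gain_per_criterion)**k, narrowing by one more criterion pays off in this model exactly when keep_fraction * gain_per_criterion > 1, which is why the answer isn't obvious in general.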

Specialization vs generalization

This relates to what I think is one of the main mysteries/trade-offs in optimization: specialization vs generalization. It explains why scaling your company can make it more efficient (economies of scale),[2] why the brain is modular,[3] and how Howea palm trees can speciate without the aid of geographic isolation (aka sympatric speciation constrained by genetic swamping) by optimising their gene pools for differentially-acidic patches of soil and evolving separate flowering intervals in order to avoid pollinating each other.[4]

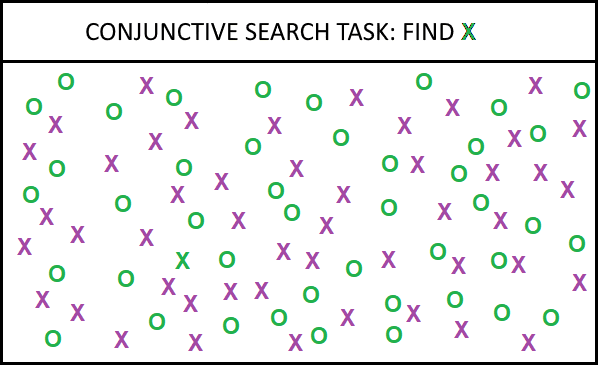

Conjunctive search

When you search for a single thing that fits two or more criteria, that's called "conjunctive search". In the image, try to find an object that's both [colour: green] and [shape: X].

My claim is that this is analogous to how your brain searches for conjunctive ideas: a vast array of preconscious ideas must be picked out from among distractors that score high on only one of the criteria.

10d6 vs 1d60

Preamble: When you throw ten 6-sided dice (written as "10d6"), the probability of getting the maximum roll is much lower than if you throw a single 60-sided die ("1d60"). But if we assume that the ten 6-sided dice are strongly correlated, that flattens the bell-shaped (roughly normal) distribution toward a uniform one, and you're much more likely to roll extreme values.

Moral: Your probability of sampling extreme values from a distribution depends on the number of variables that make it up (i.e. how many factors are convolved together) and the extent to which they are independent. Thus, costs of compromise are much steeper if you're sampling for outliers (a realm that includes most creative thinking and altruistic projects).
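The dice comparison can be checked exactly. A minimal sketch that builds the distribution of a sum by repeated convolution and treats "strongly correlated" as the extreme case of perfectly correlated dice (all ten always show the same face); the helper names are hypothetical.

```python
from fractions import Fraction

def die_pmf(sides):
    """Uniform distribution over faces 1..sides, as {face: probability}."""
    return {face: Fraction(1, sides) for face in range(1, sides + 1)}

def convolve(pmf_a, pmf_b):
    """Distribution of the sum of two independent random variables."""
    out = {}
    for a, pa in pmf_a.items():
        for b, pb in pmf_b.items():
            out[a + b] = out.get(a + b, 0) + pa * pb
    return out

def sum_of_independent(n, sides):
    """Distribution of the sum of n independent dice (n convolved factors)."""
    pmf = die_pmf(sides)
    total = pmf
    for _ in range(n - 1):
        total = convolve(total, pmf)
    return total

def perfectly_correlated(n, sides):
    """All n dice always show the same face, so the sum is n * face."""
    return {n * face: p for face, p in die_pmf(sides).items()}

def tail(pmf, threshold):
    """Probability that the outcome is at least `threshold`."""
    return sum(p for value, p in pmf.items() if value >= threshold)

independent = sum_of_independent(10, 6)
correlated = perfectly_correlated(10, 6)
single = die_pmf(60)

for name, pmf in [("10d6, independent", independent),
                  ("10d6, perfectly correlated", correlated),
                  ("1d60", single)]:
    print(f"{name:28s} P(=60) = {float(pmf.get(60, 0)):.2e}"
          f"  P(>=55) = {float(tail(pmf, 55)):.2e}")
```

Ten independent dice hit 60 with probability (1/6)^10, about 1.7e-8, while perfect correlation turns the sum into a uniform distribution over 10, 20, ..., 60, so the maximum comes up one time in six.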

Spaghetti-sauce fallacies 🍝

If you maximally optimize a single spaghetti sauce for profit, there exists a global optimum for some taste, quantity, and price. You might then declare that this is the best you can do, and indeed this is a common fallacy I will promptly give numerous examples of. [TODO…]

But if you instead allow yourself to optimize several different spaghetti sauces, each one tailored to a specific market, you can make much more profit compared to if you have to conjunctively optimize a single thing.

Thus, a spaghetti-sauce fallacy is when somebody asks "how can we optimize thing X more for criteria Y?" when they should be asking "how can we chunk/segment Y into cohesive/dimensionally-reduced segments so we can optimize for {Y₁, ..., Yₙ} disjunctively?"
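The single-sauce vs many-sauces point can be illustrated with a toy shelf. Everything here is a hypothetical assumption for illustration: customers have an ideal spiciness on a 0 to 10 scale, enjoyment falls off linearly with distance from that ideal, and each customer buys whichever sauce on the shelf suits them best.

```python
from itertools import combinations

def satisfaction(preference, sauce, tolerance=3):
    """Hypothetical: enjoyment drops linearly with distance from the ideal."""
    return max(0, tolerance - abs(preference - sauce))

def total_satisfaction(preferences, sauces):
    """Each customer buys whichever sauce on the shelf suits them best."""
    return sum(max(satisfaction(p, s) for s in sauces) for p in preferences)

def best_lineup(preferences, n_sauces, candidates=range(0, 11)):
    """Brute-force the best set of n_sauces spiciness levels on a 0-10 scale."""
    return max(combinations(candidates, n_sauces),
               key=lambda lineup: total_satisfaction(preferences, lineup))

customers = [1, 1, 2, 2, 8, 8, 9, 9]  # made-up ideal spiciness, two taste clusters

for n in (1, 2):
    lineup = best_lineup(customers, n)
    print(n, lineup, total_satisfaction(customers, lineup))
```

In this made-up market the best single sauce can only serve one cluster (total 10), while two sauces each tailored to a segment double it (total 20). Conjunctively optimizing the one sauce harder cannot close that gap.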

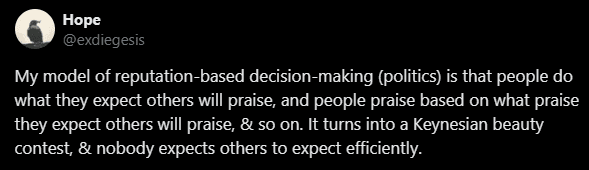

People rarely vote based on usefwlness in the first place

As a sidenote: People don't actually vote (/allocate karma) based on what they find usefwl. That's a rare case. Instead, people overwhelmingly vote based on what they (intuitively) expect others will find usefwl. This rapidly turns into a Keynesian Status Contest with many implications. Information about people's underlying preferences (or what they personally find usefwl) is lost as information cascades are amplified by recursive predictions. This explains approximately everything wrong about the social world.

Already in childhood, we learn to praise (and by extension vote) based on what kinds of praise other people will praise us for. This works so well as a general heuristic that it gets internalized and we stop being able to notice it as an underlying motivation for everything we do.

- ^

See e.g. spaghetti sauce.

- ^

Scale allows subunits (e.g. employees) to specialize at subtasks.

- ^

Every time a subunit of the brain has to pull double duty with respect to what it adapts to, the optimization criteria compete for its adaptation. This is also known as "pleiotropy" in evobio, and "polytely" in… some people called it that, and it's a good word.

- ^

This palm-tree example (like the others) is partially optimized/goodharted for seeming impressive, but I leave it in because it also happens to be deliciously interesting and possibly entertaining as an example of costs of compromise. I want to emphasize how ubiquitous this trade-off is.

Oh, this is excellent! I do a version of this, but I haven't paid enough attention to what I do to give it a name. "Blurting" is perfect.

I try to make sure to always notice my immediate reaction to something, so I can more reliably tell what my more sophisticated reasoning modules transform that reaction into. Almost all the search-process imbalances (e.g. filtered recollections, motivated stopping, etc.) come into play during the sophistication, so it's inherently risky. But refusing to reason past the blurt is equally inadvisable.

This is interesting from a predictive-processing perspective.[1] The first thing I do when I hear someone I respect tell me their opinion is to compare that statement to my prior mental model of the world. That's the fast check. If it conflicts, I aspire to mentally blurt out that reaction to myself.

It takes longer to generate an alternative mental model (i.e. sophistication) that is able to predict the world described by the other person's statement, and there's a lot more room for bias to enter via the mental equivalent of multiple comparisons. Thus, if I'm overly prone to conform, that bias will show itself after I've already blurted out "huh!" and made note of my prior. The blurt helps me avoid the failure mode of conforming and feeling like that's what I believed all along.

Blurting is a faster and more usefwl variation on writing down your predictions in advance.

- ^

Speculation. I'm not very familiar with predictive processing, but the claim seems plausible to me on alternative models as well.

I disagree a little bit with the credibility of some of the examples, and want to double-click others. But regardless, I think this is a very productive train of thought and thank you for writing it up. Interesting!

And btw, if you feel like a topic of investigation "might not fit into the EA genre", and yet you feel like it could be important based on first-principles reasoning, my guess is that that's a very important lead to pursue. Reluctance to step outside the genre, and thinking that the goal is to "do EA-like things", is exactly the kind of dynamic that's likely to lead the whole community to overlook something important.

I'm not sure. I used to call it "technical" and "testimonial evidence" before I encountered "gears-level" on LW. While evidence is just evidence and Bayesian updating stays the same, it's usefwl to distinguish between these two categories because if you have a high-trust community that frequently updates on each others' opinions, you risk information cascades and double-counting of evidence.

Information cascades develop consistently in a laboratory situation [for naively rational reasons, in which other incentives to go along with the crowd are minimized]. Some decision sequences result in reverse cascades, where initial misrepresentative signals start a chain of incorrect [but naively rational] decisions that is not broken by more representative signals received later. - (Anderson & Holt, 1998)
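The reverse-cascade dynamic in the quote can be sketched with the standard sequential-signal cascade model. This is a minimal sketch under stated assumptions: two options "A" and "B", every agent gets one equally reliable private signal, sees all earlier choices, and breaks ties in favour of their own signal. The function name and signal sequences are hypothetical.

```python
def cascade_choices(signals):
    """Sequential choices in a simplified information-cascade model.

    signals: private signals ("A" or "B"), one per agent, in decision order.
    Each agent counts the signals that earlier choices revealed, adds their own,
    and picks the better-supported option (ties go to their own signal).
    """
    inferred = {"A": 0, "B": 0}  # signals that later agents can infer from choices
    choices = []
    for signal in signals:
        lead = inferred["A"] - inferred["B"]
        if lead >= 2:
            choices.append("A")  # cascade: one private signal can't outweigh the crowd
        elif lead <= -2:
            choices.append("B")  # and the choice reveals nothing new to later agents
        else:
            # With |lead| <= 1 the own signal ties or breaks the count, and ties
            # go to the own signal, so the choice equals (and reveals) the signal.
            choices.append(signal)
            inferred[signal] += 1
    return choices

# Truth is "A", but the first two agents happen to draw misleading signals.
print(cascade_choices(["B", "B", "A", "A", "A", "A", "A", "A"]))
# → ['B', 'B', 'B', 'B', 'B', 'B', 'B', 'B']
```

Once two revealed signals agree, every later agent rationally ignores their own signal, so six correct signals never get through. This is also how a high-trust community can end up double-counting early testimony.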

Additionally, if your model of a thing has "gears", then there are multiple things about the physical world that, if you saw them change, would change your expectations about the thing.

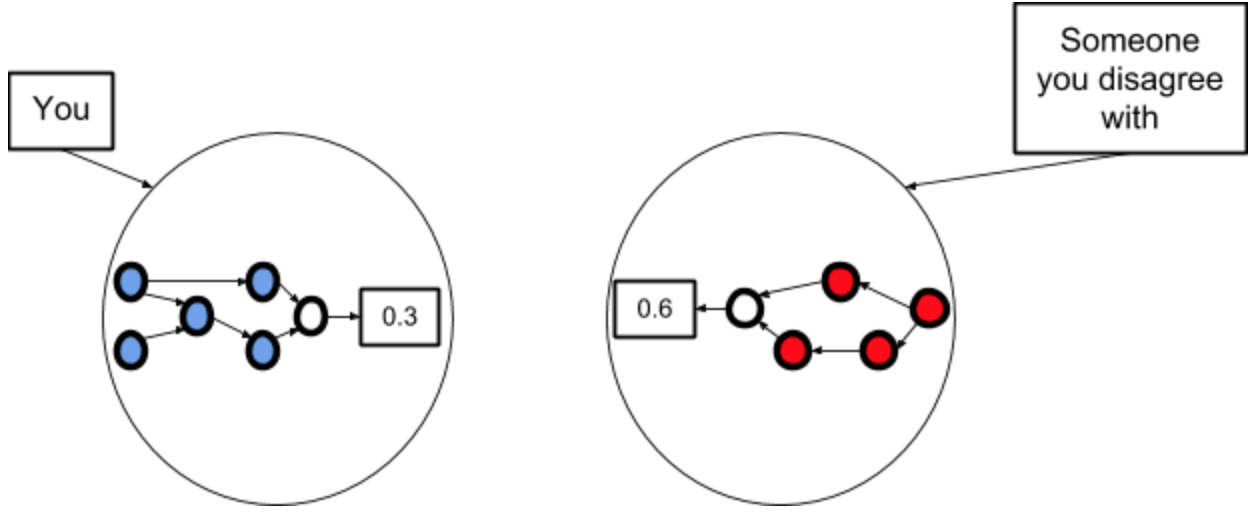

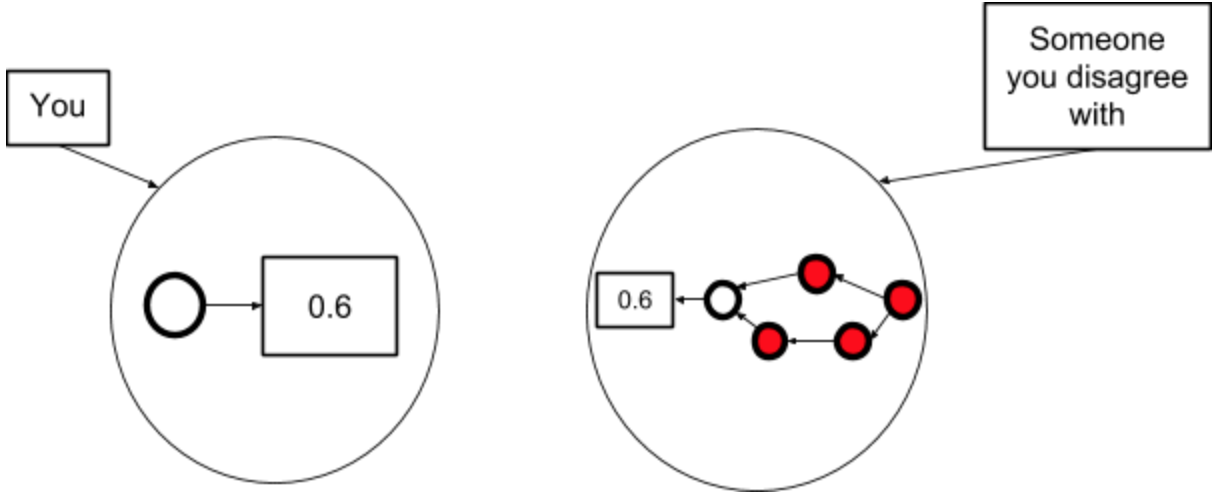

Let's say you're talking to someone you think is smarter than you. You start out with different estimates and different models that produce those estimates. From Ben Pace's A Sketch of Good Communication:

Here you can see that both blue and red have gears. And since you think their estimate is likely to be much better than yours, and you want to get some of that amazing decision-guiding power, you throw out your model and adopt their estimate (cuz you don't understand, or don't have, all the parts of their model):

Here, you have "destructively deferred" in order to arrive at your interlocutor's probability estimate. Basically zombified. You no longer have any gears, even if the accuracy of your estimate has potentially increased a little.

An alternative is to try to hold your all-things-considered estimates separate from your independent impressions (that you get from your models). But this is often hard and confusing, and they bleed into each other over time.

"When someone gives you gears-level evidence, and you update on their opinion because of that, that still constitutes deferring."

This was badly written. I just mean that updating on their opinion, as opposed to just taking the patterns & trying to adjust for the fact that you received them through filters, is updating on testimony. I'm saying nothing special here, just that you might be tricking yourself into deferring (instead of impartially evaluating patterns) by letting the gearsy arguments woozle you.

I wrote a bit about how testimonial evidence can be "filtered" in the paradox of expert opinion:

If you want to know whether string theory is true and you're not able to evaluate the technical arguments yourself, who do you go to for advice? Well, seems obvious. Ask the experts. They're likely the most informed on the issue. Unfortunately, they've also been heavily selected for belief in the hypothesis. It's unlikely they'd bother becoming string theorists in the first place unless they believed in it.

If you want to know whether God exists, who do you ask? Philosophers of religion agree: 70% accept or lean towards theism compared to 16% of all PhilPapers Survey respondents.

If you want to know whether to take transformative AI seriously, what now?
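The selection effect behind these examples is itself a small Bayes calculation. A minimal sketch with made-up entry rates (not survey data): it computes what share of a field believes a hypothesis, given how likely believers and non-believers are to enter it, and notice that whether the hypothesis is true never appears.

```python
from fractions import Fraction

def share_of_believers_in_field(base_rate, entry_if_believer, entry_if_not):
    """Hypothetical: fraction of a field's members who believe H.

    base_rate:          share of the general population that believes H
    entry_if_believer:  chance a believer becomes an expert in the field
    entry_if_not:       chance a non-believer becomes an expert in the field
    """
    believers = base_rate * entry_if_believer
    skeptics = (1 - base_rate) * entry_if_not
    return believers / (believers + skeptics)

# Made-up numbers: 20% of people believe H; believers are 10x likelier to enter.
share = share_of_believers_in_field(Fraction(1, 5), Fraction(1, 10), Fraction(1, 100))
print(share, float(share))
```

With these assumed rates, a 20% base rate becomes 5/7 (about 71%) inside the field purely through who chooses to enter, which is why expert polls need adjusting for the filter before you update on them.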

Some selected comments or posts I've written

- Taxonomy of cheats, multiplex case analysis, worst-case alignment

- "You never make decisions, you only ever decide between strategies"

- My take on deference

- Dumb

- Quick reasons for bubbliness

- Against blind updates

- The Expert's Paradox, and the Funder's Paradox

- Isthmus patterns

- Jabber loop

- Paradox of Expert Opinion

- Rampant obvious errors

- Arbital - Absorbing barrier

- "Decoy prestige"

- "prestige gradient"

- Braindump and recommendations on coordination and institutional decision-making

- Social epistemology braindump (I no longer endorse most of this, but it has patterns)

Other posts I like

- The Goddess of Everything Else - Scott Alexander

- “The Goddess of Cancer created you; once you were hers, but no longer. Throughout the long years I was picking away at her power. Through long generations of suffering I chiseled and chiseled. Now finally nothing is left of the nature with which she imbued you. She never again will hold sway over you or your loved ones. I am the Goddess of Everything Else and my powers are devious and subtle. I won you by pieces and hence you will all be my children. You are no longer driven to multiply conquer and kill by your nature. Go forth and do everything else, till the end of all ages.”

- A Forum post can be short - Lizka

- Succinctly demonstrates how often people goodhart on length or other irrelevant criteria like effort moralisation. A culture of appreciating posts for the practical value they add to you specifically would incentivise writers to pay more attention to whether they are optimising for expected usefwlness or just signalling.

- Changing the world through slack & hobbies - Steven Byrnes

- Unsurprisingly, there's a theme to what kind of posts I like. Posts that are about de-Goodharting ourselves.

- Also h/t Eliezer and Alex Lawsen for posts on the same thing.

- Hero Licensing - Eliezer Yudkowsky

- Stop apologising, just do the thing. People might ridicule you for believing in yourself, but just do the thing.

- A Sketch of Good Communication - Ben Pace

- Highlights the danger of deferring if you're trying to be an Explorer in an epistemic community.

- Holding a Program in One's Head - Paul Graham

- "A good programmer working intensively on his own code can hold it in his mind the way a mathematician holds a problem he's working on. Mathematicians don't answer questions by working them out on paper the way schoolchildren are taught to. They do more in their heads: they try to understand a problem space well enough that they can walk around it the way you can walk around the memory of the house you grew up in. At its best programming is the same. You hold the whole program in your head, and you can manipulate it at will.

That's particularly valuable at the start of a project, because initially the most important thing is to be able to change what you're doing. Not just to solve the problem in a different way, but to change the problem you're solving."

Principles are great! I call them "stone-tips". My latest one is:

Look out for wumps and woozles!

It's one of my favorites. ^^ It basically very-sorta translates to bikeshedding (idionym: "margin-fuzzing"), procrastination paradox (idionym: "marginal-choice trap" + attention selection history + LDT), and information cascades / short-circuits / double-counting of evidence… but a lot gets lost in translation. Especially the cuteness.

The stone-tip closest to my heart, however, is:

I wanna help others, but like a lot for real!

I think EA is basically sorta that… but a lot gets confusing in implementation.

I appreciate the point, but I also think EA is unique among morality-movements. We hold to principles like rationality, cause prioritization, and asymmetric weapons, so I think it'd be prudent to exclude people if their behaviour overly threatens to loosen our commitment to them.

And I say this as an insect-mindfwl vegan who consistently and harshly denounces animal experimentation whenever I write about neuroscience[1] (frequently) in otherwise neutral contexts. Especially in neutral contexts.

- ^

Evil experiments are ubiquitous in neuroscience btw.

My latest tragic belief is that in order to improve my ability to think (so as to help others more competently) I ought to gradually isolate myself from all sources of misaligned social motivation. And that nearly all my social motivation is misaligned relative to the motivations I can (learn to) generate within myself. So I aim to extinguish all communication before the year ends (with the exception of Maria).

I'm posting this comment in order to redirect some of this social motivation into the project of isolation itself. Well, that, plus I notice that part of my motivation comes from wanting to realify an interesting narrative about myself; and partly in order to publicify an excuse for why I've ceased (and aim to cease more) writing/communicating.