Editorial note

This report is a “shallow” investigation, as described here, and was commissioned by Open Philanthropy and produced by Rethink Priorities. Open Philanthropy does not necessarily endorse our conclusions.

The primary focus of the report is a literature review of the effectiveness of prizes in spurring innovation and what design features of prizes are most effective in doing so. We also spoke to one expert. We mainly focused on large inducement prizes (i.e. prizes that define award criteria in advance to spur innovation towards a pre-defined goal). However, as there is relatively little published literature on this type of prize, we also include and discuss the evidence we found on other types of prizes (such as recognition prizes) and related concepts (such as advance market commitments and Grand Challenges).

We don’t intend this report to be Rethink Priorities’ final word on prizes and we have tried to flag major sources of uncertainty in the report. We hope this report galvanizes a productive conversation about the effectiveness of prizes within the effective altruism community. We are open to revising our views as more information is uncovered.

Key takeaways

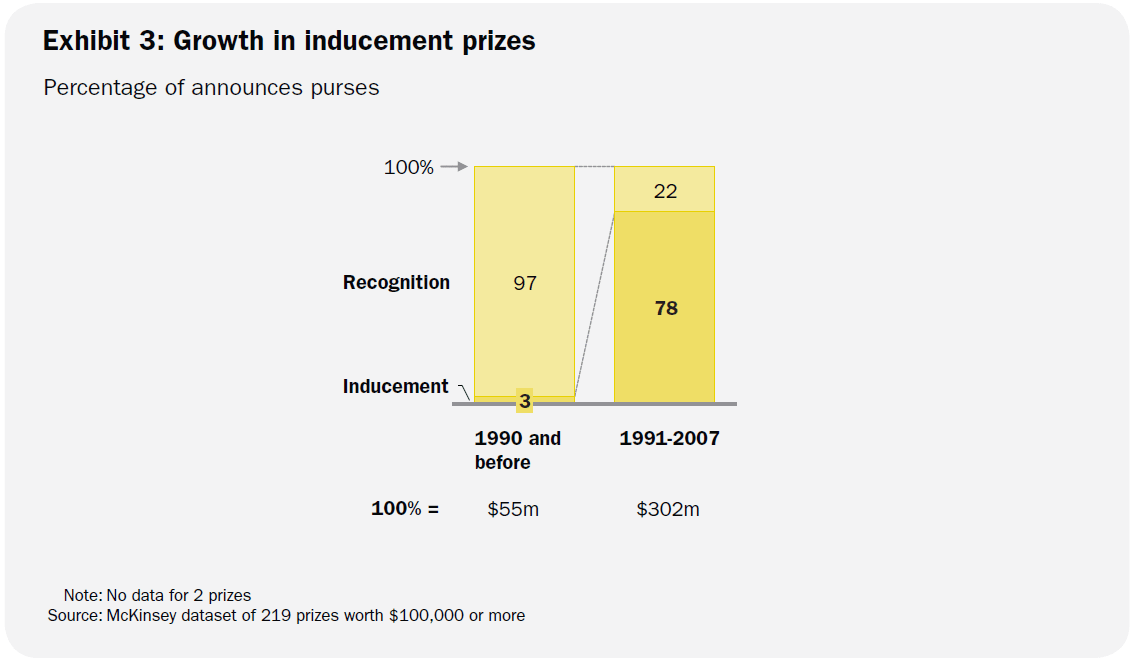

- Recent decades have witnessed a boom in large prizes. Since 1970, the total cash value offered by large (≥ $100,000) recognition and inducement prizes has grown exponentially. This growth is mainly driven by inducement prizes, which comprised 78% of the total prize purse in 2007.

- We found little quantitative empirical evidence on the effect of prizes on innovation, arguably due to two factors: First, as there is a substantial divergence between economic theory and actual prizes implemented in practice, there is little theory for empirical research to test. Second, it’s difficult to do a counterfactual analysis, and there is an over-reliance on historical case studies, which are often misleading.

- We found only a few studies on the impact of prizes on innovation and intermediate outcomes that have used a convincing counterfactual analysis (summary table here):

  - For inducement prizes, we found only one study establishing a causal effect of prizes on innovation, using the number of patents as a proxy, in 19th and early 20th century England (Brunt, Lerner, & Nicholas, 2012). Two other articles focused on intermediate outcomes and found that prizes increased the number and diversity of participants' coauthors and influenced the direction of research (Sigurdson, 2021).

  - For recognition prizes, we found stronger evidence on their innovation-related and field-shaping effects, including large positive effects on the number of publications, citations, entrants, and incumbents for prizewinning topics since 1970 (Jin, Ma, & Uzzi, 2021). Moreover, there is evidence of recognition prizes boosting patents in late 19th and early 20th century Japan (Nicholas, 2013). We also found evidence that prizes have become increasingly concentrated among a small group of scientists and ideas in recent decades (Ma & Uzzi, 2018), and that there may be negative spillover effects of prizes on the allocation of attention (Reschke, Azoulay, & Stuart, 2018).

- Inducement prizes can leverage substantial amounts of private capital, with figures pointing to 2-50 times the amount of private capital relative to the cash rewards. We haven’t vetted these numbers, but our best guess is that the average large inducement prize (≥ $100,000) leverages 2-10 times the amount of private capital relative to the cash rewards (80% confidence interval).

- There seems to be no consensus in economic theory about when to choose prizes for innovation (over patents or grants). The policy literature provides some rules of thumb: Prizes are most useful (1) when the goal is clear but the path to achieving it is not, and (2) in industries that are susceptible to underproduction of innovation due to market failure (e.g. neglected tropical diseases or climate change interventions).

- We have found relatively little empirical (or theoretical) evidence on how to effectively design a prize. The available evidence suggests:

  - There is only a weak relationship between cash amounts and innovative activity and outputs. It appears that (prestigious) medals provide stronger incentives than monetary rewards.

  - No compensation scheme performed unambiguously better, but a winner-takes-all scheme in a single contest and a multiple prize scheme in a series of successive contests could yield more innovation activity and output.

  - A smaller number of participants leads to higher efforts but reduces the likelihood of finding a particularly good solution. A diverse set of participants seems to be beneficial.

- Several critiques of prizes exist, including Zorina Khan’s critical review of prizes from a historical perspective highlighting that prizes can fail easily if not well-designed. Moreover, inducement prizes are associated with a number of risks, such as the exclusion of certain population groups from the pool of participants, and the potential for duplicative and wasteful efforts by participants.

- We provide a list of exemplary recent and large inducement prizes and two case studies of large-scale inducement prizes (Google Lunar X Prize and Auto X Prize).

- We also reviewed two closely related concepts to prizes:

  - One idea that has recently gained momentum in the global health and development space is advance market commitments (AMCs), with the pneumococcal pilot AMC yielding promising outcomes. However, the pilot focused only on building supply capacity for an already existing vaccine, and AMCs have not yet been tested as a tool to incentivize R&D activity. AMCs have received some criticism, most notably that the cost-effectiveness of the pilot was low relative to other vaccine interventions ($4,722 per child saved according to one estimate). It is not clear when AMCs should be used (vs. prizes or other mechanisms), but the choice seems to depend on the level of market maturity and the type of market failure. We believe that AMCs are a promising incentive mechanism that deserves further review.

  - We also looked at Grand Challenges, which appear to be a mixed funding model: applicants are funded for their grant proposals but can also receive additional support throughout the development pipeline if successful. Additionally, Grand Challenges appear more problem-focused in their calls for applicants than other prizes, which seek a specific solution.

- In conclusion, we don’t think the evidence supports an indiscriminate use of inducement prizes, but we recommend considering them in specific circumstances (e.g. in the case of market failure and a clear goal but unclear path to success). We also recommend reviewing recognition prizes more closely, as we found them to be associated with more positive outcomes than we anticipated. We are not convinced that very large cash rewards are beneficial and advise focusing more on creating prestige and visibility around a prize instead. We also believe that it would be worthwhile to review AMCs further, especially for novel and untested applications.

- If we had more time, we would spend it reviewing the literature on recognition prizes in more depth, as we found them to be more promising than we anticipated. We would also want to speak to scholars who have thought deeply about prizes, to check if we missed or mis-weighed any important considerations. Moreover, we would try to come up with more concrete recommendations on what incentive mechanism works best in what context (AMCs vs. inducement prizes vs. grants and other mechanisms). We would also review other potential applications and existing proposals of AMCs.

Types and definitions of prizes

In our understanding, there is no universally agreed-upon typology and set of prize definitions in the literature. According to Everett (2011, p. 7), the simplest distinction between prizes is that made between recognition prizes (also called blue-sky prizes or awards [Kay, 2011, p. 10]) and inducement prizes (also called targeted prizes)[1]. Recognition prizes are awarded ex post and in recognition of a specific or general achievement (e.g. Nobel Prize, Man Booker Prize). Inducement prizes are established ex ante, defining award criteria in advance in order to spur innovation towards a pre-defined goal (e.g. Ansari X Prize).

Within inducement prizes, some authors distinguish between grand innovation prizes and smaller-scale competitions (Murray et al., 2012, p. 1). The former are large-scale monetary prizes for which no path to success is known ex ante and which are believed to require a breakthrough solution and significant commitment; the latter are challenges for well-defined problems that often require only a limited time commitment or involve adapting existing solutions (e.g. InnoCentive, Topcoder).

The main focus of this report is on inducement prizes, and in particular, grand innovation prizes. However, as there is relatively little published literature on these types of prizes, we also include and discuss evidence we found on other types of prizes.

Two common features of inducement prizes are that (1) they only pay if a specific goal is achieved, and (2) they do not require the funder to decide how the goal should be met or who is in the best position to meet it (Kalil, 2006, p. 8). This stands in contrast to grants, which require the funding agency to determine who will receive funds to achieve a goal. Moreover, grants pay for efforts and are not tied to outcomes (McKinsey, 2009, p. 36).

Another focus of this report is advance market commitments (AMCs), which are conceptually related to prizes.[2] Advance market commitments offer a prospective guarantee by a donor to purchase a fixed amount of a specified technology at a fixed price (Koh Jun, 2012, p. 87). Advance market commitments are similar to prizes in that both are considered a form of “pull” funding; that is, they guarantee a reward upon an achievement that meets certain criteria (Koh Jun, 2012, p. 86). This contrasts with “push” funding, which provides grants for the innovator’s investments whether they result in a successful product or not. The distinction between inducement prizes and AMCs is somewhat fuzzy,[3] but two exemplary differences are that (1) prizes are typically paid out in a lump sum, while AMCs are paid out on a per-unit basis, and (2) AMCs aim to induce production, while prizes focus on inducing innovation.

Grand Challenges, a set of initiatives launched in 2003 by the Gates Foundation, are yet another related concept that we investigate in this report.[4] According to the Grand Challenges website, the program is a “family of initiatives fostering innovation to solve key global health and development problems.” While we had difficulties pinning down the details of the incentive structure, our impression is that these initiatives are some hybrid form of push and pull funding mechanisms. Grand Challenges are different from inducement prizes in that they award grants for selected research proposals, i.e. they pay for effort. On the other hand, in at least some cases grantees also have an opportunity to receive more funding based on the outcomes of their research. For example, in Grand Challenges Explorations, grantees can apply for additional support for projects that demonstrate innovative solutions (Grand Challenges Explorations Round 26 Rules and Guidelines, pp. 1, 6).

The recent boom in inducement prizes

The use of inducement prizes to incentivize technological and scientific breakthroughs dates back hundreds of years, and prizes have been proliferating as a tool used by policy-makers, firms, and NGOs in recent decades (Sigurdson, 2021, p. 1).

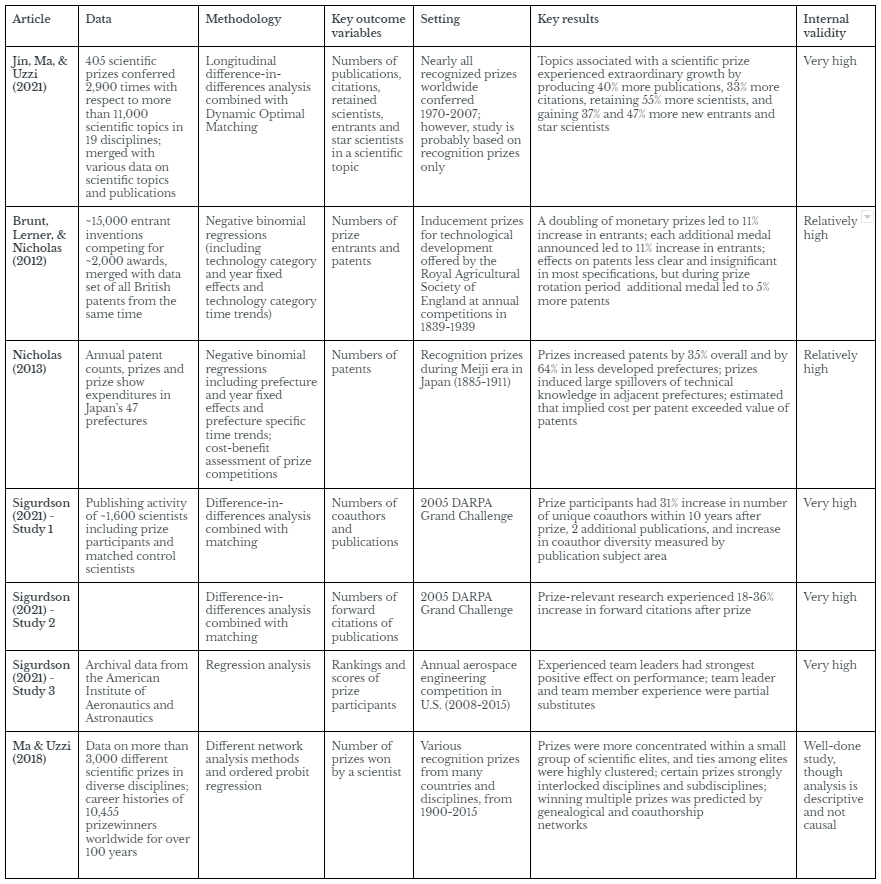

As Figure 1 shows, the total cash value offered by large (≥ $100,000) recognition and inducement prizes has grown exponentially since 1970 according to a data set of 219 prizes collected by McKinsey (2009, p. 16). They also found that the total number of prizes has increased steeply, with more than 60 of the 219 prizes having been launched since 2000.

Figure 1 - Aggregate prize purse, prizes over $100,000 (McKinsey, 2009, p. 16)

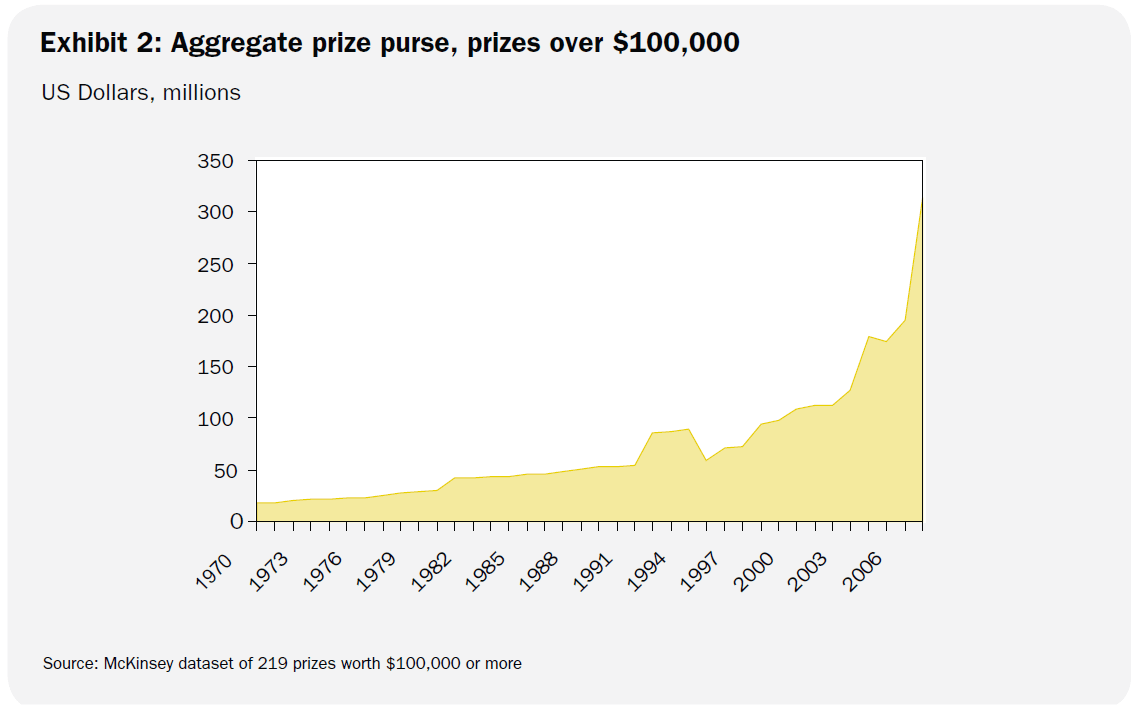

In recent decades, there has been a shift from recognition prizes towards inducement prizes. According to McKinsey’s analysis (2009, p. 17), before 1991, only 3% of the value of large prize purses (≥ $100,000) that were investigated in the report came from inducement prizes (with recognition prizes making up the other 97%). From 1991 to 2007, this number increased to 78% (see Figure 2 below). We have not found any more recent data on the growth of inducement prizes, but we suspect that the trend has continued given the extensive media coverage we found on prizes.

Figure 2 - Growth in inducement prizes (McKinsey, 2009, p. 17)

Little empirical evidence for the effect of prizes on innovation

Despite the recent boom in prizes described in the previous section, we found surprisingly little empirical evidence on the effect of prizes on any dimension of innovation.

According to Jin, Ma, and Uzzi (2021, p. 2), prize research so far has mainly studied how awards change prizewinners’ careers, and it is unclear whether the link between prizes and growth for a single prizewinner’s work extends to changes in the growth of an entire topic. They claimed that current theoretical arguments and empirical work are at a nascent stage.

Sigurdson (2021, p. 6) found no rigorous quantitative studies of the impact of modern large-scale inducement prizes on any dimension of innovation beyond the immediate technical solution to the prize itself. He attributes the difficulty of knowing whether or how inducement prizes affect innovation to two main factors:

- There is little theory for empirical research on modern inducement prizes to test, as much of the economic theory on inducement prizes has considered them mainly as an alternative to patents.[5] (See Burstein and Murray [2016, p. 408] for a good description of the divergence between theoretical and actual prizes.)

- There is a lack of counterfactual analysis and an over-reliance on historical case studies, which are often misleading. Sigurdson (2021) found only a handful of studies on prizes that have used counterfactual analysis, which were in many ways different from the types of prizes offered today and thus provide limited comparability to modern prizes like the X Prize.

Moreover, Sigurdson (2021) noted that because prizes take many different forms, empirical studies are scattered across quite distinct prize types. This limits how far findings from one study generalize to another.

According to Burstein and Murray (2016, p. 408):

“Modern innovation prizes, as typically implemented, are a scholarly mystery. Three literatures speak to such prizes — economic, policy, and empirical — and yet none adequately justifies the use of innovation prizes in practice, explains when they should be chosen over other mechanisms, or explains whether or why they work. As a result, prizes remain little understood as an empirical matter and poorly justified as a theoretical matter.”

Available evidence suggests prizes can shape the trajectory of science and innovation

[Confidence: We have medium confidence that prizes can effectively spur innovation, which is largely based on four quasi-experimental studies and two case studies. We deem it unlikely that a longer review of the literature would yield substantially more insights. The publication of new quasi-experimental studies on the innovation-related effects of recent large prizes might change our view, but we are not aware of any forthcoming studies on this topic.]

We found little high-quality empirical evidence on the effectiveness of prizes in spurring innovation. In this section, we summarize our takeaways based on the most important pieces of available literature we found on the effects of prizes on innovation and intermediate outcomes. Please refer to Appendix 1 for a more thorough discussion of the evidence and Appendix 2 for an overview of the best quantitative studies (in our view) in a table format.

Apart from the studies we describe here, a useful summary of studies on the impact of inducement prizes can be found in Gök (2013).[6] We decided not to include the majority of the studies reviewed by Gök in this section, as we deem their quality and internal validity comparatively low.[7] However, we refer to some of those studies in other sections of this report. We also compiled a list of other, probably less relevant, studies that we came across during our research but decided not to review, or did not have time to review, here.

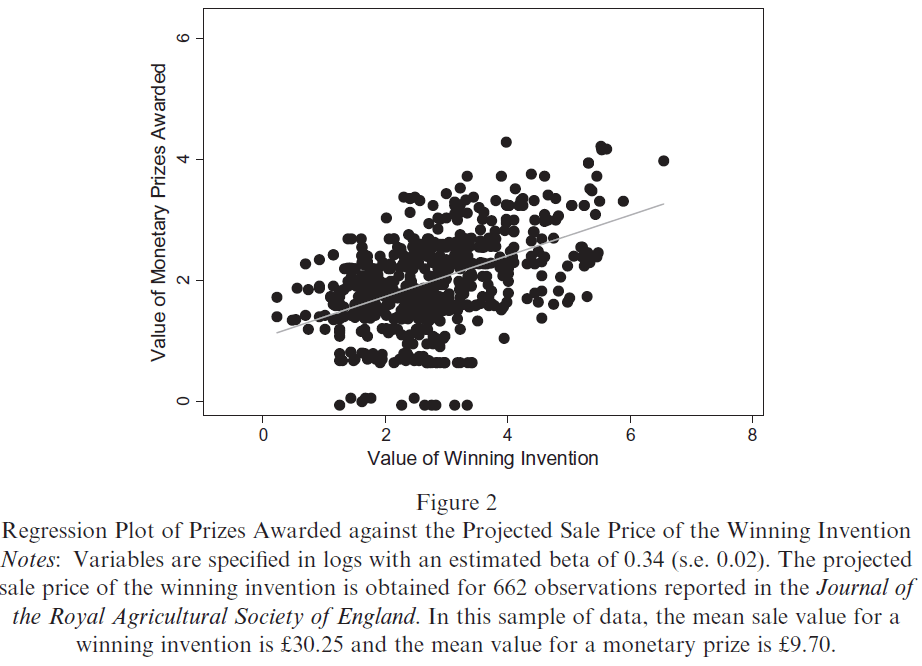

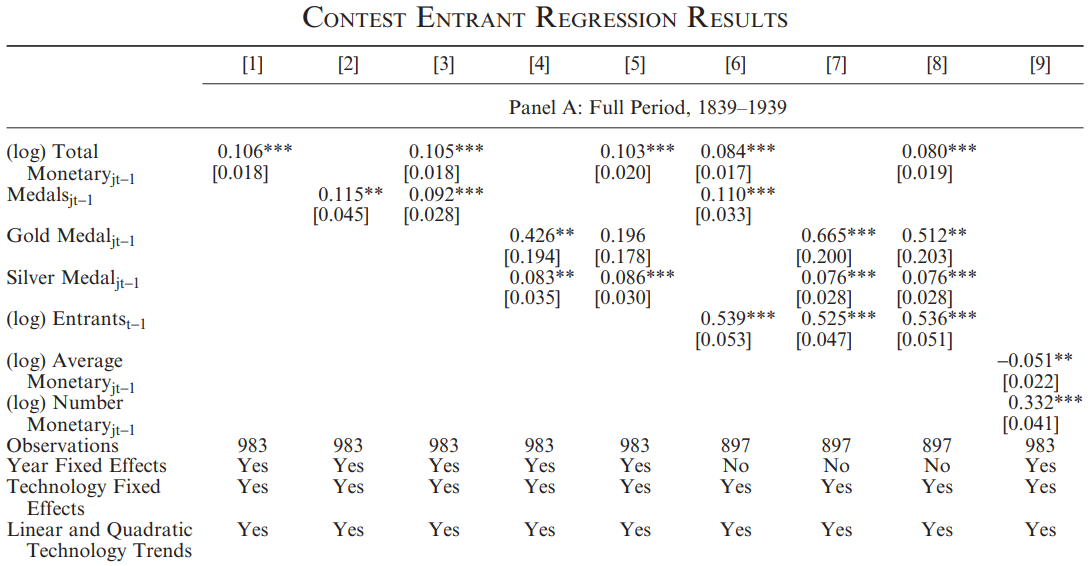

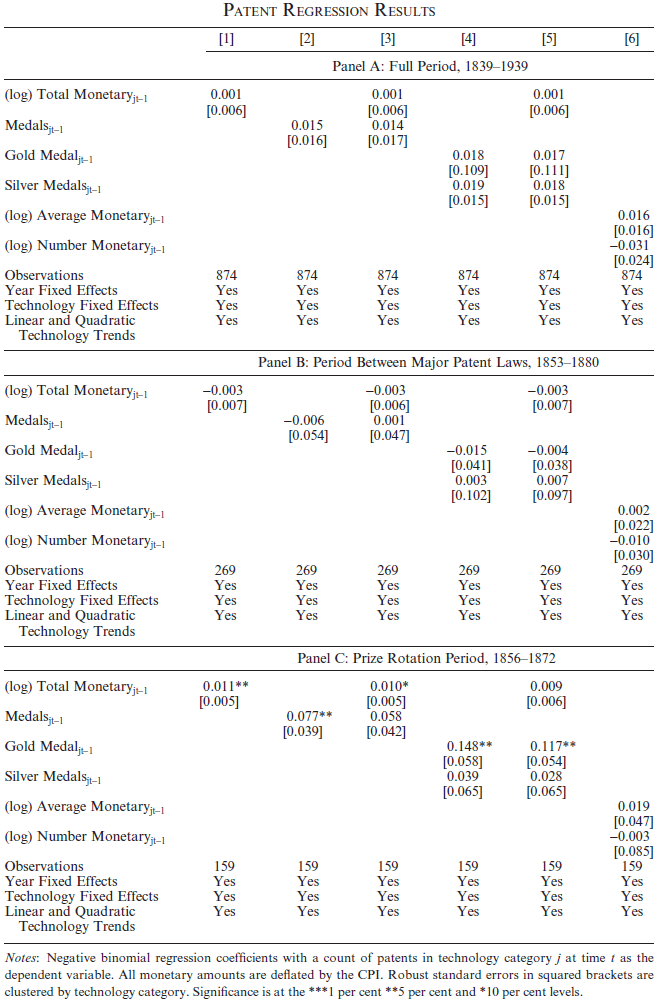

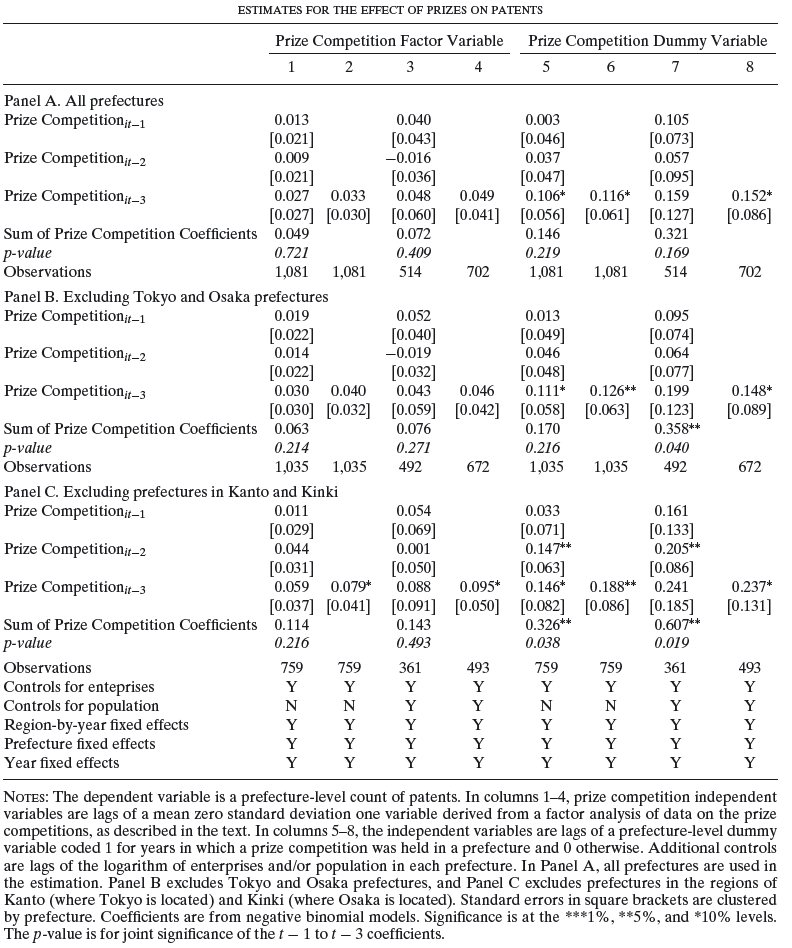

Regarding inducement prizes, we found only one article that tried to establish a causal effect of inducement prizes on innovation output (Brunt, Lerner, & Nicholas, 2012). The authors used data on nearly 2,000 awards and 15,000 entries for technological development by the Royal Agricultural Society of England (RASE) at annual competitions between 1839 and 1939. RASE awarded both medals and monetary prizes of more than £1 million. Using negative binomial regressions, the study found a significant and positive effect on the number of patents as a proxy for innovation. For example, in one specification, an additional medal was associated with an 8% increase in the number of patents. We deem the internal validity high enough that we trust at least the sign of the relationship. However, we think its external validity is quite low. We are doubtful whether inducement prizes for agricultural technology in 19th and early 20th century England can teach us much about how effective a modern inducement prize for other technologies or outcomes would be. Moreover, we’re reluctant to put too much weight on this single piece of evidence.
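To make the "8% per additional medal" figure concrete: negative binomial regressions model the expected count on a log scale, so a coefficient translates into a proportional change. A minimal sketch, assuming a standard log-link specification with the medal count as the regressor of interest and all other controls collapsed into a hypothetical vector Z (we have not reproduced the paper's exact specification):

```latex
\log \mathbb{E}[\text{patents}] = \alpha + \beta \cdot \text{medals} + \gamma^{\top} Z
\quad\Longrightarrow\quad
\frac{\mathbb{E}[\text{patents} \mid \text{medals} + 1]}{\mathbb{E}[\text{patents} \mid \text{medals}]} = e^{\beta},
\qquad e^{\beta} \approx 1.08 \;\Rightarrow\; \beta = \ln 1.08 \approx 0.077
```

Under this reading, the reported effect corresponds to a coefficient of roughly 0.077 on the medal count, holding the other regressors fixed.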

Another well-executed set of articles, written as part of a doctoral dissertation, examined the causal relationship between inducement prizes and intermediate outcomes relevant to innovation (Sigurdson, 2021). The author used data on more than 1,600 participating and control scientists in the context of the 2005 DARPA Grand Challenge, an inducement prize for autonomous vehicles. Using a difference-in-differences approach combined with matching, he established that the prize increased the number and diversity of coauthors that participants collaborated with after the prize. More precisely, prize participants had a 31% increase in the number of unique coauthors per year within the 10-year period after the prize compared to non-participating researchers. Prize participants were also more likely than non-participating researchers to publish work with coauthors from other scientific disciplines. Another interesting finding was that prizes may influence the direction of research by enabling the discovery of breakthrough ideas. However, it is not clear to us how these intermediate outcomes relate to the overall quantity and quality of innovation.
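Both Sigurdson (2021) and Jin, Ma, and Uzzi (2021) rely on difference-in-differences designs. As a rough illustration only (the studies estimate regression versions with matched controls and multiple periods, which we do not reproduce), the canonical two-group, two-period estimator compares the change in an outcome for prize participants with the change for matched non-participants:

```latex
\hat{\delta}_{\mathrm{DiD}}
= \left(\bar{Y}^{\,\mathrm{post}}_{\mathrm{participants}} - \bar{Y}^{\,\mathrm{pre}}_{\mathrm{participants}}\right)
- \left(\bar{Y}^{\,\mathrm{post}}_{\mathrm{controls}} - \bar{Y}^{\,\mathrm{pre}}_{\mathrm{controls}}\right)
```

Here Y would be an outcome such as the number of unique coauthors per year. The estimate can only be read causally under the assumption that participants and their matched controls would have followed parallel trends in the absence of the prize.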

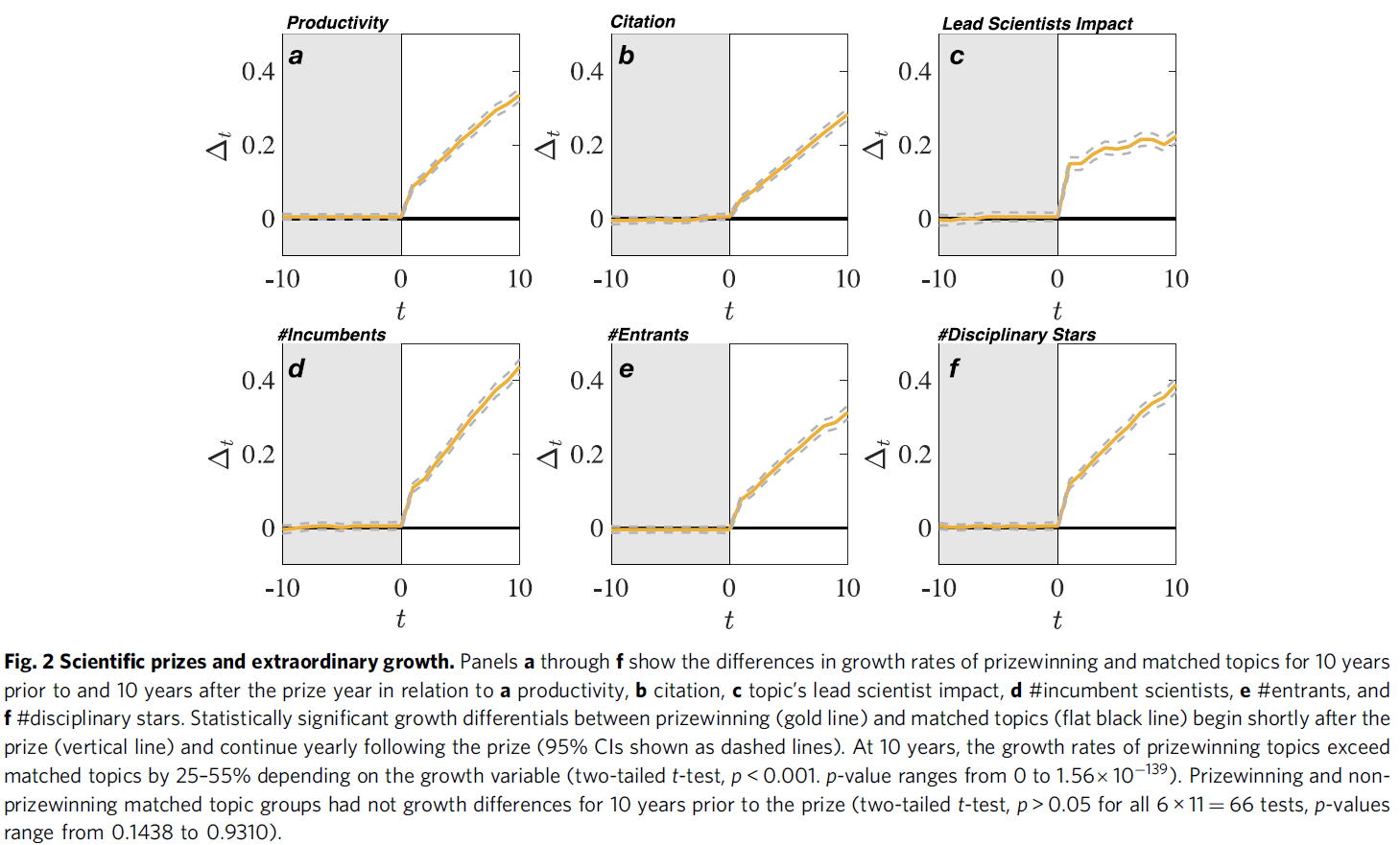

Regarding recognition prizes, there seems to be somewhat stronger evidence for their innovation-related and field-shaping effects. An impressive, high-quality article examined 405 scientific prizes that were conferred 2,900 times between 1970 and 2007 in relation to 11,000 scientific topics in 19 disciplines (Jin, Ma, & Uzzi, 2021). The data set covers almost all recognition prizes worldwide in that period. Using a difference-in-differences regression design combined with matching, the authors found large positive effects on various indicators of research effort for prizewinning topics, such as the number of publications (40%), citations (33%), entrants (37%), and incumbents (55%) in the first 5-10 years after the prize. While this is not direct evidence of effects on innovation, we think it is plausible that a large amount of additional attention to a scientific topic increases the speed and quality of innovation in it. Moreover, the sheer scale and comprehensiveness of the data set and, in our view, the unusually high internal validity of the study convinced us to trust and put a lot of weight on its findings.

We also found direct evidence of the effects of recognition prizes on innovation from a study focusing on late 19th and early 20th century Japan (Nicholas, 2013). Similarly to Brunt, Lerner, and Nicholas (2012), the author used a binomial regression model on a data set combining patent counts and various information on prizes. He found that recognition prizes (mostly non-pecuniary) boosted patents, but only in less technologically developed areas of Japan. However, as the estimated effects had large standard errors and we deem the study’s external validity rather limited, we do not weigh this piece of research strongly in our conclusions.

A study on more than 3,000 recognition prizes in science in diverse disciplines over more than 100 years and in over 50 countries followed the career trajectories of almost 11,000 prizewinners (Ma & Uzzi, 2018). The authors found that prizes were increasingly concentrated among a small and tightly connected elite, suggesting that a small group of scientists and ideas pushed scientific boundaries. However, it is unclear how these networks affected knowledge transfer and innovation.

We also found evidence suggesting that articles written by scientists who later became Howard Hughes Medical Institute investigators (and thus received a significant amount of no-strings-attached funding) received more citations than those who did not, though the effect was small and short-lived (Azoulay, Stuart, & Wang, 2014). Interestingly, a related study found that prizes redistribute scientific attention and recognition (measured by the number of citations) away from researchers who work close to prizewinners, suggesting negative spillover effects of prizes on the allocation of attention (Reschke, Azoulay, & Stuart, 2018). Only in comparatively poorly cited (neglected) fields was this loss offset by the extra attention the prizewinner received.

Overall, the available (though scarce) evidence suggests that prizes can affect intermediate outcomes (such as collaboration patterns among innovators) and shape the trajectory of science and innovation. We found the evidence for the field-shaping effects of recognition prizes stronger and more convincing than for inducement prizes, though in both cases it is difficult to anticipate the extent to which prizes affect the quantity and quality of innovation. This difference mainly reflects the fact that inducement prizes have been less researched, not that recognition prizes are necessarily better.

Prizes can effectively leverage private capital

[Confidence: We have medium confidence that prizes can leverage significant amounts of private capital, based on recent example figures and historical prizes. We deem it unlikely that a further review of the literature would change our view. However, a systematic assessment of the leveraging effect of modern large-scale inducement prizes, which to our knowledge does not exist, would likely reduce our uncertainty.]

We found some empirical evidence that prizes can leverage private sector investment greater than the cash value of the prize. While, to our knowledge, there has been no systematic assessment of the leveraging effect of different prizes, we found some example figures, which point to 2-50 times the amount of private capital leveraged by prizes relative to the cash rewards.

Khan (as quoted in Hayes, 2021) investigated a large number of historical prizes and found that, “in almost all prize competitions, the investments of time and resources on the part of the competitors generally exceed even the absolute value of the award.” Khan’s result for historical prizes seems to also hold for modern grand innovation prizes, as some example figures show, including:

- The Ansari X Prize stimulated at least $100 million in private capital with a cash value of $10 million (Hoyt & Phills, 2007).

- The Shell Springboard Prize achieved a return on investment between 200% and 900%, where the return was measured as the total spending from competitors and investment represented the total cost of the competition (Everett, 2011, p. 13).

- In the NASA Centennial Challenge, competitors pursued prizes whose value represented “about one-third of the amount it takes to win” (McKinsey, 2009, p. 25).

- In the Northrop Grumman Lunar Lander Challenge, a $2 million prize spurred $20 million total investment (Kay, 2011, p. 87).

- Brunt, Lerner, and Nicholas (2012, p. 5) found that the costs of technology development were three times higher than the monetary rewards in the RASE prizes.

- Schroeder (2004) estimated the returns on investment for three different prizes. Strikingly, he found that entrant investments were 40 times higher than the size of the cash purse for the Ansari X Prize, and 50 times higher for the DARPA Grand Challenge.
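The example figures above can be put on a common scale by expressing each as a leverage ratio (private spending by competitors divided by the prize's cash value). The sketch below only restates the quoted numbers. It assumes that Everett's 200%-900% "return" means competitor spending of 2-9 times the competition's cost, and it treats the NASA figure (a prize worth about one-third of the cost of winning) as a ratio of 3. Like the underlying figures, these ratios are unvetted and were likely calculated in different ways.

```python
from statistics import median

# Leverage ratio = private spending by competitors / cash value of the prize.
# Values restate the unvetted example figures quoted in the text.
leverage = {
    "Ansari X Prize (Hoyt & Phills, 2007)": 100e6 / 10e6,  # >= $100M spurred by a $10M purse
    "Shell Springboard, low (Everett, 2011)": 200 / 100,  # assumes a 200% return means 2x
    "Shell Springboard, high (Everett, 2011)": 900 / 100,  # assumes a 900% return means 9x
    "NASA Centennial Challenge (McKinsey, 2009)": 3.0,  # prize ~ one-third of cost to win
    "Lunar Lander Challenge (Kay, 2011)": 20e6 / 2e6,  # $20M invested for a $2M prize
    "RASE prizes (Brunt, Lerner, & Nicholas, 2012)": 3.0,  # costs ~3x monetary rewards
    "Ansari X Prize (Schroeder, 2004)": 40.0,
    "DARPA Grand Challenge (Schroeder, 2004)": 50.0,
}

# List the examples from lowest to highest leverage.
for prize, ratio in sorted(leverage.items(), key=lambda kv: kv[1]):
    print(f"{ratio:5.1f}x  {prize}")

values = list(leverage.values())
print(f"range: {min(values):.0f}x to {max(values):.0f}x, median: {median(values):.1f}x")
```

The last line prints `range: 2x to 50x, median: 9.5x`, matching the 2-50 times spread cited above. The two estimates for the Ansari X Prize (10x and 40x) show how strongly these figures depend on who computed them and how, which is consistent with our caution about comparing them directly.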

Kalil (2006, p. 7) explained:

“[T]his leverage can come from a number of different sources. Companies may be willing to cosponsor a competition or invest heavily to win it because of the publicity and the potential enhancement of their brand or reputation. Private, corporate dollars that are currently being devoted to sponsorship of America’s Cup or other sports events might shift to support prizes or teams. Wealthy individuals are willing to spend tens of millions of dollars to sponsor competitions or bankroll individual teams simply because they wish to be associated with the potentially historical nature of the prize. Most areas of science and technology are unlikely to attract media, corporate, or philanthropic interest, however.”

We would like to note that we haven’t vetted any of the aforementioned figures and we suspect that they were calculated in different ways. Moreover, we don’t know whether these figures are representative, but we think it’s possible that there is a publication bias in the sense that prizes that were less successful or received less participant and media attention were less likely to be studied. Our best guess is that the average large inducement prize (≥ $100,000) leverages 2-10 times the amount of private capital relative to the cash rewards (80% confidence interval).

When do prizes work best?

In this section, we first describe in what cases prizes should be used and might be a good choice compared to other innovation incentive mechanisms. We then discuss a number of prize design issues and their implications.

Prizes are effective when there is a clear goal with an unknown path to success or in case of market failure

[Confidence: We have relatively low confidence in our assessment of when prizes are effective, which is largely based on rules of thumb from the policy literature. We expect that 10 more hours of research (possibly consisting of a review of the policy literature and an interview with Zorina Khan) would provide us with an understanding of how these rules were derived and whether they are generalizable.]

According to Burstein and Murray (2016), economic theory on innovation incentives does not give concrete answers about when to choose prizes for innovation over patents or grants. Instead, it lists a number of factors that may influence the choice. Kapczynski (2012, p. 19) summarized:

“[T]he . . . economics literature has proliferated a series of parameters that influence the comparative efficiency of these different systems, including, most importantly, the competitiveness of the research environment; the cost of research as compared to the value of the reward; the riskiness of research or creativity; the importance of private information about the cost or value of creation; the costs of overseeing effort in the context of contracts; and the comparative costs of rent seeking, uncertainty, and the administration of each system. The information economics literature thus offers no general endorsement of any mechanism.”

Burstein and Murray (2016) also explained that the policy literature does not do much better at explaining and justifying prizes than does the economic literature, as it provides only rules of thumb for determining when and how to use prizes. According to the authors, a basic rule seems to be that prizes are most useful when the goal is clear but the path to achieving it is not (e.g. Kalil, 2006, p. 6). They explained that one line of literature suggests that prizes are useful in industries that are particularly susceptible to under-production of innovation because private actors lack a viable market. As two examples, they mentioned the market for pharmaceuticals targeting diseases endemic to the developing world, where ability to pay is lower than the need, and the market for technologies to address climate change where social value far exceeds private value. They found that these are two areas in which prizes have most frequently been proposed.

McKinsey (2009, p. 37) created a flow chart as a tool to decide when to use prizes versus other philanthropic instruments, such as grants or infrastructure investments. Essentially, they recommended using prizes “when a clear goal can attract many potential solvers who are willing to absorb risk. This formula is most obvious in so-called ‘incentive’ prizes. [...] But the formula also holds for good ‘recognition’ prizes like the Nobels.” While this sounds plausible to us, we are not sure where McKinsey derived these recommendations from, as this was not stated in their report.

In her book Inventing Ideas (2020), Zorina Khan, an economic historian and expert on prizes, compiled a list of cases and circumstances in which prizes are potentially effective (see Appendix 4 of the book). We reproduce the list here:

- “To achieve philanthropic or nonprofit objectives: This might include circumstances where market failure occurs, although the ultimate goal should be to enable markets to work rather than to replace the market mechanism with monopsonies

- Social objectives: Prizes can help to promote unique, qualitative, social, or technical goals that are not scalable or for which there is no market

- To publicize or draw attention to otherwise ignored issues: To focus attention, facilitate coordination, or signal quality: however, if the objective is publicity or opportunity to work with/learn from other competitors, then from a social perspective there are likely to be more effective means of marketing

- As signals of quality: In markets for experience goods and instances where informational costs are high”

We are not sure whether these points were derived from theoretical considerations or from empirical findings, as they were listed in the Appendix without any context. We skimmed through her book looking for concrete examples or justifications for these points, but we were unable to find them. A deeper read of the book or a phone call with Khan might provide clarification. Unfortunately, she was unavailable to meet with us.

To summarize, we did not find very concrete answers on when prizes are most effective compared to other mechanisms to incentivize innovation. However, two common rules we encountered were that prizes are effective (1) when the goal is clear, but the path to achieving the goal is not, and (2) when innovators lack a viable market to innovate, such as in the case of neglected tropical diseases or technologies to address climate change.

Prize design influences the ability to spur innovation

[Confidence: We have relatively low confidence in our recommendations regarding the optimal design of prizes, which are largely based on a few small-scale lab or field experiments. A further review of the literature is unlikely to change our views, but the publication of new (quasi-) experimental studies on the effects of different design parameters of large-scale prizes might alter our conclusions.]

We have found relatively little empirical (or, for that matter, theoretical) evidence on how to effectively design a large prize for innovation. We came across only a few papers that empirically tested the implications of different design features of prizes, and these were largely small experiments with relatively small monetary rewards. While we deem this research to be of high quality overall and high in internal validity, the findings may not extend to other settings. Moreover, it is not clear how different design features would interact. We summarize our most important findings and recommendations below (see Appendix 3 for a more thorough discussion of the studies we reviewed), and emphasize that our recommendations are very tentative, given how little evidence they rest on.

Prestige is a stronger incentive than monetary rewards

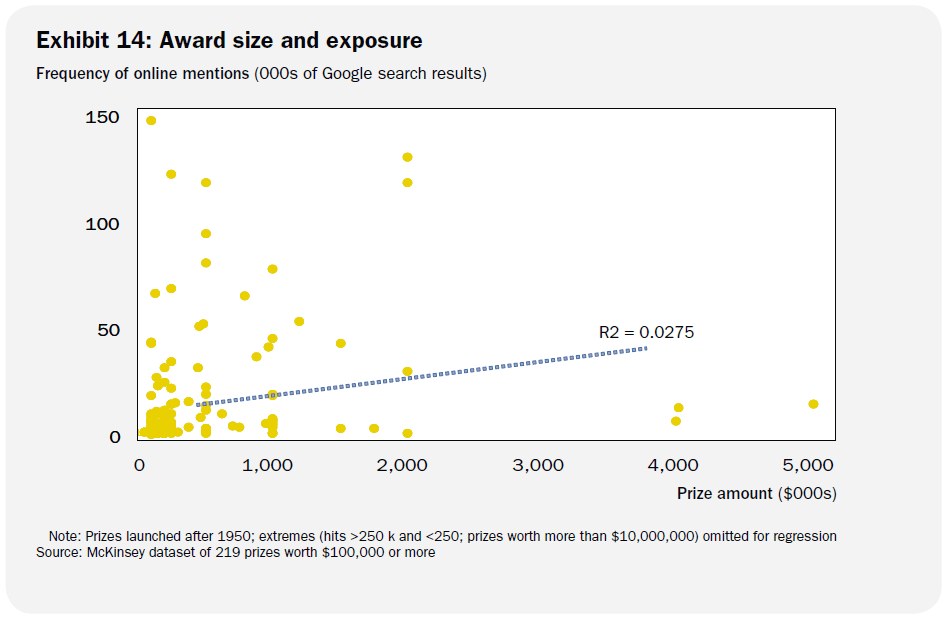

We reviewed six empirical studies on the importance of monetary vs. non-monetary prize incentives: two quasi-experimental studies, one field experiment (RCT), two case studies, and one textbook that combines regression analyses with qualitative observations. The results from these studies are mixed. Some studies found that monetary incentives spurred more innovation than non-monetary incentives alone (e.g. Boudreau & Lakhani, 2011; Jin, Ma, & Uzzi, 2021), while others found that medals provided stronger incentives than monetary rewards (e.g. Brunt, Lerner, & Nicholas, 2012; Kay, 2011; Khan, 2020; Murray et al., 2012). Overall, there appears to be only a weak relationship between the size of cash rewards and innovative activity and outputs. Moreover, there seems to be a consensus that medals provide stronger incentives than monetary rewards, and that prize participants are motivated not only by monetary rewards (as assumed by economic theory) but by a host of other factors (e.g. prestige, reputation, visibility). One could hypothesize that the prestige of an award is a function of the size of the prize, but this hypothesis is not strongly supported by the data we found. A McKinsey (2009, p. 58) analysis found only a weak correlation between a prize’s cash award and its exposure (proxied by the number of online mentions in Google search results).

We have not investigated how costly it is to create publicity and visibility around a prize, but according to Jim English,[8] who was interviewed by McKinsey (2009, p. 60), “prizes fail when the sponsor fails to understand how much effort and investment is required beyond the simple ‘economic capital’ of the award itself. A sponsor might imagine that a prize that carries cash value of, say, $50,000 requires around $60,000 or $75,000 a year to run. But depending on the kind of prize and the field of endeavor, the actual costs might be $500,000 or more when you include raising public awareness that a prize exists, inducing people to nominate and apply, mounting a publicity campaign, and administering the whole program.”

Our conclusion from these results is that offering a monetary reward makes sense, but only insofar as it increases the prestige and visibility of the prize. We recommend focusing more on creating prestige around a competition, and offering medals rather than a very large cash reward. Although we have not investigated the costs of creating publicity, the estimate quoted above suggests that the total cost of successfully running a prize (including administrative and publicity costs) might be around 10 times the offered cash award.
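The “10 times” figure follows directly from the illustrative numbers in the Jim English quote. Those numbers describe a hypothetical $50,000 prize rather than measured data. Comparing the sponsor’s naive expectation with the actual cost he describes:

```latex
\frac{\text{expected running cost}}{\text{cash award}}
  = \frac{\text{\$60,000 to \$75,000}}{\text{\$50,000}} = 1.2 \text{ to } 1.5,
\qquad
\frac{\text{actual total cost}}{\text{cash award}}
  \geq \frac{\text{\$500,000}}{\text{\$50,000}} = 10
```

Because this ratio rests on a single illustrative example, it is best read as a rough order of magnitude rather than an estimate.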

A winner-takes-all scheme could generate more novel innovation than a multiple-prize scheme in a single contest

We reviewed three studies (one field experiment, one laboratory experiment, and one study combining an online experiment with an empirical analysis of an actual innovation platform) on the effects of different prize structures and compensation schemes. Graff Zivin and Lyons (2021) ran a field experiment with 184 participants in a software innovation contest with a cash purse of up to $15,000. They found that a winner-takes-all compensation scheme generated more novel innovation than a multiple-prize scheme that rewarded a greater number of contributors. Hofstetter et al. (2017) found the same result in an online experiment and an analysis of 260 innovation contests from an innovation platform. However, they also found that when the schemes are compared across successive contests, the winner-takes-all scheme can have a deterrent effect, decreasing the effort and innovativeness of participants who had received no reward in the first contest. Brüggeman and Meub (2015) compared a prize for aggregate innovativeness with a prize for the best innovation in a laboratory experiment and found that neither option is unambiguously “better” than the other.

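We believe that these findings do not lend themselves to an unambiguous recommendation of a particular compensation scheme. However, the choice may depend on whether the prize competition is meant to be a single contest or a series of successive contests. We tentatively recommend a winner-takes-all scheme for a single contest and a multiple-prize scheme for successive contests, where a winner-takes-all scheme may deter participants who went unrewarded.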

A larger, more diverse pool of competitors is better for high-uncertainty problems

We reviewed three studies (one field experiment and two regression analyses based on online innovation platforms) on the effects of three different competitor characteristics. Boudreau et al. (2011) ran a regression analysis on 10,000 software competitions from a software competition platform. They found that constraining the number of competitors in a contest increased the effort exerted by participants, but decreased the chance of finding a particularly good solution. Moreover, a larger number of competitors increased overall contest performance for high-uncertainty problems. Jeppesen and Lakhani (2010) found a positive relationship between problem-solving success and marginality (i.e. being distant from the field of a problem) in a regression analysis of 166 scientific challenges from an online platform with over 12,000 participating scientists. One possible interpretation of this finding is that having experts from very different fields attempt solutions is an especially effective way to solve problems. A 10-day field experiment of a software contest with over 500 software developers found that allowing contest participants to self-select into different institutional settings can increase effort and the performance of solutions (Boudreau & Lakhani, 2011).

We recommend allowing a larger number of prize entrants if the challenge concerns a high-uncertainty problem, and a smaller number if it concerns a low-uncertainty problem. Moreover, designing the contest to attract a diverse set of participants will probably lead to better outcomes, although we are not sure how to achieve this in practice. We would advise against assigning entrants to specific teams and settings, and recommend letting participants self-select instead.

Limitations and risks of prizes

[Confidence: We have medium confidence regarding the critical review of prizes from a historical perspective, as our conclusions are largely based on only one researcher’s (Zorina Khan) high-quality body of work. As we are not aware of any other researcher who studied prizes from a historical perspective at a similar level of depth, we think that another 10 hours of research on this topic is unlikely to substantially change our views.]

Critical review of prizes from a historical perspective

Zorina Khan, a professor of economics and a specialist in intellectual property, entrepreneurship, and innovation, has extensively investigated prizes for innovation from a historical perspective. She appears to us to be the most prominent scholar who has voiced skepticism about prizes, which she has done on numerous occasions. Below, we summarize her critical perspective on prizes based on her book (2020), two articles (2015; 2017), and a podcast interview (Hayes, 2021), and state our opinions on and takeaways from her reasoning.

Patents, not prizes, fueled the rise of the U.S. economy

In her podcast interview and book, Khan (Hayes, 2021; Khan, 2020) discussed how the United States overtook Europe in the 19th century to become the global technology leader in the 20th century. She argued that patents, not prizes, fueled the rise of the U.S. as a global economic power, and she supported this hypothesis with two lines of reasoning. First, she contended that encouraging innovation by awarding prizes is inferior to granting patents. For example, in her 2017 article, she observed an adverse selection effect for prizes in settings where prizes and patents were substitutes. That is, inventors whose ideas were valuable in the marketplace would bypass the prize system and pursue returns from commercialization (i.e. patents), whereas people with “rubbish inventions” would apply for prize awards. Second, she argued that the U.S. patent system, the first modern patent system with market-oriented patent policies, was superior to European patent systems and led to the democratization of invention.[9] However, not all economists share her conclusions. For example, Moser (2016, p. 1) challenged the view that patents were the primary driver of innovation.

Overall, we are not convinced that these arguments provide strong reasons against using prizes. While patents may be superior to prizes in a context where the two are substitutes, we don’t think this argument necessarily extends to the modern context, where virtually all countries have patent systems and prizes and patents are complements.

Prizes can create market distortions

Khan (Hayes, 2021) also discussed the case in which prizes and patents are complements, that is, when inventors can obtain both patents and prizes, as is the case today. She explained that this leads to a market distortion because inventors are overcompensated through what she called “award stacking”: inventors chasing both a prize and a reward in the market. She argued that prizes are monopsonies: the party offering the prize is the only buyer. Drawing on her research, Khan explained that monopsonies can lead to very large social costs, including arbitrary, idiosyncratic outcomes, unjust discrimination, and even corruption. She wrote in her book (2020, p. 397):

“Prizes can be effective for private entities who are able to free-ride off the efforts of the entire cohort striving for the award, while only paying for one successful solution; however, social welfare is reduced by the lost resources and investments made by the many losers in the prize competitions. This is especially true if the objective of the competition is highly specific to the grantor and results cannot readily be transferred to other projects. Moreover, the secrecy involved in most prize systems tends to inhibit the diffusion of useful information, especially for outsiders. These net social losses suggest that prize competitions are inappropriate policy instruments for government agencies that should be promoting overall welfare.”

According to Khan, patents are different because they are market-oriented incentives. If an invention is valuable, the patentee is rewarded with profits in the market; if it is useless, the patentee gets nothing. Society also benefits because the patentee discloses all of the information to the public.[10]

More generally, Khan made the point that, whenever possible, the best option is to ensure that well-functioning markets exist. For example, she voiced skepticism about the Carbon X Prize (Hayes, 2021). This is a currently ongoing $100 million prize, funded by Elon Musk to incentivize innovations for carbon removal, which the X Prize Foundation describes as the largest prize in history. She opined that, while the Carbon X Prize attracted a lot of media attention to the problem of excessive carbon emissions, this could have been done much more cheaply.[11] Khan explained that instead of grand innovation prizes, there is a need for mechanisms that ensure correct prices for emissions, and there are known ways of doing that (e.g. carbon taxes, futures markets, carbon offset credits). In her view, the best policy would be to auction off carbon rights to firms and facilitate markets for trading in emissions.

Again, we are not convinced that one can generally conclude that social welfare is reduced by the resources and investments lost by the losers of prize competitions. First, there are benefits to participants and society beyond winning the prize, such as learning and the potential commercialization of what was developed. Moreover, Khan’s reasoning here focuses on the case where market-oriented incentives exist and are sufficient to induce innovation. This is not always the case, as markets can fail for various reasons, as with medicines for neglected tropical diseases. Our takeaway is that prizes should be avoided in areas where sufficient market-oriented incentives exist to induce innovation.

Historical prizes often failed

Khan (2015) surveyed and summarized empirical research using samples drawn from Britain, France, and the United States, including “great inventors” and their ordinary counterparts, as well as prizes at industrial exhibitions. She found that prizes suffered from a number of disadvantages in design and practice, which might be inherent to their non-market orientation. She argued that historical prizes were often much less successful than is commonly claimed today, and that current debates about prizes tend to center on historical anecdotes and potentially misleading case studies.

In her analysis of data on early prize-granting institutions in the 18th and 19th centuries, she reviewed a number of frequently cited historical prizes (e.g. the Longitude Prize from 1714) and explained what lessons this evidence offers for designing effective mechanisms to incentivize innovation. She argued that the majority of organizations specializing in granting prizes for industrial innovations at that time ultimately became disillusioned with this policy, partly because prizes lacked market orientation. She identified numerous reasons for this. For example, prizes “were not wholly aligned with the economic value of innovations for the individual industry” (p. 18). Moreover, the majority of offered prizes were never actually awarded. The judging process often lacked transparency, which led to idiosyncratic and inconsistent decisions: prizes were given out arbitrarily, which reduced the incentives for inventors. Khan added that prizes tended to offer private benefits to both the proposer and the winner, largely because they served as advertisements. Winners of such prizes were generally unrepresentative of the most significant innovations, partly because the market value of useful inventions was typically far greater than any prize that could be offered. She concluded:

“This is not to say that administered inducements are never effective, especially in the context of such market failure as occurs in the provision of tropical medicines or vaccines, where significant gaps might exist between private and social returns. However, in distinguishing between the numerous ingenious theoretical prize mechanisms that have been proposed, such transaction costs need to be recognized and incorporated. In particular, governance issues and the potential for rent seeking and corruption should be explicitly addressed, especially in countries where complementary institutions and political control mechanisms are weak or nonexistent. The historical record indicates that the evolution of the institution of innovation prizes over the past three centuries serves as a cautionary tale rather than as a success story” (p. 42).

Our conclusion from Khan’s reasoning is not that prizes are necessarily a bad idea, but that prizes may fail if not designed and implemented well. Moreover, as she explained, there might be cases where prizes are potentially effective, such as in the provision of tropical medicines, where private returns do not match social returns and one therefore cannot rely on markets to provide sufficient incentives for innovation.

Overall, our takeaways from Khan’s reasoning are that prizes should not be used carelessly and are certainly not a cure-all mechanism. Ensuring well-functioning markets and correct prices, for example for carbon emissions, might be the first-best option. However, if this is not possible or not realistic in a reasonable time frame, prizes might be a good option, which Khan seems to agree with. Moreover, the failures of historical prizes teach us that prizes need to be very carefully designed and implemented to avoid doing more harm than good.

Prizes are associated with risks that can likely be alleviated through design

[Confidence: We have relatively little confidence regarding the risks of prizes we outline below, as these are largely based on only one review article from the grey literature. It’s possible that another 10 hours of research would bring more risks to our attention than we have found so far, though we deem it unlikely that we would find risks problematic enough to prohibit the use of prizes.]

Roberts, Brown, and Stott (2019, p. 21) provided a summary of the risks associated with innovation inducement prizes as identified in the literature. We summarize these below and state our opinions.

Excluding potential participants: Zhang et al. (2015) compared, in theory, the results of an idea award to promote sexual health in China with the more traditional, expert-led method of designing behavior change communications. They noted that idea awards are typically run via online platforms, which risk excluding certain sections of the population. According to Roberts, Brown, and Stott, “for Social Prizes and those where the participation in the prize itself is expected to confer benefits to the participants, who is excluded, becomes important, and especially so in a development context” (2019, p. 21).

Relatedly, as we explained here, Ma and Uzzi (2018) found that prizes tend to be concentrated within certain groups, and this is especially acute for the number of prizes conferred on women. Women are underrepresented among prizewinners in physics, chemistry, and biology, and those who do win prizes receive less money and prestige than men (Ma et al., 2019). Moreover, there is evidence of unconscious gender bias in the scientific award process favoring male researchers from Europe and America (Lincoln et al., 2012, p. 1).

Overall, while we find the evidence convincing that gender bias and the unintentional exclusion of certain participants are risks in prize competitions and awards, we are not sure, and have not seen evidence on, whether these risks are larger for prizes than for other incentive mechanisms, such as grants.

Risks experienced by participants: The authors cited Acar (2015), who surveyed participants on the InnoCentive.com online platform, and mentioned the risk of opportunism, where those who receive the information generated by the prize use it opportunistically. This can, in turn, make inventors fearful of disclosing knowledge. Acar (2015) described how some participants in science contests experience fear of opportunism, and noted that female and older participants had significantly less fear of disclosing their scientific knowledge.

We don’t have a clearly formed opinion on this point, but our overall impression is that these issues can be alleviated by taking fear of opportunism into account in the design of prize contests (e.g. via intellectual property protection and compensation structure), as Acar (2015) suggested in their discussion section.

Duplicating resources: Roberts, Brown, and Stott (2019) argued that the multiplier effect of prizes (i.e. there being more than one solver) may have benefits for the funder, but can represent duplicative and potentially wasteful efforts by solvers (citing Lee [2014], who did a legal examination of social innovation). They also argued that the number of solvers can introduce risks in terms of motivation for future prizes. Here they cited Desouza (2012), who drew on survey data from U.S. citizens who had participated in government innovation inducement prizes and warned that the pool of potential future solvers may shrink if prize managers fail to communicate effectively with participants after the prize ends.

We would like to note that this potential demotivating effect of multiple solvers sounds generally plausible to us and has also been found by Boudreau et al. (2011), as we explained here. Thus, the number of prize entrants is a factor that needs to be considered in the prize design.

Power imbalance: Roberts, Brown, and Stott (2019) cited Eagle (2009), an article from social marketing (given the similarities between social prizes and community-based behavior change interventions), observing that criticisms of social marketing include being patronizing and manipulative, appealing to people’s base instincts, and extending the power imbalance between the state and individuals.

As the cited evidence is from social marketing, we are not sure whether these risks extend to prizes.

Overall, while we, by and large, find the points raised plausible, we don’t find the evidence behind them very strong and are not sure whether these risks are unique to prizes or hold equally for other incentive mechanisms, like grants. Moreover, we don’t find the risks problematic enough to prohibit the use of prizes, and we suspect that some of these risks can at least partly be alleviated by designing prizes accordingly (e.g. addressing fears of opportunism).

List of recent large prizes

We provide a list of large innovation inducement prizes here that includes information on a few key features, such as the number of entrants, mobilized private capital, and some information about the winners. We included all prizes we found with a cash award of at least $100,000 from the 20th century onward.

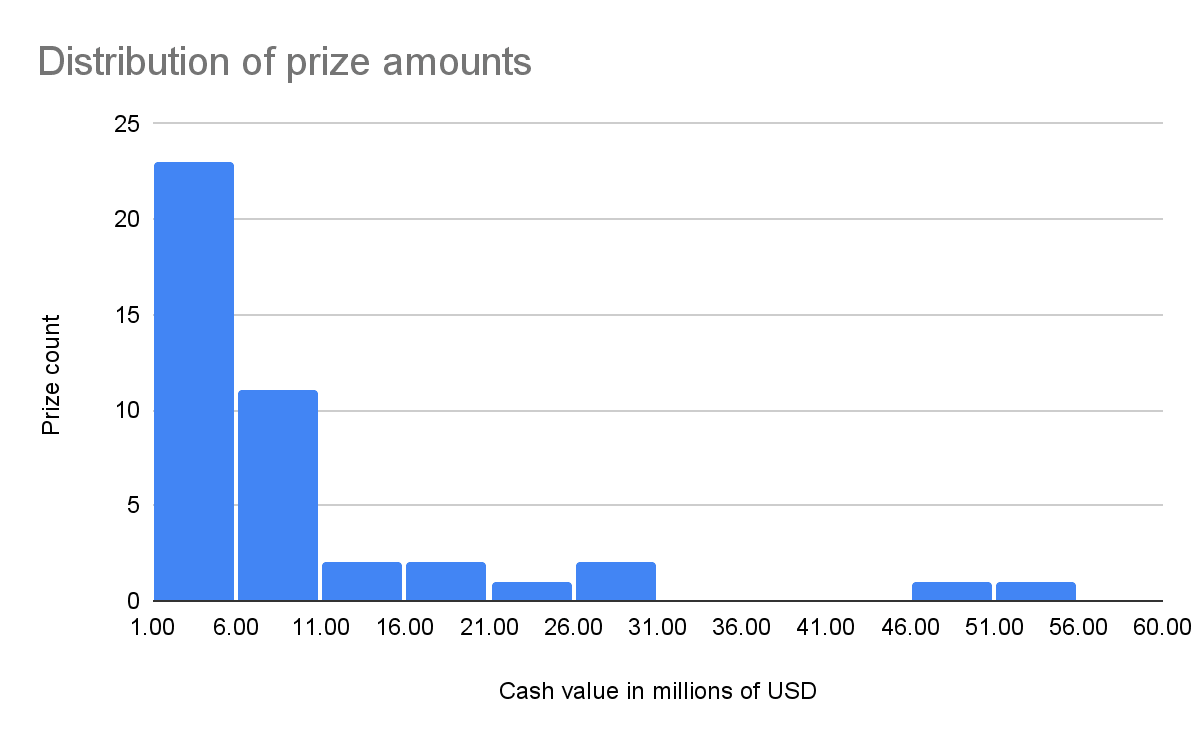

Based on this list, we created a histogram showing the distribution of prize amounts in Figure 3 below. The largest prizes we found in terms of cash awards were America’s Space Prize at $50 million and the GE Ecomagination Challenge: Powering the Grid at $55 million.

Figure 3 - Distribution of prize amounts based on the list of prizes we assembled (in millions USD)

Two case studies of large-scale inducement prizes

As we discussed above, there is a paucity of rigorous, quantitative studies on the impact of prizes. In this section, we therefore complement the empirical evidence discussed in the previous sections with the findings of two case studies of modern, large-scale prizes. We focus on two examples of the X Prize: the Google Lunar X Prize and the Auto X Prize. According to Murray et al. (2012, p. 4), the X Prize can be assumed to be typical of contemporary Grand Innovation Prizes[12] in design and implementation, as the approach developed by the X Prize Foundation is emerging as a canonical design and prizes in the X Prize “tradition” seem increasingly common. All of the X Prizes share a similar architecture, scale, and scope.

A caveat to these case studies is that there has been no unified framework or clear approach within which to evaluate prizes and undertake comparative analysis (Murray et al., 2012, p. 3). Thus, it is difficult to systematically evaluate the performance of different prizes and to summarize and compare the findings of different case studies.

Google Lunar X Prize

By far the most comprehensive case study on modern prizes we found is a public policy dissertation by Kay (2011). He used an empirical, multiple-case-study methodology to investigate a main case study, the Google Lunar X Prize (see Appendix 4 for a more detailed description of the Google Lunar X Prize), and two pilot cases: the Ansari X Prize and the Northrop Grumman Lunar Lander Challenge. He then examined four main aspects of these prizes: the motivations of prize entrants, the organization of prize R&D activities, the prize technologies, and the impact of prizes on technological innovation.

The study used different sources of data, such as direct observation, on-site interviews, questionnaires, and document analysis. Kay (2011) triangulated the different data sources with equal weighting for data collected through different methods. We would like to note that we were only able to read a fraction of his almost 400-page-long dissertation, which is very rich and comprehensive. Thus, it is possible that we missed some potentially important and interesting aspects of his analysis.

We summarize his results and conclusions below (see Kay [2011, pp. 263-264] for a nice overview of the research questions, hypotheses, variables of interest, results, and conclusions in table format).

- Motivations of prize entrants:

The prizes attracted diverse entrants, including unconventional ones, such as individuals and organizations that were generally uninvolved with the prize technologies. Participants were primarily drawn to the non-monetary benefits of prizes (e.g. visibility, prestige, the opportunity to participate in technology development) and the potential market value of the prize technologies. The author found that the monetary reward was less important to participants than other incentives. However, it was still important for propagating the idea of the prize. Interestingly, the prizes attracted many more people apart from the participants, such as volunteers and partners, who contributed indirectly to the prize and supported official participants.

- Organization of prize R&D activities:

Prizes could increase R&D activity and redirect ongoing industry projects to target diverse technological goals. However, he concluded that the development of prize competitions was difficult to predict. The organization of prize R&D activities and participants’ effort depended on the participants’ characteristics (e.g. goals, skills, resources), and could not be directly influenced by a specific competition design. He found interactions between R&D and fundraising activities, which might, in some circumstances, divert the participants’ efforts away from technological development.

- Prize technologies:

Prizes could selectively target technologies at different maturity levels (e.g. experimental research, incremental developments, commercialization). However, the quality of the innovation output was difficult to predict.

- Effect of prizes on technological innovation:

Prizes could spur innovation beyond what would have happened in their absence. However, the effect of prizes depended highly on the prize entrants’ characteristics and on the evolution of the prize competition’s overall context, such as whether the business context was favorable. He found the impact on innovation to be larger for larger prize incentives, more significant technology gaps, and challenge definitions open-ended enough to allow for unconventional approaches. Moreover, he concluded that prizes cannot induce technological breakthroughs but only enable them, and that they may require complementary incentives (e.g. commitments to purchase inventions) or support (e.g. seed funding).

Kay (2011) also concluded that prizes are particularly appropriate when the aim is to (the example prizes in parentheses are from Table 8.3 on p. 279):

- Explore new, experimental methods that involve high-risk R&D (e.g. Food Preservation Prize, Longitude Prize)

- Develop technology to break critical technological barriers (e.g. Ansari X Prize)

- Improve technology to achieve higher performance standards (e.g. Northrop Grumman Lunar Lander Challenge, DARPA Challenges)

- Stimulate the diffusion, adoption, and/or commercialization of technologies (e.g. Google Lunar X Prize)

Furthermore, according to Kay (2011, p. 293f), prizes can selectively focus on specific technologies, and target certain innovators and geographic areas. They can also leverage significant amounts of funding. Relative to other incentive mechanisms (e.g. grants), prizes involve higher programmatic risks, as their outputs are difficult to predict. The incentive power of prizes depends on their uniqueness — that is, a prize in a context with many rival prizes has less incentive power than a similar prize that is held in a context without equivalent (or any) competing prizes. A successful prize design depends on many parameters.[13]

Auto X Prize

In this section, we summarize the findings of a case study of the Auto X Prize conducted by Murray et al. (2012).[14] See Appendix 4 for more detailed background information on the Auto X Prize. Murray et al. (2012) provided a systematic examination of this recent Grand Innovation Prize (GIP). To do so, the authors defined three dimensions for GIP evaluation: objectives, design (including ex ante specifications, ex ante incentives, qualification rules, and award governance), and performance. They compared observations from three domains within this framework: empirics, theory, and policy. Their analysis is based on a combination of data sources: direct observation, interviews, surveys, and extant theory and policy documents.

The authors concluded that the empirical reality of the Auto X Prize deviated substantially from the ideal form of a prize, as described in the theoretical economic literature and advocated in policy-making documents. We find this unsurprising but interesting, as it shows that the theoretical research on prizes done so far is of limited usefulness in understanding modern prizes implemented in practice.

They found the contrasts to be particularly strong in four areas, which we quote here (p. 13):

- “Contrary to the dominant theoretical perspective,[15] which assumes GIPs have a single, ultimate objective – to promote innovative effort – we find that GIPs blend a myriad of complex goals, including attention, education, awareness, credibility and demonstrating the viability of alternatives. Paradoxically, our results suggest that prizes can be successful even when they do not yield a “winner” by traditional standards. Conversely, prizes in which a winner is identified and a prize awarded may still fail to achieve some of their most important design objectives.

- We find the types of problems that provide the target for GIPs are not easily specified in terms of a single, universal technical goal or metric.[16] The reality is not nearly as clear or simple as either theorists or advocates have assumed. The complex nature of the mission (e.g., a highly energy efficient vehicle that is both safe to drive and can be manufactured economically), and the systemic nature of the innovations required to solve the stated problem, requires that multiple dimensions of performance be assessed. Some of these dimensions can neither be quantified nor anticipated, while others may change as the competition unfolds. Common metrics used today (e.g., miles per gallon) may be driven by current technical choices (i.e., gasoline engines), and translating them to work for new approaches (e.g., hydrogen fuel cells) may not be easily achieved. If done poorly, this will bias competitions in favor of certain technical choices and away from others. [The Auto X Prize] demonstrates that contemporary GIPs are complex departures from smaller prizes examined by prior researchers, where the competitions involved individuals vying to solve relatively narrow problems (e.g., Lakhani et al., 2007). In those studies, the objective functions for solution providers are much more easily specified, as are the accompanying test procedures and mechanisms for governing and managing the process.

- We find a clear divergence between theoretical treatments of the incentive effect of a prize purse and the reality of why participants compete. Critically, there are a variety of non-prize incentives that are just as (if not more) salient to participants, many of which can be realized regardless of whether a team “wins” or not. Some of these broader incentives – publicity, attention, credibility, access to funds and testing facilities – are financial in nature, but not captured by the size of the purse. Others – such as community building – are social in nature and are difficult to measure in terms of the utility they generate for participants. Prior work has tended to view situations where prize participants collectively “spend” substantially more than the prize purse (i.e., in terms of resources) as evidence that prizes are inefficient in terms of inducing the correct allocation of inventive effort. Our observations however, provide an alternative explanation for why this may not be the case. Participants might, in fact, be responding rationally to a broader range of incentives than has been assumed in prior work.

- Our work highlights the critical and underappreciated role of prize governance and management, a topic that is notably absent in the theoretical literature. We find that the mechanisms for governance and management must be designed explicitly to suit the particular prize being developed, a costly and time-consuming activity. Furthermore, given the difficulties in specifying ex ante all that can happen, rule modifications and adaptations along the way are to be expected, and these must be handled in a way that respects the rights and opinions of those participants who are already committed to the effort.”

The authors concluded that, “our results suggest that GIPS cannot be viewed as a simple incentive mechanism through which governments and others stimulate innovation where markets have failed. Rather they are best viewed as a novel type of organization, where a complex array of incentives are considered and managed in order to assure that successful innovation occurs.”

A brief review of two concepts related to prizes

In the following, we briefly review two relatively novel concepts related to prizes that have recently gained momentum in the global health and development space. First, we review advance market commitments (AMCs) with a case study on the pneumococcal pilot AMC and a discussion of its critiques. Second, we briefly review the Grand Challenges launched by the Bill & Melinda Gates Foundation.

Advance market commitments (AMCs) have a lot of potential for impact and current critiques and issues may be resolved with more research and experience

[Confidence: We have medium confidence in our conclusions regarding AMCs, which are predominantly based on a case study of the pilot pneumococcal AMC and a conversation with one expert. Given that AMCs have received limited attention in the scientific and grey literature so far, we believe that a further review of the literature is unlikely to change our views. However, conversations with other experts might change our conclusions.]

Brief introduction to AMCs

The idea of advance market commitments is to provide money to guarantee a market for a product. AMCs were first proposed by economics professor Michael Kremer (2000a, 2000b) and gained additional momentum when a report by the Center for Global Development (Levine et al., 2005) expanded on Kremer’s ideas and introduced the concept of the AMC as a financial mechanism that could encourage the production and development of affordable vaccines tailored to the needs of developing countries.

AMCs aim to address two failings of global health markets: First, pharmaceutical companies have little incentive to develop medicines for diseases that are more prevalent in low-income countries because those most affected have low purchasing power. Thus, private R&D investments into neglected diseases are much lower than the socially desirable levels (e.g. Kremer & Glennerster, 2004). Second, once developed, medicines often reach low-income countries long after their introduction in high-income countries, which leaves many people in poorer countries untreated or unvaccinated even though products that could prevent their deaths exist (MSF, 2020, p. 3).

In the case of vaccines, AMC donors pledge that if a firm develops a specified new vaccine and sets the price close to manufacturing cost, they will “top up” the price by a certain amount per dose. This top-up payment strengthens firms’ incentives by increasing the profitability of serving those markets. Moreover, the AMC’s price cap ensures that the vaccine remains affordable for people in poverty (Scherer, 2020).

The first AMC was piloted in 2007 to purchase pneumococcal vaccines, which we detail in the next section. Christopher Snyder, an economics professor who researches AMCs, told us in a conversation that it is difficult to know exactly how many proposed and ongoing AMCs there are, as some are technically not AMCs but use the term for branding purposes (e.g. COVAX AMC), while others combine different push and pull funding mechanisms (e.g. carbon removal AMC). We provide some examples of proposed and ongoing AMCs and related mechanisms:

- In 2020, the COVAX AMC was launched to make donor-funded doses of Covid-19 vaccines available to LMICs. Moreover, Operation Warp Speed,[17] a public-private partnership initiated by the US government, had a Covid-19 AMC as one of its components.

- In 2022, the Frontier fund was launched to mobilize $925 million for carbon removal using an AMC.

- There is an ongoing eight-year AMC for foot-and-mouth disease vaccines for animals, tailored to the needs of Eastern Africa.

- The Center for Global Development proposed an advance commitment[18] for tuberculosis (Chalkidou et al., 2020).

- An advance purchase commitment[19] for an Ebola vaccine was signed by Gavi, the Vaccine Alliance, in 2016.

Insights and lessons learned from the pilot pneumococcal AMC

Program launch

In 2007, Gavi, the Vaccine Alliance, piloted the use of an AMC to purchase pneumococcal vaccines (PCV) for children in the developing world with a total commitment of $1.5 billion by the Gates Foundation and five countries. At the time, the World Health Organization estimated that pneumococcus killed more than 700,000 children under five in developing countries per year (WHO, 2007).

According to Kremer et al. (2020, p. 270), “the design called for firms to compete for 10-year supply contracts capping the price at $3.50 per dose. A firm committing to supply X million annual doses (X/200 of the projected 200 million annual need) would secure an X/200 share of the $1.5 billion AMC fund, paid out as a per-dose subsidy for initial purchases. The AMC covered the 73 countries below the income threshold for Gavi eligibility. Country co-payments were set according to standard Gavi rules.”
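To make the payout rule quoted above concrete, here is a minimal Python sketch of the X/200 share calculation. The fund size, projected annual need, and price cap come from the quote; the 30-million-dose commitment and the flat $3.00 per-dose top-up are hypothetical illustration values, and treating the subsidy as a flat top-up paid until a firm's share is exhausted is our simplifying assumption, not the pilot's actual payment schedule.

```python
# Figures from the pilot design quoted above (Kremer et al., 2020).
AMC_FUND_USD = 1.5e9           # total AMC commitment
PROJECTED_ANNUAL_NEED_M = 200  # projected annual need, millions of doses
PRICE_CAP_USD = 3.50           # long-run price cap per dose


def fund_share_usd(committed_annual_doses_m: float) -> float:
    """Dollar share of the AMC fund secured by a firm committing to supply
    `committed_annual_doses_m` million doses per year (an X/200 share)."""
    if not 0 < committed_annual_doses_m <= PROJECTED_ANNUAL_NEED_M:
        raise ValueError("commitment must be between 0 and the projected need")
    return AMC_FUND_USD * committed_annual_doses_m / PROJECTED_ANNUAL_NEED_M


def subsidized_doses(share_usd: float, top_up_per_dose_usd: float) -> float:
    """Number of initial doses a fund share can subsidize.

    ASSUMPTION: the share is paid out as a flat per-dose top-up
    (a hypothetical parameter, not the pilot's actual schedule).
    """
    if top_up_per_dose_usd <= 0:
        raise ValueError("top-up must be positive")
    return share_usd / top_up_per_dose_usd


if __name__ == "__main__":
    hypothetical_top_up = 3.00  # illustrative only
    share = fund_share_usd(30)  # hypothetical 30 million doses/year commitment
    doses = subsidized_doses(share, hypothetical_top_up)
    print(f"Fund share: ${share:,.0f}")
    # Under this assumption, the firm receives the capped price plus the
    # top-up on each subsidized initial dose.
    print(f"Subsidized doses: {doses:,.0f} at "
          f"${PRICE_CAP_USD + hypothetical_top_up:.2f} per dose")
```

A larger commitment secures a proportionally larger slice of the fund, which is how the design ties the subsidy to the capacity a firm promises to build.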

Program outcomes

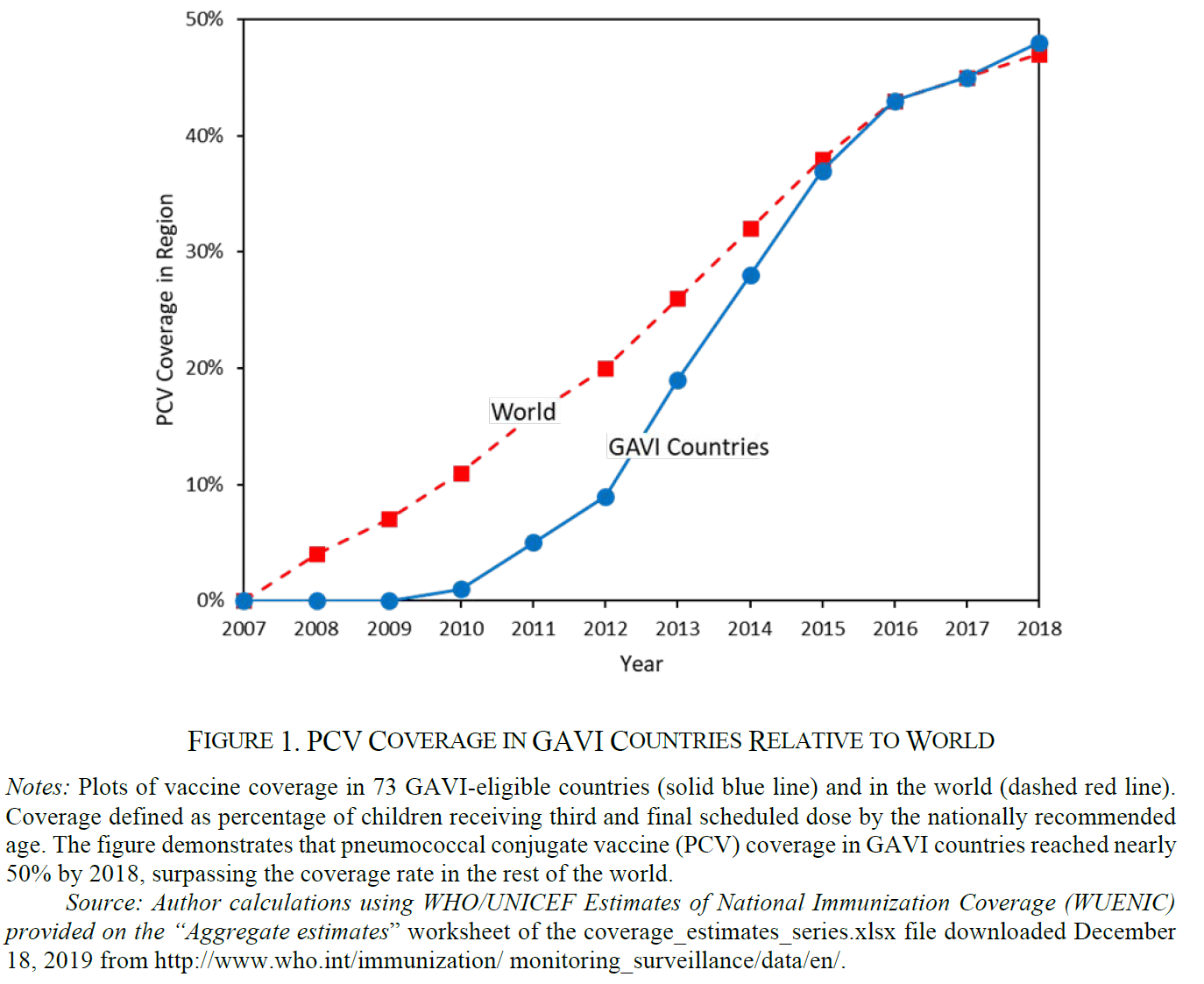

According to Kremer et al. (2020), by 2016, PCV was distributed in 60 of the 73 eligible countries, with doses sufficient to immunize over 50 million children annually. As Figure 4 below shows, by 2018, nearly half of the target child population in Gavi countries was covered, slightly surpassing the coverage rate in non-Gavi countries.

Figure 4 - PCV coverage in Gavi countries relative to non-Gavi countries (Kremer et al., 2020, online appendix)

According to estimates from Tasslimi et al. (2011), which we did not have time to review, the PCV rollout has been highly cost-effective: at initial program prices, it cost $83 per DALY averted. According to Kremer et al. (2020), evidence on the cost-effectiveness of PCV does not prove that the AMC as a whole was cost-effective, because there is no valid counterfactual. However, they argue that the high cost-effectiveness of PCV implies that the AMC would have been worthwhile if there were even a small chance that it sped up PCV adoption.
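One way to read Kremer et al.'s argument is as a break-even calculation: if the AMC's additional cost buys a given number of DALYs only with some probability, the expected cost per DALY is the cost divided by that probability times the DALYs. The Python sketch below illustrates this reading under our own assumptions; the function names, the additional cost, and the funder's cost-per-DALY threshold are all hypothetical, and this is not Kremer et al.'s actual calculation.

```python
def expected_cost_per_daly(extra_cost_usd: float,
                           dalys_if_sped_up: float,
                           prob_sped_up: float) -> float:
    """Expected cost per DALY averted when the program averts
    `dalys_if_sped_up` DALYs only with probability `prob_sped_up`."""
    if not 0 < prob_sped_up <= 1:
        raise ValueError("probability must be in (0, 1]")
    return extra_cost_usd / (prob_sped_up * dalys_if_sped_up)


def break_even_probability(extra_cost_usd: float,
                           dalys_if_sped_up: float,
                           threshold_usd_per_daly: float) -> float:
    """Smallest probability of a speed-up at which the expected cost per
    DALY just meets a funder's (hypothetical) threshold."""
    return extra_cost_usd / (dalys_if_sped_up * threshold_usd_per_daly)


if __name__ == "__main__":
    # All inputs are hypothetical illustration values.
    cost, dalys, threshold = 100e6, 1e6, 500.0
    print(expected_cost_per_daly(cost, dalys, prob_sped_up=0.5))
    print(break_even_probability(cost, dalys, threshold))
```

The more DALYs a faster rollout would avert relative to its additional cost, the lower the probability of a speed-up needed for the program to clear a given threshold, which is the intuition behind the "even a small chance" claim.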

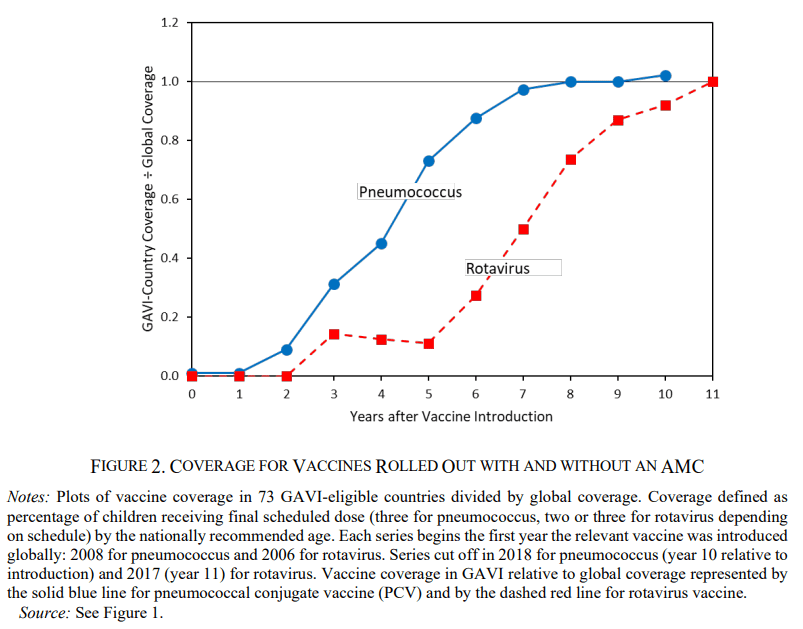

While it is impossible to know for sure whether the AMC sped up PCV adoption, Kremer et al. (2020, p. 5) used rotavirus vaccine adoption as an approximate counterfactual for PCV adoption.[20] The authors claim that, as Figure 5 below shows, vaccine coverage in Gavi countries converged to the global rate almost five years faster for PCV than for the rotavirus vaccine. They calculated that had PCV coverage increased at the same (slower) rate as rotavirus vaccine coverage, over 12 million DALYs would have been lost. Thus, to the extent that the rotavirus vaccine is a reasonable counterfactual (which we did not have time to investigate), we can estimate the number of DALYs averted by the PCV AMC at over 12 million.
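The Python sketch below shows the general shape of such a counterfactual calculation: sum the yearly gap between a faster and a slower coverage path, multiply by the target birth cohort, and convert the extra vaccinated children into DALYs. Every input is hypothetical, the flat DALYs-per-vaccinated-child figure is a simplifying assumption, and we have not checked that this matches the model Kremer et al. (2020) actually used.

```python
from typing import Sequence


def dalys_lost_from_slower_rollout(faster_coverage: Sequence[float],
                                   slower_coverage: Sequence[float],
                                   target_cohort: float,
                                   dalys_averted_per_vaccinated_child: float) -> float:
    """DALYs lost if coverage had followed the slower path instead of the
    faster one, assuming a flat DALY benefit per vaccinated child."""
    if len(faster_coverage) != len(slower_coverage):
        raise ValueError("coverage paths must cover the same years")
    # Extra children vaccinated, summed over years, under the faster path.
    extra_children_vaccinated = sum(
        (fast - slow) * target_cohort
        for fast, slow in zip(faster_coverage, slower_coverage)
    )
    return extra_children_vaccinated * dalys_averted_per_vaccinated_child


if __name__ == "__main__":
    # Hypothetical coverage shares for three consecutive years.
    faster = [0.1, 0.3, 0.5]
    slower = [0.0, 0.1, 0.3]
    print(dalys_lost_from_slower_rollout(faster, slower,
                                         target_cohort=1e6,
                                         dalys_averted_per_vaccinated_child=2.0))
```

The result is only as credible as the slower path chosen as the counterfactual, which is why the choice of the rotavirus vaccine as a comparator matters so much.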

Figure 5 - Coverage for vaccines rolled out with and without an AMC (Kremer et al., 2020, online appendix)

When should AMCs be used?

According to Sigurdson (2021, p. 8), while prizes may work well for challenges in which innovation can be easily decoupled from implementation, in scenarios where implementation is as important as, or more important than, the innovation itself (e.g. vaccine delivery), other mechanisms such as AMCs may be more suitable for incentivizing a desired solution.

An independent process and design evaluation report commissioned by Gavi (Chau et al., 2013, p. 81) laid out a few steps to follow in order to determine whether an AMC or a different type of program is appropriate. We provide a copy in Appendix 5. Although the report raises some important considerations (e.g. the level of market maturity and the type of market failure), it does not give concrete guidance on which mechanism to choose.

In a conversation with us, Snyder explained that the incentives of an AMC may not align with the best outcome in all contexts. He gave the example of Ebola vaccines, where, in his view, inducement prizes with a fixed payment are better than AMCs tied to sales. He explained that if local vaccinations in an emergent outbreak are very effective, the epidemic is quelled before many units of a vaccine get sold. This limits how lucrative the AMC can be and creates perverse incentives (i.e. a high-performing vaccine that stopped the outbreak very early would be rewarded less than a poorer-performing one). In his view, a more appropriate model in this case would be rewarding firms for the social benefit gained or harm averted as a result of their product.[21] Snyder makes this point in a working paper in which he and colleagues design the optimal mechanism for diseases like Ebola and Covid-19 (Snyder, Hoyt, & Douglas, 2022).
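The perverse incentive Snyder describes can be illustrated with a toy comparison of two reward rules. In the Python sketch below, the `VaccineOutcome` type, both reward functions, and all numbers are hypothetical; it is not the optimal mechanism from Snyder, Hoyt, and Douglas (2022), only a stylized contrast between paying per dose sold and paying per case averted.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class VaccineOutcome:
    """Hypothetical outcome of a vaccine deployed during an outbreak."""
    name: str
    doses_sold: int     # doses sold before the outbreak ends
    cases_averted: int  # cases averted relative to no vaccine


def sales_tied_reward(outcome: VaccineOutcome, top_up_per_dose: float) -> float:
    """AMC-style reward: proportional to the number of doses sold."""
    return outcome.doses_sold * top_up_per_dose


def harm_averted_reward(outcome: VaccineOutcome,
                        payment_per_case_averted: float) -> float:
    """Reward proportional to harm averted (here, cases prevented)."""
    return outcome.cases_averted * payment_per_case_averted


if __name__ == "__main__":
    # Hypothetical: the better vaccine ends the outbreak early, so it
    # sells fewer doses but averts more cases.
    strong = VaccineOutcome("high-efficacy", doses_sold=100_000, cases_averted=10_000)
    weak = VaccineOutcome("low-efficacy", doses_sold=500_000, cases_averted=3_000)
    for o in (strong, weak):
        print(o.name,
              sales_tied_reward(o, top_up_per_dose=10.0),
              harm_averted_reward(o, payment_per_case_averted=500.0))
```

Under the sales-tied rule the weaker vaccine earns more, while under the harm-averted rule the ranking flips, which is the misalignment described above.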

This approach also has drawbacks, as it may be hard to reach a consensus on how much of the harm averted is strictly attributable to the funded product.

Discussion of critiques of AMCs

We found several critiques of different aspects of AMCs, relating both to the AMC concept in general and to the pneumococcal pilot AMC specifically. A 2020 report[22] published by Médecins Sans Frontières (MSF) critically analyzed the pilot AMC and its impact on access to pneumonia vaccines for populations in need, and drew lessons from it. Others found theoretical flaws in the AMC concept (Sonderholm, 2009) or criticized high program costs (e.g. Light, 2005). In this section, we list and explain some of these critiques and provide a brief discussion.

R&D not accelerated

According to MSF (2020, p. 1), “the AMC was flawed from the outset in its selection of pneumococcal disease, which already had a vaccine on the market, since 2000. PCV was virtually inaccessible to developing countries due to its high price, not because of a lack of R&D. The selection of a disease with an existing vaccine provided little, if any, incentive for accelerating R&D timelines of other manufacturers who had already begun development prior to the AMC inception.”

We discussed this point with Christopher Snyder. He explained that while it is technically true that the pilot AMC did not speed up R&D, this was actually a deliberate design feature of this particular AMC. While the AMC concept was first proposed with a technologically distant target in mind, particularly to encourage research on vaccines such as one for malaria (Kremer & Glennerster, 2004), the concept was later expanded to also encompass technologically close targets in a Center for Global Development working group report (Levine et al., 2005). For a vaccine that is further along in its R&D process, the challenge switches from incentivizing R&D to incentivizing adequate production capacity (Kremer et al., 2020, p. 1), which requires substantial investment even after the R&D process is completed.[23] Technologically distant and close targets require different AMC designs. In our understanding, the AMC concept has not yet been tested for a technologically distant target, such as the malaria vaccine originally envisioned.

High program costs

There have been some concerns about the cost-effectiveness of the AMC. For example, according to Donald Light, a health policy researcher, the estimated cost per child saved under the PCV AMC was $4,722, whereas programs extending vaccines against diseases such as polio, measles, and yellow fever to children who don't yet receive them would save more lives at a lower cost. Light also mentioned multidrug packages for neglected tropical diseases that cost about 40 cents per person per year (Scudellari, 2011; Light, 2005). We haven't been able to find the direct source of this cost-effectiveness estimate and we don't know how it was calculated.

According to MSF (2020, p. 2), “a lack of transparency on costs, capacity, and pricing decisions fed criticism that the AMC acted as a vehicle for private companies to make unnecessarily high profits at the expense of broader vaccine access. The AMC design team lacked critical information and sufficient expertise to appropriately negotiate the original price per dose. If more data from the manufacturers on the costs of production and capacity scale-up had been forthcoming, and if more experts with economic or vaccine-industry experience had been involved, the initial price ceiling of $3.50 per dose might have been lower but still sufficient to incentivize manufacturers to participate in the AMC. [...] PCV remains one of the most expensive among the 12 vaccines supported by Gavi.”

We mentioned this critique to Snyder, who agreed that, for example, the polio vaccine at a couple of cents per dose is much more cost-effective than the pneumococcal AMC. However, he cautioned against confusing average and marginal cost-effectiveness: polio might be better on average, but the pneumococcal AMC might still be worth funding on the margin. He also argued that the option value of learning should be factored into the program's benefits, though he acknowledged that this benefit has diminishing returns.
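Snyder's distinction between average and marginal cost-effectiveness can be shown with a toy example. In the Python sketch below, each program is a hypothetical list of funding tranches (cost, DALYs averted) with diminishing returns; the numbers are invented for illustration and are not estimates for polio or PCV. A program can look far better on average while its next dollar buys much less than another program's next dollar.

```python
from typing import List, Tuple

# (cost in USD, DALYs averted) for one funding tranche; values are hypothetical.
Tranche = Tuple[float, float]


def average_cost_per_daly(funded: List[Tranche]) -> float:
    """Total cost divided by total DALYs over all tranches funded so far."""
    total_cost = sum(cost for cost, _ in funded)
    total_dalys = sum(dalys for _, dalys in funded)
    if total_dalys <= 0:
        raise ValueError("funded tranches must avert a positive number of DALYs")
    return total_cost / total_dalys


def marginal_cost_per_daly(next_tranche: Tranche) -> float:
    """Cost per DALY of the next, not-yet-funded tranche."""
    cost, dalys = next_tranche
    return cost / dalys


if __name__ == "__main__":
    # Program A: very cheap early tranches, but close to saturation.
    a_funded, a_next = [(1e6, 1e5), (1e6, 5e4)], (1e6, 500.0)
    # Program B: more expensive on average, but still far from saturation.
    b_funded, b_next = [(1e6, 1e4)], (1e6, 8e3)
    print("average:", average_cost_per_daly(a_funded), average_cost_per_daly(b_funded))
    print("marginal:", marginal_cost_per_daly(a_next), marginal_cost_per_daly(b_next))
```

In this toy setup, a funder deciding where to put the next dollar should compare marginal figures, which here favor program B even though program A has the lower average cost per DALY.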