I just returned from Manifest, a Bay Area rationalist conference hosted by the prediction market platform Manifold. The conference was great and I met lots of cool people!

A common topic of conversation was AI and its implications for the future. The standard pattern for these conversations is dueling estimates of P(doom), but I want to avoid this pattern. It seems difficult to change anyone’s mind this way, and the P(doom) framing splits all futures into heaven or hell without much detail in between.

Instead of P(doom), I want to focus on the probability that my skills become obsolete. Even in worlds where we are safe from apocalyptic consequences of AI, there will be tumultuous economic changes that might leave writers, researchers, programmers, or academics with much less (or more) income and influence than they had before.

Here are four relevant analogies which I use to model how cognitive labor might respond to AI progress.

First: the printing press. If you were an author in 1400, the largest value-add you brought was your handwriting. The labor and skill that went into copying made up the majority of the value of each book. Authors confronted with a future where their most valued skill was automated at thousands of times their speed and accuracy were probably terrified. Each book would be worth a tiny fraction of what it was worth before, surely not enough to support a career.

With hindsight, we see that even as all the terrifying extrapolations of printing automation materialized, the income, influence, and number of authors soared. Even though each book cost thousands of times less to produce, the quantity of books demanded rose so much in response to the falling cost that it outstripped the productivity gains. Meeting that demand required both a roughly 1000x increase in books per author and more authors.
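A minimal sketch of the mechanism, assuming a constant-elasticity demand curve Q = A·P^(-ε) and a price that falls in proportion to labor cost per unit (P proportional to 1/π, where π is output per worker). Labor required is Q/π, which scales as π^(ε−1), so employment rises with productivity only when ε > 1. The function name and the elasticity values are hypothetical illustrations, not historical estimates.

```python
def relative_labor(productivity_gain: float, elasticity: float) -> float:
    """Labor needed after a productivity gain, relative to before.

    Assumes constant-elasticity demand Q = A * P**(-elasticity) and a price
    that falls in proportion to labor cost per unit (P ~ 1 / productivity).
    Labor = Q / productivity, so the ratio is productivity_gain**(elasticity - 1).
    """
    if productivity_gain <= 0:
        raise ValueError("productivity_gain must be positive")
    quantity_ratio = productivity_gain ** elasticity  # price falls by the same factor
    return quantity_ratio / productivity_gain         # each worker now makes more units


# Hypothetical parameters: a 1000x productivity gain in both cases.
printing = relative_labor(1000, elasticity=1.2)  # elastic demand: more workers
farming = relative_labor(1000, elasticity=0.3)   # inelastic demand: fewer workers
print(f"printing-like: {printing:.2f}x labor, farming-like: {farming:.4f}x labor")
```

With an elasticity of exactly 1, total spending on the good stays constant and employment is unchanged; under this toy model the printing press and mechanized farming sit on opposite sides of that threshold.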

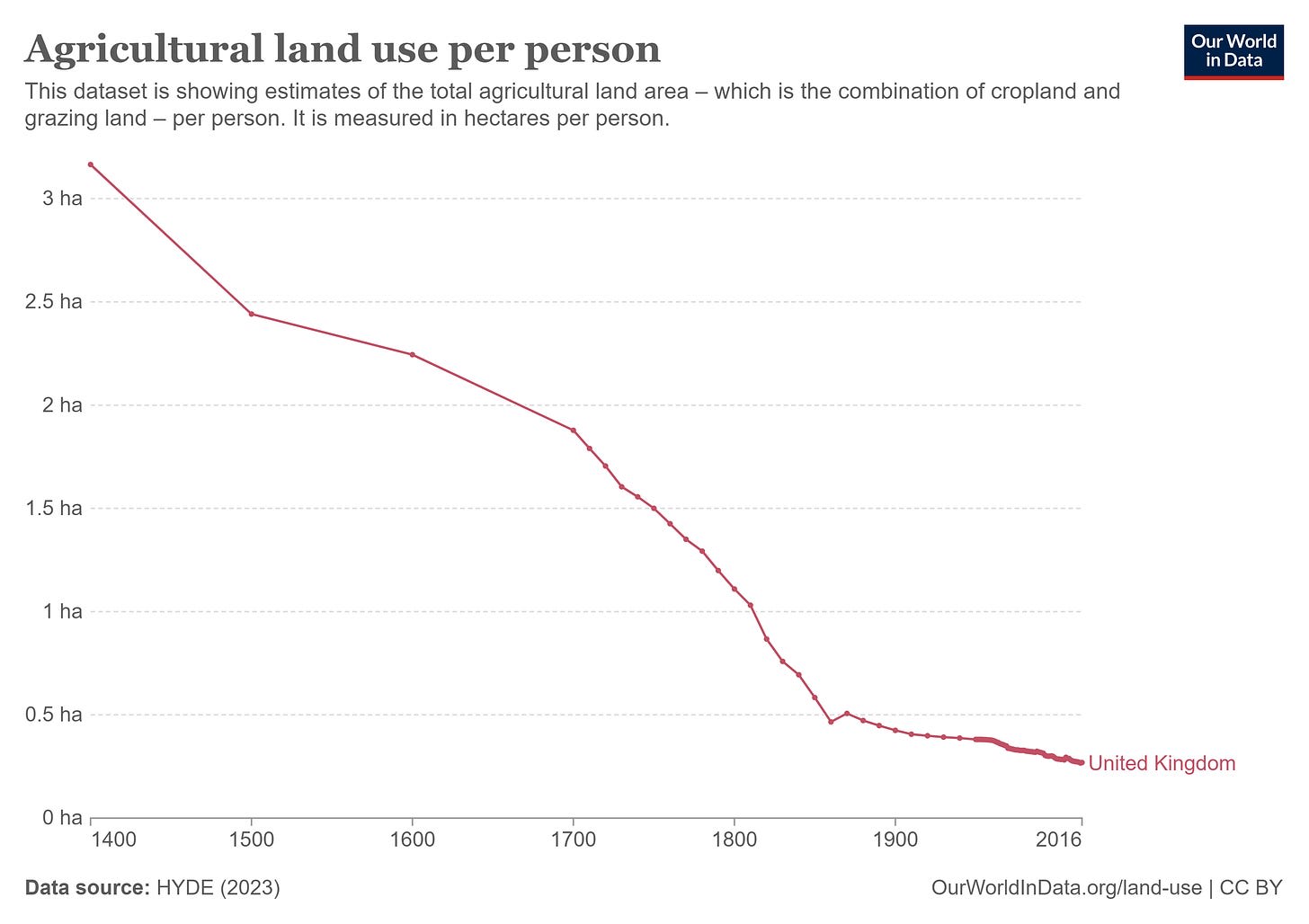

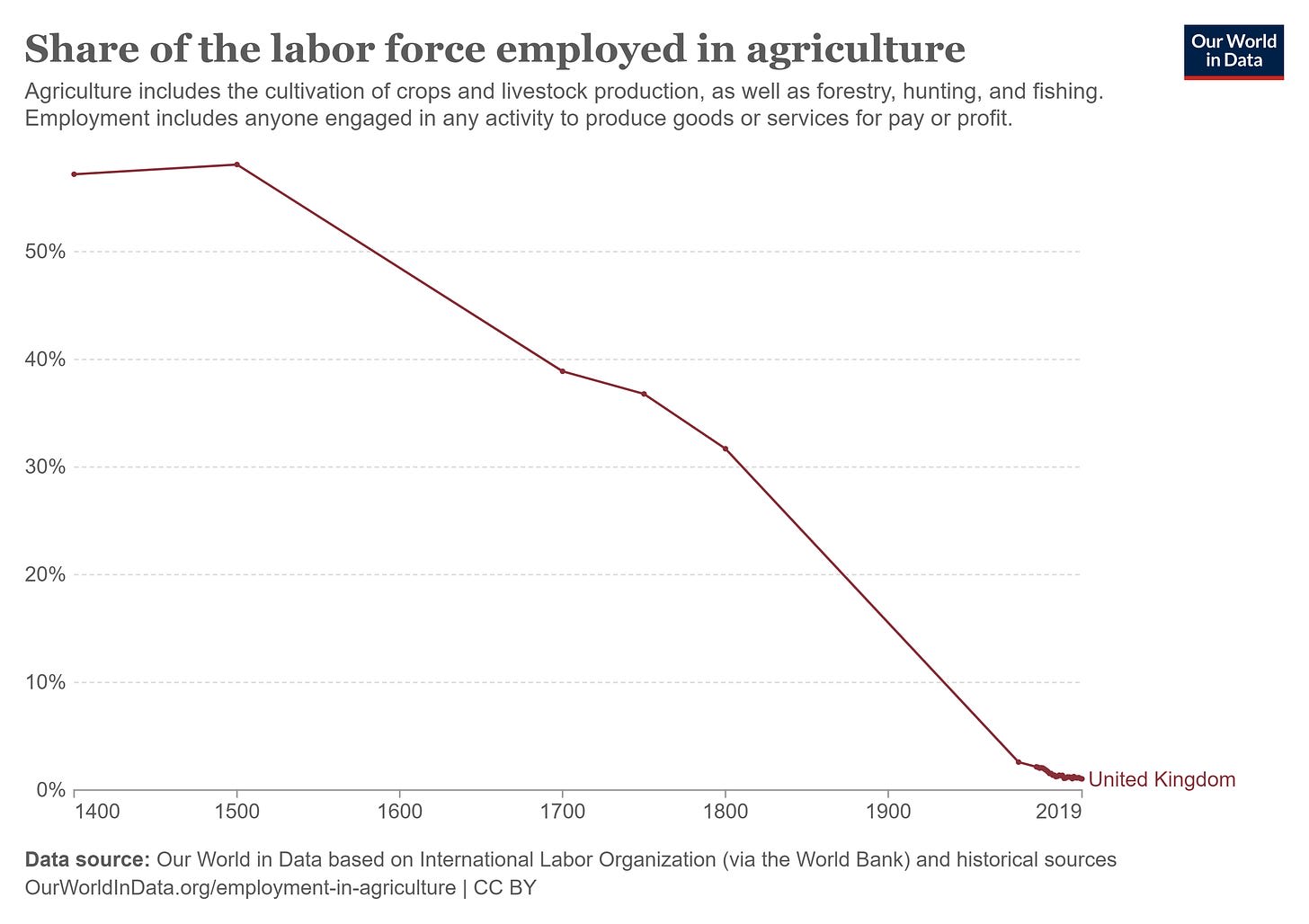

Second: the mechanization of farming. This is another example of massive productivity increases but with different outcomes.

Unlike authors with the printing press, farmers saw production per person grow much faster than total consumption, so the ratio of the two was not low enough to grow the farming labor force or even sustain it. The per-capita incomes of farmers have doubled several times over, but there are many fewer farmers, even in absolute numbers.

Third: computers. Specifically, the shift from the job title of computer to the name of the machine that replaced it. The skill of solving complicated numeric equations by hand was outright replaced. There was some intermediate period of productivity enhancement where the human computers or cashiers used calculators, but eventually these tools became so cheap and easy for anyone to use that a separate, specialized position for someone who just inputs and outputs numbers disappeared. This industry was replaced by a new industry of people who programmed the automation of the previous one. Software engineering now produces and employs many more resources, in shares and absolute numbers, than the industry of human computers did. The skills required and rewarded in this new industry are different, though there is some overlap in skills which helped some computers transition into programmers.

Finally: the ice trade. In the 19th and early 20th centuries, before small ice machines were common, harvesting and shipping ice around the world was a large industry employing hundreds of thousands of workers. In the early 19th century this meant sawing ice off of glaciers in Alaska or Switzerland and shipping it in insulated boats to large American cities and the Caribbean. Later in the century, mechanical refrigeration let large artificial ice plants pop up closer to their customer base. By World War II the industry had collapsed and been replaced by home refrigeration. This is similar to the computer story, but the replacement job of manufacturing refrigerators never grew larger than the globe-spanning, labor-intensive ice trade.

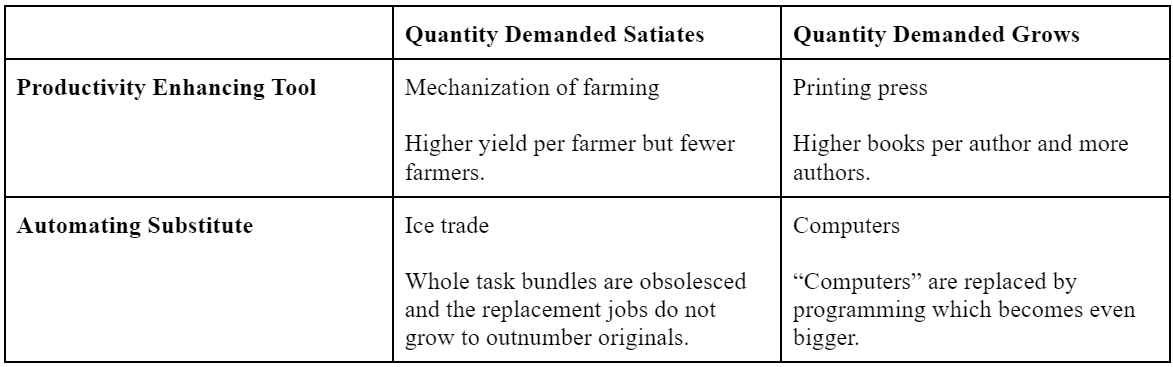

This framework of analogies is useful for projecting possible futures for different cognitive labor industries. It helps concretize two important variables: whether the technology will be a productivity-enhancing tool for human labor or an automating substitute, and whether demand for the outputs of the augmented production process is elastic enough to raise the quantity of labor input.
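The two variables can be written as a toy lookup from (tool vs. substitute, elastic vs. inelastic demand) to the four analogies. This is one reading of the essay's examples rather than a model, and it assumes constant-elasticity demand, under which labor input rises with productivity only when the elasticity exceeds 1. The `Role` enum, `ANALOGIES` table, and `classify` function are hypothetical names.

```python
from enum import Enum


class Role(Enum):
    TOOL = "productivity-enhancing tool"
    SUBSTITUTE = "automating substitute"


# Hypothetical mapping of the 2x2 framework onto the four analogies.
ANALOGIES = {
    (Role.TOOL, True): "printing press",       # elastic demand: more workers
    (Role.TOOL, False): "mechanized farming",  # inelastic demand: fewer, richer workers
    (Role.SUBSTITUTE, True): "computers",      # job vanishes, larger replacement industry
    (Role.SUBSTITUTE, False): "ice trade",     # job vanishes, smaller replacement industry
}


def classify(role: Role, elasticity: float) -> str:
    """Return the closest analogy; elasticity > 1 means labor input rises with productivity."""
    return ANALOGIES[(role, elasticity > 1)]


print(classify(Role.TOOL, 1.5))        # printing press
print(classify(Role.SUBSTITUTE, 0.5))  # ice trade
```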

All of these analogies are relevant to the future of cognitive labor. In my particular research and writing corner of cognitive labor, the most optimistic story is the printing press. The biggest current value-adds for this work (literature synthesis, data science, and prose) seem certain to be automated by AI. But as with the printing press, automating the highest-value parts of a task is not sufficient to decrease the income, influence, or number of people doing it. Google automated much of the research process, yet it has supported the effusion of thousands of incredible online writers and researchers.

Perhaps this can continue and online writing will become even more rewarding, higher quality, and popular. This requires the demand for writing and research to expand enough to more than offset the increased productivity of each writer already producing it. With the printing press, this meant more people reading more copies of the same work. With the internet, there is already an essentially infinite supply of online writing and research that can be accessed and copied for free. Demand is already satiated there, but demand for the highest-quality pieces of content is not. In my life, at least, there is room for more Scott Alexander-quality writing despite being inundated with lots of content below that threshold. AI may enable a larger industry of people producing writing and research of the highest quality.

If the quantity demanded, even for these highest-quality pieces of content, does not increase enough, then we will see writing and research become more like mechanized farming: an ever smaller number of people using capital investment to satiate the world’s demand. Highly skewed winner-take-all markets on YouTube, larger teams in science, and the publishing market may be early signs of this dynamic.

The third and fourth possibilities, where so many tasks in a particular job are automated that the entire job is replaced, seem most salient for programmers and paralegals. It would be a poetic future where programmer, the job title which rose to replace the now archaic “computer,” also becomes an archaic term. This could happen if LLMs fully automate writing programs to fulfill a given goal and humans graduate to long-term planning and coordination of these AI programmers.

The demand for the software products that these planners could make in cooperation with LLMs seems highly elastic, and for this reason most forms of cognitive labor seem safe from the ice trade scenario. Perhaps automating something like a secretary would not increase the quantity demanded of the products they help produce enough to offset the decline in employment.

When predicting the impact of AI on a given set of skills, most people focus only on the left-hand axis of the 2x2 table above. But differences in the top axis can flip the sign of expected impact. Even if writing and research get enhanced productivity from AI tools, they can still be bad skills to invest in if all of the returns to this productivity go to a tiny group of winners in the market for ideas. Even if your current job is fully automated and replaced by an AI, it can still turn out well if you can get into a rapidly expanding replacement industry.

The top axis also holds the more relevant question for the future of cognitive labor, at least the kind I do: writing and research. Is there enough latent demand for good writing to accommodate both a 10x more productive Scott Alexander and a troupe of great new writers? Or will Mecha-Scott satiate the world and leave the rest of us practicing our handwriting in 1439?

I think none of these analogies are very good because they fail to capture what I see as the key difference between AI and previous technologies. In short, unlike the printing press or mechanized farming, I think AI will eventually be capable of substituting for humans in virtually any labor task (both existing and potential future tasks), in a functional sense.

This dramatically raises the potential for both economic growth and effects on wages, since it effectively means that—absent consumer preferences for human-specific services, or regulations barring AIs from doing certain tasks—AIs will be ~perfectly substitutable for human labor. In a standard growth model, this would imply that the share of GDP paid to human labor will fall to near zero as the AI labor force scales. In that case, owners of capital would become extremely rich, and AIs would collectively have far more economic power than humans. This could be very bad for humans if there is ever a war between the humans and the AIs.
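To make the "standard growth model" claim concrete, here is a minimal sketch assuming Cobb-Douglas production Y = K^α (H + A)^(1−α), where human labor H and AI labor A are perfect substitutes and factor markets are competitive. Every unit of labor then earns the same marginal product, so human wages are the fraction H/(H + A) of the constant labor share (1 − α), and AI wages accrue to whoever owns the AIs. The functional form, α = 0.3, and the scenario numbers are illustrative assumptions, not the Korinek and Suh model.

```python
def human_labor_share(humans: float, ai_workers: float, alpha: float = 0.3) -> float:
    """Share of GDP paid as human wages when AI labor perfectly substitutes for human labor.

    Assumes Y = K**alpha * (humans + ai_workers)**(1 - alpha) with competitive markets,
    so every unit of labor, human or AI, earns the same marginal product.
    Capital K does not affect the factor shares in this functional form.
    """
    total_labor = humans + ai_workers
    labor_share = 1 - alpha  # Cobb-Douglas: labor's share of GDP is constant
    return labor_share * humans / total_labor


# Hypothetical scenario: a fixed human workforce while the AI labor force scales up.
for ai in [0, 1, 10, 100, 1000]:
    print(f"AI labor = {ai:>4}x human labor -> human wage share of GDP = {human_labor_share(1, ai):.3f}")
```

In this toy setup the human wage share falls roughly in proportion to humans' share of total labor, which is the sense in which it approaches zero as the AI labor force scales.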

I think the correct way to analyze how AI will affect cognitive labor is inside of an appropriate mathematical model, such as this one provided by Anton Korinek and Donghyun Suh. Analogies to prior technologies, by contrast, seem likely to mislead people into thinking that there's "nothing new under the sun" with AI.

Quickly: the caveat "absent consumer preferences for human-specific services, or regulations barring AIs from doing certain tasks—AIs will be ~perfectly substitutable for human labor" is doing a lot of work. Functionally, I expect these to be a very large deal for a while.

> AIs would collectively have far more economic power than humans.

I mean, only if we treat them as individuals with their own property rights. We don't treat any other sort of software that way now. I would guess that we really don't want to do this - and I'd also guess that they won't be conscious unless we explicitly want that.

Perhaps you can expand on this point. I personally don't think there are many economic services for which I would strongly prefer a human perform them compared to a functionally identical service produced by an AI. I have a hard time imagining spending >50% of my income on human-specific services if I could spend less money to obtain essentially identical services from AIs, and thereby greatly expand my consumption.

However, if we are counting the value of interpersonal relationships (which are not usually counted in economic statistics), then I agree the claim is more plausible. Nonetheless, this also seems somewhat unimportant when talking about things like whether humans would win a war with AIs.

In this context, it doesn't matter that much whether AIs have legal property rights, since I was talking about whether AIs will collectively be more productive and powerful than humans. This distinction is important because, if there is a war between humans and AIs, I expect their actual productive abilities to be more important than their legal share of income on paper, in determining who wins the war.

But I agree that, if humans retain their property rights, then they will likely be economically more powerful than AIs in the foreseeable future by virtue of their ownership over capital (which could include both AIs and more traditional forms of physical capital).

I ultimately definitely agree that humans would very much lose at some level of AI capability. I was instead just trying to discuss the economic situation in cases where AIs don't rebel.

> I personally don't think there are many economic services for which I would strongly prefer a human perform them compared to a functionally identical service produced by an AI

I think that even small bottlenecks would eventually become a large deal. If 0.1% of a process is done by humans, but the rest gets automated and done for ~free, then that 0.1% is what gets paid for.

For example, solar panels have gotten far cheaper, but solar installations haven't, negating much of the impact past a point.

So I think that wherever humans can't be automated, there might be a lot more human work being done, or at least pay available for it.
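A minimal sketch of the bottleneck argument, assuming a fixed-proportions process in which a small human-only step cannot be substituted away: as the automated steps get cheaper, the human step's share of total cost rises toward 100%. The 0.1% starting share matches the example above; the speedup factors and the function name are hypothetical.

```python
def human_cost_share(human_fraction: float, automation_speedup: float) -> float:
    """Fraction of total cost paid for the human step after automating everything else.

    Assumes a fixed-proportions process: every unit of output needs the human step,
    and only the remaining steps get cheaper (by a factor of automation_speedup).
    """
    human_cost = human_fraction
    automated_cost = (1 - human_fraction) / automation_speedup
    return human_cost / (human_cost + automated_cost)


# Hypothetical process in which humans initially account for 0.1% of the cost.
for speedup in [1, 10, 1_000, 1_000_000]:
    share = human_cost_share(0.001, speedup)
    print(f"automated steps {speedup:>9,}x cheaper -> human share of cost = {share:.1%}")
```

Whether that rising cost share translates into more total human pay also depends on how much demand for the final product grows as it gets cheaper, which is the same elasticity question the post raises.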

I agree with this in theory, but in practice I expect these bottlenecks to be quite insignificant in both the short and long-run.

We can compare to an analogous case in which we open up the labor market to foreigners (i.e., allowing them to immigrate into our country). In theory, preferences for services produced by natives could end up implying that, no matter how many people immigrate to our country, natives will always command the majority of aggregate wages. However, in practice, I expect that the native labor share of income would decline almost in proportion to their share of the total population.

In the immigration analogy, the reason why native workers would see their aggregate share of wages decline is essentially the same as the reason why I expect the human labor share to decline with AI: foreigners, like AIs, can learn to do our jobs about as well as we can do them. In general, it is quite rare for people to have strong preferences about who produces the goods and services they buy, relative to their preferences about the functional traits of those goods and services (such as their physical quality and design).

(However, the analogy is imperfect, of course, because immigrants tend to be both consumers and producers, and therefore their preferences impact the market too -- whereas you might think AIs will purely be producers, with no consumption preferences.)

Again, I'm assuming that the AIs won't get this money. Almost everything AIs do basically gets done for "free", in an efficient market, without AIs themselves earning money. This is similar to how most automation works.

If AIs do get the money, things would be completely different to my expectations. Though in that case, I'd imagine that tech might move much more slowly, if these AIs didn't have some extreme race to the bottom, in terms of being willing to do a lot of work for incredibly cheap. I'm really not sure how to price the marginal supply curve for AIs.

That's not what I meant. I expect the human labor share to decline to near-zero levels even if AIs don't own their own labor.

In the case AIs are owned by humans, their wages will accrue to their owners, who will be humans. In this case, aggregate human wages will likely be small relative to aggregate capital income (i.e., GDP that is paid to capital owners, including people who own AIs).

In the case AIs own their own labor, I expect aggregate human wages will be both small compared to aggregate AI wages, and small compared to aggregate capital income.

In both cases, I expect the total share of GDP paid out as human wages will be small. (Which is not to say humans will be doing poorly. You can enjoy high living standards even without high wages: rich retirees do that all the time.)

I imagine some of the question would be "how monopolistic will these conditions be?" If there's one monopoly, they'd charge a ton, and I'd expect them to quickly dominate the entire world.

If there's "perfect competition", I'd expect this area to be far cheaper.

Right now LLMs seem much closer to "perfect competition" to me: companies are losing money selling them (I'm quite sure). I'm not sure what to expect going forward. I assume that people won't allow 1-2 companies to just start owning the entire economy, but it is a possibility. (This is basically a Decisive Strategic Advantage, at that point.)

All that said, I don't imagine the period I'm describing lasting all too long. Once humans become simulated well, and we really reach TAI++, lots of bets are off. It seems really tough to have a great model of that world, outside of "humans basically split up the light cone, by dividing the sources of production, which will basically be AIs"[1]

I agree that humans will basically stop being useful at that point.

But if that point is far away (40-90 years), this could be enough time for many humans to accumulate a lot of money/capital before then.

[1] "Split up" could mean "The CCP gets all of it"

Basically, I naively expect there to be some period where we have a lot of AI, but humans are still getting paid a lot - followed by some point where humans just altogether stop. (unless weird lock-in happens)

Maybe one good forecasting question is something like, "How much future wealth will be owned by AIs themselves, at different points in time?" I'll guess that the answer is likely to either be roughly 0% (as with most automations), or 100% (AI Takeover, though in these cases, it's not clear how to define the market)

Executive summary: The impact of AI on cognitive labor will depend on whether AI enhances productivity or automates jobs, and whether demand for the outputs is elastic enough to increase the quantity of labor.


This comment was auto-generated by the EA Forum Team. Feel free to point out issues with this summary by replying to the comment, and contact us if you have feedback.