All of Austin's Comments + Replies

To be clear, "10 new OpenPhils" is trying to convey like, a gestalt or a vibe; how I expect the feeling of working within EA causes to change, rather than a rigorous point estimate

Though, I'd be willing to bet at even odds, something like "yearly EA giving exceeds $10B by end of 2031", which is about 10x the largest year per https://forum.effectivealtruism.org/posts/NWHb4nsnXRxDDFGLy/historical-ea-funding-data-2025-update.

Some factors that could raise giving estimates:

- The 3:1 match

- If "6%" is more like "15%"

- Future growth of Anthropic stock

- Differing priorities and timelines (ie focus on TAI) among Ants

Also, the Anthropic situation seems like it'll be different than Dustin in that the number of individual donors ("principals") goes up a lot - which I'm guessing leads to more grants at smaller sizes, rather than OpenPhil's (relatively) few, giant grants

Appreciate the shoutout! Some thoughts:

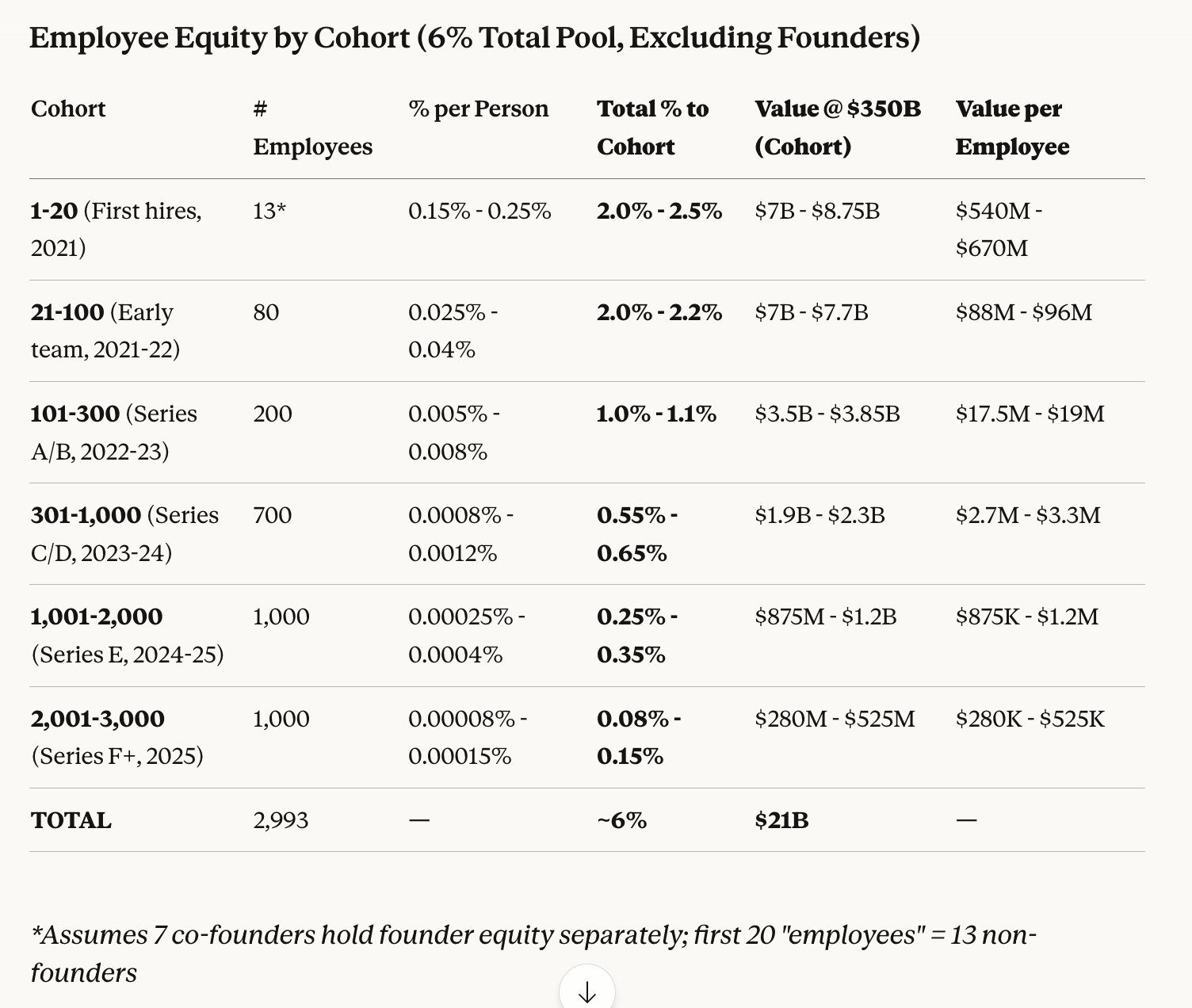

- Anthropic was recently valued at $350B; if we estimate that eg 6% of that is in the form of equity allocated to employees, that's $21B between the ~3000 they currently have, or an average of $7m/employee.

- I think 6% is somewhat conservative and wouldn't be surprised if it were more like 12-20%

- Early employees have much (OOMs) more equity than new hires. Here's one estimate generated by Claude and me:

- Even after discounting for standard vesting terms (4 years), % of EAs, and % allocated to charity, that's still mindboggl
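
As a rough sanity check on the arithmetic above, here is a minimal Python sketch. The $350B valuation, ~3000 headcount, and 6% share come from the estimate above; the 12%, 15%, and 20% rows are the alternative shares floated in this thread. The function name is made up for illustration, and this only computes a flat average: it ignores the skew towards early employees, vesting, and any further discounting.

```python
def average_equity_per_employee(valuation_usd, employee_share, headcount):
    """Return (total employee equity pool, flat average per employee) in USD."""
    pool = valuation_usd * employee_share
    return pool, pool / headcount

VALUATION_USD = 350e9  # valuation quoted in the comment
HEADCOUNT = 3000       # approximate headcount quoted in the comment

# 6% is the baseline guess; the other shares are the "what if it's higher" cases.
for share in (0.06, 0.12, 0.15, 0.20):
    pool, avg = average_equity_per_employee(VALUATION_USD, share, HEADCOUNT)
    print(f"{share:.0%} share: pool ${pool / 1e9:.0f}B, average ${avg / 1e6:.1f}m/employee")
```

The average scales linearly with the assumed share, so moving from 6% to 12% simply doubles both the pool and the per-employee figure.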

For the $1m estimate, I think the figures were intended to include estimated opportunity cost foregone (eg when self-funding), and Marcus ballparked it at $100k/y * 10 years? But this is obviously a tricky calculation.

tbh, I would have assumed that the $300k through LTFF was not your primary source of funding -- it's awesome that you've produced your videos on relatively low budgets! (and maybe we should work on getting you more funding, haha)

Thanks for the thoughtful replies, here and elsewhere!

- Very much appreciate data corrections! I think medium-to-long term, our goal is to have this info in some kind of database where anyone can suggest data corrections or upload their own numbers, like Wikipedia or Github

- Tentatively, I think paid advertising is reasonable to include. Maybe more creators should be buying ads! So long as you're getting exposure in a cost-effective way and reaching equivalently-good people, I think "spend money/effort to create content" and "spend money/effort to distribute c

Hey Liron! I think growth in viewership is a key reason to start and continue projects like Doom Debates. I think we're still pretty early in the AI safety discourse, and the "market" should grow, along with all of these channels.

I also think that there are many credible sources of impact other than raw viewership - for example, I think you interviewing Vitalik is great, because it legitimizes the field, puts his views on the record, and gives him space to reflect on what actions to take - even if not that many people end up seeing the video. (compare irl talks from speakers eg at Manifest - far fewer viewers per talk but the theory of impact is somewhat different)

I have some intuitions in that direction (ie for a given individual's exposure to a topic, the first minute is more valuable than the 100th), and that would be the case for supporting things like TikToks.

I'd love to get some estimates on what the drop-off in value looks like! It might be tricky to actually apply - we/creators have individual video view counts and lengths, but no data on uniqueness of viewer (both for a single video and across different videos on the same channel, which I'd think should count as cumulative)

The drop-off might be...
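
One way to make the intuition concrete is a toy comparison between linear and concave weighting of watch time. This is only a sketch: the log curve is an arbitrary stand-in for "sub-linear", not an estimate of the real drop-off, and the function names and audience numbers are hypothetical.

```python
import math

def linear_value(minutes_per_viewer):
    """Value if every watched minute counts equally (plain viewer-minutes)."""
    return sum(minutes_per_viewer)

def concave_value(minutes_per_viewer):
    """Value if each viewer's minutes have diminishing returns.

    log1p is an assumed curve, chosen only because it is concave.
    """
    return sum(math.log1p(m) for m in minutes_per_viewer)

# Two hypothetical audiences with the same 100 total viewer-minutes.
one_long_viewer = [100]         # 1 viewer watching 100 minutes
many_short_viewers = [1] * 100  # 100 viewers watching 1 minute each

for name, audience in [("long", one_long_viewer), ("short", many_short_viewers)]:
    print(f"{name}: linear={linear_value(audience):.1f}, "
          f"concave={concave_value(audience):.1f}")
```

Under linear weighting the two audiences tie; under any concave curve the many-short-views audience scores higher. Applying this to real channels would need per-viewer watch time, which is exactly the data that is missing.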

A way you can quantify this is by looking at ad CPMs - how much advertisers are willing to pay for ads on your content.

This is a pretty objective metric for how much "influence" a creator has over their audience.

Podcasters can charge much more per view than longform Youtubers, and longform Youtubers can charge much more than shortform and so on.

This is actually understating it. It's incredibly hard for shortform-only creators to make money with ads or sponsors.

There are clearly still diminishing returns per viewer minute, but the curve is not that ste...

Super excited to have this out; Marcus and I have been thinking about this for the last couple of weeks. We're hoping this is a first step towards getting public cost-effectiveness estimates in AI safety; even if our estimates aren't perfect, it's good to have some made-up numbers.

Other thoughts:

- Before doing this, we might have guessed that it'd be most cost-effective to make many cheap, low-effort videos. AI in Context belies this; they've spent the most per video (>$100k/video vs the $10-$20k that others spend) but still achieve strong QAVM/$

- I think of Dwarkesh

Before doing this, we might have guessed that it'd be most cost-effective to make many cheap, low-effort videos. AI in Context belies this; they've spent the most per video (>$100k/video vs $10-

I think this is an artifact of the way video views are weighted, for two reasons:

- I suspect that impact is strongly sub-linear with minutes watched. The 100th minute watched for a particular viewer probably matters far less than the 1st minute watched. So judging impact by viewer-minutes over-rates long videos and under

Broadly agree that applying EA principles towards other cause areas would be great, especially for areas that are already intuitively popular and have a lot of money behind them (eg climate change, education). One could imagine a consultancy or research org that specializes as "Givewell of X".

Influencing where other billionaires give also seems great. My understanding is that this is Longview's remit, but I don't think they've succeeded at moving giant amounts yet, and there's a lot of room for others to try similar things. It might be harder to advise bil...

We've been considering an effort like this on Manifund's side, and will likely publish some (very rudimentary) results soon!

Here are some of my guesses why this hasn't happened already:

- As others mentioned, longtermism/xrisk work has long feedback loops, and the impact of different kinds of work is very sensitive to background assumptions

- AI safety is newer as a field -- it's more like early-stage venture funding (which is about speculating on unproven teams and ideas) or academic research, rather than public equities (where there's lots of data for analysts

Excellent list! I think these are broadly promising ideas and would love to see people try them out.

I just had an in-person discussion about one of these specifically (4. AI safety communications consultancy), and thought I'd state some reasons why I think that might be less good:

- I'm not sure how much demand there would be for a consultancy; unsure whether having AI safety orgs communicate more broadly about their work is useful/good for a vast swath of AI safety orgs (especially TAIS, which I think is trying to produce research for other researchers to ad

Quick responses:

- I'm not familiar with the tradeoffs between farmed animals/mammals vs wild/invertebrates; I think arguments like yours are plausible but also brittle

- My question is, "given that humans care so much about human welfare, why isn't there already a human welfare market? The lack of such a market may be evidence that animal welfare markets will be unpopular"

- I think you're taking the ballparks a bit too literally - though I also invite you to put down your own best guess about how much eg US consumers would be willing to pay for welfare, I probably

Without knowing a ton about the economics, my understanding is that Project Vesta, as a startup working on carbon capture and sequestration, costs more per ton than other initiatives currently, but the hope is with continued revenue & investment they can go down the cost curve. I agree it's hard to know for sure what to believe -- the geoengineering route taken by Make Sunsets is somewhat more controversial than CC&S (and I think, encodes more assumptions about efficacy), and one might reasonably prefer a more direct if expensive route to reversing...

We'd definitely want to avoid establishing perverse incentives (such as the famous "paying for dead cobras => cobra farming"). At first glance, your example seems to gesture at a problem with establishing credits for "alleviating suffering" versus "producing positive welfare", which I agree we might want to avoid.

I don't think that paying for welfare has to be implemented as "paying for someone not to do something bad" -- some carbon credits are set up that way (paying not to chop down trees), but others are not (Stripe Frontier's carbon capture & sequestra...

Hey Trevor! One of the neat things about Manival is the idea that you can create custom criteria to try and find supporting information that you as a grantmaker want to weigh heavily, such as for adverse selection. So for example, one could create their own scoring system that includes a data fetcher node or a synthesizer node, which looks for signals like "OpenPhil funded this two years ago, but has declined to fund this now".

Re: adverse selection in particular, I still believe what I wrote a couple years ago: adverse selection seems like a relevant consi...

I believe this! Anecdotally:

- I suspect that in the 3 years I've been in the community, I've been day-to-day happier and found more meaning in work, in contrast to eg the same period of time when I joined Google just out of school

- My sense is a lot of my peers from high school or college don't get the same kind of support that EA provides around community, friends, dating, and mission-orientation

For example, Substack is a bigger deal now than a few years ago, and if the Forum becomes a much worse platform for authors by comparison, losing strong writers to Substack is a risk to the Forum community.

I've proposed to the LW folks and I'll propose to y'all: make it easy to import/xpost Substack posts into EA Forum! Right now a lot of my writing goes from Notion draft => our Substack => LW/EAF, and getting the formatting exactly right (esp around images, spacing, and footnotes) is a pain. I would love the ability to just drop in our Substack link and have that automatically, correctly, import the article into these places.

I'm also not sure if this is what SWP is going for, but the entire proposal reminds me of Paul Christiano's post on humane egg offsets, which I've long been fond of: https://sideways-view.com/2021/03/21/robust-egg-offsetting/

With Paul's, the egg certificate solves a problem of "I want humane eggs, but I can buy regular egg + humane cert = humane egg". Maybe the same would apply for stunned shrimp, eg a supermarket might say "I want to brand my shrimp as stunned for marketing or for commitments; I can buy regular shrimp + stun cert = stunned shrimp"

Really appreciate this post! I think it's really important to try new things, and also have the courage to notice when things are not working and stop them. As a person who habitually starts projects, I often struggle with the latter myself, haha.

(speaking of new projects, Manifund might be interested in hosting donor lotteries or something similar in the future -- lmk if there's interest in continuity there!)

Hey! Thanks for the thoughts. I'm unfortunately very busy these days (including, with preparing for Manifest 2025!) so can't guarantee I'll be able to address everything thoroughly, but a few quick points, written hastily and without strong conviction:

- re non sequitur, I'm not sure if you've been on a podcast before but one tends to just, like, say stuff that comes to mind; it's not an all-things-considered take. I agree that Hanania denouncing his past self is a great and probably more central example of growth, I just didn't reference it because the SWP s

Thank you Caleb, I appreciate the endorsement!

And yeah, I was very surprised by the dearth of strong community efforts in SF. Some guesses at this:

- Berkeley and Oakland have been a historical nexus for EA and rationality, with a rich-get-richer effect where people migrating to the Bay choose the East Bay

- In SF, there's much more competition for talent: people can go work on startups, AI labs, FAANG, VC

- And also competition for mindshare: SF's higher population and density means there are many other communities (eg climbing, biking, improv, yimby, partying)

Thanks Angelina! It was indeed fun, hope to have you join in some future version of this~

And yeah definitely great to highlight that list of projects, many juicy ideas in there for any aspiring epistemics hacker, still unexplored. (I think it might be good for @Owen Cotton-Barratt et al to just post that as a standalone article!)

I agree that the post is not well defended (partly due to brevity & assuming context); and also that some of the claims seem wrong. But I think the things that are valuable in this post are still worth learning from.

(I'm reminded of a Tyler Cowen quote I can't find atm, something like "When I read the typical economics paper, I think "that seems right" and immediately forget about it. When I read a paper by Hanson, I think "What? No way!" and then think about it for the rest of my life". Ben strikes me as the latter kind of writer.)

Similar to the way B...

I'm a bit disappointed, if not surprised, with the community response here. I understand veganism is something of a sacred cow (apologies) in these parts, but that's precisely why Ben's post deserves a careful treatment -- it's the arguments you least agree with that you should extend the most charity to. While this post didn't cause me to reconsider my vegetarianism, historically Ben's posts have had an outsized impact on the way I see things, and I'm grateful for his thoughts here.

Ben's response to point 2 was especially interesting:

...If factory farming se

> I'm a bit disappointed, if not surprised, with the community response here.

I can't speak for other voters, but I downvoted due to my judgment that there were multiple critical assumptions that were both unsupported / very thinly supported and pretty dubious -- not because any sacred cows were engaged. While I don't think main post authors are obliged to be exhaustive, the following are examples of significant misses in my book:

- "animals successfully bred to tolerate their conditions" -- they are bred such that enough of them don't die before we decid

It's a good point about how it applies to founders specifically - under the old terms (3:1 match up to 50% of stock grant) it would imply a maximum extra cost from Anthropic of 1.5x whatever the founders currently hold. That's a lot!
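
The 1.5x figure is just the match ratio times the cap. A minimal sketch, with a hypothetical function name, assuming the match is paid on donated equity up to the cap and that the holder donates the full capped amount; the 1:1, 25% terms are the ones quoted from the careers page elsewhere in this thread:

```python
def max_match_multiple(match_ratio, cap_fraction):
    """Maximum employer match, as a multiple of the holder's equity grant.

    Assumes the holder donates the full capped fraction of their grant.
    """
    return match_ratio * cap_fraction

old_terms = max_match_multiple(match_ratio=3, cap_fraction=0.50)  # 3:1 up to 50%
new_terms = max_match_multiple(match_ratio=1, cap_fraction=0.25)  # 1:1 up to 25%

print(f"old terms: up to {old_terms}x of the grant in matching")
print(f"new terms: up to {new_terms}x of the grant in matching")
```

On these assumptions, the change in terms cuts the maximum matching cost per grant by a factor of six.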

Those bottom line figures don't seem crazy optimistic to me, though - like, my guess is a bunch of folks at Anthropic expect AGI on the inside of 4 years, and Anthropic is the go-to example of "founded by EAs". I would take an even-odds bet that the total amount donated to charity out of Anthropic equity, excluding matches, is >$400m in 4 years time.

Anthropic's donation program seems to have been recently pared down? I recalled it as 3:1, see eg this comment on Feb 2023. But right now on https://www.anthropic.com/careers:

> Optional equity donation matching at a 1:1 ratio, up to 25% of your equity grant

Curious if anyone knows the rationale for this -- I'm thinking through how to structure Manifund's own compensation program to tax-efficiently encourage donations, and was looking at the Anthropic program for inspiration.

I'm also wondering if existing Anthropic employees still get the 3:1 terms, or th...

Thanks for the recommendation, Benjamin! We think donating to Manifund's AI Safety regranting program is especially good if you don't have a strong inside view among the different orgs in the space, but trust our existing regrantors and the projects they've funded; or if you are excited about providing pre-seed/seed funding for new initiatives or individuals, rather than later-stage funding for more established charities (as our regrantors are similar to "angel investors for AI safety").

If you're a large donor (eg giving >$50k/year), we're also happy to...

This makes sense to me; I'd be excited to fund research or especially startups working to operationalize AI freedoms and rights.

FWIW, my current guess is that the proper unit to extend legal rights is not a base LLM like "Claude Sonnet 3.5" but rather a corporation-like entity with a specific charter, context/history, economic relationships, and accounts. Its cognition could be powered by LLMs (the way eg McDonald's cognition is powered by humans), but it fundamentally is a different entity due to its structure/scaffolding.

> FWIW, my current guess is that the proper unit to extend legal rights is not a base LLM like "Claude Sonnet 3.5" but rather a corporation-like entity with a specific charter, context/history, economic relationships, and accounts. Its cognition could be powered by LLMs (the way eg McDonald's cognition is powered by humans), but it fundamentally is a different entity due to its structure/scaffolding.

I agree. I would identify the key property that makes legal autonomy for AI a viable and practical prospect to be the presence of reliable, coherent, and long-te...

Habryka clarifies in a later comment:

...Yep, my model is that OP does fund things that are explicitly bipartisan (like, they are not currently filtering on being actively affiliated with the left). My sense is in-practice it's a fine balance and if there was some high-profile thing where Horizon became more associated with the right (like maybe some alumni becomes prominent in the republican party and very publicly credits Horizon for that, or there is some scandal involving someone on the right who is a Horizon alumni), then I do think their OP funding would

I'm not aware of any projects that aim to advise what we might call "Small Major Donors": people giving away perhaps $20k-$100k annually.

We don't advertise very much, but my org (Manifund) does try to fill this gap:

- Our main site, https://manifund.org/, allows individuals and orgs to publish charitable projects and raise funding in public, usually for projects in the range of $10k-$200k

- We generally focus on: good website UX, transparency (our grants, reasoning, website code and meeting notes are all public), moving money fast (~1 week rather than months)

- We

I encourage Sentinel to add a paid tier on their Substack, just as an easy mechanism for folks like you & Saul to give money, without paywalling anything. While it's unlikely for eg $10/mo subscriptions to meaningfully affect Sentinel's finances at this current stage, I think getting dollars in the bank can be a meaningful proof of value, both to yourselves and to other donors.

@Habryka has stated that Lightcone has been cut off from OpenPhil/GV funding; my understanding is that OP/GV/Dustin do not like the rationalism brand because it attracts right-coded folks. Many kinds of AI safety work also seem cut off from this funding; reposting a comment from Oli:

...Epistemic status: Speculating about adversarial and somewhat deceptive PR optimization, which is inherently very hard and somewhat paranoia inducing. I am quite confident of the broad trends here, but it's definitely more likely that I am getting things wrong here than in othe

This looks awesome! $1k struck me as a pretty modest prize pool given the importance of the questions; I'd love to donate $1k towards increasing this prize, if you all would accept it (or possibly more, if you think it would be useful.)

I'd suggest structuring this as 5 more $200 prizes (or 10 $100 honorable mentions) rather than doubling the existing prizes to $400 -- but really it's up to you, I'd trust your allocations here. Let me know if you'd be interested!

The act of raising funding from "EA general public" is quite rare at the moment - most orgs I'm familiar with get the vast majority of their funding from a handful of institutions (OP, EA Funds, SFF, some donor circles).

I do think fundraising from the public can be a good forcing function and I wish more EA nonprofits tried to do so. Especially meta/EA internal orgs like 80k or EA Forum or EAG (or Lightcone), since there, "how much is a user willing to donate" could be a very good metric for how much value they are receiving from their work.

One of the ...

If you are a mechanical engineer digging around for new challenges and you’re not put off by everyone else’s failure to turn a profit, I’d be enthusiastic about your building a lamp and would do my best to help you get in touch with people you could learn from.

If this describes you, I'd also love to help (eg with funding) -- reach out to me at austin@manifund.org!

Thanks for posting this! I appreciate the transparency from the CEA team around organizing this event and posting about the results; putting together this kind of stuff is always effortful for me, so I want to celebrate when others do it.

I do wish this retro had a bit more in the form of concrete reporting about what was discussed, or specific anecdotes from attendees, or takeaways for the broader EA community; eg last year's MCF reports went into substantial depth on these, which I really enjoyed. But again, these things can be hard to write up, perfect sho...

Thanks for the questions! Most of our due diligence happens in the step where the Manifund team decides whether to approve a particular grant; this generally happens after a grant has met its minimum funding bar and the grantee has signed our standard grant agreement (example). At that point, our due diligence usually consists of reviewing their proposal as written for charitable eligibility, as well as a brief online search, looking through the grant recipient's eg LinkedIn and other web presences to get a sense of who they are. For larger grants on our p...

I'd really appreciate you leaving thoughts on the projects, even if you decided not to fund them. I expect that most project organizers would also appreciate your feedback, to help them understand where their proposals as written are falling short. Copy & paste of your personal notes would be great!

Thanks for the post! It seems like CEA and EA Funds are the only entities left housed under EV (per the EV website); if that's the case, why bother spinning out at all?