All of eca's Comments + Replies

Hi, computational protein engineer and person who-thinks-biology-can-do-amazing-stuff here.

Just wanted to report that while "Proteins are like Folded Spaghetti Held Together By Static Cling" is obviously incorrect as a matter of fact, I immediately thought it was a pretty good analogy for capturing some critical and often under-appreciated aspects of the functionally important character of proteins. When I read the sentences you've quoted him saying about proteins held together by covalent bonds, I (think) I understood what he was pointing at with this and...

One impression I could imagine having after reading this post for the first time is something like: "eca would prefer fewer connections to people and doesn't value the output of community building work" or, even more scandalously, "eca thinks community builders are wasting their time".

I don't believe that, and would have edited the draft to make that more clear if I had taken a different approach to writing it.

A quick amendment to that vibe.

- Community building is mission critical. It's also complicated, and not something I expect to have good opinions abo

Meta note: this was an experiment in jotting something down. I've had a lot of writer's block on forum posts before and thought it would be good to try erring on the side of not worrying about the details.

As I'm rereading what I wrote late last night I'm seeing things I wish I could change. If I have time, I'll try writing these changes as comments rather than editing the post (except for minor errors).

(Curious for ideas/approaches/ recommendations for handling this!)

This seems like a great idea; I actually woke up this morning realizing I had left it off my list!

One part of my perspective which is possibly worth reemphasizing: IMO, what you choose to work on together does not need to be highly optimized or particularly EA. At least to make initial progress in this direction, it seems plausible that you should be happy with anything challenging/ without an existing playbook, collaborative, and “real” in the sense of requiring you to act like you would if you were solving a real problem instead of playing a toy game.

So in ...

thanks for the kind words! I agree that we didn't have much good stuff for ppl to do 4 yrs ago when i started in bio but don't feel like my model matches yours regarding why.

But I also want to confirm I've understood what you are looking for before I ramble.

How much would you agree with this description of what I could imagine filling in from what you said re 'why it took so long':

"well I looked at this list of projects, and it didn't seem all that non-obvious to me, and so the default explanation of 'it just took a long time to work out these project...

Well I hope it works out for ya! Thanks haha

In case you are looking for content and have interests similar to mine, I like the following for audio:

- Institute for Advanced Study lectures (random fun science)

- Yannic Kilcher (ML paper summaries)

- Wendover Productions/ Kurzgesagt (random, probably not as useful, but interesting science and econ fun facts)

- LiveOverflow (Security)

And I find that searching for random academics' names is more likely to turn up lectures/ convos than podcasts.

- Order groceries online! Maybe this is obvious but I have the impression not as many ppl do this as they should. Saves me at least 1 hr (usually closer to 2) for < $20

- Pay for a bunch of disk space. I find it generates a lot of overhead to have files in different places. For me, the solution has been a high performance workstation plus remote desktop forwarding to my laptop when I travel so I can always have the same disk and workspace

- Buy more paid apps/ premium upgrades/ digital subscriptions. I haven’t done the math on this so might not be as good as

What a cool project! I listen to the vast majority of my reading these days and am perpetually out of good things to read.

The linked audio is reasonably high quality, and more importantly, it doesn't have some of the formatting artifacts that other TTS programs have. Well done.

Your story for why this is a potentially high impact project is plausible to me, especially given how much you've automated. I have independently been thinking about building something similar, but with a very different story for why it could be worth my time to do it. That means th...

And there are various things one could probably do to make it not illegal but still messed up and the wrong thing to do! Like making it mandatory to check a box saying you waive your copyright for audio on a thing before you post on the forum. I think if, like some of the tech companies, you made this box really little and hard to find, most people would not change their posting behavior very much, and it would now be totally legal (by assumption).

But it would still be a bad thing to do.

This is a reason to fix the system! My point is that it reduces to "make all the authors happy with how you are doing things"; there is not some spooky extra thing having to do with illegality.

TBC I do not endorse using people's content in a way they aren't happy with, but I would still have that same belief if it wasn't illegal at all to do so.

FWIW I think I endorse Kat's reasoning here. I don't think it matters if it is illegal if I'm correct in suspecting that the only people who could bring a copyright claim are the authors, and assuming the authors are happy with the system being used. This is analogous to the way it is illegal, by violating minimum wage laws, to do work for your own company without paying yourself, but the only person who has standing to sue you is AFAIK yourself.

Not a lawyer, not claiming to know the legal details of these cases, but I think this standing thing is real and an appropriate way to handle

Empirical differential tech development?

Many longtermist questions related to dangers from emerging tech can be reduced to “what interventions would cause technology X to be deployed before/ N years earlier than/ instead of technology Y”.

In biosecurity, my focus area, an example of this would be something like "how can we cause DNA synthesis screening to be deployed before desktop synthesizers are widespread?"

It seems a bit cheap to say that AI safety boils down to causing an aligned AGI before an unaligned one, but it kind of basically does, and I suspect ...

I wonder how these compare with fitting a Beta distribution and using one of its statistics? I’m imagining treating each forecast (assuming they are probabilities) as an observation, and maximizing the Beta likelihood. The resulting Beta is your best guess distribution over the forecasted variable.

It would be nice to have an aggregation method which gave you info about the spread of the aggregated forecast, which would be straightforward here.
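A minimal sketch of the Beta-fitting idea, assuming every forecast is a probability strictly between 0 and 1. Instead of a proper optimizer it uses a crude log-spaced grid search over the Beta parameters (a, b) to maximize the likelihood; the grid bounds, grid size, and the sample forecasts are illustrative assumptions. The fitted Beta's mean gives a point estimate and its standard deviation gives the spread.

```python
import math


def beta_log_likelihood(a, b, n, sum_log_x, sum_log_1mx):
    """Log-likelihood of n observations under Beta(a, b), from sufficient statistics."""
    log_beta_fn = math.lgamma(a) + math.lgamma(b) - math.lgamma(a + b)
    return (a - 1) * sum_log_x + (b - 1) * sum_log_1mx - n * log_beta_fn


def fit_beta(forecasts, grid_size=200, log_min=-3.0, log_max=5.0):
    """Approximate Beta MLE via grid search over log-spaced (a, b).

    Assumes every forecast lies strictly in (0, 1). The grid bounds and
    resolution are arbitrary illustrative choices, not tuned values.
    """
    if any(not 0.0 < p < 1.0 for p in forecasts):
        raise ValueError("forecasts must lie strictly between 0 and 1")
    n = len(forecasts)
    sum_log_x = sum(math.log(p) for p in forecasts)
    sum_log_1mx = sum(math.log1p(-p) for p in forecasts)
    step = (log_max - log_min) / (grid_size - 1)
    grid = [math.exp(log_min + i * step) for i in range(grid_size)]
    # Score every (a, b) pair on the grid and keep the most likely one.
    return max(
        ((a, b) for a in grid for b in grid),
        key=lambda ab: beta_log_likelihood(ab[0], ab[1], n, sum_log_x, sum_log_1mx),
    )


def beta_mean_and_sd(a, b):
    """Mean and standard deviation of Beta(a, b); the sd describes the spread."""
    mean = a / (a + b)
    var = a * b / ((a + b) ** 2 * (a + b + 1))
    return mean, math.sqrt(var)


forecasts = [0.2, 0.35, 0.4, 0.5, 0.7]  # hypothetical forecasts
a, b = fit_beta(forecasts)
mean, sd = beta_mean_and_sd(a, b)
print(f"a={a:.2f} b={b:.2f} mean={mean:.3f} sd={sd:.3f}")
```

A real implementation would likely swap the grid for a numerical optimizer; the grid just keeps the sketch dependency-free.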

It's not clear to me that "fitting a Beta distribution and using one of its statistics" is different from just taking the mean of the probabilities.

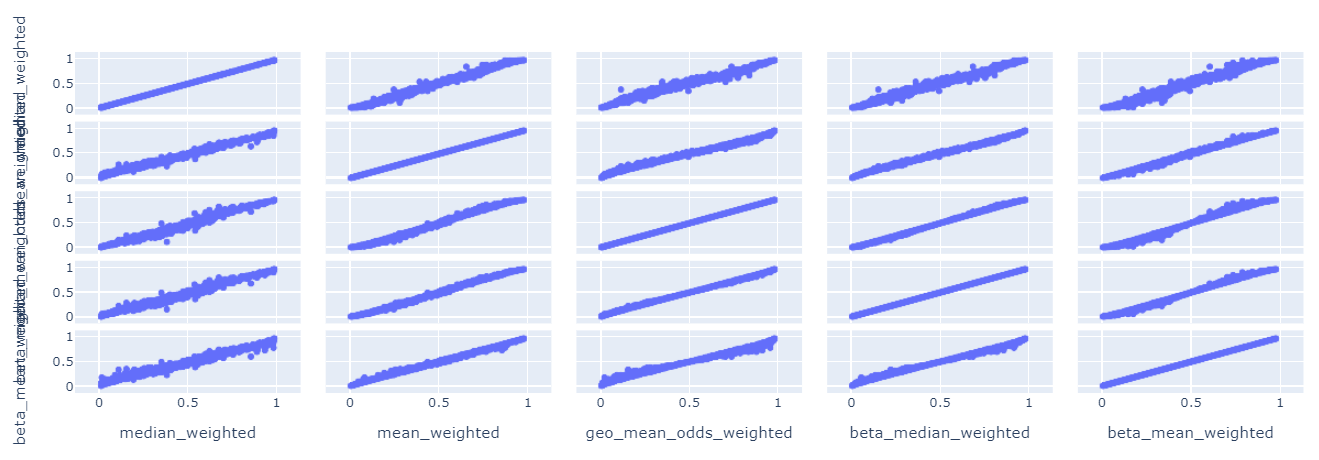

I fitting a beta distribution to Metaculus forecasts and looked at:

- Median forecast

- Mean forecast

- Mean log-odds / Geometric mean of odds

- Fitted beta median

- Fitted beta mean

Scattering these 5 values against each other I get:

We can see fitted values are closely aligned with the mean and mean-log-odds, but not with the median. (Unsurprising when you consider the ~parametric formula for the mean / median).
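For reference, a minimal sketch of the three non-fitted statistics from the list, assuming forecasts are probabilities strictly in (0, 1). It also checks the identity behind the third bullet: averaging log-odds and mapping back gives the same probability as the geometric mean of the odds. The sample forecasts are made up for illustration and are not Metaculus data.

```python
import math
import statistics


def logit(p):
    """Convert a probability to log-odds."""
    return math.log(p / (1 - p))


def expit(x):
    """Convert log-odds back to a probability."""
    return 1 / (1 + math.exp(-x))


def simple_aggregates(forecasts):
    """Median, mean, and two equivalent log-odds aggregates of probability forecasts."""
    odds_gm = statistics.geometric_mean([p / (1 - p) for p in forecasts])
    return {
        "median": statistics.median(forecasts),
        "mean": statistics.fmean(forecasts),
        # Average the log-odds, then map back to a probability.
        "mean_log_odds": expit(statistics.fmean([logit(p) for p in forecasts])),
        # Geometric mean of the odds, converted back to a probability.
        "geo_mean_of_odds": odds_gm / (1 + odds_gm),
    }


forecasts = [0.01, 0.05, 0.1, 0.3, 0.9]  # hypothetical, not Metaculus data
for name, value in simple_aggregates(forecasts).items():
    print(f"{name:>16}: {value:.3f}")
```

On a skewed set like this one, the median, mean, and log-odds aggregate separate noticeably, while the two log-odds rows coincide.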

The performan...

I’m vulnerable to occasionally losing hours of my most productive time “spinning my wheels”: working on sub-projects I later realize don’t need to exist.

Elon Musk gives the most lucid naming of this problem in the below clip. He has a 5-step process which nails a lot of best practices I’ve heard from others and more. It sounds kind of dull and obvious to write down, but somehow I think staring at the steps will actually help. It’s also phrased somewhat specifically to building physical stuff, but I think there is a generic version of each. I’m going to try ...

One more unsolicited outreach idea while I’m at it: high school career / guidance counselors in the US.

I’m not sure how idiosyncratic this was to my school, but we had a person whose job it was to give older high school kids advice about what to do for college and career. Mine gave really bad advice, and I think a number of my friends would have glommed onto 80k type stuff if it had been handed to them at this time (when people are suddenly telling you to figure out your life). This probably hits the 16yo demographic pretty well.

Could look like addi...

Exciting!

This is probably not the best place to post this, but I’ve been learning recently about the success of hacking games in finding and training computer security people (https://youtu.be/6vj96QetfTg for a discussion, also this game I got excited about in high school: https://en.m.wikipedia.org/wiki/Cicada_3301).

I think there might be something to an EA/ rationality game. Like something with a save-the-world but realistic plot and game mechanics built around useful skills like Fermi estimation. This is a random gut feeling I’ve had for a while ...

I appreciate the answers so far!

One thing I realized I'm curious about in asking this is something about how many groups of people/ governing bodies are actually crazy enough to use nuclear weapons even if self-annihilation is assured. This seems like an interesting last check against horrible mutual destruction stuff. The hypothesis to invalidate is: maybe the types of people assembled into the groups we call "governments" are very unlikely to carry an "activate mutual destruction" decision all the way through. To be clear, I don't believe this, and I th...

Great set of links, appreciate it. Was especially excited to see lukeprog's review and the author's presentation of Atomic Obsession.

I'm inclined toward answers of the form "seems like they would have been used more or some civilizational factor would need to change" (which is how I interpret Jackson's answer on strong global policing). Which is why I'm currently most interested in understanding the Atomic Obsession-style skeptical take.

If anyone is interested, the following are some of the author's claims which seem pertinent, at least as far as I can te...

Re direct military conflicts between nuclear weapons states: this might not exactly fit the definition of "direct", but I enjoyed skimming the mentions of nuclear weapons in this Wikipedia article on the Yom Kippur War, which saw a standoff between Israel (nuclear) and Egypt (not nuclear, but had reportedly been delivered warheads by the USSR). There is some mention of Israel "threatening to go nuclear", possibly as a way of forcing the US to intervene with conventional military resources.

Interesting! For (1) how do you expect the economic superpowers to respond to smaller nations using nuclear weapons in this world? It sounds like because of MAD between the large nations, your model is that they must allow small nuclear conflicts, or alternatively pivot into your scenario 2 of increased global policing, is that correct?

Thanks for this post Luisa! Really nice resource and I wish I had caught it earlier. A couple methodology questions:

- Why do you choose an arithmetic mean for aggregating these estimates? It seems like there is an argument to be made that in this case we care about order-of-magnitude correctness, which would imply taking the average of the log probabilities. This is equivalent to the geometric mean (I believe) and is recommended for Fermi estimates, e.g. [here](https://www.lesswrong.com/posts/PsEppdvgRisz5xAHG/fermi-estimates).

- Do you have a sense for how
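To illustrate the order-of-magnitude point in the first question, a minimal sketch comparing the arithmetic mean of probability estimates with the exponentiated average of log probabilities, which is the geometric mean. The three estimates are hypothetical and chosen to span several orders of magnitude.

```python
import math
import statistics


def arithmetic_mean(probs):
    """Plain average of the probabilities."""
    return statistics.fmean(probs)


def mean_log_prob(probs):
    """Average the log probabilities, then exponentiate (the geometric mean)."""
    return math.exp(statistics.fmean([math.log(p) for p in probs]))


estimates = [1e-1, 1e-3, 1e-5]  # hypothetical estimates spanning 4 orders of magnitude
print(arithmetic_mean(estimates))  # pulled toward the largest estimate
print(mean_log_prob(estimates))    # lands on the middle order of magnitude
print(statistics.geometric_mean(estimates))  # stdlib geometric mean, for comparison
```

The arithmetic mean here is essentially one third of the largest estimate, so the smaller estimates barely move it; the log-space average treats each order of magnitude symmetrically.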

Why do you choose an arithmetic mean for aggregating these estimates?

This is a good point.

I'd add that as a general rule when aggregating binary predictions one should default to the average log odds, perhaps with an extremization factor as described in (Satopää et al., 2014).

The reasons are a) empirically, it seems to work better, b) the way Bayes' rule works strongly suggests that log odds are the natural unit of evidence, c) apparently there are some complex theoretical reasons ("external bayesianism") why this is better (the ...
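A minimal sketch of the average-log-odds aggregator with an extremization factor, in the form where the mean log-odds is multiplied by a factor d before mapping back to a probability. The forecasts and the d values are hypothetical; in practice d would be fit on past resolved forecasts rather than chosen by hand.

```python
import math
import statistics


def logit(p):
    """Convert a probability to log-odds."""
    return math.log(p / (1 - p))


def expit(x):
    """Convert log-odds back to a probability."""
    return 1 / (1 + math.exp(-x))


def extremized_log_odds_pool(forecasts, d=1.0):
    """Average the forecasts' log-odds and scale by an extremization factor d.

    d = 1 gives the plain mean log-odds; d > 1 pushes the pooled forecast
    away from 0.5. Here d is a free, hand-chosen parameter (an assumption).
    """
    mean_log_odds = statistics.fmean([logit(p) for p in forecasts])
    return expit(d * mean_log_odds)


forecasts = [0.7, 0.75, 0.8]  # hypothetical forecasts
for d in (1.0, 1.5, 2.5):  # illustrative factors, not values from the paper
    print(d, round(extremized_log_odds_pool(forecasts, d), 3))
```

Because the scaling happens in log-odds space, a forecast of exactly 0.5 stays at 0.5 for any d, and forecasts on either side are pushed symmetrically toward 0 or 1.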

Thanks Seb. I don't think I have energy to fully respond here, possibly I'll make a separate post to give this argument its full due.

One quick point relevant to Crux 2: "I can also think of many examples of groundbreaking basic science that looks defensive and gets published very well (e.g. again sequencing innovations, vaccine tech; or, for a recent example, several papers on biocontainment published in Nature and Science)."

I think there are many-fold differences in impact/dollar between the tech you build if you are trying to actually solve the problem a...

I bet it is! The example categories I think I had in mind at time of writing would be 1) people in ML academia who want to be doing safety instead doing work that almost entirely accelerates capabilities and 2) people who want to work on reducing biological risk instead publishing on tech which is highly dual use or broadly accelerates biotechnology without differentially accelerating safety technology.

I know this happens because I've done it. My most successful publication to date (https://www.nature.com/articles/s41592-019-0598-1) is pretty much entirely c...

This is interesting and also aligns with my experience depending on exactly what you mean!

- If you mean that it seems less difficult to get tenure in CS (thinking especially about deep learning) than the vibe I gave (which, again, was speaking about the field I know, bioeng), I buy this strongly. My suspicion is that this is because, relative to bioengineering, there is a lot of competition for top research talent from industrial AI labs. It seems like even the profs who stay in academia also have joint appointments at companies, for the most part. There isn't

"Working backwards" type thinking is indeed a skill! I find it plausible a PhD is a good place to do this. I also think there might be other good ways to practice it, like for example seeking out the people who seem to be best at this and trying to work with them.

+1 on this same type of thinking being applicable to gathering resources. I don't see any structural differences between these domains.

This is an excellent comment, thanks Adam.

A couple impressions:

- Totally agree there are bad incentives lots of places

- I think figuring out which existing institutions have incentives that best serve your goals, and building a strategy around those incentives, is a key operation. My intent with this article was to illustrate some of that type of thinking within planning for grad school. If I were writing a comparison between working in academia and other possible ways to do research, I would definitely have flagged the many ways academic incentives are better.

Sorry for the (very) delayed reply here. I'll start with the most important point first.

But compared to working with a funder who, like you, wants to solve the problem and make the world be good, any of the other institutions mentioned including academia look extremely misaligned.

I think overall the incentives set up by EA funders are somewhat better than run-of-the-mill academic incentives, but I think the difference is smaller than you seem to believe, and I think we're a long way from cracking it. I think this is something we can get better at, but ...

Appreciate your comment! I probably won't be able to give my whole theory of change in a comment :P but if I were to say a silly version of it, it might look like: "Just do the thing"

So, what are the constituent parts of making scientific progress? Off the cuff, maybe something like:

- You need to know what questions are worth asking / problems are worth solving

- You need to know how to decompose these questions into sub-questions iteratively until a subset are answerable from the state of current knowledge

- You need to have good research project management ski

Thanks Charles! I think of your two options I most closely mean (1). To be clear, I don't mean (2): "Optimize almost exclusively for compelling publications; for some specific goals these will need to be high-impact publications."

My attempt to restate my position would be something like: "Academic incentives are very strong and it's not obvious from the inside when they are influencing your actions. If you're not careful, they will make you do dumb things. To combat this, you should be very deliberate and proactive in defining what you want and how you want i...

Publishing good papers is not the problem, deluding yourself is.

Big +1 to this. Doing things you don't see as a priority but which other people are excited about is fine. You can view it as kind of a trade: you work on something the research community cares about, and the research community is more likely to listen on (and work on) things you care about in the future.

But to make a difference you do eventually need to work on things that you find impactful, so you don't want to pollute your own research taste by implicitly absorbing incentives or others' opinions unquestioningly.

I am doing 1. 2 is incidental from the perspective of this post, but is indeed something I believe (see my response to bhalperin). I think my attempt to properly flag my background beliefs may have led to the wrong impression here. Or alternatively, my post doesn't cover very much on pursuing academia, when the expected post would have been almost entirely focused on this, thereby seeming like it was conveying a strong message?

In general I don't think about pursuing "sectors" but instead about trying to solve problems. Sometimes this involves trying to g...

Ugh. Shrug. That isn't supposed to be the point of this post. All my comments on this are to alert the reader that I happen to believe this and haven't tried to stop it from seeping into my writing. It felt disingenuous not to.

But since you raised it, I want to make it clear, if it isn't already, that I do not recommend reversing this advice. At least if you are considering cause areas/ academic domains that I might know about (see my preamble). I have no idea how applicable this is outside of longtermist technical-leaning work.

If you think you might be a...

I'm not convinced that academia is generally a bad place to do useful technical work. In the simplest case, you have the choice between working in academia, industry or a non-profit research org. All three have specific incentives and constraints (academia - fit to mainstream academic research taste; industry - commercial viability; non-profit research - funder fit, funding stability and hiring). Among these, academia seems uniquely well-suited to work on big problems with a long (10-20 year) time horizon, while having access to extensive expertise and col...

You approximately can't get directly useful/ things done until you have tenure.

At least in CS, the vast majority of professors at top universities in tenure-track positions do get tenure. The hardest part is getting in. Of course all the junior professors I know work extremely hard, but I wouldn't characterize it as a publication rat race. This may not be true in other fields and outside the top universities.

The primary impediment to getting things done that I see is that professors are also working as administrators and teachers, and that remains a problem post-tenure.

Neat. I'd be curious if anyone has tried blinding the predictive algorithm to prestige: ie no past citation information or journal impact factors. And instead strictly use paper content (sounds like a project for GPT-6).

It might be interesting also to think about how talent vs. prestige-based models explain the cases of scientists whose work was groundbreaking but did not garner attention at the time. I'm thinking, e.g., of someone like Kjell Kleppe, who basically described PCR, the foundational molbio method, a decade early.

If you look at natural ...

Interesting! Many great threads here. I definitely agree that some component of scientific achievement is predictable, and the IMO example is excellent evidence for this. Didn't mean to imply any sort of disagreement with the premise that talent matters; I was instead pointing at a component of the variance in outcomes which follows different rules.

Fwiw, my actual bet is that to become a top-of-field academic you need both talent AND to get very lucky with early career buzz. The latter is an instantiation of preferential attachment. I'd guess for each top-...

Great post! Seems like the predictability question is important given how often power laws surface in discussions of EA stuff.

More precisely, future citations as well as awards (e.g. Nobel Prize) are predicted by past citations in a range of disciplines

I want to argue that things which look like predicting future citations from past citations are at least partially "uninteresting" in their predictability, in a certain important sense.

(I think this is related to other comments, and have not read your google doc, so apologies if I'm restating. But I think it...

Thanks! I agree with a lot of this.

I think the case of citations / scientific success is a bit subtle:

- My guess is that the preferential attachment story applies most straightforwardly at the level of papers rather than scientists. E.g. I would expect that scientists who want to cite something on topic X will cite the most-cited paper on X rather than first looking for papers on X and then looking up the total citations of their authors.

- I think the Sinatra et al. (2016) findings which we discuss in our relevant section push at least slightly against a story
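A toy simulation of the paper-level preferential attachment story in the first bullet, for intuition only. It assumes each new paper cites exactly one earlier paper, chosen with probability proportional to (citations so far + 1); the single citation, the +1 offset, and the paper count are simplifying assumptions, not a model fitted to real citation data. There is no quality variable at all, so any pattern where earlier citations go with more citations later comes purely from timing and feedback.

```python
import random
import statistics


def simulate_citations(n_papers, seed=0):
    """Toy preferential-attachment model of citations at the paper level.

    Each new paper cites exactly one earlier paper, chosen with probability
    proportional to (citations so far + 1). The +1 offset lets uncited papers
    still be picked; both it and the one-citation rule are assumptions.
    """
    rng = random.Random(seed)
    citations = [0]  # the first paper has nothing to cite
    for _ in range(1, n_papers):
        weights = [c + 1 for c in citations]
        cited = rng.choices(range(len(citations)), weights=weights, k=1)[0]
        citations[cited] += 1
        citations.append(0)  # the new paper starts uncited
    return citations


cites = simulate_citations(2000)
tenth = len(cites) // 10
print("mean citations, earliest 10%:", statistics.fmean(cites[:tenth]))
print("mean citations, latest 10%:  ", statistics.fmean(cites[-tenth:]))
print("top 5 papers:", sorted(cites, reverse=True)[:5])
```

Early papers end up far more cited than late ones even though every paper is identical, which is one way past citations can predict future citations without telling you much about underlying quality.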

To operate in the broad range of cause areas OpenPhil does, I imagine you need to regularly seek advice from external advisors. I have the impression that cultivating good sources of advice is a strong suit of both yours and OpenPhil's.

I bet you also get approached by less senior folks asking for advice with some frequency.

As advisor and advisee: how can EAs be more effective at seeking and making use of good advice?

Possible subquestions: What common mistakes have you seen early career EAs make when soliciting advice, eg on career trajectory? When do you s...

Interesting point. Note that a requirement for retaliation is knowledge of the actor to retaliate against. This is called “attribution” and is a historically hard problem for bioweapons, which is maybe getting easier with modern ML (COI: I am a coauthor of https://www.nature.com/articles/s41467-020-19149-2).

Yea, idk. I was thinking of the quotes where you explicitly mentioned van der Waals forces. Tbc, my preference would be to not be forced to pick a single force