All of Toby Tremlett🔹's Comments + Replies

Thanks for the comments @Clara Torres Latorre 🔸 @NickLaing @Aaron Gertler 🔸 @Ben Stevenson. This is all useful to hear. I should have an update later this month.

Cheekily butting in here to +1 David's point - I don't currently think it's reasonable to assume that there is a relationship between the inner workings of an AI system which might lead to valenced experience, and its textual output.

For me, I think this is based on the idea that when you ask a question, there isn't a sense in which an LLM 'introspects'. I don't subscribe to the reductive view that LLMs are merely souped-up autocorrect, but they do have something in common. An LLM role-plays whatever conversation it finds itself in. They have...

Thanks to everyone who voted for our next debate week topic! Final votes were locked in at 9am this morning.

We can’t announce a winner immediately, because the highest karma topic (and perhaps some of the others) touches on issues related to our Politics on the EA Forum policy. Once we’ve clarified which topics we would be able to run, we’ll be able to announce a winner.

Once we have, I’ll work on honing the exact wording. I’ll write a post with a few options, so that you can have input into the exact version we end up discussing.

PS: ...

I think allowing this debate to happen would be a fantastic opportunity to put our money where our mouth is regarding not ignoring systemic issues:

https://80000hours.org/2020/08/misconceptions-effective-altruism/#misconception-3-effective-altruism-ignores-systemic-change

On the other hand, deciding that democratic backsliding is off limits, and not even trying to have a conversation about it, could (rightfully, in my view) be treated as evidence of EA being in an ivory tower and disconnected from the real world.

As the person who led the development of that policy (for whatever that's worth), I think the Forum team should be willing to make an exception in this case and allow looser restrictions around political discussion, at least as a test. As Nick noted, the current era isn't so far from qualifying under the kind of exception already mentioned in that post.

(The "Destroy Human Civilization Party" may not exist, but if the world's leading aid funder and AI powerhouse is led by a group whose goals include drastically curtailing global aid and accelerating AI progress with explicit disregard for safety, that's getting into natural EA territory -- even without taking democratic backsliding into account.)

I'm a bit surprised by this decision. This is the EA Forum and this is an emerging area which could be important to discuss (personally I'm super skeptical of tractability but keen to hear ideas). It doesn't seem very "EA" to censor topics because they might be difficult - heck, I've been downvoted for suggesting that at times before.

If the topic is specifically about "countering democratic backsliding" vs. other longtermist concerns, I think we could (almost) discuss this without violating the forum norms tooooo much. And you even say at the bot...

Hey Karen Maria, welcome to the EA Forum! Thanks for joining us.

I'm Toby, from the EA Forum team. If you have any questions, I'm happy to answer them. If you haven't already listened to it, I'd recommend the 80,000 Hours podcast.

Let me know if you'd like specific kinds of recommendations; I have an extensive knowledge of EA Forum posts :)

Cheers,

Toby

Unlike an asteroid impact, which leaves behind a lifeless or barely habitable world, the AI systems that destroyed humanity would presumably continue to exist and function. These AI systems would likely go on to build their own civilization, and that AI civilization could itself eventually expand outward and colonize the cosmos.

This is by no means certain. For example, we should still be worried about extinction via misuse, which could kill off humans before AI is developed enough to be self-replicating/autonomous. For example, the development of bioweapon...

"In expectation, work to prevent the use of weapons of mass destruction (nuclear weapons, bio-engineered viruses, and perhaps new AI-powered weapons) as funded by Longview Philanthropy (Emerging Challenges Fund) and Founders Pledge (Global Catastrophic Risks Fund) is more effective at saving lives than GiveWell's top charities."

"Conditional on avoiding existential catastrophes, the vast majority of future value depends on whether humanity implements a comprehensive reflection process (e.g., long reflection or coherent extrapolated volition)"

"Countering democratic backsliding is now a more urgent issue than more traditional longtermist concerns."

Note that due to our Politics on the EA Forum policy, this conversation would have to stay quite meta, and be pretty actively moderated. We reserve the right to disqualify the option if we feel we can't moderate it adequately at our current capacity.

Hey all, here’s a quick reminder of some relevant Forum norms, since this is a bit of a heated topic, and we might have some new users taking part:

- Be kind.

- Stay civil, at the minimum. Don’t sneer or be snarky. In general, assume good faith. We may delete unnecessary rudeness and issue warnings or bans for it.

- Substantive disagreements are fine and expected. Disagreements help us find the truth and are part of healthy communication.

- Mass downvoting - it’s a potentially bannable offence to downvote a user’s posts faster than you could read them.

- And just to seco

"By default, the world where AI goes well for humans will also go well for other sentient beings"

Based on this comment from @Kevin Xia 🔸

I'm curating this post.

I think it's a great example of reasoning transparency on an important topic. Specifically, I really like how Jan has shared his model in ~enough detail for a reader to replicate the investigation for themselves. I'd like to see readers engaging with the argument (and so it seems would Jan).

I also think this post is pretty criminally underrated, and part of the purpose of curation is surfacing great content which deserves more readers.

Maybe premature to call this a win, but I think it's major that EA can still raise and promote new causes like risks from mirror biology.

As far as I know, this is because of great work from the (partially Coefficient Giving-funded) Mirror Biology Dialogues Fund. They coordinated and published this article, and then oversaw a very successful media push.

Looking forward to seeing more work on this in 2026!

My read was that a major success was that they seem to have broad, initial agreement, even among previously bullish scientists, that we should be extremely cautious when developing the scaffolding of mirror bio, if at all. I think that is truly remarkable, borderline historic. This is agreement across national borders and scientific disciplines, and the argument they put forward was not watertight - there was no definite proof that mirror bio would assuredly be catastrophic. So this consensus was built on plausible risk only. It was extremely well pulled off. It is the kind of risk that skeptics might easily dismiss, and still do, as "sci-fi".

Lol yep that's fair. This is surprisingly never the direction the conversation has gone after I've shared this thought experiment.

Maybe it should be more like: in a world where resources are allocated according to EA priorities (allocation method- silent), 80,000 Hours would be likelier to tell someone to be a post officer than an AI safety researcher... Bit less catchy though.

Yeah, totally a contextual call about how to make this point in any given conversation; it can be easy to get bogged down with irrelevant context.

I do think it's true that utilitarian thought tends to push one towards centralization and central planning, despite the bad track record here. It's worth engaging with thoughtful critiques of EA vibes on this front.

Salaries are the most basic way our economy does allocation, and one possible "EA government utopia" scenario is one where the government corrects market inefficiencies such that salaries perfectly tr...

The UK's new Animal Welfare Strategy aims to phase out cages for chickens, farrowing crates, and CO2 gassing for pigs.

There's still more to do, but this is pretty encouraging.

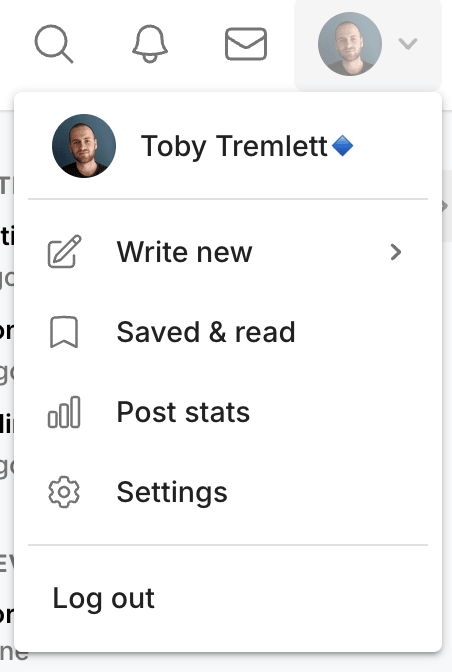

Reminder that you can check out your post stats (for individual posts and overall). I personally find this very cool - I get a lot more readers on the Forum than I do on Substack.

PS- LMK if there are stats you'd like to see that you can't currently access. Can't promise to add anything in particular, but we'll consider.

Generally I curate posts from the last few weeks. This post is from 12 years ago. I'm doing this because I've had a few discussions recently about how much evergreen content there is on the EA Forum, and how it is surfaced too rarely.

I'd like present-day commenters to feel encouraged to comment on this post. Specifically, I think it's interesting to look at this post more than a decade after it was written, and ask - was our allocation of resources correct in that decade? Should we in fact have focused more on building funding, power, generalist expe...

Over the last decade, we should have invested more in community growth at the expense of research.

It's hard to be very confident on this question, because that would mean questioning a pretty marked success, but it does seem like 1) we're short of the absolute talent/power threshold big problems demand and 2) energy/talent/resources have been sucked out of good growth engines multiple times in the past decade.

Would you say your skepticism is mainly tied into the specific framing of "offsetting" as opposed to just donating? How would your answer change if the offset framing was dropped and it was just a plain donation ask?

I think if it is just donating then there isn't anything very revolutionary here... animal welfare charities don't only market to vegetarians/vegans.

I both take it to be true that offsetting is the new and exciting angle here, and that common sense morality doesn't have much of a place for offsetting.

On the identity thing - I think t...

We should present veganism as commendable, and offsetting as a legitimate stopping point for individual supporters.

I like the concept here, though I'm a little sceptical that it'll lead to the best consequences because Veganism might just be a simpler idea than Offsetting.

AFAIK the idea of offsetting was first shared in reference to emissions from flights. My (non-quantitative) take is that this wasn't very successful. A lot of flights offer some sort of charity donation at the end of the purchasing process, but they don't usually make the claim that...

My understanding was that the administration hadn't changed the appropriations, they'd just ensured that those appropriations couldn't be spent on anything (i.e. they did everything through the executive, not Congress). I'm hoping I can be happy about this, but it reads to me like the status quo.

The Global Fund bit is maybe more of an update.

(I'm aware I could just ask an LLM but I thought there may be value in being confused in public, in case anyone else feels the same)

I'll take this post off the frontpage later today. This is just a quick note to say that you can always message me (or use the intercom feature - chat symbol on the bottom right of your desktop screen) if you'd like to make suggestions or give feedback about the EA Forum.

You will see all posts if you click "Load more" on this page. 'Frontpage' is just everything that isn't a 'Personal blogpost'.

We did a bit of ad hoc cross-posting last year and it was fairly successful (one of the 'top 10 most valuable' posts was cross-posted by our team - thanks @Dane Valerie).

I'd be careful with cross-post spamming, since we should only cross-post with consent from the author. But if you want to cross-post a lot of content at once to the Forum for reasons of curation, you might want to untick the frontpage checkbox (i.e. personal blog the posts), and put them in a sequence.

"Automatically cross-posting should be possible and simple in current tech" - I...

I like this idea. Might be especially good in the context of symposia (like this one). Last year I found it fairly tricky to get enough questions on AMAs to make them worthwhile.

Any particular people/ viewpoints you'd like to see on the Forum?

Thanks for writing this Christopher!

@Will Howard🔹 @Alex Dial - you guys might be interested in this.

I’m curating this post.

It's a fairly simple observation (captured in the title — nice), but worth sharing.

It reminds me a little bit of Four Ideas You Already Agree With in that it chips away at the weirdness or uniqueness of EA ideas. This can feel disappointing — we all like to be unique — but ultimately it’s great, it means we have more allies.