Social Change Lab is an EA-aligned non-profit that conducts and disseminates social movement research. For the past six months, we’ve been researching the outcomes of protests and protest movements using a variety of methods, including literature reviews, polling (see our previous post on the EA Forum here, which goes into more detail), and interviews with experts and policymakers. Today, we’re releasing an in-depth report on the work we’ve done in the last six months on protest outcomes. Specifically, we look at how much of a difference protest movements can make, and the areas in which they seem to be particularly effective. We’ll also soon be releasing another report on the factors that make social movements more or less likely to succeed.

We think this is relevant to Effective Altruists for a few reasons. Firstly, protests and other grassroots activities seem to be a social movement tactic that EAs have fairly neglected. As Will MacAskill points out in What We Owe The Future, social movements such as Abolitionism have had a huge impact in the past, and we think it’s likely that they will do so again in the future. It seems extremely valuable to look at this in more detail: how impactful are protests and grassroots pressure? What are the mechanisms by which they can make a difference? Is it mostly by influencing public opinion, the behaviour of legislators or corporations, or something else?

Secondly, Effective Altruism is itself a social movement. Some interesting work has been done on this before (for instance, this post by Nuño Sempere on why social movements sometimes fail), but we think it would be valuable to think in more detail about both the impact that social movements can have and what makes them likely to succeed or fail (the subject of a report we intend to release soon). Research on how different social movements achieved outsized impacts could help positively shape the future impact of Effective Altruism.

We hope that you enjoy reading the report, and would hugely appreciate any feedback on what we’ve been doing so far (we’re a fairly new organisation and there are definitely things we still have to learn). The rest of this post includes the report’s summary, introduction and methodology. The full results on how protest movements affect public opinion, policy change, public discourse, voting behaviour and corporate behaviour are best seen in the full report here. You can also read it as a Google Doc here.

Executive Summary

Social Change Lab has undertaken six months of research into the outcomes of protests and protest movements, focusing primarily on Western democracies such as those in North America and Western Europe. In this report, we synthesise the findings of several research methods: literature reviews, public opinion polling, expert interviews, policymaker interviews and a cost-effectiveness analysis. This report only examines the impacts and outcomes of protest movements. Specifically, we mostly focus on the outcomes of large protest movements with at least 1,000 actively involved participants.[1] This is because we want to understand the impact that protests can have, rather than the impact that most protests will have. As a result, our research looks at unusually influential protest movements, rather than the median protest movement. We think this is a reasonable approach, as we generally believe protest movements are a hits-based strategy for doing good. In short, we think that most protest movements have little success in achieving their aims or other positive outcomes. However, it’s plausible that a small percentage of protest movements achieve outcomes large enough to warrant funding a portfolio of social movement organisations. In future work, we plan to research and estimate the likelihood that a given social movement organisation achieves large impacts.

We believe this report could be useful for grantmakers and advocates who want to pursue the most effective ways to bring about change for a given issue, particularly those working on climate change and animal welfare. We specifically highlight these two causes, as we believe they are currently well-suited to grassroots social movement efforts.[2] A subsequent report will examine the factors that make some protest movements more successful than others.

The report is structured so that we present only the key evidence from our various research methods for each main outcome of interest, such as public opinion, policy change or public discourse. Full reports for each research method are linked throughout for readers who want additional detail.

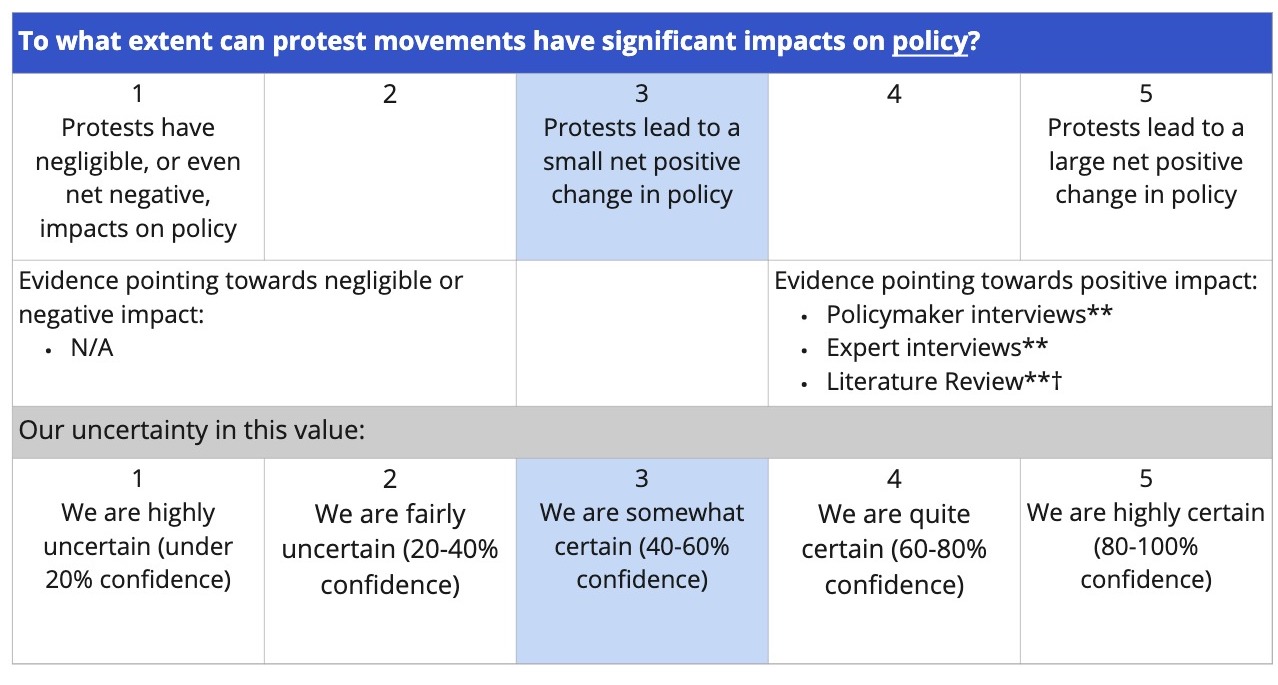

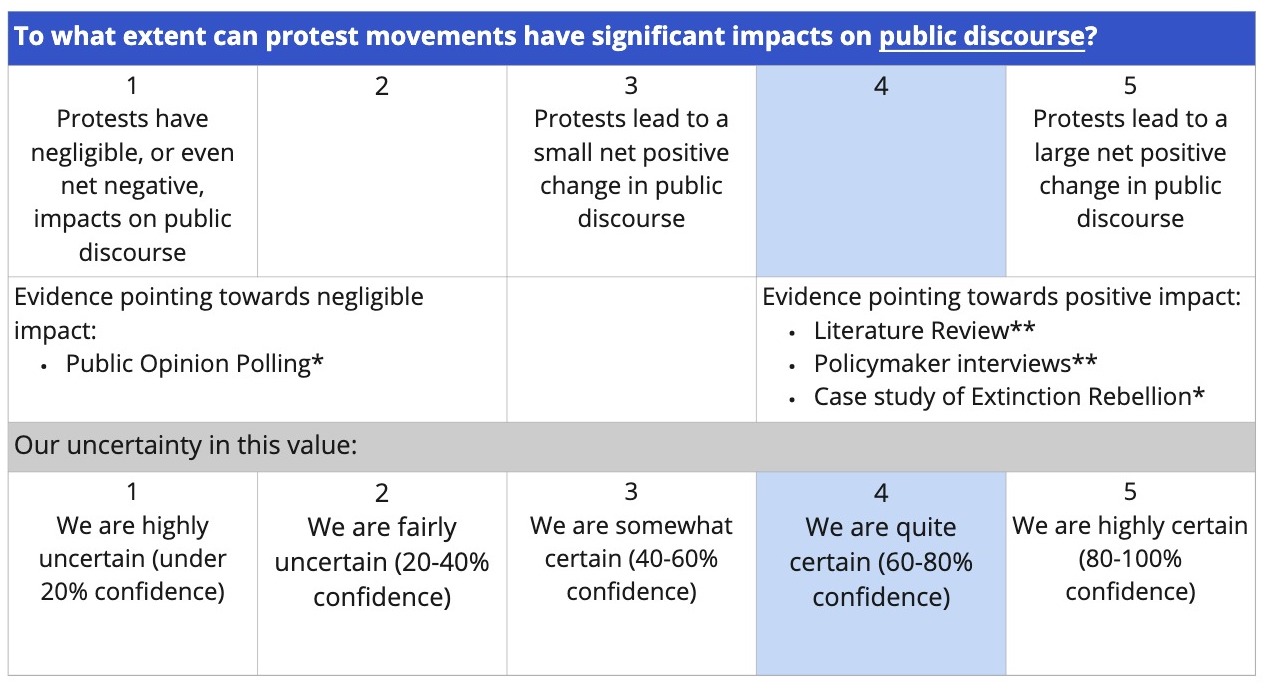

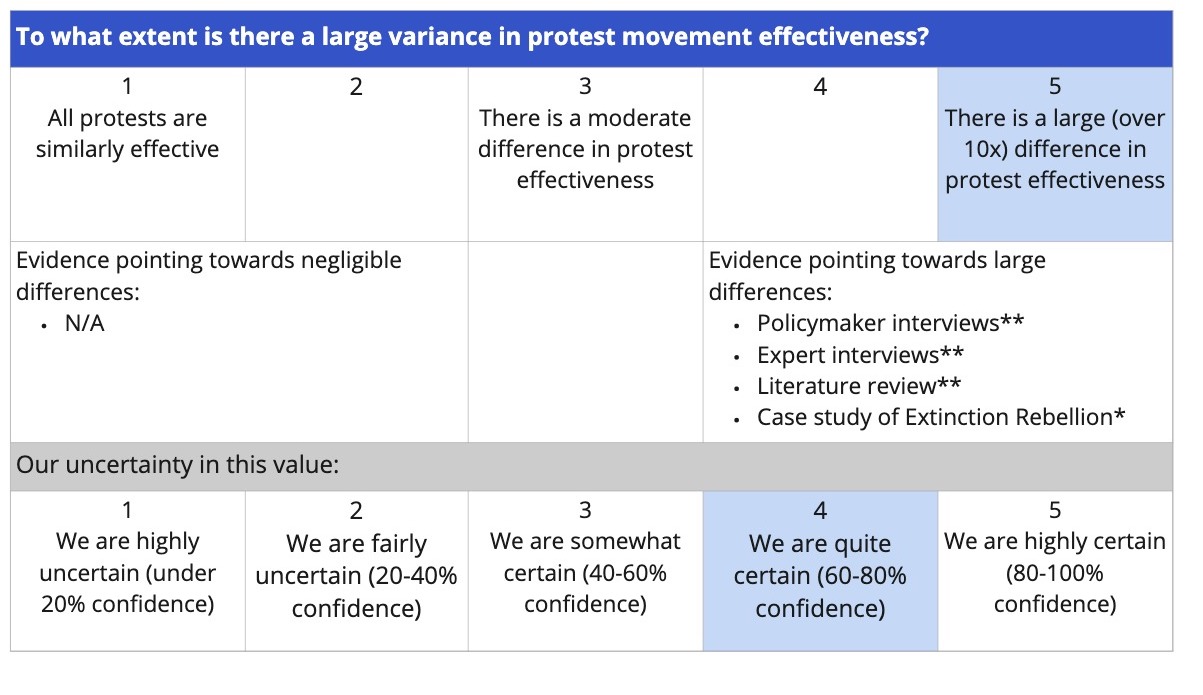

1.1 Summary Tables

Below, we scored answers to our various research questions on a 1-5 scale to highlight our current estimates. We also provide our confidence level on a 1-5 scale (which can also be interpreted as 0-100% confidence intervals). Additionally, we indicate which research methodologies have updated us in a particular direction, and how much weight we put on each methodology for a given research question. A single star (*) indicates low weight on that methodology, two stars (**) moderate weight and three stars (***) high weight. We based the relative weights of the research methods on their empirical robustness, the breadth of information covered (e.g. the number of protest movements studied), the method’s relevance to the research question, and any other limitations that might have been present. The evidence related to each outcome, and how we arrived at these values, are explained further in the full report.

1.2 Other select findings

Below are some of our notable findings, across various research methods:

- Our literature review on protest outcomes found:

  - Voting behaviour across four protest movements shifted by approximately 1-6 percentage points, as observed in natural experiments.

  - Shifts in public opinion of 2-10% were observed in both experimental and natural experiment settings.

  - Interest in novel discourse put forward by Black Lives Matter remained elevated a year after the majority of protests, at up to 10x pre-protest levels.

- Our bespoke public opinion polling found that disruptive nonviolent climate protest in the UK did not cause any “backfire” effect, i.e. there was no negative impact on public support for climate action despite the disruptive tactics. There’s also weak evidence that it raised the number of people willing to take part in climate activism by 2.6 percentage points, equivalent to an additional 1.7 million people.

- Our expert interviews with academics and movement experts revealed that large protests can be seen as credible signals of public opinion, and public opinion plays an important role in policymaking.

- Our policymaker interviews revealed that while some policymakers believe most protest movements have little impact, they also highlighted a small number of protest movements that have achieved significant impacts on UK policy, primarily in animal welfare and climate change.

- Our case study and cost-effectiveness estimate found that Extinction Rebellion may have abated 0.1-71 (median 8) tonnes of CO2e per £ spent on advocacy. We think this is our most uncertain finding, so readers should interpret this result with caution.

1.3 How to read this report

In this report, we summarise the key findings from various research methods (e.g. our literature review), rather than replicating them in full. For example, in the public opinion section, we reference the most relevant papers from our literature review that we think provide the most valuable evidence, rather than all the papers that tackle the question of protest impacts on public opinion. For those who want to read more into a particular outcome or methodology, we encourage you to read the full reports that are linked. All sections are intended to be standalone pieces, so you can read them independently of other sections. However, this does lead to some points being repeated in several sections.

1.4 Who we are

Social Change Lab is a new Effective Altruist-aligned organisation that is conducting research into whether grassroots social movement organisations (SMOs) could be a cost-effective way to achieve positive social change. We’re initially focusing on climate change and animal advocacy, as this is where we believe we can learn the most, due to existing active movements. We aim to understand whether protests and social movement activism should play a larger, or smaller, role in accelerating progress in these cause areas.

We’re also interested in what gives social movements the greatest chance of achieving their goals, and why some fail. We hope these findings can be applied to other important issues, such as building the effective altruism movement and reducing existential risks.

2. Introduction

2.1 Why we think this work is valuable

There are many pressing problems in the world, and it’s unclear what the most effective ways to tackle them are. There is some agreement that we need a variety of approaches to effectively bring about positive social change. However, it’s not obvious how we should allocate resources (e.g. time, money and people) across these approaches if we want to maximise social good. We seek to understand whether social movement organisations could be more effective than current well-funded avenues to change, and how many resources we should allocate to grassroots strategies relative to the alternatives.

Despite the potentially huge benefits of our work, we believe that social movement research is neglected. We believe we are the first to conduct a (preliminary and uncertain) cost-effectiveness analysis of a social movement organisation, and the second to commission bespoke public opinion polling to understand the impact of protest on public opinion. We see this as an opportunity to add value by providing research that addresses some unanswered questions about the role of social movements in improving the world.

Why Protest?

We spent the first six months of our research focusing on two main questions: the outcomes of protest movements, and the factors that make some protest movements more successful than others. We chose to initially focus on Social Movement Organisations (SMOs) that use protest as a main tactic, as we believed this to be an especially neglected area of research given how commonly protest is used to pursue social change. We define a social movement organisation as a named formal organisation engaged in activities to advance a movement’s cause (e.g. environmentalism or anti-racism).

SMOs such as Greta Thunberg’s Fridays For Future, Extinction Rebellion and Black Lives Matter have been said to have large impacts on public opinion and public discourse around their respective issues. As seen below in Figure 1, polling by YouGov suggests that Extinction Rebellion may have played a key role in the increase in UK public concern for climate change, leading to a potential rise of 10% in just a matter of months.

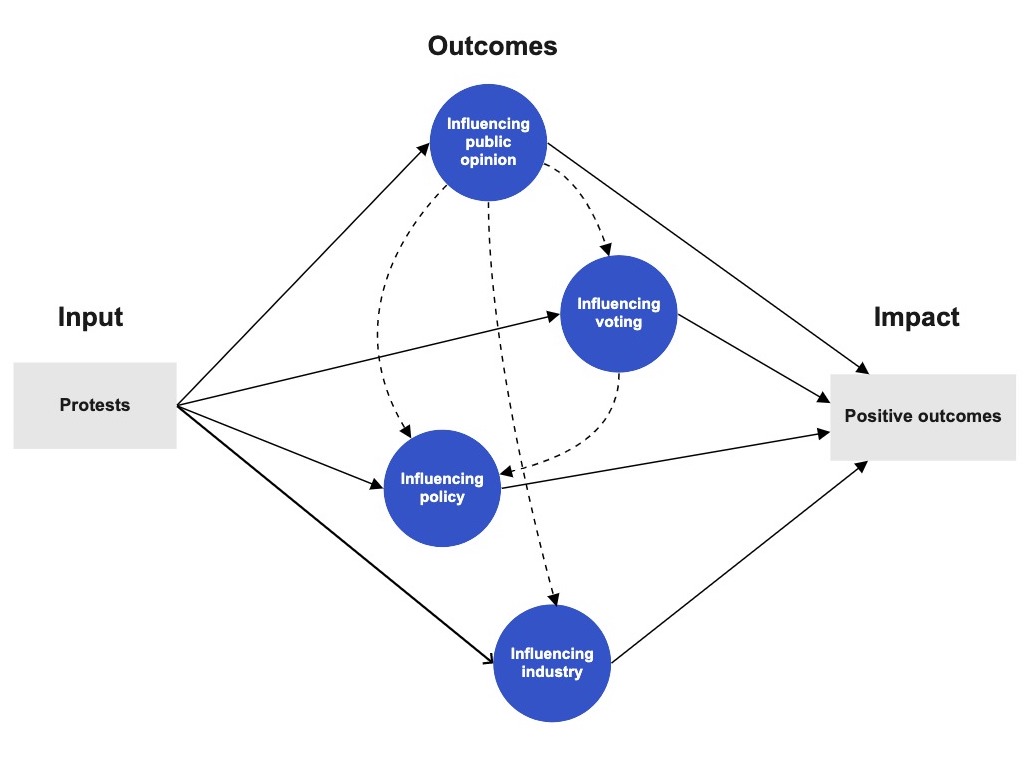

We think that if the claimed impacts of protests are accurate (i.e. that they can significantly alter public opinion and/or affect policymakers’ beliefs), then there is a strong case that philanthropists and social change advocates should be considering this type of advocacy alongside other methods. We also think that there are several other mechanisms by which protest can influence social change. Specifically, protests can affect voting behaviour, corporate behaviour and policy. We outline a simplified theory of change diagram below in Figure 2 which illustrates some (but not all) of the pathways by which protests can lead to significant social change.

If further research uncovers evidence that protest is not a particularly effective tool (or that its effectiveness is contingent on very specific criteria), this information will still be valuable for allocating resources going forward.

We seek first to look into the impact of protest-focused movements, whilst slowly expanding our scope to social movements more generally.

2.2 Research Questions & Objectives

Primary research questions we have been investigating for this report:

- What are the outcomes of protest movements, and how large are they? Specifically, what are the impacts on:

  - Voting behaviour

  - Public opinion

  - Policy

  - Corporate behaviour

  - Policymaker behaviour

- Are there crucial considerations that might hinder our ability to gather good evidence on our questions above?

Research questions that are tackled in our upcoming report on success factors:

- Which tactics, strategies and factors of protest movements (e.g. size, frequency, target, etc.) most affect chances of success?

- How important is movement agency relative to external societal conditions?

- What are the main bottlenecks faced by social movements, and to what extent are promising social movements funding-constrained?

- Can we predict which movements will have large positive impacts? If so, how?

Other questions we are tackling or will tackle in the near future can be seen in our section on future promising work.

2.3 Audience

We think this work can be valuable to two audiences in particular:

- Philanthropists seeking to fund the most effective routes to positive change in their respective cause areas, where grassroots organisations could be a contender. For example, if we discover that grassroots animal advocacy movements are more cost-effective at reducing animal suffering than existing funded work, it would make sense to fund these opportunities as well (all else being equal) if we seek to maximise our impact. Additionally, grantmakers could use our success factors research to identify promising organisations to fund.

- Existing social movements, which can adopt and integrate best practices from the social science literature to make their campaigns more effective. This includes Effective Altruist community-builders, who so far seem to have made relatively little effort to learn from previous social movements and examine why and how they succeeded or failed.

3. Methodology

As a preliminary step, we sought to understand the state of the academic literature on social movements. This was integral to judging how promising research on social movements would be in terms of (i) how important social movements themselves are, and (ii) how useful any incremental contribution to knowledge in this area would be. We therefore conducted a comprehensive literature review to identify findings in this area and assess their robustness. If we had found a general, rigorously derived consensus that social movements are ineffective at producing social and policy change, further research on social movements would have had little value. On the contrary, our literature review found that academic studies point to the effectiveness of nonviolent protest in inducing social change in some contexts. However, the evidence base we found was relatively small, so we believe additional research will be useful to inform social movement strategies and funding allocations.

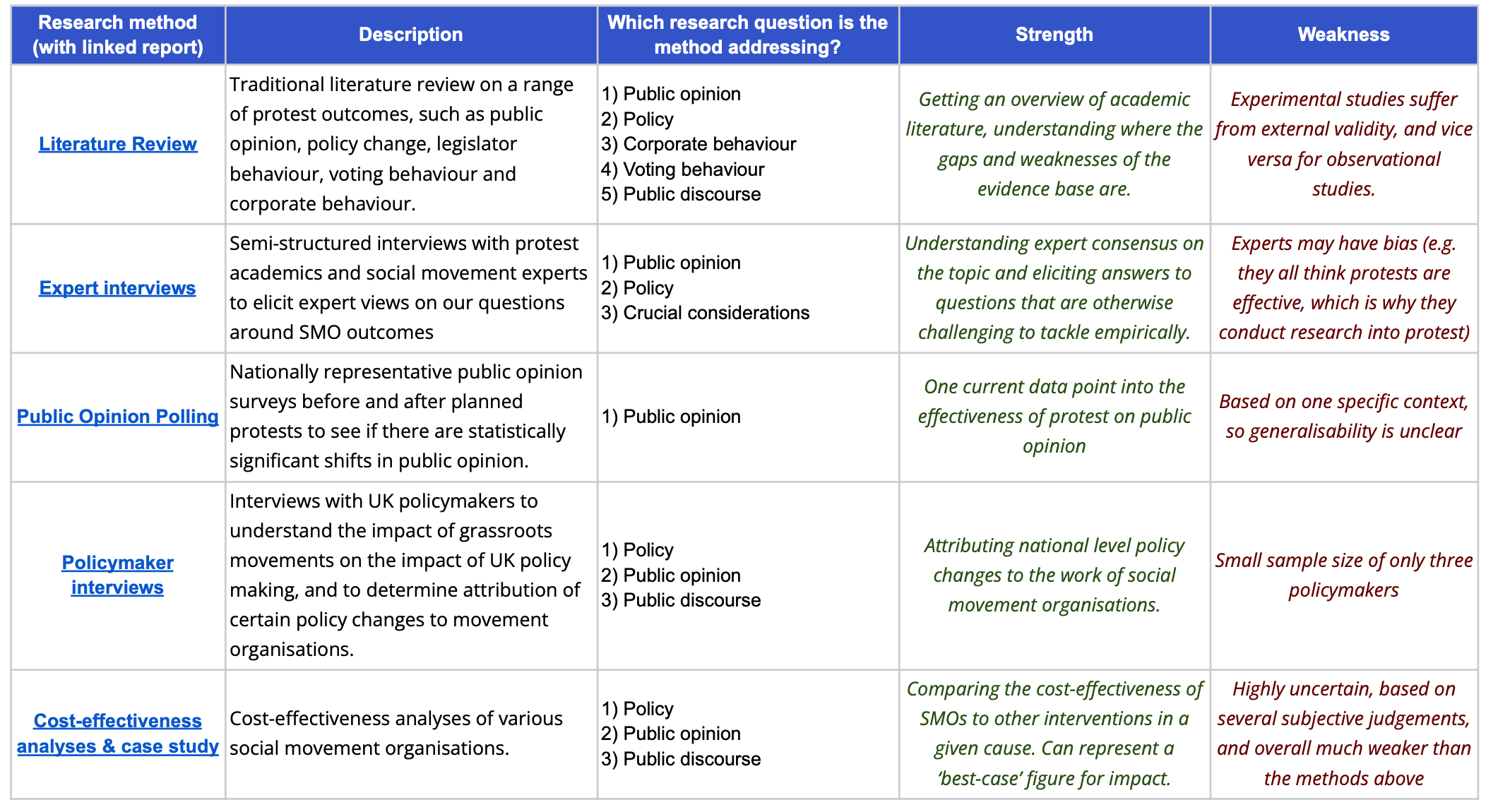

We decided to approach our subsequent research questions from many different angles, using several different methods. We believe this is more robust, as no single methodology is empirically foolproof, given the difficulty of making causal inferences in empirical social science. Instead, we hope to evaluate the evidence base for social movement impacts using a variety of methods, to understand where the evidence converges or diverges. We explain the methodologies in depth in Appendix A and in the full reports, but in summary, the research methods we used are shown in the table below. The limitations of our overall methodology are addressed in more detail in the Limitations section.

You can read the rest of the report here on our website. Again, we hope that you enjoy reading the report, and we would hugely appreciate any feedback on our work so far!

[1] By “actively involved”, we mean people who are attending in-person demonstrations, donating, going to meetings or otherwise participating in the movement.

[2] It’s not clear that climate change and animal welfare are the causes best suited to grassroots social movement efforts, but the existence of similar activities in these fields indicates that external conditions are already somewhat favourable.

Updated view

Thank you to James for clarifying some of the points below. Points 1, 3 and 4 all stem from miscommunications in the report that, once clarified, don't reflect the authors' intentions. I think point 2 continues to be relevant, and we disagree here.

I've updated towards putting somewhat more credence in parts of the work, although I have other concerns beyond the most glaring ones I flagged here. I'm reluctant to get into them here since this comment already has a long thread; perhaps I'll write another comment. I do want to state my overall view, which is that I think the conclusions are overconfident given the methods.

Original comment

I share other commenters' enthusiasm for this research area. I also laud the multi-method approach and the attempts to quantify uncertainty.

However, I think severe methodological issues are unfortunately pervasive. Accordingly, I did not update based on this work.

I'll list 4 important examples here:

1. In the literature review, strength of evidence was evaluated based on the number of studies supporting a particular conclusion. This metric is entirely flawed, as it will find support for any conclusion given a sufficiently large number of studies. For example, suppose you ran 1000 studies of an ineffective intervention. At the standard significance threshold of p < 0.05, we would expect 50 studies with significant results. By the standards used in the review, this would be strong evidence that the intervention was effective, despite it being entirely ineffective by assumption.

2. The work surveying the UK public before and after major protests is extremely susceptible to social desirability, agreement and observer biases. The design of the surveys and questions exacerbates these biases, and the measured effect might entirely reflect them. Comparison to a control group does not mitigate these issues, as exposure to the intervention likely results in differential bias between the two groups.

3. The research question is not clearly specified. The authors indicate they're not interested in the effects of a median protest, but do not clarify exactly what sort of protest they are interested in. By extension, it's not clear how the inclusion criteria for the review reflect the research question.

4. In this summary, uncertainty is expressed from 0-100%, with 0% indicating very high uncertainty. This does not correspond to the usual interpretation of uncertainty as a probability, where 0% would represent complete confidence that the proposition was false, 50% total uncertainty, and 100% complete confidence that the proposition was true. Similarly, Table 4 neither corresponds to the common statistical meaning of variance nor defines a clear alternative.

These critiques are based on about 3 hours in total spent engaging with various parts of this research. My assessment was far from comprehensive, in part because these issues dissuaded me from investigating further. Given the severity of these issues, I'd expect many more methodological issues on further inspection. I apologize in advance if these issues are addressed elsewhere, and for providing such directly negative feedback.
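To make the arithmetic in point 1 concrete, here is a minimal Python sketch, assuming 1000 independent studies of a truly null effect, each tested at a 0.05 significance threshold. It computes the expected number of significant results and, via the binomial distribution, the chance that at least 7 of them come out significant (7 being the "Strong" threshold in the report's count-based rating rule, as quoted later in this thread). The helper `prob_at_least` and the constant names are illustrative, not taken from the report.

```python
from math import comb


def prob_at_least(k: int, n: int, p: float) -> float:
    """P(X >= k) for X ~ Binomial(n, p), computed as 1 minus the lower tail."""
    lower_tail = sum(comb(n, i) * p**i * (1 - p) ** (n - i) for i in range(k))
    return 1.0 - lower_tail


N_STUDIES = 1000  # hypothetical number of studies of an ineffective intervention
ALPHA = 0.05      # significance threshold = false positive rate under the null

# On average, ALPHA of the null studies come out "significant".
expected_false_positives = N_STUDIES * ALPHA

# Chance that a count-based rule with a threshold of 7 supporting
# studies would rate this purely null literature as "Strong".
p_seven_or_more = prob_at_least(7, N_STUDIES, ALPHA)

print(f"Expected significant results: {expected_false_positives:.0f}")
print(f"P(at least 7 significant results): {p_seven_or_more:.6f}")
```

Under these assumptions the count of significant results grows with the number of studies run, which is why a raw count says little without the total it came from.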

Hi Jacob, thanks for giving critical feedback. It’s much appreciated, so thank you for your directness. Whilst I agree with some aspects of your comment, I disagree with other parts (or don’t think they’re serious enough to justify not updating at all based on the research).

I think this is down to a miscommunication on our part, so I’ll try to clarify. In essence, I think we shouldn't have said “the number of papers we found” but “the percentage of total papers we found”, as we looked for all papers that would have either supported or gone against the conclusions laid out in the literature review table. For example, for the statement:

“Protest movements can have significant impacts (2-5% shifts) on voting behaviour and electoral outcomes”

We found 5 studies that seemed methodologically robust and studied the outcomes of nonviolent protest on voting behaviour. 4 of these 5 studies found significant positive impacts. The remaining study found negligible impacts of protest on voting behaviour in some contexts, but significant impacts in others. Obviously there is some publication bias here, as this is clearly not always true, but nonetheless all of the existing literature examining the impact of protest on voting behaviour finds positive results in some cases (5/5 studies).

We’re aware of the multiple hypothesis testing problem, and the evidence base for protest interventions is very small, so it’s exceedingly unlikely that there would be a scenario like the one you outline in your comment. The chance of 5/5 studies all finding positive results purely by chance is extremely small: roughly 0.00003% (0.05^5 ≈ 3 × 10^-7), which I think we can both agree is fairly unlikely. It seems this was down to poor communication on our part, so I’ll amend that.
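The probability in this comment can be reproduced with a short Python sketch. It assumes, hypothetically, that every study tests a truly null effect, that the studies are independent, and that each has the same 0.05 false positive rate; it does not account for null studies that were run but never published. Under those assumptions, 0.05^5 comes to about 3.1 × 10^-7, or roughly 0.00003%.

```python
ALPHA = 0.05   # assumed per-study false positive rate, identical across studies
N_STUDIES = 5  # the robust voting-behaviour studies described above

# If each study independently has probability ALPHA of a false positive,
# all of them are false positives with probability ALPHA ** N_STUDIES.
p_all_false_positive = ALPHA ** N_STUDIES

print(f"P(all {N_STUDIES} significant by chance) = {p_all_false_positive:.3e}")
print(f"As a percentage: {p_all_false_positive * 100:.5f}%")
```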

I definitely agree the survey does have some limitations (and we’ve discussed this off the Forum). That’s the main reason we’ve only given a single star of weighting (i.e. what we think is our weakest form of evidence), and haven’t updated our views particularly strongly based on it.

That said, I think the important thing about our survey was that we were only looking at how responses changed over time. In my opinion, this means levels of bias matter less, as we think they’ll be roughly constant across all 3 time periods, given that each wave surveyed a fresh sample of respondents. I’ll also note that we got feedback from what I deem experienced researchers/academics prior to this survey, and they thought the design was sufficiently good.

Additionally, the main conclusion of our survey was that it found no “backfire” effect, i.e. people didn’t support climate policies less as a result of disruptive protest. In other words, we found no significant changes, which seems unlikely if there were significant positive biases at play as you claim.

Edit: I think your social desirability critique is also misguided, as only 18% of people in the UK were supportive of these protests (according to our survey). I find it hard to believe that this could be the case while our post-protest surveys also elicited high levels of social desirability bias, as you claim.

I disagree somewhat strongly with this, and I think we clearly say what we’re interested in. Specifically, in our executive summary, we say the following:

Maybe you disagree with this being a sufficient level of clarity - but I think this fairly clearly sets out the types of protest movements we’re including for the purposes of this research. If you don’t think this is particularly clear, or you think specific bits are ambiguous, I would be interested to hear more about that.

Edit: In hindsight, I think we could have given a specific list of countries we focus on, but it really is overwhelmingly the US, UK and other countries in Western Europe (e.g. Belgium, Spain, Germany) so I thought this was somewhat clear.

This feels fairly semantic, and I think it’s quite a weak criticism. I think it’s quite common for people to express their confidence in a belief using confidence intervals (see Reasoning Transparency), where they use confidence in the way we used uncertainty. If your specific issue is that we’ve used the word ‘uncertainty’ when we should have used ‘confidence’, I think that’s a very minor point that would hardly affect the rest of our work. We do also write confidence intervals in brackets (e.g. 60-80% confidence), but I’m happy to update it if you think it’ll provide clarity. That being said, this feels quite nitpicky and I assume most readers understood what we meant.

Regarding variance, I agree that we could have been clearer on the exact definition we wanted, but again I think this is quite nitpicky, as we do outline what we consider to be the variance in this case, namely in section 7:

“…there are large differences between the effectiveness of the most impactful protest movements, and a randomly selected (or median) protest movement. We think this difference is large enough such that if we were able to accurately model the cost-effectiveness of these protest movements, it would be different by a factor of at least 10.”

I don’t think this is a substantive criticism as it’s largely over a choice in wording, that we do somewhat address (even though I do think we could better explain our use of the term).

Overall, I think most of your issues are semantic or minor: I'm either happy to amend them (points 1 and 4) or think we've already adjusted for them (we already give low weight to the weak survey evidence you criticise in point 2). Based on this, I don't think what you've said substantially detracts from the report, such that people should put significantly less weight on it. Due to that, I also disagree with your claim that "Given the severity of these issues, I'd expect many more methodological issues on further inspection.", as in my opinion, the issues you raise are largely neither severe nor methodological.

That being said, I don't expect anyone to update from thinking very negatively about protest movements to thinking very positively about them based on this work. If anything, I expect it to be a minor-to-moderate update, given the still small evidence base and the difficulty of conducting good research in this space.

This feedback has definitely been useful, so if you do have other criticisms, I would be interested in hearing them. I do appreciate you taking the time to leave this comment, and being direct about your thoughts. If you’re at all interested, we would be happy to compensate you, or other willing and able reviewers, for their time to look through the work more thoroughly and provide additional criticism/feedback.

Thank you for your responses and engagement. Overall, it seems like we agree 1 and 2 are problems and still disagree about 3; on 4, I don't think I made my point clear, and your explanation raises more issues in my mind. While I think these 4 issues are themselves substantive, I worry they are the tip of an iceberg, as 1 and 2 are in my opinion relatively basic issues. I appreciate your offer to pay for further critique; I hope someone is able to take you up on it.

Great, I think we agree the approach outlined in the original report should be changed. Did the report actually use the percentage of total papers found? I don't mean to be pedantic, but it's germane to my broader point: was this really a miscommunication of the intended analysis, or did the report originally intend to use the number of papers found, as it seems to state and then execute on: "Confidence ratings are based on the number of methodologically robust (according to the two reviewers) studies supporting the claim. Low = 0-2 studies supporting, or mixed evidence; Medium = 3-6 studies supporting; Strong = 7+ studies supporting."
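The gap between a count-based and a share-based reading of the evidence can be sketched in Python. `rate_by_count` is a hypothetical helper encoding the thresholds quoted above (it ignores the "mixed evidence" clause); it is applied to the hypothetical literature of 50 false positives out of 1000 null studies and to the 4-out-of-5 voting-behaviour studies described earlier in the thread.

```python
def rate_by_count(n_supporting: int) -> str:
    """Count-based confidence rating following the quoted thresholds.

    Note: the quoted rule also assigns "Low" to "mixed evidence",
    which this sketch does not model.
    """
    if n_supporting <= 2:
        return "Low"
    if n_supporting <= 6:
        return "Medium"
    return "Strong"


# (studies supporting the claim, total studies found)
literatures = {
    "hypothetical: 1000 null studies, 50 false positives": (50, 1000),
    "5 voting-behaviour studies, 4 significant": (4, 5),
}

for name, (supporting, total) in literatures.items():
    share = supporting / total
    print(f"{name}: count rating = {rate_by_count(supporting)}, "
          f"share supporting = {share:.0%}")
```

Under the count rule, the hypothetical null literature rates "Strong" and the voting studies rate "Medium", even though only 5% of studies support the claim in the first case versus 80% in the second.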

It seems like we largely agree in not putting much weight on this study. However, I don't think comparisons against a baseline measurement mitigate the bias concerns much. For example, exposure to the protests is a strong signal of social desirability: it's a chunk of society demonstrating to draw attention to the desirability of action on climate change. This exposure is present in the "after" measurement and absent in the "before" measurement, so the bias is differential and can distort the estimates. Such bias could be hiding a backlash effect.
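The differential-bias argument reduces to simple arithmetic. The Python sketch below assumes, purely for illustration, that reported support equals true support plus an additive bias; the support levels and bias sizes are hypothetical numbers, not survey results. A bias that is the same in both waves cancels out of a before/after comparison, while a bias present only after the protests can offset a genuine backlash.

```python
def observed_change(true_before: float, true_after: float,
                    bias_before: float, bias_after: float) -> float:
    """Change in *reported* support between two survey waves.

    Simplifying assumption: reported support = true support + additive bias.
    """
    reported_before = true_before + bias_before
    reported_after = true_after + bias_after
    return reported_after - reported_before


# Hypothetical shares of respondents supporting climate action,
# with a true 2-percentage-point backlash after the protests.
TRUE_BEFORE, TRUE_AFTER = 0.60, 0.58

# Constant bias in both waves cancels, recovering the true -0.02 change.
constant = observed_change(TRUE_BEFORE, TRUE_AFTER, 0.05, 0.05)

# Bias that appears only after exposure to protests masks the backlash.
differential = observed_change(TRUE_BEFORE, TRUE_AFTER, 0.00, 0.02)

print(f"constant bias:     {constant:+.3f}")
print(f"differential bias: {differential:+.3f}")
```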

The issue lies in defining "unusually influential protest movements". This is crucial because you're selecting on your outcome measurement, which is generally discouraged. The most cynical interpretation would be that you excluded all studies that didn't find an effect because, by definition, these weren't very influential protest movements.

Unfortunately, this is not a semantic critique. Call it what you will, but I don't know what the confidences/uncertainties you are putting forward mean, and your readers would be wrong to assume they know either. I didn't read the entire OpenPhil report, but I didn't see any examples of using low percentages to indicate high uncertainty. Can you explain concretely what your numbers mean?

My best guess is that this is a misinterpretation of the "90%" in a "90% confidence interval". For example, maybe you're interpreting a 90% CI of [2, 4] to indicate we are highly confident the effect ranges from 2 to 4, while a 10% CI of [2.9, 3.1] would indicate we have very little confidence in the effect? This is incorrect, as CIs can be constructed at any level of confidence regardless of the size of the effect, from null to very large, or the variance in the effect.
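A minimal Python sketch of this point, using a normal-approximation interval with a hypothetical estimate of 3.0 and standard error of 0.6 (chosen only to roughly reproduce the intervals above). Both intervals come from the same data: the 90% interval is wide and the 10% interval is narrow, but the level only describes how often intervals constructed this way would cover the true value, not a degree of confidence attached to a particular effect size.

```python
from statistics import NormalDist


def normal_ci(estimate: float, std_error: float, level: float) -> tuple[float, float]:
    """Two-sided normal-approximation confidence interval at the given level."""
    z = NormalDist().inv_cdf(0.5 + level / 2)  # e.g. about 1.645 for level=0.90
    return estimate - z * std_error, estimate + z * std_error


# Hypothetical estimate and standard error (illustrative only).
ESTIMATE, STD_ERROR = 3.0, 0.6

for level in (0.90, 0.10):
    low, high = normal_ci(ESTIMATE, STD_ERROR, level)
    print(f"{level:.0%} CI: [{low:.2f}, {high:.2f}]")
```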

Thank you for pointing to this additional information re your definition of variance; I hadn't seen it. Unfortunately, it illustrates my point that these superficial methodological issues are likely just the tip of the iceberg. The definition you provide offers two radically different options for the bound of the range you're describing: randomly selected or median protest. Which one is it? If it's randomly selected, what prevents randomly selecting the most effective protests, in which case the range would be zero? Etc.
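The ambiguity between "median" and "randomly selected" can be sketched in Python with hypothetical cost-effectiveness scores (made up for illustration, not data from the report). The ratio of the best movement to the median is a single fixed number, whereas the ratio to a randomly selected movement changes from draw to draw, and is exactly 1 whenever the draw happens to be the best movement itself.

```python
import random
import statistics

# Hypothetical cost-effectiveness scores for ten protest movements
# (arbitrary units; illustrative only).
scores = [0.1, 0.2, 0.3, 0.5, 0.8, 1.0, 1.5, 3.0, 8.0, 40.0]

best = max(scores)

# Against the median, the comparison is one well-defined number.
ratio_to_median = best / statistics.median(scores)

# Against a randomly selected movement, the ratio is itself random.
rng = random.Random(0)  # fixed seed so the draws are reproducible
ratios_to_random = [best / rng.choice(scores) for _ in range(5)]

print(f"best / median: {ratio_to_median:.1f}")
print("best / random draw:", [round(r, 1) for r in ratios_to_random])
# If the random draw is the best movement, the "difference" vanishes.
print(f"best / best: {best / best:.1f}")
```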

Lastly, I have to ask in what regard you don't find these critiques methodological? The selection of outcome measure in a review, survey design, construction of a research question and approach to communicating uncertainty all seem methodological—at least these are topics commonly covered in research methods courses and textbooks.

Thanks for your quick reply, Jacob! I think I still largely disagree on how substantive these points are, and address them below. I also feel sad that your comments come across as slightly condescending or uncharitable, which makes it difficult for me to have a productive conversation.

The first one: our aim was to examine all the papers (within our other criteria of recency, democratic context, etc.) that related to the impacts of protest on public opinion, policy change, voting behaviour, etc. We didn’t exclude any because they found negative or negligible results, as that would obviously be empirically extremely dubious.

I didn’t make this clear enough in my first comment (I’ve now edited it), but I think your social desirability critique feels somewhat off. Only 18% of people in the UK were supportive of these protests (according to our survey), with a fair bit of negative media attention about the protests. This makes it hard to believe that respondents would genuinely feel any positive social desirability bias when the majority of the public actually disapproves of the protests. If anything, negative social desirability bias would be much more likely. I'm open to suggestions on how we might test this post hoc with the data we have, but I'm not sure that's possible.

Just to reiterate what I said above for clarity: Our aim was to examine all the papers that related to the impacts of protest on public opinion, policy change, voting behaviour, etc. We didn’t exclude any because they found negative or negligible results - as that would obviously be empirically extremely dubious. The only reason we specified that our research looks at large and influential protest movements is that this is by default what academics study (as they are interesting and able to get published). There are almost no studies looking at the impact of small protests, which make up the majority of protests, so we can’t claim to have any solid understanding of their impacts. The research was largely aiming to understand the impacts for the largest/most well-studied protest movements, and I think that aim was fulfilled.

Sure: what we mean is that when we say, for example, that we’re 80% confident, we think there's an 80% chance that our indicated answer is the true answer. For our answers on policy change, we’re 40-60% confident that our finding (highlighted in blue) is correct, i.e. there’s a 40-60% chance we’ve got it wrong. One could also infer from where we’ve placed it on our summary table that, if it is wrong, the true answer is most likely in the boxes immediately surrounding the one we indicated.

E.g. if you look at the Open Phil report, here is a quote similar to how we’ve used it:

I understand that confidence intervals can be constructed for any effect size, but we indicate the effect sizes using the upper row in the summary table (and quantify it where we think it is reasonable to do so).

The reasons I don’t think these critiques highlight significant methodological flaws are:

I'm really sorry to come off that way, James. Please know it's not my intention, but duly noted, and I'll try to do better in the future.

Got it; that's helpful to know, and thank you for taking the time to explain!

Social desirability bias (SDB) is generally hard to test for post hoc, which is why it's so important to design studies to avoid it. As the surveys suggest, not supporting protests doesn't imply people don't report support for climate action; so, for example, the responses about support for climate action could be biased upwards by the social desirability of climate action, even though those same respondents don't support protests. Regardless, I don't claim to know for certain that these estimates are biased upwards (or downwards, for that matter, in which case maybe the study is a false negative!). Instead, I'd argue the design itself is susceptible to social desirability and other biases. It's difficult, if not impossible, to sort out how those biases affected the result, which is why I don't find this study very informative. I'm curious why, if you think the results weren't likely biased, you chose to down-weight it?

Understood; thank you for taking the time to clarify here. I agree this would be quite dubious. I don't mean to be uncharitable in my interpretation: unfortunately, dubious research is the norm, and I've seen errors like this in the literature regularly. I'm glad they didn't occur here!

Great, this makes sense and seems like standard practice. My misunderstanding arose from an error in the labeling of the tables: Uncertainty level 1 is labeled "highly uncertain," but this is not the case for all values in that range. For example, suppose you were 1% confident that protests led to a large change. Contrary to the label, we would be quite certain protests did not lead to a large change. 20% confidence would make sense to label as highly uncertain, as it reflects a uniform distribution of confidence across the five effect size bins. But confidences below that, in fact, reflect increasing certainty about the negation of the claim. I'd suggest using traditional confidence intervals here instead, as they're more familiar and standard, e.g.: We believe the average effect of protests on voting behavior is in the interval of [1, 8] percentage points with 90% confidence, or [3, 6] pp with 80% confidence.
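A minimal Python sketch can make the 20% threshold concrete. It rests on two assumptions of ours, not the report's method: whatever confidence is not placed on the highlighted bin is spread evenly over the other four bins, and KL divergence from a uniform distribution serves as a rough measure of how much a given confidence level actually commits to. The function names `distribution` and `kl_from_uniform` are hypothetical.

```python
import math

N_BINS = 5  # five effect-size bins in the summary table


def distribution(p_top: float, n_bins: int = N_BINS) -> list[float]:
    """Put p_top on the highlighted bin.

    Assumption: the remaining confidence is spread evenly over the other bins.
    """
    rest = (1.0 - p_top) / (n_bins - 1)
    return [p_top] + [rest] * (n_bins - 1)


def kl_from_uniform(dist: list[float]) -> float:
    """KL divergence (in bits) from the uniform distribution.

    0 means the distribution carries no information beyond "any bin is equally likely".
    """
    n = len(dist)
    # KL(P || U) = sum_i p_i * log2(p_i / (1/n)); zero-probability bins contribute nothing
    return sum(p * math.log2(p * n) for p in dist if p > 0)


for p in (0.01, 0.10, 0.20, 0.40, 0.60, 0.80):
    d = distribution(p)
    print(f"confidence {p:.0%} in one bin: P(not that bin) = {1 - p:.0%}, "
          f"divergence from uniform = {kl_from_uniform(d):.3f} bits")
```

Under these assumptions the divergence is zero only at 20% and grows on either side; placing 1% on a bin turns out to carry more information than placing 40% on it, which is why a blanket "highly uncertain" label for every value in the lowest band can mislead.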

Further adding to my confusion, the usage of "confidence interval" in "which can also be interpreted as 0-100% confidence intervals" doesn't reflect the standard usage of the term.

Sorry, I think this was a miscommunication in our comments. I was referring to "Issues you raise are largely not severe nor methodological," which gave me the impression you didn't think the issues were related to the research methods. I understand your position here better.

Anyway, I'll edit my top-level comment to reflect some of this new information; this generally updates me toward thinking this research may be more informative. I appreciate your taking the time to engage so thoroughly, and apologies again for giving an impression of anything less than the kindness and grace we should all aspire to.

Looking for feedback

We're seeking some broad feedback about this report so that we can improve our future work. Even if you only read certain sections of this work or just the summary, we would still be really interested in getting any feedback. You can give (anonymous) feedback using this feedback form, where all questions are optional. Thanks in advance and feel free to reach out personally if you want to give more detailed feedback!

Thanks, I think this is really valuable, and really like the summary tables!

I noticed that you mentioned here that social movement organisations (SMOs) on climate change are "potentially not useful". However, in this post, you suggest that protests on climate change and animal welfare could be particularly effective.

Is the contrast due to creating SMOs being different from supporting protests, or have you updated your views?

Hi Vasco - thanks for your kind feedback - much appreciated!

Good catch! I think this is something I've updated on a bit recently (although my views are relatively unstable!). In short, I think that disruptive protest and protest-focused SMOs are less useful on the margin in the UK than they were 3-4 years ago, because concern about climate change in the UK is now high. That's not to say I don't think they're useful at all, but if the aim is "general awareness raising", I think they're less useful than previously, or than in other countries (e.g. Eastern Europe). We see some evidence of this in our public opinion polling, which found no change in climate concern now, whereas there was a change 3-4 years ago after similar disruptive protests.

However, I do think there's some space for SMOs/protest movements to be more focused on specific policies, as we have seen recently with the likes of Stop Cambo/Stop Jackdaw or Just Stop Oil. This seems more useful in the UK and Western Europe where concern is high, but implementation of climate policies could still be improved.

Overall, I'm still unsure whether, given a magical button, I would incubate/kickstart new additional climate SMOs based in the UK. I think a lot of it depends on their target, their strategies, the audience they want to mobilise, etc.

Thanks for the clarification!

This is very valuable!

I'm an undergrad, and I've been juggling EA and non-EA activism for several years.[1] I always felt EA could benefit more from research on social movements, because activism can yield very cost-effective results with shockingly little expenditure. I do get why it's not a big focus for the broader movement (protests being hard to quantify, controversy, EAs not really being activist types), but I'd certainly consider it neglected.

For example, climate activism is, in my opinion, a successful example of global activism bringing a niche x-risk into the mainstream, resulting in real policy change. Climate support suffers from pluralistic ignorance, where individuals underestimate public support for certain beliefs, and hence publicly understate their own support. Essentially, "I support climate action but don't believe others do." is an incredibly common concern I've heard, and it seems the only consistent way to bypass this is frequent public displays of widespread support.

More research on how public opinion for important issues can be funneled into solutions is very valuable.

I ended up on BBC, Al Jazeera and others for climate activism. It was very interesting to watch people say "I agree climate change is a big deal but I disagree with your methods" in a thousand different ways.

I wish there were more examples of cost-effectiveness estimates for activism that I could use to discuss with skeptics. I am convinced myself that the most impactful historical examples of activism are world-changing, but I'm usually at a loss when challenged to Fermi-estimate them.

One example I know of is this post (by one of the OP's authors, James Ozden) suggesting that Extinction Rebellion (XR) has abated 16 tonnes of GHGs per pound spent on advocacy, using median estimates, which is 7x more cost-effective than Clean Air Task Force (CATF), the top-recommended climate change charity (90% CI of 0.2x - 18x). There are valid criticisms of Ozden's estimates, but a good quantitative estimate is usually better than just a verbal argument; I wish there were more such estimates to reference in conversation.

(Also, to be honest, it makes me look bad when I claim something is cost-effective but can't provide a single real-world example with numbers to back up that claim.)

I definitely agree with you that it would be great to have more research on how public opinion for important issues can be funneled into solutions – for instance, all the policy change and public support in the world wouldn't matter if greenhouse gas emissions weren't actually being averted (relative to the no-activism counterfactual world).

I think it's an epistemic issue inherent to impacts caused by influencing decision making.

For example, let's say I present a very specific, well-documented question: "Did dropping the atomic bombs on Hiroshima and Nagasaki save more lives than it took?"

The costs here are specific: mainly deaths caused by the atomic bombs (roughly 200k people).

The lives saved are also established: deaths caused by each day the Japanese forces continued fighting, projections of deaths from the planned invasion of mainland Japan, and famines caused by the naval blockade of Japan.

There are detailed records of the entire decision-making process at both a personal and a macro level. In fact, a lot of the key decision makers were alive post-war to give their opinions. However, the question remains controversial in public discourse because it's hard to pin a number on the factors influencing policy decisions. Could you argue that, since the Soviet declaration of war was the trigger for surrender, mayyyybe the surrender would have happened without the bombs?

Activism is way more nebulous and multivariate, so even significant impacts would be hard to quantify in an EA framework. Personally, I do see the case for underweighting activism within EA. So many other movements already prioritise activism, and in my experience it's super difficult to make quantitative arguments when a culture centres around advocacy. However, I would really like to see some exploration, if only so I don't have to switch gears between my different friend groups.

The question remains, though: especially when talking to funders and nonprofit leaders skeptical of advocacy (a lot of them jaded from personal experience), I'm unpersuasive when I claim something is cost-effective but can't provide a single real-world example with numbers to back up that claim, so I've stopped doing that. Sure, activism is nebulous and multivariate; so are many business decisions (to analogize), and yet it's still useful to do quantitative estimation if you keep in mind that

I have no idea honestly if activism should be over- or underweighted in EA; I suspect the answer is the economist's favorite: "it depends". I'd like to see more research on what makes some advocacy efforts more effective that's adaptable to other efforts/contexts. And as a (small) donor myself I'm interested in allocating part of my "bets" capital to advocacy-related orgs like XR if I see / can make a persuasive marginal cost-effectiveness estimate for their efforts.

Thank you! Agreed that EA as a community often overlooks the value of protests and social change. Excited to look more deeply into the report

On “backfire”: do you have any view on backfire from BLM protests? I’ve been concerned about the pattern of protest -> police stop enforcing in a neighborhood -> murder rates go up. If this does happen, it seems to really raise the bar for the long-run positive effects that protests like this need to achieve in order to offset the medium-term murder increase.

But maybe I’m thinking of this wrong. Or maybe this wouldn’t be considered backfire - more of an unintended side effect?

Source: https://marginalrevolution.com/marginalrevolution/2022/06/what-caused-the-2020-spike-in-murders.html

I'm a bit late to the party but:

I wouldn't consider this a "backfire", although murder rates going up is definitely a bad thing. In the context of protests, a backfire isn't when anything bad happens, it's when the protests hurt the protesters' goals. If "police stop enforcing in a neighborhood" is a goal of BLM protests (which it basically is), then this is a success, not a backfire, and the increase in murder rate is an unfortunate consequence.

A backfire effect would be something like: protest -> protests make people feel unsafe -> city allocates more funding to the police.

Many successful protests have had some element of striking/boycott/business or economic disruption.

Is it possible to examine whether this specifically improves the effectiveness of protests? This would be my working hypothesis.

Not OP but I have organised protests and researched the topic to inform my organising.

My answer is that it's like any strategy: you have to make the right tradeoffs aligning with your goals. You will find many examples of massively unpopular but effective protests (MLK's protests, for example, had a >70% disapproval rating) or larger, more popular protests that did not achieve their goals (pro-democracy protests in HK). Whether a general rule like "don't protest like this, it doesn't historically work" applies is massively dependent on your specific context and on how the organisers execute. It's entirely possible for the same strategy to have inconsistent results.

I find it more productive to view protests as campaigns with defined goals. For example, economic disruptions and strikes would work if your issue has a broad support base, like issues of inequality, climate change or cost of living. They are highly unlikely to work in cases of minority rights, such as gay rights or protection of racial-religious minorities, because if you disrupt the economy on those issues, the majority could easily distance themselves from you and not display solidarity. Gender issues are a mixed bag.

Sorry if that's not a helpful answer, but if you give me an example I can probably tailor the recommendations better.

Good points.

Small-scale targeted labour disputes tend not to have much broad support. The 2021 John Deere strike, for example, did not have broad societal support. There may have been some support from the wider labour movement, but there was also opposition from the local court system and from business groups/chambers of commerce, etc.

But it is hard to think of this happening at a larger scale, without broad support. I can't think of any examples.

There are examples I can think of where economic disruption has helped minority rights, although some of these surely had some level of popular societal support.

- In Bolivia, rural indigenous groups set up roadblocks that brought the economy to a standstill, and successfully pushed for delayed elections to go ahead.

- Recent constitutional changes in Chile, which include gender parity and indigenous issues, were the product of very disruptive protests.

- Longshoremen refusing to move cargo from South African ships during Apartheid.

- The ILWU's many activities, including desegregating work gangs. The union also opposed Japanese American internment and was involved in the civil rights movement, but I don't know if their particular efforts were effective.

- The Tailoress strikes which led to pay equality for women

Those are the sorts of examples on my mind.

Sources:

https://inthesetimes.com/article/dockworkers-ilwu-racism-workers-apartheid-black-freedom-movement-bay-area

https://www.actu.org.au/about-the-actu/history-of-australian-unions

This is awesome -- I've been wanting someone to do this research forever. Thank you :)

I'm glad you produced this. One thing I found annoying, though, was that you said:

"The evidence related to each outcome, and how we arrived at these values, are explained further in the respective sections below."

But they weren't? The report was only partially summarised here, with a link to your website. Why did you choose to do this?

Ah yes, thanks for pointing that out! That sentence is there because we literally copied it from the report's executive summary (where the sections are below). It's a fair point though, so I'll change it to reflect that they're not actually below.

I chose to not put the whole report on the forum just because it's so long (62 pages) and I was worried it would (a) put people off reading anything at all, (b) take me longer than what was reasonable to get all the formatting right and (c) provide a worse reading experience given it was all formatted for PDF/Google Docs (and that I wasn't going to spend loads of time formatting it perfectly).

Curious though - would you prefer to read / find it easier if it was all on the Forum, or what would be your preferred reading location for longer reports?

62 pages is quite long - I understand then why you wouldn't put it on the forum.

I really dislike reading PDFs, as I read most non work things on mobile, and on Chrome based web browsers they don't open in browser tabs, which is where I store everything else I want to read.

I think I'd prefer some web based presentation, ideally with something like one web page per chapter/ large section. I don't know if this is representative of others though.

I've made a Google Doc version if this is better - thanks for the feedback, it's been very useful!

There is a lot of text. Within the 62-page document there are further links to literature reviews, interviews, etc.

Could someone who has read through all of this (or did the research) give me a few of the most convincing empirical tests/findings that support protests being effective? I kind of want to see more numbers.

I'm gonna copy a section from the "Select Findings" header as you might find some of it useful. I'll link a few papers to back up each point but there is more in the lit review if you do want to look further.

Let me know if this is useful or if you've got more questions!

Oh right, I guess this would have been easy to find if I didn't skip straight to the report.