Epistemic status: speculative

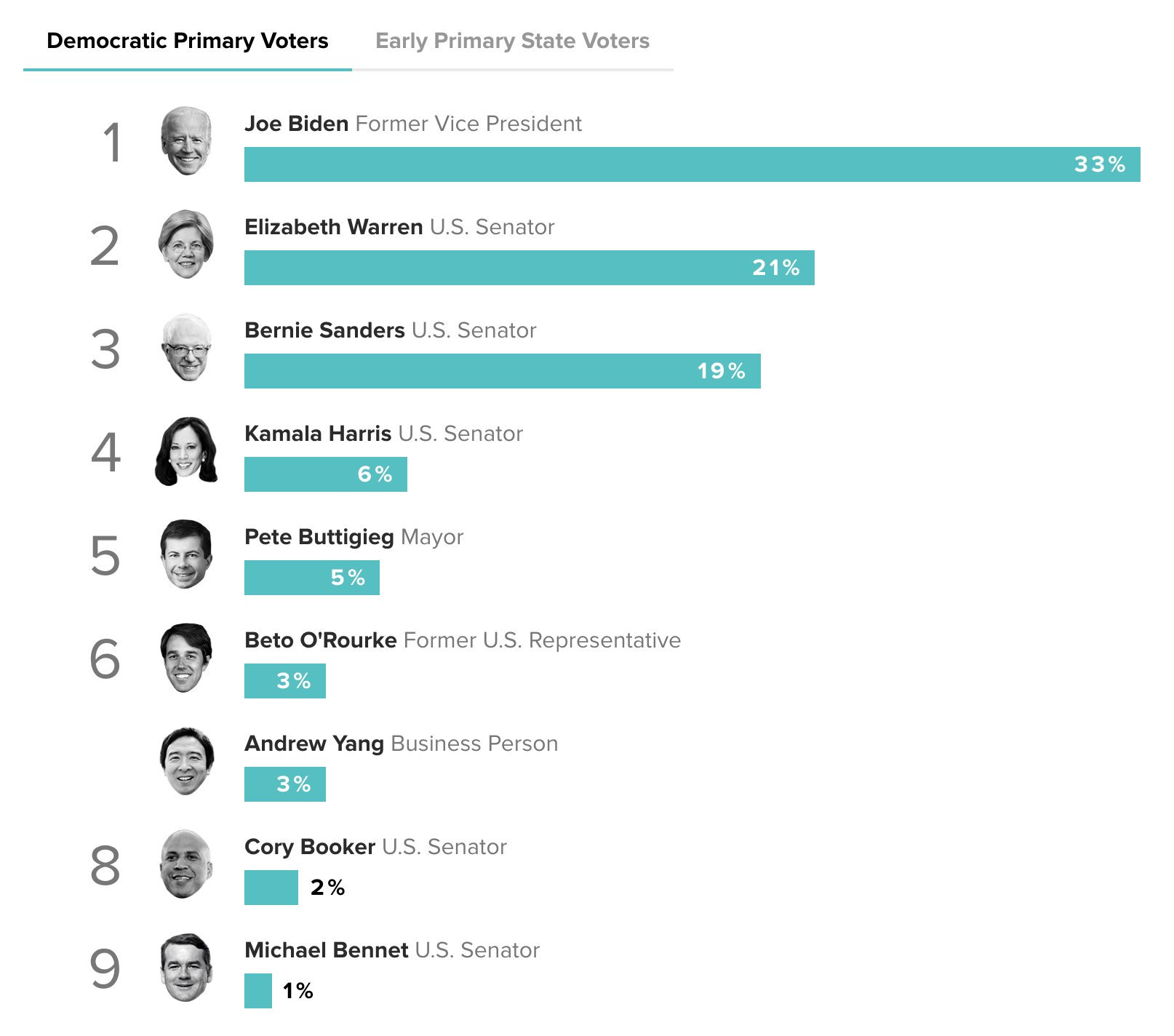

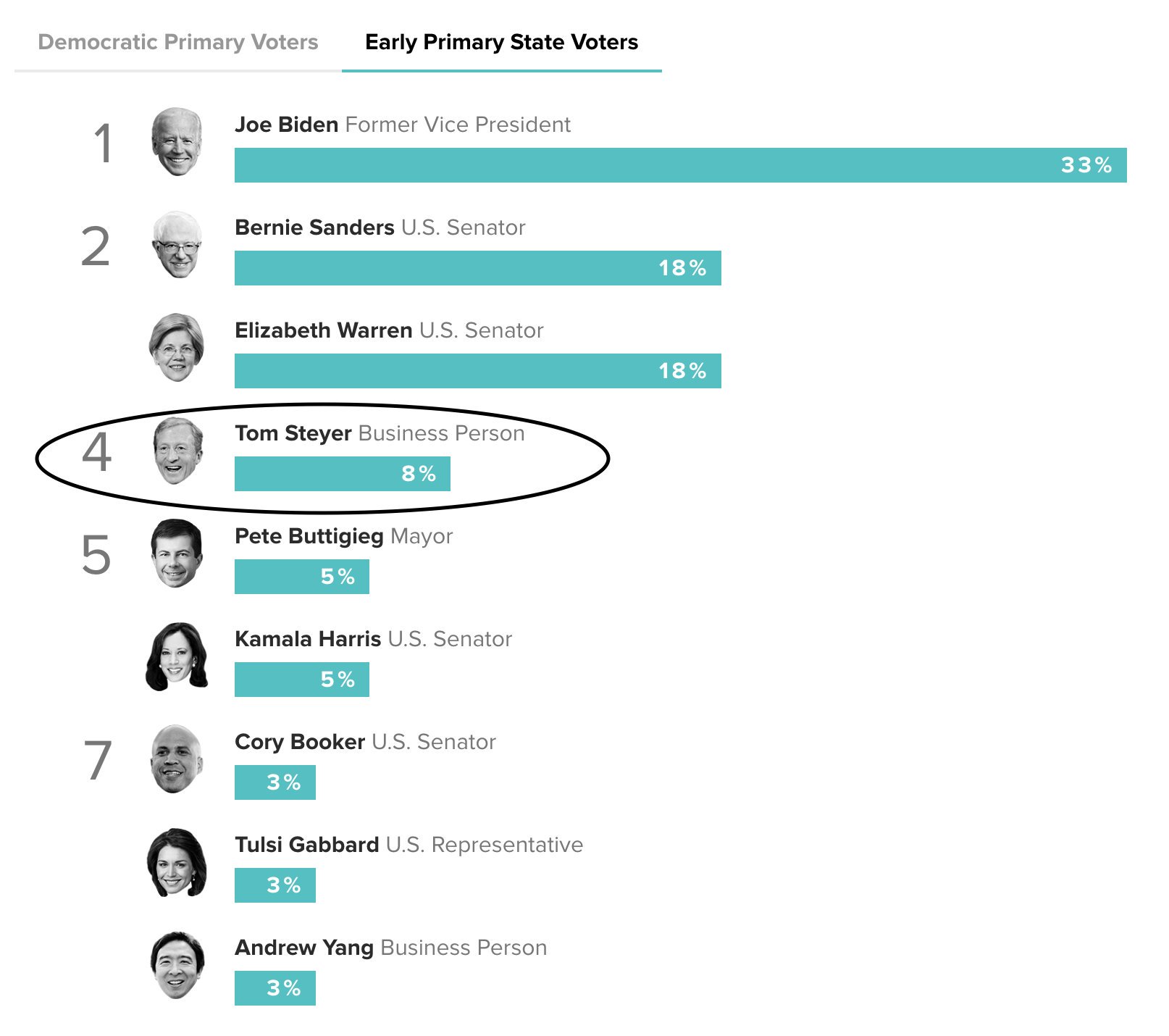

Andrew Yang understands AI X-risk. Tom Steyer has spent $7 million on ads in early primary states, and it has had a big effect:

If a candidate gets more than 15% of the vote in Iowa (in any given caucus), they get delegates. Doing that consistently in many caucuses would be an important milestone for outsider candidates. And I'm probably biased because I think many of his policies are correct, but I think that if Andrew Yang just becomes mainstream, and accepted by some "sensible people" after some early primaries, there's a decent chance he would win the primary. (And I think he has at least a 50% chance of beating Trump.) It also seems surprisingly easy to have an outsize influence in the money-in-politics landscape. Peter Thiel's early investment in Trump looks brilliant today (at accomplishing the terrible goal of installing a protectionist).

From an AI policy standpoint, having the leader of the free world on board would be big. This opportunity is potentially one that makes AI policy money-constrained rather than talent-constrained for the moment.

I think that we largely cannot give a president any actionable advice regarding AI alignment or policy, for the reasons outlined in Tom Kalil's interview with 80k. I wrote a post here with the relevant quotes. I can imagine this being good, but mostly it's unclear to me, and I can see a lot of ways for this to be strongly negative.

Are you saying that advocating for AI alignment would fail at this point?

Yes. I think mostly nobody knows what to do, a few people have a few ideas, but nothing that the president can help with.

OpenPhil largely has not been able to spend 5% of its $10 billion; more funding will not help. Nick Bostrom has no idea what policies to propose; he is still figuring out the basic concepts (unilateralist's curse, vulnerable world, etc.). His one explicit policy paper doesn't even recommend a single policy - again, it's just figuring out the basic concepts. I recall hearing that the last US president tried to get lots of AI policy things happening but nothing occurred. Overall it's not clear to me that the president is able to do useful stuff in the absence of good ideas for what to do.

I think that the most likely strongly negative outcome is that AI safety becomes attached to some standard policy tug-of-war and mostly people learn to read it as a standard debate between Republicans and Democrats (re: the candidate discussed in the OP, their signature claim is that automation of jobs is currently a major problem, which I'm not even sure is happening, and it'd sure be terrible if the x-risk perspective on AI safety and AI policy was primarily associated with that on a national or global scale).

There's a slightly different claim which I'm more open to arguments on, which is that if you run the executive branch you'll be able to make substantial preparations for policy later without making it a big talking point today, even if you don't really know what policy will be needed later. I imagine that CSET could be interested in having a president who shared a mood affiliation with them on AI, and probably they have opinions about what can be done now to prepare, though I don't actually know what any of those things are or whether they're likely to make a big difference in the long run. But if this is the claim, then I think you actually need to make the plan first before taking political action. Kalil's whole point is that 'caring about the same topic' will not carry you through - if you do not have concrete policies or projects, the executive branch isn't able to come up with those for you.

I think that someone in office who has a mood affiliation with AI and alignment and x-risk who thinks they 'need to do something' - especially if they promised that to a major funder - will be strongly net negative in most cases.

I don't think this is very likely (see my other comment) but also want to push back on the idea that this is "strongly negative".

Plenty of major policy progress has come from partisan efforts. Mobilizing a major political faction provides a lot of new support. This support is not limited to legislative measures, but also to small bureaucratic steps and efforts outside the government. When you have a majority, you can establish major policy; when you have a minority, you won't achieve that but still have a variety of tools at your disposal to make some progress. Even if the government doesn't play along, philanthropy can still continue doing major work (as we see with abortion and environmentalism, for instance).

A bipartisan idea is more agreeable, but also more likely to be ignored.

Holding everything equal, it seems wise to prefer being politically neutral, but it's not nearly clear enough to justify refraining from making policy pushes. Do we refrain from supporting candidates who endorse any other policy stance, out of fear that they will make it into something partisan? For instance, would you say this about Yang's stance to require second-person authorization for nuclear strikes?

It's an unusual view, and perhaps reflects people not wanting their personal environments to be sucked into political drama more than it reflects shrewd political calculation.

This is closer to what seems compelling to me.

Not so much "Oh man, Yang is ready to take immediate action on AI alignment!"

More "Huh, Yang is open to thinking about AI alignment being a thing. That seems good + different from other candidates."

This could be ameliorated by having the funder not extract any promises, and further by the funder being explicit that they're not interested in immediate action on the issue.

I'm not hearing any concrete plans for what the president can do, or why that position you quote is compelling to you.

To clarify again, I'm more compelled by Yang's openness to thinking about this sort of thing, rather than proposing any specific plan of action on it. I agree with you that specific action plans from the US executive would probably be premature here.

It's compelling because it's plausibly much better than alternatives.

[Edit: it'd be very strange if we end up preferring candidates who hadn't thought about AI at all to candidates who had thought some about AI but don't have specific plans for it.]

That doesn't seem that strange to me. It seems to mostly be a matter of timing.

Yes, eventually we'll be in an endgame where the great powers are making substantial choices about how powerful AI systems will be deployed. And at that point I want the relevant decision makers to have sophisticated views about AI risk and astronomical stakes.

But in the decades before that final period, I probably prefer that governmental actors not really think about powerful AI at all because...

1. There's not much that those governmental actors can usefully do at this time.

2. The more discussion of powerful AI there is in the halls of government, the more likely someone is to take action.

Given that there's not much that can be usefully done, it's almost a tautology that any action taken is likely to be net-negative.

Additionally, there are specific reasons to think that governmental action is likely to be more bad than good.

My overall crux here is point #1, above. If I thought that there were concrete helpful things that governments could do today, I might very well think that the benefits outweighed the risks that I outline above.

I think that's too speculative a line of thinking to use for judging candidates. Sure, being intelligent about AI alignment is a data point for good judgment more generally, but so is being intelligent about automation of the workforce, and being intelligent about healthcare, and being intelligent about immigration, and so on. Why should AI alignment in particular be a litmus test for rational judgment? We may perceive a pattern with more explicitly rational people taking AI alignment seriously while patently anti-rational people dismiss it, but that's a unique feature of some elite liberal circles like those surrounding EA and the Bay Area; in the broader public sphere there are plenty of unexceptional people who are concerned about AI risk and plenty of exceptional people who aren't.

We can tell that Yang is open to stuff written by Bostrom and Scott Alexander, which is nice, but I don't think that's a unique feature of Rational people; I think it's shared by nearly everyone who isn't afflicted by one or two particular strands of tribalism - tribalism which seems to be more common in Berkeley or in academia than in the Beltway.

Totally agree that many data points should go into evaluating political candidates. I haven't taken a close look at your scoring system yet, but I'm glad you're doing that work and think more in that direction would be helpful.

For this thread, I've been holding the frame of "Yang might be a uniquely compelling candidate to longtermist donors (given that most of his policies seem basically okay and he's open to x-risk arguments)."

If you read it, go by the 7th version as I linked in another comment here - most recent release.

I'm going to update on a single link from now on, so I don't cause this confusion anymore.

moved comment to another spot.

The other thing is that in 20 years, we might want the president on the phone with very specific proposals. What are the odds they'll spend a weekend discussing AGI with Andrew Yang if Yang used to be president vs. if he didn't?

But as for what a president could actually do: create a treaty for countries to sign that bans research into AGI. Very few researchers are aiming for AGI anyway. Probably the best starting point would be to get the AI community on board with such a thing. It seems impossible today that consensus could be built about such a thing, but the presidency is a large pulpit. I'm not talking about making public speeches on the topic; I mean inviting the most important AI researchers to the White House to chat with Stuart Russell and some other folks. There are so many details to work out that we could go back and forth on, but that's one possibility for something that would be a big deal if it could be made to work.

You only mean this as a possibility in the future, if there is any point where AGI is believed to be imminent, right?

Still, I think you are really overestimating the ability of the president to move the scientific community. For instance, we've had two presidents now who actively tried to counteract mainstream views on climate change, and they haven't budged climate scientists at all. Of course, AI alignment is substantially more scientifically accepted and defensible than climate skepticism. But the point still stands.

They may not have budged climate scientists, but there are other ways they may have influenced policy. Did they (or other partisans) alter the outcomes of Washington Initiative 1631 or 732? That seems hard to evaluate.

Yes, policy can be changed for sure. I was just referring to actually changing minds in the community, as he said - "Probably the best starting point would be to get the AI community on board with such a thing. It seems impossible today that consensus could be built about such a thing, but the presidency is a large pulpit."

I have updated in your direction.

Yep.

No, I meant starting today. My impression is that coalition-building in Washington is tedious work. Scientists agreed to avoid gene editing in humans well before it was possible (I think). In part, that might have made it easier since the distantness of it meant fewer people were researching it to begin with. If AGI is a larger part of an established field, it seems much harder to build a consensus to stop doing it.

FWIW I don't think that would be a good move. I don't feel like fully arguing it now, but the main points are: (1) sooner AGI development could well be better despite risk, (2) such restrictions are hard to reverse for a long time after the fact, as the story of human gene editing shows, (3) AGI research is hard to define - arguably, some people are doing it already.

Can you expand on this?

Making AI Alignment into a highly polarized partisan issue would be an obvious one.

I don't think that making alignment a partisan issue is a likely outcome. The president's actions would be executive guidance for a few agencies. This sort of thing often reflects partisan ideology, but doesn't cause it. And Yang hasn't been pushing AI risk as a strong campaign issue; he has only acknowledged it modestly. If you think that AI risk could become a partisan battle, you might want to ask yourself why automation of labor - Yang's loudest talking point - has NOT become subject to partisan division (even though some people disagree with it).

My (relatively weak and mostly intuitive) sense is that automation of labor and surrounding legislation has become a pretty polarized issue on which rational analysis has become quite difficult, so I don't think this seems like a good counterexample.

By 'polarized partisan issue' do you merely mean that people have very different opinions and settle into different camps and make it hard for rational dialogue across the gap? That comes about naturally in the process of intellectual change, it has already happened with AI risk, and I'm not sure that a political push will worsen it (as the existing camps are not necessarily coequal with the political parties).

I was referring to the possibility that, for instance, Dems and the GOP take opposing party lines on the subject and fight over it. Which definitely isn't happening.

Right. Important to clarify that I'm more compelled by Yang's open-mindedness & mood affiliation than the particular plan of calling a lot of partisan attention to AI.

If you are looking at presidential candidates, why restrict your analysis to AI alignment?

If you're super focused on that issue, then it will definitely be better to spend your money on actual AI research, or on some kind of direct effort to push the government to consider the issue (if such an effort exists).

When judging among the presidential candidates, other issues matter too! And in this context, they should be weighted more by their sheer importance than by philanthropic neglectedness. So AI risk is not obviously the most important.

With some help from other people I comprehensively reviewed 2020 candidates here: https://t.co/kMby2RDNDx

The conclusion is that yes, Yang is one of the best candidates to support - alongside Booker, Buttigieg, and Republican primary challengers. Partially due to his awareness of AI risk. But in the updates I've made for the 8th edition (and what I'm about to change now, seeing some other comments here about the lack of tractability for this issue), Buttigieg seems to move ahead to being the best Democrat by a small margin. Of course these judgments are pretty uncertain so you could argue that they are wrong if you find some flaw or omission in the report. Very early on, I decided that both Yang and Buttigieg were not good candidates, but that changed as I gathered new information about them.

But it's wrong to judge a presidential candidate merely by their point of view on any single issue, including AI alignment.

I am, and that's what I'm wondering. The "definitely" isn't so obvious to me. Another $20 million to MIRI vs. an increase in the probability of Yang's presidency by, let's say, 5%--I don't think it's clear cut. (And I think MIRI is the best place to fund research).

What about simply growing the EA movement? That clearly seems like a more efficient way to address x-risk, and something where funding could be used more readily.

That is plausible. But "definitely" definitely wouldn't be called for when comparing Yang with Grow EA. How many EA people who could be sold on an AI PhD do you think could be recruited with $20 million?

I meant that it's definitely more efficient to grow the EA movement than to grow Yang's constituency. That's how it seems to me, at least. It takes millions of people to nominate a candidate.

Well, there are >100 million people who have to join some constituency (i.e. pick a candidate), whereas potential EA recruits aren't otherwise picking between a small set of ~~cults~~ philosophical movements. Also, AI PhD-ready people are in much shorter supply than, e.g., Iowans, and they'd be giving up much much much more than someone just casting a vote for Andrew Yang.

There are numerous minor, subtle ways that EAs reduce AI risk. Small in comparison to a research career, but large in comparison to voting. (Voting can actually be one of them.)

Hey Kbog,

Your analysis seems to rely heavily on the judgement of r/neoliberal.

Can you explain to a dummy like me: isn't this a serious weak point in your analysis?

I'm sure this is overly simplifying things, but I would have thought that actually it's the social democracies which follow very technocratic Keynesian economics that produce better economic outcomes (idk, greater growth, less unemployment, more entrepreneurship, haha I have no idea how true any of this is tbh - I just presume). Especially now, considering that the US/globe is facing possible recession, I would think fiscal stimulus would be even more ideal.

I can't seem to find anywhere that neoliberalism holds some kind of academic consensus.

Very little, actually. Only time I actually cite the group is the one thread where people are discussing baby bonds.

It's true that in many cases, we've arrived at similar points of view, but I have real citations in those cases.

As I say in the beginning of the "evaluations of sources" sections, a group like that is more for "secondary tasks such as learning different perspectives and locating more reliable sources." The kind of thing that usually doesn't get mentioned at all in references. I'm just doing more work to make all of it explicit.

And frankly I don't spend much time there anyway. I haven't gone to their page in months.

Do you think that they are poor even by the standards of social media groups? Like, compared to r/irstudies or others?

It seems like most economists approve of fiscal stimulus in times of recession (New Keynesianism), but don't believe in blasting stimulus all the time (which would be post-Keynesianism). I'm a little unsure of the details here and may be oversimplifying. Frankly, it's beyond my credentials to get into the weeds of macroeconomic theory (though if someone has such credentials, they are welcome to become involved...). I'm more concerned about the judgment of specific policy choices - it's not like any of the candidates have expressed views on macroeconomic theory, they just talk about policies.

I found research indicating that countries with more public spending have less economic growth:

https://journalistsresource.org/wp-content/uploads/2011/08/Govt-Size-and-Growth.pdf

http://rcea.org/RePEc/pdf/wp18-01.pdf

Perhaps the post-Keynesian economists have some reasons to disagree with this work, but I would need to see that they have some substantive critique or counterargument.

In any case, if 90% of the field believes something and 10% disagrees, we must still go by the majority, unless somehow we discover that the minority really has all the best arguments and evidence.

Of course, lower economic growth doesn't by itself mean that a policy is bad. Sometimes it's okay to sacrifice growth for things like redistribution and public health. But remember that (a) we are really focusing on the long run here, where growth is a bit more important, and (b) we also have to consider the current fiscal picture. Debt in the US is quite bad right now, and higher spending would worsen the matter.

Candidates are going to come into office in January 2021 - no one has a clue what the economy will look like at that time. Now if a candidate says "it's good to issue large economic stimulus packages in times of recession," I suppose I would give them points for that, but none have recently made such a statement as far as I know. For those politicians who were around circa 2009, I could look to see whether they endorsed the Recovery and Reinvestment Act (and, on a related note, TARP)... maybe I will add that, now that you mention it, I'll think about it/look into it.

So sorry for replying so late - I've been caught up with life/exams.

Yes, sorry I did misrepresent you by thinking you relied heavily on r/neoliberal.

From digging around I totally understand and sympathise with why you chose to rely partly on r/neoliberal - there are surprisingly few places where economic discussion is occurring on the primary election where it is accessible to non-economists. But nonetheless they are a subreddit.

In a lot of your analysis though, you do seem to caricature Keynesian economics as non-mainstream. Is that ... true? I don't think this is at all correct. Isn't Keynesian economics the backbone of mainstream economics alongside neoclassical economics?

I have some other points; I will try to run them by you later.

Can I say one thing: thank you for championing OneDrive. My God, I am so sick to death of Google Drive. It is a blight upon our species. HAHA

In the old version of the report, in maybe a couple of sentences, I incorrectly conflated the status of Keynesianism in general with Post-Keynesianism in particular. In reality, New Keynesianism is accepted whereas Post-Keynesian ideas are heterodox, as I describe in the above comment. I have already updated the language in revisions. But this error of mine didn't matter anyway because I wasn't yet judging politicians for their stances on economic stimulus bills (although it is something to be added in the future). If I had been judging politicians on Keynesian stimulus then I would have looked more carefully before judging anything.

If Post-Keynesian ideas are correct, that could change a lot of things because it would mean that lots of government spending all the time will stimulate the economy. However, I am pretty sure this is not commonly accepted.

I am glad you agree on Drive vs OneDrive.

[I am not an expert on any of this.]

Is that tweet the only (public) evidence that Andrew Yang understands/cares about x-risk?

A cynical interpretation of the tweet is that we learned that Yang has one (maxed out) donor who likes Bostrom.

My impression is that: 1) it'd be very unusual for somebody to understand much about x-risk from one phone call; 2) sending out an enthusiastic tweet would be the polite/savvy thing to do after taking a call that a donor enthusiastically set up for you; 3) a lot of politicians find it cool to spend half an hour chatting with a famous Oxford philosophy professor with mind-blowing ideas. I think there are a lot of influential people who'd be happy to take a call on x-risk but wouldn't understand or feel much different about it than the median person in their reference class.

I know virtually nothing about Andrew Yang in particular and that tweet is certainly *consistent* with him caring about this stuff. Just wary of updating *too* much.

On Yang's site (a):

Cool. That's a bit more distinctive although not more than Hillary Clinton said in her book.

https://lukemuehlhauser.com/hillary-clinton-on-ai-risk/

Yeah, though from my quick look it's not mentioned on her 2016 campaign site: 1, 2

I am in general more trusting, so I appreciate this perspective. I know he's a huge fan of Sam Harris and has historically listened to his podcast, so I imagine he's heard Sam's thoughts (and maybe Stuart Russell's thoughts) on AGI.

Presumably should say "that Yang has one (maxed out) donor who likes Bostrom"

Thanks

If I recall correctly, Weinstein & Yang talk about apocalyptic / dark future stuff a bit during their interview, but not about x-risk specifically.

Independent of the desirability of spending resources on Andrew Yang's campaign, it's worth mentioning that this overstates the gains to Steyer. Steyer is running ads with little competition (which makes ad effects stronger), but the reason there is little competition is that decay effects are large; voters will forget about the ads and see new messaging over time. Additionally, Morning Consult shows higher support than all other pollsters. The average for Steyer in early states is considerably less favorable.

Good to know.

Really?

Yes. People aren't spending much money yet because people will mostly forget about it by the election.

Thanks for this!

I think it's a great hypothesis and deserves careful consideration by EA. (Is any other presidential candidate taking AI alignment seriously? Would any other candidate take a meeting with Bostrom?)

Seems especially compelling when coupled with Scott's recent observation that there's actually not that much money in politics and Carl's observation that systemic change can be done effectively.

Here's an archived version of Yang's tweet in case the original goes away, is altered, etc.

1) Thx, I have Twitter blocked, now I get to see the tweet.

2) There is a distinction between "understands the technical reasons why a civilization should focus on extinction risks" and "knows the relevant rallying flags to tweet", and people running for elections often explicitly try to make you falsely infer the former type of thing from the latter.

Sure. I think having a presidential candidate using AI alignment as a rallying flag is a good thing (i.e. should at least lead to vetting whether he's doing the former or the latter).

Feels relevant to note that Cari & Dustin donated $20M to Hillary & Democratic efforts in late 2016.

Certainly a different situation, though my model is that smaller donations earlier are more leveraged.

Can you elaborate on this?

Is your claim that AI policy is currently talent-constrained, and having Yang as president would lead to more people working on it, thereby making it money-constrained?

No--just that there's perhaps a unique opportunity for cash to make a difference. Otherwise, it seems like orgs are struggling to spend money to make progress in AI policy. But that's just what I hear.

First pass: power is good. Second pass: get practice doing things like autonomous weapons bans, build a consensus around getting countries to agree to international monitoring of software, get practice doing that monitoring, negotiate minimally invasive ways of doing this that protect intellectual property, devote resources to AI safety research (and normalize the field), and ten more things I haven't thought of.

This is naive. The low amount of money in politics is presumably an equilibrium outcome, and not because everyone has failed to consider the option of buying elections. And the reasonable conclusion is that Thiel got lucky, given how close the election was, not that he single-handedly caused Trump's victory.

The stake of the public good in any given election is much larger than the stake of any given entity, so the correct amount for altruists to invest in an election should be much larger than for a self-interested corporation or person.

Didn't claim this.

Not sure what this adds.

Yes, you're right that altruists have a more encompassing utility function, since they focus on social instead of individual welfare. But even if altruists will invest more in elections than self-interested individuals, it doesn't follow that it's a good investment overall.

Sorry for being harsh, but my honest first impression was "this makes EAs look bad to outsiders".

Yes, it doesn't by itself--my point was only meant as a counterargument to your claim that the efficient market hypothesis precluded the possibility of political donations being a good investment.