Epistemic status: speculative

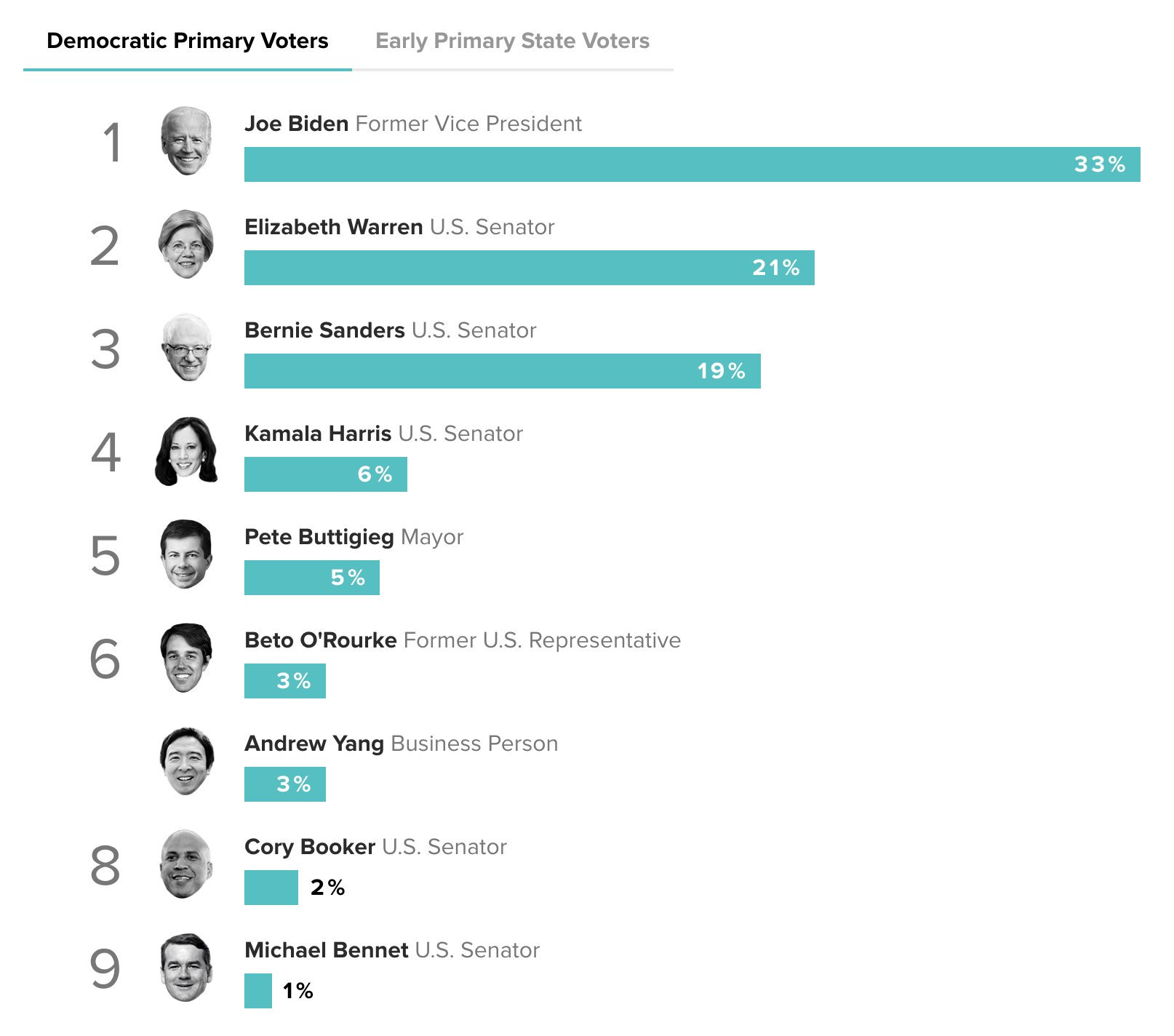

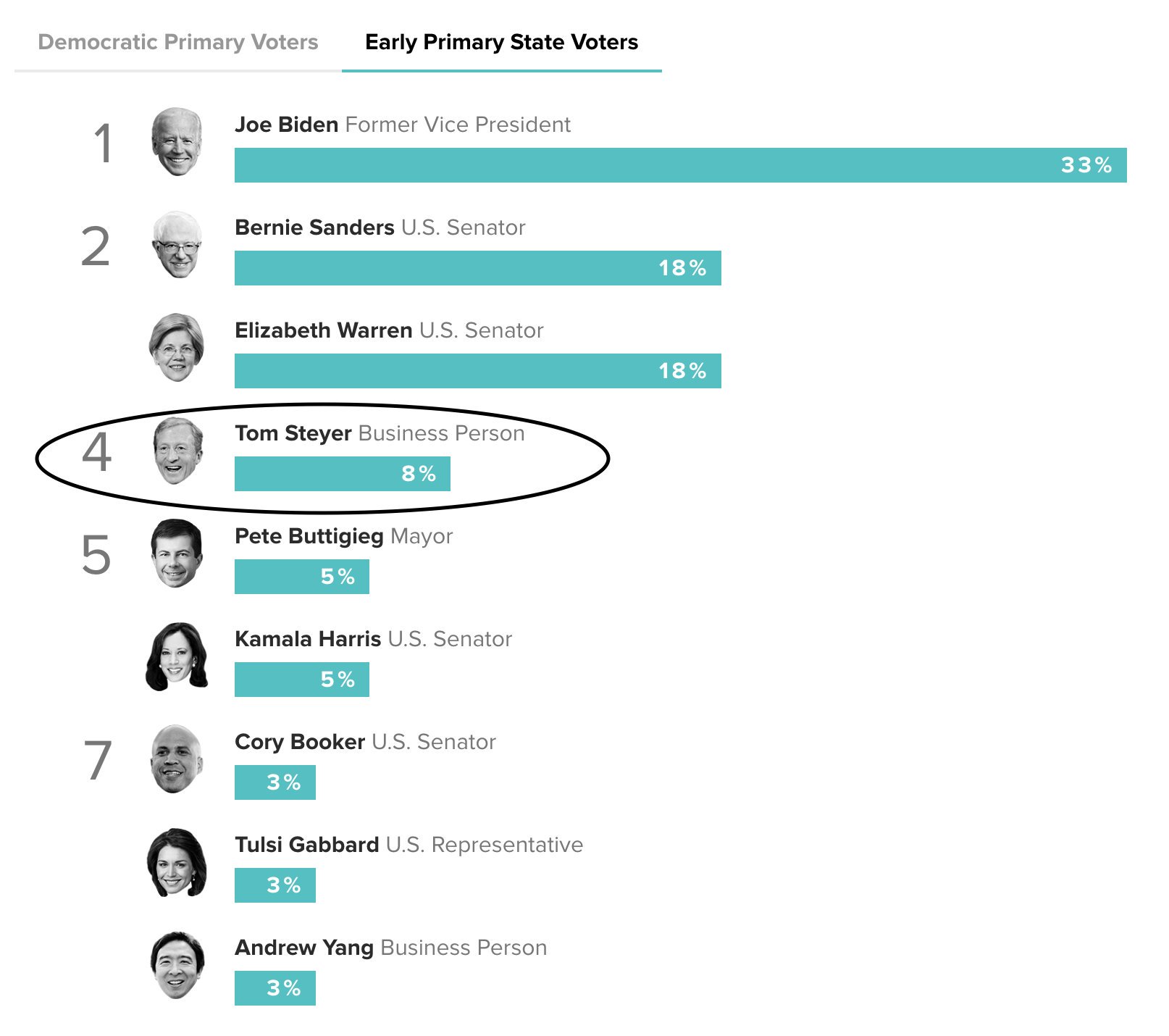

Andrew Yang understands AI x-risk. Tom Steyer has spent $7 million on ads in early primary states, and it has had a big effect.

If a candidate gets more than 15% of the vote in Iowa (in any given caucus), they get delegates. Doing that consistently across many caucuses would be an important milestone for outsider candidates. I'm probably biased because I think many of his policies are correct, but I think that if Andrew Yang becomes mainstream and is accepted by some "sensible people" after a few early primaries, there's a decent chance he would win the primary (and I think he has at least a 50% chance of beating Trump). It also seems surprisingly easy to have an outsize influence in the money-in-politics landscape. Peter Thiel's early investment in Trump looks brilliant today (at accomplishing the terrible goal of installing a protectionist).

From an AI policy standpoint, having the leader of the free world on board would be big. This opportunity could, for the moment, make AI policy money-constrained rather than talent-constrained.

[I am not an expert on any of this.]

Is that tweet the only (public) evidence that Andrew Yang understands/cares about x-risk?

A cynical interpretation of the tweet is that we learned that Yang has one (maxed out) donor who likes Bostrom.

My impression is that: 1) it'd be very unusual for somebody to understand much about x-risk from one phone call; 2) sending out an enthusiastic tweet would be the polite/savvy thing to do after taking a call that a donor enthusiastically set up for you; 3) a lot of politicians find it cool to spend half an hour chatting with a famous Oxford philosophy professor with mind-blowing ideas. I think there are a lot of influential people who'd be happy to take a call on x-risk but wouldn't understand it or feel much differently about it than the median person in their reference class.

I know virtually nothing about Andrew Yang in particular, and that tweet is certainly *consistent* with him caring about this stuff. I'm just wary of updating *too* much.

I am generally more trusting, so I appreciate this perspective. I know he's a huge fan of Sam Harris and has historically listened to his podcast, so I imagine he's heard Sam's thoughts (and maybe Stuart Russell's thoughts) on AGI.