Epistemic status: speculative

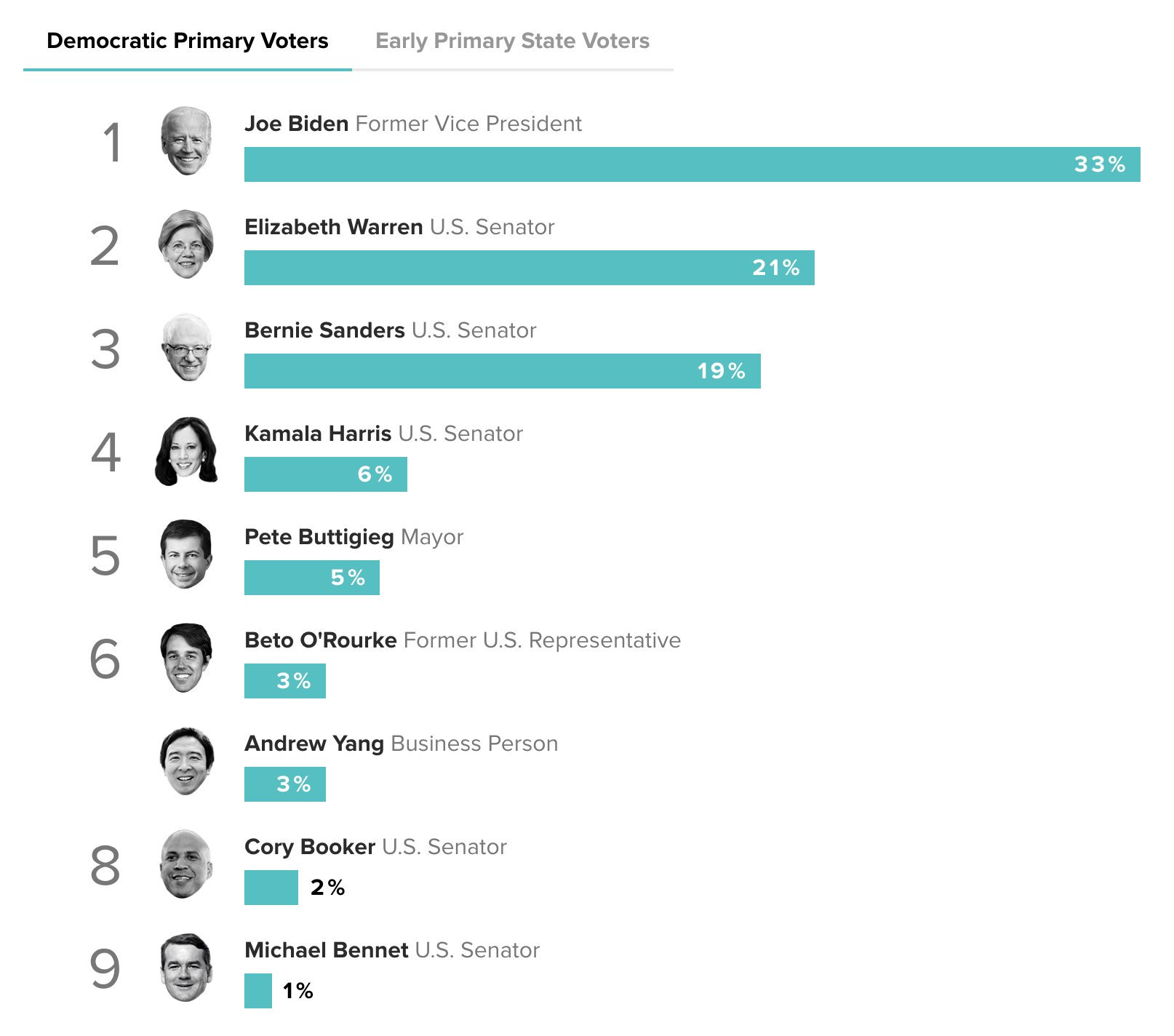

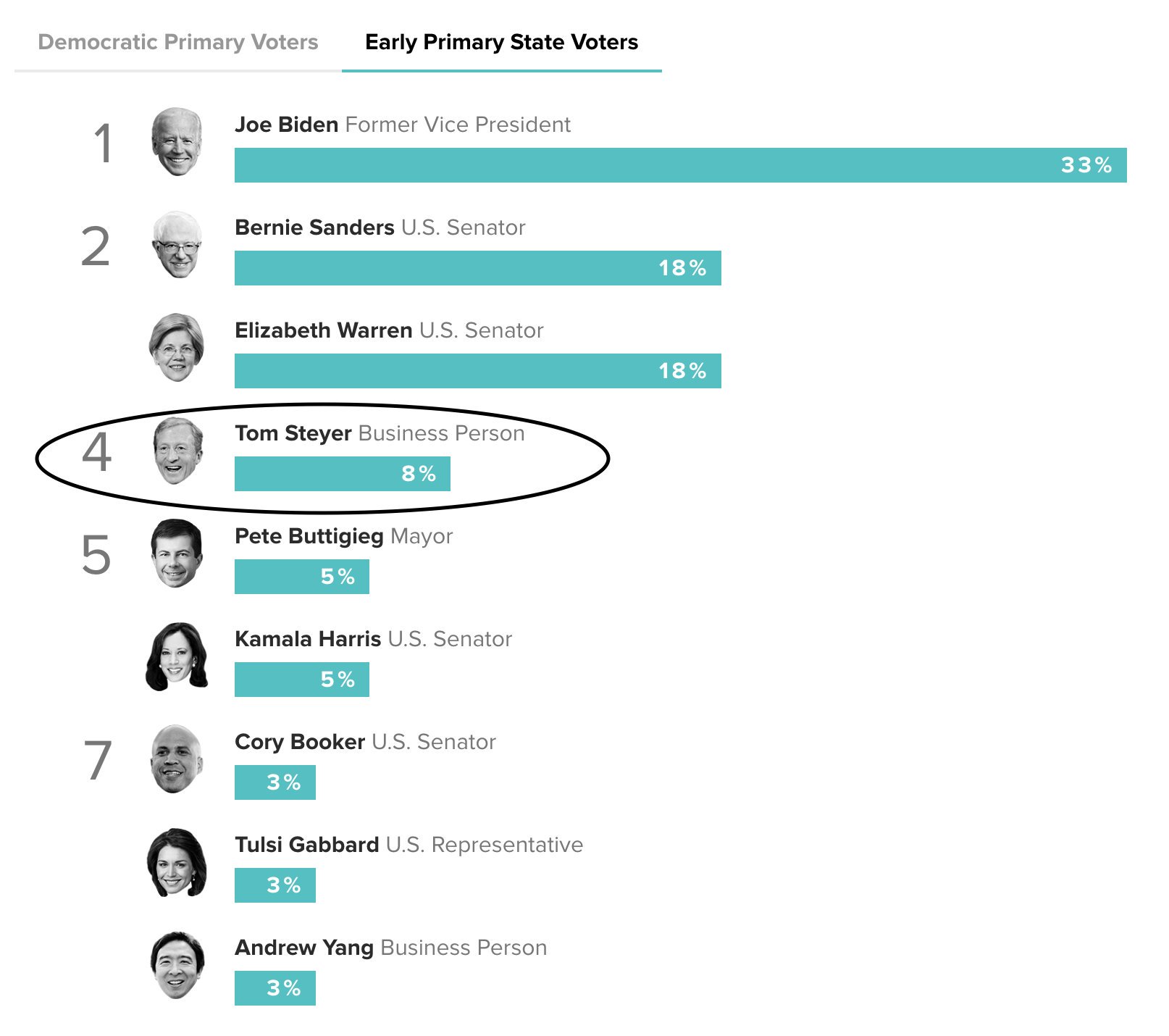

Andrew Yang understands AI X-risk. Tom Steyer has spent $7 million on ads in early primary states, and it has had a big effect:

If a candidate gets more than 15% of the vote in Iowa (in any given caucus), they get delegates. Doing that consistently in many caucuses would be an important milestone for outsider candidates. I'm probably biased because I think many of his policies are correct, but I think that if Andrew Yang just becomes mainstream, and is accepted by some "sensible people" after some early primaries, there's a decent chance he would win the primary. (And I think he has at least a 50% chance of beating Trump.) It also seems surprisingly easy to have an outsize influence in the money-in-politics landscape. Peter Thiel's early investment in Trump looks brilliant today (at accomplishing the terrible goal of installing a protectionist).

From an AI policy standpoint, having the leader of the free world on board would be big. This opportunity is potentially one that makes AI policy money-constrained rather than talent-constrained for the moment.

Yes. I think mostly nobody knows what to do, a few people have a few ideas, but nothing that the president can help with.

OpenPhil has largely not been able to spend 5% of its $10 billion, so more funding will not help. Nick Bostrom has no idea what policies to propose; he is still figuring out the basic concepts (unilateralist's curse, vulnerable world, etc). His one explicit policy paper doesn't even recommend a single policy - again, it's just figuring out the basic concepts. I recall hearing that the last US president tried to get lots of AI policy things happening but nothing occurred. Overall it's not clear to me that the president is able to do useful stuff in the absence of good ideas for what to do.

I think that the most likely strongly negative outcome is that AI safety becomes attached to some standard policy tug-of-war and most people learn to read it as a standard debate between Republicans and Democrats. (Re: the candidate discussed in the OP, their signature claim is that automation of jobs is currently a major problem, which I'm not even sure is happening, and it'd sure be terrible if the x-risk perspective on AI safety and AI policy were primarily associated with that on a national or global scale.)

There's a slightly different claim which I'm more open to arguments on, which is that if you run the executive branch you'll be able to make substantial preparations for policy later without making it a big talking point today, even if you don't really know what policy will be needed later. I imagine that CSET could be interested in having a president who shared a mood affiliation with them on AI, and probably they have opinions about what can be done now to prepare, though I don't actually know what any of those things are or whether they're likely to make a big difference in the long run. But if this is the claim, then I think you actually need to make the plan first before taking political action. Kalil's whole point is that 'caring about the same topic' will not carry you through - if you do not have concrete policies or projects, the executive branch isn't able to come up with those for you.

I think that someone in office who has a mood affiliation with AI and alignment and x-risk who thinks they 'need to do something' - especially if they promised that to a major funder - will be strongly net negative in most cases.