Epistemic status: speculative

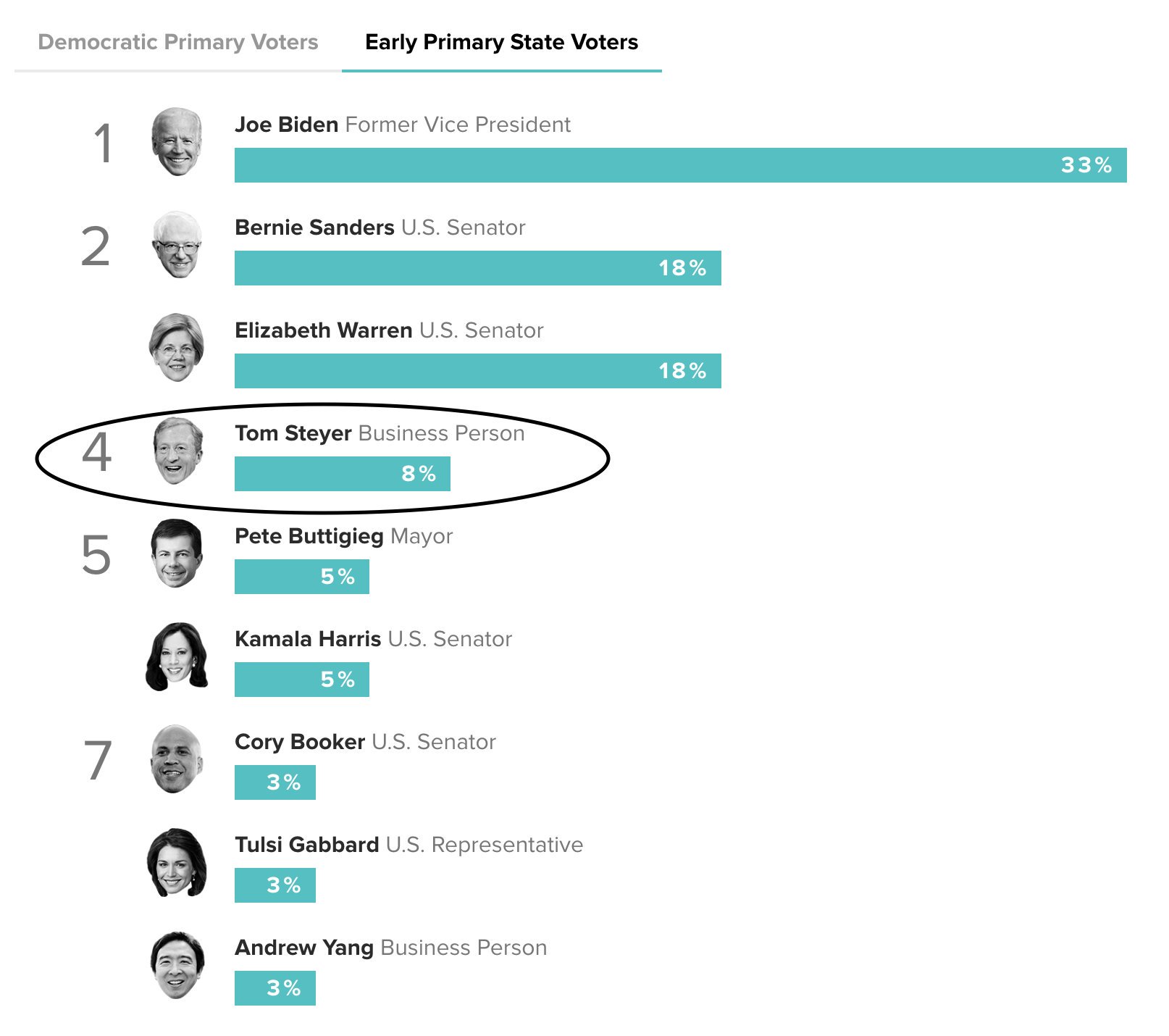

Andrew Yang understands AI X-risk. Tom Steyer has spent $7 million on ads in early primary states, and it has had a big effect:

If a candidate gets at least 15% of the vote in Iowa (in any given caucus), they get delegates. Doing that consistently across many caucuses would be an important milestone for an outsider candidate. I'm probably biased because I think many of his policies are correct, but I think that if Andrew Yang just becomes mainstream and is accepted by some "sensible people" after some early primaries, there's a decent chance he would win the primary. (And I think he has at least a 50% chance of beating Trump.) It also seems surprisingly easy to have an outsize influence in the money-in-politics landscape. Peter Thiel's early investment in Trump looks brilliant today (at accomplishing the terrible goal of installing a protectionist).

From an AI policy standpoint, having the leader of the free world on board would be big. This opportunity potentially makes AI policy money-constrained rather than talent-constrained for the moment.

No, just that there's perhaps a unique opportunity for cash to make a difference. Otherwise, it seems like orgs are struggling to spend money to make progress in AI policy. But that's just what I hear.

First pass: power is good. Second pass: get practice doing things like autonomous weapons bans, build a consensus around getting countries to agree to international monitoring of software, get practice doing that monitoring, negotiate minimally invasive ways of doing this that protect intellectual property, devote resources to AI safety research (and normalize the field), and ten more things I haven't thought of.