I think I'm gonna leave it a bit longer before actually doing this second step, sorry.

I reckon it's too soon to do this, given what we don't know. I'll probably wait a bit.

Summary

I am running the listening exercise I'd like to see.

- Get popular suggestions

- Run a polis poll on those suggestions and things around them [This Post]

- Make a Google Doc where we research consensus suggestions, near-consensus suggestions, and suggestions with consensus within specific groups

- Poll again

Here is a Polis poll based on yesterday's comments. The aim is to find out why people like these suggestions. What underlies our motivations? Are there better ways to get the same results? By understanding what we think about these things, we can reason about them better.

Polis Poll https://pol.is/5kfknjc9mj

My thoughts

I often see people wish they had been listened to. But most listening processes are hard to engage with and amplify only the loudest voices. This aims to be a bit different. It should take about 30 minutes in total across the 4 stages and will hopefully produce some suggestions that generalise across the community. It will also be slow enough to leave room for thought, so that we avoid reactionary responses. It's not perfect, but I think it's better.

Report

The full report is here: https://pol.is/report/r6r5kkdfz3kwpfankmkmb

Visualisation

Group A cares more about X-risk and is concerned that scrutinising decision-makers might lead them to make worse choices.

Group B wants more transparency and more critics at EAG, and dislikes the work with under-18s.

Issue by issue:

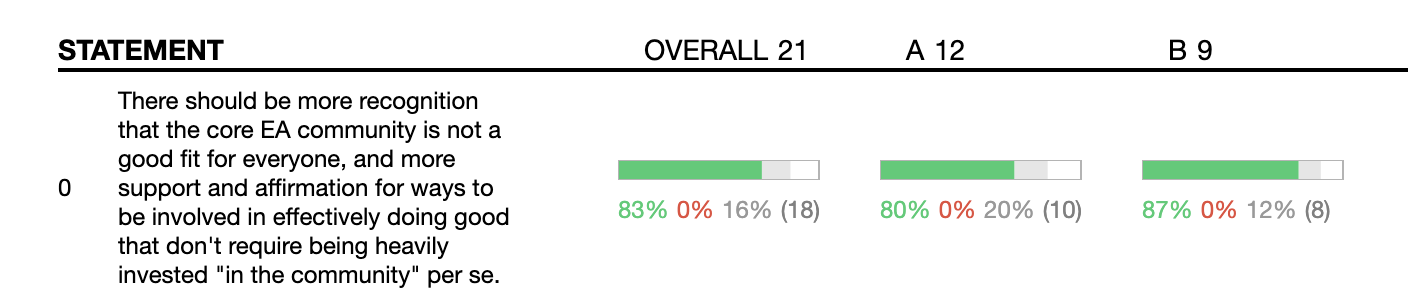

The Community isn't a good fit for everyone

This was the top comment from yesterday's request for comments.

Literally no one has disagreed with this so far in today's Polis poll.

I guess it's a bit vague. How could there be more of this?

Most respondents thought that GWWC could be a way to do this.

Relatedly, 55% of respondents in group B say they identify with exhaustion when thinking about more community engagement, and 44% say they think their careers have been disadvantaged. This feels like room for a representative poll looking into this in more detail.

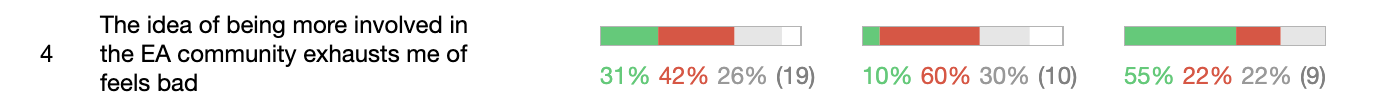

Will MacAskill stepping back

The second most-upvoted comment was about Will MacAskill taking a smaller role[1].

I added a few comments around this to tease apart what people were feeling.

These questions are pretty polarising between the two groups. Group B is much more negative in every case. I tried to isolate why people wanted Will to reduce his visibility.

Can you think of anything else that might be relevant here?

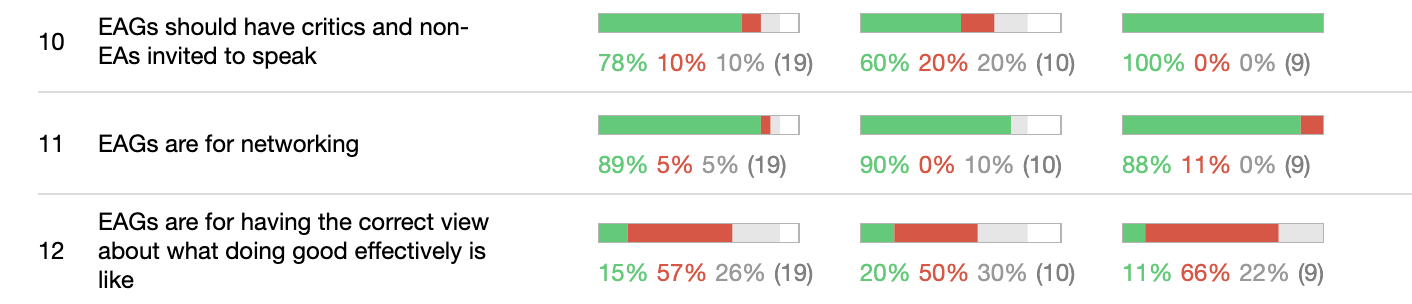

EAGs and Critics

This one seems pretty trivial. Almost everyone seems to agree with it, so much so that I'm confused why this hasn't happened more already. I think Zoe Cremer was the first person I saw suggest this, so props to her.

I tried to isolate why some might not like this suggestion. Perhaps it's because they see EAGs as primarily for networking? Maybe it's something else. Suggestions please.

Note also how people feel about different groups of critics.

While most respondents thought that 80k should have more critical voices, no group of critics got more than 50% approval from group A. And even in the more critical group B, few members wanted Gebru and Torres. I guess people don't know who Bender and Lenton are.

I suspect it's hard to find critics you like who are also going to be genuinely critical.

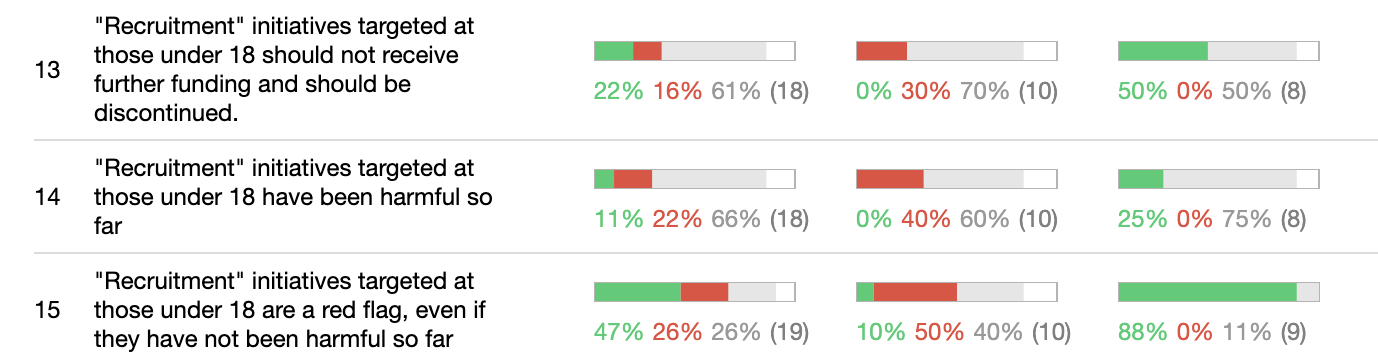

Work with Teenagers

Another popular comment was the one below.

People were deeply unsure about these and split more or less along group lines, but I still think this shows a surprising lack of support for an EA activity. What could reasonable next steps for the community be here?

I will do some more of this later.

[1] I note that it's uncomfortable to even type that. Take from that what you will.

Can we get the Polis report* too?

*I can't help but wonder if this potential pun is the reason they chose this name

Who is the most underrated critic of EA who EAs should engage with more? (one name per answer)

Larry Temkin is a decent candidate. I think he has plenty of misunderstandings about EA broadly, but he also defends many views that are contrary to common EA approaches and wrote a whole book about his perspective on philanthropy. As far as philosopher critics go, he is a decent mixture of a) representing a variety of perspectives unpopular among EAs and b) doing so in a rigorous and analytic way that EAs are reasonably likely to appreciate. In particular, he has been socially close to many EA philosophers, especially Derek Parfit.

Anand Giridharadas

What concrete steps could the EA community take to signal that it's not a good fit for everyone and that there are different levels of useful commitment?

Can people who missed the post yesterday add more suggestions?

Yes, though maybe add them to both. I may remove them, though, because the last Polis poll had way too many suggestions, so I'm trying to focus on those that have consensus.

Fair in theory, but I think some core issues are missing from this poll as it stands. I tried to add them (and at least some got upvotes), and I wonder how many votes they got before being removed.

What does a good whistleblowing process look like? Aim to write it succinctly

Really weird to group together Gebru and Torres.

I don't think so. They often retweet and cite each other.

When I wrote yesterday's comment (about the core community not being a good fit for everyone), I think I had a few things in mind, one of which is captured well in the GWWC-related comment about different levels of involvement. The other two, which are more difficult to make concrete, were:

I agree with you in theory, but in practice I'm worried that the first bullet point will serve as an excuse to make EA culture even less inclusive.

One day doesn't seem very long for collecting topics? I guess you got a decent amount of engagement, though.

Suggestion: I think building epistemic health infrastructure is currently the most effective way to improve EA epistemic health, and is the biggest gap in EA epistemics.

I elaborated on this in my shortform. If the suggestion above seems too vague, there are also examples in the shortform. (I plan to coordinate a discussion/brainstorming session on this topic among people with relevant interests; please do PM me if you're interested.)

(I was late to the party, but since Nathan encourages late comments, I'm posting my suggestion anyway. I'm posting the comment under this post as well as the previous one, because Nathan said to "maybe add them to both"; please correct me if I've misunderstood.)