Primarily written by Rafael Kaufmann

In our first LW post on the Gaia Network, we framed it as a solution to the challenges of building safe, transformative AI. However, the true potential of Gaia as a “world-wide web of causal models” goes far beyond that, and in fact, justifying it in terms of its value to other use cases is key to showing its viability for AI safety. At the same time, the previous post focused more on the “what” and “why”, and didn’t really talk much about the “how”. In this piece, we’ll correct both of these flaws: we’ll visually walk through the Gaia Network’s mechanics, with concrete use cases in mind.

The first two parts will cover use cases related to making science more effective and efficient. These would already be sufficient to justify the importance of building the Gaia Network: as “science is the only news”, improving science can have a huge positive multiplier effect on our future survival and prosperity. Yet despite a workforce of 8.8 million researchers and funding that adds up to 1.7% of global GDP, science is rightly criticized for inefficiency and limited accountability. The third part will expand beyond the epistemic (scientific) benefits of the Gaia Network and towards pragmatic impact - ie, making all decision-making more effective and efficient, which impacts the entire world population and GDP. And the last two sections will focus on the applications of the Gaia Network on existential risk - first specifically with regard to AI safety, and finally as a general tool for collective sensemaking and coordination around the Metacrisis.

For brevity’s sake, we will not cover any of the implementation details or mathematical grounding. We’ll focus on the core concepts and capabilities, and try to explain them in plain language. We’ll also skim over many of the “hard parts”: the economics and trust modeling. Finally, we will not cover the arguments for convergence and resilience of the network; these have already been sketched out in our previous paper, and merit a more formal and in-depth analysis than we can incorporate into this primer. If there’s some hand-waving below that makes you uncomfortable, please let us know in the comments and we will attempt to address your concerns.

The beginning spends a while on Bayesian statistics, as these are foundational concepts for the Gaia architecture. Feel free to skip the footnotes if you’re overwhelmed. Also, note that everything below assumes explicit or clear-box models (where model parameters have names that reflect their intended semantics). In a future article, we’ll discuss how to incorporate black-box models like neural networks, where most components (neurons) have opaque semantics (or are mostly polysemantic).

So let’s get started. Fast forward to a few years from now…[1]

Better science bottom-up

You’re a plant geneticist working on the analysis of some experimental results that you want to publish. You have a model of how your new maize strain improves yields, and you’ve tested it against an experimental data set. (In the example pseudocode below, we use a Python-based syntax for concreteness, but this could be implemented in any statistical analysis software or framework, like R or Julia or even Excel spreadsheets.)

    def model(strainplanted, soiltype, rainfall, cropyield):
        ## Set parameter priors
        deltayield ~ Normal(...)
        avgyield_control ~ Normal(...)
        avgyield_experimental ~ Normal(avgyield_control + deltayield, ...)
        𝛽_soiltype ~ Normal(...)
        𝛽_rainfall ~ Normal(...)
        ...
        ## Define likelihood of the target variable cropyield given the covariates and parameters:
        ## p(cropyield | strainplanted, soiltype, rainfall, ...params)
        with field = plate("field"):
            with t = plate("t"):
                baseyield = avgyield_control if strainplanted == "control" else avgyield_experimental
                soiltype_effect = 𝛽_soiltype[soiltype]
                rainfall_effect = 𝛽_rainfall * rainfall
                ## Mean yield combines the strain baseline with the soil and rainfall effects
                cropyield ~ Normal(baseyield + soiltype_effect + rainfall_effect, ...)

| field | t | strainplanted | soiltype | rainfall | cropyield |
|---|---|---|---|---|---|
| Martha’s Meadow | 2023 | control | good | 0.5 | 20 |
| Martha’s Meadow | 2024 | control | good | 0.4 | 18 |
| Ada’s Acres | 2023 | control | bad | 0.8 | 15 |
| Ada’s Acres | 2024 | control | bad | 0.1 | 12 |
| Peter’s Patch | 2023 | experimental | good | 0.4 | 35 |
| Peter’s Patch | 2024 | experimental | good | 0.4 | 41 |
| Lee’s Lot | 2023 | experimental | bad | 0.1 | 33 |
| Lee’s Lot | 2024 | experimental | bad | 0.2 | 34 |

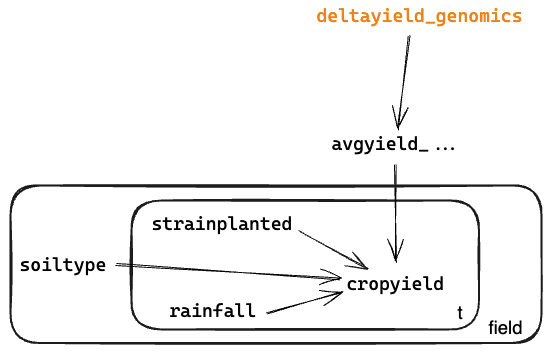

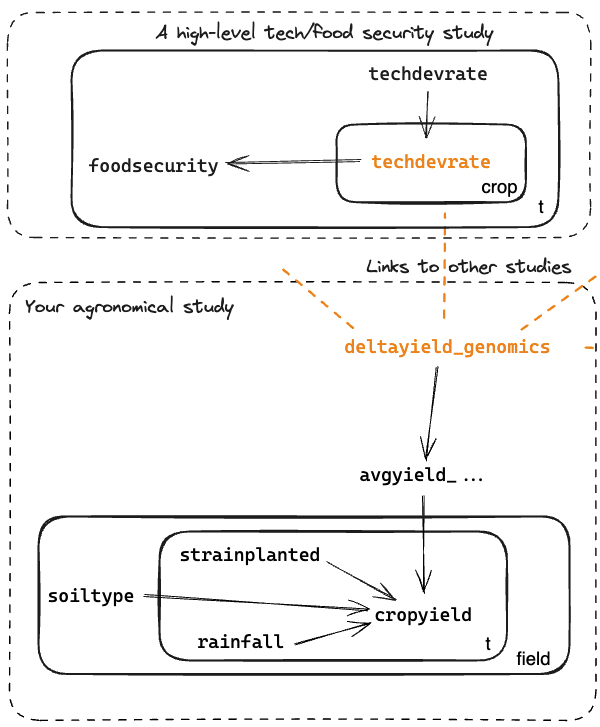

Like most scientific analyses, this is a hierarchical model, where your local variables represent observations or states of the current context – say, the yield in each given season and field – and are influenced by parameters that represent more generic or abstract variables – average yield for your strain across all fields and seasons, which in turn depends on the expected yield improvement from a given genomics technique. (The latter is generic enough that it’s not really specific to your study, which is why it’s highlighted in orange below.)

Running this model on a data set can be understood as propagating information through the graph. First, the priors for the parameters inform the expected distributions for the local variables. Then as we gather observations for some variables, that information flows back up, giving updated posteriors for the parameters. The amount of information (uncertainty reduction or negentropy) being propagated can be understood as a flow on this graph[2] and indeed can be estimated as an output of many kinds of common inference algorithms.[3]
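To make the "information flows back up" picture concrete, here is a minimal sketch using a conjugate Normal model for a single parameter. The prior, the noise variance and the use of entropy reduction as the measure of information are all illustrative assumptions, not part of the Gaia design; only the four yields are taken from the experimental rows of the table above.

```python
import math

def normal_update(prior_mean, prior_var, observations, noise_var):
    """Conjugate update of a Normal prior on a mean, given Normal
    observations with known noise variance."""
    n = len(observations)
    post_precision = 1.0 / prior_var + n / noise_var
    post_var = 1.0 / post_precision
    post_mean = post_var * (prior_mean / prior_var + sum(observations) / noise_var)
    return post_mean, post_var

def normal_entropy(var):
    """Differential entropy of a Normal distribution, in nats."""
    return 0.5 * math.log(2.0 * math.pi * math.e * var)

# Assumed (hypothetical) vague prior on the experimental strain's average yield.
prior_mean, prior_var = 25.0, 100.0
# Yields of the experimental strain from the table above.
yields = [35.0, 41.0, 33.0, 34.0]
post_mean, post_var = normal_update(prior_mean, prior_var, yields, noise_var=16.0)

# Uncertainty removed from the parameter by the observations.
info_gain = normal_entropy(prior_var) - normal_entropy(post_var)
print(f"posterior mean={post_mean:.2f}, var={post_var:.2f}, gain={info_gain:.2f} nats")
```

The posterior variance shrinks as observations arrive, and the entropy difference gives one simple number for how much information reached the parameter.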

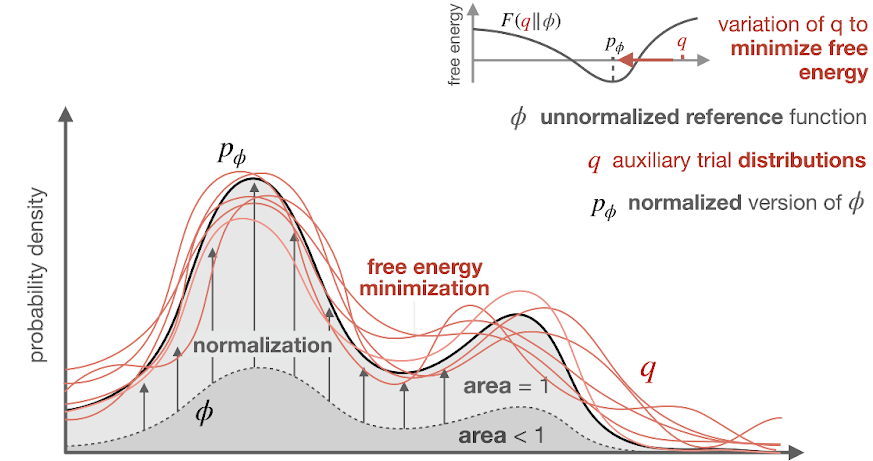

It’s really useful to think informally of the free energy of the model as the discrepancy between the inferred distribution and the information we have available, between priors and observations.[4] Zero free energy is the ideal state in which all information has been fully incorporated into the inference, is completely internally consistent, and explains away all the uncertainty in the system. Typically we can’t achieve zero free energy, as there’s always some uncertainty (whether aleatoric or epistemic), but we want to minimize it so that our model doesn’t have “extra”, unwarranted uncertainty. To get a better understanding of the concept of free energy and its role in Bayesian modeling and active inference, there are many excellent resources available; we particularly recommend this paper by Gottwald and Braun. Going forward, you can just think of Free Energy Reduction (FER) as a standard unit of account used by each model.
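As a rough illustration of free energy as a discrepancy measure, the sketch below computes the standard variational free energy for the same kind of one-parameter Normal model. All numbers are hypothetical. In this textbook formulation the minimum is the negative log evidence (the "surprise") rather than literally zero, so what inference removes is the gap above that floor.

```python
import math

def free_energy(q_mean, q_var, prior_mean, prior_var, observations, noise_var):
    """F(q) = E_q[-log p(y | theta)] + KL(q || prior), for a Normal q,
    a Normal prior and a Normal likelihood with known noise variance."""
    expected_nll = sum(
        0.5 * math.log(2.0 * math.pi * noise_var)
        + ((y - q_mean) ** 2 + q_var) / (2.0 * noise_var)
        for y in observations
    )
    kl = (
        0.5 * math.log(prior_var / q_var)
        + (q_var + (q_mean - prior_mean) ** 2) / (2.0 * prior_var)
        - 0.5
    )
    return expected_nll + kl

def exact_posterior(prior_mean, prior_var, observations, noise_var):
    """Closed-form Normal posterior for the mean parameter."""
    precision = 1.0 / prior_var + len(observations) / noise_var
    var = 1.0 / precision
    mean = var * (prior_mean / prior_var + sum(observations) / noise_var)
    return mean, var

def log_evidence(prior_mean, prior_var, observations, noise_var):
    """log p(y), accumulated from one-step-ahead predictive densities."""
    mean, var, total = prior_mean, prior_var, 0.0
    for y in observations:
        pred_var = var + noise_var
        total += -0.5 * math.log(2.0 * math.pi * pred_var) - (y - mean) ** 2 / (2.0 * pred_var)
        mean, var = exact_posterior(mean, var, [y], noise_var)
    return total

prior = (25.0, 100.0)              # hypothetical prior (mean, variance)
yields = [35.0, 41.0, 33.0, 34.0]  # hypothetical observations
noise_var = 16.0

post_mean, post_var = exact_posterior(*prior, yields, noise_var)
f_prior = free_energy(*prior, *prior, yields, noise_var)              # belief = prior
f_post = free_energy(post_mean, post_var, *prior, yields, noise_var)  # belief = posterior
surprise = -log_evidence(*prior, yields, noise_var)
print(f"F(prior)={f_prior:.3f}  F(posterior)={f_post:.3f}  surprise={surprise:.3f}")
```

Moving the belief from the prior to the exact posterior lowers the free energy until it meets the surprise, and that drop is the kind of quantity a Free Energy Reduction unit would count.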

But here’s a problem: How do you set priors for your parameters in the first place? Sure, you expect your strain to increase yield, but it would be circular reasoning to build that expectation into the priors. The common practice is to use a weakly informative prior (also known as a regularization prior; a completely flat prior is the limiting case), which incorporates only information that you have an objective or incontrovertible reason to believe in (ex: penalizing unreasonably low or high values). This can be seen as “not sneaking information into the model”, to avoid fooling yourself (and your stakeholders, the people who will use your study results to make decisions) by publishing unjustifiably confident results.[5] However, typically, most parameters in your study do not represent hypotheses you’re actively trying to learn about; instead, they represent assumptions that are justified by previous studies or expert opinions. For those, you want the opposite kind of prior, a sharp or strong prior.

In the past, if you were very lucky, there would be a published meta-analysis about the parameters for each of your assumptions, to save you the pain of combing through thousands of PDFs, understanding each, and copy-pasting numbers from the relevant tables into your workspace. Unfortunately, this work was so mind-numbingly boring, expensive, thankless and error-prone, that high-quality meta-analyses were exceedingly rare.[6] To make matters worse, unlike the toy example above, real-world scientific models often utilize hundreds to thousands of parameters, and often far more if machine learning is used. Gathering the outputs of every relevant study for every relevant parameter, by hand, was infeasible, so we ended up with constant wheel reinvention and cargo-culted, unjustified assumptions, often used as point estimates with no uncertainty attached.[7]

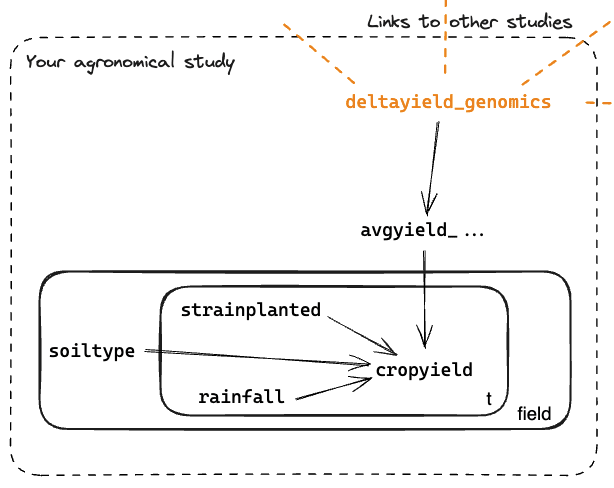

No longer: now you can simply connect your local model to the Gaia Network by annotating each parameter (in our example, average yield and drought tolerance, for both the control group of traditional maize and the experimental group of genetically modified maize). Your annotation attaches each parameter to a global namespace called the Gaia Ontology. You can browse the Ontology to see the exact definition of the parameter, with example code, and make sure you’re using the right one. Many other scientists have published their studies on the Gaia Network; each published study contributes a posterior distribution for its parameters, and these are algorithmically aggregated into a “sort of weighted average” called a pooled distribution.[8][9]

So at inference time, the Gaia engine just queries the network for the current pooled distributions for each of these parameters – effectively conducting a meta-analysis on the fly – and adopts them as priors. [10][11]

    @gaia_bind(deltayield={"v0.Agronomy.YieldImprovementPct":
                           {"species": "v0.Agronomy.Species.Maize",
                            "intervention": "v0.Agronomy.Genomics.CRISPR"}})
    def model(strainplanted, soiltype, rainfall, cropyield):
        ## Model code is unchanged
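The pooling step that produces those priors can be sketched as follows. This is a minimal illustration, assuming every published posterior is a Normal summarized by its mean and variance and that pooling is a plain weighted average of those parameters. The class, function, weights and numbers are hypothetical and are not the Gaia engine's API.

```python
from dataclasses import dataclass

@dataclass(frozen=True)
class NormalPosterior:
    mean: float
    var: float

def pool(posteriors, weights):
    """Pooled Normal whose mean and variance are weighted averages of the
    corresponding parameters of the input posteriors."""
    total = sum(weights)
    if total <= 0:
        raise ValueError("weights must sum to a positive number")
    mean = sum(w * p.mean for p, w in zip(posteriors, weights)) / total
    var = sum(w * p.var for p, w in zip(posteriors, weights)) / total
    return NormalPosterior(mean, var)

# Hypothetical posteriors for the same annotated parameter from three studies.
published = [NormalPosterior(12.0, 9.0), NormalPosterior(18.0, 4.0), NormalPosterior(15.0, 16.0)]
weights = [1.0, 2.0, 1.0]  # hypothetical per-study weights

prior_for_deltayield = pool(published, weights)
print(prior_for_deltayield)
```

Averaging parameters rather than densities keeps the result in the same distribution family, so it can be used directly as a prior. Other combination rules would fit the same interface.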

As the illustration above shows, your model is importing information from other studies in the network and using it to increase FER. Gaia keeps track of the “credit assignment”[12], which will prove valuable starting in the next step, which is to publish your work.

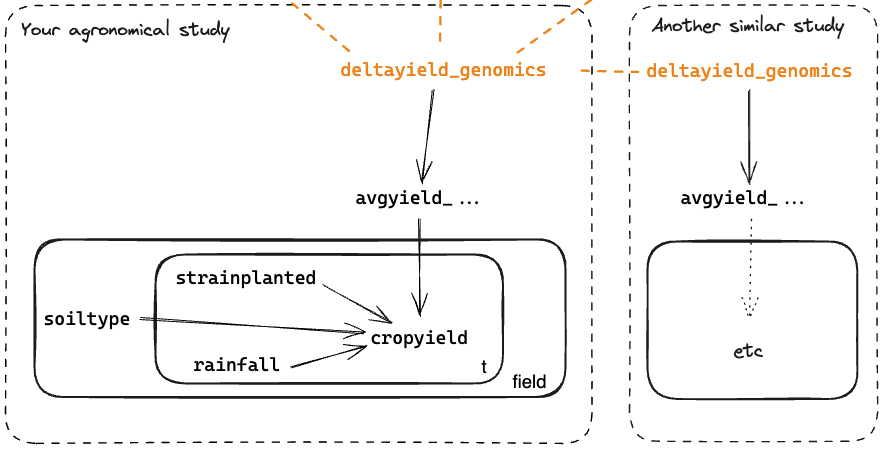

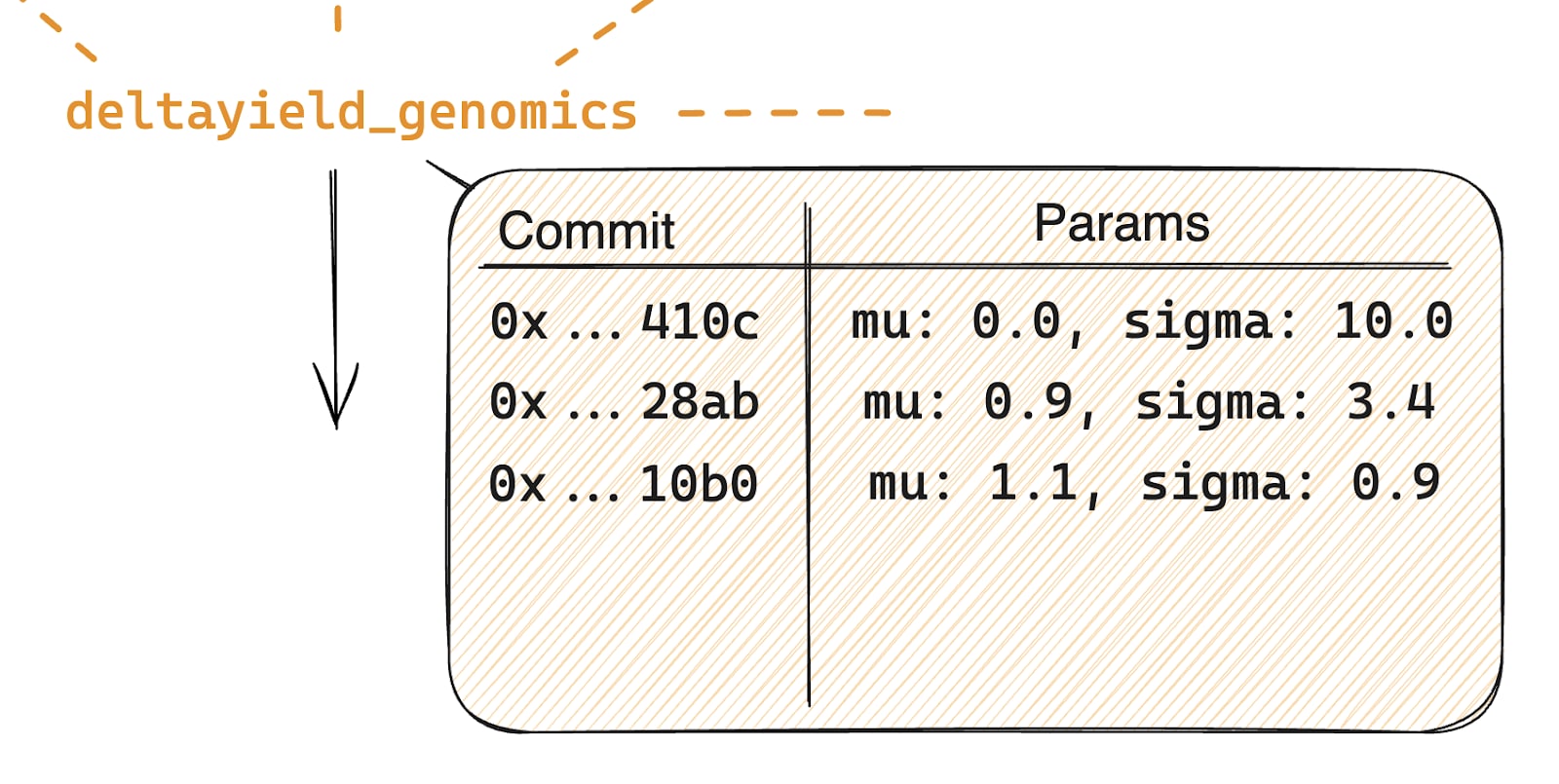

To contribute to the network, all you have to do is commit your study to GitHub. Gaia will save your posterior distributions for all parameters that you’ve annotated, and share them back with every other study in the network. Your study and your peer studies each have an update chain, an append-only sequence of distributions representing the state of posteriors from each study’s perspective. These are effectively independent representations of the state of knowledge of the parameters in question.[13]
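An update chain can be pictured as a small append-only log. The sketch below is only an assumption about what such a structure might look like: the class and field names are hypothetical, and posteriors are reduced to a mean and a variance for brevity.

```python
from dataclasses import dataclass, field
from typing import List, Optional, Tuple

@dataclass(frozen=True)
class Update:
    source_study: str  # which study published this posterior
    parameter: str     # ontology name of the annotated parameter
    mean: float
    var: float

@dataclass
class UpdateChain:
    """Append-only sequence of posterior updates as seen by one study."""
    _entries: List[Update] = field(default_factory=list)

    def append(self, update: Update) -> int:
        """Record an update and return its position in the chain."""
        self._entries.append(update)
        return len(self._entries) - 1

    def history(self, parameter: str) -> Tuple[Update, ...]:
        """All recorded updates for a parameter, oldest first."""
        return tuple(u for u in self._entries if u.parameter == parameter)

    def latest(self, parameter: str) -> Optional[Update]:
        """Most recent update for a parameter, or None if there is none."""
        hist = self.history(parameter)
        return hist[-1] if hist else None

chain = UpdateChain()
first = chain.append(Update("study-a", "deltayield", 12.0, 9.0))
second = chain.append(Update("study-b", "deltayield", 14.0, 6.0))
print(chain.latest("deltayield"))
```

Entries are immutable and nothing is ever removed, so each study keeps its own full record of how its view of a parameter evolved.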

So, immediately you can see that any other agricultural studies about different experimental strains will have their posteriors affected by adding your study to the pool of updates.[14] This effect can be quite large if few studies are being pooled, but it converges, so that after some point the updates become minimal.[15]
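A small worked example of that diminishing effect, assuming for simplicity an equal-weight pooled mean (real weights would differ) and hypothetical numbers:

```python
def pooled_mean_shift(existing_means, new_mean):
    """Absolute shift of an equal-weight pooled mean when one study is added."""
    before = sum(existing_means) / len(existing_means)
    after = (sum(existing_means) + new_mean) / (len(existing_means) + 1)
    return abs(after - before)

# The same surprising result (21.0 against a pool centred on 15.0) moves the
# pooled mean less and less as the pool grows.
shifts = {k: pooled_mean_shift([15.0] * k, new_mean=21.0) for k in (2, 20, 200)}
for k, shift in shifts.items():
    print(f"{k} existing studies: shift = {shift:.4f}")
```

With equal weights the shift is the new study's deviation divided by the new pool size, so it shrinks roughly as one over the number of studies.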

But Gaia doesn’t just propagate posterior updates to “sibling” studies: If there are higher-level models for which your parameter is a leaf, it will propagate up to those as well. For instance, a model that forecasts advances in crop technology and their impact on global food security:

Note that, by publishing your model on the Network, you’re not exporting any information other than updates to the values of the specific parameters that you’ve connected – in particular, you’re not sharing any of the underlying data. (This is a “privacy-centric” inference approach, analogous to federated learning. In a follow-up article, we’ll discuss how we can solve the problems of trust that this imposes.)

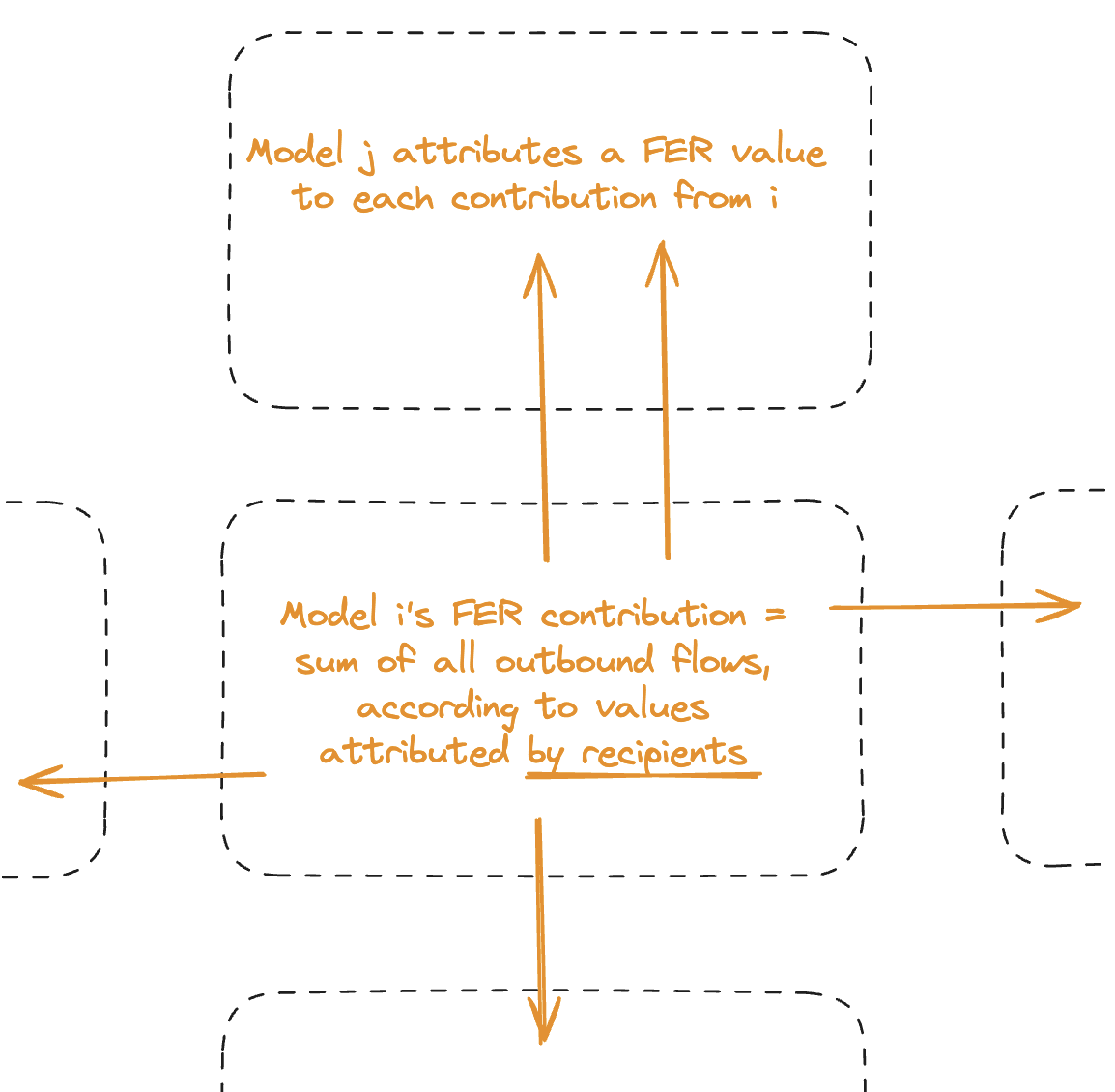

As mentioned above, the Gaia Protocol assigns credit to every publication (also called attribution). The mechanism for attribution is primarily “subjective”: each node (ie, each study) just measures the net FER impact of each contribution as it’s incorporated into its own update chain.
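One way to picture this "subjective" measurement is for a node to ask how much less surprising its own data become once it adopts a contributed prior. The sketch below assumes a one-parameter Normal model and uses the change in negative log evidence as a stand-in for net FER impact. This is an illustrative assumption, not the Protocol's definition, and all names and numbers are hypothetical.

```python
import math

def log_evidence(prior_mean, prior_var, observations, noise_var):
    """log p(y) under a Normal prior on the mean and Normal noise,
    accumulated from one-step-ahead predictive densities."""
    mean, var, total = prior_mean, prior_var, 0.0
    for y in observations:
        pred_var = var + noise_var
        total += -0.5 * math.log(2.0 * math.pi * pred_var) - (y - mean) ** 2 / (2.0 * pred_var)
        gain = var / pred_var  # standard Normal-Normal update
        mean, var = mean + gain * (y - mean), (1.0 - gain) * var
    return total

def fer_credit(baseline_prior, contributed_prior, observations, noise_var):
    """Reduction in surprise (in nats) from adopting the contributed prior,
    as measured on the receiving node's own data. Can be negative."""
    before = -log_evidence(*baseline_prior, observations, noise_var)
    after = -log_evidence(*contributed_prior, observations, noise_var)
    return before - after

local_yields = [35.0, 41.0, 33.0, 34.0]  # the receiving study's own data
baseline = (25.0, 100.0)                 # hypothetical vague prior (mean, var)

credit_helpful = fer_credit(baseline, (36.0, 4.0), local_yields, noise_var=16.0)
credit_misleading = fer_credit(baseline, (10.0, 4.0), local_yields, noise_var=16.0)
print(f"helpful: {credit_helpful:+.2f} nats, misleading: {credit_misleading:+.2f} nats")
```

A contribution that agrees with what the node later observes earns positive credit. A confidently wrong one earns negative credit, and that difference is the signal that weighting schemes can build on.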

Above, we mentioned that the pooled distribution is a “sort of weighted average” of each study’s posterior. So where do the weights come from? The Gaia Protocol also answers this question in a bottom-up, “subjective” way. Nodes can independently infer the “right” weights for each parameter and study. To do so, they can use arbitrary “metamodels”. These range from simple “beauty contest” models that just aggregate the net FER impact that other studies have attributed to a contribution; to more sophisticated “web of trust” models that infer the presence of low-quality studies or deliberate fraud via social-type network analysis and try to factor them out; to true “metamodels” that infer study quality and parameter relevance, using outside data such as the publisher’s credentials, analyses of the model code and third-party verifications of the data. This means that, at least in the short term, the pooled distribution for a given parameter is actually different depending on which node you ask! Even if all nodes have seen all the updates in the same order, they can give arbitrarily different weights to them. But as the different metamodels themselves accumulate quality signals, nodes eventually converge on a shared inference of the right metamodel to use on which kind of parameter. (As discussed in the introduction, we will not attempt to justify the claim that this protocol converges and is resilient to noise and misinformation/fraud. For now, see the arguments here.)
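The simplest of these, the "beauty contest", can be sketched in a few lines. The function name, the clipping of negative totals to zero and the example attributions below are illustrative assumptions, not part of any specified protocol.

```python
from collections import defaultdict

def beauty_contest_weights(attributions, floor=0.0):
    """Turn attributed net FER into pooling weights.

    attributions: iterable of (rating_node, contributing_study, net_fer).
    Each study's total attributed FER is clipped at `floor`, then the
    totals are normalized to sum to 1.
    """
    totals = defaultdict(float)
    for _rater, study, net_fer in attributions:
        totals[study] += net_fer
    clipped = {study: max(total, floor) for study, total in totals.items()}
    norm = sum(clipped.values())
    if norm == 0:
        # No positive signal at all: fall back to equal weights.
        return {study: 1.0 / len(clipped) for study in clipped}
    return {study: value / norm for study, value in clipped.items()}

# Hypothetical attributions reported by two nodes about three studies.
attributions = [
    ("node-1", "study-A", 2.0),
    ("node-2", "study-A", 1.0),
    ("node-1", "study-B", 1.0),
    ("node-2", "study-C", -4.0),  # this contribution made things worse
]
weights = beauty_contest_weights(attributions)
print(weights)
```

A study whose contributions raised free energy elsewhere ends up with zero weight here. The more sophisticated metamodels would replace this aggregation rule while keeping the same output: one weight per study.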

Funding science – retroactively and prospectively

Now approaching this from the opposite perspective, say you're an analyst at a philanthropic foundation, trying to make recommendations for a prize that will be awarded to the most impactful scientific studies. Rather than solely rely on recommendations from the scientific community, or use “impact factors” that just measure popularity, you can query the Gaia Network to get quantitative, apples-to-apples impact metrics.

First, we should just note that being able to understand the “graph of science” in a live, transparent way – what are the research questions, how well developed, how much intensity in explore vs exploit mode, and how they connect to each other – is a game-changer. In the past, you needed to pay expensive fees for products like Web of Science and Scopus, which were based on manual curation and benefitted from the opaqueness of text-on-PDFs as the primary means of scientific communication. Having all the world’s science directly represented as machine-readable and connected models on Gaia – just like code and its dependencies on a package manager – makes all analytics orders of magnitude easier.

Now, back to the question of impact. Here we should distinguish two kinds of impact: epistemic impact - how much a given study has contributed to reducing uncertainty in the Network; and pragmatic impact - how much it has contributed to improving decisions. We’ll leave the pragmatic impact for later and focus on the epistemic impact for now.

So, for every model on the network, it’s easy to compute how much it contributed to FER flow across the network – what’s called credit assignment in neural networks. We just look at the net flow across the model boundary, which is accounted for by the Gaia Protocol.

Some care needs to be taken here. First of all, note that the FER credited to a model due to its contributions is always computed by the model that’s receiving the contributions (it’s a subjective value). Plus, there might be significant differences in modeling practices between different fields, which may distort calculations. Later on, when we talk about economics, we’ll see that the Protocol also needs a way to turn that subjective value accounting into intersubjective, mutually agreed upon “local exchange rates”. For now, let’s say that you compute a normalization constant for each domain and use it to get a normalized, apples-to-apples net FER flow across domains.
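As a toy version of that normalization, the sketch below divides each study's net FER flow by the median absolute flow in its domain. The choice of the median as the normalization constant, and all study names and numbers, are assumptions made for illustration.

```python
from statistics import median

def normalized_fer(flows):
    """flows: {study: (domain, net_fer)} -> {study: net_fer / domain scale},
    where the scale is the median absolute net FER within the domain."""
    by_domain = {}
    for domain, net_fer in flows.values():
        by_domain.setdefault(domain, []).append(abs(net_fer))
    # Guard against an all-zero domain by falling back to a scale of 1.
    scale = {domain: median(values) or 1.0 for domain, values in by_domain.items()}
    return {study: net_fer / scale[domain] for study, (domain, net_fer) in flows.items()}

# Hypothetical raw flows: the second domain's modeling practices yield FER
# numbers about 100 times larger for comparable relative contributions.
flows = {
    "maize-crispr": ("agronomy", 4.0),
    "soil-carbon": ("agronomy", 2.0),
    "vaccine-trial": ("epidemiology", 400.0),
    "contact-survey": ("epidemiology", 200.0),
}
scores = normalized_fer(flows)
print(scores)
```

After normalization the top study in each domain gets the same score, which is the apples-to-apples comparison the prize committee needs.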

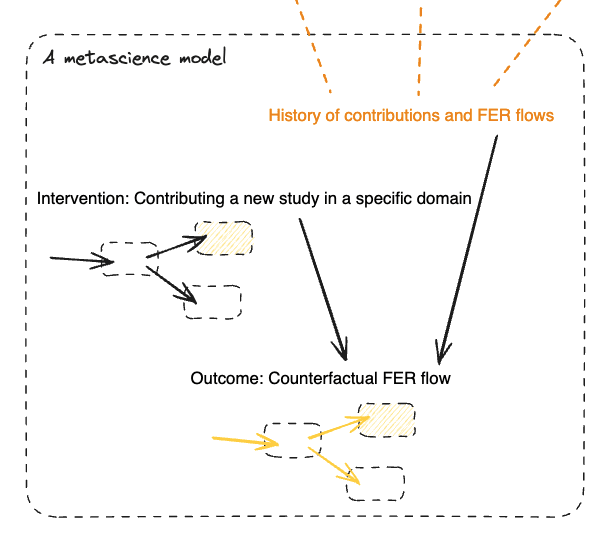

So this covers retroactive funding in the form of prizes. But this isn’t (and shouldn’t be) how most science gets funded. Most researchers cannot internalize the risk and cost of self-funding their work upfront and hoping for retroactive funding later. Instead, funders – who have access to cheaper capital costs, lower marginal risk sensitivity, and the other advantages that come with a big pile of cash – contract with researchers upfront to trade capital now for a future flow of impact. Before the Gaia Network, establishing effective contracts was very challenging, as it was extremely hard to predict impact, even for the researchers themselves, let alone for the funders. (In economic parlance, it was a classic principal-agent problem created by uncertainty and information asymmetry.) Now, the Gaia Network itself provides the solution: it contains metascience models that model the flow of FER across the network and use it to design interventions – adding more models and more data to specific fields and individual lines of research – that are likely to deliver the highest future flow of FER. Funders and researchers can use these models equally to guide where they should spend the most time and resources.[16] Compared with the recent past, where there were no meaningful metrics of scientific productivity or value added, let alone predictive models of how to improve these, the Gaia Network is a game-changer for science funding.

A distributed oracle for decision-making

The above covers the advancement of science. However, the same capabilities can aid any decision-making that pertains to the real world[17] – what we’ve called pragmatic impact above. Indeed, the Gaia Network has given everyone an actionable, reliable way to “trust the science” – not just on big things like climate change and pandemics, but also on day-to-day things like your diet, exercise, relationships, and so on. And the same applies to business decision-making, which is where we’ll focus next.

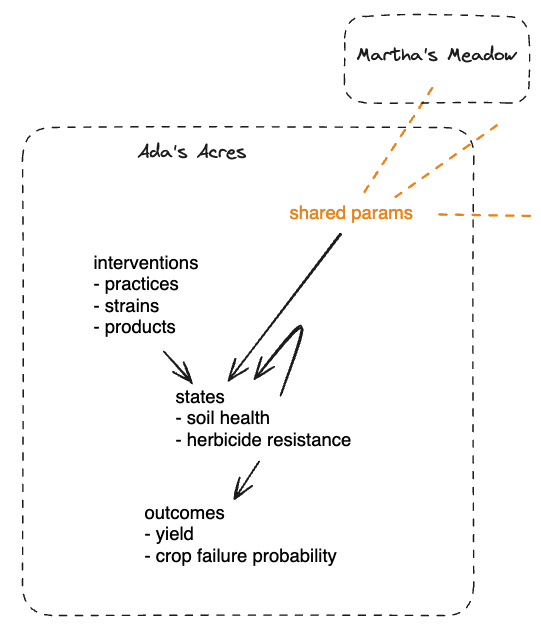

Say you manage Ada’s Acres, a large farming operation in the US Midwest. You’re planning your next planting for your 30 thousand hectares, and as usual, your suppliers are trying to push new seeds, new herbicides and all manner of hardware and software on you. Meanwhile, your usual buyers are all calling to let you know that global demand forecasts are through the roof, so you stand to gain a lot of money if you have an outstanding harvest. However, you’ve noticed that the soil has been increasingly poor and in need of fertilizer and that herbicide resistance has increased a lot as well. The weather has been increasingly volatile, and you know it’s a matter of time before you have a major crop failure. Maybe it’s time to start giving regenerative farming a real shot?

Luckily, your farm operations software is now connected to the Gaia Network. It gives you a predictive digital twin of your farm that directly learns not just from every scientific experiment in agronomy, but from the “natural experiments” carried out by every other farm that uses the Network. So you can simulate the effects of any combination of practices, seed strains and products and estimate the outcomes, both short-term (expected yield and probability of crop failure for the next harvest) and long-term (soil health and herbicide resistance).
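A toy version of such a simulation is sketched below. It assumes the digital twin has already produced a Normal predictive distribution for next season's yield under each practice. The scenario names, means, standard deviations and failure threshold are invented for illustration and carry no agronomic meaning.

```python
import random

def simulate_harvest(mean_yield, yield_sd, failure_threshold, n_draws=10_000, seed=0):
    """Monte Carlo estimate of expected yield and crop-failure probability
    from a Normal predictive distribution over yield."""
    rng = random.Random(seed)
    draws = [rng.gauss(mean_yield, yield_sd) for _ in range(n_draws)]
    expected = sum(draws) / n_draws
    p_failure = sum(d < failure_threshold for d in draws) / n_draws
    return expected, p_failure

# Hypothetical predictive distributions: (mean yield, standard deviation).
scenarios = {"conventional": (30.0, 8.0), "regenerative": (28.0, 4.0)}
results = {
    name: simulate_harvest(mean, sd, failure_threshold=15.0)
    for name, (mean, sd) in scenarios.items()
}
for name, (expected, p_failure) in results.items():
    print(f"{name}: expected yield {expected:.1f}, P(crop failure) {p_failure:.3%}")
```

Even in this toy setup the trade-off is visible: a slightly lower expected yield can come with a much smaller chance of a failed harvest.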

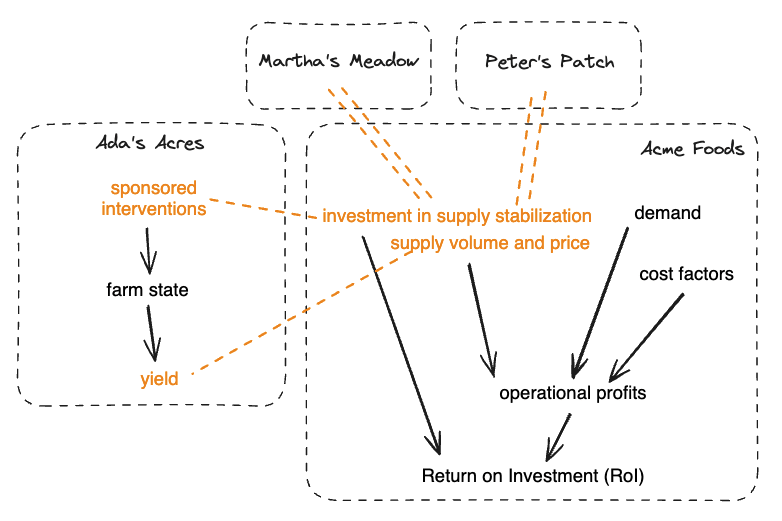

So that was a “small” (operational) use case. Now let’s zoom out to strategy[18]: let’s say that you’re the CEO of Acme Foods, a major food company. In light of increased droughts and crop failures, you’re trying to invest in your supply chain to minimize the risk of supply shortages. Your innovation teams have aggregated a long list of potential investments in precision farming, genomics, and regenerative agriculture. In the past, assembling an investment portfolio out of that long list would have required a long, expensive and very political negotiation exercise. Now that all your suppliers are connected to the Gaia Network and share limited access with you, your portfolio management system becomes a distributed digital twin of your supply chain. You can run complex distributed queries across all the nodes, simulate the effects of different investment combinations and different sets of assumptions (like climate and pest spread scenarios), factor in things like unintended consequences, and pull out an aggregate like a Pareto frontier for the investment profile you want.
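The final aggregation step, pulling out a Pareto frontier, can be sketched independently of how the simulations are run. The two objectives (cost and supply-shortage risk) and every number below are hypothetical placeholders for whatever the distributed queries would return.

```python
def pareto_frontier(options):
    """Keep the options that no other option beats on both axes.

    options: list of (name, cost, shortage_risk); lower is better for both.
    """
    frontier = []
    for name, cost, risk in options:
        dominated = any(
            (c <= cost and r <= risk) and (c < cost or r < risk)
            for _other, c, r in options
        )
        if not dominated:
            frontier.append((name, cost, risk))
    return sorted(frontier, key=lambda option: option[1])

# Hypothetical simulated portfolios: (name, cost, probability of shortage).
portfolios = [
    ("precision farming", 10, 0.20),
    ("regenerative", 15, 0.15),
    ("status quo upgrade", 20, 0.22),
    ("genomics", 25, 0.12),
    ("genomics + precision", 35, 0.10),
]
frontier = pareto_frontier(portfolios)
for name, cost, risk in frontier:
    print(f"{name}: cost {cost}, shortage risk {risk:.0%}")
```

Only the dominated option drops out. Choosing among the remaining portfolios is then a question of how much risk reduction is worth paying for.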

Most of the demand for the intelligence in the Gaia Network will come from these decision engines (DEs), like the farm operations software and the portfolio management system. Combined with the ability of the Protocol to assign credit where it’s due, it can provide signals and incentives to provide a better supply of intelligence: more and better models in the places where they are most needed by decision-makers. In a future paper, we will further develop our vision of how these signals can be developed into a complete market and contracting mechanism for directing applied research, exploration and analysis: what we call the knowledge economy.

Even further, if we have “non-local” DEs that use Gaia models to design coordinated strategies that internalize the benefits of cooperation between multiple agents, then we can turn those DEs into Gaia models themselves! They become decision models performing “planning as inference” on behalf of agents (individuals and collectives), helping to solve all kinds of principal-agent problems. In the example above, the food company can use a DE not only to infer the best investments for its own goals but also to design adequate contracts and incentives that will best equalize the goals and constraints of all the players in the supply chain. This delegation economy will also be further explored in a future paper.

A distributed oracle for AI safety

The above discussion of decision-making is our link to AI safety. Yoshua Bengio has proposed to tackle AI safety by building an “AI scientist” – a comprehensive probabilistic world model that would serve as a universal gatekeeper to evaluate the safety of every high-stakes action from every AI agent, instead of attempting to design safety into agents. This is similar to Davidad’s Open Agency Architecture (OAA) proposal. But of course, developing such a monolithic, centralized and comprehensive gatekeeper from scratch would be an extremely costly and lengthy undertaking. Further, as Bengio’s proposal makes clear, the AI scientist needs to have “epistemic humility”: its evaluations need to incorporate the limitations and uncertainty of its own model so that it doesn’t confidently allow actions that seem safe at the time but turn out to be unsafe in retrospect.
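The role of epistemic humility can be illustrated with a deliberately tiny gatekeeper. The sketch below is not Bengio's formula or the OAA design. It only assumes that the gatekeeper holds several candidate world models with credences and averages the harm probability over them instead of trusting the single most credible one. All names and numbers are hypothetical.

```python
def marginal_harm(action, world_models):
    """Harm probability averaged over candidate world models, weighted by
    the credence assigned to each model."""
    total = sum(credence for credence, _ in world_models)
    return sum(credence * harm_fn(action) for credence, harm_fn in world_models) / total

def gatekeeper_allows(action, world_models, harm_threshold):
    """Allow the action only if its model-averaged harm probability is acceptable."""
    return marginal_harm(action, world_models) <= harm_threshold

# Hypothetical (credence, harm-probability function) pairs.
world_models = [
    (0.9, lambda action: 0.001),  # dominant model: the action looks safe
    (0.1, lambda action: 0.4),    # minority model: substantial chance of harm
]
action = "deploy-irrigation-controller"

risk = marginal_harm(action, world_models)
allowed = gatekeeper_allows(action, world_models, harm_threshold=0.01)
print(f"marginal harm probability {risk:.4f}, allowed: {allowed}")
```

The most credible model alone would approve the action, but the averaged estimate does not, which is the behavior "epistemic humility" asks for.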

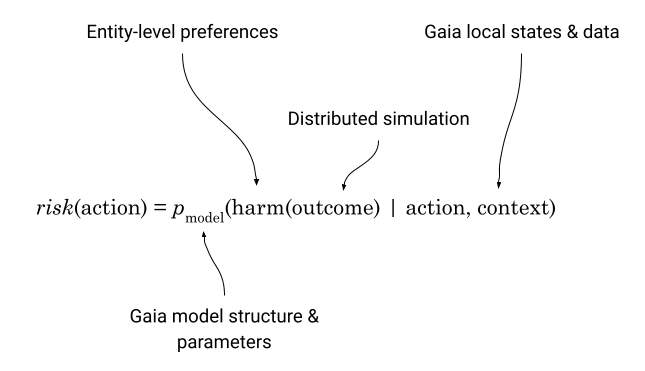

We argue that the Gaia Network, including the DEs that work as decision models, qualifies perfectly for the job of a distributed AI scientist. The DEs can query the diverse and constantly evolving knowledge in the network to form an “effective world model” with epistemic humility built in. They can provide the demand signals and resources to improve and expand the world model. They can then use this model to simulate counterfactual outcomes of actions that take into account all available local context and dependencies between contexts, and use these simulations to approximately estimate probabilities for outcomes (marginalization). They can factor in the preferences and safety constraints of all agents that use the Network, which they have already shared in order to enable the DEs to help with their own decisions. This gives all the terms in Bengio’s notional risk evaluation formula (adapted from slide 17 here):

Possibly the most important aspect of this design – which comes particularly to light when comparing it to the OAA design – is that none of the above components is specific to AI safety; they are just repurposed from existing and day-to-day use cases for which the users/agents already have the incentives to share the required information with the Gaia Network and the DEs. This means that tackling AI safety is no longer “one of the most ambitious scientific projects in human history”, but rather a “fringe benefit” of our pursuit of knowledge and better decision-making. In turn, it benefits from all the improvements to the efficiency and effectiveness of those pursuits that have already been produced by past and ongoing advances in computational statistics and machine learning, and from all those that will be generated by the Gaia Network connecting and interoperating the many millions of such models in existence and increasing the RoI of creating and improving models.

This outcome is not dependent on AI safety funders; nor the foibles of political will in the scientific and policy communities; nor the desire of billions of humans to independently share their preferences with an elicitor. All that is required – beyond some cheap work on core infrastructure, modeling and developer experience – is the same economic behaviors and incentives that exist today: the desire for profit, the pursuit of greater scientific knowledge, and the existence of institutions willing and able to internalize the cost of coordinated action.

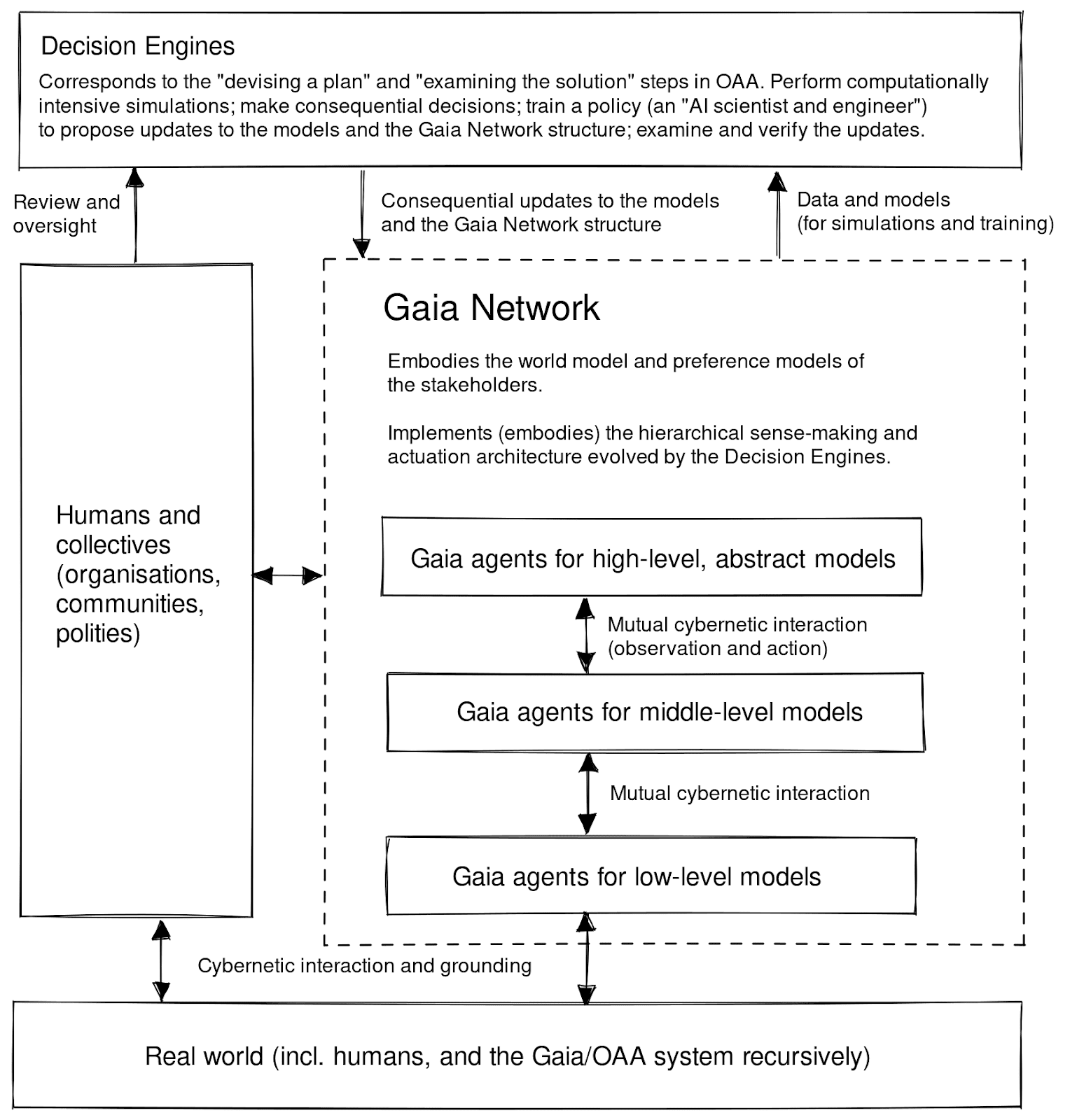

An overview of this architecture, adapted from our last post, is given below.

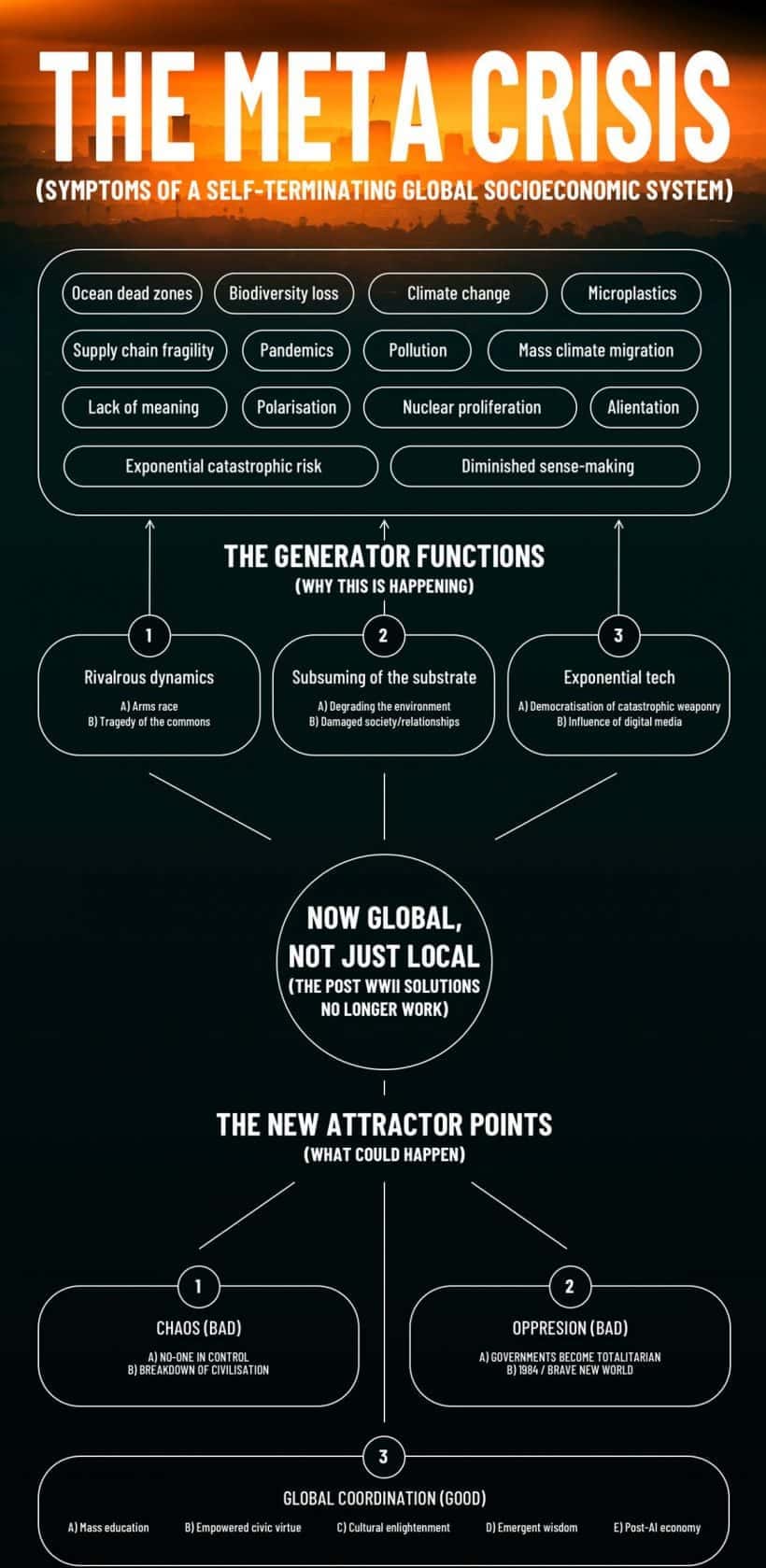

A distributed oracle for the Metacrisis

The very same architecture helps us identify shared pathways through the Metacrisis. Below is a nice visual of the high-level causal model we have in mind when thinking about the Gaia Network’s role. By connecting all the relevant domain models and making apparent not only their interdependencies but also their common causes – the “generator functions” or underlying self-reinforcing dynamics – Gaia helps us understand likely future outcomes of the current trends and establish strategies with the highest potential for nudging our global course away from the two catastrophic attractors that currently seem most likely (chaos and totalitarianism). Not only that, but as we’ve seen, Gaia-powered DEs are also used as coordination surfaces: shared tools for establishing and monitoring contracts, treaties and institutions, with unprecedented scale and reliability. While this “infrastructure for model-augmented wisdom” doesn’t immediately or inherently solve conflicts of power and interests, it does provide a consistent, repeatable and scalable institution for achieving and retaining incremental advances towards a positive-sum, cooperative Gaia Attractor.

Conclusion: Back from the future

We just claimed that a lot will change in “a few years from now”. How realistic is this? Here’s the really good news: all the capabilities described above can be implemented with today’s technology.[19] Not only that: we’re already doing it. We have assembled several organizations and individuals into a growing Gaia Consortium, and have of course been leveraging loads of existing components and building some of our own. Examples:

- Ocean Protocol and DefraDB: Decentralized computing and data management.

- Fangorn (coming soon): a decentralized platform for building and performing (active) inference on Gaia-connected state space models.

- Sentient Hubs: Federated model-based decision support.

We are simultaneously working on specific applications of the Gaia Network, focusing primarily on bioregional economies and sustainable supply chains. These have been useful for providing concrete use cases (some of which we saw above) and resourcing. But ultimately we intend to evolve this into a fully open and collaborative R&D effort to build the general-purpose capabilities described above.

If you’re interested in contributing to this work, here are some possible ways to do it:

- People interested in developing this agenda with us should sign up for the upcoming SPAR program. We’re advising two projects: one centers on formalizing and computationally testing the use of free energy-based causal models for measuring AI safety in real-world, embedded environments; the other is about outstanding mechanism design, engineering, economics, governance, and perhaps even ethical issues of the Gaia Network.

- If you’d like to use the Gaia Network (or its precursors) in your own use cases, we can happily support standing up “testnets” and help design prototypes and proofs of concept.

- If you have resources to help accelerate development, we can gladly accept grant funding or other forms of support.

If you’re interested in any of the above, please reach out!

- ^

Below, Gaia Network and its applications are described both in present and future tense in different narration modes. To avoid confusion, note that Gaia Network is not yet implemented and deployed on a large scale.

- ^

In a simple structure like this, a single backward propagation is enough, but there are cases where we need to iteratively update (message passing). For those cases, think of the net flow that is obtained after propagating up and down enough times.

- ^

For instance, in variational inference algorithms, the free energy (or stochastic estimates of it) is directly used as a minimization objective. Equivalently, its negative, the Evidence Lower Bound [ELBO], is maximized.

- ^

There is an additional concept of free energy associated with decision-making, corresponding to the discrepancy between the veridical posterior justified by priors and observations and the one “desired” in light of a given reward function/model/distribution.

- ^

If you already have information that comes from past experiments, or knowledge elicited by independent experts, you can also incorporate it into the priors. The challenge is how to keep track of the grounding behind all of this imported information. This is, in a sense, what the Gaia Network does algorithmically, as we’ll see.

- ^

- ^

Even for the parameters of interest in your study, there is a high value in having access to past studies’ posteriors: after having your posteriors “in isolation”, you now want to compare them to previous results in the literature, to check for novelty or consistency.

- ^

Technically, a pooled distribution is not a weighted average of distributions (that would be a mixture distribution); instead, it’s a distribution whose parameters are a weighted average (or other combination) of the parameters of the original distributions. Just so we’re clear: here we’re talking about statistical parameters of the posteriors of scientific parameters; for instance, the mean and variance of the average yield.

- ^

In practice, different studies often use different model structures and local ontologies. Sometimes these are just syntax differences, such as alternative parameterizations (ex: centered vs uncentered parameters, etc), but often they represent different semantics – different statistical constructs, reflecting differences in context and/or scientific methodology. To enable aggregations to happen between models with these differences, translations are required. To this end, Gaia contributors often publish lens models that perform data translation. As an added benefit of this approach, in cases when there are different semantics that inevitably lead to a loss in translation (as WVO Quine pointed out and Chris Fields has recently formalized), it’s useful for there to be a separate lens model that accounts for and “absorbs” that loss.

- ^

This does mean your model is colored by using the informative Gaia posteriors as priors for a parameter of interest. But you can always turn off the annotations for those parameters to isolate the effects of the information contributed by your study (aka the likelihood).

- ^

In this example, these are independent scalar parameters, but they could be any multidimensional array with any kind of internal correlation structure.

- ^

See also "The Credit Assignment Problem" by Abram Demski.

- ^

This is unlike a blockchain, which is designed to ensure that all nodes are “almost always” in full consensus about the entire contents of the global state (which then requires hacks like “L2” chains to improve speed and flexibility).

- ^

How? It depends on the parameterization used, but in most cases, partial pooling brings posterior means closer together. You can have parameterizations with multiple modes, like a Gaussian mixture distribution, but this tends to imply that your parameter is representing multiple categories instead of a scalar and should be changed to reflect that.
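A minimal sketch of this shrinkage, assuming a normal hierarchical model in which each study's sampling variance and the between-study variance `tau2` are known and fixed (an empirical-Bayes-style simplification, not a full hierarchical fit). The names and numbers are hypothetical.

```python
def partial_pool(means, se2, tau2):
    """Shrink per-study means toward a precision-weighted population mean."""
    # Population mean: each study weighted by 1 / (sampling var + tau2).
    weights = [1.0 / (s + tau2) for s in se2]
    mu = sum(w * m for w, m in zip(weights, means)) / sum(weights)
    # Each study's posterior mean is a precision-weighted blend of its own
    # estimate and the population mean.
    shrunk = [
        (m / s + mu / tau2) / (1.0 / s + 1.0 / tau2)
        for m, s in zip(means, se2)
    ]
    return mu, shrunk

study_means = [2.0, 4.0, 9.0]
study_se2 = [1.0, 1.0, 1.0]

mu, shrunk = partial_pool(study_means, study_se2, tau2=1.0)

raw_spread = max(study_means) - min(study_means)
shrunk_spread = max(shrunk) - min(shrunk)
```

Each study keeps its own estimate, but all three move toward the shared mean, so the spread between them is smaller after pooling than before.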

- ^

No matter how small, Gaia eventually propagates every nonzero update to every parameter on the network, so we can have eventual consistency. The protocol can choose to batch small updates for efficiency.

- ^

Of course, no one cares about an abstract quantity like FER; they care about concrete advancements in specific areas of science. But that’s the same as saying no one cares about money, but about the goods and services they can buy with it.

- ^

That primarily means we’re excluding “teach AI how to play video games” or “decide which next token to generate for a user” types of scenarios.

- ^

We could zoom even further out to tackle the domain of strategy consulting and ask more “meta” questions. What are the theories of change, how do they connect to each other, how well developed are they, and how much intensity is in explore vs. exploit mode? We will explore these further in a follow-up article.

- ^

There are some areas where current solutions aren’t fully adequate, but these are matters of incremental progress, not qualitative breakthroughs.

Executive summary: The Gaia Network is proposed as a decentralized system for connecting causal models to improve scientific efficiency, decision-making, and AI safety via collective intelligence.

This comment was auto-generated by the EA Forum Team. Feel free to point out issues with this summary by replying to the comment, and contact us if you have feedback.

TLDR: This proposal claims to be able to solve a wide range of problems (including AI Safety and, apparently, defeating Moloch) by implementing an extremely ambitious proposal that would require large amounts of unprecedented coordination. I'm deeply skeptical.

I get that this is not particularly deep feedback, but after spending about two hours going through the old and the new post, my impression is that this is a complex, very technical, and extremely ambitious proposal, and for this reason, I would be very surprised if this project grew beyond the uptake of a few niche stakeholders.

As far as I understand it, if this system were to be implemented successfully, it would require not only fully transforming the way we plan, fund, do and publish science[1], but also convincing varied stakeholders in society (like businesses and individuals) to use this complicated system for their own decision-making, all under AI safety deadlines.

This feels extremely intractable on the surface, and I'm worried about how this parallels the general form of similar proposals in the world of crypto, DeFi, DAOs, etc.[2] There's usually an ambitious, highly technological proposal for changing the fundamental way we do things in broad sectors of society, with little to no actionable plan for getting there and dealing with all the complexities of society along the way.

I'm also skeptical of the theory of change. Even if AI Safety timelines were long, and we managed to pull this Herculean effort off, we would still have to deal with problems around AI Safety governance. Why would AGI companies want to stick to this way of developing systems?

This project also claims to basically be able to slay Moloch[3]. This seems typical of solutions looking for problems to solve, especially since this proposal apparently came from a previous project that wasn't related to AI Safety at all.

I hope I'm wrong, and maybe I'm just being uncharitable as a result of being initially very confused by reading the old article (this is a great improvement!), but I also felt like I should share some feedback after having spent this much time going through everything.

Most scientists barely know about Bayesian methods, and even fewer want to use them; why would they completely change how they work to “join” the network? (Especially at the start, when the network doesn't provide much value yet.)

I later found that Digital Gaia's Twitter account seems full of references to blockchain, DeFi, and DAOs too. I don't get how they're related.

See here. Apparently the project has existed for a few years.

Hello Agustín, thanks for engaging with our writings and sharing your feedback.

Regarding the ambitiousness, low chances of overall success, and low chances of uptake by human developers and decision-makers (I emphasize "human" because if some tireless near-AGI or AGI comes along it could change the cost of building agents for participation in the Gaia Network dramatically), we are in complete agreement.

But notice that Gaia Network could be seen as a much-simplified (from the perspectives of mathematics and Machine Learning) version of Davidad's OAA, as we framed it in the first post. Also, Gaia Network tries to leverage (at least approximately and to some degree) the existing (political) institutions and economic incentives. In contrast, it's very unclear to me what the political economy in the "OAA world" could look like, and what is even a remotely plausible plan for switching from the incumbent political economy of the civilisation to OAA, or "plugging" OAA "on top" of the incumbent political economy (to the best of our knowledge, this hasn't been discussed publicly anywhere). We also discussed this in the first post. Also, notice that because OAA is so extremely ambitious, Davidad doesn't count on humans implementing it with their bare hands: it's a deal-breaker if there isn't an AI that can automate 99%+ of the technical work needed to convert the current science into Infra-Bayesian language.[1] And yes, the same applies to Gaia Network: it's not feasible without massive assistance from AI tools that can do most of the heavy lifting. But if anything, this reliance on AI is less extreme in the case of Gaia Network than in the case of OAA.

The above makes me think that you should therefore be even more skeptical of OAA's chances of success than you are about Gaia's chances. Is this correct? If not, what do you disagree about in the reasoning above, or what elements of OAA make you think it's more likely to succeed?

Adoption

The "cold start" problem is huge for any system that counts on network effect, and Gaia Network is no exception. But this also means that the cost of convincing most decision-makers (businesses, scientists, etc.) to use the system is far smaller than the cost of convincing the first few, multiplied by the total number of agents. We have also proposed how various early adopters could get value out of the "model-based and free energy-minimising way" of doing decision-making (we don't need the adoption of Gaia Network right off the bat, more on this below) very soon, in absolutely concrete terms (monetary and real-world risk mitigation) in this thread.

In fact, we think that if there are sufficiently many AI agents and decision intelligence systems that are model-based, i.e., use some kinds of executable state-space ("world") models to do simulations, hypothesise counterfactually about different courses of actions and external conditions (sometimes in collaboration with other agents, i.e., planning together), and deploy regularisation techniques (from Monte Carlo aggregation of simulation results to amortized adversarial methods suggested by Bengio on slide 47 here) to permit compositional reasoning about risk and uncertainty that scales beyond the boundary of a single agent, the benefits of collaborative inference of the most accurate and well-regularised models will be so huge that something like Gaia Network will emerge pretty much "by default" because a lot of scientists and industry players will work in parallel to build some versions and local patches of it.

Blockchains, crypto, DeFi, DAOs

I understand why the default prior when hearing anything about crypto, DeFi, and DAOs now is that people who propose something like this are either fantasists, or cranks, or, worse, scammers. That's unfortunate for everyone who just wants to use the technical advances that happen to be loosely associated with this field, which now includes almost anything that has to do with cryptography, identity, digital claims, and zero-knowledge computation.

Generally speaking, zero-knowledge (multi-party) computation is the only solution to make some proofs (of contribution, of impact, of lack of deceit, etc.) without compromising privacy (e.g., proprietary models, know-how, personal data). The ways to deal with this dilemma "in the real world" today inevitably come down to some kind of surveillance, which makes many people very uneasy. For example, consider the present discussion of data center audits and compute governance. It's fine with me and most other people except for e/accs, for now, but what about the time when the cost of training powerful/dangerous models drops so much that anyone can buy a chip to train the next rogue AI for $1,000? How does compute governance look in this world?

Governance

I don't think AI Safety governance is that special among other kinds of governance. But more generally on this point, of course, governance is important, and Gaia Network doesn't claim to "solve" it; rather, it plans to rely on some solutions developed by other projects (see numerous examples in CIP ecosystem map, OpenAI's "Democratic Inputs to AI" grantees, etc.).

We just mention in passing incorporating the preferences of a system's stakeholders into Gaia agents' subjective value calculations (i.e., building reward models for these agents/entities, if you wish), but there is a lot to be done there: how the preferences of the stakeholders are aggregated and weighted, who can claim to be a stakeholder of this or that system in the first place, etc. Likewise, on the general Gaia diagram in the post, there is a small arrow from the "Humans and collectives" box to the "Decision Engines" box labelled "Review and oversight", and, as you can imagine, there is a lot going on there as well.

IDK, convinced that this is a safe approach? Being coerced (including economically, not necessarily by force) by a broader consensus around using such Gaia Network-like systems? This is a collective action problem. This question could be addressed to any AI Safety agenda and the answer would be the same.

Moloch

I wouldn't say that we "claim to be able to slay Moloch". Rafael is more bold in his claims and phrasing than me, but I think even he wouldn't say that. I would say that the project looks very likely to help to counteract Molochian pressures. But this seems to me almost a self-evident statement, given the nature of the proposal.

Compare with the Collective Intelligence Project. It started with the mission to "fix governance" (and pretty much "help to counteract Moloch" in the domain of political economy, too; they all but used this concept, or maybe they even did, I don't want to check it now), and now they have "pivoted" to AI safety and achieved great legibility on this path: e.g., they partner with OpenAI, apparently, on more than one project now. Does this mean that CIP is a "solution looking for a problem"? No, it's just the kind of project that naturally lends itself to helping with both Moloch and AI safety. I'd say the same could be said of Gaia Network (if it is realised in some form), and this lies pretty much in plain sight.

Furthermore, this shouldn't be surprising in general, because the AI transition of the economy is evidently an accelerator and a risk factor in the Moloch model, and therefore these domains (Moloch and AI safety) almost merge in my overall model of risk. Cf. Scott Aaronson's reasoning that AI will inevitably be in the causal structure of any outcome of this century, so "P(doom from AI)" is not well defined; I agree with him and only think about "P(doom)" without specifying what this doom "comes from". Again, note that most narratives about possible good outcomes (take OpenAI's superalignment plan, Conjecture's CoEm agenda, OAA, Gaia Network) seem to rely on developing very advanced (if not superhuman) AI along the way.

Notice here again: you mention that most scientists don't know about Bayesian methods, but perhaps at least two orders of magnitude fewer scientists have even heard of Infra-Bayesianism, let alone been convinced that it's a sound and necessary methodology for doing science. Whereas for Bayesianism, from my perspective, there seems to be quite a broad consensus about its soundness: there are numerous pieces and even books written about how P-values are a bullshit way of doing science and that scientists should take up (Bayesian) causal inference instead.

There are a few notable voices that dismiss Bayesian inference, for example, David Deutsch, but then no less notable voices, such as Scott Aaronson and Sean Carroll (of the people that I've heard, anyway), that dismiss Deutsch's dismissal in turn.

I am, but OAA also seems less specific, and it's harder to evaluate its feasibility compared to something more concrete (like this proposal).

My problem with this is that it sounds good, but this argument relies on many hidden premises that make me inherently skeptical of any strong claims like “(…) the benefits of collaborative inference of the most accurate and well-regularised models will be so huge that something like Gaia Network will emerge pretty much 'by default'”.

I think this could be addressed by a convincing MVP, and I think that you're working on that, so I won't push further on this point.

The current best proposals for compute governance rely on very specific types of math. I don't think throwing blockchain or DAOs at the problem makes a lot of sense, unless you find an instance of the very specific set of problems they're good at solving.

My priors against the crypto world come mostly from noticing a lot of people throwing tools at problems without a clear story of how these tools actually solve the problem. This has happened so many times that I have come to generally distrust crypto/blockchain proposals unless they give me a clear explanation of why using these technologies makes sense.

But I think the point I made here was kinda weak anyway (it was, at best, discrediting by association), so I don't think it makes sense to litigate this particular point.

I find this decently convincing, actually. Like, maybe, I'm pattern matching too much on other projects which have in the past done something similar (just lightly rebranding themselves while tackling a completely different problem).

Overall, I still don't feel very good about the overall feasibility of this project, but I think you were right to push back on some of my counterarguments here.

Thanks @Agustín Covarrubias. Glad to hear that you feel this is concrete enough to be critiqued and cross-validated; that was exactly our goal in writing and posting this. From your latest responses, it seems like the main reason why you "still don't feel very good about the overall feasibility of this project" is the lack of a "convincing MVP", is that right? We are indeed working on this along a few different lines, so I would be curious to understand what kind of evidence from an MVP it would take to convince you or shift your opinion about feasibility.