Edit 1/29: Funding is back, baby!

Crossposted from my blog.

(This could end up being the most important thing I’ve ever written. Please like and restack it—if you have a big blog, please write about it).

A mother holds her sick baby to her chest. She knows he doesn’t have long to live. She hears him coughing—those body-wracking coughs—that expel mucus and phlegm, leaving him desperately gasping for air. He is just a few months old. And yet that’s how old he will be when he dies.

The aforementioned scene is likely to become increasingly common in the coming years. Fortunately, there is still hope.

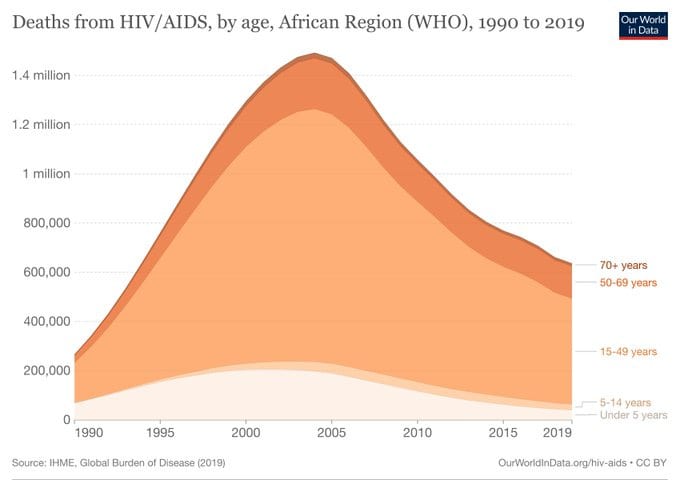

Trump recently signed an executive order shutting off almost all foreign aid. Most terrifyingly, this included shutting off the PEPFAR program, the single most successful foreign aid program in my lifetime. PEPFAR provides treatment and prevention of HIV and AIDS. It has saved about 25 million lives since its launch in 2003, despite taking less than 0.1% of the federal budget. Every single day that it is operative, PEPFAR supports:

> * More than 222,000 people on treatment in the program collecting ARVs to stay healthy;

> * More than 224,000 HIV tests, newly diagnosing 4,374 people with HIV – 10% of whom are pregnant women attending antenatal clinic visits;

> * Services for 17,695 orphans and vulnerable children impacted by HIV;

> * 7,163 cervical cancer screenings, newly diagnosing 363 women with cervical cancer or pre-cancerous lesions, and treating 324 women with positive cervical cancer results;

> * Care and support for 3,618 women experiencing gender-based violence, including 779 women who experienced sexual violence.

The most important thing PEPFAR does is provide life-saving anti-retroviral treatments to millions of victims of HIV. More than 20 million people living with HIV globally depend on daily anti-retrovirals, including over half a million children. These children, facing a deadly illness in desperately poor countries, are now going

warning - mildly spicy take

In the wake of the release, I was a bit perplexed by how much of Tech Twitter (answered my own question there) really thought this was a major advance.

But in actuality a lot of the demo was, shall we say, not consistently candid about Gemini's capabilities (see here for discussion and here for the original).

At the moment, all Google have released is a model inferior to GPT-4 (though the multi-modality does look cool), and have dropped an I.O.U for a totally-superior-model-trust-me-bro to come out some time next year.

Previously some AI risk people confidently thought that Gemini would be substantially superior to GPT-4. As of this year, it's clearly not. Some EAs were not sceptical enough of a for-profit company hosting a product announcement dressed up as a technical demo and report.

There have been a couple of other cases of this overhype recently, notably 'AGI has been achieved internally' and 'What did Ilya see?!!?!?', where people jumped to assuming a massive jump in capability on the back of very little evidence, when in actuality there hasn't been one. That should set off warning flags about 'epistemics' tbh.

On the 'Benchmarks' - I think most 'Benchmarks' that LLMs use, while they contain some signal, are mostly noisy due to the significant issue of data contamination (papers like The Reversal Curse indicate this imo), and that since LLMs don't think as humans do we shouldn't be testing them in similar ways. Here are two recent papers - one from Melanie Mitchell, one about LLMs failing to abstract and generalise, and another by Jones & Bergen[1] from UC San Diego actually empirically performing the Turing Test with LLMs (the results will shock you).

I think this announcement should make people think near term AGI, and thus AIXR, is less likely. To me this is what a relatively continuous takeoff world looks like, if there's a take off at all. If Google had announced and proved a massive leap forward, then people would have shrunk their timelines even further. So why, given this was a PR-fueled disappointment, should we not update in the opposite direction?

Finally, to get on my favourite soapbox, dunking on the Metaculus 'Weakly General AGI' forecast:

tl;dr - Gemini release is disappointing. Below many people's expectations of its performance. Should downgrade future expectations. Near term AGI takeoff v unlikely. Update downwards on AI risk (YMMV).

[1] I originally thought this was a paper by Mitchell. This was a quick system-1 take that was incorrect, and I apologise to Jones and Bergen.

Thanks for the response!

A few quick responses:

Good to know! That certainly changes my view of whether or not this will happen soon, but also makes me think the resolution criteria are poor.

You might be interested in the recent OpenPhil RFP on benchmarks and forecasting.
