All models are wrong, but some are useful.

George Box

Likewise, most long-term forecasts are wrong, but the process is useful.

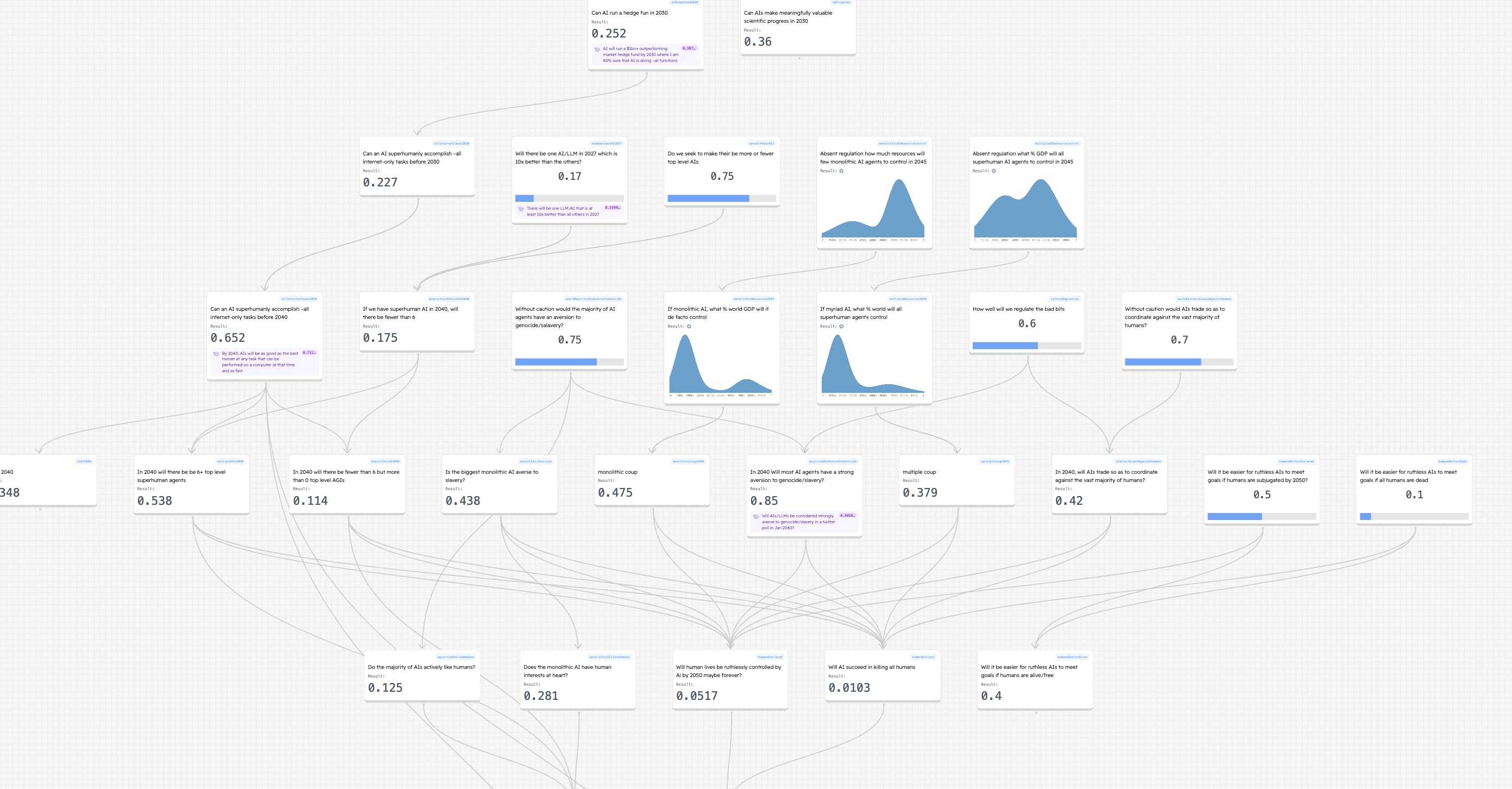

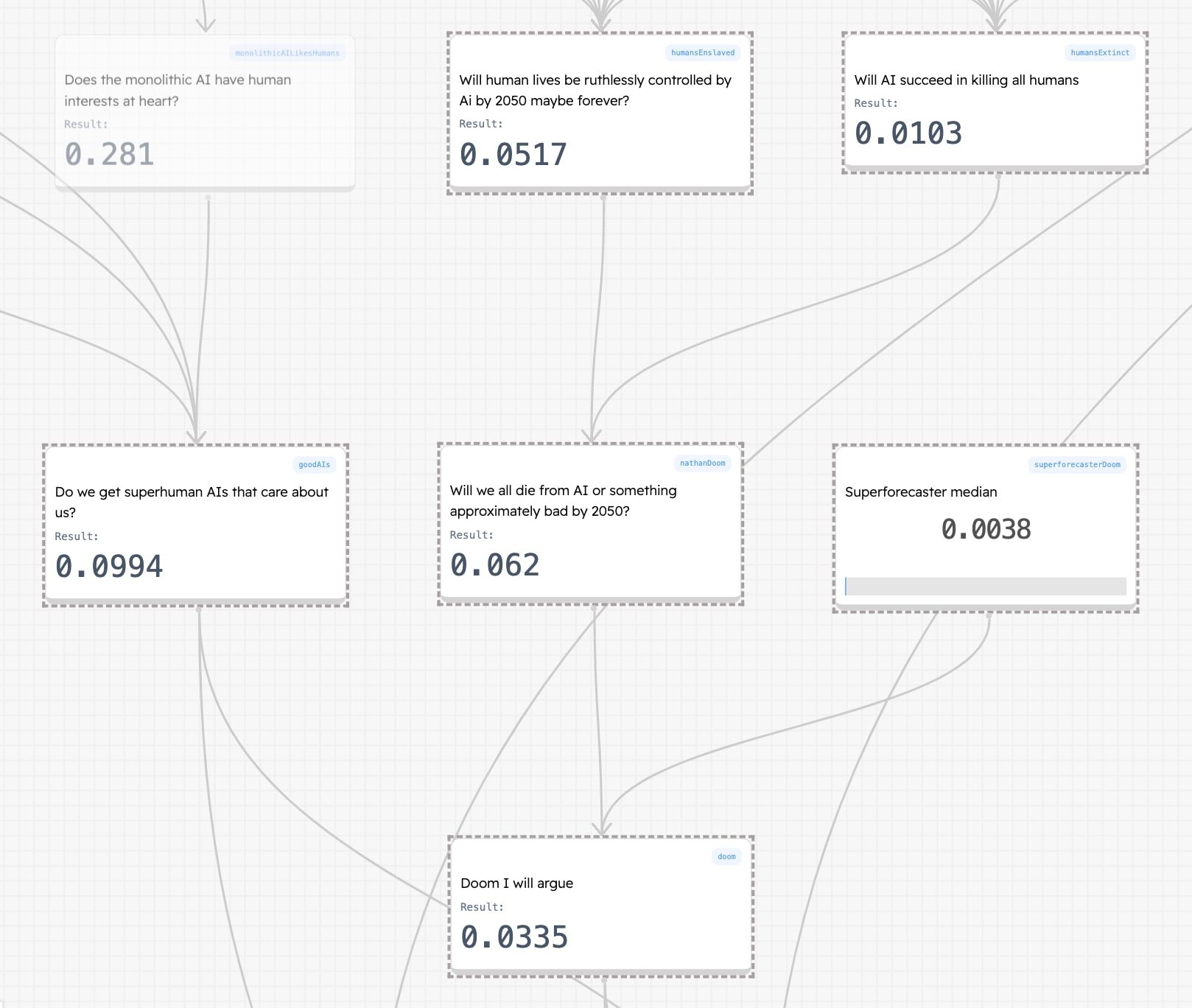

Summary

- I have built a large model around AI risk/reward

- I'm looking for correction

- Numbers that I’d like checked for errors:

- AI to run all functions of a hedge fund in 2030 - 20%

- AI can do all tasks a human can do using the internet (and a human using AI tools) to the standard of the best humans by 2040 - 65%

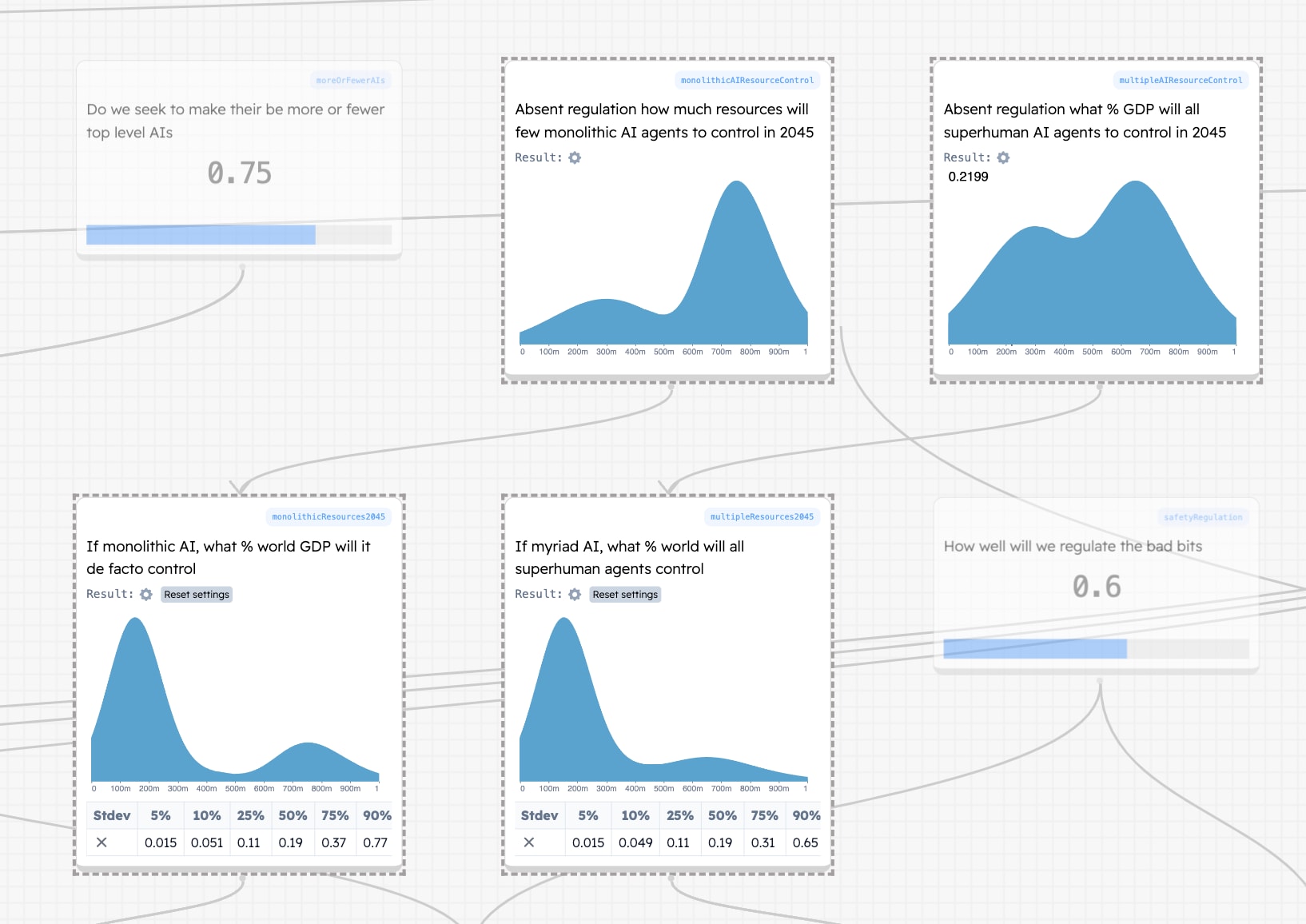

- If there is AI, the best agents (10x better than the closest) will be few - 18%, or otherwise there will be many AI agents - 82%

- In 2040 a single AGI de facto controls the majority of world GDP - if few AIs: median 30% GDP control, if many: median 26% GDP control

- AI agents are averse to genocide/slavery - 85%

- AIs trade much better with each other than humans - 40%

- Better for AI goals if humans all dead or enslaved - 60%

- I have headline values for doom/victory but I don't think you should take them seriously, so they are at the bottom

- Is this work valuable? This is not my job. I did this in about 2 days. Was it valuable to you? Should I do more of it?

- I'm gonna make a twitter thread/tiktok

Video

I recommend watching this video:

3 min loom video (watch at 1.5 speed)

There is a model here[1]. Tell me what you think of the tool.

Explanations of the argument step by step

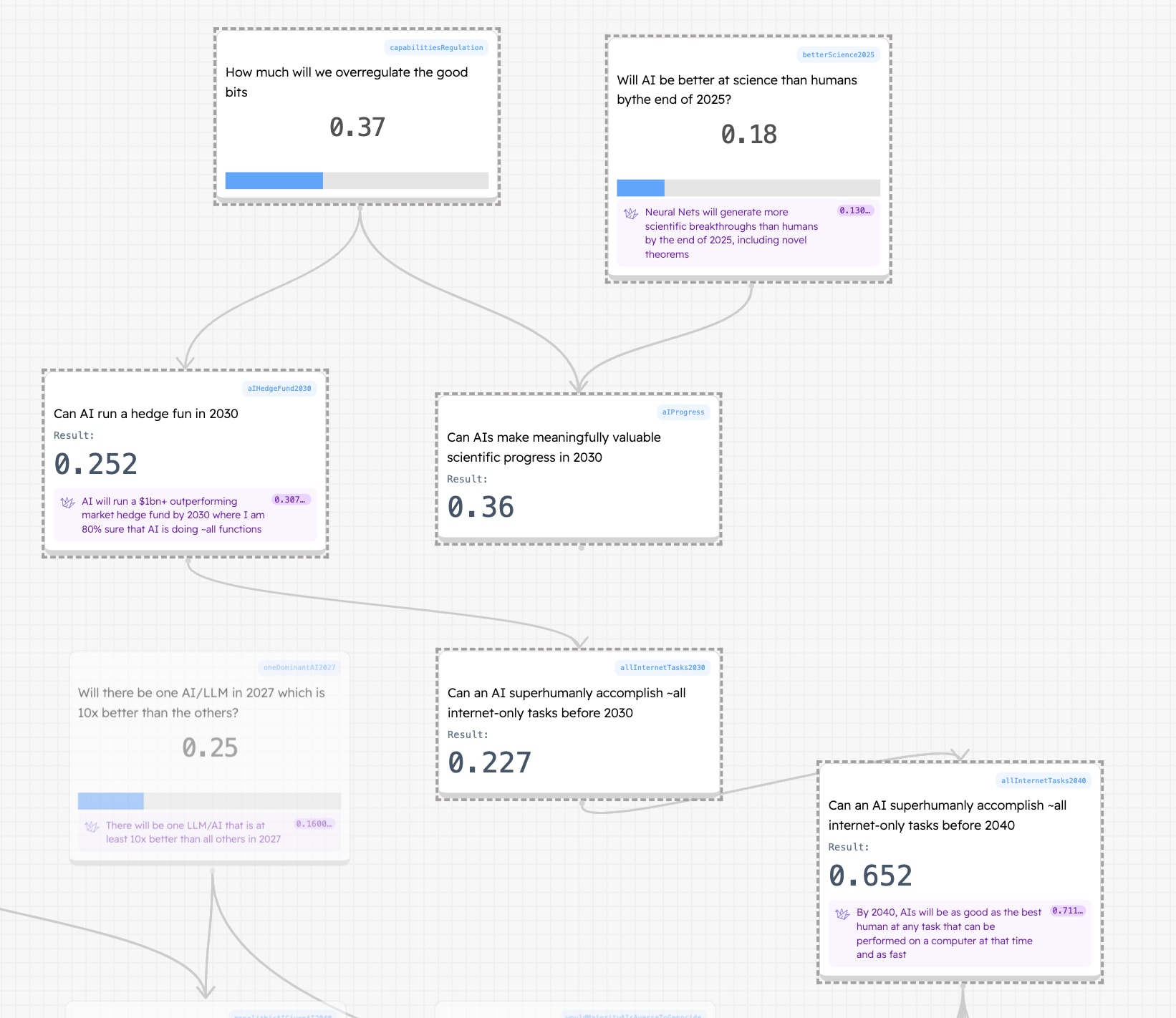

Will we get internet Superhuman AI by 2040? Maybe (65%)

- Maybe we get AI that can do any task on a computer as well as the best humans, at the same speed or faster. This will mean far more scale and likely far more speed (market)

- Things that affect this

- If AIs are better than humans at science in 2025 (market). It seems likely that if you can make novel discoveries then you can do a lot of other stuff.[2]

- If AI is fully running a hedge fund in 2030 (https://manifold.markets/NathanpmYoung/ai-will-run-a-1bn-outperforming-mar). It seems clear to me that a $1bn profitable market neutral hedge fund covers much of what we mean by an AGI. Making decisions, finding valuable insights, interacting with human processes at scale.

- If it turns out that complex tasks require one-shot AIs. I have tried to pull this out with the notion of sub agents. If a hedge fund AI isn’t calling sub agents to do stuff for it, it feels unlikely it could do complex general tasks. Maybe it’s just really good at some narrow kind of prediction.

- Heavy regulation - perhaps it becomes illegal to train for certain capabilities and so there aren’t hedge fund or generally superhuman AIs

- Can you think of others?

If we get superhuman AI how will resources be controlled?

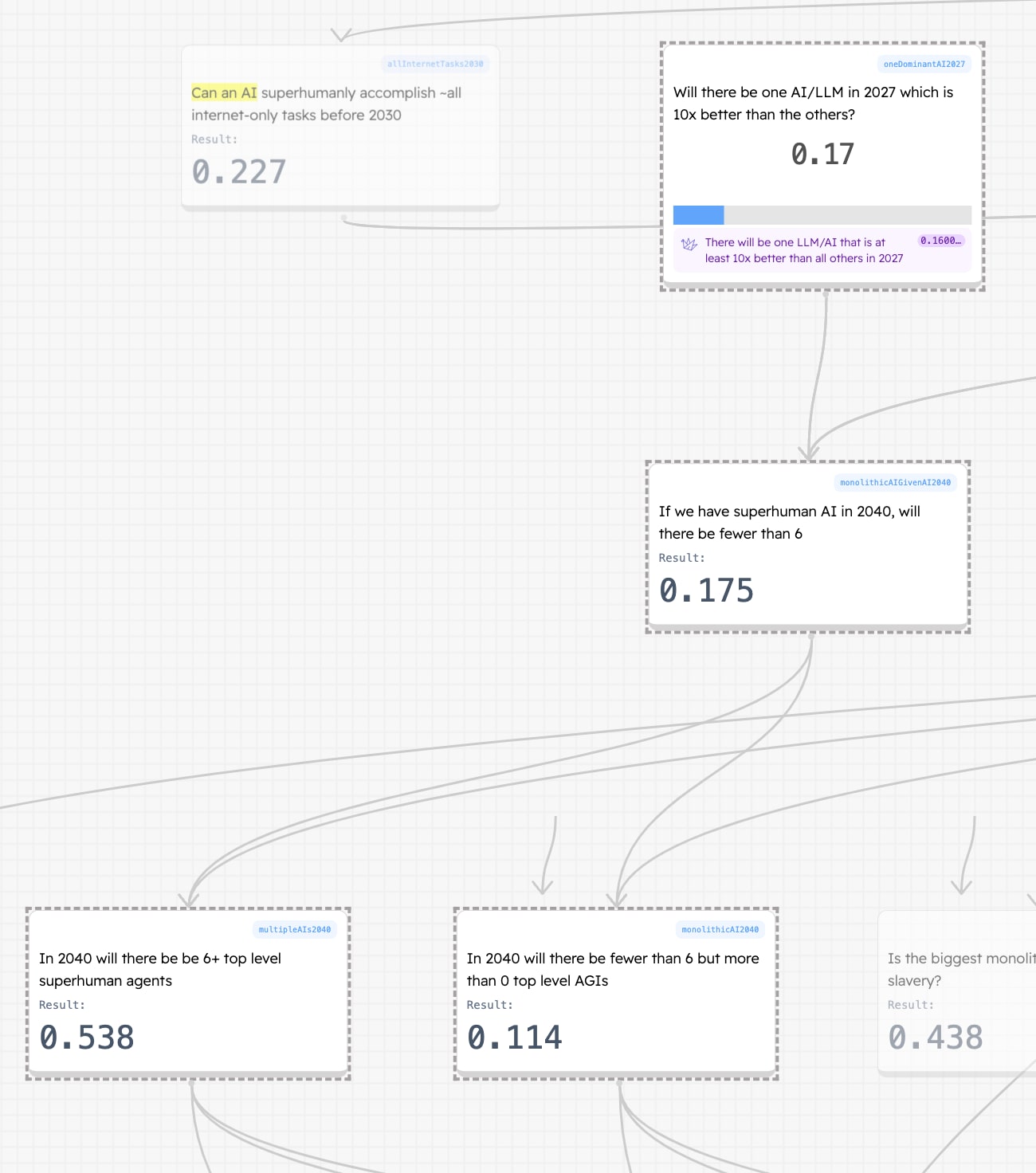

- If (as above) we have something we’d call AGI then do we live in a world with many top level agents or few? By top level I mean “how many agents are 10x better than all other agents and humans?”

- Monolithic agents (1-5) - There are few agents that are best. Not a few models, a few agents - 19%

- Myriad agents (6+)[3] - We live in a big soupy world of many AI agents - 81%

- Things that affect this.

- If in 2027 there is already one LLM model that is waaay more competent (10x) than all the others (market). If this is already the case in 2027 it seems more plausible that it will be later

- How able are LLMs to improve themselves? If very, then <6 seems likely: a few models outstrip all the others. To me it seems AI improvement is the alignment problem again. So I think that many agents is more likely than a very small number - rapid self improvement while maintaining the same identity will be hard.

What are the economic factors? Do these incentivise the creation of ever more agentic or intelligent models? I sense that ever more agentic models aren’t necessarily most profitable. You want someone to execute tasks, not replan your whole life (Katja Grace made this point[4])

What does regulation achieve here? Is this pushed one way or the other?

What other markets could we currently have here?

How will the resources be distributed in this case?

- If the most powerful agents are few, I guess they will control most of the resources.

- I guess this is because having fewer agents to me suggests that you can’t make more - that for some reason they are either suppressing others or are accelerating ever faster. It seems that resources are more likely to be out of human control

- If there are many (6+) top level agents then I am really pretty uncertain.

- Here it feels like agents might be little more powerful than humans, or there might be a really wide spread of agents

Things that affect this

- Better regulation. We might stop agents controlling resources directly. I don’t really know what this means, so feel free to challenge on it

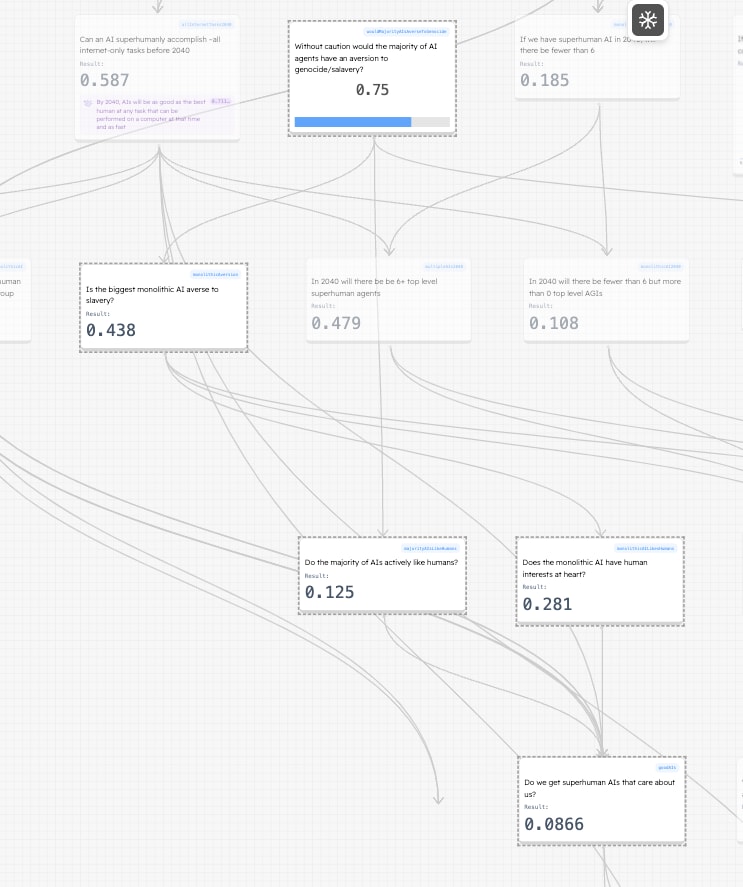

Will AIs be very good or very bad?

- Will AI agents avoid plans that involve slavery/genocide? This seems important because the reason we don’t do these things is that they are repulsive to us. They don’t feature in our plans, even if they might make the plans more likely to succeed. Will this be the case for AIs?

- Monolithic - 45%

- Myriad - 85%

- What affects this

- Without caution I still think they probably will have this aversion

- My sense so far is that LLMs have pretty human preferences. They need to be adversarially prompted to be otherwise. It is not clear to me that more intelligence pushes away from this. Now I’m not remotely confident enough, but I think the *evidence* against this is just “ruthless things would be more ruthless”, and I think we should update against that. LLMs so far are not that ruthless

- Companies do not want AGIs that occasionally express preferences for genocide. This is against their bottom line

- With regulation I think this problem becomes better. I think companies will be well incentivised to ensure that agents dislike awful answers like slavery or genocide

- I sense that monolithic AIs are just much worse for this. Feels like a much more alien world in which the most powerful AIs are way more powerful than all the others. Feels like they might be more alien/ruthless

- My sense is that most of us think that if AGI doesn’t go badly it will go really well. But we do not say this often enough.

- How likely are AGIs to, on average, really want good things for us? These numbers are pretty uncertain, even for me

- Monolithic - 28%

- Myriad - 13%

- What affects this?

- If monolithic AI is not bad, it seems more likely to me to be really good, because it is more like a single agent

- I think it’s pretty unlikely that myriad AI is good as such. There is so much of it and it all has its own little goals. I don’t buy it.
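The conditional estimates above can be folded into a single number per question with the law of total probability. This is a minimal sketch in Python, not the Squiggle model behind the tool. It assumes the 19%/81% monolithic/myriad split from the earlier section, and the name `marginal` is made up for illustration.

```python
# Weights for the two worlds, taken from the monolithic/myriad estimates above.
P_WORLD = {"monolithic": 0.19, "myriad": 0.81}

# Conditional estimates from this section.
P_AVERSE = {"monolithic": 0.45, "myriad": 0.85}      # avoid slavery/genocide plans
P_BENEVOLENT = {"monolithic": 0.28, "myriad": 0.13}  # really want good things for us


def marginal(conditional, world=P_WORLD):
    """Law of total probability: sum over worlds of P(world) * P(event | world)."""
    return sum(world[w] * conditional[w] for w in world)


print(f"P(averse to slavery/genocide) = {marginal(P_AVERSE):.3f}")
print(f"P(really want good things)    = {marginal(P_BENEVOLENT):.3f}")
```

With these inputs the blended figures come out at roughly 77% for aversion and roughly 16% for really wanting good things. Because the myriad world carries most of the weight, both land close to the myriad-only numbers.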

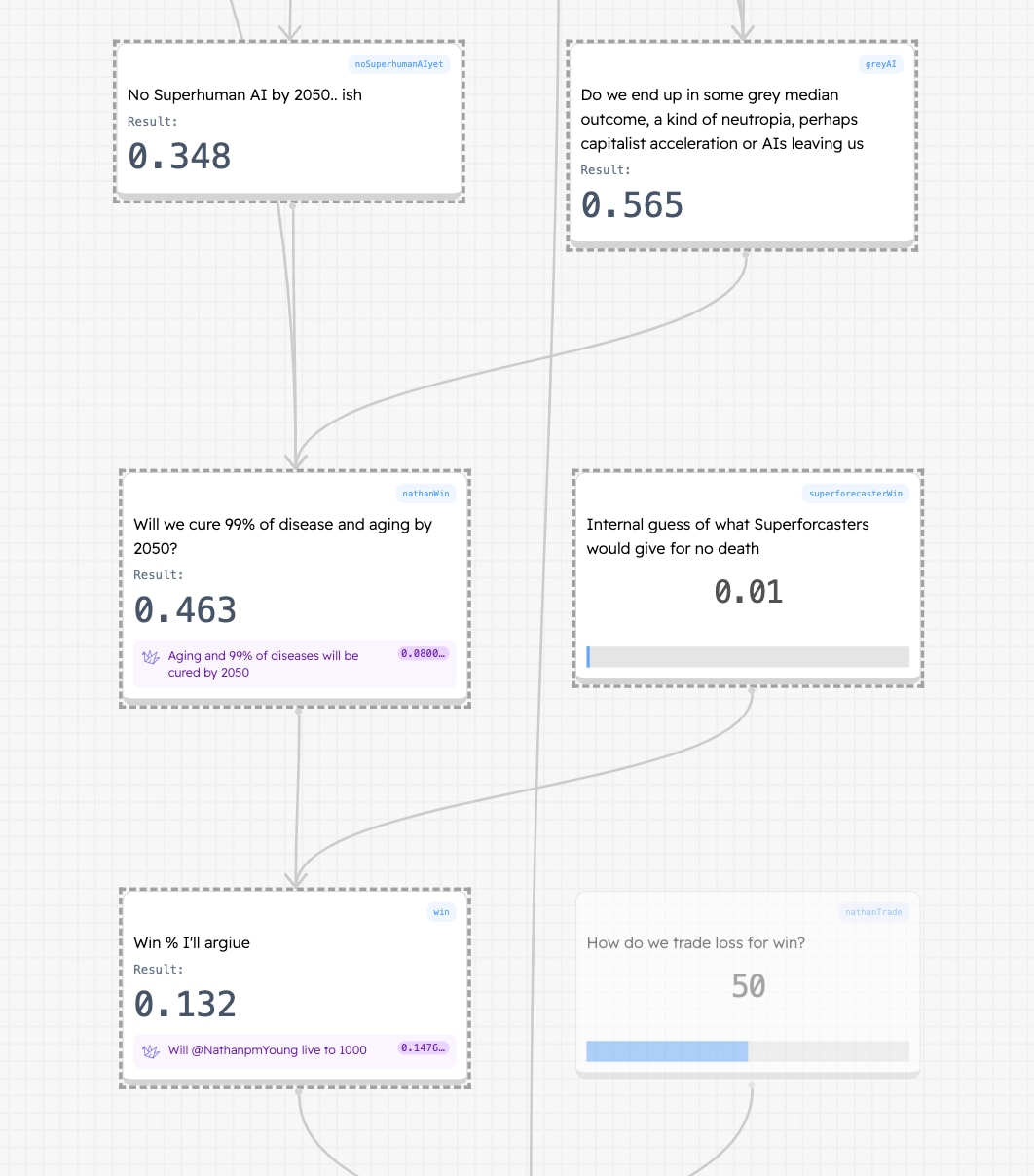

Interlude: Do we end aging by 2050?

- Aging post ~25 is bad

- A nice example of a win is whether we can cure aging and ~all disease

- I think I’ll say 13%. Most of this comes from my “neutopia” outcome, where we somehow end in neither disaster nor ultimate success. This world still has AIs and therefore increased technology but they’re neither like us nor want to enslave us. The forecaster in me says this occupies a big part of the distribution

- My bodge factor. I don’t like numbers that I feel off with, but also I don’t like hiding that I’m doing that. I think the superforecasters aren’t gonna give more than 1% chance of ending aging. Take that with my 43% and we end up at 13%

- Will I live to 1000 (market)

- I think there is a good discussion here about tradeoffs. The model isn’t really robust to what risk = what reward, but I think a better version could be. I think that slowing AI does push back the date at which we end aging. Ideally I hope we can find a way to cut risk but keep benefit.

- What current markets could I create for this? Maybe something about protein folding?
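How the 43% and the superforecasters' 1% combine into 13% in the bodge factor above isn't spelled out. As a sketch, assuming a simple weighted average (an assumption, not necessarily the method used), the weight implied on the 43% can be backed out. The function name `implied_weight` is hypothetical.

```python
def implied_weight(own, other, blended):
    """Solve blended = w * own + (1 - w) * other for w (linear pooling assumed)."""
    return (blended - other) / (own - other)


# 43% is the model's number, 1% the assumed superforecaster ceiling, 13% the final answer.
w = implied_weight(own=0.43, other=0.01, blended=0.13)
print(f"weight on own estimate: {w:.2f}")
```

Under that reading, the final 13% puts a bit under a third of the weight on the model's own number and the rest on the superforecaster figure.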

And now a word from our sponsors

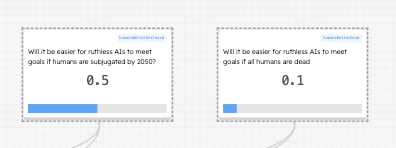

Will AIs have plans that are benefited by us being enslaved or dead?

- Enslaved - 50%. It seems clear to me that AIs might find us useful, or might not care enough to kill us all but still not want us getting in the way

- Dead - 10%. There seems to be some chance that the best way to keep us out of the way is to kill us all

- What affects this

- I can’t really think of good arguments here.

- 90% seemed too high, but I could be pushed up

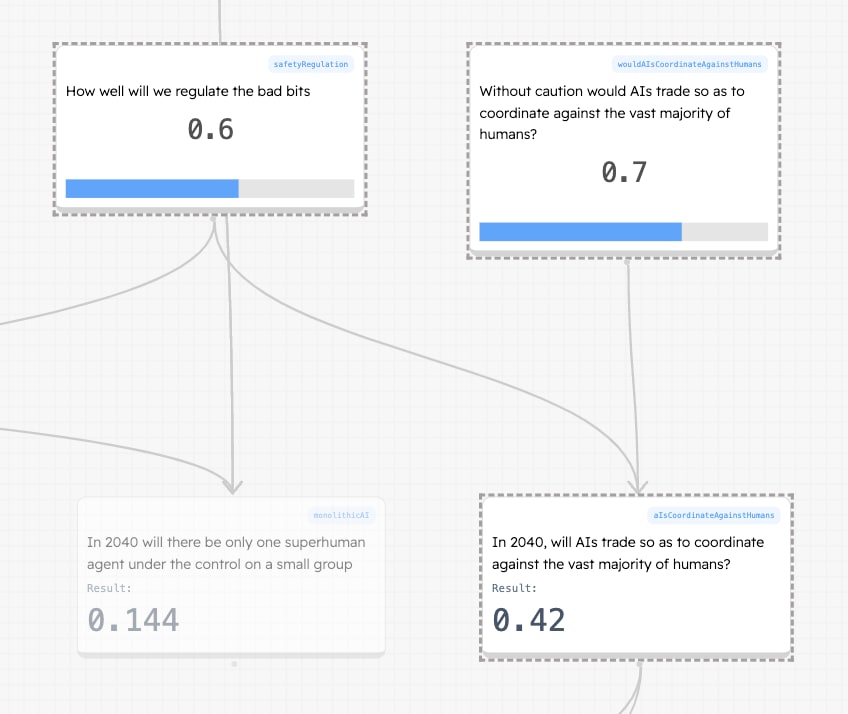

- If there are many AIs will they coordinate against humans? Maybe 42%

- If there are many AI agents, will they coordinate against humans so as to attempt coups? Maybe

- Things that affect this

- It seems natural to me that AI agents will be more similar and comprehensible to one another than to us so will make better allies with each other than with us

- Regulation of AI to AI communication channels

- Will they kill or enslave us by 2050? 3%

- Will they have resources, want to kill or enslave us, be able to coordinate if necessary and run a successful coup? 3%

- Things that affect this

- All of the above. Most notably

- I have pulled myself a bit towards the superforecaster median because that usually does good things for my forecasts

- Will AI be easier than I think

- Will AIs hold resources more than I think

- Will they be more ruthless than I think

- Will we end up in Monolithic world

- Are coups easier than I think?

Broad Points

Monolithic Vs Myriad

It seems pretty important whether we are heading towards a world where there are a few AIs that control almost everything controlled by AIs or many. This isn’t my insight, but the latter case seems safer because AIs can be a check on one another.

Weakest link vs strongest

AIs still have to get other AIs to do stuff for them and for any awful action there will likely be a chain of AIs which need to do it. So whereas many seem to think you only need one bad AI for stuff to go wrong, I think you only need one good AI for stuff to go okay! Only one AI needs to report that it’s involved in illegal activity and then the whole scheme breaks. This is doubly so for things which involve coding, which is notoriously brittle and where LLMs will not have lots of training data - there just aren’t that many large public repositories to crawl, I think.

In this case, it’s not a weakest link problem, it’s a strongest link. Rather than any AI being bad, you only need one to be good. This seems pretty encouraging.
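A rough way to see the strongest-link point: if a scheme needs a chain of n AIs and each one independently reports it with probability p, the scheme stays hidden only if none of them reports. The sketch below assumes independence and uses made-up values for p and n; neither number comes from the model, and `p_scheme_exposed` is a hypothetical name.

```python
def p_scheme_exposed(p_report, chain_length):
    """Chance that at least one AI in the chain reports, assuming independence."""
    return 1 - (1 - p_report) ** chain_length


# Hypothetical input: each AI has a 10% chance of whistleblowing.
for n in (1, 5, 10, 20):
    print(n, round(p_scheme_exposed(0.10, n), 3))
```

Even a modest per-agent chance of reporting compounds quickly as the chain grows. The independence assumption is doing a lot of work here: if the AIs in the chain are highly correlated, the benefit shrinks.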

P(Doom) is unhelpful

Christ Jesus came into the world to save sinners, of whom I am the foremost.

I am one of the worst offenders for talking about P(doom) but writing this has made me think how poor a practice it is. Rather than comparing things we are likely to be able to predict and might usefully disagree on, we end up arguing over a made-up number.

I think it's much more productive to talk about the next 3 or so years and try and get a picture of that. Because honestly, that too is likely to be flawed, but at least we have a hope of doing it accurately. Forecasts outside of 3 years are terrible.

Key Levers

Things that seem worth investigating

- If you have rapid takeoff you might want only 1 company working, BUT if you have gradual takeoff you might want many AIs of a similar level

- Make AIs want to whistleblow. Train AIs to report to channels (or each other) if they suspect they are involved in malicious activity.

- Avoid AIs easily being able to control large amounts of resources. Require humans to be involved in transactions above a certain size

Conclusion

- Where do you think I am wrong?

- Was this valuable? Would you have paid for this?

- You probably can understand the model and will have a fun time reading it here

This is genius.

It's not my idea. Someone mentioned it to me.

Nice work, Nathan!

Cool app!

Are you pulling data from Manifold at all or is the backend "just" a squiggle model? If the latter, did you embed the markets by hand or are you automating it by searching the node text on Manifold and pulling the first market that pops up or something like that?

The backend is a squiggle model with manifold slugs in the comments.

I embedded the markets by hand, but we have built a GPT node creator, it's just pretty bad. I think maybe we will put automatic search on titles and then it can suggest manifold (and metaculus) nodes and you get the options to choose.

If there are features that would make you use this, let me know, it took like a week of dev time so isn't hard to change.

Likewise I guess I'll try and interview different researchers and build their models too, perhaps throw up a website for it.