We invest heavily in fellowships, but do we know where people go afterwards and what impact the fellowships have? To begin answering this, I manually analyzed over 600 alumni profiles from 9 major late-stage fellowships (fellowships that I believe could lead directly into a job). These profiles represent current participants and alumni from MATS, GovAI, ERA, Pivotal, Talos Network, Tarbell, Apart Labs, IAPS, and PIBBS.

Executive Summary

- I’ve compiled a dataset of over 600 alumni profiles from 9 major 'late-stage' AI Safety and Governance fellowships.

- I found that over 10% of fellows went on to do another fellowship afterwards. This doesn’t feel enormously efficient.

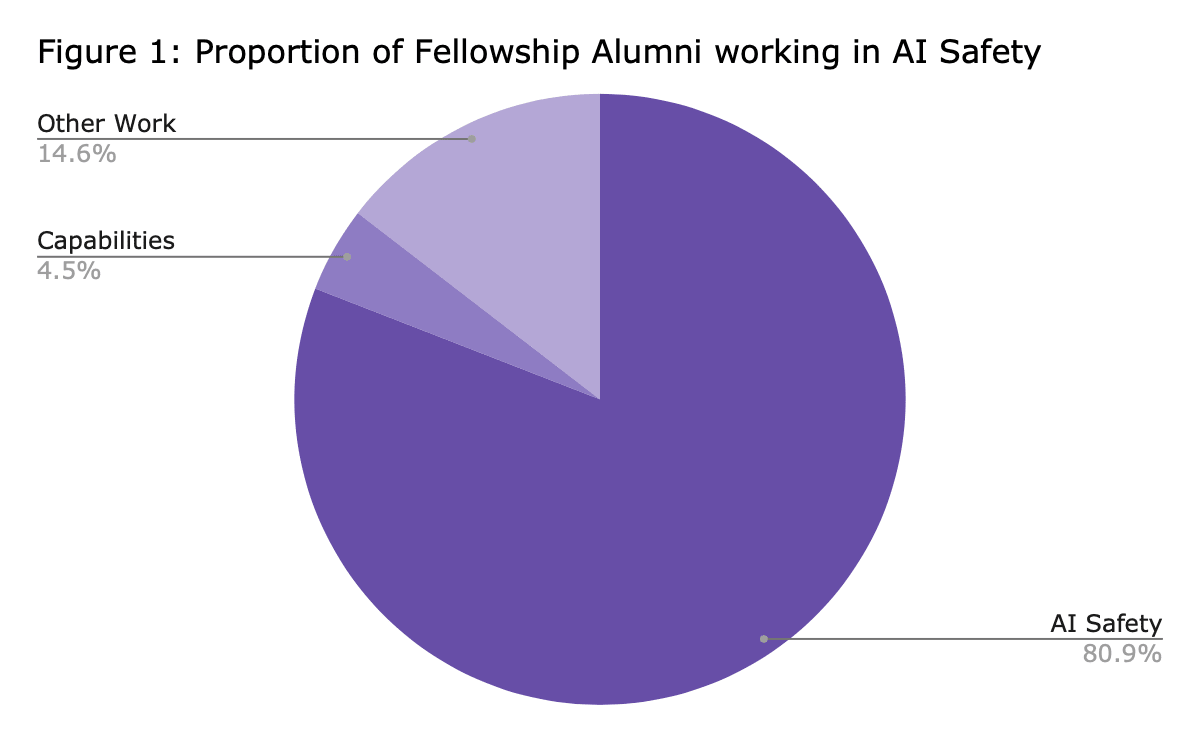

- According to preliminary results, around 80% of alumni are still working in AI Safety, though this is a directional signal rather than a conclusion.

- I'm actively looking for collaborators/mentors to analyse counterfactual impact.

Key Insights from the Mini-Project

Across the target fellowships I looked at, 21.5% of fellows (139) did at least one other fellowship in addition to their target fellowship: 12.4% (80) had done a fellowship before it and 11.1% (72) did one after.

Since these fellowships are all 'late-stage', and none of them is designed to be much more senior than the others, I find it quite surprising that over 10% of alumni go on to do another fellowship after the target fellowship.

I also find it surprising that only 12.4% of fellows had done an AI Safety fellowship beforehand, only slightly more than the 11.1% who did one afterwards. This suggests that fellowships mostly draw people from outside the ‘standard fellowship stream’.
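As a quick sanity check, the sketch below recomputes these headline percentages from the raw counts in the Total row. It also uses inclusion-exclusion to estimate how many fellows did a fellowship both before and after, which rests on an assumption of mine: that the 139 are exactly the people who did one before, after, or both. The `pct` helper and constant names are illustrative only.

```python
# Headline counts from the Total row of the table (647 alumni profiles).
TOTAL_ALUMNI = 647
ANY_OTHER = 139  # did at least one other fellowship, before and/or after
BEFORE = 80      # had done a fellowship before the target fellowship
AFTER = 72       # did a fellowship after the target fellowship


def pct(count: int, total: int) -> float:
    """Percentage of `total`, rounded to one decimal place."""
    return round(100 * count / total, 1)


# Inclusion-exclusion: |before OR after| = |before| + |after| - |before AND after|.
# Assumption: ANY_OTHER is exactly the "before or after" group.
both = BEFORE + AFTER - ANY_OTHER

print(pct(ANY_OTHER, TOTAL_ALUMNI), pct(BEFORE, TOTAL_ALUMNI), pct(AFTER, TOTAL_ALUMNI))
print(f"{both} fellows ({pct(both, TOTAL_ALUMNI)}%) did a fellowship both before and after")
```

Under that assumption, 13 fellows (about 2% of the total) did a fellowship on both sides of their target fellowship, which is why 80 + 72 exceeds 139.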

Individual fellowships

Whilst most fellowships stayed close to the average, there were some notable trends:

Firstly, 20.2% (17) of ERA fellows did a fellowship after ERA, whilst only 9.5% (8) had done one before. This suggests ERA may function as an earlier-stage, 'feeder' fellowship compared with the others. I expect this will surprise people, since ERA is as prestigious and competitive as most of the others.

Secondly, MATS showed the opposite pattern: 15.1% (33) had done a fellowship before and only 6.9% (15) did one after. This is unsurprising, as MATS is often seen as one of the most prestigious AI Safety fellowships.

Thirdly, 32.3% of Talos Network fellows did another fellowship before or after Talos, much higher than the 21.5% average. This suggests Talos is more enmeshed in the fellowship ecosystem than the others.

| Fellowship | Alumni | Alumni who did another fellowship | Percentage who did another fellowship | Alumni who did a fellowship before | Percentage before | Alumni who did a fellowship after | Percentage after |
|---|---|---|---|---|---|---|---|
| Total | 647 | 139 | 21.5% | 80 | 12.4% | 72 | 11.1% |
| MATS | 218 | 45 | 20.6% | 33 | 15.1% | 15 | 6.9% |
| GovAI | 118 | 24 | 20.3% | 15 | 12.7% | 12 | 10.2% |
| ERA | 84 | 25 | 29.8% | 8 | 9.5% | 17 | 20.2% |
| Pivotal | 67 | 17 | 25.4% | 8 | 11.9% | 10 | 14.9% |
| Talos | 62 | 20 | 32.3% | 11 | 17.7% | 12 | 19.4% |
| Apart | 52 | 11 | 21.2% | 6 | 11.5% | 9 | 17.3% |
| PIBBS | 31 | 8 | 25.8% | 5 | 16.1% | 3 | 9.7% |
| Tarbell | 21 | 1 | 4.8% | 1 | 4.8% | 0 | 0.0% |
| IAPS | 12 | 4 | 33.3% | 4 | 33.3% | 0 | 0.0% |
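The sketch below recomputes the percentage columns from the raw counts and adds a crude 'feeder' signal (percentage after minus percentage before), which is the comparison the ERA and MATS paragraphs make informally. The `feeder_gap` metric and helper names are my own illustration, not part of the original analysis. One thing it surfaces: the per-fellowship alumni counts sum to 665, more than the 647 in the Total row. Presumably some people appear under more than one target fellowship and are counted once in the Total row, but that is an inference.

```python
# Per-fellowship counts copied from the table:
# (alumni, did another fellowship, did one before, did one after)
ROWS = {
    "MATS": (218, 45, 33, 15),
    "GovAI": (118, 24, 15, 12),
    "ERA": (84, 25, 8, 17),
    "Pivotal": (67, 17, 8, 10),
    "Talos": (62, 20, 11, 12),
    "Apart": (52, 11, 6, 9),
    "PIBBS": (31, 8, 5, 3),
    "Tarbell": (21, 1, 1, 0),
    "IAPS": (12, 4, 4, 0),
}


def pct(count: int, total: int) -> float:
    """Percentage of `total`, rounded to one decimal place as in the table."""
    return round(100 * count / total, 1)


def summarise(rows: dict) -> dict:
    """Recompute the percentage columns for each fellowship.

    `feeder_gap` is an illustrative metric (not from the original analysis):
    percentage after minus percentage before. Positive values mean more
    fellows moved on to another fellowship than arrived from one.
    """
    out = {}
    for name, (alumni, other, before, after) in rows.items():
        out[name] = {
            "pct_other": pct(other, alumni),
            "pct_before": pct(before, alumni),
            "pct_after": pct(after, alumni),
            "feeder_gap": round(pct(after, alumni) - pct(before, alumni), 1),
        }
    return out


stats = summarise(ROWS)
for name, s in sorted(stats.items(), key=lambda kv: kv[1]["feeder_gap"], reverse=True):
    print(f"{name:8} after-before gap: {s['feeder_gap']:+.1f} pts")

# Sum of per-fellowship alumni, to compare with the Total row's 647.
print(sum(alumni for alumni, *_ in ROWS.values()))
```

ERA comes out with a clearly positive gap and MATS with a clearly negative one, matching the two observations above. With such small counts for Tarbell and IAPS, their gaps should not be read as meaningful.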

Links between fellowships

On the technical side, I found very strong links between MATS and SPAR, AI Safety Camp and ARENA: 13, 9 and 7 fellows respectively had moved directly between MATS and the other fellowship (in either direction), which is unsurprising.

Perhaps more surprisingly, on the governance side I found equally strong links between GovAI and ERA, IAPS and Talos, with 13, 9 and 7 links respectively. This is especially surprising because each of these fellowships has at most around half as many alumni profiles as MATS in this dataset (GovAI 118, ERA 84, Talos 62 and IAPS 12, versus 218 for MATS).

Strongest bidirectional links between fellowships:

| Fellowships | Number of links |
|---|---|
| MATS x SPAR | 13 |
| GovAI x ERA | 13 |
| MATS x AI Safety Camp | 9 |
| GovAI x IAPS | 9 |
| MATS x ARENA | 7 |
| GovAI x Talos | 7 |
| MATS x ERA | 6 |
| Apart x SPAR | 5 |
| GovAI x Pivotal | 4 |
| MATS x Talos | 4 |
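Link counts like these could be produced from per-fellow histories along the following lines. This is a minimal sketch, assuming each fellow's fellowships are available as a chronologically ordered list; the `histories` data and the `count_bidirectional_links` name are hypothetical, and "moved directly between" is interpreted as two consecutive entries in a fellow's history.

```python
from collections import Counter

# Hypothetical input: each fellow's fellowships in chronological order.
# These histories are invented for illustration, not taken from the dataset.
histories = [
    ["SPAR", "MATS"],
    ["MATS", "SPAR"],
    ["ERA", "GovAI"],
    ["AI Safety Camp", "MATS", "ERA"],
]


def count_bidirectional_links(histories: list[list[str]]) -> Counter:
    """Count fellows who moved directly between each pair of fellowships.

    "Directly" is taken to mean consecutive entries in a fellow's history.
    Each pair is stored as an alphabetically sorted tuple, so A -> B and
    B -> A share one bucket, and each fellow counts at most once per pair.
    """
    links: Counter = Counter()
    for history in histories:
        pairs = {tuple(sorted((a, b))) for a, b in zip(history, history[1:]) if a != b}
        links.update(pairs)
    return links


for (a, b), n in count_bidirectional_links(histories).most_common():
    print(f"{a} x {b} | {n}")
```

Sorting each pair is what makes the counts bidirectional; dropping the `sorted` call would give directed flows instead, which is closer to what a Sankey diagram needs.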

For fun, I also put together a Sankey visualisation of these links. It’s a little janky, but I think it gives a nice view of the network. View the Sankey diagram here.

Preliminary Directional Signals: IRG Data

As part of the IRG project I took part in this summer (during which I built this database), I used the data to produce the following data points:

- Around 80% of fellowship alumni are now working in AI Safety. This puts the average fellowship in line with MATS in terms of retention rate, which is very encouraging.

- The majority of those working in AI Safety are now working in the non-profit sector.

However, these results were produced very quickly. They relied both on AI tools to extract data and on a manual, subjective judgement of whether someone worked in AI Safety. Whilst I expect they are in the right ballpark, treat them as directional rather than conclusive.

Notes on the Data

- Proportion of Alumni: This does not cover every alumnus of each fellowship, only those who posted their involvement on LinkedIn. I estimate this population represents ⅓ to ½ of all alumni.

- Choice of fellowships: The selection was somewhat arbitrary, focusing on 'late-stage fellowships' where we expect graduates to land roles in AI Safety.

- Seniority of Fellowships: Particularly for the link analysis, fellows are much less likely to post about less competitive, more junior fellowships on LinkedIn than about later-stage ones, so links to earlier-stage fellowships are probably undercounted.

- Fellowship Diversity: These programs vary significantly. ERA, Pivotal, MATS, GovAI, PIBBS, and IAPS are primarily research-focused, whereas Tarbell and Talos prioritize placements.

- Experience Levels: Some fellowships (like PIBBS, targeting PhDs) aim for experienced researchers, while others welcome newcomers. This disparity suggests an interesting area for future research: analyzing the specific "selection tastes" of different orgs.

- Scale: Sizes vary drastically; MATS has over 200 alumni profiles, while IAPS has 12.
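A back-of-the-envelope sketch of what the LinkedIn coverage estimate implies, assuming it applies to the 647 profiles in the Total row: the full alumni population would be roughly 1,300 to 1,950 people. This ignores any overlap between fellowships, and the `implied_total` helper is purely illustrative.

```python
PROFILES = 647  # alumni profiles found on LinkedIn (Total row)


def implied_total(profiles: int, coverage: float) -> int:
    """Total alumni implied if `profiles` is a `coverage` fraction of all alumni."""
    return round(profiles / coverage)


# Coverage estimate from the notes: between a half and a third of all alumni.
for coverage in (1 / 2, 1 / 3):
    print(f"coverage {coverage:.0%}: about {implied_total(PROFILES, coverage)} alumni")
```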

Open Questions: What can this dataset answer?

Beyond the basic flow of talent, this dataset is primed to answer deeper questions about the AI Safety (AIS) ecosystem. Here are a few useful questions I believe the community could tackle directly with this data. For the first four, the steps are fairly straightforward and would make a good project. The last may require more thought (and how to approach it escapes me at the moment):

- Retention Rates: What percentage of alumni are still working in AI Safety roles 1, 2, or 3 years post-fellowship?

- The "Feeder Effect": Which fellowships serve as the strongest pipelines into specific top labs (e.g., Anthropic, DeepMind) versus independent research?

- Background Correlation: How does a candidate’s academic background (e.g., CS vs. Policy degrees) correlate with their path through multiple fellowships?

- Fellowship tastes: How do the specialisms and experience levels of the people selected by different fellowships differ?

- The "Golden Egg": Counterfactual Impact.

  - What proportion of people would have entered AI Safety without doing a given fellowship?

  - What is the marginal value-add of a specific fellowship in a candidate's trajectory? (Multiple fellowship leads have expressed a strong desire for this metric.)
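For the retention question, one possible approach is sketched below. It assumes each alumnus has a fellowship end date and a list of dated roles already judged to be AI Safety work; the `Alumnus` record and its fields are hypothetical, and deciding what counts as an AI Safety role (the hard, judgement-based part) is not modelled. Alumni whose 1-, 2- or 3-year checkpoint is still in the future are excluded from the denominator rather than counted as retained.

```python
from __future__ import annotations

from dataclasses import dataclass, field
from datetime import date


@dataclass
class Alumnus:
    """Hypothetical record: these fields are assumptions, not the dataset's schema."""
    fellowship_end: date
    # (start, end) dates of roles judged to be AI Safety work; end=None means ongoing.
    ais_roles: list[tuple[date, date | None]] = field(default_factory=list)


def add_years(d: date, years: int) -> date:
    """Same calendar date `years` later, mapping 29 February to 28 February."""
    try:
        return d.replace(year=d.year + years)
    except ValueError:
        return d.replace(year=d.year + years, day=28)


def in_ais_on(person: Alumnus, day: date) -> bool:
    """True if any of the person's AI Safety roles covers `day`."""
    return any(start <= day and (end is None or day <= end) for start, end in person.ais_roles)


def retention_rate(alumni: list[Alumnus], years: int, today: date) -> float | None:
    """Share of alumni in an AI Safety role `years` after their fellowship ended.

    Alumni whose checkpoint is still in the future are left out of the
    denominator; returns None if nobody has reached the checkpoint yet.
    """
    eligible = [p for p in alumni if add_years(p.fellowship_end, years) <= today]
    if not eligible:
        return None
    retained = sum(in_ais_on(p, add_years(p.fellowship_end, years)) for p in eligible)
    return retained / len(eligible)


# Invented example records, for illustration only.
alumni = [
    Alumnus(date(2022, 8, 31), [(date(2022, 10, 1), None)]),
    Alumnus(date(2022, 8, 31), [(date(2022, 9, 1), date(2023, 3, 1))]),
    Alumnus(date(2024, 8, 31)),
]
for years in (1, 2, 3):
    print(years, retention_rate(alumni, years, today=date(2025, 1, 1)))
```

Measuring retention at a fixed checkpoint, rather than "working in AI Safety today", keeps recent and older cohorts comparable.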

The Dataset Project

I wanted to release this dataset responsibly to the community, as I believe fellowship leads, employers, and grantmakers could gain valuable insights from it.

Request Access: If you'd like access to the raw dataset, please message me or fill in this form. Since the dataset contains personal information, I will be adding people on a person-by-person basis.

Note: If you're not affiliated with a major AI Safety Organization, please provide a brief explanation of your intended use for this data.

Next Steps

Firstly, I’d be very interested in working on one of these questions, particularly over the summer. If you’d be interested in collaborating with or mentoring me, have an extremely low bar for reaching out to me.

I would be especially excited to hear from people who have ideas for how to deal with the counterfactual impact question.

Secondly, if you’re an organisation and would like similar work done for your organisation or field, also have an extremely low bar for reaching out.

If you have access to, or funding for, AI tools like clay.com, I’d be especially interested.

Thanks for writing this, Christopher!

@Will Howard🔹 @Alex Dial - you guys might be interested in this.

So glad to have seen this published, great stuff Chris!

Cool! How were you defining/measuring working in AI safety? (I'm very surprised the percentage is so high!)

Similar curiosity, and similarly surprised! 🤓

Unfortunately as I produced those stats quite quickly in the summer, I didn't have a formal definition and was eyeballing it a bit! It's something I might look back into more rigorously in the future.

What I would point out is that MATS had a very similar proportion remaining in AI Safety according to their analysis (https://www.lesswrong.com/users/ryankidd44?from=search_autocomplete), although I agree it would definitely be surprising if the retention rate of all these fellowships were as high as MATS's.

Seems plausibly fine to me. If you think about a fellowship as a form of "career transition funding + mentorship", it makes sense that this will take ~3 months (one fellowship) for some people, ~6 months (two fellowships) for others, and some either won't transition at all or will transition later.

Thanks for working on this! As a grantmaker I've found it a bit puzzling to evaluate fellowship programs and I hope you continue the project.

The biggest unanswered, but I think critical, question:

What proportion are working for frontier labs (not "for profit" generally, but the ones creating the risks), in which roles (how many are in capabilities work now?) and at which labs?

Absolutely! My estimate in the summer was 4.5% (around 30 fellows), but this was excluding people at frontier labs who were explicitly on the safety teams. If specifics are important I'd be more than happy to revisit!

I'd find a breakdown informative, since the distribution both between different frontier firms and between safety and non-safety roles seems really critical, at least in my view of the net impacts of a program. (Of course, none of this tells us counterfactual impact, which might be moving people on net either way.)

As an advisor at Successif, I find the question of how, and to what extent, AI safety fellowships serve as effective ramps for an AI safety career transition comes up frequently with advisees.

I'm interested to see how your project develops, to support better informed decision-making.

As co-founder of SyDFAIS, an interactive visual map of the AI safety and AI development ecosystems, I'm tickled thinking about how your data could feed into the visual map to facilitate sense-making and even imagining future scenarios across multiple filters (e.g. gender, location, fellowship focus, skill profile, etc.) and for multiple stakeholder groups (funders, fellowship program designers, career transitioners, etc.). Will bookmark this @Christopher Clay, to revisit once our prototype goes public soon.

It’s great to see you continuing to explore this question and develop your research even after IRG has ended - really interesting to read!