I gave a talk introducing AI alignment / risks from advanced AI in June 2022, aimed at a generally technical audience. However, given how fast AI has been moving, I felt I needed an updated talk. I've made a new one closely based on Richard Ngo's Twitter thread, itself based on The Alignment Problem from a Deep Learning Perspective. There's still too much text, but these slides are updated through March 2023 and have a more technical lens.

People are welcome to use this without attribution, and I hope it's useful for any fieldbuilders who want to improve it! I'm also happy to give this talk if people would like me to -- the slides come out to about 45 minutes, with any remaining time left for discussion.

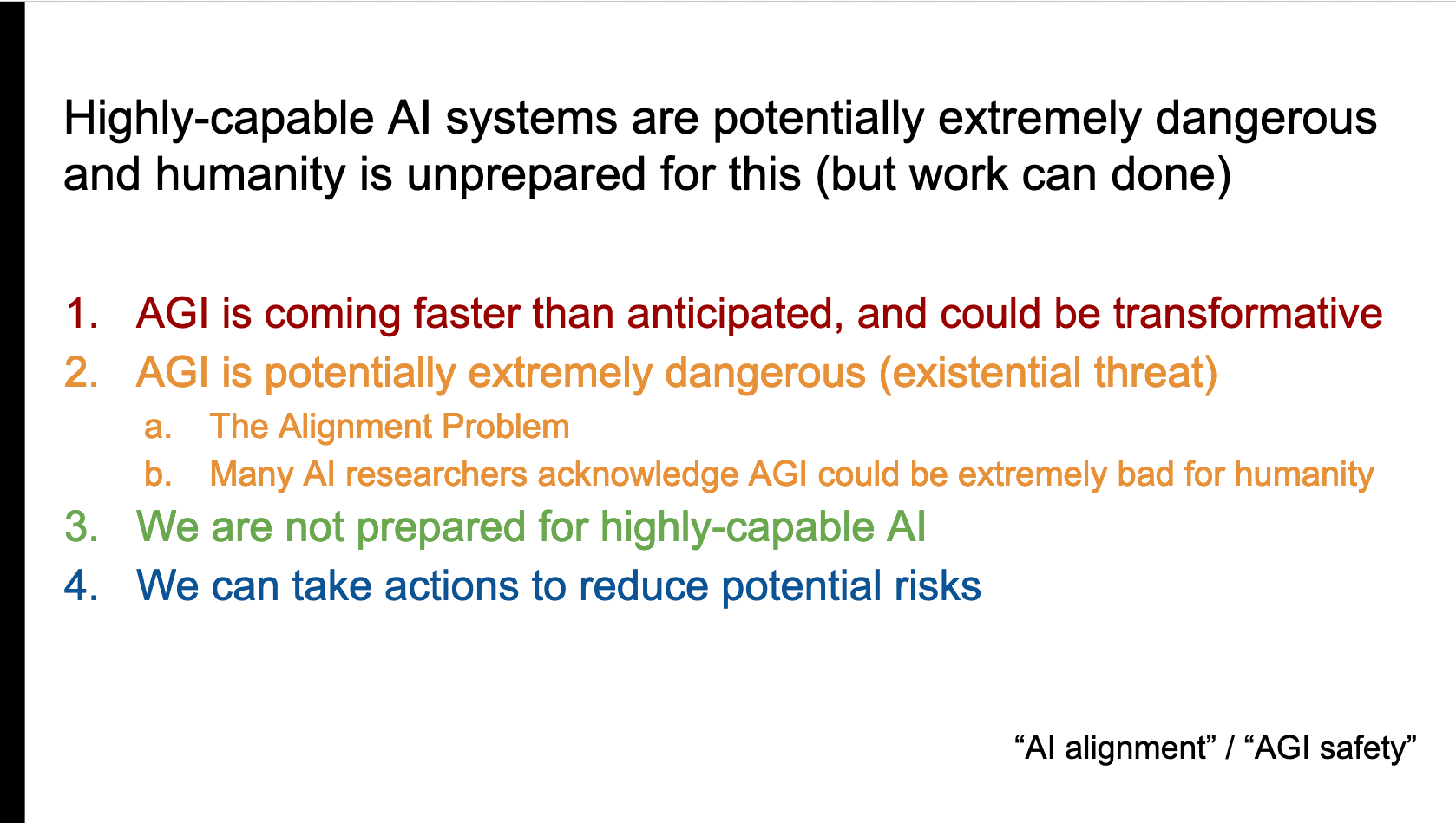

New talk slides: The Alignment Problem: Potential Risks from Highly-Capable AI

Main thesis slide:

Appendix

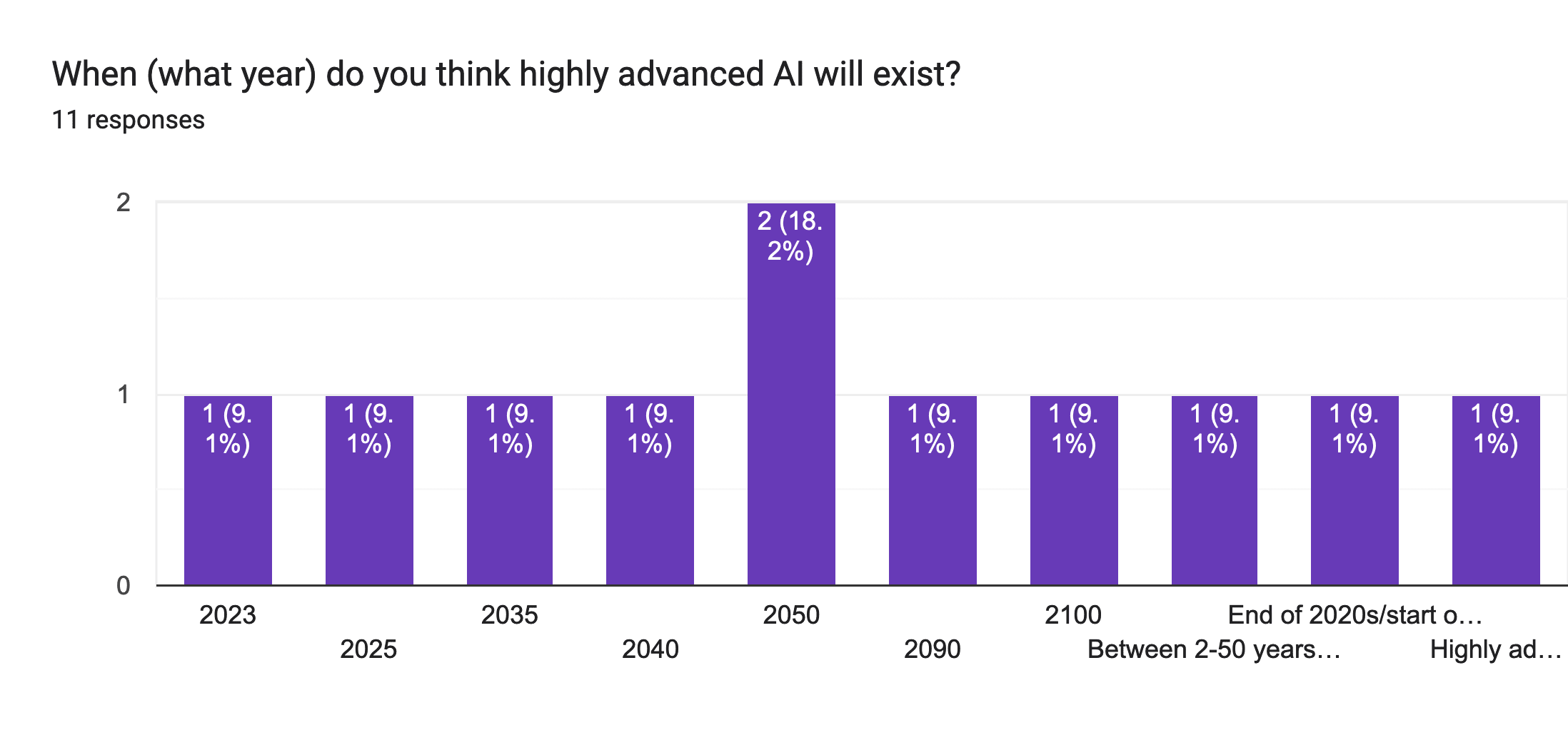

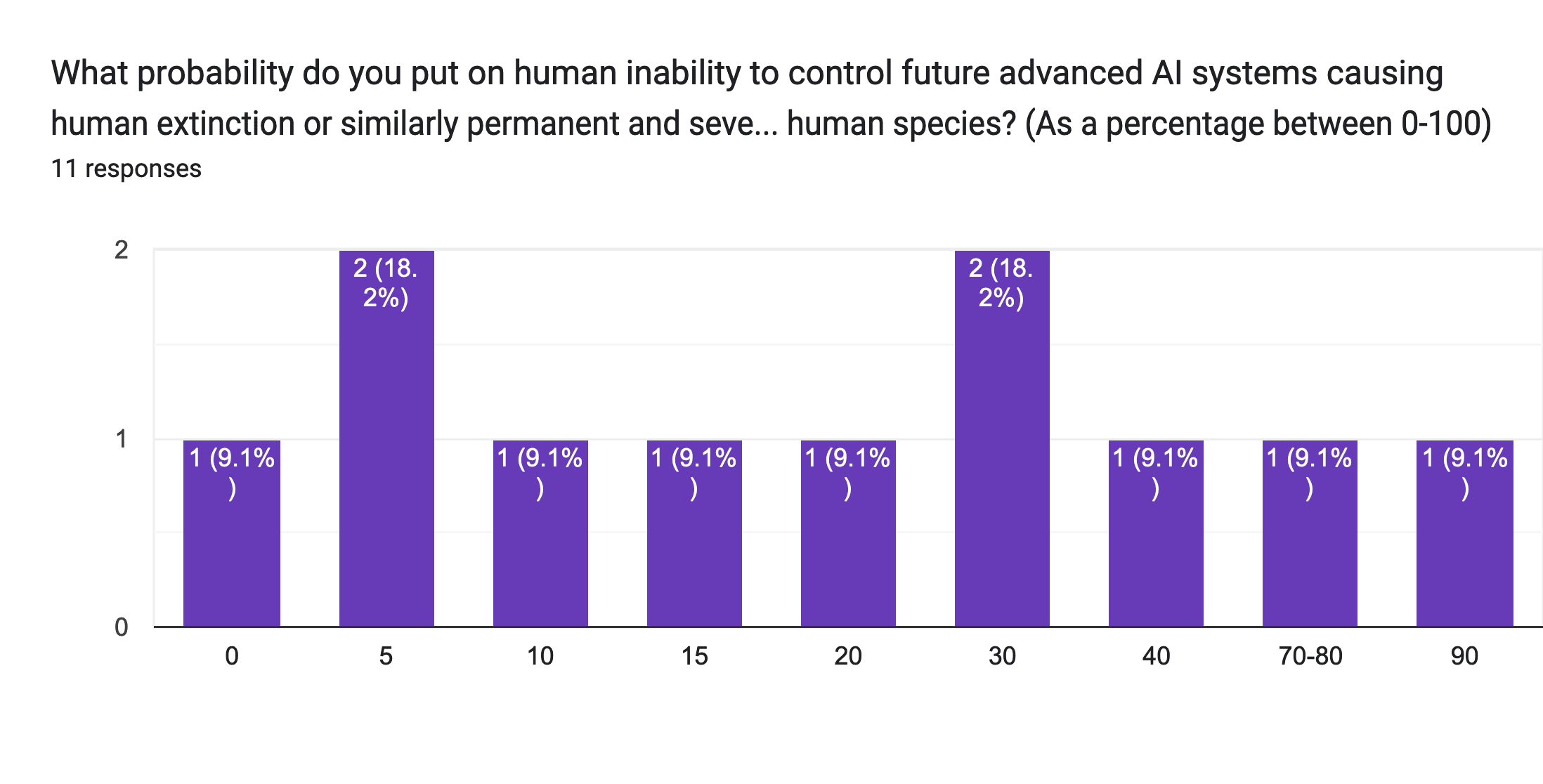

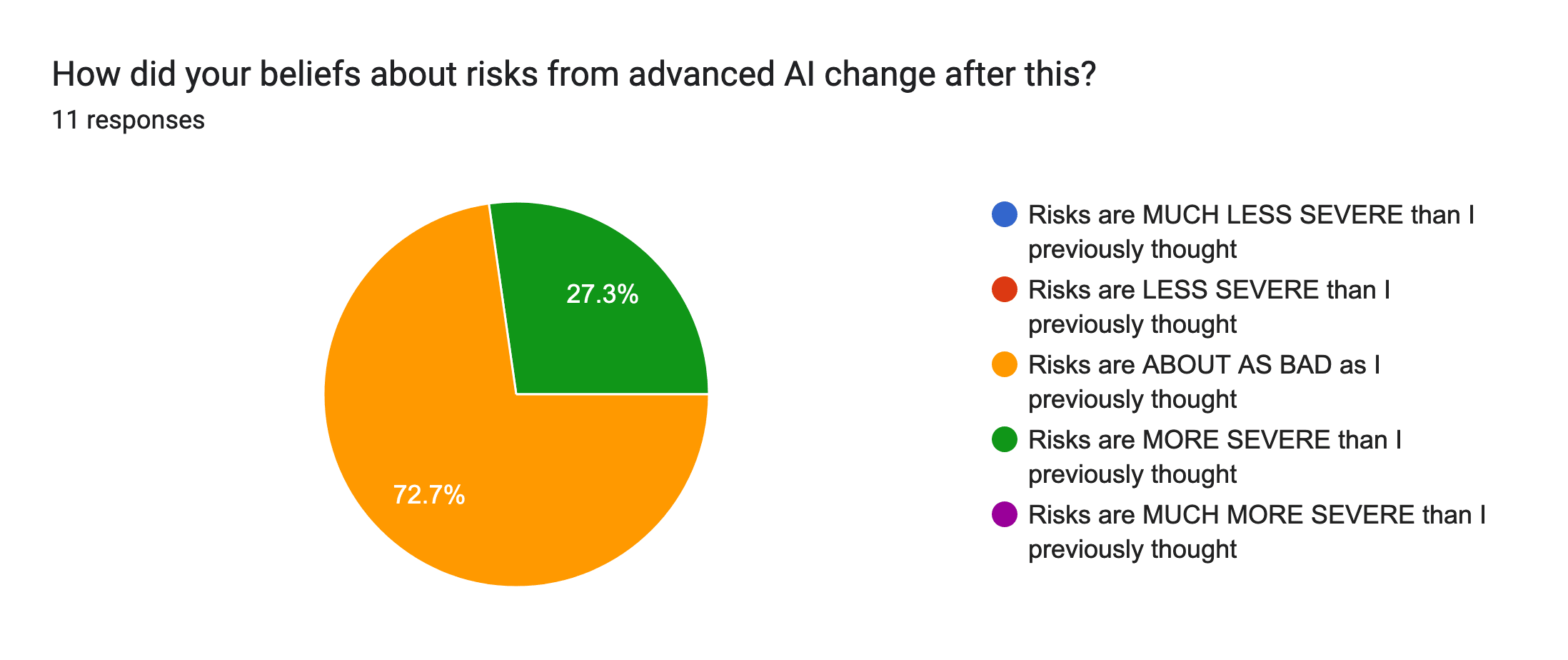

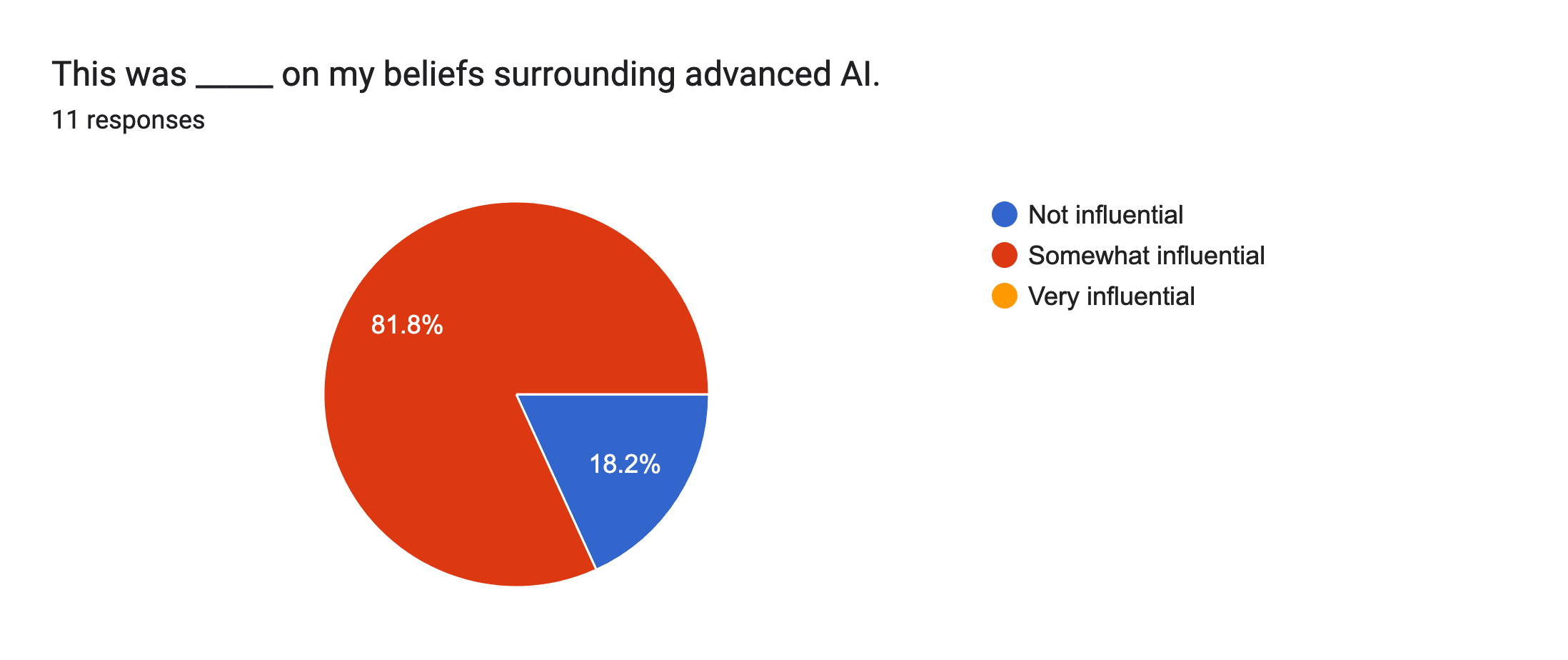

Bonus data that I collected after the talk (which was given to AI safety academics)

Comments:

- Great talk! I liked the clear description of the relative resources going into alignment and improvement of capabilities.

- "Not influential" only because I have already read a lot on this topic :-)

- Sorry to be a downer I just don’t believe in this stuff, I’m not a materialist

- I think the alignment problem is one that we, as humans, may not be able to figure out.

I want to highlight aisafety.training, which I think is currently the single most useful link to give to anyone who wants to join the effort of AI Safety research.

Whoever gave me a disagreement vote, I'd be interested to hear why. No pressure though.

I didn't give a disagreement vote, but I do disagree on aisafety.training being the "single most useful link to give anyone who wants to join the effort of AI Safety research", just because there are a lot of different resources out there and I think "most useful" depends on the audience. I do think it's a useful link, but most useful is a hard bar to meet!

I agree that it's not the most useful link for everyone. I can see how my initial message was ambiguous about this. What I meant is that of all the links I know, I expect this one to be the most useful on average.

Like, if I met someone and had a conversation with them, and I had to constrain myself to giving them only a single link, I might pick another resource to give them, based on their personal situation. But if I wrote a post online or gave a talk to a broad audience, and I had to pick only one link to share, it would be this one.