Manifund is a new funding org that experiments with systems and software to support awesome projects. In 2023, we built a website (manifund.org) and donor ecosystem supporting three main programs: impact certificates, regranting, and an open call for applications. We allocated $2m across dozens of charitable projects, primarily in AI safety and effective altruism cause areas. Here’s a breakdown of what Manifund accomplished, our current strengths and weaknesses, and what we hope to achieve in the future.

If you like our work, please consider donating to Manifund. Donations help cover our salaries & operating expenses, and fund projects and experiments that institutional donors aren’t willing to back — often the ones that excite us most!

At a glance

Here are some high-level stats that provide a snapshot of our 2023 activities:

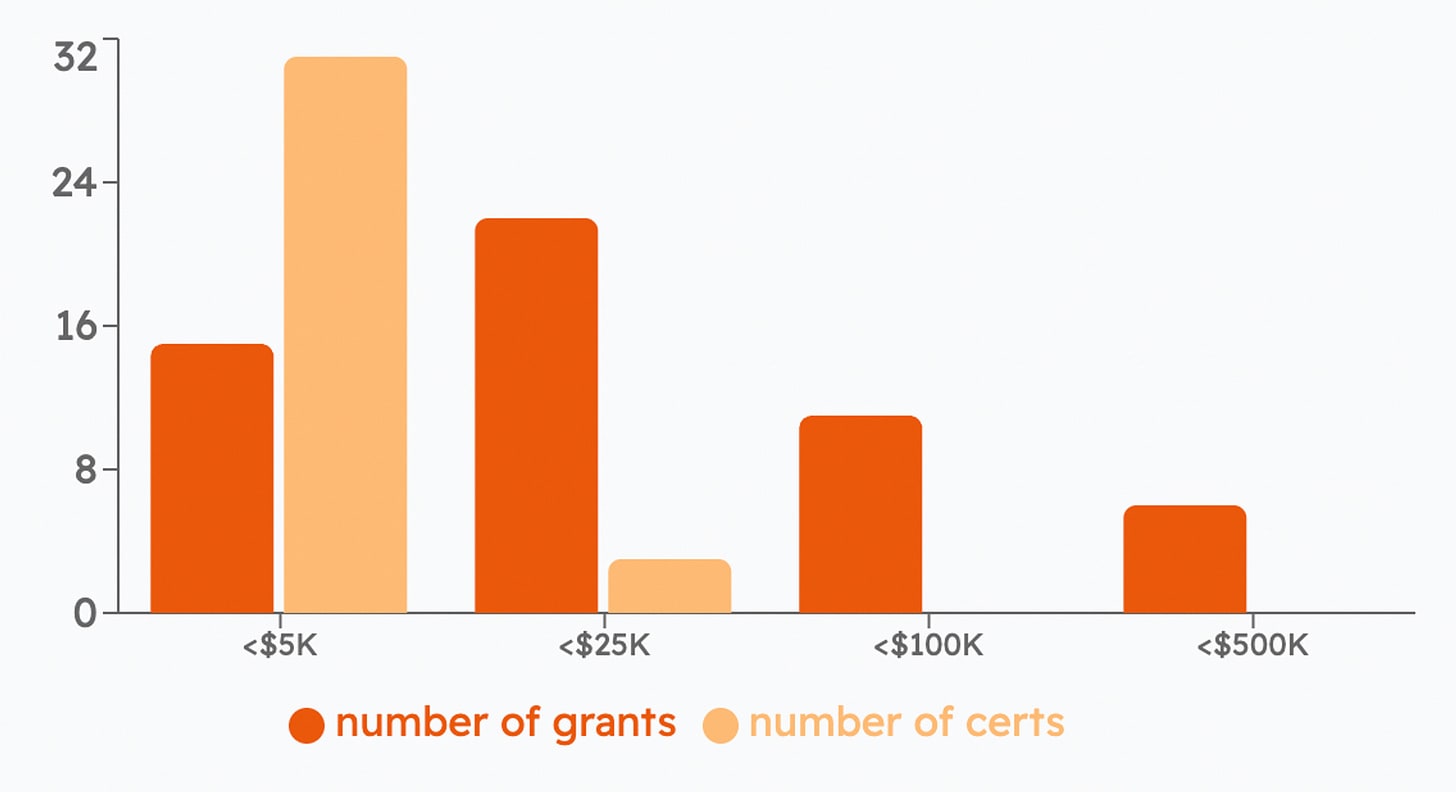

- $2.06M sent to projects: $2.012M to grants & $45K to impact certificates

- Of the totals above, $95K that went to grants and $40K that went to certs came from unaffiliated donors/investors, rather than regrantors.

- 88 projects were funded: 54 grants & 34 certs

- $2.22M has been deposited into Manifund, and $1.62M has been withdrawn so far.

- Below are the top cause areas of projects that got funded. These categories overlap: one project can be filed under multiple cause areas.

- Technical AI Safety: 27 projects funded, $1.57M disbursed

- Science and Technology: 9 projects funded, $118K disbursed

- AI Governance: 10 projects funded, $112K disbursed

- Biosecurity: 4 projects funded, $97K disbursed

- Honorable mention to Forecasting, which received only $76K total but encompassed 35 projects. This is because our two biggest impact certificate rounds so far, ACX Minigrants and the Manifold Community Fund, were centered on forecasting and funded lots of small projects.

2023 Programs

Impact certificates

Summary: Impact certificates are venture funding for charitable endeavors. Investors fund founders by buying shares (“certs”) in their projects, which pay out if the project later receives a retroactive prize. See also our explainer and ACX’s explainer.
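To make the cash flows concrete, here is a minimal sketch under a simplified, assumed model: a project has 100 shares, the founder sells some of them to an investor at a fixed price, and a retro funder later pays a prize that is split pro rata across whoever holds shares. The class and method names (`ImpactCert`, `buy_shares`, `pay_prize`) and all figures are hypothetical illustrations, not Manifund’s actual implementation.

```python
from dataclasses import dataclass, field


@dataclass
class ImpactCert:
    """Hypothetical toy model of one impact cert (not Manifund's real code)."""
    founder: str
    total_shares: int = 100
    holdings: dict[str, int] = field(default_factory=dict)

    def __post_init__(self) -> None:
        # The founder starts out owning every share of their project.
        self.holdings[self.founder] = self.total_shares

    def buy_shares(self, investor: str, shares: int, price_per_share: float) -> float:
        """Move shares from founder to investor; return cash the founder receives."""
        if shares > self.holdings[self.founder]:
            raise ValueError("founder does not hold enough shares")
        self.holdings[self.founder] -= shares
        self.holdings[investor] = self.holdings.get(investor, 0) + shares
        return shares * price_per_share

    def pay_prize(self, prize: float) -> dict[str, float]:
        """Split a retroactive prize pro rata across current shareholders."""
        return {
            holder: prize * shares / self.total_shares
            for holder, shares in self.holdings.items()
            if shares > 0
        }


# Hypothetical round: an investor funds 40% of a project up front,
# then a retro funder awards the finished project a $10,000 prize.
cert = ImpactCert(founder="founder")
upfront = cert.buy_shares("investor", shares=40, price_per_share=50.0)
payouts = cert.pay_prize(10_000.0)
print(upfront, payouts)
```

In this toy example the founder gets $2,000 before doing the work, and the investor turns that $2,000 into $4,000 of the prize, which is the incentive that is supposed to reward investors for picking projects a retro funder later values.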

Assessment: 7/10

Impact certs have been discussed as a much more efficient system of funding charitable projects. Luminaries such as Paul Christiano, Katja Grace, Vitalik Buterin and Scott Alexander have championed the idea, but apart from a few small experiments with retroactive funding, no notable impact marketplaces existed prior to Manifund.

In 2023, we built out the website and operational experience to run impact certs end to end, from handling project submissions, to investments, to retroactive prize payouts. We completed 2 impact cert rounds and started 3 more, each testing different setups and domains.

How did we do? I would describe impact certs as “working as intended, but not yet hitting product/market fit”. Some possible reasons:

Impact certs are a 3-sided marketplace, and come with the implied cold start problems: we need to get a retro funder, investors, and founders all on board.

We think of the retro funder as the hardest part; they have to be convinced that it’s worth paying out money for work already accomplished. Most donors instead think in terms of funding things prospectively.

But finding good investors is also not trivial! The investors’ decisions shape which projects actually get underway; they serve the role of grant evaluators in a traditional charity ecosystem, which requires a particular skillset and dedication.

A lot of education is needed to explain the whole system: it has a lot of moving parts, and most people are unfamiliar with the venture ecosystem.

Feedback loops via impact certs are slow. Projects take a long time to develop; updates have been infrequent from all sides (founders, investors, and funders).

In theory, impact certs should encourage investors to seek out good projects and help them, though this doesn’t seem to have happened much. Perhaps this is due to small dollar amounts or us not having found expert investors to participate.

Despite these difficulties, I think impact certs are Manifund’s most exciting project, with the potential to transform the entire landscape of charitable funding. The economic theory behind them is elegant, and recent successes with advance market commitments (Operation Warp Speed, Stripe Frontier) are waking people up to the idea that prize funding is a great way of encouraging public goods.

Here are some reflections, broken down by round.

ACX Forecasting Minigrants: 7/10

Stats: $30k prize pool distributed to 20 projects, Jan to Oct 2023

See also: ACX announcement, Manifund’s retro, ACX’s retro

This was the project that kicked off Manifund! Scott approached us saying that he wanted to run ACX Grants 2 on impact certs, but (understandably) wanted to try a lower-stakes test first. We picked “forecasting” as an area that Scott felt qualified to judge as a retro funder; I brought on Rachel to work on Manifund full-time, and together we shipped the MVP of the site in 2 weeks.

Lessons: Everything worked! We successfully created the world’s first ecosystem around investing in charitable projects and retroactively funding them. I don’t think the projects produced were quite as good as those in the original ACX Grants round; I’m not sure whether that is due to impact certs, the much lower funding & prize pool ($20-40k), the more limited scope, or something else.

OpenPhil AI Worldviews Essay Contest: 2/10

Stats: 5 essays cert-ified, Feb to Sep 2023.

See also: OpenPhil’s announcement, results

This one was kind of a flop. We had launched ACX Minigrants and were waiting for results; we saw that OpenPhil had announced this contest and figured “$225k for essays? Great fit for contestants trying to hedge some of their winnings”. We reached out to Jason Schukraft, the contest organizer, and got his blessing — but unfortunately too late to secure an official partnership. We reached out to the essayists on our own, but most did not agree to create a Manifund impact cert (including none of the ultimate winners).

Lessons: large dollar prizes + a well-known brand are not sufficient to get a robust cert ecosystem started. It’s unclear whether essays are a compelling use case for impact certs, as the investment comes after all the work is done. On the plus side, this was a pretty cheap experiment to try, as all the infrastructure was already in place from the ACX Minigrants round.

Chinatalk Prediction Essay Contest: ongoing (Nov 2023 to Jan 2024)

Stats: $6k prize pool; expecting 50-100 submissions.

See also: Chinatalk’s essay website

Chinatalk (a Manifund grantee) approached us to sponsor their essay contest; I saw this as a chance to try out “impact certs for essays”, but this time with official partnership status. So far, Jordan and Caithrin have been amazing to work with; it remains to be seen whether the added complexity of impact certs is worth the benefits of investor engagement.

As an aside: the Chinatalk contest makes me wonder if there’s space for “hosting contests-as-a-service”. There’s proven demand and a lot of good work generated via contests and competitions (ACX Book Reviews; Vesuvius Prize; AIMO prize) but each of them jury-rig together websites & infra. Manifund could become a platform that streamlines contest creation (think Kaggle but for misc contests) and thereby fill in the “retro funder” part of the marketplace.

Manifold Community Fund: ongoing (Dec 2023 to Feb 2024)

Stats: $10k x3 prize payouts, ~20 projects proposed

See also: Manifold announcement

Partnering with other orgs has upsides (publicity, funding) but also downsides (higher communication costs and more negotiations). Could we move faster and experiment more by funding a prize round ourselves? We landed on “community projects for Manifold” as an area we were experts in judging, and could justify spending money on to get good results.

We set up the MCF with 3 rounds of ~$10k in funding once per month, instead of a single prize payout at the end. My hope is that more frequent prize funding will provide better feedback to investors & founders, and also teach us more about how to actually allocate retro funding, which is a surprisingly nontrivial problem!

ACX Grants 2024: ongoing (Dec 2023 to Dec 2024)

Stats: $300k+ prize pool; we predict 400-800 proposals, with 10-30 funded

See also: ACX announcement

This will be our largest impact cert round so far. Some changes we made, compared to the previous ACX Minigrants:

Scott will be directly funding the proposals that he likes up front, instead of waiting to act as a retro funder. The other proposals may then be created as impact certificates.

We allow anyone to invest (via a donor-advised funds model) instead of restricting to accredited investors.

A group of EA funders (Survival and Flourishing Fund, EA Funds & ACX) have agreed to participate as retro funders. This is particularly exciting, as it’s the first test case of having multiple different final buyers of impact.

We’re happy that Scott found the first round of our impact certs compelling enough to want to expand it into the next official round! ACX Grants is also especially dear to our hearts, as it was counterfactually responsible for getting Manifold off the ground; to have the opportunity to come and help future ACX Grantees is quite the privilege.

Next steps for impact certs

We’d like to continue running impact cert rounds in a more frequent, standardized manner (perhaps even self-serve). We’d also like to find a large, splashy use case for impact certs that draws more attention to the concept and validates that it works well at larger scales. This would likely involve partnering closely with some deep-pocketed funder who wants to put up a large prize for a specific cause.

Potential causes we’ve been daydreaming about:

- Curing malaria (vaccine rollout? gene drives?)

- Big, yearly AI Safety prize

- Ending flu season in SF with FarUVC rollout

- Offsets for factory farmed eggs

- Carbon credits, similar to Stripe Climate

- Political change (e.g. housing reform?)

- General scientific research prizes in some weird field (eg longevity? fertility?)

Regranting

Summary: A charitable donor delegates a grantmaking budget to individuals known as “regrantors”. Regrantors independently make grant decisions, based on the objectives of the original donor and their own expertise.

Assessment: 7/10

Regranting was pioneered by the FTX Future Fund; among the grantmaking models they experimented with in 2022, they considered regranting to be the most promising. We believed that regranting is a good way of allocating funding for a few reasons:

Faster turnaround times for grantees: Regrants involve lower overhead and require less consensus, which leads to faster decision-making. Around the time we started this program, the EA funding space had just been severely disrupted by the collapse of FTX, which was making grant turnaround times especially long. We had experienced this ourselves, as had many other people in our network, and this seemed like a problem we could help solve.

More efficient funding allocation and active grantmaking: Regranting draws on regrantors’ knowledge and networks, which may lead to above-the-bar uses of funding on the margin. Regrantors are often more connected to their grantees, which allows them to give more feedback or even initiate projects themselves, whereas most funders take a more passive approach.

A better option for some donors: Delegating donations to regrantors is a unique donor experience that balances control against effort, relative to either giving directly to projects or giving to grantmaking organizations. Donors can pick individuals they trust to give intelligently and with the donors’ values in mind, but who may be able to allocate the money more efficiently thanks to some combination of time, expertise, and connections.

Scalable: Regranting can scale up to moving large amounts of funding. This was a clear upside for the Future Fund, which was aiming to distribute $100M to $1B+ a year, but it is less important for us, as Manifund (and EA as a whole) is more funding-constrained now.

Large regrantors

Stats: 5 regrantors with max budgets of ~$400k each, $1.4m total pool. 5 grants initiated, 15 grants supported.

Assessment: 7/10

See also: regranting launch announcement

We decided to do a regranting program after we were introduced to an anonymous donor, “D”, in May 2023. D liked Future Fund’s regranting setup, and wanted to fund their own regrantors, to the tune of $1.5m across this year. This seemed like a good fit for Manifund to run, as:

We had already built a lot of the necessary infrastructure for ACX Minigrants—a website, 501c3 org, and payout processes—and we could reuse these for regranting.

We’d been beneficiaries of the regranting mechanism ourselves: Manifold’s seed round was initiated by an offer from a Future Fund regrantor.

We thought we could give our grantees a better experience, with faster turnaround times and more involved grantmakers.

We thought that by supporting regrantors and their grantees now, we could later convince them to participate in impact certs as investors & founders.

We were in a lull anyways, waiting for ACX Minigrants projects to wrap up.

We’re reasonably happy with the quality of grants made! At a midpoint review in September, our donor D’s judgement was that the regrants made were somewhat better than initially expected. They were open to renewing the program for next year, pending other considerations.

We do wish that we’d gotten more consistent active participation from the large regrantors. Relative to the small regrantors, they tended to make fewer grants and engage in less coordination and discussion with other regrantors… which is understandable! D chose a bunch of very well-credentialed folks with great judgement, but it turns out such people are pretty busy or in high demand, and $400k is not a large enough budget to warrant spending significant amounts of time doing active grantmaking.

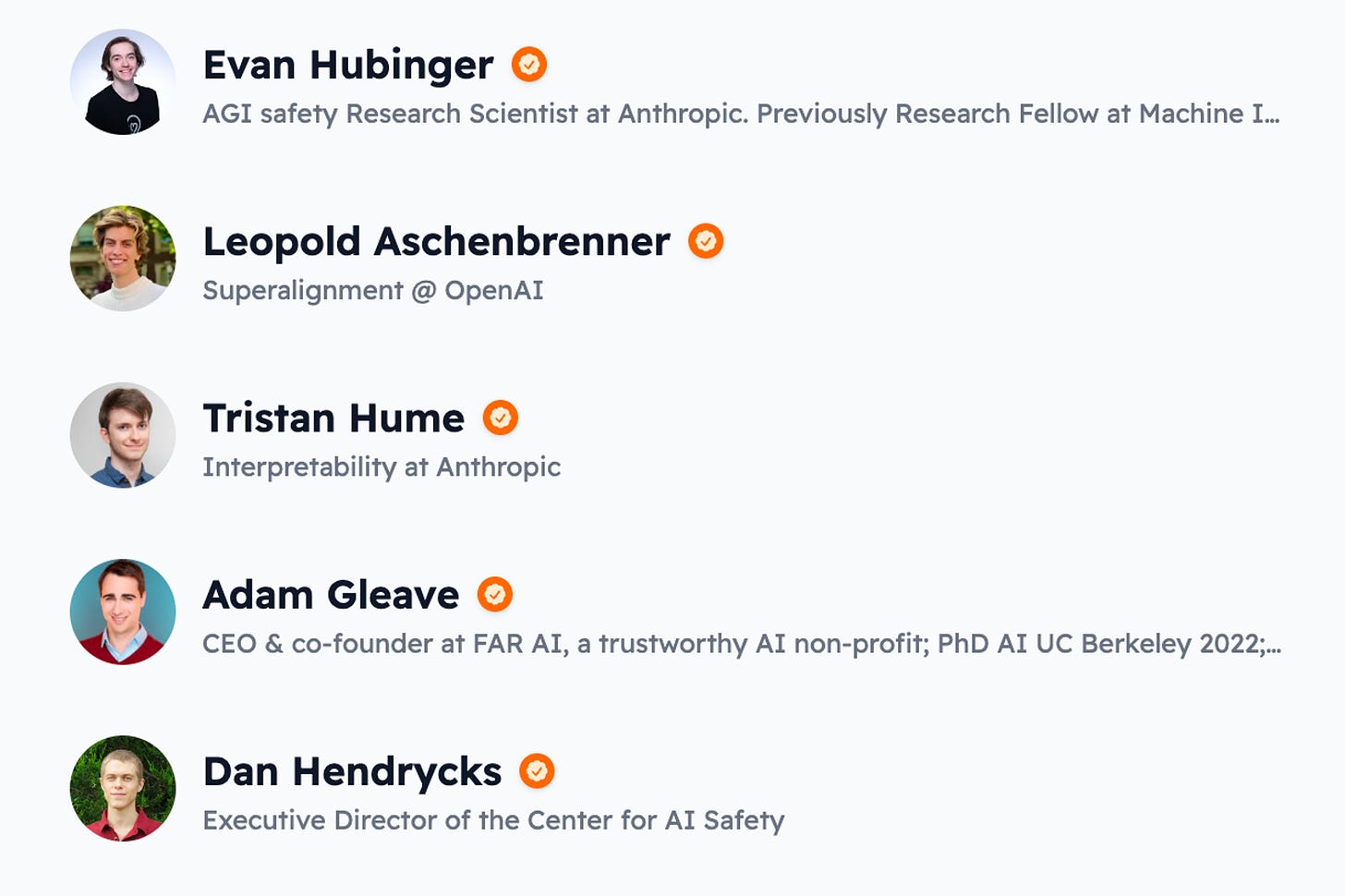

We had one notable exception to this pattern: Evan Hubinger was very prolific and dedicated, making 9 different grants to projects in technical AI safety. We ended up increasing his budget to encourage him to continue finding good opportunities.

Still, until the last couple weeks of the year, it looked like a large portion of the large regrantor pot would be left unspent, but then Dan, Evan, and Adam came through with some last minute recommendations and made use of their remaining budgets.

While we’re glad all of the money was sent to projects, this end-of-year influx wasn’t ideal. First, it’s unlikely that the best opportunities just happened to come along suddenly at the end of the year, which suggests something inefficient was going on. More likely, the regrantors could have given to marginally better projects earlier in the year, or could have funded these same projects sooner. This is informative for how we set up budgets if we do this again, so that we don’t incentivize waiting until a week before the expiration date to spend them.

Additionally, because we didn’t anticipate this influx, the regrantors offered more in funding than we had budget for, and we had to reject two grant recommendations that at other times we would have approved. This possibly created false expectations and a pretty bad experience for these two grantees, for which we are quite sorry.

Highlighted grants

Evan Hubinger, $100k: Scoping Developmental Interpretability

This also got donations from Marcus, Ryan, and Rachel, though all after Evan’s initial contribution and recommendation.

This was an example of regrantors using their professional connections to find opportunities that they had a lot of context on: Evan previously mentored Jesse, the recipient of this grant, and is familiar with the work of others on the team. With all of this context, he said he “believe[s] them to be quite capable of tackling this problem”.

Adam Gleave, $10.5k: Introductory resources for Singular Learning Theory

According to Adam, “There's been an explosion of interest in Singular Learning Theory lately in the alignment community, and good introductory resources could save people a lot of time. A scholarly literature review also has the benefit of making this area more accessible to the ML research community more broadly. Matthew seems well placed to conduct this, having already familiarized himself with the field during his MS thesis and collected a database of papers. He also has extensive teaching experience and experience writing publications aimed at the ML research community.” I’ll note that Evan also expressed excitement about Singular Learning Theory in his writeup for the above grant.

Like the above grant, this was an opportunity that Adam came across and could evaluate with lots of context as he previously mentored Matthew and continues to collaborate with him.

Leopold Aschenbrenner, $400k: Compute and other expenses for LLM alignment research

From Leopold’s comment explaining why he chose to give this grant: “Ethan Perez is a kickass researcher whom I really respect, and he also just seems very competent at getting things done. He is mentoring these projects, and these are worthwhile empirical research directions in my opinion. The MATs scholars are probably pretty junior, so a lot of the impact might be upskilling, but Ethan also seems really bullish on the projects, which I put a lot of weight on. I'm excited to see more external empirical alignment research like this!

Ethan reached out to me a couple days ago saying they were majorly bottlenecked on compute/API credits; it seemed really high-value to unblock them, and high-value to unblock them quickly. I'm really excited that Manifund regranting exists for this purpose!”

Leopold started with a donation of $200k, and then followed it up with another $200k two months later after seeing the progress they’d made and learning they were still funding constrained.

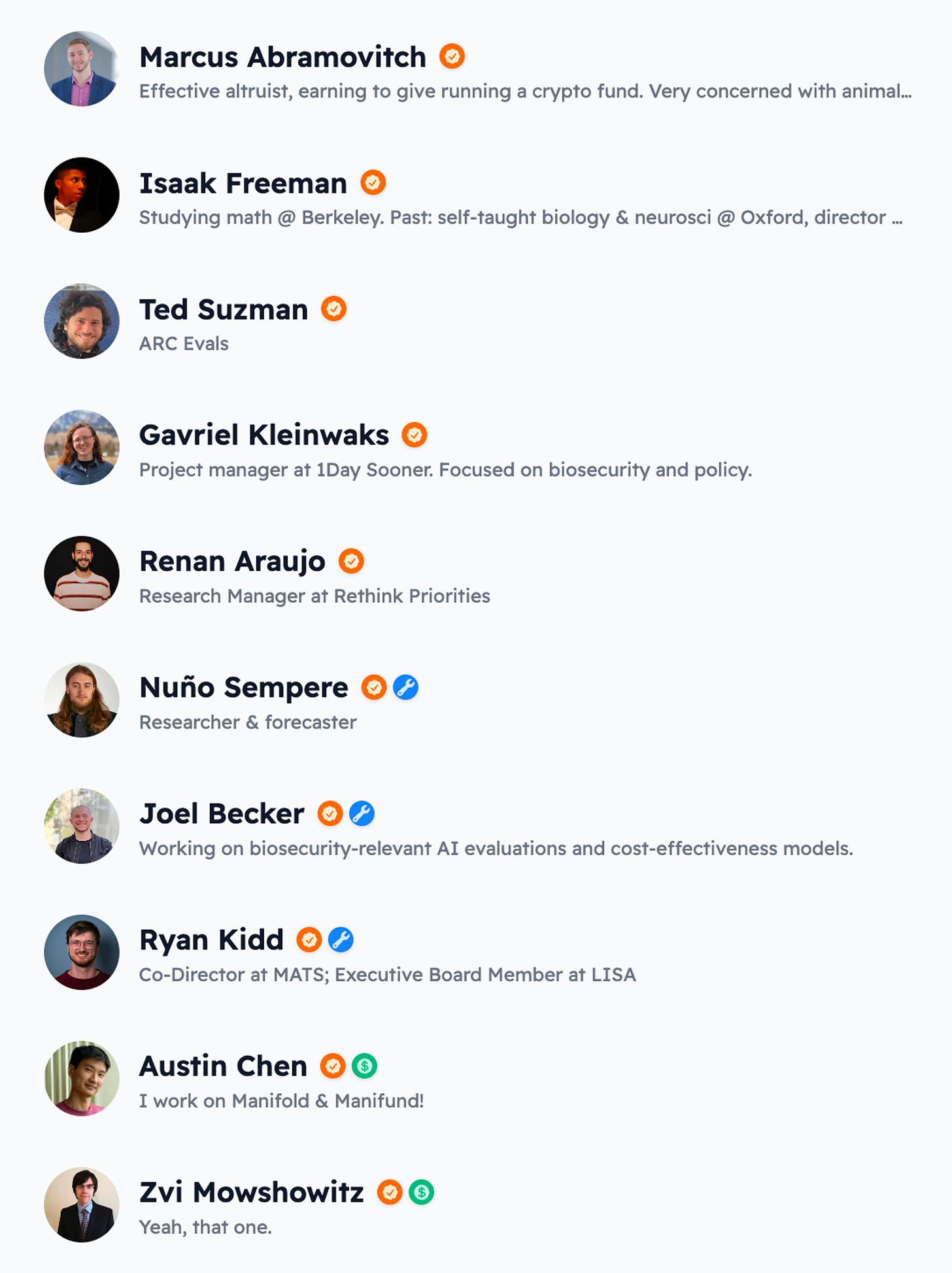

Small regrantors

Stats: 11 regrantors with budgets of ~$50k each, $400k total pool. 11 grants initiated, 41 grants supported.

Assessment: 8/10

See also: Joel Becker’s reflections as a regrantor

After planning out the regranting program with D, we thought that 5 regrantors weren’t enough and that increasing the size of the regrantor cohort would be good for fostering more regrantor discussion and broadening the kinds of grants made. We decided to fund this from our own budget, primarily out of our general support grant from the Survival and Flourishing Fund.

One main benefit of choosing our own regrantors was that we could bet on unconventional candidates. As a variant on “hits-based giving”, we were “hits-based delegating”: giving budgets to a wide variety of people, including many with little to no previous grantmaking track record.

The small regrantor program is one of the places where Manifund’s experimentation has been most successful, in our opinion. Some of our regrantors have gone above and beyond in their commitment to initiating good grants and helping grantees. After launching with our initial cohort, we opened up an application for regrantors and got very high-quality applicants. We approved Ryan Kidd, Renan Araujo, Joel Becker, and Nuno Sempere, and waitlisted many more promising regrantor candidates. We also had some regrantors who weren’t active or decided to withdraw, which is fine; we were expecting this and structured the regrantor budget allocation with it in mind.

We thought that good grants made by our regrantors would encourage other people to donate to regrantor budgets. This doesn’t seem to have happened much; among donations made by individuals on Manifund, very little went towards regrantor budgets relative to specific projects listed via the open call. As a result, fundraising for our regrantors remains our main constraint on operating this program.

Highlighted grants

Joel Becker, $1.5K & Renan Araujo, $1.5K: Explainer and analysis of CNCERT/CC (国家互联网应急中心)

Joel’s explanation of the origin of this grant: “Renan and I put out a call to an invite-only scholarship program, the "Aurora Scholarship," to 9 individuals recommended by a source we trust. We were aiming to support people who are nationals of or have lived in China with a $2,400-$4,800 scholarship for a research project in a topic related to technical AI safety or AI governance…Alexa was one of our excellent applicants.”

Empowering people in or with connections to China to do AI safety work seems pretty important. We were particularly impressed by the initiative Joel and Renan took in getting this off the ground—the prospect of facilitating active grantmaking like this is part of what motivated us to start the regranting program!

Gavriel Kleinwaks, $41.7K: Optimizing clinical Metagenomics and Far-UVC implementation

Gavriel works at One Day Sooner and is our only biosecurity-focused regrantor; in line with this grant, her work has focused on Far-UVC implementation! Here are some quotes from Gavriel’s writeup explaining why she chose this project:

“From my conversation with Miti and Aleš, it sounded as though there was a pretty good chance to unlock UK government buy-in for an important biosecurity apparatus, through the relatively inexpensive/short-term investment of a proposal submitted to the government. Biosecurity doesn’t have a lot of opportunities for cheap wins as far as I normally see, so this is really exciting.”

“This is exactly the type of project Manifund is best poised to serve: the turnaround needs to be really fast, since Miti is targeting an October deadline, and it’s for a small enough amount that at my $50k regranting budget I can fully fund it.”

Shout out to Joel again who recommended this grant to Gavriel! Because of a COI with the recipient, he didn’t contribute financially, but he still deserves a lot of credit for making this happen.

Marcus Abramovitch, $25K: Joseph Bloom - Independent AI Safety Research

Joseph Bloom had a strong track record with independent AI safety research: he maintains TransformerLens (the top package for mechanistic interpretability), his work has been listed by Anthropic, he teaches at the ARENA program, and he came highly recommended by Neel Nanda. Unsurprisingly, he seems to have lived up to this track record and made good progress on his research, according to the monthly updates he sends Marcus.

This also received the biggest independent donation of any project on Manifund: $25k from Dylan Mavrides!

Open Call

Summary: “Kickstarter for charitable projects”: allow anyone to post a public grant proposal on the Manifund site, for regrantors and the general public to fund.

Assessment: 6/10

Stats: 150 projects submitted, $95k raised among 40 individual donors

We started our open call to identify more opportunities for our regrantors to donate to. The open call worked well for this: it has surfaced many projects that we wouldn’t have seen otherwise. For example, I (Austin) allocated half of my own regrantor budget to projects that applied via the open call: Lantern Bioworks, Sophia Pung, Neuronpedia, and Holly Elmore.

To our surprise, many open call projects got support from individual donors that we had no pre-existing relationships with. We had about forty people donate this way, for a total of $95k.

Shout out to our top 10 individual donors of 2023.

- Dylan M - $25,000

- Jalex S - $20,000

- Anton M - $11,530

- Vincent W - $10,500

- Cullen O - $8,710

- Carson G - $6,000

- Peter W - $5,000

- Gavin L - $5,000

- Nik S - $5,000

- Adrian K - $4,000

There were still some downsides associated with this program. The biggest is that an always-open call takes up a constant amount of toil on our team to screen and process grants. Many projects that ask for funding don’t seem impactful or look like bad fits for Manifund. Finally, it seems like regrantors generally prefer to spend their budgets on projects they personally initiate.

Highlighted grants

This received a total of $190K from 8 different sources, including 6 donors and 2 regrantors.

I (Rachel) am a big fan of MATS, as, it seems, are lots of people. Insofar as AI safety is talent-constrained rather than funding-constrained, programs like MATS are a great way of converting abundant resources into scarcer and more valuable ones, i.e. good technical AI safety researchers. MATS specifically occupies one of the hardest parts of that pipeline and does a great job. Many of its alums go on to work on the safety teams of the most important players in AI, like Anthropic and OpenAI, and it seems our two regrantors from Anthropic, Tristan Hume and Evan Hubinger, are both willing to pay for the talent that MATS brings to their company and field.

Tristan Hume explained his decision to direct $150k to MATS: “I've been very impressed with the MATS program. Lots of impressive people have gotten into and connected through their program and when I've visited I've been impressed with the caliber of people I met.

An example is Marius Hobbhahn doing interpretability research during MATS that helped inform the Anthropic interpretability team's strategy, and then Marius going on to co-found Apollo.” (n.b. Apollo Research is also a Manifund grantee!)

Experiments to test EA / longtermist framings and branding

This received a total of $26.8K from 5 different sources, including 3 donors and 2 regrantors.

From Marcus’ comment explaining why he decided to contribute: “We just need to know this or have some idea of it (continuous work should be done here almost certainly). Hard to believe nobody has done this yet.”

Other comments from donors each expressed a similar sentiment: this is simply a really important question and people are curious to see the results!

Recreate the cavity-preventing GMO bacteria BCS3-L1 from precursor

This received a total of $40.6K from 10 different sources, including 8 donors and 2 regrantors. As Lantern Bioworks is a for-profit company, this was structured as a SAFE investment as part of their seed round, rather than a donation.

This project is simply very cool. Since receiving the Manifund investment, they’ve successfully gotten hold of this bacteria and started administering it (including to us, COI disclosure). Now they are focused on selling their probiotic more widely, and remain on a good path to succeeding at their ultimate goal of curing all cavities forever.

See also: ACX writeup and their launched product, Lumina

Manifold Charity Program

Summary: Allow people to donate their Manifold mana to charities of their choice.

Assessment: 4/10

See also: Donations on the charity page

This was actually the original reason Manifold created a 501c3, back in 2022. We raised $500k from Future Fund as seed funding, to distribute to other charities; the idea was to provide some backing value for Manifold mana, and put donation decisions in the hands of our best traders.

Manifold users like that this exists. They mention that they buy into mana with the idea that they can donate it later. When Stripe initially asked us to discontinue this program, many of our users were vocally unhappy at this.

It also provides a cleaner story for why people participate on Manifold. Prediction markets are sometimes viewed negatively as “gambling”, and “gambling for fake money” is even harder to understand, whereas “gambling for charity” is easier to explain and more wholesome.

This program is currently in maintenance mode, from the perspective of Manifund. We’re continuing to administer it, but it’s not an area we’re trying to improve upon. We’ll revisit this as part of Manifold’s monetization goals in 2024. For now, it’s capped at $10k/mo (see The New Deal for Manifold’s Charity Program).

There are many potential areas of improvement to this that we could invest in:

- Make donating more of a social experience

- Support donations to any charity, make it more self-serve

- Transfer responsibility for program administration fully into Manifund

- Run matching programs to encourage more user donations

- Partner with charities

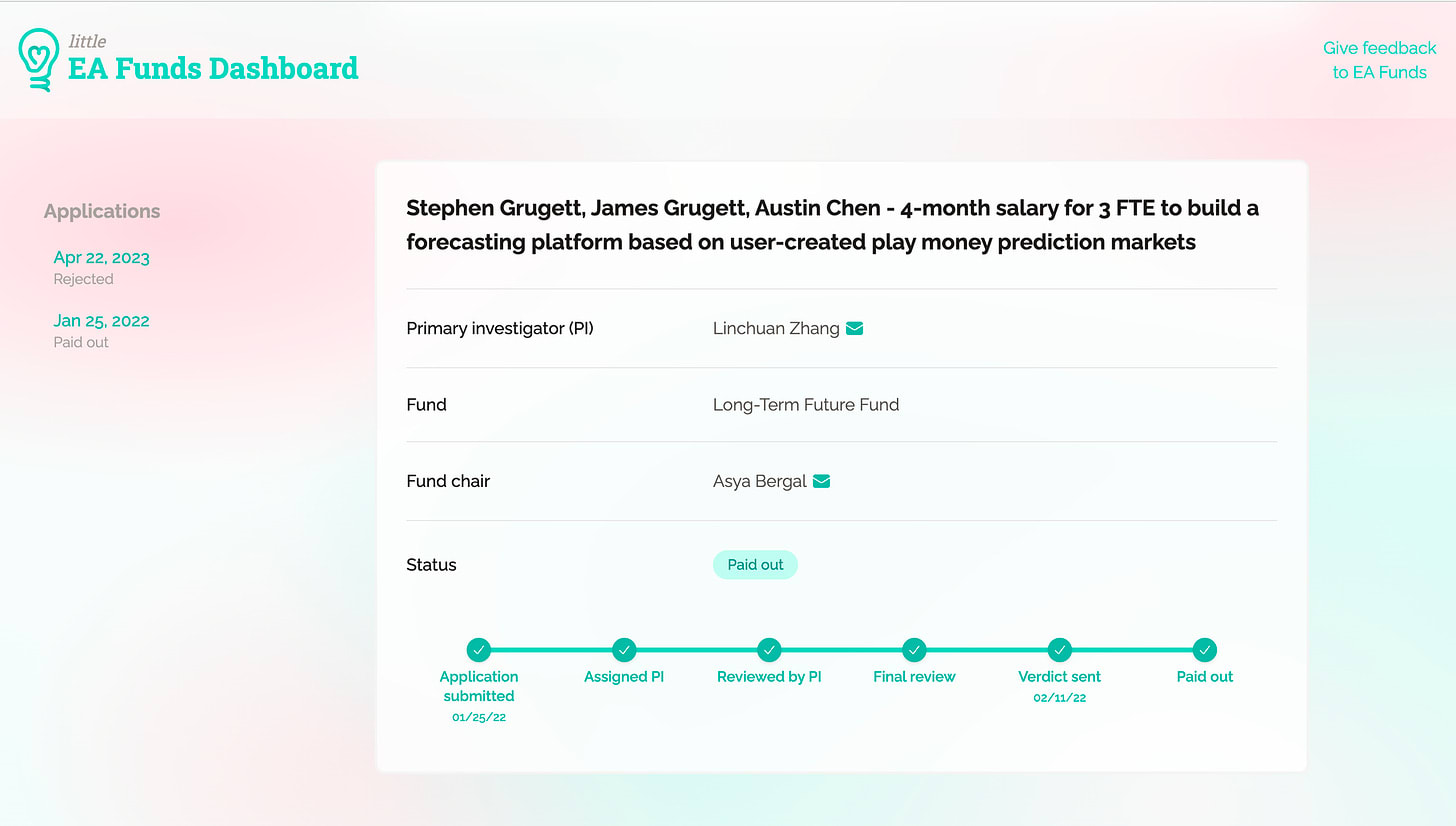

leaf-board.org: EA Funds’s grantee portal

Summary: Rachel built a dashboard for EA Funds grantees, which reads from the EA Funds system and tells applicants about their status in the grantee pipeline.

Assessment: 7/10

Here’s Manifold’s grantee page as an example:

This was a test of increased collaboration between Manifund and EA Funds, as our two orgs have a lot of overlap in cause alignment, check size, and org size. Beyond building this dashboard, we discussed many other options for collaborating as well, which may bear fruit down the line, such as creating a “common app” for EA, sharing notes on grantees, or merging our financial operations. Building this was also an experiment into “what if Manifund acted as a software consultancy, increasing the quality waterline of software in EA”. We proved that we could quickly deliver high-quality websites—Rachel shipped the entire site from scratch in <2 weeks.

Other stuff we tried

Beyond impact certs and regranting, we’ve experimented with other financial mechanisms to assist with charitable endeavors. Some of the weirder things:

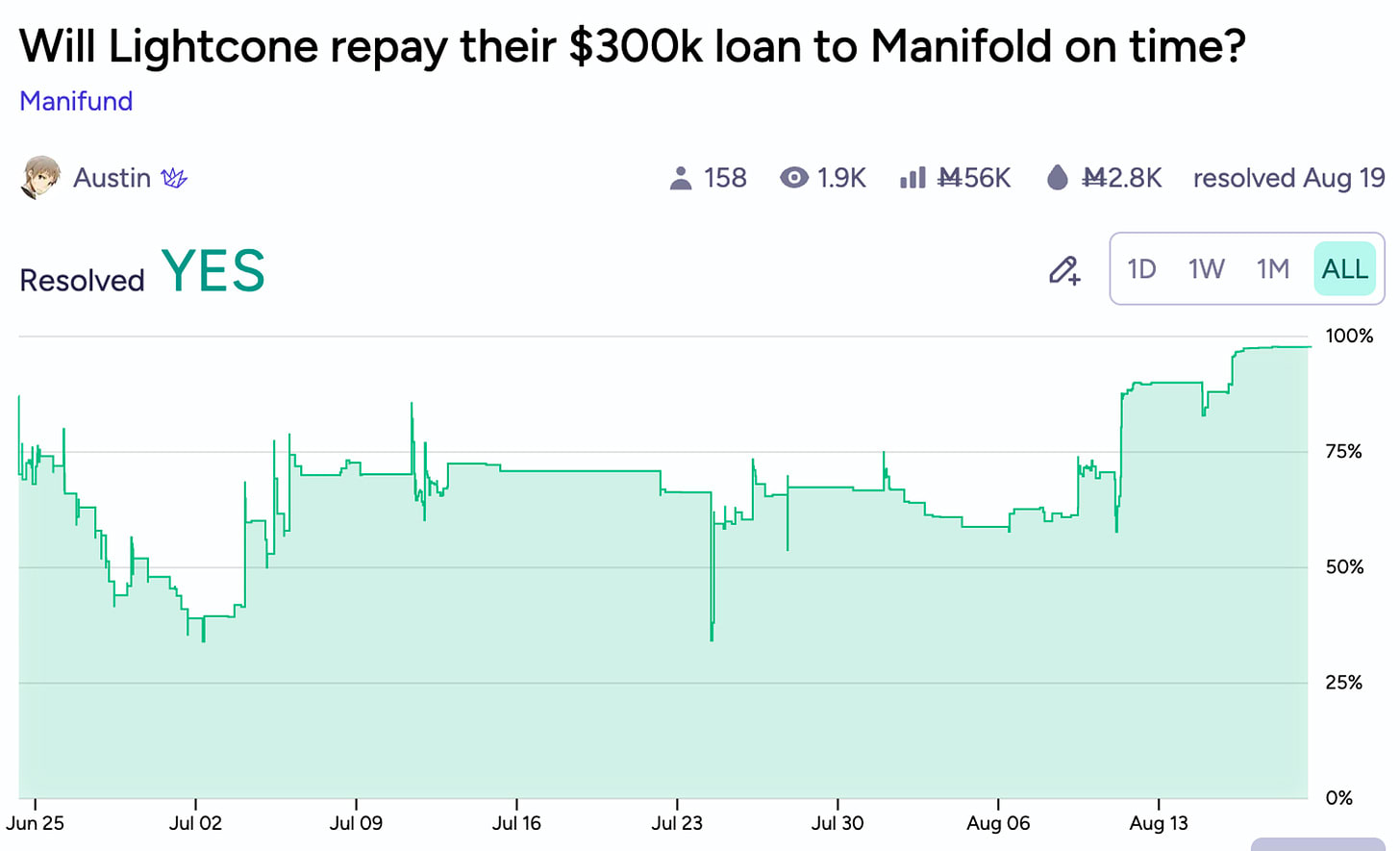

Did you know that nonprofits can make loans? We’ve loaned out $300k twice, to two orgs that we felt aligned with and that had a compelling pitch for how they would use the funds: Lightcone Infrastructure, and Marcus Abramovitch’s trading firm AltX. In both cases, we earned a nice return on investment for ourselves, while helping out other organizations in our network.

Though the market seemed to think this was a bad idea…

Did you know that nonprofits can make investments? We put in $40k as a SAFE into Lantern Bioworks, through Austin and Isaac’s regrantor budgets. We have a soft spot for venture investments made through nonprofits; Manifold Markets started our seed round with a large investment from the Future Fund.

Here are some other mechanisms that have caught our eye, as things to experiment with:

- Income share agreements, as a replacement for “upskilling grants”

- Dominant Assurance Contracts, as a partial solution to public goods funding problems and a nice addition to our crowdfunding ecosystem

- Quadratic funding and the S-process, as potential ways of calculating how much to allocate to retroactive payouts
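As an illustration of the quadratic funding idea in the last item, here is a minimal sketch of the standard matching formula, assuming it were used to split a fixed retro payout pool: each project’s ideal total is the square of the sum of the square roots of its individual contributions, its match is that ideal total minus what donors actually gave, and all matches are scaled down proportionally if they exceed the pool. The function name and example figures are hypothetical; the S-process works differently and is not shown here.

```python
import math


def quadratic_match(
    contributions: dict[str, list[float]], matching_pool: float
) -> dict[str, float]:
    """Return each project's matching amount under quadratic funding.

    Ideal total for a project = (sum of sqrt of each contribution) ** 2.
    Raw match = ideal total - sum of contributions. If raw matches add up
    to more than the pool, every match is scaled down by the same factor.
    """
    raw = {}
    for project, amounts in contributions.items():
        ideal = sum(math.sqrt(a) for a in amounts) ** 2
        raw[project] = ideal - sum(amounts)
    total = sum(raw.values())
    # Never pay out more than the pool; a zero total means nothing to match.
    scale = min(1.0, matching_pool / total) if total > 0 else 0.0
    return {project: match * scale for project, match in raw.items()}


# Hypothetical example: both projects raise $400, from different donor counts.
matches = quadratic_match(
    {"many_small_donors": [100.0] * 4, "one_big_donor": [400.0]},
    matching_pool=600.0,
)
print(matches)
```

Both hypothetical projects raise the same $400, but the one backed by four donors receives the entire $600 match while the single-donor project receives nothing. That tilt toward breadth of support is what makes quadratic funding a candidate for deciding how retro money gets split.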

What we were happy with in 2023

The core Manifund product: UI and grantee experience

This year, we built a website and entire funding ecosystem from scratch, which has moved about 2 million dollars to projects to date. Our two main areas of focus were building out novel funding mechanisms and delivering a good grantee experience, and we feel we’ve succeeded at both.

Manifund supports regranting, crowdfunding, and impact certificates. We’re the first site ever to support trading impact certificates — we think this represents a huge step forward, experimentally testing out an idea that’s been discussed for a while.

We also think it’s nicer to be a Manifund grantee than a grantee of some other orgs in the EA space: our grantees get their money faster, receive more feedback on their projects, and have an easier time communicating with us and their grantmakers.

Transparency & openness

Our initial thesis was that grant applications and screening largely can be done in public, and should be. We followed through on trying this out, and feel it went very well.

We’ve formed the largest database of public EA grant applications, as far as we know. Whereas every other application process and review happens over private writeups, Manifund enables these applications to be proposed and discussed on the public internet, including comments and feedback from grantmakers and others.

We think that more transparency in funding is a public good. For most people in EA, it’s something of a mystery how funding decisions get made, which can be frustrating and confusing. Manifund makes the thought processes of grantmakers less mysterious.

Grantees also seem to appreciate the open discussion. Here’s Brian Wang, discussing the details of their proposal for “Design and testing of broad-spectrum antivirals” with regrantor Joel Becker:

it’s been a breath of fresh air to be able to have this real-time, interactive discussion on a funding request, so props to Manifund for enabling this!

Finally, having applications in public has given projects greater exposure. Ryan Kidd told us that someone got in touch about funding SERI MATS after seeing its Manifund post, not to mention all the open-call proposals that were funded directly by small donors who simply came across them on our website. As another example, Lantern Bioworks’s Manifund proposal to cure cavities reached #1 on Hacker News, helping them share their plan widely while they were at a very early stage.

Quality of projects we’ve been supporting

This is both one of the most important metrics of success and one of the hardest to evaluate. Part of the rationale for regranting is that regrantors know more about their fields than we or our donor do; that’s why we outsource the decisions to them! This means both that they make better decisions than we could, and that it’s really hard for us to judge those decisions, since they were based on expertise we don’t have.

All that aside, the quality generally seemed pretty high to us. Almost all grants met at least one of these criteria: they were evaluated by someone with special context on the team or the work, whom we feel comfortable deferring to; they were counterfactual, or counterfactually fast when speed mattered; or they were obviously positive grants of the kind that, e.g., LTFF would make.

We’d be very curious to hear other people’s thoughts on whether this assessment seems accurate!

Coordination with other EA funders

Because the funding space is pretty fragmented, it seems like there’s a lot to be gained from a little bit of coordination. In our observation, EA funders rarely sync up, even on basic questions like “are you also planning on funding this project?” or “what are your plans for this quarter?”. We wanted to combat this and have worked closely with a handful of other orgs, and we’re happy with the value we’ve provided to them. These collaborations include:

- Astral Codex Ten: we provided a polished website, fiscal sponsorship, and payout support for ACX Mini-Grants and ACX Grants 2024.

- EA Funds: we built a dashboard to improve their grantee experience.

- Lightcone: we gave them a fast loan when they were temporarily liquidity-constrained.

And we’ll be working more with the Long-Term Future Fund and the Survival and Flourishing Fund, as they’ve agreed to be retroactive funders for ACX Grants 2024.

Areas for improvement for 2024

Fundraising

We haven’t raised much for Manifund’s core operations or our programs. Our successful attempts include the grant from the Survival and Flourishing Fund, the grant from the Future Fund, the donation from D, and the many small donations from individuals through the site. Unsuccessful attempts include our pitches to OpenPhil, Lightspeed (for regranting), and YCombinator (for impact certs).

We’ve been saying for a while that we’d like to find donors outside of traditional EA sources, but we haven’t yet given this a serious try. Ideally, the Manifund product would appeal to “tech founder” or “quant trader” types as a place where they can direct their money to charity in more efficient and aligned ways, but we’ve made few inroads into this demographic.

Hiring

Currently, Manifund consists of Rachel working full-time and Austin working about half-time. On one hand, we think our output per FTE is pretty impressive! On the other, 1.5 FTE isn’t much for everything we want to accomplish. We’re open to bringing on:

- A full-stack software engineer, to build out new features and generally improve the site

- A strategy/ops role, to lead one of our main programs (regranting, impact certs, the open call) via fundraising, partnerships & communications

That said, as a nonprofit startup seeking product-market fit, we don’t want to overhire, either.

We’d also like to improve our nonprofit board. Our board currently consists of me (Austin Chen), Barak Gila, and Vishal Maini; Barak and Vishal signed on when Manifund was just running the Manifold Charity program. As we expand our operations, I’d like to bring on board members with connections and expertise in the areas we’re trying to grow into.

Community engagement

Unlike Manifold, Manifund doesn’t have much of a community of its own. People don’t spend their free time on Manifund, or chat with each other for fun on our Discord. I tentatively think this could be a major area for improvement.

In the early days, “forecasters hanging out” was a big part of making the Manifold community feel like a live, exciting place to talk with each other. Community was a key part of Manifold’s viral loops: people would create interesting prediction market questions, then share them outside of the site. Manifund is missing a similarly powerful viral loop.

Maybe it’s hard to replicate the Manifold community because Manifund feels more transactional. The nature of evaluating grant opportunities might make things seem less fun and more “let’s get down to business”. Or perhaps working with real money feels inherently more serious, compared with Manifold’s fake money.

The closest thing we’ve had to a lively community was the regrantors channel in the first couple of months of the regranting program, though collaborative discussion and evaluation there have since tapered off. Still, it points to the possibility of creating a strong community among grantmakers or perhaps donors.

How much we help our grantees

Part of the motivation for Manifund was based on having participated in the existing funding ecosystem, feeling the grantee experience was quite lacking, and thinking “huh, surely we could do better than that…”

While we think we’ve done a lot better on turnaround times, we’ve only done slightly better on grantee feedback. When making grants, our regrantors are encouraged to write comments explaining why they chose to give the grant, and in general our comment section can facilitate conversations between any user and the applicant. However, as far as we can tell, once a grant is made there isn’t much further interaction between grantees and grantmakers (or between founders and investors, in the case of certs), and Manifund the organization doesn’t provide any further support either.

One possible mission for Manifund would be to achieve YCombinator-levels of support for our project creators. We could nudge regrantors to stay in close contact with their grantees, for example by suggesting they check in every month to see where grantees need help. We could aim to run our own batch for incubating projects at Manifund (as YC and Charity Entrepreneurship do), and facilitate stronger connections between grantees.

Building a growth loop

Right now, each of our programs takes a lot of time to organize, fundraise for, and then facilitate. We’d like our platform to be more self-serve and require less intervention from our team. For impact certs, perhaps we could standardize the different aspects of creating a contest, with a form and a standardized pipeline for spinning up custom contests.

The open call is already moderately self-serve: we haven’t put much effort into soliciting project applications or donors, and despite that we’ve received many interesting applications and donor interest!

Focus

Possibly we’re trying too many things for an org of our size and resources. On one hand, we view Manifund’s comparative advantage in the EA funding ecosystem as the ability to rapidly experiment with new programs and mechanisms that other funders wouldn’t consider; on the other, we may be able to execute better if we winnowed down our programs to only one or two that are very promising or clearly working well.

Ambitious projects & moonshots for 2024

10x’ing impact certificates: we’re reasonably happy with how impact certificates have worked out so far, and we’ve learned a lot through our experiments. The next step is to see how they work at a larger scale. Here are some ideas for much bigger prizes that could use impact certificates:

- “Nobel Prize” for AI safety work, highlighting the best examples of technical and governance work in the space each year, and allowing people to bet on entries beforehand.

- O-1 Visa impact certs: offer up e.g. $10k prizes for employers to bring in O-1 candidates; allow investors and lawyers to buy into a share of the prize?

- Eliminating flu season in San Francisco, with an Advance Market Commitment towards deployment of far-UVC tech. This is inspired by conversations with regrantor Gavriel, who works at 1DaySooner, which would be a natural partner org for this.

Building a site for “contests-as-a-service”: within EA (OpenPhil AI Worldviews, EA Criticism Contest) and outside of it (Vesuvius Challenge, AI IMO contest), there are open contests for different kinds of work, but there’s no website where you can easily host your own contest. One source of inspiration might be the world of logo design contests. As a bonus, if we make prize funding more of a norm, impact certs become much more palatable.

Pushing harder on the donor-advised fund (DAF) angle of Manifund: we’ve been using the DAF approach to legitimize prediction markets & impact certs, but on a relatively small scale (~$10k-100k/year). Could we convince large donors to store significant assets with Manifund (e.g. totalling $1M-10M/year), and offer exposure to other things that don’t work with real money (e.g. more liquid prediction markets; private stocks; ISAs)? And could we offer other services that DAFs currently don’t, like regranting as a product, or charity evaluations?

Become the central hub for all giving & donation tracking: OpenBook/Manifund merge scenario where we become a hub for donations, where people track their donations, see the donations of others, and discuss donations in one place. Giving What We Can is kind of like this, though they are less forum-y and only allow donations to a small set of orgs.

Thanks to Dave Kasten, Joel Becker, Marcus Abramovitch, and others for feedback on this writeup. We’d love to hear what you think of our work as well, and what you’d be excited to see from us as we go into 2024!
