Just a general note, I think adding some framing of the piece, maybe key quotes, and perhaps your own thoughts as well would improve this from a bare link-post? As for the post itself:

It seems Bregman views EA as:

a misguided movement that sought to weaponize the country’s capitalist engines to protect the planet and the human race

Not really sure how donating ~10% of my income to Global Health and Animal Welfare charities matches that framework tbqh. But yeah 'weaponize' is highly aggressive language here, if you take it out there's not much wrong with it. Maybe Rutger or the interviewer think Capitalism is inherently bad or something?

effective altruism encourages talented, ambitious young people to embrace their inner capitalist, maximize profits, and then donate those profits to accomplish the maximum amount of good.

Are we really doing the earn-to-give thing again here? But like apart from the snark there isn't really an argument here, apart from again implicitly associating capitalism with badness. EA people have also warned about the dangers of maximisation before, so this isn't unknown to the movement.

Bregman saw EA’s demise long before the downfall of the movement’s poster child, Sam Bankman-Fried

Is this implying that EA is dead (news to me) or that it is in terminal decline (arguable, but knowledge of the future is difficult etc etc)?

he [Rutger] says the movement [EA] ultimately “always felt like moral blackmailing to me: you’re immoral if you don’t save the proverbial child. We’re trying to build a movement that’s grounded not in guilt but enthusiasm, compassion, and problem-solving.”

I mean, this doesn't sound like an argument against EA or EA ideas? It's perhaps why Rutger felt put off by the movement, but then if you want a movement based on 'enthusiasm, compassion, and problem-solving' (which are still very EA traits to me, btw), then that's because it would be doing more good, rather than a movement wracked by guilt. This just falls victim to classic EA Judo, we win by ippon.

I don't know, maybe Rutger has written up more of his criticism somewhere more thoroughly. Feel like this article is such a weak summary of it though, and just leaves me feeling frustrated. And in a bunch of places, it's really EA! See:

- Using Rob Mather founding AMF as a case study (and who has a better EA story than AMF?)

- Pointing towards reducing consumption of animals via less meat-eating

- Even explicitly admires EA's support for "non-profit charity entrepreneurship"

So where's the EA hate coming from? I think 'EA hate' is too strong and is mostly/actually coming from the interviewer, maybe more than Rutger. Seems Rutger is very disillusioned with the state of EA, but many EAs feel that way too! Pinging @Rutger Bregman or anyone else from the EA Netherlands scene for thoughts, comments, and responses.

In general, I think the article's main point was to promote Moral Ambition, not to be a criticism of EA, so it's not surprising that it's not great as a criticism of EA.

Not really sure how donating ~10% of my income to Global Health and Animal Welfare charities matches that framework tbqh. But yeah 'weaponize' is highly aggressive language here, if you take it out there's not much wrong with it. Maybe Rutger or the interviewer think Capitalism is inherently bad or something?

For what it's worth, Rutger has been donating 10% to effective charities for a while and has advocated for the GWWC pledge many times:

So I don't think he's against that, and lots of people have taken the 10% pledge specifically because of his advocacy.

Is this implying that EA is dead (news to me) or that it is in terminal decline (arguable, but knowledge of the future is difficult etc etc)?

I think sadly this is a relatively common view, see e.g. the deaths of effective altruism, good riddance to effective altruism, EA is no longer in ascendancy

I mean, this doesn't sound like an argument against EA or EA ideas?

I think this is also a common criticism of the movement though (e.g. Emmett Shear on why he doesn't sign the 10% pledge)

This just falls victim to classic EA Judo, we win by ippon.

I think this mixes effective altruism ideals/goals (which everyone agrees with) with EA's specific implementation, movement, culture and community. Also, arguments and alternatives are not really about "winning" and "losing"

So where's the EA hate coming from? I think 'EA hate' is too strong and is mostly/actually coming from the interviewer, maybe more than Rutger. Seems Rutger is very disillusioned with the state of EA, but many EAs feel that way too!

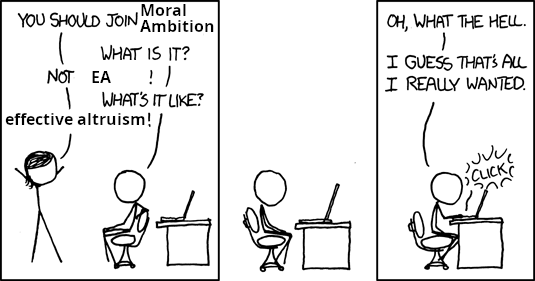

Then you probably agree that it's great that they're starting a new movement with similar ideals! Personally, I think it has a huge potential, if nothing else because of this:

If we want millions of people to e.g. give effectively, I think we need to have multiple "movements", "flavours" or "interpretations" of EA projects.

You might also be interested in this previous thread on the difference between EA and Moral Ambition.

Feels like you've slightly misunderstood my point of view here Lorenzo? Maybe that's on me for not communicating it clearly enough though.

For what it's worth, Rutger has been donating 10% to effective charities for a while and has advocated for the GWWC pledge many times...So I don't think he's against that, and lots of people have taken the 10% pledge specifically because of his advocacy

That's great! Sounds very 'EA' to me 🤷

I think this mixes effective altruism ideals/goals (which everyone agrees with) with EA's specific implementation, movement, culture and community.

I'm not sure everyone does agree really, some people have foundational moral differences. But that aside, I think effective altruism is best understood as a set of ideas/ideals/goals. I've been arguing that on the Forum for a while and will continue to do so. So I don't think I'm mixing, I think that the critics are mixing.

This doesn't mean that they're not pointing out very real problems with the movement/community. I still strongly think that the movement has a lot of growing pains/reforms/reckonings to go through before we can heal the damage of FTX and onwards.

The 'win by ippon' was just a jokey reference to Michael Nielsen's 'EA judo' phrase, not me advocating for soldier over scout mindset.

If we want millions of people to e.g. give effectively, I think we need to have multiple "movements", "flavours" or "interpretations" of EA projects.

I completely agree! Like 100000% agree! But that's still 'EA'? I just don't understand trying to draw such a big distinction between SMA and EA in the case where they reference a lot of the same underlying ideas.

So I don't know, feels like we're violently agreeing here or something? I didn't mean to suggest anything otherwise in my original comment, and I even edited it to make it more clear I was more frustrated at the interviewer than anything Rutger said or did (it's possible that a lot of the non-quoted phrasing was put in his mouth).

feels like we're violently agreeing here or something

Yes, I think this is a great summary. Hopefully not too violently?

I mostly wanted to share my (outsider) understanding of MA and its relationship with EA

Was going to post this too! Good for the community to know about these critiques and alternatives to EA. However, as JWS has already pointed out, the critiques are weak or based on a strawman version of EA.

But overall, I like the sound of the 'Moral Ambition' project, given its principles align so well with EA. Though there is a risk of confusing outsiders given how similar the goals are, and also a risk of people being falsely put off EA if they get such a biased perspective.