In the spirit of Debate Week (March 17–24), we’re revisiting the question of how bad extinction would be and introducing a broader framework for thinking about our impact on a future that may extend very far.

Introduction: Both Sides

There might come a day when sentient beings are no more. Our collective actions will likely hasten or delay that day. It is also reasonable to expect that for however long the future carries on, our choices will shape the quality of the future that emerges. What we do with our resources should be informed by both sides: length and quality. We want to develop such a framework.

Revisiting 'How Bad Would Extinction Be?'

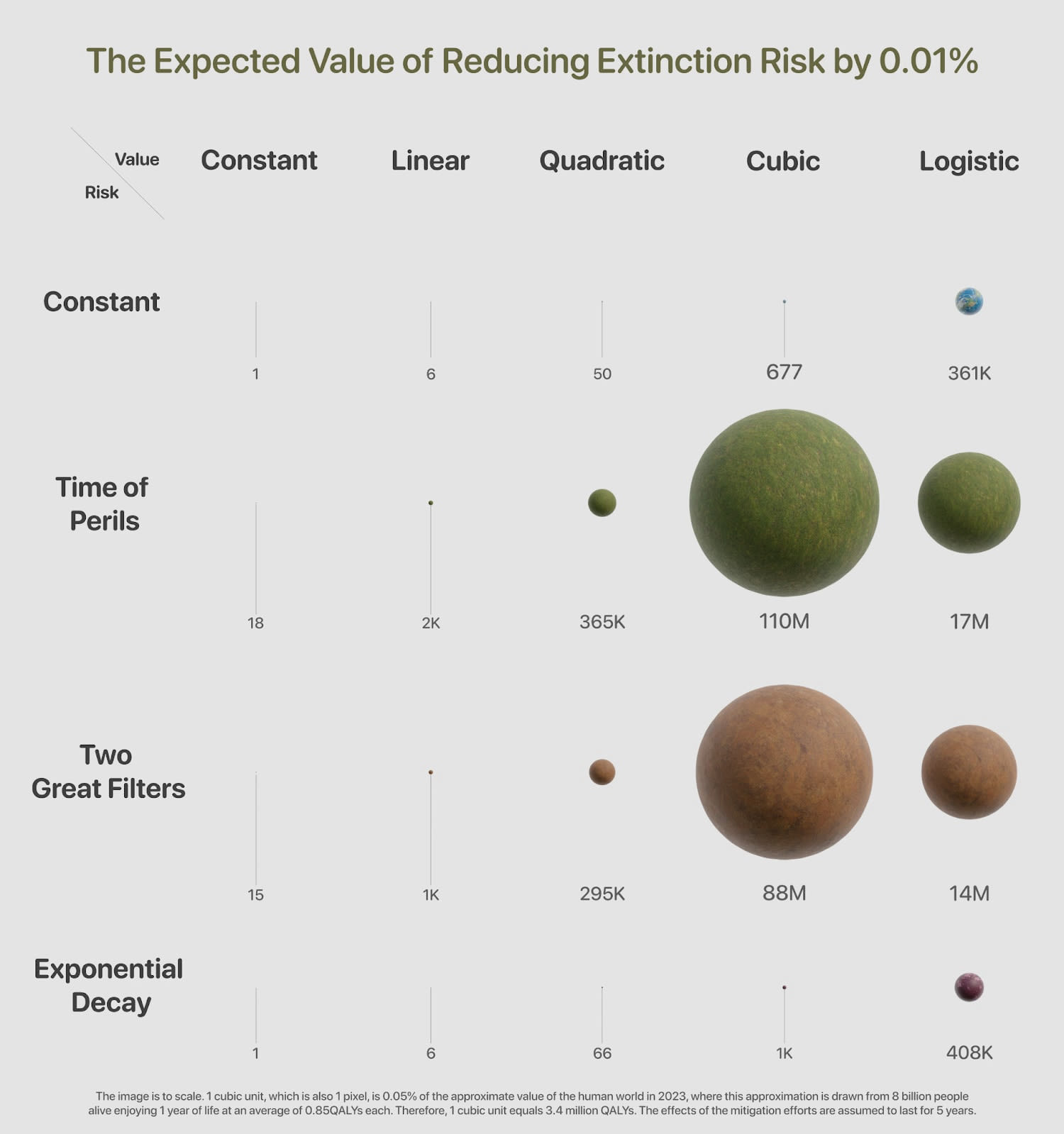

In our earlier work 'How Bad Would Human Extinction Be?', we tried to quantify the loss from human extinction in expected value terms. In that analysis, we combined two key ingredients to estimate the expected value of reducing extinction risk:

- Risk trajectories: We considered the probability of an extinction catastrophe over time – which might change over the years (due to technological advances, new threats, or safety measures).

- Value trajectories: We also considered how much value humanity could realize if it survives – essentially, the potential value of the future over time. This involves imagining how the well-being, knowledge, and achievements of future generations of sentient life might grow. For instance, the intuition behind strong longtermism is that the future could contain an astronomical amount of value (trillions of happy lives or more), meaning that human extinction would foreclose an astronomically large future.

By combining these risk and value trajectories, the previous analysis estimated the expected value of reducing extinction risk. In simple terms, even a tiny reduction in extinction risk can yield a sizable payoff in expectation, because it slightly increases the chance of unlocking that vast future. This helps explain why many effective altruists have argued that working on existential risk reduction is among the most important things we can do. On the other hand, the expected value of reducing existential risk can be much smaller than astronomical if value growth is not super fast and/or if the background risk is high.
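
To make the mechanics concrete, here is a minimal Python sketch of how a risk trajectory and a value trajectory might be combined. It is an illustration under stated assumptions, not the exact formula from the earlier piece: time is discrete, `risk[t]` is an assumed per-period extinction probability, `value[t]` is the assumed value realized in period `t` if we are still around, and every number and function name is hypothetical.

```python
from itertools import accumulate
from operator import mul


def expected_value(risk, value):
    """Sum each period's value, weighted by the probability of surviving through it."""
    # Running product of (1 - risk) gives P(still around through period t).
    survival = accumulate((1.0 - r for r in risk), mul)
    return sum(s * v for s, v in zip(survival, value))


def gain_from_risk_cut(risk, value, cut=0.0005, periods=10):
    """Extra expected value from lowering risk by an absolute `cut` in the first `periods` periods."""
    reduced = [max(r - cut, 0.0) if t < periods else r for t, r in enumerate(risk)]
    return expected_value(reduced, value) - expected_value(risk, value)


horizon = 1000
linear_value = [1.0 + 0.01 * t for t in range(horizon)]  # hypothetical slow value growth

low_risk = [0.001] * horizon   # hypothetical low background risk per period
high_risk = [0.02] * horizon   # hypothetical high background risk per period

gain_low = gain_from_risk_cut(low_risk, linear_value)
gain_high = gain_from_risk_cut(high_risk, linear_value)
```

Under these made-up numbers, `gain_low` comes out larger than `gain_high`: the same absolute risk reduction is worth less when high background risk makes a long future unlikely anyway.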

Our preliminary work showed that risk and value trajectories matter a lot when evaluating the expected value of an action in the long-term future. However, that work focused only on the expected value of actions to reduce extinction risk. We should also consider efforts we might make to change how much value the future contains. In other words, we asked 'How bad is it if the future ends prematurely?' – but we didn’t ask 'What would be the impact of making a future that does happen better?'. By focusing solely on risk reduction, the analysis left out that crucial piece of the puzzle. Moreover, as we discuss below, tractability, potential downsides, and cost of interventions should also feature in any final recommendations.

Why Bother? The Limits of a Risk-Only Focus

Focusing only on extinction risk mitigation provides an incomplete picture of long-term impact. Yes, preventing human extinction is enormously important – it safeguards the quantity of future life – but it doesn’t account for the quality of that future life. In simple terms: imagine we knew humanity would survive the next 500 years no matter what; we would still care a great deal about how those 500 years go. A future with billions of flourishing, fulfilled individuals is far more valuable than a future of equal or considerably greater length filled with suffering or stagnation.

Several limitations emerge if we look at long-term impact only through the lens of extinction risk:

- Neglecting future quality: Extinction is not the only bad outcome. Humanity’s future could be middling or wonderful depending on the actions we take. Or, indeed, it could be filled with suffering in the worst cases (s-risks). If we only try to ensure civilization’s survival, we might miss opportunities to make the future of sentient beings much better. For example, improving global institutions or spreading benevolent values could dramatically increase how well future generations fare, even though these actions might not directly change extinction probabilities.

- Diminishing returns of survival alone: Once the low-hanging fruit has been picked, further reducing an x-risk might prove particularly difficult. If so, pouring all resources into marginal risk reductions could divert attention from much more valuable trajectory improvements that reliably make future lives better or multiply the number of positive future lives. In short, an exclusive focus on survival could become inefficient by ignoring tractable ways to considerably boost welfare.

- Interaction effects: Efforts to improve future quality can also help with x-risks (and vice versa). For instance, a more prosperous and cooperative future society might be better equipped to handle risks that do arise. Alternatively, successfully averting a catastrophe could create the breathing room for society to flourish. By considering only risk, we ignore these synergies. However, there could also be tradeoffs. Some opportunities to reduce extinction risk might reduce the potential for flourishing, and some actions that increase expected flourishing might directly increase extinction risk. For example, a world ruled by a global dictator might be much better equipped to survive the coming decades, but less likely to flourish afterwards. Again, by considering risk only, we ignore these tradeoffs.

This is all to reiterate that humanity's survival is only part of securing a great long-term future — we must also ensure that humanity thrives. The earlier analysis focused solely on survival (quantity), leaving out the quality of our future, which calls for a framework that investigates both.

Is What We Have Enough?

A simple method for evaluating value changes is already possible. Imagine that the default trajectory of value follows a quadratic path, and that through certain interventions we could shift this into a logistic trajectory while keeping the risk profile unchanged. One might then simply evaluate the intervention by taking the difference in overall value between these two scenarios — comparing ‘worlds’ in the same row of the grid (Figure 1) below.
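
The comparison described above can be sketched in a few lines of Python. This is only an illustration: the shapes and parameters of the quadratic and logistic paths, the constant risk profile, and the function names are all assumptions chosen for the example, not values from our earlier analysis.

```python
import math


def expected_value(risk, value):
    """Sum each period's value, weighted by the probability of surviving through it."""
    survival, total = 1.0, 0.0
    for r, v in zip(risk, value):
        survival *= 1.0 - r
        total += survival * v
    return total


def quadratic(t, scale=1e-4):
    """Hypothetical default path: value grows with the square of time."""
    return 1.0 + scale * t * t


def logistic(t, ceiling=500.0, rate=0.02, midpoint=300.0):
    """Hypothetical shifted path: S-shaped growth toward a ceiling."""
    return 1.0 + ceiling / (1.0 + math.exp(-rate * (t - midpoint)))


horizon = 1000
risk = [0.002] * horizon  # the same assumed risk profile in both worlds

ev_default = expected_value(risk, [quadratic(t) for t in range(horizon)])
ev_shifted = expected_value(risk, [logistic(t) for t in range(horizon)])

# Value of the intervention = difference between the two worlds, risk held fixed.
intervention_value = ev_shifted - ev_default
```

With these particular parameters the logistic path sits above the quadratic one throughout the horizon, so `intervention_value` is positive; other parameter choices could reverse the sign.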

However, this approach has notable limitations. Many interventions result in only minor parameter tweaks rather than a wholesale shift to a different world. Moreover, as discussed below, the tractability and cost of such interventions, the timing of the changes they produce (whether they occur immediately or later), and persistence of their effects are not captured by this simple comparison.

Therefore, to meaningfully explore these additional dimensions of value change, we argue that they ought to be modelled in their own right rather than being treated as marginal annotations to the catastrophic risk framework. For a discussion on the range of possible modifications, see Ord’s Shaping Humanity’s Longterm Trajectory.

A Broader Model for Shaping the Future

We want to develop a broader model that explicitly compares extinction risk mitigation with direct efforts to improve the future’s value. In this framework, the expected long-term value we can achieve is still influenced by two fundamental factors:

- The chance humanity survives and reaches the future (which our actions can improve by reducing risks), and

- How valuable that future is if it comes to pass (which our actions can improve by directly shaping the world).

This broader model would essentially combine risk and value trajectories in the mathematical fashion presented by Figure 2 and Equation 1 of our previous piece. In the new model, interventions would act on either or both value and risk trajectory vectors. In practical terms, this might mean we consider interventions like advancing nuclear safety or biosecurity (which we might expect primarily increase the probability we have a long future) alongside interventions like improving global governance, fostering moral progress, or improving global animal welfare standards (which we might expect primarily increase the value realized in the future we get).

Crucially, the broadened framework wouldn’t commit to always fixing one trajectory (be it risk or value). In reality, the two approaches can complement each other (indeed, an optimal strategy may involve improving some of each), though this freedom makes the sensitivity analysis less tractable because there are fewer fixed variables. The first goal is to identify the nature of the cases where nudging up the welfare trajectory of future generations is competitive with only nudging down the probability of catastrophe. To make such comparisons rigorous, our model would account for several key variables and considerations.

Key Factors in Our Framework

When evaluating interventions with our model, we will want to pay attention to a few perhaps familiar critical factors that determine long-term impact:

- Extinction risk trajectory: This is the familiar side of the equation and we will borrow from our previous work. The model will have flexible inputs here, such as an answer to ‘what is the baseline risk of catastrophe over time, and how might it change?’

- Value growth trajectory: What is the expected trajectory of humanity’s well-being or other values if we survive? Is the future on track to steadily improve (e.g. better technology, more enlightened values, less suffering), or could it stagnate or even worsen? Direct efforts to improve the future affect this value trajectory. For instance, investing in better governance or animal welfare might put sentient life on a higher value growth path. We ask how an intervention changes the quality of future years. Does it slightly raise the baseline (e.g. contributing to the quality or quantity of positive future lives), or potentially enable compounding improvements (e.g. setting the stage for continued moral progress), or simply bring about a certain state sooner (e.g. investing in a particular kind of inevitable AI progress)? A higher value trajectory means that for each year humanity survives, more value is realized.

- Persistence of effects: Do the benefits of the intervention persist over the long run, or do they decay? And if they decay, in what manner? Persistence is a crucial variable for long-term impact. Some actions have one-off benefits that eventually fade out – for example, a single generation of economic growth or a temporary treaty might not last beyond a few decades. Other interventions can have enduring or self-reinforcing effects. For instance, successfully instilling cooperative international norms or robust institutions could shape how society functions for centuries. Or consider moral progress: a shift in values (like increased concern for animal welfare or future generations) might persist and influence behaviour for many future generations. In our model, a change that endures for hundreds or thousands of years contributes far more total value than a change that lasts only a decade. Highly persistent improvements to the future’s trajectory can rival the impact of reducing extinction risk, because they effectively add value to every surviving year going forward. On the flip side, we will bring persistence lessons from our prior work. Reducing extinction risk can itself be persistent (e.g. permanently eliminating a particular risk factor) or temporary, and that too dramatically affects its value. We try to account for how long-lasting each intervention’s impact is.

- Tractability, Potential Downsides, and Cost: How feasible is it to achieve the desired change in risk or value, and how much change can we realistically expect per amount of resources invested? Incorporating estimates of tractability helps avoid leaning too heavily on interventions that look great 'on paper' for impact but are impractical. For example, drastically changing the course of global values might be very hard, slow, and uncertain; mitigating a specific extinction risk might be more straightforward by comparison – or vice versa, depending on the state of knowledge and institutions. Tractability helps us weigh our choices by how actionable they are. Even a high-leverage opportunity (like preventing a certain kind of catastrophe) is less compelling if we have almost no clue how to do it. Conversely, a more modest but highly tractable improvement (like a proven program that boosts institutional decision-making quality) might win out if it can be scaled and if its effects persist. Backfiring potential is also an important consideration. Realistically, at best, we can only make educated guesses about our actions’ consequences, and altruistically-minded actions could well backfire. Procedurally, our aim is to consider the tractability and backfiring potential of a given intervention. This could be fleshed out as part of this model, or, more sensibly, in a further tool that takes an action’s distribution of outcomes (including futile and backfiring cases) and this framework’s machinery, and estimates cost-effectiveness given those, just like our Cross-Cause Cost-Effectiveness Model tool does.[1]
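
To illustrate the persistence factor from the list above, the following Python sketch compares the same initial value uplift under three assumed persistence profiles. The exponential-decay form, the half-lives, the flat baseline, and the constant risk are all hypothetical choices made for the example; the model itself may represent decay differently.

```python
def expected_value(risk, value):
    """Sum each period's value, weighted by the probability of surviving through it."""
    survival, total = 1.0, 0.0
    for r, v in zip(risk, value):
        survival *= 1.0 - r
        total += survival * v
    return total


def with_uplift(value, uplift, half_life=None):
    """Add an uplift that persists forever (half_life=None) or halves every `half_life` periods."""
    if half_life is None:
        return [v + uplift for v in value]
    return [v + uplift * 0.5 ** (t / half_life) for t, v in enumerate(value)]


horizon = 2000
risk = [0.001] * horizon      # hypothetical constant background risk
baseline = [1.0] * horizon    # hypothetical flat value trajectory
base_ev = expected_value(risk, baseline)

# Same initial uplift (0.1 per period), three different persistence assumptions.
gain_decade = expected_value(risk, with_uplift(baseline, 0.1, half_life=10)) - base_ev
gain_centuries = expected_value(risk, with_uplift(baseline, 0.1, half_life=300)) - base_ev
gain_permanent = expected_value(risk, with_uplift(baseline, 0.1)) - base_ev
```

The gains are ordered `gain_decade < gain_centuries < gain_permanent`, which is the point made in the persistence item: the longer a change endures, the more surviving years it adds value to.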

By building the first three factors into one framework, we aim to estimate the overall long-term expected value of different strategies. In effect, the model asks: How much total future value (in expectation) does this intervention add? This involves estimating how it shifts the survival probability and/or the value trajectory, for how long, and (when accounting for the fourth factor) how likely it is to achieve that shift. Of course, there are huge uncertainties in any such estimates – the future is hard to predict – but making the assumptions explicit helps clarify where different interventions derive their impact, on what scale it might be, and how uncertain we should be about our estimates.
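
One way the fourth factor could enter is by weighting an intervention's possible outcomes, including futile and backfiring ones, by their probabilities. The sketch below is a simplified illustration and not a description of how the Cross-Cause Cost-Effectiveness Model works; the `Outcome` class, the two example interventions, and all figures are invented for the purpose.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Outcome:
    probability: float   # assumed chance that this outcome occurs
    value_change: float  # assumed change in expected future value (negative = backfire)


def expected_value_per_dollar(outcomes, cost):
    """Probability-weighted value change divided by cost."""
    total_p = sum(o.probability for o in outcomes)
    if abs(total_p - 1.0) > 1e-9:
        raise ValueError("outcome probabilities must sum to 1")
    return sum(o.probability * o.value_change for o in outcomes) / cost


# Hypothetical long shot: large payoff on paper, usually futile, sometimes harmful.
long_shot = [Outcome(0.05, 1000.0), Outcome(0.85, 0.0), Outcome(0.10, -200.0)]
# Hypothetical steady program: modest payoff, but reliable.
steady = [Outcome(0.70, 60.0), Outcome(0.28, 0.0), Outcome(0.02, -20.0)]

long_shot_ce = expected_value_per_dollar(long_shot, cost=100.0)
steady_ce = expected_value_per_dollar(steady, cost=100.0)
```

With these invented figures the steady program comes out ahead per dollar, even though the long shot has the far larger best case. Different probabilities would of course change the ranking, which is exactly why they need to be made explicit.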

Why This Framework Matters

We believe this broader framework is important for effective altruists, funders, and decision-makers who care about influencing the long-term future. Here are a few reasons why:

- Clarifying choices: In the EA community (and especially within longtermist thinking), there’s often a debate between focusing on catastrophic risk reduction versus other long-term improvements. Our model will provide a common yardstick to evaluate both. Rather than arguing in the abstract, we can compare concrete projections: e.g. '$100 million to reduce AI-related extinction risk by X% for W years' vs. '$100 million to improve global coordination and values resulting in Y value growth, with Z persistence.' This helps identify which approach might yield more expected good, or how to balance investments between them. It moves the discussion from 'x-risk vs. everything else' to 'how much of each, given their dynamics and uncertainties'.

- Avoiding blind spots: An integrated model guards against blind spots where we might otherwise ignore a crucial lever. For instance, longtermist funders have poured a lot into x-risk areas like AI safety, sometimes assuming by default that this is superior to more indirect work. Our framework encourages evaluating that assumption: if value-improvement efforts (say, improving institutions or research into wiser governance) can have higher persistence or tractability, they could rival or exceed the impact of marginal x-risk reduction. Conversely, it also highlights how certain risk-reduction efforts could be even more valuable than we might expect under certain circumstances. By quantifying both, we’re less likely to neglect an area of enormous potential.

- Informing a portfolio approach: Different interventions have different profiles across the variables (risk reduction, value improvement, persistence, tractability). For example, work on pandemic prevention might moderately reduce extinction risk and also improve near-term global health (a direct good but maybe less persistent effect), whereas work on values (like promoting peace and moral inclusion) might have slower, more compounding effects on the value trajectory. A savvy decision-maker, or indeed a movement, might choose to diversify across multiple approaches – some aimed at keeping humanity safe, others at guiding humanity’s trajectory – to robustly improve the future under a range of assumptions. Our framework could help in constructing such a portfolio of long-term investments by making the trade-offs more transparent. It might show how a mix of interventions can jointly lower the extinction risk while also boosting the quality of key trajectories.

- Adapting to new evidence: Because we break the model down into distinct components — such as risk levels, potential value, and other key assumptions — we can clearly see how each input drives the overall conclusions. Rather than suggesting that our default settings track our final views about risk or value, the model is designed to systematize the importance and consequences of these assumptions. With this framework, decision-makers can run “what if” scenarios to stress-test which inputs are most critical, guiding further reflection and refinement. In this way, our approach is a living framework that evolves with new insights, rather than a one-time argument tied to a fixed set of assumptions.
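
A “what if” run of the kind described in the last item might look like the following Python sketch. It varies one input, the background risk, and reports whether a temporary risk cut or a small permanent value uplift adds more expected value. Both interventions, their sizes, the flat value trajectory, and the function names are hypothetical and chosen only to show the stress-testing pattern.

```python
def expected_value(risk, value):
    """Sum each period's value, weighted by the probability of surviving through it."""
    survival, total = 1.0, 0.0
    for r, v in zip(risk, value):
        survival *= 1.0 - r
        total += survival * v
    return total


def compare(background_risk, horizon=1000, risk_cut=0.0005, cut_periods=10, uplift=0.004):
    """Return (gain from a temporary risk cut, gain from a permanent relative value uplift)."""
    risk = [background_risk] * horizon
    value = [1.0] * horizon
    base = expected_value(risk, value)
    # Intervention A: absolute risk reduction during the first `cut_periods` periods only.
    reduced = [max(r - risk_cut, 0.0) if t < cut_periods else r for t, r in enumerate(risk)]
    risk_gain = expected_value(reduced, value) - base
    # Intervention B: every surviving period is worth `uplift` (here 0.4%) more.
    value_gain = expected_value(risk, [v * (1.0 + uplift) for v in value]) - base
    return risk_gain, value_gain


results = {r: compare(r) for r in (0.0005, 0.005, 0.05, 0.2)}
for r, (risk_gain, value_gain) in results.items():
    winner = "risk cut" if risk_gain > value_gain else "value uplift"
    print(f"background risk {r}: risk cut {risk_gain:.4f}, value uplift {value_gain:.4f} -> {winner}")
```

In this toy setup the risk cut wins when background risk is very low and the value uplift wins when it is very high, so the ranking of the two interventions depends on an input about which we are deeply uncertain.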

Ultimately, we hope this broader model will guide us toward strategies that maximize the value of the future in a more holistic way. Catastrophic-risk-only mindsets are, one dares say, much like a social planner who maximizes the number of life years rather than quality-adjusted life years, and who can end up with a vastly inferior analysis as a result. By accounting for both the chance of having a future and how good that future could be, we align our analysis with what really matters: ensuring sentient beings not only exist for the long haul, but that they flourish if they do.

We’re excited to continue refining this framework and applying it to real-world decisions about where to direct resources. By widening our view beyond extinction risk alone, we aim to unlock more ways to positively shape the long-term trajectory of life on Earth (and beyond). There is enormous value at stake – both in safeguarding it and in enhancing it – and we want to make sure we’re considering the full picture when we decide how to invest our efforts for the sake of future generations.

We expect some of the details to change as others get filled in. The above is intended to convey our current perspective and we especially welcome feedback at this stage.

This post was written by Rethink Priorities' Worldview Investigations Team. Rethink Priorities is a global priority think-and-do tank aiming to do good at scale. We research and implement pressing opportunities to make the world better. We act upon these opportunities by developing and implementing strategies, projects, and solutions to key issues. We do this work in close partnership with foundations and impact-focused non-profits or other entities. If you're interested in Rethink Priorities' work, please consider subscribing to our newsletter. You can explore our completed public work here.

This seems like a good model for thinking about the question, but I think the conclusion should point to focusing more, but not exclusively, on risk mitigation - as I argue briefly here.

Thanks for the post! I strongly upvoted it.

Are you planning to apply the framework to:

How do you suggest decision-makers decide on the parameters of the model? It is very difficult to predict specifics of the future over a few decades from now, so I do not know how one can make informed choices about effects on the longterm value of the future.
