Wait, what? Why a post now about the EA community's initial response to covid-19, based on an 80,000 Hours Podcast interview from April 2020? That will become clearer as you read on. There is a bigger lesson here.

Context: Gregory Lewis is a biorisk researcher, formerly at the Future of Humanity Institute at Oxford, with a background in medicine and public health. He describes himself as "heavily involved in Effective Altruism". (He's not a stranger here: his EA Forum account was created in 2014 and he has 21 posts and 6000 karma.)

The interview

Lewis was interviewed on the 80,000 Hours Podcast in an episode released on April 17, 2020. Lewis has some harsh words for how the EA community initially responded to the pandemic.

But first, he starts off with a compliment:

If we were to give a fair accounting of all EA has done in and around this pandemic, I think this would overall end up reasonably strongly to its credit. For a few reasons. The first is that a lot of EAs I know were, excuse the term, comfortably ahead of the curve compared to most other people, especially most non-experts in recognizing this at the time: that emerging infectious disease could be a major threat to people’s health worldwide. And insofar as their responses to this were typically either going above and beyond in terms of being good citizens or trying to raise the alarm, these seem like all prosocial, good citizen things which reflect well on the community as a whole.

He also pays a compliment to a few people in the EA community who have brainstormed interesting ideas about how to respond to the pandemic and who (as of April 2020) were working on some interesting projects. But he continues (my emphasis added):

But unfortunately I’ve got more to say.

So, putting things politely, a lot of the EA discussion, activity, whatever you want to call it, has been shrouded in this miasma of obnoxious stupidity, and it’s been sufficiently aggravating for someone like me. I sort of want to consider whether I can start calling myself EA adjacent rather than EA, or find some way of distancing myself from the community as a whole. Now the thing I want to stress before I go on to explain why I feel this way is that unfortunately I’m not alone in having these sorts of reactions.

... But at least I have a few people who talk to me now, who, similar to me, have relevant knowledge, background and skills. And also, similar to me, have found this community so infuriating they need to take a break from their social media or want to rage quit the community as a whole. ... So I think there’s just a pattern whereby discussion around this has been very repulsive to people who know a lot about the subject is, I think, a cause for grave concern.

That EA's approval rating seems to fall dramatically with increasing knowledge is not the pattern you typically take as a good sign from the outside view.

Lewis elaborates (my emphasis added again):

And this general sense of just playing very fast and loose is pretty frustrating. I have experienced a few times of someone recommending X, then I go into the literature, find it’s not a very good idea, then I briefly comment going, “Hey, this thing here, that seems to be mostly ignored”, then I get some pretty facile reply and I give up and go home. And that’s happened to other people as well. So I guess given all these things, it seems like bits of the EA response were somewhat less than optimal.

And I think the ways it could have been improved were mostly in the modesty direction. So, for example, I think several EAs have independently discovered for themselves things like right censoring or imperfect ascertainment or other bits of epidemiology which inform how you, for example, assess the case fatality ratio. And that’s great, but all of that was in most textbooks and maybe it’d have saved time had those been consulted first rather than doing something else instead.
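To make the right-censoring point concrete, here is a minimal sketch with entirely hypothetical numbers (not real COVID-19 data). It assumes a small, growing outbreak in which exactly 10% of cases die and every case resolves a fixed 14 days after confirmation; the names `Case`, `naive_cfr`, `resolved_cfr`, and `make_cohort` are invented for illustration. Early on, dividing deaths so far by confirmed cases so far counts still-unresolved cases as survivors, so the case fatality ratio comes out far too low.

```python
from dataclasses import dataclass
from typing import List


@dataclass
class Case:
    onset_day: int    # day the case was confirmed
    outcome_day: int  # day the case resolved (death or recovery)
    died: bool


def naive_cfr(cases: List[Case], today: int) -> float:
    """Deaths so far / confirmed cases so far.

    Unresolved cases sit in the denominator but can never appear in the
    numerator, so they are implicitly treated as survivors (right censoring).
    """
    confirmed = [c for c in cases if c.onset_day <= today]
    deaths = sum(1 for c in confirmed if c.died and c.outcome_day <= today)
    return deaths / len(confirmed)


def resolved_cfr(cases: List[Case], today: int) -> float:
    """Deaths / (deaths + recoveries), using only cases with a known outcome.

    This removes the censoring bias above, but is itself biased whenever
    deaths and recoveries take different amounts of time to resolve.
    """
    resolved = [c for c in cases if c.outcome_day <= today]
    deaths = sum(1 for c in resolved if c.died)
    return deaths / len(resolved)


def make_cohort(delay: int = 14) -> List[Case]:
    """Hypothetical outbreak: 20 cases on day 0, then 80 cases on day 10.

    Exactly 10% of each group dies, and every case resolves `delay` days
    after onset (an illustrative assumption, not an empirical value).
    """
    cases: List[Case] = []
    for onset, n_cases, n_deaths in [(0, 20, 2), (10, 80, 8)]:
        for i in range(n_cases):
            cases.append(Case(onset, onset + delay, died=i < n_deaths))
    return cases


cohort = make_cohort()
print(naive_cfr(cohort, today=15))     # early in the outbreak → 0.02
print(resolved_cfr(cohort, today=15))  # → 0.1
print(naive_cfr(cohort, today=30))     # after every case has resolved → 0.1
```

On day 15 the naive estimate is 2%, a fifth of the true 10%, purely because most cases have not resolved yet. Imperfect ascertainment (missing mild cases) pushes in the opposite direction by shrinking the denominator, which is part of why textbook treatments handle both together.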

More on this consulting textbooks:

But typically for most fields of human endeavor, we have a reasonably good way which is probably reasonably efficient in terms of picking up the relevant level of knowledge and expertise. Now, it’s less efficient if you just target it, if you know in advance what you want to know ahead. But unfortunately, this area tends to be one where it’s a background tacit knowledge thing. It’s hard to, as it were, rapier-like just stab all the things, in particular, facts you need. And if you miss some then it can be a bit tricky in terms of having good ideas thereafter.

What's worse than inefficiency:

The other problems are people often just having some fairly bad takes on lots of things. And it’s not always bad in terms of getting the wrong answer. I think some of the interventions do seem pretty ill-advised and could be known to be ill-advised if one had maybe done one’s homework slightly better. These are complicated topics generally: something you thought about for 30 minutes and wrote a Medium post about may not actually be really hitting the cutting edge.

An example of a bad take:

So I think President Trump at the moment is suggesting that, as it were, the cure is worse than the disease with respect to suppression. ... But suppose we’re clairvoyant and we see in two years’ time, we actually see that was right. ... I think very few people would be willing to, well, maybe a few people listening to this podcast can give Trump a lot of credit for calling it well. Because they would probably say, “Well yeah, maybe that was the right decision but he chose it for the wrong reasons or the wrong epistemic qualities”. And I sort of feel like a similar thing sort of often applies here.

So, for example, a lot of EAs are very happy to castigate the UK government when it was more going for mitigation rather than suppression, but for reasons why, just didn’t seem to indicate they really attended to any of the relevant issues which you want to be wrestling with. And see that they got it right, but they got it right in the way that stopped clocks are right if you look at them at the right time of day. I think it’s more like an adverse rather than a positive indicator. So that’s the second thing.

On bad epistemic norms:

And the third thing is when you don’t have much knowledge of your, perhaps, limitations and you’re willing to confidently pronounce on various things. This is, I think, somewhat annoying for people like me who maybe know slightly more as I’m probably expressing from the last five minutes of ranting at you. But moreover, it doesn’t necessarily set a good model for the rest of the EA community either. Because things I thought we were about were things like, it’s really important to think things through very carefully before doing things. A lot of your actions can have unforeseen consequences. You should really carefully weigh things up and try and make sure you understand all the relevant information before making a recommendation or making a decision.

And it still feels we’re not really doing that as much as we should be. And I was sort of hoping that EA, in an environment where there’s a lot of misinformation, lots of outrage on various social media outlets, there’s also castigation of various figures, I was hoping EA could strike a different tone from all of this and be more measured, more careful and just more better I guess, roughly speaking.

More on EA criticism of the UK government:

Well, I think this is twofold. So one is, if you look at SAGE, which is the Scientific Advisory Group for Emergencies, who released what they had two weeks ago in terms of advice that they were giving the government, which is well worth a read. And my reading of it was essentially they were essentially weeks ahead of EA discourse in terms of all the considerations they should be weighing up. So obviously being worse than the expert group tasked to manage this is not a huge rap in terms of, “Well you’re doing worse than the leading experts in the country.” That’s fair enough. But they’re still overconfident in like, “Oh, don’t you guys realize that people might die if hospital services get overwhelmed, therefore your policy is wrong.” It seems like just a very facile way of looking at it.

But maybe the thing is first like, not having a very good view. The second would be being way too overconfident that you actually knew the right answer and they didn’t. So much that you’re willing to offer a diagnosis, for example, “Maybe the Chief Medical Officer doesn’t understand how case ascertainment works or something”. And it’s like this guy was a professor of public health in a past life. I think he probably has got that memo by now. And so on and so forth.

On cloth masks:

I think also the sort of ideas which I’ve seen thrown around are at least pretty dicey. So one, in particular, is the use of cloth masks; we should all be making cloth masks and wearing them.

And I’m not sure that’s false. I know the received view in EA land is that medical masks are pretty good for the general population which I’ll just about lean in favor of, although all of these things are uncertain. But cloth masks seem particularly risky insofar as if people aren’t sterilizing them regularly which you expect they won’t: a common thing about the public that you care about is actual use rather than perfect use. And you have this moist cloth pad which you repeatedly contaminate and apply to your face which may in fact increase your risk and may in fact even increase the risk of transmission. It’s mostly based on contact rather than based on direct droplet spreads. And now it’s not like lots of people were touting this. But lots on Twitter were saying this. They cite all the things. They seem not to highlight the RCT which cluster analyzed healthcare workers to medical masks, control, and cloth masks, and found cloth masks did worse than the control. Then you would point out, per protocol, that most people in the controlled arm were using medical masks anyway or many of them were, so it’s hard to tell whether cloth masks were bad or medical masks were good. But it’s enough to cause concern. People who write the reviews on this are also similarly circumspect and I think they’ve actually read the literature where I think most of the EAs confidently pronouncing it’s a good idea generally haven’t. So there’s this general risk of having risky policy proposals which you could derisk, in expectation, by a lot, by carefully, as it were, checking the tape.

More on cloth masks:

And I still think if you’re going to do this, or you’re going to make your recommendations based on expectation, you should be checking very carefully to make sure your expectation is as accurate as it could be, especially if there’s like a credible risk of causing harm and that’s hard to do for anyone, for anything. I mean cf. the history of GiveWell, for example, amongst all its careful evaluation. And we’re sort of at the other end of the scale here. And I think that could be improved. If it was someone like, “Oh, I did my assessment review of mask use and here’s my interpretation. I talked to these authors about these things or whatever else”, then I’d be more inclined to be happy. But where there’s dozens of ideas being pinged around… Many of them are at least dubious, if not downright worrying, then I’m not sure I’m seeing really EA live out its values and be a beacon of light in the darkness of irrationality.

Lewis' concrete recommendations for EA:

The direction I would be keen for EAs to go in is essentially paying closer attention to available evidence such as it is. And there are some things out there which can often be looked at or looked up, or existing knowledge one can get better acquainted with to help inform what you think might be good or bad ideas. And I think, also, maybe there’s a possibility that places like 80K could have a comparative advantage in terms of elicitation or distillation of this in a fast moving environment, but maybe it’s better done by, as it were, relaying on what people who do this all day long, and who have a relevant background are saying about this.

So yeah, maybe Marc Lipsitch wants to come on the 80K podcast, maybe someone like Adam Kucharski would like to come on. Or like Rosalind Eggo or other people like this. Maybe they’d welcome a chance of being able to set the record straight given like two hours to talk about their thing rather than like a 15 minute media segment. And it seems like that might be a better way of generally improving the epistemic waterline of EA discussions, rather than lots of people pandemic blogging, roughly speaking, and a very rapid, high turnaround. By necessity, there’s like limited time to gather relevant facts and information.

More on EA setting a bad example:

...one of the things I’m worried about, it’s like a lot of people are going to look at COVID-19, start [wanting to] get involved in GCBRs. And sort of all these people are cautious, circumspect, lots of discretion and stuff like that. I don’t think 80K’s activity on this has really modeled a lot of that to them. Rob [Wiblin], in particular, but not alone. So having a pile of that does not fill me with great amounts of joy or anticipation but rather some degree of worry.

I think that does actually apply even in first order terms to the COVID-19 pandemic, where I can imagine a slightly more circumspect or cautious version of 80K, or 80K staff or whatever, would have perhaps had maybe less activity on COVID, but maybe slightly higher quality activity on COVID and that might’ve been better.

On epistemic caution:

I mean people like me are very hesitant to talk very much on COVID for fear of being wrong or making mistakes. And I think that fear should be more widespread and maybe more severe for folks who don’t have the relevant background who’re trying to navigate the issue as well.

The lesson

Lewis twice mentions an EA Forum post he wrote about epistemic modesty, which sounds like it would be a relevant read here. I haven't read the whole thing yet, but I adore this bon mot in the section "Rationalist/EA exceptionalism":

Our collective ego is writing checks our epistemic performance (or, in candour, performance generally) cannot cash; general ignorance, rather than particular knowledge, may explain our self-regard.

Another bon mot a little further down, which is music to my weary ears:

If the EA and rationalist communities comprised a bunch of highly overconfident and eccentric people buzzing around bumping their pet theories together, I may worry about overall judgement and how much novel work gets done, but I would grant this at least looks like fertile ground for new ideas to be developed.

Alas, not so much. What occurs instead is agreement approaching fawning obeisance to a small set of people the community anoints as ‘thought leaders’, and so centralizing on one particular eccentric and overconfident view. So although we may preach immodesty on behalf of the wider community, our practice within it is much more deferential.

This is so brilliantly written, and so tightly compressed, I nearly despair, because I fear my efforts to articulate similar ideas will never approach this masterful expression.[1]

The philosopher David Thorstad corroborates Lewis' point here in a section of a Reflective Altruism blog post about "EA celebrities".

I'm not an expert on AI, and there is so much fundamental uncertainty about the future of AI and the nature of intelligence, and so much fundamental disagreement, that it would be hard, if not impossible, to meaningfully discern the majority views of expert communities on AGI in anything like the way you can for fields like epidemiology, virology, or public health. So, covid-19 and AGI are just fundamentally incomparable in some important way.

But I do know enough about AI — things that are not hard for anyone to Google to confirm — to know that people in EA routinely make elementary mistakes, ask the wrong questions, and confidently hold views that the majority of experts disagree with.

Elementary mistakes include: getting the definitions of key terms in machine learning wrong; not realizing that Waymos can only drive with remote assistance.

Asking the wrong questions includes: failing to critically appraise whether performance on benchmark tasks actually translates into real world capabilities on tasks in the same domain (i.e. does the benchmark have measurement validity if its intended use is to measure general intelligence, or human-like intelligence, or even just real world performance or competence?); failing to wonder what (some, many, most) experts say are the specific obstacles to AGI.

Confidently holding views that the majority of experts disagree with includes: there is widespread, extreme confidence about LLMs scaling to AGI, but a survey of AI experts earlier this year found that 76% think current AI techniques are unlikely or very unlikely to scale to AGI.

The situation with AI is more forgivable than with covid because there's no CDC or SAGE for AGI. There's no research literature (or barely any, especially compared to any established field), and there are no textbooks. But, still, general critical thinking and skepticism can be applied to AGI. There are commonsense techniques and methods for understanding the issue better.

In a sense, I think the best analogy for assessing the plausibility of claims about near-term AGI is investigating claims that someone possesses a supernatural power, such as a psychic who claims to be able to solve crimes via extrasensory perception, or investigating an account of a religious miracle, like a holy relic healing someone's disease or injury. Or maybe an even better analogy is assessing the plausibility of the hypothesis that a near-Earth object is alien technology, as I discussed here. There is no science of extrasensory perception, or religious miracles. There is no science of alien technology (besides maybe a few highly speculative papers). Yet there are general principles of scientific epistemology, scientific skepticism, and critical thinking we can apply to these questions.

I resonate completely with Gregory Lewis' dismay at the gap between how the EA community does research and forms opinions today and how GiveWell evaluates charities. Like Lewis seems to, I feel that the way it is now is a betrayal of EA's founding values.

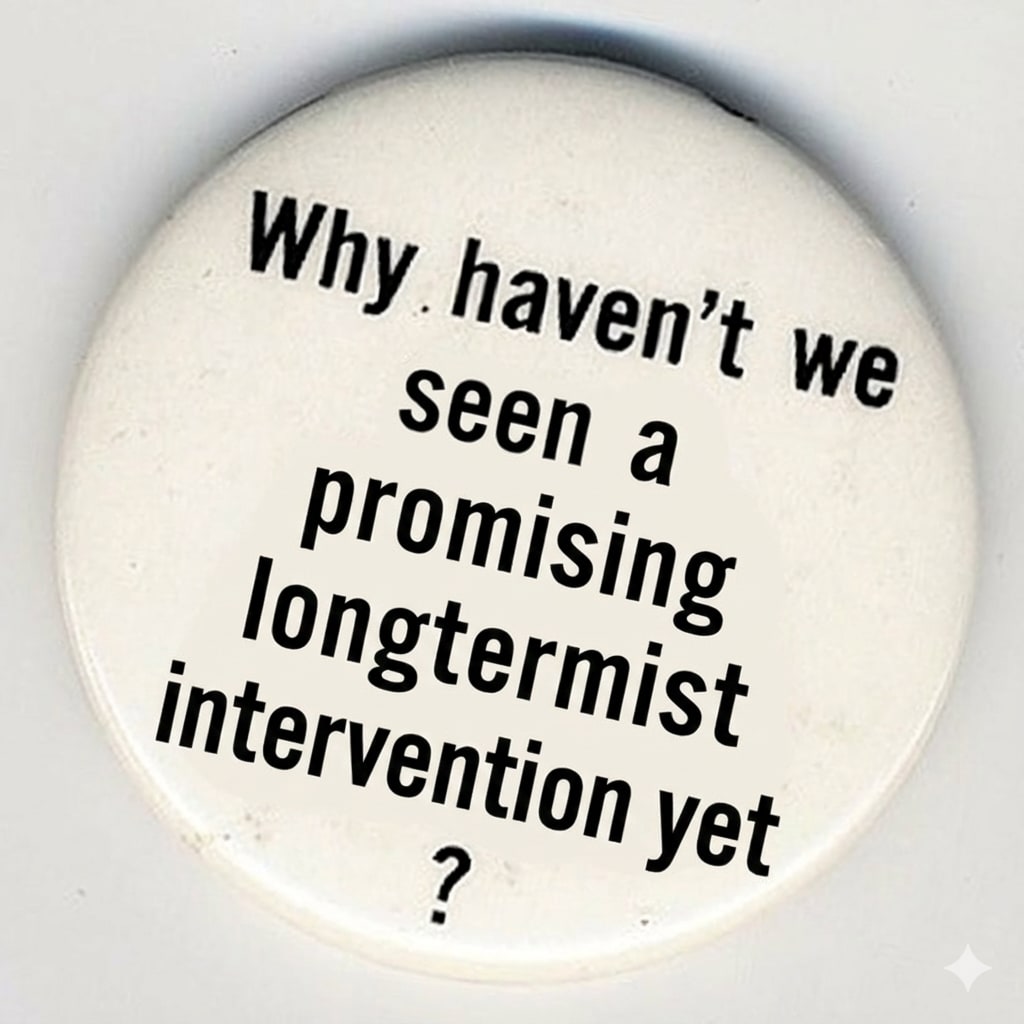

AGI is a topic where it's easy for me to point out the mistakes clearly. A similar conversation could be had about longtermism, in terms of applying general critical thinking and skepticism. To wit: if longtermism is supposedly the most important thing in the world, then, after eight years of developing the idea, after multiple books published and many more papers, why haven't we seen a single promising longtermist intervention yet, other than those that long predate the term "longtermism"? (More on this here.)

Other overconfident, iconoclastic opinions in the EA community are less prominent, but you'll often see people who think they can outsmart decades of expert study of an issue with a little effort in their spare time. You see opinions of this sort in areas including policy, science, philosophy, and finance/economics. It would be impossible for me to know enough about all these topics to point out the elementary mistakes that are probably made in all of them. But even in a few cases where I know just a little, or suddenly feel curious and care to Google, I have been able to notice some elementary mistakes.

To distill the lesson of the covid-19 example into two parts:

- In cases where there is an established science or academic field or mainstream expert community, the default stance of people in EA should be nearly complete deference to expert opinion, with deference moderately decreasing only when people become properly educated (i.e., via formal education or a process approximating formal education) or credentialed in a subject.

- In cases where there is no established science or academic field or mainstream expert community, such as AGI, longtermism, or alien technology, the appropriate approach is scientific skepticism, epistemic caution, uncertainty, common sense, and critical thinking.

[1] My best attempt so far was my post "Disciplined iconoclasm".

FWIW, it's unclear to me how persuasive COVID-19 is as a motivating case for epistemic modesty. I can also recall plenty of egregious misses from public health/epi land, and I expect re-reading the podcast transcript would remind me of some of my own.

On the other hand, the bar would be fairly high: I am pretty sure both EA land and rationalist land had edge over the general population re. COVID. Yet the main battle would be over whether they had 'edge generally' over 'consensus/august authorities'.

Adjudicating this seems murky, with many 'pick and choose' factors ('reasonable justification for me, desperate revisionist cope for thee', etc.) if you have a favoured team you want to win. To skim a few:

For better or worse, I still agree with my piece on epistemic modesty, although perhaps I find myself an increasing minority amongst my peers.

To clarify, are you saying that, in retrospect, the process through which people in EA did research on epidemiology, public health, and related topics looks any better to you now than it looked to you back in April 2020 when you did this interview?

I think I understand your point that it would probably be nearly impossible to score the conclusions in a way that people in EA would agree is convincing or fair — there's tons of ambiguity and uncertainty, hence tons of wiggle room. (I hope I'm understanding that right.)

But in the April 2020 interview, you said that many of these conclusions were akin to calling a coin flip. Crudely, many interventions that experts were still debating could be seen as roughly having a 50-50 chance of being good or bad (or maybe it's anywhere from 70-30 to 30-70, doesn't really matter), so any conclusion that an intervention is good or bad has a roughly 50-50 chance of being right. You said a stopped clock is right twice a day, and it may turn out that Donald Trump got some things right about the pandemic, but if so, it will be through dumb luck rather than good science.

So, I'm curious: leaving aside the complicated and messy question of scoring the conclusions, do you now think the EA community's approach to the science — particularly, the extent to which they wanted to do it themselves, as non-experts, rather than just trying to find the expert consensus on any given topic, or even seeing if any expert would talk to them about it (e.g. in 2020, you suggested some names of experts to have on the 80,000 Hours Podcast) — was any less bad than you saw it in 2020?

I'd say my views now are roughly the same as they were then. Perhaps a bit milder, although I am not sure how much of this is "The podcast was recorded at a time I was especially/?unduly annoyed at particular EA antics which coloured my remarks despite my best efforts (such as they were, and alas remain) at moderation" (the compliments in the preamble to my rant were sincere; I saw myself as hectoring a minority), vs. "Time and lapses of memory have been a salve for my apoplexy---but if I could manage a full recounting, I would reprise my erstwhile rage".

But at least re. epistemic modesty vs. 'EA/rationalist exceptionalism', what is ultimately decisive is overall performance: ~"Actually, we don't need to be all that modest, because when we strike out from "expert consensus" or hallowed authorities, we tend to be proven right". Litigating this is harder still than re. COVID specifically (even if 'EA land' spanked 'credentialed expertise land' re. COVID, its batting average across fields could still be worse, or vice versa).

Yet if I were arguing against my own position, what happened during COVID facially looks like fertile ground to make my case. Perhaps it would collapse on fuller examination, but it certainly doesn't seem compelling evidence in favour of my preferred approach on its face.

Thanks, that's very helpful.

I'm curious why you say that about the accuracy/performance of the conclusions of the EA community with regard to covid. Are you saying it's just overly complicated and messy to evaluate these conclusions now, even to your own satisfaction? Or do you personally have a sense of how good/bad overall the conclusions were, and you just don't think you could convince people in EA of your sense of things?

The comparison that comes to mind for me is how amateur investors (including those who don't know the first thing about investing, how companies are valued, GAAP accounting, and so on) always seem to think they're doing a great job. Part of this is they typically don't even benchmark their performance against market indexes like the S&P 500. Or, if they do, they do it in a really biased, non-rigorous way, e.g. oh, my portfolio of 3 stocks went up a lot recently, let me compare it to the S&P 500 year-to-date now. So, they're not even measuring their performance properly in the first place, yet they seem to believe this is a great idea and they're doing a great job anyway.

Studies of even professional investors find it's rare for an investor to beat the market over a 5-year period, and even rarer for an investor who beats the market in a 5-year period to beat the market again in the next 5-year period. There actually seems to be a surprisingly weak correlation between beating the market in one period and beating it in the next. Using your coin flip analogy, if every stock trade is a bet on a roughly 50/50 proposition, i.e., "this stock will beat the market" or "this stock won't beat the market", then you need a large sample size of trades to rule out the influence of chance. It's so easy for amateurs to cherry-pick trades, prematurely declare victory (e.g. say they beat the market the moment a stock goes up a lot, rather than waiting until the end of the quarter or the end of the year), become overconfident on too small a number of trades (e.g. just bought Apple stock), or not even benchmark their performance against the market at all.
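A minimal sketch of the coin-flip arithmetic, assuming (as in the analogy above) that every call is an independent 50/50 proposition. Real calls are neither independent nor exactly 50/50, and the function names and numbers here are hypothetical, chosen only to show how easily a short winning streak arises by luck.

```python
from math import comb


def p_at_least(k: int, n: int, p: float = 0.5) -> float:
    """Probability of getting at least k of n independent calls right when
    each call is right with probability p (p = 0.5 means pure luck)."""
    return sum(comb(n, j) * p**j * (1 - p) ** (n - j) for j in range(k, n + 1))


def p_someone_lucky(k: int, n: int, forecasters: int, p: float = 0.5) -> float:
    """Probability that at least one of `forecasters` independent guessers
    gets at least k of n calls right by chance alone."""
    return 1 - (1 - p_at_least(k, n, p)) ** forecasters


print(p_at_least(7, 10))             # one guesser, 7+ of 10 right → 0.171875
print(p_someone_lucky(10, 10, 100))  # any of 100 guessers, 10 of 10 right
print(p_at_least(60, 100))           # one guesser, 60+ of 100 right
```

Under these assumptions, a single guesser gets 7 or more of 10 calls right about 17% of the time, and among 100 guessers making 10 calls each there is roughly a 9% chance that at least one goes 10 for 10. A 60% hit rate is much harder to produce by luck over 100 calls (under 3%), which is why sample size, not a memorable streak, is what separates skill from chance.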

Seeing these irrationalities so often and so viscerally, and even seeing how hard it is to talk people out of them when you can show them the research and expert opinion, or explain these concepts, I'm extremely skeptical of people who just have an intuitive gut feeling that they've outperformed experts over a statistically significant number of calls or predictions, in the absence of any kind of objective accounting of their performance. Feeling like one is winning is so tempting, and feels so good, that it's hard to take a moment of sober second thought: to double-check that feeling against an objective measure (in the case of stocks, a market index), to ask whether you can rule out luck (e.g. you just bought Apple and that's it), and to ask whether you can rule out bias in your assessment of performance (e.g. checking the S&P 500 right after your favourite stock has gone up a lot).

If the process was as bad as you say, as in, people who had done a few weeks of reading on the relevant science and medicine making elementary mistakes, then, given how much psychological bias is involved in people recalling and subjectively assessing their own track record, I'm very skeptical of that recollection and of any confidence they have about it. It seems like if we don't need people who understand science and medicine to do science and medicine properly, then a lot of our education system and our scientific and medical institutions are a waste. Given that it's just so commonsense that understanding a subject better should lead you to make better calls on that subject (overall, over the long term, statistically), we should not violate common sense on the basis of a few amateurs guessing a few coin flips better than experts, and we should especially not violate common sense when we can't even confirm whether that actually happened.

On the other hand, many early critiques of GiveWell were basically "Who are you, with no background in global development or in traditional philanthropy, to think you can provide good charity evaluations?"

That seems like a perfectly reasonable, fair challenge to put to GiveWell. That’s the right question for people to ask! My impression is that GiveWell, starting early on and continuing today, has acted with humility and put in the grinding hard work to build credibility over time.

I don’t think GiveWell would have been a meaningfully useful project if a few people just spent a bit of their spare time over a few weeks to produce the research and recommendations. (Yet that seems sufficient in some cases, such as covid-19, for people in EA to decide to overrule expert opinion.)

It could have been different if there were already a whole field or expert community doing GiveWell-style cost-effectiveness evaluations for global health charities. Part of what GiveWell did was identify a gap in the “market” (so to speak) and fill it. They weren’t just replicating the effort of experts.

[Edited on Dec. 16 at 8:50 PM Eastern to add: to clarify, I mean the job GiveWell did was more akin to science journalism or science communication than original scientific research. They were building off of expert consensus rather than challenging it.]

As I recall, GiveWell was initially a project the founders tried to do in their spare time, before they quickly realized it was such a big task that it would have to be a full-time job. I also hazily recall them doing a lot of work to get up to speed, and that they had early on (and still have) a good process for taking corrections from people outside the organization.

I think as a non-expert waltzing into an established field, you deserve the skepticism and the challenges you will initially get. That is something for you to overcome, that you should welcome as a test. If that is too hard, then the project is too hard.

After all, this is not about status, esteem, ego, or pride, right? It’s about doing good work, and about doing right by the aid recipients or the ultimate beneficiaries of the work. It's not about being right, it's about getting it right.

Based on my observations and interactions, EA had much more epistemic modesty and much less of a messiah complex in the early-to-mid-2010s. That said, as early as 2016 I saw a genuinely scary and disturbing sign of a messiah complex from a LEAN employee/volunteer, who mused on a Skype call about the possibility of EA solving the world's problems in priority sequence. (That person, incidentally, also explicitly encouraged me and my fellow university EA group organizer in the Skype meeting to emotionally manipulate students into becoming dedicated effective altruists in a way that I took to be plainly unethical and repugnant. So, overall, a demented conversation.)

The message and tone in the early-to-mid-2010s was more along the lines of: 'we have these ideas about our obligation to give to charity and about charity cost-effectiveness that might sound radical and counterintuitive, but we want to have a careful, reasonable conversation about it and see if we can convince people we're on the right track'. Whereas by now, in the mid-2020s, the message and tone feels more like: 'the EA community has superior rationality to 99.9% of people on Earth, including experts and academics in fields we just started thinking about 2 weeks ago, and it's up to us to save the world — no, the lightcone!'. I can see how the latter would be narratively and emotionally compelling to some people, but it's also extremely off-putting to many other people (like me, perhaps also to Gregory Lewis and other people he knows with backgrounds in public health/medicine/epidemiology/virology/etc., although I don't want to speak for him or the people he knows).

In fact, they were largely building off the efforts of recognized domain experts. See, e.g., this bibliography from 2010 of sources used in the "initial formation of [its] list of priority programs in international aid," and this 2009 analysis of bednet programs.

I agree with this if you read the challenge literally, but the actual challenges were usually closer to a reflexive dismissal without actually engaging with GiveWell's work.

Also, I disagree that the only way we were able to build trust in GiveWell was through this:

We can often just look at object-level work, study research & responses to the research, and make up our mind. Credentials are often useful to navigate this, but not always necessary.

I don't know the specific, actual criticisms of GiveWell you're referring to, so I can't comment on how fair or reasonable they were.

My point is more abstract: just that, in general, it is fair to challenge non-experts who are trying to do serious work in an area outside their expertise. It is a challenge that anyone in the position of the GiveWell founders should gladly and willingly accept, or else they're not up to the job.

Reputation, trust, and credibility in an area where you are a neophyte is not a right owed to you automatically. It's something you earn by providing evidence that you are trustworthy, credible, and deserve a good reputation.

This is hazy and general, so I don't know what you specifically mean by it. But there are all kinds of reasons that non-experts are, in general, not competent to assess the research on a topic. For example, they might be unacquainted with the nuances of statistics, experimental designs, and theories of underlying mechanisms involved in studies on a certain topic. Errors or caveats that an expert would catch might be missed by an amateur. And so on.

I am extremely skeptical of any claim that an individual or a group is competent at assessing research in any and all extant fields of study, since this would seem to imply that individual or group possesses preternatural abilities that just aren't realistic given what we know about human limitations. I think the sort of Tony Stark or Sherlock Holmes general-purpose geniuses of fiction exist only in fiction. But even if they existed, we would know who they are, and they would have a litany of objectively impressive accomplishments.

If you took this seriously, in 2011 you'd have had no basis to trust GiveWell (quite new to charity evaluation, not strongly connected to the field, no credentials) over Charity Navigator (10 years of existence, considered mainstream experts, CEO with 30 years of experience in charity sector).

But, you could have just looked at their website (GiveWell, Charity Navigator) and tried to figure out yourself whether one of these organisations is better at evaluating charities.

This feels like a motte-and-bailey: the motte is "skeptical of any claim that an individual or a group is competent at assessing research in any and all extant fields of study", and the bailey is almost complete deference, decreasing only with formal education or credentials. GiveWell obviously never claimed to be experts in much beyond GHW charity evaluation.

Well, no. Because I did hold that view very seriously (as I still do) in the late 2000s and early 2010s, and I came to trust GiveWell.

Charity Navigator doesn't even claim to evaluate cost-effectiveness; they don't do cost-effectiveness estimates.

Even prior to GiveWell, there were similar ideas kicking around. A clunky early term that was used was 'philanthrocapitalism' (which is a mouthful and also ambiguous). It meant that charities should seek an ROI in terms of impact like businesses do in terms of profit.

Back in the day, I read the development economist William Easterly's blog Aid Watch (a project of NYU's Development Research Institute) and he called it something like the smart aid movement, or the smart giving movement.

The old blog is still there in the Wayback Machine, but the Wayback Machine doesn't allow for keyword search, so it's hard to track down specific posts.

I had forgotten until I just went spelunking in the archive that William Easterly and Peter Singer had a debate in 2009 about global poverty, foreign aid, and charity effectiveness. The blog post summary says that even though it was a debate and they disagreed on things, they agreed on recommendations to donate to some specific charities.

My point here is that charity effectiveness had been a public conversation involving aid experts like Easterly going back a long time. You never would have taken away from this public conversation that you should pay attention to something like Charity Navigator rather than something like GiveWell.

In the late 2000s and early 2010s, what international development experts would have told you to look at Charity Navigator?

I might have done a poor job getting across what I'm trying to say. Let me try again.

What I mean is that, in order for a person or a group of people to avoid deferring to experts in a field, they would have to be competent at assessing research in that field. And maybe they are for one or a few fields, but not all fields. So, at some point, they have to defer to experts on some things — on many things, actually.

What I said about this wasn't intended as a commentary on GiveWell — sorry for the confusion. I think GiveWell's approach was sensible. They realized that competently assessing the relevant research on global poverty/global health would be a full-time job, and they would need to learn a lot, and get a lot of input from experts — and still probably make some big mistakes. I think that's an admirable approach, and the right way to do it.

I think this is quite different from spending a few weeks researching covid and trying to second-guess expert communities, rather than just trying to find out what the consensus views among expert communities are. If some people in EA had decided in, say, 2018 to start focusing full-time on epidemiology and public health, and then started weighing in on covid-19 in 2020 — while actively seeking input from experts — that would have been closer to the GiveWell approach.

Yeah but didn’t it turn out that Gregory Lewis was basically wrong about masks, and the autodidacts he was complaining about were basically right? Am I crazy? How are you using that quote, of all things, as an example illustrating your thesis??

More detail: I think a normal person listening to the Gregory Lewis excerpt above would walk away with an impression: “it’s maybe barely grudgingly a good idea for me to use a medical mask, and probably a bad idea for me to use a cloth mask”. That’s the vibe that Lewis is giving. And if this person trusted Lewis, then they would have made worse decisions, and been likelier to catch COVID, than if they had instead listened to the people Lewis was criticizing. Because current consensus is: medical masks are super duper obviously good for not catching COVID, and cloth masks are not as good as medical masks but clearly better than nothing.

This sounds like outcome bias to me, i.e., believing in retrospect that a decision was the right one because it happened to turn out well. For example, if you decide to drive home drunk and don't crash your car, you could believe based on outcome bias that that was the right decision.

There may also be some hindsight bias going on, where in retrospect it's easier to claim that something was absolutely, obviously the right call, when, in fact, at the time, based on the available evidence, the optimally rational response might have been to feel a significant amount of uncertainty.

I don't know if you're right that someone taking their advice from Gregory Lewis in this interview would have put themselves more at risk. Lewis said it was highly uncertain (as of mid-April 2020) whether medical masks were a good idea for the general population, but to the extent he had an opinion on it at the time, he was more in favour than against. He said it was highly uncertain what the effect of cloth masks would ultimately turn out to be, and highlighted the ambiguity of the research at the time. There was a randomized controlled trial that found cloth masks did worse than the control, but many people in the control group were most likely wearing medical masks. So, it's unclear.

The point he was making with the cloth masks example, as I took it, was simply that although he didn't know how the research was ultimately going to turn out, people in EA were missing stuff that experts knew about and that was stated in the research literature. So, rather than engaging with what the research literature said and deciding based on that information, people in EA were drawing conclusions from less complete information.

I don't know what Lewis' actual practical recommendations were at the time, or if he gave any publicly. It would be perfectly consistent to say, for example, that you should wear medical masks as a precaution if you have to be around people and to say that the evidence isn't clear yet (as of April 2020) that medical masks are helpful in the general population. As Lewis noted in the interview, the problem isn't with the masks themselves, it's that medical professionals know how to use them properly and the general population doesn't. So, how does that cash out into advice?

You could decide: ah, why bother? I don't even know if masks do anything or not. Or you could think: oh, I guess I should really make sure I'm wearing my mask right. What am I supposed to do...?

Similarly, with cloth masks, when it became better-known how much worse cloth masks were than medical masks, everyone stopped wearing cloth masks and started wearing KN95, N95 masks, or similar. If someone's takeaway from that April 2020 interview with Lewis was that the efficacy of cloth masks was unclear and there's a possibility they might even turn out to be net harmful, but that medical masks work really well if you use them properly, again, they could decide at least two different things. They could think: oh, why bother wearing any mask if my cloth mask might even do more harm than good? Or they could think: wow, medical masks are so much better than cloth masks, I really should be wearing those instead of cloth masks.

Conversely, people in EA promoting cloth masks might have done more harm than good depending on, well, first of all, if anyone listened to that advice in the first place, but, second, on whether any people who did listen decided to go for cloth masks rather than no mask, or decided to wear cloth masks instead of medical masks.

Personally, my hunch is that if very early on in the pandemic (like March or April 2020), there had been less promotion of cloth masks, and if more people had been told the evidence looked much better for medical masks than for cloth masks (slight positive overall story for medical masks, ambiguous evidence for cloth masks, with a possibility of them even making things worse), then people would have really wanted to switch from cloth masks to medical masks — because this is what happened later on when the evidence came in and it was much clearer that medical masks were far superior.

The big question mark over all this is that I don't know (or don't remember) at what point the supply chain was able to provide enough medical masks for everybody.

I looked into it and found a New York Times article from April 3, 2020 that discusses a company in Chicago that had a large supply of KN95 masks, although this is still in the context of providing masks to hospitals:

I found another source that says, "Millions of KN95 masks were imported between April 3 and May 7 and many are still in circulation." But this is also still in the context of hospitals, not the general public. That's in the United States.

I found a Vice article from July 13, 2020 that implies that by that point it was easy for anyone to buy KN95 masks. Similarly, starting on or around June 30, 2020, there were vending machines selling KN95 masks in New York City subway stations. Apparently, though, KN95 masks were already for sale in vending machines in New York as early as May 29.

In any case:

For balance, the established authorities' early beliefs and practices about COVID did not age well. Some of that can be attributed to governments doing government things, like downplaying the effectiveness of masks to mitigate supply issues. But, for instance, the WHO fundamentally misunderstood how COVID is transmitted . . . for many months. So we were told to wash our groceries, a distraction from things that would have made a difference. Early treatment approaches (e.g., being too quick to put people on vents) were not great either.

The linked article shows that some relevant experts had a correct understanding early on but struggled to get acceptance. "Dogmatic bias is certainly a big part of it,” one of them told Nature later on. So I don't think the COVID story would present a good case for why EA should defer to the consensus view of experts. Perhaps it presents a good case for why EA should be very cautious about endorsing things that almost no relevant expert believes, but that is a more modest conclusion.

Is this a response to what Gregory Lewis said? I don’t think I understand.

Maybe this is subtle/complicated… Are the examples you’re citing the actual consensus views of experts? Or are they examples of governments and institutions like the World Health Organization (WHO) misunderstanding the expert consensus and/or misrepresenting it to the public?

This excerpt from the Nature article you cited makes it sound like the latter:

Did random members of the EA community — as bright and eager as they might be — with no prior education, training, or experience with relevant fields like public health, epidemiology, virology, and medicine outsmart the majority of relevant experts on this question (airborne vs. not) or any others, not through sheer luck or chance, but by actually doing better research? This is a big claim, and if it is to be believed, it needs strong evidentiary support.

In this Nature article, there is an allegation that the WHO wasn’t sufficiently epistemically modest or deferential to the appropriate class of experts:

And there is an allegation around communications, rather than around the science itself:

So, let’s say there’s another pandemic. Which is the better strategy?

Strategy A: Read forum posts and blog posts by people in the EA community doing original research and opining on epidemiology, virology, and public health who have never so much as cracked open a relevant textbook.

Strategy B: Survey sources of expert opinion, including publications like Nature, open letters written on behalf of expert communities, statements by academic and scientific organizations and so on, to determine if a particular institution like the WHO is accurately communicating the majority view of experts, or if they’re a weird outlier adhering to a minority view, or just communicating the science badly.

I would say the Nature article is support for Strategy B and not at all support for Strategy A.

You could even interpret it as evidence against Strategy A. If you believe the criticism in Nature is right, even experts in an adjacent field or subfield, who have prestigious credentials like advising the WHO, can get things catastrophically wrong by being insufficiently epistemically modest and not deferring enough to the experts who know the most about a subject, and who have done the most research on it. If this is true, then that should make you even more skeptical about how reliable the research and recommendations will be from a non-expert blogger with no relevant education who only started learning about viruses and pandemics for the first time a few weeks ago.

What is described in the Nature piece sounds like incredibly subtle mistakes (if we can for sure call them mistakes at this point). Lewis’ critique of the EA community is that it was making incredibly obvious, elementary mistakes. So, why think the EA community can outperform experts on avoiding subtle mistakes if it can’t even avoid the obvious mistakes?

One of the recent examples of hubris I saw on the EA Forum was someone asserting that they (or the EA community at large) could resolve, within the next few months/years, the fundamental uncertainty around the philosophical assumptions that go into cost-effectiveness estimates comparing shrimp welfare and human welfare. Out of the topics I know well, the philosophy of consciousness might be #1, or at least near the top. I don’t know how to convey the level of hubris that comment betrays.

It would be akin to saying that a few people in EA, with no training in physics, could figure out, in a few months or years, the correct theory of quantum gravity and reconcile general relativity and quantum mechanics. Or that a few people in EA, with no training in biology or medicine, would be able to cure cancer within a few months or years. Or that, with no background in finance, business, or economics, they’d be able to launch an investment fund that consistently, sustainably achieves more alpha than the world’s top-performing investment funds every year. Or that, with no background in engineering or science, they’d be able to beat NASA and SpaceX in sending humans to Mars.

In other words, this is a level of hubris that’s unfathomable, and just not a serious or credible way to look at the world or your own abilities, in the absence of strong, clear evidence that you possess even average abilities relative to the relevant expert class.

I don’t know the first thing about epidemiology, virology, public health, or medicine. So, I can’t independently evaluate how appropriate or correct it is/was for Gregory Lewis to be so aggravated by the EA community’s initial response to covid-19 that he considered distancing himself from the movement. I can believe that Lewis might be correct because a) he has the credentials, b) the way he’s describing it is how it essentially always turns out when non-experts think they can outsmart experts in a scientific or medical field without first becoming experts themselves, and c) in areas where I do know enough to independently evaluate the plausibility of assertions made by people in the EA community on the object level, I feel as infuriated and incredulous as Lewis described feeling in that 80,000 Hours interview.

I see these sorts of wildly overconfident claims about being able to breezily outsmart experts on difficult scientific, philosophical, or technical problems as moderately, but not dramatically, more credible than the people talking about UFOs or ESP or whatever. (Which apparently is not that rare.)

I see a general rhetorical or discursive strategy employed across many people with fringe views, be they around pseudoscience, fake medicine, or conspiracy theories. First, identify some scandal or blunder or internecine conflict within some scientific expert community. Second, say, “Aha! They’re not so smart, after all!” Third, use this as support for whatever half-cocked pet theory you came up with. This is obviously a logically invalid argument, as in, obviously the conclusion does not logically follow from the premises. The standard for scientific experts should not be perfection; the standard for amateurs, dilettantes, and non-expert iconoclasts should be showing they can objectively do better than the average expert — not on a single coin flip, but on an objective, unbiased measure of overall performance.

There is a long history in the LessWrong community of opposition to institutional science, with the typical amount of intellectual failure that usually comes with opposition to institutional science. There is a long history of hyperconfidently scorning expert consensus and being dead wrong. Obviously, there is significant overlap between the LessWrong community and the EA community, and significant influence by the former on the latter. What I fear is that this anti-scientific attitude and undisciplined iconoclasm has become a mainstream, everyday part of the EA community, in a way that was not true, or at least not nearly as true, in my experience, in the early-to-mid-2010s.

The obvious rejoinder is: if you really can objectively outperform experts in any field you care to try your hand at for a few weeks, go make billions of dollars right now, or do any other sort of objectively impressive thing that would provide evidence for the idea that you have the abilities you think you do. Surely, within a few months or years of effort, you would have something to show for it. LessWrong has been around for a long time, EA has been around for a long time. There’s been plenty of time. What’s the excuse for why people haven’t done this yet?

And, based on base rates, what would you say is more likely: people being misunderstood iconoclastic self-taught geniuses who are on the cusp of greatness or people just being overly confident based on a lack of experience and a lack of understanding of the problem space?

Just responding on this point since a comment of mine was linked to (re ESP):

Are you sure that no-one with any credibility thinks UFOs may be extraterrestrial spacecraft?

And re these two topics and a few others, the experts (in the sense of those who have spent years researching them) are largely those who believe they are real, as they are taboo topics among mainstream scientists. Hence the latter are surprisingly ignorant about them, with little/no knowledge of the research, and a tendency to resort to unscientific hand-wavy dismissals that don’t stand up to scrutiny.

(I’m not claiming to be an expert on either of these topics myself by the way, though I seem to be better read on them than most astronomers and psychologists.)

Yes.

This was interesting to read! I don't necessarily think the points that Greg Lewis raised are that big of a deal because while it can sometimes be embarrassing to discuss and investigate things as non-experts, there are also benefits that can come from it. Especially when the experts seem to be slow or under political constraints or sometimes just wrong in the case of individual experts. But I agree that EA can fall into a pattern where interested amateurs discuss technical topics with the ambition (and confidence?) of domain experts -- without enough people in the room noticing that they might be out of their depth and missing subtle but important things.

Some comments on the UK government's early reaction to Covid:

Even if we assume that it wasn't possible for non-experts to do better than SAGE, I'd say it was still reasonable for people to have been worried that the government was not on top of things. The recent Covid inquiry lays out that SAGE was only used to assess the consequences of policies that the politicians presented before them; lockdown wasn't deemed politically feasible (without much thought -- it basically just wasn't seriously considered until very late). This led to government communications doing this weird dance where they tried to keep the public calm and speak about herd immunity and lowering the peak, but their measures and expectations did not match the reality of the situation.

Not to mention that when it came to the second lockdown later in 2020, by that point Boris Johnson was listening to epidemiologists who were just outright wrong. (Sunetra Gupta had this model that herd immunity had already been reached because there was this "iceberg" of not-yet-seen infections.) It's unclear how much similar issues were already a factor in February/March of 2020. (I feel like I vaguely remember a government source mentioning vast numbers of asymptomatic infections before the first lockdown, but I just asked Claude about summarizing the inquiry findings on this, and Claude didn't find anything that would point to this having been a factor. So, maybe I misremembered or maybe the government person did say that in one press interview as a possibility, but then it wasn't a decisive factor in policy decisions and SAGE itself obviously never took this seriously because it could be ruled out early on.)

So, my point is that you can hardly blame EAs for not leaving things up to the experts if the "experts" include people who even in autumn of 2020 thought that herd immunity had already been reached, and if the Prime Minister picks them to listen to rather than SAGE.

Lastly, I think Gregory Lewis was at risk of being overconfident about the relevance of expert training or "being an expert" when he said that EAs who were right about the government U-turn about lockdowns were right only in the sense of a broken clock. I was one of several EAs who loudly and clearly said "the government is wrong about this!" I even asked in an EA Covid group if we should be trying to get the attention of people in government about it. This might have been like 1-2 days before they did the U-turn. How would Greg Lewis know that I (and other non-experts like me -- I wasn't the only one who felt confident that the government was wrong about something right before March 16th) had not done sound steps of reasoning at the time?

I'm not sure myself; I admittedly remember having some weirdly overconfident adjacent beliefs at the time, not about the infection fatality rate [I think I was always really good at forecasting that -- you can go through my Metaculus commenting history here], but about what the government experts were basing their estimates on. I for some reason thought it was reasonably plausible that the government experts were making a particular, specific mistake about interpreting the findings from the cruise ship cases, but I didn't have much evidence of them making that specific mistake [other than them mentioning the cruise ship in connection with estimating a specific number], nor would it even make sense for government experts to stake a lot of their credence on just one single data point [because neither did I]. So, me thinking I knew that they were making a specific mistake, as opposed to just being wrong for reasons that must be obscure to me, seems like pretty bad epistemics. But anyway, other than that, I feel like my comments from early March 2020 aged remarkably well, and I could imagine that people don't appreciate how much you will know and understand about a subject if you follow it obsessively with all your attention every single day. And it doesn't take genius statistics skill to piece together infection fatality estimates and hospitalization estimates from different outbreaks around the world. Just using common sense and trying to adjust for age stratification effects with very crude math, and reasoning about where countries do good or bad testing (like, reading about the testing in Korea, it became clear to me that they probably were not missing tons of cases, which was very relevant in ruling out some hypothesis about vast amounts of asymptomatic infections), etc. This stuff was not rocket science.

I agree with the main takeaway of this post - settled scientific consensus should generally be deferred to - and would add that, from a community perspective, more effort needs to go into science communication by EAs who are able to do it, or into getting science communicators posting on, e.g., the EA Forum. I will have a think about whether I can do anything here, or in my in-person group organising.

But I think some of this post is just describing personality traits of the kind of people who come to EA, and saying they're bad personality traits. And I'm not really sure what you want to be done about that.

Huh? I think everything mentioned in this post (either by me or by Gregory Lewis) describes behaviours that people can consciously choose to engage in or not. I don’t think any of them are personality traits.

What do you think is an example of a personality trait mentioned in this post?

I'll give a couple of examples:

I mean, isn't the idea that they might be able to contribute something meaningful, and doing so is both low-effort and very good if it works, so worth the shot?

If EA deferred to the moral consensus, it would cease to exist, because the moral consensus is that altruism should be relational and you have no obligation to do anything else. People who have tendencies to defer to the moral consensus don't join up with EA.

-

Again, not that these are optimal, but they basically seem to me to be either pretty stable individual difference things about people or related to a person's age (young adult, usually). It would be great to have more older adults on-board with the core idea of doing the most good possible being a tool of achieving self-actualisation, as well as more acceptance of this core idea among mainstream society. I hope we will get there.

I don’t think any of these are personality traits. These are ideas or strategies that people can discuss and decide whether they’re wise or unwise. You could, conceivably, have a discussion about one or more of these, become convinced that the way you’ve been doing things is unwise, and then change your behaviour subsequently. I wouldn’t call that "changing your personality". I don't see why these would be stable traits, as opposed to things that people can change by thinking about it and deciding to act differently.

I think there might be serious problems with the ideas or strategies that you described, if those were the ideas or strategies at play in EA. But my feeling is that you gave a somewhat watered-down, euphemistic retelling of the ideas and strategies, compared with what I tend to see people in EA actually act on, or what they tend to say they believe.

For instance, on covid-19, it seems like some people in EA still think (as evidenced by the comments on this post) that they actually repeatedly outsmarted the expert/scientific communities on the relevant public health questions — not just by chance or luck, in a "broken clock is right twice a day" or "calling a coin flip" way, but by general superior rationality/epistemology — rather than following a much more epistemically modest, cautious rationale of we "might be able to contribute something meaningful, and doing so is both low-effort and very good if it works, so worth the shot".

I don't buy that thinking this way is a stable personality trait that is beyond your power to change as opposed to something that you can be talked out of.

It seems weird to call any of these things personality traits. Is being an act consequentialist as opposed to a rule consequentialist a personality trait? Obviously not, right? It seems equally obvious to me that what we're talking about here are not personality traits. It seems equally weird to me to call them personality traits as it would be to call subscribing to rule consequentialism a personality trait.