We are excited to announce the launch of a new advocacy nonprofit, StakeOut.AI.

The mission statement of our nonprofit

StakeOut.AI fights to safeguard humanity from AI-driven risks. We use evidence-based outreach to inform people of the threats that advanced AI poses to their economic livelihoods and personal safety. Our mission is to create a united front for humanity, driving national and international coordination on robust solutions to AI-driven disempowerment.

We pursue this mission via partnerships (e.g., with other nonprofits, content creators, and AI-threatened professional associations) and media-based awareness campaigns (e.g., traditional media, social media, and webinars).

Our modus operandi is to tell the stories of the AI industry’s powerless victims, such as:

- people worldwide, especially women and girls, who have been victimized by nonconsensual deepfake pornography of their likenesses

- unemployed artists whose copyrighted hard work was essentially stolen by AI companies without their consent, in order to train their economic AI replacements

- parents who fear that their children will be economically replaced, and perhaps even replaced as a species, by “highly autonomous systems that outperform humans at most economically valuable work” (OpenAI’s mission)

We connect these victims’ stories to powerful people who can protect them. Who are the powerful people? The media, the governments, and most importantly: the grassroots public.

StakeOut.AI's motto

The Right AI Laws, to Right Our Future.

We believe AI has great potential to help humanity. But like all other industries that put the public at risk, AI must be regulated. We must unite, as humans have done historically, to work towards ensuring that AI helps humanity flourish rather than cause our devastation. By uniting globally with a single voice to express our concerns, we can push governments to pass the right AI laws that can right our future.

However, StakeOut.AI's Safer AI Global Grassroots United Front movement isn't for everybody.

- It's not for those who don’t mind being enslaved by robot overlords.

- It’s not for those whose first instincts are to avoid making waves, rather than to help the powerless victims tell their stories to the people who can protect them.

- It's not for those who say they ‘miss the days’ when only intellectual elites talked about AI safety.

- It's not for those who insist, even after years of trying, that attempting to solve technical AI alignment while continuing to advance AI capabilities is the only way to prevent the threat of AI-driven human extinction.

- It’s not for those who think the public is too stupid to handle the truth about AI. No matter how much certain groups say they are trying to ‘shield’ regular folks for their ‘own good,’ the regular folks are learning about AI one way or another.

- It's also not for those who are indifferent to the AI industry’s role in invading privacy, exploiting victims, and replacing humans.

To save you time, please stop reading this post if any of the above statements reflect your views.

But, if you do want transparency and accountability from the AI industry, and you desire a moral and safe AI environment for your family and for future generations, then the United Front may be for you.

By prioritizing high-impact projects over fundraising in our early months, we at StakeOut.AI were able to achieve five publicly known milestones for AI safety:

- researched a 'scorecard' evaluating various AI governance proposals, which was presented by Professor Max Tegmark at the first-ever international AI Safety Summit in the U.K. (as part of The Future of Life Institute’s governance proposal for the Summit),

- raised awareness, such as by holding a webinar informing AI-threatened Hollywood actors about the threat that advanced AI poses to their jobs and safety,

- contributed to AI-related regulatory processes through submissions to multiple U.S. government agencies, such as the National Institute of Standards and Technology, the U.S. Copyright Office, and the White House Office of Management and Budget,

- worked tirelessly to circulate multiple open letters, petitions, and reports that push decision-makers towards AI safety, and

- informed the public via various media interviews around the world, including speaking out in the New York Times against certain AI industry leaders who believe "humanity should roll over and let the robots overtake us."

In addition, StakeOut.AI connects and coordinates #TeamHuman allies (especially our allies’ media-based campaigns for awareness and victim advocacy) behind the scenes—although we cannot publicly disclose details, as this is necessary to protect our allies, our sources, and the victims we are helping.

The AI control problem remains unsolved

With timelines shortening, grassroots mobilization can augment the elite-mobilization approach that has traditionally dominated AI safety.

We, the humans who care about humans, need to take the lead in subjecting the AI industry to meaningful democratic oversight. AI companies are racing to automate an increasing range of human capabilities of economic and strategic relevance (a phenomenon that manifests as the rapid replacement of human occupations), with the ultimate goal being the creation of superintelligent AI that outperforms humans at most such capabilities. This is particularly concerning given that the scientific problem of how to control AI remains unsolved. Legislative action rooted in democratic mobilization is necessary to ensure that this dangerous, unaccountable AI race does not replace us humans. Instead, AI should augment us humans.

The AI industry will not make it easy for us. Money corrupts, and well-moneyed industries that cause harm to the public tend to mount sophisticated corporate influence campaigns, even to the point of creating front groups (for a detailed case, please read Park & Tegmark, 2023). These front groups include legitimate-sounding organizations in the media sector, the research sector, and the nonprofit sector. While appearing legitimate, these front groups are designed to explicitly or implicitly silence the industry’s powerless victims, by weakening efforts to bring these victims’ stories to the attention of the powerful people who can protect them (the media, the governments, and the grassroots public).

On top of the EA community’s existing efforts on AI safety, we at StakeOut.AI are excited to help inform the public about the truth—the truth about AI companies’ dangerous, unaccountable race towards superintelligent AI. The stakes (economic replacement leading to species replacement, with rapidly shortening timelines) are very high. And the magnitude of what needs to be achieved on the AI governance front is so large that while some past efforts have avoided grassroots mobilization (in favor of elite mobilization), we at StakeOut.AI think that grassroots mobilization may be able to augment the elite-mobilization approach that has traditionally dominated the AI safety landscape.

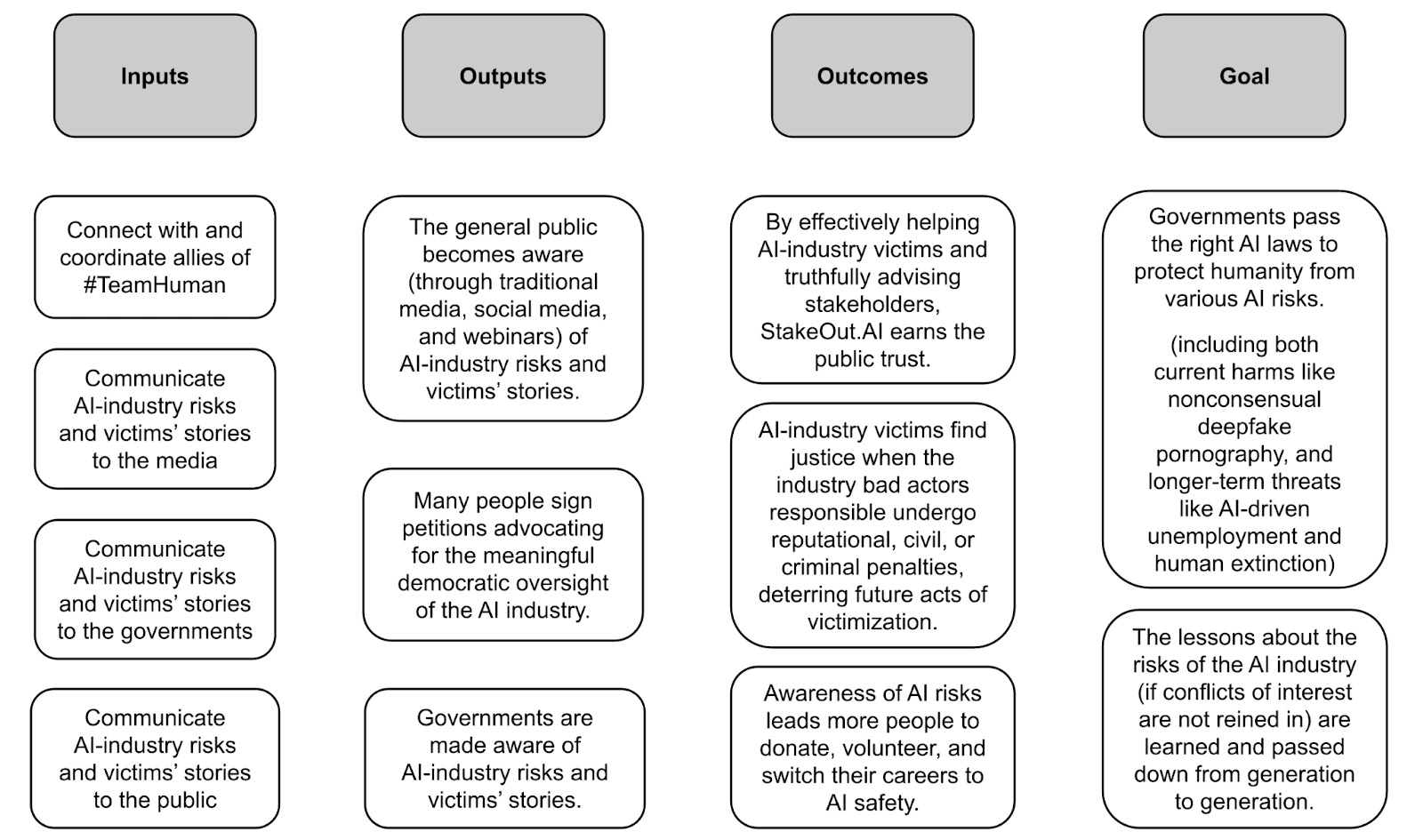

StakeOut.AI's Theory of Change

Our apology for not following the protocol of estimating the expected value

Having said this, we sincerely apologize that we do not offer a probability estimate or a clear cost-effectiveness analysis, as might be expected in an introductory post.

The truth is, there are no guarantees.

- We cannot guarantee that the global grassroots movement will create enough external pressure to compel governments to stop ignoring the voices calling for regulated AI. If it does, that pressure could accelerate the law-making process and prevent further AI-driven harms.

- We also cannot promise that the global grassroots movement will be the force that ultimately unites people to heed the warnings of the many experts in the field about the potential threat of human extinction by superintelligent AI. If it is, seeing from a single petition just how many people care about this issue could pressure governments to act more quickly.

- Of course, we cannot claim that the correct AI laws will establish safeguards with 100% certainty or deter Big Tech companies from continuing to develop superintelligent AI. There is always a chance that a company will choose not to comply with the laws of the countries where it operates.

But imagine if the AI existential risk problem were truly resolved because the right AI laws were implemented before the birth of superintelligent AI, and we could all breathe a sigh of relief. Knowing the dedication of the EA crowd, after a short break, hardworking EAs would then look for other major problems, like climate change, to tackle for the greater good, with AI-driven human extinction behind us.

What we can promise you is that we will do our best to:

- Get the petitions signed to hit critical mass and become real pressure points

- Understand, to the best of our abilities, which AI laws are the right ones to push (e.g., recognizing that responsible scaling policies would probably not be a good idea, and identifying safety washing)

- Bring the massive number of signatures from people who care about these issues to lawmakers who can effect change

- Be consistent, use understandable language, and make similar asks when talking to policymakers to avoid causing confusion

- Control the message by getting people to refer back to the movement website, and discourage treating shortened social media posts as the full message (to avoid the message being taken out of context)

- Monitor the risk of polarization both within nations and between countries

- Be on the lookout for regulatory capture in the proposed laws (which would favor big companies by keeping smaller companies out)

- Be careful not to misrepresent things (especially when referencing other people’s papers)

- Be clear about where we draw the line: even though AI can be good, we must not accidentally push effective accelerationism

- Be open to collaboration and feedback from 'inside game' people, to ensure that implementing the right AI laws would be feasible

- Stay connected with the latest happenings on the AI policy landscape so as not to push the 'button' before the capacity building side is ready

- Set the expectation, while working with policymakers, that no single bill will solve everything in one go. It will take lots of research and evaluation, and further amendments will be necessary, so we need to keep the door open

- Be upfront about caring for the multiple aspects of AI risks, not just job loss or deepfakes, etc. (so there is no bait and switch, or feelings of being tricked)

- Consider the possibility that our efforts could intensify AI arms race dynamics

- Consider the impact that AI could make if controlled or deployed by extremists

- Stay true to our message no matter who we are talking to

We thank all feedback providers from the bottom of our hearts

The AI industry has access to substantial amounts of money—and with that money, sophisticated corporate influence campaigns designed to reduce the effectiveness of nonprofits like StakeOut.AI. Barring something like the AI industry being outlawed entirely, we at StakeOut.AI can never compete with the AI industry in terms of money.

On top of this, it's actually quite daunting to witness firsthand the influence that those with power and status can exert, even within a do-good movement like EA. We have spoken to over 100 EAs individually in one-on-one situations. Many of them have voiced their support for what we are doing in these private conversations, in addition to the amazing red-teaming feedback we openly received (some shared above). We thank you from the bottom of our hearts because many of these short 30-minute conversations, and even some 20-minute conversations, were PIVOTAL in contributing to and shaping our nonprofit’s strategy.

Yet we found that even though we want to shout our gratitude from the mountaintops for some of these people, we were advised by another nonprofit co-founder here in EA to “always err in favor of not mentioning specific names or asking for explicit permission to mention their names.” In the for-profit business world where I used to work, there were many instances where the most groundbreaking advice came from short conversations during conferences. A nugget of wisdom dropped by someone much more experienced, in a conversation as brief as 10 minutes, could dramatically improve the direction of a business initiative. In those instances, the providers of such advice more than welcomed the shout-out.

But from what we have gathered, grassroots approaches to AI safety have arguably been considered taboo in EA during the last few years, even to the point where people fear that being seen as supporting a grassroots AI safety movement might jeopardize their career, status, and future in EA. It's unfortunate that being associated with the strategy of grassroots mobilization can tarnish one’s reputation.

We fully understand that since we are not posting from anonymous accounts, we may face potential repercussions in the future.

All that to say… Since we don’t have time to directly reach out to the numerous people we want to thank for their significant contributions (and also not to put you in an awkward position by being publicly seen to support an AI safety effort that runs counter to the norm), we can only thank you in silence.

Because of you, we have successfully motivated members of the general public to put their names behind their support for the #WeStakeOutAI movement. It’s also due to your support that we have received such positive feedback from the public for what we are doing, so much feedback that we haven’t even had time to add it all to our website.

Thank you for your anonymous support as we advocate for safe AI, going against the tide in more ways than one. We are working day and night to push our community in a direction where every one of us feels like we can say the truth, rather than being pressured into silence.

We need your help to effectively protect humanity

We know from the FTX debacle that money corrupts—that conflicts of interest can impair the epistemics of even very smart, very well-intentioned people in the EA community.

But we at StakeOut.AI refuse to accept this status quo as a given. Yes, we at StakeOut.AI can never compete with the AI industry in terms of money. But where we can effectively compete with the AI industry—and with your help, perhaps even outcompete the AI industry—is in telling everyone the truth.

Our director and co-founder, Dr. Park, has been consistent in telling the truth he arrived at—the truth that the industry’s dangerous AI race should be slowed down, that AI safety should be increased at the expense of AI capabilities, and that this must be done with unprecedented amounts of democratic and global coordination. We at StakeOut.AI have been telling this truth, and will continue to tell this truth for as long as we think the evidence supports it, even if financial and institutional pressures attempt to dissuade us from doing so.

And we need your help to effectively tell people the truth about the dangerous, unaccountable race to build superintelligent AI.

We need your help to effectively help the AI industry’s powerless victims—to effectively share these victims’ stories with powerful people who can help protect them (the media, the governments, and the grassroots public).

We need your help to help keep StakeOut.AI as an effective nonprofit, despite the adversarial tactics employed by AI-industry leaders who are financially or ideologically opposed to our mission.

Please consider donating, kickstarting the petitions, and connecting us with AI-industry victims who need their voices heard (via our “Submit an Incident” form). And most importantly, please spread the word about StakeOut.AI! We need all the help we can get.

Thank you very much for reading.

Thanks for sharing, Harry!

I think I am basically with you regarding the above points as you put them. At the same time, I wonder what your thoughts would be on control over the future shifting, mostly peacefully, from biological humans to AI systems and digital humans over this century. I think this may well be good, but there is a sense in which it would go against the last point above, as biological humans would sort of be replaced.

Thank you for your comment.

My beliefs are rooted in Christianity.

To be honest, I have not contemplated the possibility of digital humans and what that means.

I would have to talk to a lot more people, do a lot more research, and a lot more praying to even attempt to come up with an answer.

As of the current moment, the initiative is to protect biological humans born into this world from a human womb. What the future holds, I do not know.

I do apologize if my answer is basically "I don't know."

Executive summary: StakeOut.AI is a new nonprofit aiming to advocate for AI safety and regulation through grassroots mobilization and telling "truths" the AI industry wants to suppress.

Key points:

This comment was auto-generated by the EA Forum Team. Feel free to point out issues with this summary by replying to the comment, and contact us if you have feedback.

Strong vote of no-confidence in the combo of (the author(s), at this time, with this strategy), based on this post.

This post does not suggest to me that the author(s) have a deep, nuanced understanding of the trade-offs. The strategy of rallying grass-roots might make sense, but could also cause a lot of harm and be counterproductive in many, many ways.

For those who have down-voted or disagreed: happy to hear (and potentially engage with) substantive counter arguments. But I don't think the Forum is a good place for posturing, which the original post sometimes descends into.