Claude 3.7's coding ability forced me to reanalyze whether where will be a SWE job for me after college. This has forced me to re-explore AI safety and its arguments, and I have been re-radicalized towards the safety movement.

What I can’t understand, though, is how contradictory so much of Effective Altruism (EA) feels. It hurts my head, and I want to explore my thoughts in this post.

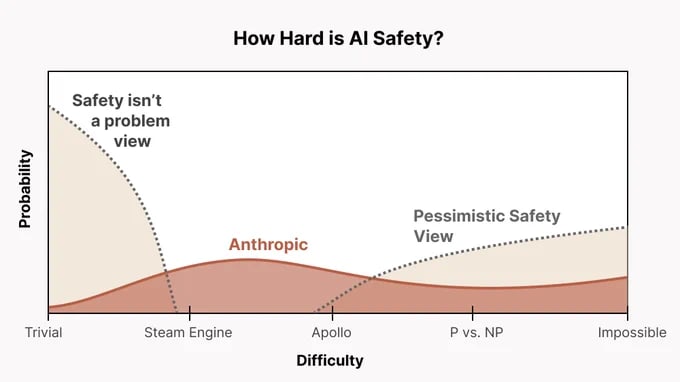

EA seems far too friendly toward AGI labs and feels completely uncalibrated to the actual existential risk (from an EA perspective) and the probability of catastrophe from AGI (p(doom)). Why aren’t we publicly shaming AI researchers every day? Are we too unwilling to be negative in our pursuit of reducing the chance of doom? Why are we friendly with Anthropic? Anthropic actively accelerates the frontier, currently holds the best coding model, and explicitly aims to build AGI—yet somehow, EAs rally behind them? I’m sure almost everyone agrees that Anthropic could contribute to existential risk, so why do they get a pass? Do we think their AGI is less likely to kill everyone than that of other companies? If so, is this just another utilitarian calculus that we accept even if some worlds lead to EA engineers causing doom themselves? What is going on...

I suspect that many in the AI safety community avoid adopting the "AI doomer" label. I also think that many AI safety advocates quietly hope to one day work at Anthropic or other labs and will not publicly denounce a future employer.

Another possibility is that Open Philanthropy (OP) plays a role. Their former CEO now works at Anthropic, and they have personal ties to its co-founder. Given that most of the AI safety community is funded by OP, could there be financial incentives pushing the field more toward research than anti AI-lab advocacy? This is just a suspicion, and I don’t have high confidence in it, but I’m looking for opinions.

Spending time in the EA community does not calibrate me to the urgency of AI doomerism or the necessary actions that should follow. Watching For Humanity’s AI Risk Special documentary made me feel far more emotionally in tune with p(doom) and AGI timelines than engaging with EA spaces ever has. EA feels business as usual when it absolutely should not. More than 700 people attended EAG, most of whom accept X-risk arguments, yet AI protests in San Francisco still draw fewer than 50 people. I bet most of them aren’t even EAs.

What are we doing?

I’m looking for discussion. Please let me know what you think.

That makes sense. For what it’s worth, I’m also not convinced that delaying AI is the right choice from a purely utilitarian perspective. I think there are reasonable arguments on both sides. My most recent post touches on this topic, so it might be worth reading for a better understanding of where I stand.

Right now, my stance is to withhold strong judgment on whether accelerating AI is harmful on net from a utilitarian point of view. It's not that I think a case can't be made: it's just I don’t think the existing arguments are decisive enough to justify a firm position. In contrast, the argument that accelerating AI benefits people who currently exist seems significantly more straightforward and compelling to me.

This combination of views leads me to see accelerating AI as a morally acceptable choice (as long as it's paired with adequate safety measures). Put simply:

Since I give substantial weight to both perspectives, the stronger and clearer case for acceleration (based on the interests of people alive today) outweighs the much weaker and more uncertain case for delay (based on speculative long-term utilitarian concerns) in my view.

Of course, my analysis here doesn’t apply to someone who gives almost no moral weight to the well-being of people alive today—someone who, for instance, would be fine with everyone dying horribly if it meant even a tiny increase in the probability of a better outcome for the galaxy a billion years from now. But in my view, this type of moral calculus, if taken very seriously, seems highly unstable and untethered from practical considerations.

Since I think we have very little reliable insight into what actions today will lead to a genuinely better world millions of years down the line, it seems wise to exercise caution and try to avoid overconfidence about whether delaying AI is good or bad on the basis of its very long-term effects.