No one has ever seen an AGI takeoff, so any attempt to understand it must use these outside view considerations.

—[Redacted for privacy]

What? That’s exactly backwards. If we had lots of experience with past AGI takeoffs, using the outside view to predict the next one would be a lot more effective.

—My reaction

Two years ago I wrote a deep-dive summary of Superforecasting and the associated scientific literature. I learned about the “Outside view” / “Inside view” distinction, and the evidence supporting it. At the time I was excited about the concept and wrote: “...I think we should do our best to imitate these best-practices, and that means using the outside view far more than we would naturally be inclined.”

Now that I have more experience, I think the concept is doing more harm than good in our community. The term is easily abused and its meaning has expanded too much. I recommend we permanently taboo “Outside view,” i.e. stop using the word and use more precise, less confused concepts instead. This post explains why.

What does “Outside view” mean now?

Over the past two years I’ve noticed people (including myself!) do lots of different things in the name of the Outside View. I’ve compiled the following lists based on fuzzy memory of hundreds of conversations with dozens of people:

Big List O’ Things People Describe As Outside View:

- Reference class forecasting, the practice of computing a probability of an event by looking at the frequency with which similar events occurred in similar situations. Also called comparison class forecasting. [EDIT: Eliezer rightly points out that sometimes reasoning by analogy is undeservedly called reference class forecasting; reference classes are supposed to be held to a much higher standard, in which your sample size is larger and the analogy is especially tight.]

- Trend extrapolation, e.g. “AGI implies insane GWP growth; let’s forecast AGI timelines by extrapolating GWP trends.”

- Foxy aggregation, the practice of using multiple methods to compute an answer and then making your final forecast be some intuition-weighted average of those methods.

- Bias correction, in others or in oneself, e.g. “There’s a selection effect in our community for people who think AI is a big deal, and one reason to think AI is a big deal is if you have short timelines, so I’m going to bump my timelines estimate longer to correct for this.”

- Deference to wisdom of the many, e.g. expert surveys, or appeals to the efficient market hypothesis, or to conventional wisdom in some fairly large group of people such as the EA community or Western academia.

- Anti-weirdness heuristic, e.g. “How sure are we about all this AI stuff? It’s pretty wild, it sounds like science fiction or doomsday cult material.”

- Priors, e.g. “This sort of thing seems like a really rare, surprising sort of event; I guess I’m saying the prior is low / the outside view says it’s unlikely.” Note that I’ve heard this said even in cases where the prior is not generated by a reference class, but rather from raw intuition.

- Ajeya’s timelines model (transcript of interview, link to model)

- … and probably many more I don’t remember
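
To make two of the items above concrete, here is a minimal Python sketch of reference class forecasting (a base rate over past cases) and foxy aggregation (an intuition-weighted average of several methods). The function names, the toy data, and the 50/50 weights are all hypothetical and chosen only for illustration; nothing here comes from Tetlock's studies.

```python
def reference_class_forecast(outcomes):
    """Base rate: the fraction of past, similar cases in which the event occurred."""
    if not outcomes:
        raise ValueError("a reference class needs at least one past case")
    return sum(outcomes) / len(outcomes)


def foxy_aggregate(estimates, weights):
    """Weighted average of probability estimates produced by different methods.

    The weights are supplied by the forecaster (i.e. by intuition); they are
    normalized here so they do not have to sum to 1.
    """
    if len(estimates) != len(weights):
        raise ValueError("need exactly one weight per estimate")
    total = sum(weights)
    if total <= 0:
        raise ValueError("weights must sum to a positive number")
    return sum(e * w for e, w in zip(estimates, weights)) / total


# Hypothetical reference class: 10 past cases, the event occurred in 3 of them.
past_cases = [True, False, False, True, False, False, False, False, True, False]
base_rate = reference_class_forecast(past_cases)  # 3 of 10 cases, so 0.3

# Hypothetical estimate from some explicit model of the situation.
model_estimate = 0.6

# Foxy aggregation with made-up equal weights: roughly 0.45.
forecast = foxy_aggregate([base_rate, model_estimate], [0.5, 0.5])
```

Note how different the two functions are: the first is driven entirely by data about past cases, while the second is only as good as the intuitions behind the weights.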

Big List O’ Things People Describe As Inside View:

- Having a gears-level model, e.g. “Language data contains enough structure to learn human-level general intelligence with the right architecture and training setup; GPT-3 + recent theory papers indicate that this should be possible with X more data and compute…”

- Having any model at all, e.g. “I model AI progress as a function of compute and clock time, with the probability distribution over how much compute is needed shifting 2 OOMs lower each decade…”

- Deference to wisdom of the few, e.g. “the people I trust most on this matter seem to think…”

- Intuition-based-on-detailed-imagining, e.g. “When I imagine scaling up current AI architectures by 12 OOMs, I can see them continuing to get better at various tasks but they still wouldn’t be capable of taking over the world.”

- Trend extrapolation combined with an argument for why that particular trend is the one to extrapolate, e.g. “Your timelines rely on extrapolating compute trends, but I don’t share your inside view that compute is the main driver of AI progress.”

- Drawing on subject matter expertise, e.g. “my inside view, based on my experience in computational neuroscience, is that we are only a decade away from being able to replicate the core principles of the brain.”

- Ajeya’s timelines model (Yes, this is on both lists!)

- … and probably many more I don’t remember

What did “Outside view” mean originally?

As far as I can tell, it basically meant reference class forecasting. Kaj Sotala tells me the original source of the concept (cited by the Overcoming Bias post that brought it to our community) was this paper. Relevant quote: “The outside view is ... essentially ignores the details of the case at hand, and involves no attempt at detailed forecasting of the future history of the project. Instead, it focuses on the statistics of a class of cases chosen to be similar in relevant respects to the present one.” If you look at the text of Superforecasting, the “it basically means reference class forecasting” interpretation holds up. Also, “Outside view” redirects to “reference class forecasting” in Wikipedia.

To head off an anticipated objection: I am not claiming that there is no underlying pattern to the new, expanded meanings of “outside view” and “inside view.” I even have a few ideas about what the pattern is. For example, priors are sometimes based on reference classes, and even when they are instead based on intuition, that too can be thought of as reference class forecasting in the sense that intuition is often just unconscious, fuzzy pattern-matching, and pattern-matching is arguably a sort of reference class forecasting. And Ajeya’s model can be thought of as inside view relative to e.g. GDP extrapolations, while also outside view relative to e.g. deferring to Dario Amodei.

However, it’s easy to see patterns everywhere if you squint. These lists are still pretty diverse. I could print out all the items on both lists and then mix-and-match to create new lists/distinctions, and I bet I could come up with several at least as principled as this one.

This expansion of meaning is bad

When people use “outside view” or “inside view” without clarifying which of the things on the above lists they mean, I am left ignorant of what exactly they are doing and how well-justified it is. People say “On the outside view, X seems unlikely to me.” I then ask them what they mean, and sometimes it turns out they are using some reference class, complete with a dataset. (Example: Tom Davidson’s four reference classes for TAI). Other times it turns out they are just using the anti-weirdness heuristic. Good thing I asked for elaboration!

Separately, various people seem to think that the appropriate way to make forecasts is to (1) use some outside-view methods, (2) use some inside-view methods, but only if you feel like you are an expert in the subject, and then (3) do a weighted sum of them all using your intuition to pick the weights. This is not Tetlock’s advice, nor is it the lesson from the forecasting tournaments, especially if we use the nebulous modern definition of “outside view” instead of the original definition. (For my understanding of his advice and those lessons, see this post, part 5. For an entire book written by Yudkowsky on why the aforementioned forecasting method is bogus, see Inadequate Equilibria, especially this chapter. Also, I wish to emphasize that I myself was one of these people, at least sometimes, up until recently when I noticed what I was doing!)

Finally, I think that too often the good epistemic standing of reference class forecasting is illicitly transferred to the other things in the list above. I already gave the example of the anti-weirdness heuristic; my second example will be bias correction: I sometimes see people go “There’s a bias towards X, so in accordance with the outside view I’m going to bump my estimate away from X.” But this is a different sort of bias correction. To see this, notice how they used intuition to decide how much to bump their estimate, and they didn’t consider other biases towards or away from X. The original lesson was that biases could be corrected by using reference classes. Bias correction via intuition may be a valid technique, but it shouldn’t be called the outside view.

I feel like it’s gotten to the point where, like, only 20% of uses of the term “outside view” involve reference classes. It seems to me that “outside view” has become an applause light and a smokescreen for over-reliance on intuition, the anti-weirdness heuristic, deference to crowd wisdom, correcting for biases in a way that is itself a gateway to more bias...

I considered advocating for a return to the original meaning of “outside view,” i.e. reference class forecasting. But instead I say:

Taboo Outside View; use this list of words instead

I’m not recommending that we stop using reference classes! I love reference classes! I also love trend extrapolation! In fact, for literally every tool on both lists above, I think there are situations where it is appropriate to use that tool. Even the anti-weirdness heuristic.

What I ask is that we stop using the words “outside view” and “inside view.” I encourage everyone to instead be more specific. Here is a big list of more specific words that I’d love to see, along with examples of how to use them:

- Reference class forecasting

- “I feel like the best reference classes for AGI make it seem pretty far away in expectation.”

- “I don’t think there are any good reference classes for AGI, so I think we should use other methods instead.”

- Analogy

- Analogy is like a reference class but with lower standards; sample size can be small and the similarities can be weaker.

- “I’m torn between thinking of AI as a technology vs. as a new intelligent species, but I lean towards the latter.”

- Trend extrapolation

- “The GWP trend seems pretty relevant and we have good data on it”

- “I claim that GPT performance trends are a better guide to AI timelines than compute or GWP or anything else, because they are more directly related.”

- Foxy aggregation (a.k.a. multiple models)

- “OK that model is pretty compelling, but to stay foxy I’m only assigning it 50% weight.”

- Bias correction

- “I feel like things generally take longer than people expect, so I’m going to bump my timelines estimate to correct for this. How much? Eh, 2x longer seems good enough for now, but I really should look for data on this.”

- Deference

- “I’m deferring to the markets on this one.”

- “I think we should defer to the people building AI.”

- Anti-weirdness heuristic

- “How sure are we about all this AI stuff? The anti-weirdness heuristic is screaming at me here.”

- Priors

- “This just seems pretty implausible to me, on priors.”

- (Ideally, say whether your prior comes from intuition or a reference class or a model. Jia points out “on priors” has similar problems as “on the outside view.”)

- Independent impression

- i.e. what your view would be if you weren’t deferring to anyone.

- “My independent impression is that AGI is super far away, but a lot of people I respect disagree.”

- “It seems to me that…”

- i.e. what your view would be if you weren’t deferring to anyone or trying to correct for your own biases.

- “It seems to me that AGI is just around the corner, but I know I’m probably getting caught up in the hype.”

- Alternatively: “I feel like…”

- Feel free to end the sentence with “...but I am not super confident” or “...but I may be wrong.”

- Subject matter expertise

- “My experience with X suggests…”

- Models

- “The best model, IMO, suggests that…” and “My model is…”

- (Though beware, I sometimes hear people say “my model is...” when all they really mean is “I think…”)

- Wild guess (a.k.a. Ass-number)

- “When I said 50%, that was just a wild guess, I’d probably have said something different if you asked me yesterday.”

- Intuition

- “It’s not just an ass-number, it’s an intuition! Lol. But seriously though I have thought a lot about this and my intuition seems stable.”

Conclusion

Whenever you notice yourself saying “outside view” or “inside view,” imagine a tiny Daniel Kokotajlo hopping up and down on your shoulder chirping “Taboo outside view.”

Many thanks to the many people who gave comments on a draft: Vojta, Jia, Anthony, Max, Kaj, Steve, and Mark. Also thanks to various people I ran the ideas by earlier.

I worriedly predict that anyone who followed your advice here would just switch to describing whatever they're doing as "reference class forecasting," since this captures the key dynamic that makes describing what they're doing as "outside viewing" appealing: namely, they get to pick a choice of "reference class" whose samples yield the answer they want, claim that their point is in the reference class, and then claim that what they're doing is what superforecasters do and what Philip Tetlock told them to do and super epistemically virtuous and anyone who argues with them gets all the burden of proof and is probably a bad person but we get to virtuously listen to them and then reject them for having used the "inside view".

My own take: Rule One of invoking "the outside view" or "reference class forecasting" is that if a point is more dissimilar to examples in your choice of "reference class" than the examples in the "reference class" are dissimilar to each other, what you're doing is "analogy", not "outside viewing".
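
One rough way to operationalize this rule is sketched below in Python. It rests on strong assumptions that are not in the rule itself: that each case can be described by a numeric feature vector, that Euclidean distance is a sensible measure of dissimilarity, and that "more dissimilar" means comparing mean distances. The function name and the toy data are hypothetical.

```python
from itertools import combinations
from math import dist
from statistics import mean


def rule_one(new_case, reference_cases):
    """Label the reasoning as "reference class" or "analogy".

    Assumption: dissimilarity is Euclidean distance between feature vectors,
    and the comparison uses mean distances. Other choices are possible.
    """
    if len(reference_cases) < 2:
        raise ValueError("need at least two reference cases to compare")
    # How dissimilar the reference cases are to each other.
    within = mean(dist(a, b) for a, b in combinations(reference_cases, 2))
    # How dissimilar the new case is to the reference cases.
    to_new = mean(dist(new_case, c) for c in reference_cases)
    return "analogy" if to_new > within else "reference class"


# Hypothetical feature vectors for three past cases.
reference_cases = [(0.0, 0.0), (1.0, 0.0), (0.0, 1.0)]

inside_the_bag = rule_one((0.3, 0.3), reference_cases)     # "reference class"
outside_the_bag = rule_one((10.0, 10.0), reference_cases)  # "analogy"
```

In practice the hard part is choosing the features, which is itself a judgment call; the sketch only shows the shape of the comparison.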

All those experimental results on people doing well by using the outside view are results on people drawing a new sample from the same bag as previous samples. Not "arguably the same bag" or "well it's the same bag if you look at this way", really actually the same bag: how late you'll be getting Christmas presents this year, based on how late you were in previous years. Superforecasters doing well by extrapolating are extrapolating a time-series over 20 years, which was a straight line over those 20 years, to another 5 years out along the same line with the same error bars, and then using that as the baseline for further adjustments with due epistemic humility about how sometimes straight lines just get interrupted some year. Not by them picking a class of 5 "relevant" historical events that all had the same outcome, and arguing that some 6th historical event goes in the same class and will have that same outcome.
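
The kind of extrapolation described here can be sketched in a few lines of Python: fit a straight line to 20 years of data, extend it 5 years, and carry the historical spread of residuals forward as the error bar. The data below is made up, the function names are hypothetical, and using the residual standard deviation as the error bar is a simplifying assumption rather than a claim about what superforecasters actually compute.

```python
from statistics import mean, pstdev


def fit_line(xs, ys):
    """Ordinary least-squares fit of y = slope * x + intercept."""
    x_bar, y_bar = mean(xs), mean(ys)
    slope = (sum((x - x_bar) * (y - y_bar) for x, y in zip(xs, ys))
             / sum((x - x_bar) ** 2 for x in xs))
    intercept = y_bar - slope * x_bar
    return slope, intercept


def extrapolate(xs, ys, future_xs):
    """Extend the fitted line to future_xs, reusing the historical spread.

    Returns (x, prediction, error_bar) tuples, where error_bar is the
    standard deviation of the residuals over the observed period.
    """
    slope, intercept = fit_line(xs, ys)
    residuals = [y - (slope * x + intercept) for x, y in zip(xs, ys)]
    spread = pstdev(residuals)
    return [(x, slope * x + intercept, spread) for x in future_xs]


# Hypothetical 20-year series: a straight line plus a small alternating wobble.
years = list(range(2001, 2021))
values = [100 + 2 * i + (1 if i % 2 == 0 else -1) for i in range(20)]

# Baseline for the next 5 years, to be adjusted by further judgment.
baseline = extrapolate(years, values, range(2021, 2026))
```

The output is only a baseline: nothing in the fit can tell you whether this is the year the straight line gets interrupted.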

Good point, I'll add analogy to the list. Much that is called reference class forecasting is really just analogy, and often not even a good analogy.

I really think we should taboo "outside view." If people are forced to use the term "reference class" to describe what they are doing, it'll be more obvious when they are doing epistemically shitty things, because the term "reference class" invites the obvious next questions: 1. What reference class? 2. Why is that the best reference class to use?

Hmm, I'm not convinced that this is meaningfully different in kind rather than degree. You aren't predicting a randomly chosen holdout year, so saying that 2021 is from the same distribution as 2011-2020 is still a take. "X thing I do in the future is from the same distribution as all my attempts in past years*" is still a judgement call, albeit a much easier one than AI timelines.

I agree with (part of) your broader point that careless applications of the outside view and similar vibes are very susceptible to motivated reasoning (including but not limited to the absurdity heuristic), but I guess my take here is that we should just be more careful individually and, as a community, more willing to point out bad epistemic moves in others (as you've often done a good job of!).

All our tools are limited and corruptible, and I don't think on balance reference class forecasting is more susceptible to motivated reasoning than other techniques.

*are you using your last 10 years? since you've been an adult? all the years you've been alive?

FWIW, as a contrary datapoint, I don’t think I’ve really encountered this problem much in conversation. In my own experience (which may be quite different from yours): when someone makes some reference to an “outside view,” they say something that indicates roughly what kind of “outside view” they’re using. For example, if someone is just extrapolating a trend forward, they’ll reference the trend. Or if someone is deferring to expert opinion, they’ll reference expert opinion. I also don’t think I’d find it too bothersome, in any case, to occasionally have to ask the person which outside view they have in mind.

So this concern about opacity wouldn’t be enough to make me, personally, want people to stop using the term “outside view.”

If there’s a really serious linguistic issue, here, I think it’s probably that people sometimes talk about "the outside view” as though there's only a single relevant outside view. I think Michael Aird made a good comment on my recent democracy post, where he suggests that people should taboo the phrase “the outside view” and instead use the phrase “an outside view.” (I was guilty of using the phrase “the outside view” in that post — and, arguably, of leaning too hard on one particular way of defining a reference class.) I’d be pretty happy if people just dropped the “the,” but kept talking about “outside views.”[1]

It’s of course a little ambiguous what counts as an “outside view,” but in practice I don’t think this is too huge of an issue. In my experience, which again may be different from yours, “taking an outside view” still does typically refer to using some sort of reference-class-based reasoning. It’s just the case that there are lots of different reference classes that people use. (“I’m extrapolating this 20-year trend forward, for another five years, because if a trend has been stable for 20 years it’s typically stable for another five.” “I’m deferring to the experts in this survey, because experts typically have more accurate views than amateurs.” Etc.) I do feel like this style of reasoning is useful and meaningfully distinct from, for example, reasoning based on causal models, so I’m happy to have a term for it, even if the boundaries of the concept are somewhat fuzzy. ↩︎

Thanks for this thoughtful pushback. I agree that YMMV; I'm reporting how these terms seem to be used in my experience but my experience is limited.

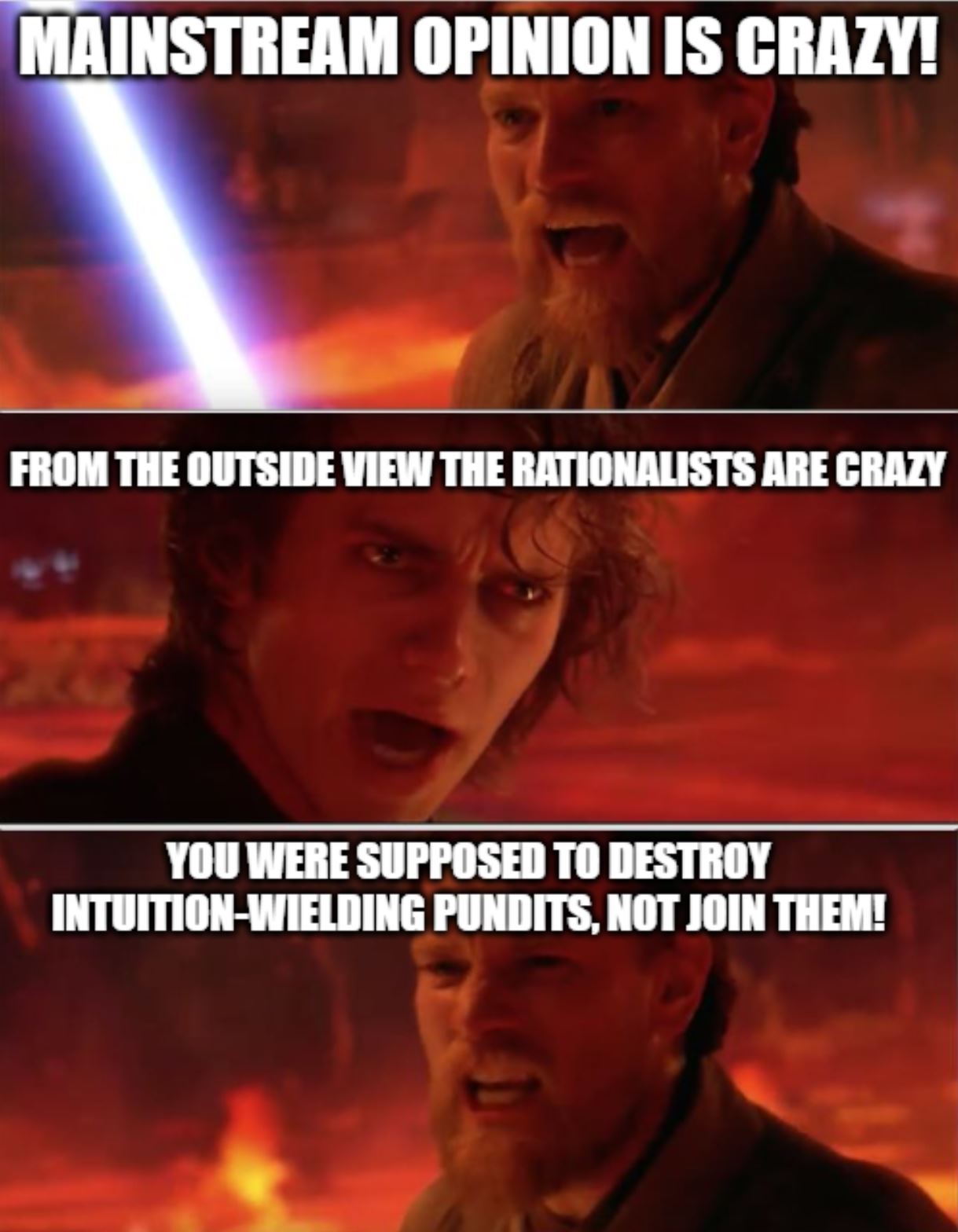

I think opacity is only part of the problem; illicitly justifying sloppy reasoning is most of it. (My second and third points in "this expansion of meaning is bad" section.) There is an aura of goodness surrounding the words "outside view" because of the various studies showing how it is superior to the inside view in various circumstances, and because of e.g. Tetlock's advice to start with the outside view and then adjust. (And a related idea that we should only use inside view stuff if we are experts... For more on the problems I'm complaining about, see the meme, or Eliezer's comment.) This is all well and good if we use those words to describe what was actually talked about by the studies, by Tetlock, etc. but if instead we have the much broader meaning of the term, we are motte-and-bailey-ing ourselves.

I agree that people sometimes put too much weight on particular outside views -- or do a poor job of integrating outside views with more inside-view-style reasoning. For example, in the quote/paraphrase you present at the top of your post, something has clearly gone wrong.[1]

But I think the best intervention, in this case, is probably just to push the ideas "outside views are often given too much weight" or "heavy reliance on outside views shouldn't be seen as praiseworthy" or "the correct way to integrate outside views with more inside-view reasoning is X." Tabooing the term itself somehow feels a little roundabout to me, like a linguistic solution to a methodological disagreement.

I think you're right that "outside view" now has a very positive connotation. If enough community members become convinced that this positive connotation is unearned, though, I think the connotation will probably naturally become less positive over time. For example, the number of upvotes on this post is a signal that people shouldn't currently expect that much applause for using the term "outside view."

As a caveat, although I'm not sure how much this actually matters for the present discussion, I probably am significantly less concerned about the problem than you are. I'm pretty confident that the average intellectual doesn't pay enough attention to "outside views" -- and I think that, absent positive reinforcement from people in your community, it actually does take some degree of discipline to take outside views sufficiently seriously. I'm open to the idea that the average EA community member has over-corrected, here, but I'm not yet convinced of it. I think it's also possible that, in a lot of cases, the natural substitute for bad outside-view-heavy reasoning is worse inside-view-heavy reasoning. ↩︎

On the contrary; tabooing the term is more helpful, I think. I've tried to explain why in the post. I'm not against the things "outside view" has come to mean; I'm just against them being conflated with / associated with each other, which is what the term does. If my point was simply that the first Big List was overrated and the second Big List was underrated, I would have written a very different post!

By what definition of "outside view?" There is some evidence that in some circumstances people don't take reference class forecasting seriously enough; that's what the original term "outside view" meant. What evidence is there that the things on the Big List O' Things People Describe as Outside View are systematically underrated by the average intellectual?

My initial comment was focused on your point about conflation, because I think this point bears on the linguistic question more strongly than the other points do. I haven’t personally found conflation to be a large issue. (Recognizing, again, that our experiences may differ.) If I agreed with the point about conflation, though, then I would think it might be worth tabooing the term "outside view."

By “taking an outside view on X” I basically mean “engaging in statistical or reference-class-based reasoning.” I think it might also be best defined negatively: "reasoning that doesn’t substantially involve logical deduction or causal models of the phenomenon in question."[1]

I think most of the examples in your list fit these definitions.

Epistemic deference is a kind of statistical/reference-class-based reasoning, for example, which doesn't involve applying any sort of causal model of the phenomenon in question. The logic is “Ah, I should update downward on this claim, since experts in domain X disagree with it and I think that experts in domain X will typically be right.”

Same for anti-weirdness: The idea is that weird claims are typically wrong.

I’d say that trend extrapolation also fits: You’re not doing logical reasoning or relying on a causal model of the relevant phenomenon. You’re just extrapolating a trend forward, largely based on the assumption that long-running trends don’t typically end abruptly.

“Foxy aggregation,” admittedly, does seem like a different thing to me: It arguably fits the negative definition, depending on how you generate your weights, but doesn’t seem to fit the statistical/reference-class one. It also feels like more of a meta-level thing. So I wouldn’t personally use the term “outside view” to talk about foxy aggregation. (I also don’t think I’ve personally heard people use the term “outside view” to talk about foxy aggregation, although I obviously believe you have.)

A condensed answer is: (a) I think most public intellectuals barely use any of the items on this list (with the exception of the anti-weirdness heuristic); (b) I think some of the things on this list are often useful; (c) I think that intellectuals and people more generally are very often bad at reasoning causally/logically about complex social phenomena; (d) I expect intellectuals to often have a bias against outside-view-style reasoning, since it often feels somewhat unsatisfying/unnatural and doesn't allow them to display impressive-seeming domain-knowledge, interesting models of the world, or logical reasoning skills; and (e) I do still think Tetlock’s evidence is at least somewhat relevant to most things on the list, in part because I think they actually are somewhat related to each other, although questions of external validity obviously grow more serious the further you move from the precise sorts of questions asked in his tournaments and the precise styles of outside-view reasoning displayed by participants. [2]

There’s also, of course, a bit of symmetry here. One could also ask: “What evidence is there that the things on the Big List O' Things People Describe as Outside View are systematically overrated by the average intellectual?” :)

These definitions of course aren’t perfect, and other people sometimes use the term more broadly than I do, but, again, some amount of fuzziness seems OK to me. Most concepts have fuzzy boundaries and are hard to define precisely. ↩︎

On the Tetlock evidence: I think one thing his studies suggest, which I expect to generalize pretty well to many different contexts, is that people who are trying to make predictions about complex phenomena (especially complex social phenomena) often do very poorly when they don't incorporate outside views into their reasoning processes. (You can correct me if this seems wrong, since you've thought about Tetlock's work far more than I have.) So, on my understanding, Tetlock's work suggests that outside-view-heavy reasoning processes would often substitute for reasoning processes that lead to poor predictions anyways. At least for most people, then, outside-view-heavy reasoning processes don't actually need to be very reliable to constitute improvements -- and they need to be pretty bad to, on average, lead to worse predictions.

Another small comment here: I think Tetlock's work also counts, in a somewhat broad way, against the "reference class tennis" objection to reference-class-based forecasting. On its face, the objection also applies to the use of reference classes in standard forecasting tournaments. There are always a ton of different reference classes someone could use to forecast any given political event. Forecasters need to rely on some sort of intuition, or some sort of fuzzy reasoning, to decide on which reference classes to take seriously; it's a priori plausible that people would be just consistently very bad at this, given the number of degrees of freedom here and the absence of clear principles for making one's selections. But this issue doesn't actually seem to be that huge in the context of the sorts of questions Tetlock asked his participants. (You can again correct me if I'm wrong.) The degrees-of-freedom problem might be far larger in other contexts, but the fact that the issue is manageable in Tetlockian contexts presumably counts as at least a little bit of positive evidence. ↩︎

As I said in the post, I'm a fan of reference classes. I feel like you think I'm not? I am! I'm also a fan of analogies. And I love trend extrapolation. I admit I'm not a fan of the anti-weirdness heuristic, but even it has its uses. In general most of what you are saying in this thread is stuff I agree with, which makes me wonder if we are talking past each other. (Example 1: Your second small comment about reference class tennis. Example 2: Your first small comment, if we interpret instances of "outside view" as meaning "reference classes" in the strict sense, though not if we use the broader definition you favor. Example 3: Your points a, b, c, and e. Point d, again, depends on what you mean by "outside view," and also on what counts as "often.")

My problem is with the term "Outside view." (And "inside view" too!) I don't think you've done much to argue in favor of it in this thread. You have said that in your experience it doesn't seem harmful; fair enough, point taken. In mine it does. You've also given two rough definitions of the term, which seem quite different to me, and also quite fuzzy. (e.g. if by "reference class forecasting" you mean the stuff Tetlock's studies are about, then it really shouldn't include the anti-weirdness heuristic, but it seems like you are saying it does?) I found myself repeatedly thinking "but what does he mean by outside view? I agree or don't agree depending on what he means..." even though you had defined it earlier. You've said that you think the practices you call "outside view" are underrated and deserve positive reinforcement; I totally agree that some of them are, but I maintain that some of them are overrated, and would like to discuss each of them on a case by case basis instead of lumping them all together under one name. Of course you are free to use whatever terms you like, but I intend to continue to ask people to be more precise when I hear "outside view" or "inside view." :)

It’s definitely entirely plausible that I’ve misunderstood your views.

My interpretation of the post was something like this:

I’m curious if this feels roughly right, or feels pretty off.

Part of the reason I interpreted your post this way: The quote you kicked the post off with suggested to me that your primary preoccupation was over-use or mis-use of the tools people called “outside views,” including more conventional reference-class forecasting. It seemed like the quote is giving an example of someone who’s refusing to engage in causal reasoning, evaluate object-level arguments, etc., based on the idea that outside views are just strictly dominant in the context of AI forecasting. It seemed like this would have been an issue even if the person was doing totally orthodox reference-class forecasting and there was no ambiguity about what they were doing.[1]

I don’t think that you’re generally opposed to the items in the “outside view” bag or anything like that. I also don’t assume that you disagree with most of the points I listed in my last comment, for why I think intellectuals probably on average underrate the items in the bag. I just listed all of them because you asked for an explanation for my view, I suppose with some implication that you might disagree with it.

I think it’s probably not worth digging deeper on the definitions I gave, since I definitely don’t think they're close to perfect. But just a clarification here, on the anti-weirdness heuristic: I’m thinking of the reference class as “weird-sounding claims.”

Suppose someone approaches you on the street and hands you a flyer claiming: “The US government has figured out a way to use entangled particles to help treat cancer, but political elites are hoarding the particles.” You quickly form a belief that the flyer’s claim is almost certainly false, by thinking to yourself: “This is a really weird-sounding claim, and I figure that virtually all really weird-sounding claims that appear in random flyers are wrong.”

In this case, you’re not doing any deductive reasoning about the claim itself or relying on any causal models that directly bear on the claim. (Although you could.) For example, you’re not thinking to yourself: “Well, I know about quantum mechanics, and I know entangled particles couldn’t be useful for treating cancer for reason X.” Or: “I understand economic incentives, or understand social dynamics around secret-keeping, so I know it’s unlikely this information would be kept secret.” You’re just picking a reference class — weird-sounding claims made on random flyers — and justifying your belief that way.

I think it’s possible that Tetlock’s studies don’t bear very strongly on the usefulness of this reference class, since I imagine participants in his studies almost never used it. (“The claim ‘there will be a coup in Venezuela in the next five years’ sounds really weird to me, and most claims that sound weird to me aren't true, so it’s probably not true!”) But I think the anti-weirdness heuristic does fit with the definitions I gave, as well as the definition you give that characterizes the term's "original meaning." I also do think that Tetlock's studies remain at least somewhat relevant when judging the potential usefulness of the heuristic.

I initially engaged on the miscommunication point, though, since this is the concern that would most strongly make me want to taboo the term. I’d rather address the applause light problem, if it is a problem, by trying to get people in the EA community to stop applauding, and the evidence problem, if it is a problem, by trying to just directly make people in the EA community more aware of the limits of evidence.

Wow, that's an impressive amount of charitable reading + attempting-to-ITT you did just there, my hat goes off to you sir!

I think that summary of my view is roughly correct. I think it over-emphasizes the applause light aspect compared to other things I was complaining about; in particular, there was my second point in the "this expansion of meaning is bad" section, about how people seem to think that it is important to have an outside view and an inside view (but only an inside view if you feel like you are an expert) which is, IMO, totally not the lesson one should draw from Tetlock's studies etc., especially not with the modern, expanded definition of these terms. Also, while I am mostly complaining about what's happened to "outside view," I think similar things apply to "inside view," and thus I recommend tabooing it also.

In general, the taboo solution feels right to me; when I imagine re-doing various conversations I've had, except without that phrase, and people instead using more specific terms, I feel like things would just be better. I shudder at the prospect of having a discussion about "Outside view vs inside view: which is better? Which is overrated and which is underrated?" (and I've worried that this thread may be tending in that direction) but I would really look forward to having a discussion about "let's look at Daniel's list of techniques and talk about which ones are overrated and underrated and in what circumstances each is appropriate."

Now I'll try to say what I think your position is:

How does that sound?

Thank you (and sorry for my delayed response)!

I also shudder a bit at that prospect.

I am sometimes happy making pretty broad and sloppy statements. For example: "People making political predictions typically don't make enough use of 'outside view' perspectives" feels fine to me, as a claim, despite some ambiguity around the edges. (Which perspectives should they use? How exactly should they use them? Etc.)

But if you want to dig in deep, for example when evaluating the rationality of a particular prediction, you should definitely shift toward making more specific and precise statements. For example, if someone has based their own AI timelines on Katja's expert survey, and they wanted to defend their view by simply invoking the principle "outside views are better than inside views," I think this would probably be a horrible conversation. A good conversation would focus specifically on the conditions under which it makes sense to defer heavily to experts, whether those conditions apply in this particular case, etc. Some general Tetlock stuff might come into the conversation, like: "Tetlock's work suggests it's easy to trip yourself up if you try to use your own detailed/causal model of the world to make predictions, so you shouldn't be so confident that your own 'inside view' prediction will be very good either." But mostly you should be more specific.

I'd say that sounds basically right!

The only thing is that I don't necessarily agree with 3a.

I think some parts of the community lean too much on things in the bag (the example you give at the top of the post is an extreme example). I also think that some parts of the community lean too little on things in the bag, in part because (in my view) they're overconfident in their own abilities to reason causally/deductively in certain domains. I'm not sure which is overall more problematic, at the moment, in part because I'm not sure how people actually should be integrating different considerations in domains like AI forecasting.

There also seem to be biases that cut in both directions. I think the 'baseline bias' is pretty strongly toward causal/deductive reasoning, since it's more impressive-seeming, can suggest that you have something uniquely valuable to bring to the table (if you can draw on lots of specific knowledge or ideas that it's rare to possess), is probably typically more interesting and emotionally satisfying, and doesn't as strongly force you to confront or admit the limits of your predictive powers. The EA community has definitely introduced an (unusual?) bias in the opposite direction, by giving a lot of social credit to people who show certain signs of 'epistemic virtue.' I guess the pro-causal/deductive bias often feels more salient to me, but I don't really want to make any confident claim here that it actually is more powerful.

As a last thought here (no need to respond), I thought it might be useful to give one example of a concrete case where: (a) Tetlock’s work seems relevant, and I find the terms “inside view” and “outside view” natural to use, even though the case is relatively different from the ones Tetlock has studied; and (b) I think many people in the community have tended to underweight an “outside view.”

A few years ago, I pretty frequently encountered the claim that recently developed AI systems exhibited roughly “insect-level intelligence.” This claim was typically used to support an argument for short timelines, since the claim was also made that we now had roughly insect-level compute. If insect-level intelligence has arrived around the same time as insect-level compute, then, it seems to follow, we shouldn’t be at all surprised if we get ‘human-level intelligence’ at roughly the point where we get human-level compute. And human-level compute might be achieved pretty soon.

For a couple of reasons, I think some people updated their timelines too strongly in response to this argument. First, it seemed like there are probably a lot of opportunities to make mistakes when constructing the argument: it’s not clear how “insect-level intelligence” or “human-level intelligence” should be conceptualised, it’s not clear how best to map AI behaviour onto insect behaviour, etc. The argument also hadn't yet been vetted closely or expressed very precisely, which seemed to increase the possibility of not-yet-appreciated issues.

Second, we know that there are previous examples of smart people looking at AI behaviour and forming the impression that it suggests “insect-level intelligence.” For example, in Nick Bostrom’s paper “How Long Before Superintelligence?” (1998) he suggested that “approximately insect-level intelligence” was achieved sometime in the 70s, as a result of insect-level computing power being achieved in the 70s. In Moravec’s book Mind Children (1990), he also suggested that insect-level intelligence and insect-level compute had both recently been achieved. Rodney Brooks also had this whole research program, in the 90s, that was based around going from “insect-level intelligence” to “human-level intelligence.”

I think many people didn’t give enough weight to the reference class “instances of smart people looking at AI systems and forming the impression that they exhibit insect-level intelligence” and gave too much weight to the more deductive/model-y argument that had been constructed.

This case is obviously pretty different than the sorts of cases that Tetlock’s studies focused on, but I do still feel like the studies have some relevance. I think Tetlock’s work should, in a pretty broad way, make people more suspicious of their own ability to perform linear/model-heavy reasoning about complex phenomena, without getting tripped up or fooling themselves. It should also make people somewhat more inclined to take reference classes seriously, even when the reference classes are fairly different from the sorts of reference classes good forecasters used in Tetlock’s studies. I do also think that the terms “inside view” and “outside view” apply relatively neatly, in this case, and are nice bits of shorthand (although, admittedly, it’s far from necessary to use them).

This is the sort of case I have in the back of my mind.

(There are also, of course, cases that point in the opposite direction, where many people seemingly gave too much weight to something they classified as an "outside view." Early under-reaction to COVID is arguably one example.)

The Nick Bostrom quote (from here) is:

I would have guessed this is just a funny quip, in the sense that (i) it sure sounds like it's just a throw-away quip, no evidence is presented for those AI systems being competent at anything (he moves on to other topics in the next sentence), "approximately insect-level" seems appropriate as a generic and punchy stand in for "pretty dumb," (ii) in the document he is basically just thinking about AI performance on complex tasks and trying to make the point that you shouldn't be surprised by subhuman performance on those tasks, which doesn't depend much on the literal comparison to insects, (iii) the actual algorithms described in the section (neural nets and genetic algorithms) wouldn't plausibly achieve insect-level performance in the 70s since those algorithms in fact do require large training processes (and were in fact used in the 70s to train much tinier neural networks).

(Of course you could also just ask Nick.)

I also think it's worth noting that the prediction in that section looks reasonably good in hindsight. It was written right at the beginning of resurgent interest in neural networks (right before Yann LeCun's paper on MNIST with neural networks). The hypothesis "computers were too small in the past so that's why they were lame" looks like it was a great call, and Nick's tentative optimism about particular compute-heavy directions looks good. I think overall this is a significantly better take than mainstream opinions in AI. I don't think this literally affects your point, but it is relevant if the implicit claim is "And people talking about insect comparisons were led astray by these comparisons."

I suspect you are more broadly underestimating the extent to which people used "insect-level intelligence" as a generic stand-in for "pretty dumb," though I haven't looked at the discussion in Mind Children and Moravec may be making a stronger claim. I'd be more inclined to tread carefully if some historical people tried to actually compare the behavior of their AI system to the behavior of an insect and found it comparable as in posts like this one (it's not clear to me how such an evaluation would have suggested insect-level robotics in the 90s or even today; I think the best that can be said is that today it seems compatible with insect-level robotics in simulation). I've seen Moravec use the phrase "insect-level intelligence" to refer to the particular behaviors of "following pheromone trails" or "flying towards lights," so I might also read him as referring to those behaviors in particular. (It's possible he is underestimating the total extent of insect intelligence, e.g. discounting the complex motor control performed by insects, though I haven't seen him do that explicitly and it would be a bit off brand.)

ETA: While I don't think 1990s robotics could plausibly be described as "insect-level," I actually do think that the linked post on bee vision could plausibly have been written in the 90s and concluded that computer vision was bee-level, it's just a very hard comparison to make and the performance of the bees in the formal task is fairly unimpressive.

I think that's good push-back and a fair suggestion: I'm not sure how seriously the statement in Nick's paper was meant to be taken. I hadn't considered that it might be almost entirely a quip. (I may ask him about this.)

Moravec's discussion in Mind Children is similarly brief: He presents a graph of the computing power of different animals' brains and states that "lab computers are roughly equal in power to the nervous systems of insects." He also characterizes current AI behaviors as "insectlike" and writes: "I believe that robots with human intelligence will be common within fifty years. By comparison, the best of today's machines have minds more like those of insects than humans. Yet this performance itself represents a giant leap forward in just a few decades." I don't think he's just being quippy, but there's also no suggestion that he means anything very rigorous/specific by his suggestion.

Rodney Brooks, I think, did mean for his comparisons to insect intelligence to be taken very seriously. The idea of his "nouvelle AI program" was to create AI systems that match insect intelligence, then use that as a jumping-off point for trying to produce human-like intelligence. I think walking and obstacle navigation, with several legs, was used as the main dimension of comparison. The Brooks case is a little different, though, since (IIRC) he only claimed that his robots exhibited important aspects of insect intelligence or fell just short of insect intelligence, rather than directly claiming that they actually matched insect intelligence. On the other hand, he apparently felt he had gotten close enough to transition to the stage of the project that was meant to go from insect-level stuff to human-level stuff.

A plausible reaction to these cases, then, might be:

I think there's something to this reaction, particularly if there's now more rigorous work being done to operationalize and test the "insect-level intelligence" claim. I hadn't yet seen the recent post you linked to, which, at first glance, seems like a good and clear piece of work. The more rigorous work is done to flesh out the argument, the less I'm inclined to treat the Bostrom/Moravec/Brooks cases as part of an epistemically relevant reference class.

My impression a few years ago was that the claim wasn't yet backed by any really clear/careful analysis. At least, the version that filtered down to me seemed to be substantially based on fuzzy analogies between RL agent behavior and insect behavior, without anyone yet knowing much about insect behavior. (Although maybe this was a misimpression.) So I probably do stand by the reference class being relevant back then.

Overall, to sum up, my position here is something like: "The Bostrom/Moravec/Brooks cases do suggest that it might be easy to see roughly insect-level intelligence, if that's what you expect to see and you're relying on fuzzy impressions, paying special attention to stuff AI systems can already do, or not really operationalizing your claims. This should make us more suspicious of modern claims that we've recently achieved 'insect-level intelligence,' unless they're accompanied by transparent and pretty obviously robust reasoning. Insofar as this work is being done, though, the Bostrom/Moravec/Brooks cases become weaker grounds for suspicion."

I do think my main impression of insect <-> simulated robot parity comes from very fuzzy evaluations of insect motor control vs simulated robot motor control (rather than from any careful analysis, of which I'm a bit more skeptical though I do think it's a relevant indicator that we are at least trying to actually figure out the answer here in a way that wasn't true historically). And I do have only a passing knowledge of insect behavior, from watching youtube videos and reading some book chapters about insect learning. So I don't think it's unfair to put it in the same reference class as Rodney Brooks' evaluations to the extent that his was intended as a serious evaluation.

Yeah, FWIW I haven't found any recent claims about insect comparisons particularly rigorous.

I guess we can just agree to disagree on that for now. The example statement you gave would feel fine to me if it used the original meaning of "outside view" but not the new meaning, and since many people don't know (or sometimes forget) the original meaning...

100% agreement here, including on the bolded bit.

Also agree here, but again I don't really care which one is overall more problematic because I think we have more precise concepts we can use and it's more helpful to use them instead of these big bags.

I think I agree with all this as well, noting that this causal/deductive reasoning definition of inside view isn't necessarily what other people mean by inside view, and also isn't necessarily what Tetlock meant. I encourage you to use the term "causal/deductive reasoning" instead of "inside view," as you did here, it was helpful (e.g. if you had instead used "inside view" I would not have agreed with the claim about baseline bias)

I mostly use outside views to mean reference classes, but I agree that this term has expanded to mean more than it originally denoted. I'm not sure how big a problem this is in practice; I think by default phrases in natural language expand to mean more than their technical beginnings (consider phrases like "modulo", "pop the stack," etc). My intuition is that zealously guarding against this expansion by specifying new broader words (rather than being precise in-context) seems quite doomed as an overall enterprise, though it might buy you a few years.

A related point is that if we do go with "reference classes" as the preferred phrase, we should be cognizant that for most questions there's a number of different relevant reference classes, and saying that a particular reference class we've picked is the best/only reference class is quite a strong claim, and (as EliezerYudkowsky alludes to) quite susceptible to motivated reasoning.

I agree it's hard to police how people use a word; thus, I figured it would be better to just taboo the word entirely.

I totally agree that it's hard to use reference classes correctly, because of the reference class tennis problem. I figured it was outside the scope of this post to explain this, but I was thinking about making a follow-up... at any rate, I'm optimistic that if people actually use the words "reference class" instead of "outside view" this will remind them to notice that there is more than one reference class available, that it's important to argue that the one you are using is the best, etc.

This is a bit tangential to the main point of your post, but I thought I'd give some thoughts on this, partly because I basically did exactly this procedure a few months ago in an attempt to come to a personal all-things-considered view about AI timelines (although I did "use some inside-view methods" even though I don't at all feel like I'm an expert in the subject!).

I liked your AI Impacts post, thanks for linking to it! Maybe a good summary of the recommended procedure is the part at the very end. I do feel like it was useful for me to read it.

I'm less sure about the direct relevance of Inadequate Equilibria for this, apart from it making the more general point that ~"people should be less scared of relying on their own intuition / arguments / inside view". Maybe I haven't scrutinised it closely enough.

To be clear, I don't think "weighted sum of 'inside views' and 'outside views'" is the gold standard or something. I just think it's an okay approach sometimes (maybe especially when you want to do something "quick and dirty").

If you strongly disagree (which I think you do), I'd love for you to change my mind! :)

Thanks!

Re: Inadequate Equilibria: I mean, that was my opinionated interpretation I guess. :) But Yudkowsky was definitely arguing something was bogus. (This is a jab at his polemical style.) To say a bit more: Yudkowsky argues that the justifications for heavy reliance on various things called "outside view" don't hold up to scrutiny, and that what's really going on is that people are overly focused on matters of who has how much status and which topics are in whose areas of expertise and whether I am being appropriately humble and stuff like that, and that (unconsciously) this is what's really driving people's use of "outside view" methods rather than the stated justifications. I am not sure whether I agree with him or not but I do find it somewhat plausible at least. I do think the stated justifications often (usually?) don't hold up to scrutiny.

I mean, depending on what you mean by "an okay approach sometimes... especially when you want to do something quick and dirty" I may agree with you! What I said was:

And it seems you agree with me on that. What I would say is: Consider the following list of methods:

1. Intuition-weighted sum of "inside view" and "outside view" methods (where those terms refer to the Big Lists summarized in this post)

2. Intuition-weighted sum of "Type X" and "Type Y" methods (where those terms refer to any other partition of the things in the Big Lists summarized in this post)

3. Intuition

4. The method Tetlock recommends (as interpreted by me in the passage of my blog post you quoted)

My opinion is that 1 and 2 are probably typically better than 3 and that 4 is probably typically better than 1 and 2 and that 1 and 2 are probably about the same. I am not confident in this of course, but the reasoning is: Method 4 has some empirical evidence supporting it, plus plausible arguments/models.* So it's the best. Methods 1 & 2 are like method 3 except that they force you to think more and learn more about the case (incl. relevant arguments about it) before calling on your intuition, which hopefully results in a better-calibrated intuitive judgment. As for comparing 1 & 2, I think we have basically zero evidence that partitioning into "Outside view" and "Inside view" is more effective than any other random partition of the things on the list. It's still better than pure intuition though, probably, for reasons mentioned.
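For what it's worth, methods 1 and 2 can be sketched as the same computation with different labels attached. The Python below is only an illustration under assumptions of my own: the method names, the probability estimates, and the weights are all made up, and `weighted_forecast` and `bag_weights` are hypothetical helpers, not anything Tetlock or anyone in this thread has specified.

```python
def weighted_forecast(estimates, weights):
    """Intuition-weighted average of per-method probability estimates."""
    if set(estimates) != set(weights):
        raise ValueError("estimates and weights must cover the same methods")
    total = sum(weights.values())
    if total <= 0:
        raise ValueError("weights must sum to a positive number")
    return sum(estimates[m] * weights[m] for m in estimates) / total


def bag_weights(weights, partition):
    """Total weight each bag receives under a given partition of methods."""
    totals = {}
    for method, w in weights.items():
        bag = partition[method]
        totals[bag] = totals.get(bag, 0.0) + w
    return totals


# Made-up numbers, for illustration only.
estimates = {
    "reference_class": 0.10,
    "trend_extrapolation": 0.20,
    "expert_deference": 0.30,
    "causal_model": 0.40,
}
weights = {
    "reference_class": 2.0,
    "trend_extrapolation": 1.0,
    "expert_deference": 1.0,
    "causal_model": 1.0,
}

# Method 1: the "outside view" / "inside view" partition.
outside_inside = {
    "reference_class": "outside",
    "trend_extrapolation": "outside",
    "expert_deference": "outside",
    "causal_model": "inside",
}
# Method 2: some other arbitrary partition of the same methods.
type_x_type_y = {
    "reference_class": "X",
    "causal_model": "X",
    "trend_extrapolation": "Y",
    "expert_deference": "Y",
}

forecast = weighted_forecast(estimates, weights)
print(forecast)
print(bag_weights(weights, outside_inside))
print(bag_weights(weights, type_x_type_y))
```

With the per-method weights held fixed, the forecast is identical under either partition; the partition only matters insofar as it nudges your intuition about how to set the weights, which is the part we have basically no evidence on.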

The view I was arguing against in the OP was the view that method 1 is the best, supported by the evidence from Tetlock, etc. I think I slipped into holding this view myself over the past year or so, despite having done all this research on Tetlock et al earlier! It's easy to slip into because a lot of people in our community seem to be holding it, and when you squint it's sorta similar to what Tetlock said. (e.g. it involves aggregating different things, it involves using something called inside view and something called outside view.)

*The margins of this comment are too small to contain, I was going to write a post on this some day...

Nice, thanks for this!

I guess I was reacting to the part just after the bit you quoted

Which I took to imply "Daniel thinks that the aforementioned forecasting method is bogus". Maybe my interpretation was incorrect. Anyway, seems very possible we in fact roughly agree here.

Re your 1, 2, 3, 4: It seems cool to try doing 4, and I can believe it's better (I don't have a strong view). Fwiw re 1 vs 2, my initial reaction is that partitioning by outside/inside view lets you decide how much weight you give to each, and maybe we think that for non-experts it's better to mostly give weight to the outside view, so the partitioning performed a useful service. I guess this is kind of what you were trying to argue against and unfortunately you didn't convince me to repent :).

Yeah, I probably shouldn't have said "bogus" there, since while I do think it's overrated, it's not the worst method. (Though arguably things can be bogus even if they aren't the worst?)

Partitioning by any X lets you decide how much weight you give to X vs. not-X. My claim is that the bag of things people refer to as "outside view" isn't importantly different from the other bag of things, at least not more importantly different than various other categorizations one might make.

I do think that people who are experts should behave differently than people who are non-experts. I just don't think we should summarize that as "Prefer to use outside-view methods" where outside view = the things on the First Big List. I think instead we could say:

--Use deference more

--Use reference classes more if you have good ones (but if you are a non-expert and your reference classes are more like analogies, they are probably leading you astray)

--Trust your models less

--Trust your intuition less

--Trust your priors less

...etc.

Something I used to call 'outside view' is asking 'what would someone other than me think of this', like trying to imagine how someone outside of myself would view something. I think it's a technique I learnt from CBT and would often take the form of 'what would a wise, empathetic friend advise you to do?'. I realised you could do it with various viewpoints. For example, you could imagine looking at current affairs from the viewpoint of an alien viewing earth from afar. I'm not sure what the term for this is.

That sounds like a useful technique. "Outside view" would be a good term for it if it wasn't already being used to mean so many other things. :/ How about "Neutral observer" or "friend's advice" or "hypothetical friend?"