All of Devon Fritz 🔸's Comments + Replies

Very interesting @Kirsten - thank you for the tips. I'll have my publicist follow up with those leads.

I am a dad myself and have on my list to make posts on my Substack about insights from having kids and impact. I give advice to EAs about many things, but I've noticed my takes on parenting lead to really long conversations and follow-up in a way that makes me think it could be valuable to the community.

@Federico Speziali and I both originally looked into support for this cohort of folks, but I ultimately got more pessimistic on it for two reasons:

- There were fewer people doing E2G than I thought.

- Those who were doing E2G were giving less than I thought.

I came to the view that those E2Gers giving significant amounts (100K+ let's say) were an order of magnitude lower than I'd hoped (maybe 50 instead of 500) and also that they were already known to the community for the most part and already pretty much maximizing their impact.

There could be room for an org t...

The things that most people can see are good, and which therefore would bring more people into the movement. Like finding the best ways to help people in extreme poverty, and ending factory farming (see my above answer to what I would do if I were in my twenties).

One common objection to what The Life You Can Save and GiveWell are doing - recommending the most effective charities to help people in extreme poverty - is that this is a band-aid, and doesn't get at the underlying problems, for which structural change is needed. I'd like to see more EAs engaging with that objection, and assessing paths to structural changes that are feasible and likely to make a difference.

Placing too much emphasis on longtermism. I'm not against longtermism at all - it's true that we neglect future sentient beings, as we neglect people who are distant from us, and as we neglect nonhuman animals. But it's not good for people to get the impression that EA is mostly about longtermism. That impression hinders the prospects of EA becoming a broad and popular movement that attracts a wide range of people, and we have an important message to get across to those people: some ways of doing good are hundreds of times more effective than others.

M...

Thanks for the great work as usual. This is a very good snapshot of where people are at.

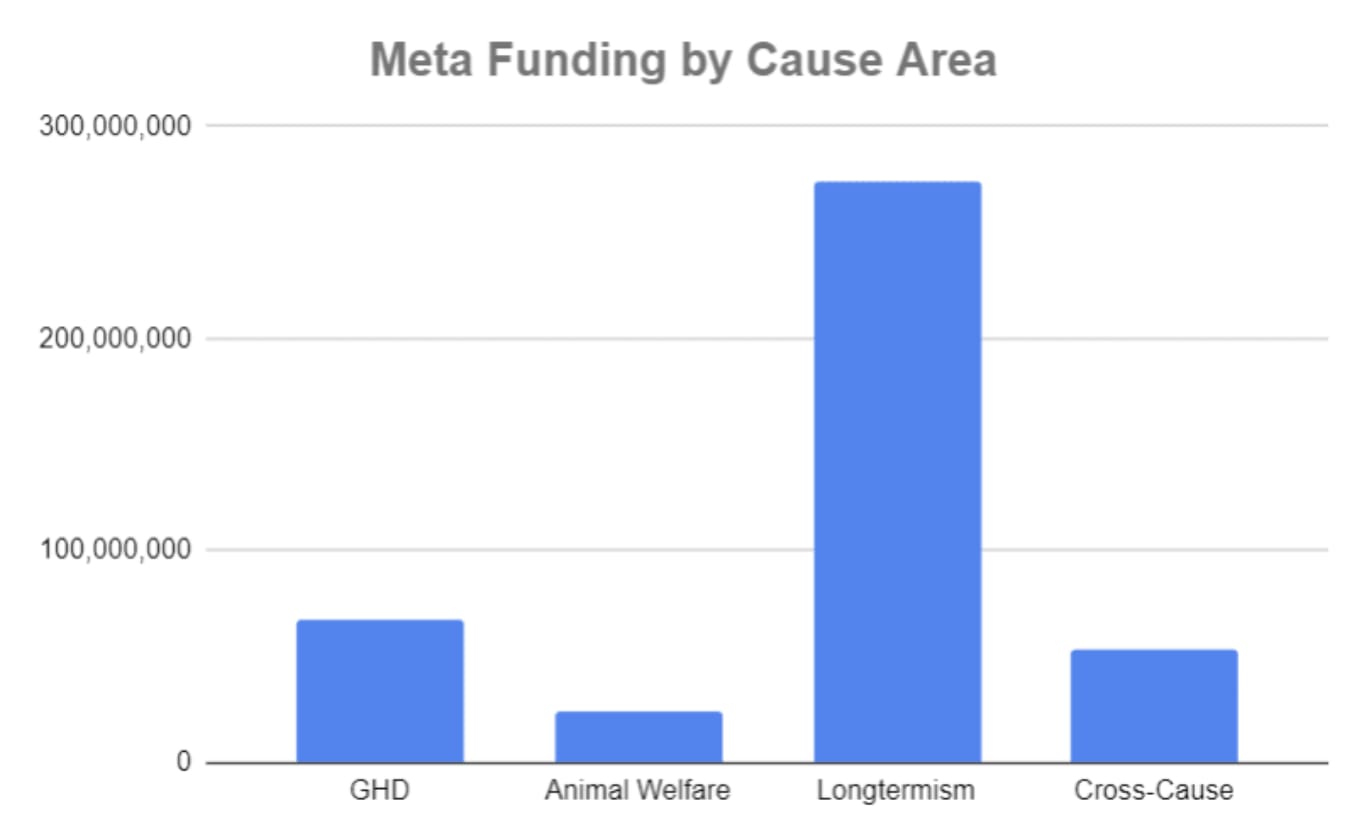

I would love to see an analysis that normalizes for meta funds flowing into cause areas. In @Joel Tan's recent Meta Funding Landscape post, he states that OP grants 72% of total meta funds and that the lion's share goes to longtermism.

From his post:

And although the EA Infrastructure fund supports multiple cause areas, if you scroll through the recent grants you might be surprised at the percentage going to LT.

Funders should, of course, prioritize the ...

Hey Hauke, really interesting and important - thanks!

A quick note:

The next $1k/cap increase in a country at $10k/cap is worth only 1/10th as much as in a country with $100k/cap, because, the utility gained from increasing consumption from $1k to $10k is much greater than the utility gained from increasing consumption from $11k to $20k, even though the absolute dollar amounts are the same.

The claim in the part I bolded should be reversed, no? E.g. Increasing $1K where GDP/cap is $100K is worth 1/10th as much...etc.
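
To make the direction concrete, here is a minimal sketch assuming logarithmic utility of consumption. That is my assumption for illustration; the quoted post may use a different utility function. The function name `log_utility_gain` is hypothetical.

```python
import math


def log_utility_gain(consumption: float, increase: float) -> float:
    """Utility gained from `increase` at a given consumption level,
    under the assumed utility function u(c) = ln(c)."""
    return math.log(consumption + increase) - math.log(consumption)


# The same $1k increase at two different income levels.
gain_poorer = log_utility_gain(10_000, 1_000)    # country at $10k/cap
gain_richer = log_utility_gain(100_000, 1_000)   # country at $100k/cap

# How many times more the $1k is worth in the poorer country.
ratio = gain_poorer / gain_richer
print(f"gain at $10k/cap:  {gain_poorer:.4f}")
print(f"gain at $100k/cap: {gain_richer:.4f}")
print(f"ratio: {ratio:.2f}")
```

Under that assumption the $1k is worth a bit under ten times as much at $10k/cap as at $100k/cap, which is the reversed version of the claim.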

To me, based on what you said, you have provided a lot of value to many people at relatively low cost to yourself. I have the impression that the time was quite counterfactual given you didn't seem to have many burning projects at the time. So, seems pretty good to me on the face of it although for every given detail you know way more than I do!

Hey David, thanks for the question. I just want to chime in from High Impact Professionals' standpoint.

High Impact Professionals has two main products, both of which are geared at getting professionals into more impactful roles. These are:

- Talent Directory - We have a talent directory with over 750 impact-oriented professionals looking for work at high-impact organizations and over 100 organizations sourcing from it. We encourage both professionals and organizations to sign up. I think this doesn't have much overlap with SuccessIf, except with thei...

Thanks for asking Devon!

On the margin, our commissioned reports cost ~$7,000-$30,000 depending on the scope (this particular report being on the lower end of that spectrum). That being said, we don't take in every commissioned work that comes our way and only have the capacity to take on a few projects at this price because: (1) we have longer term agreements with a couple of organizations for which we do a lot of work, (2) we want our team to have the capacity to pursue independent projects we think are promising.

That being said, you're always welcome to reach out if there are specific projects you are interested in. We always welcome new ideas and look forward to connecting with new individuals/organizations :)

Cool initiative!

I am not very involved in AI Safety but have heard multiple times from big funders in the ecosystem over time something like "everything in AI Safety that SHOULD be funded IS being funded. What we really need are good people working on it." I'd expect e.g. OP to be excited to fund great people working in the space, so curious why you think the people who will apply to your network aren't getting funded otherwise.

Just for context: I am very FOR a more diverse funding ecosystem so I think getting more people to fund more projects who have different strategies for funding and risk tolerances is going in the right direction.

Good question! Here are a few thoughts on that:

- Evaluating charities is more like evaluating startups than evaluating bridge-builders

You can tell if somebody is a good bridge builder. We have good feedback loops on bridges and we know why bridges work. For bridges, you can have a small number of experts making the decisions and it will work out great.

However, with startups, nobody really knows what works or why. Even with Y Combinator, potentially the best startup evaluator in the world, the vast majority of their bets don’t work out. We don’t know...

There are many factors going into that issue, but I think the biggest are the bottlenecks within the pipeline that brings money from OP to individual donation opportunities. Most directly, OP has a limited staff and a lot of large, important grants to manage. They often don't have the spare attention, time, or energy to solicit, vet, and manage funding to the many individuals and small organizations that need funding.

LTFF and other grantmakers have similar issues. The general idea is just that there are many inefficiencies in the grantmaker -> ??? ->...

Yeah I suppose we just disagree then. I think such a big error and hit to the community should downgrade any rational person's belief in the output of what EA has to offer and also downgrade the trust they are getting it right.

Another side point: Many EAs like Cowen and think he is right most of the time. I think it is suspicious that when Cowen says something about EA that is negative he is labeled stuff like "daft".

Hi Devon, FWIW I agree with John Halstead and Michael PJ re John's point 1.

If you're open to considering this question further, you may be interested in knowing my reasoning (note that I arrived at this opinion independently of John and Michael), which I share below.

Last November I commented on Tyler Cowen's post to explain why I disagreed with his point:

...I don't find Tyler's point very persuasive: Despite the fact that the common sense interpretation of the phrase "existential risk" makes it applicable to the sudden downfall of FTX, in actuality I think fo

The difference in that example is that Scholtz is one person so the analogy doesn't hold. EA is a movement comprised of many, many people with different strengths, roles, motives, etc and CERTAINLY there are some people in the movement whose job it was (or at a minimum there are some people who thought long and hard) to mitigate PR/longterm risks to the movement.

I picture the criticism more like EA being a pyramid set in the ground, but upside down. At the top of the upside-down pyramid, where things are wide, there are people working to ensure the l...

I think I just don't agree with your charitable reading. The very next paragraph makes it very clear that Cowen means this to suggest that we should think less well of actual existential risk research:

Hardly anyone associated with Future Fund saw the existential risk to…Future Fund, even though they were as close to it as one could possibly be.

I am thus skeptical about their ability to predict existential risk more generally, and for systems that are far more complex and also far more distant.

I think that's plain wrong, and Cowen actually is doing the ...

I agree with most of your points, but strongly disagree with number 1 and am surprised to have heard over time that so many people thought this point was daft.

I don't disagree that "existential risk" is being employed in a very different sense, so we agree there, in the two instances, but the broader point, which I think is valid, is this:

There is a certain hubris in claiming you are going to "build a flourishing future" and "support ambitious projects to improve humanity's long-term prospects" (as the FFF did on its website) only to not exist 6 months later ...

Scott Alexander, from "If The Media Reported On Other Things Like It Does Effective Altruism":

Leading UN climatologist Dr. John Scholtz is in serious condition after being wounded in the mass shooting at Smithfield Park. Scholtz claims that his models can predict the temperature of the Earth from now until 2200 - but he couldn’t even predict a mass shooting in his own neighborhood. Why should we trust climatologists to protect us from some future catastrophe, when they can’t even protect themselves in the present?

I'm curious to hear the perceived downsides about this. All I can think of is logistical overhead, which doesn't seem like that much.

If something is called the "Coordination Forum" and previously called the "Leaders Forum" and there is a "leaders" slack where further coordination that affects the community takes place, it seems fair that people should at least know who is attending them. The community prides itself on transparency and knowing the leaders of your movement seems like one of the obvious first steps of transparency.

I've heard grantmakers in the LT space say that "everything that is above the bar is getting funded and what we need are more talented people filling roles or new orgs to start up." So it seems that any marginal donation going to the EA Funds LTFF is currently being underutilized/not used at all and doesn't even funge well. So that makes me lean more neartermist, even if you accept that LT interventions are ultimately more impactful.

Apologies if that is addressed in the video above - don't have time to view it now, but from your description it looks like it is more geared around general effectiveness and not on-the-current-margin giving right now.

Curious if you have any thoughts on that.

I actually strongly disagree with this - I think too many people defer to the EA Funds on the margin. Don't get me wrong, I think they do great work, but if FTX showed us anything it is that EA needs MORE funding diversity and I think this often shakes out as lots of people making many different decisions on where to give, so that e.g. an org's existence doesn't depend on whether or not it gets a grant from EA Funds/OP.

In light of FTX, I am updating a bit away from giving to meta stuff, as some media made clear that a (legitimate) concern is EA orgs donating to each other and keeping the money internal to them. I don't think EAs do this on purpose for any bad reason, in fact I think meta is high leverage, but the concern does give one pause to think about why we are doing this and also how this is perceived from the outside.

This year, I am giving $10K to Charity Entrepreneurship's incubated charities at their discretion as they know where it will best be placed after all counterfactuals have been calculated. I am giving here for a lot of reasons (CoI: I like them so much I am on the board):

- I think there is a lot of counterfactual value in supporting new EA startups with higher risk profiles, especially within CE, where there is a good rate of growth to GW Top Charity status.

- I like to fund stuff that isn't getting funded through the normal means to create more diversified fund

Hi Simon, love the initiative and have been working on an illustrated philosophy book for kids as well (and by 'working on' I mean 'made it 5 years ago and have to get back to it when I find the time with an outstanding promise to finish it before my daughter turns 6').

Will definitely sign up for the beta and provide feedback. Looking forward to reading it!

There is a big difference between not wanting to work with someone because you don't like their ethics (looking at you Kerry) and thinking they are going to commit the century's worst fraud.

I don't think anyone asking for more information about what people knew believes that central actors knew anything about fraud. If that is what you think, then maybe therein lies the rub. It is more that strong signs of bad ethics are important signals and your example of Kerry is perhaps a good one. Imagine if people had concerns with someone on the level of what they ...

Strongly agree with these points and think the first is what makes the overwhelming difference on why EA should have done better. Multiple people claim (both publicly on the forum and people who have told me in confidence) to have told EA leadership that SBF was doing things that strongly break with EA values since the Alameda situation of 2018.

This doesn't imply we should know about any particular illegal activity SBF might have been undertaking, but I would expect SBF to not have been so promoted throughout the past couple of years. This is ...

I respect that people who aren't saying what they know have carefully considered reasons for doing so.

I am not confident it will come from the other side as it hasn't to date and there is no incentive to do so.

May I ask why you believe it will be made public eventually? I truly hope that is the case.

The incentives for them to do so include 1) modelling healthy transparency norms, 2) avoiding looking bad when it comes out anyway, 3) just generally doing the right thing.

I personally commit to making my knowledge about it public within a year. (I could probably commit to a shorter time frame than that, that's just what I'm sure I'm happy to commit to having given it only a moment's thought.)

I agree - I don't think that the FTX debacle should define cause areas per se and I think all of the cause areas on the EA menu are important and meaningful. I meant more to say that EA has played more fast and loose over the years and gotten a bit too big for its britches and I think that is somehow correlated with what happened, although like everyone else I am working all of this out in my head. Just imagine that Will was connecting SBF with Elon Musk as one example that was made public and only because it had to be, so we can assume ...

The people who are staying quiet about who they told have carefully considered reasons for doing so, and I'd encourage people to try to respect that, even if it's hard to understand from outside.

My hope is that the information will be made public from the other side. EA leaders who were told details about the events at early Alameda know exactly who they are, and they can volunteer that information at any time. It will be made public eventually one way or another.

My decision procedure does allow for that and I have lots of uncertainties, but it feels relevant that many insiders claim to have warned people in positions of power about this and Sam got actively promoted anyway. If multiple people with intimate knowledge of someone came to you and told you that they thought person X was of bad character you wouldn't have to believe them hook, line, and sinker to be judicious about promoting that person.

Maybe this is the most plausible of the 3 and I shouldn't have called it super implausible, but it doesn't seem very plausible to me, especially from people in a movement that takes risks more seriously than any other that I know.

I don't mean to claim that you think it should replace discussion. I think just reading your text I felt that you bracketed off the discussion/reflection quite quickly and moved on to what things will be like after which feels very quick to me. I think the discussions in the next few months will (I hope) change so many aspects of EA that I don't feel like we can make any meaningful statements about the shape of what comes next.

I see from your comment here that you also want to be motivational in the face of people being disheartened by the movement. I can see that now and think that that aspect is great.

We absolutely think (and stated this clearly) that this outlook shouldn't replace the discussion and reflection on the current situation.

What I've noticed when discussing with EAs during the past few days, is that many feel like this is a disaster we wouldn't recover from. I do think it's important to emphasize that we're not back to ground zero.

Thank you for your support Uhitha! I hope you enjoy it. Please do let me know what you think.