All of terraform's Comments + Replies

I think some were false. For example, I don't get the stuff about mini-drones undermining nuclear deterrence, as size will constrain your batteries enough that you won't be able to do much of anything useful. Maybe I'm missing something (modulo nanotech).

I think it's very plausible scaling holds up, it's plausible AGI becomes a natsec matter, it's plausible it will affect nuclear deterrence (via other means), for example.

What do you disagree with?

I agree with many of Leopold's empirical claims, timelines, and analysis. I'm acting on it myself in my planning as something like a mainline scenario.

Nonetheless, the piece exhibited some patterns that gave me a pretty strong allergic reaction. It made or implied claims like:

* a small circle of the smartest people believe this

* I will give you a view into this small elite group, who are the only ones who are situationally aware

* the inner circle went long TSMC way before you

* if you believe me, you can get 100x richer -- there's still alpha, you can still be...

I happened to come across this old comment thread discussing whether holding too much Facebook stock was too risky. In the four years since the comment on Sep 21, 2020, Meta stock is up >100% and at an all-time high. However, before reaching that point, it also had a drawdown as large as 60% relative to the Sep 21 value, which occurred in late 2022 (notably, around the time of the FTX collapse).

(I haven't read the full comment here and don't want to express opinions about all its claims. But for people who saw my comments on the other post, I want to state for the record that based on what I've seen of Richard Hanania's writing online, I think Manifest next year would be better without him. It's not my choice, but if I organised it, I wouldn't invite him. I don't think of him as a "friend of EA".)

No, I think this is again importantly wrong.

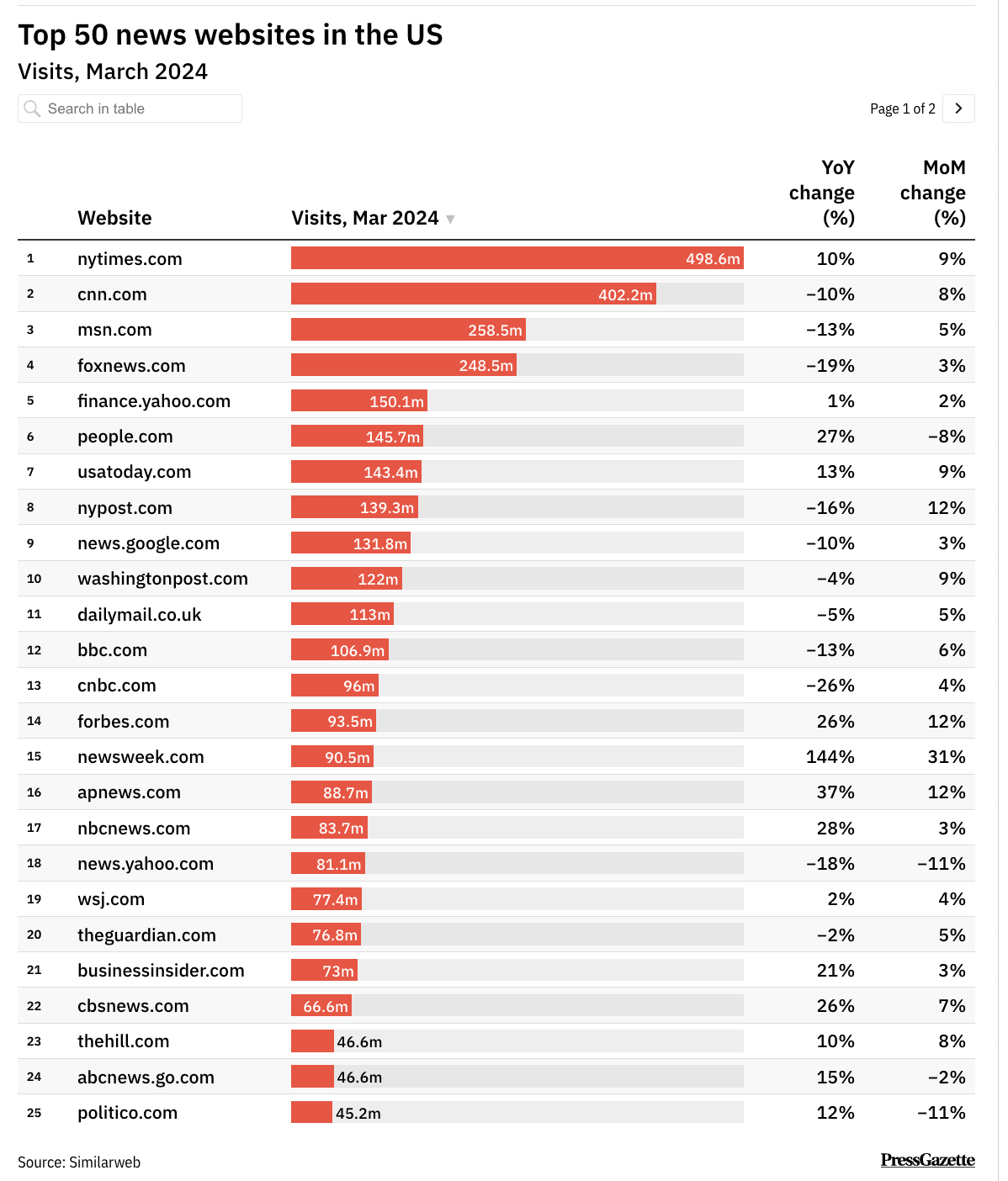

First, this was published in the Guardian US, not the Guardian.

The Guardian US does not have half the traffic of the NYTimes. It has about 15% of the traffic, as far as I can tell (source). The Guardian US has 200k Twitter followers; The Guardian has 10M Twitter followers (so 2% of the following).

Second, I scrolled through all the tweets in the link you sent showing "praise". I see the following:

- Emile Torres with 250 likes.

- Timnit Gebru's new research org retweeting, 27 likes

- A professor I d

My mistake on the Guardian US distinction, but calling it a "small newspaper" is wildly off base, and for anyone interacting with the piece on social media, the distinction is not legible.

Candidly, I think you're taking this topic too personally to reason clearly. I think any reasonable person evaluating the online discussion surrounding Manifest would see it as "controversial." Even if you completely excluded the Guardian article, this post, Austin's, and the deluge of comments would be enough to show that.

It's also no longer feeling like a productive conversation and distracts from the object level questions.

Ah! I was wrong to claim you made "no" such comments. I've edited my above comment.

Now, I of course notice how you only mention "lots of mistakes" after Jeffrey objects, and after it's become clear that there is a big outpouring of hit-piece criticism and little support.

Why were you glad about it before then?

Did you:

- ...not think it was a hit piece? (I think you're a smart guy, and even a journalist yourself, so I'm kind of incredulous about you not picking up on the patterns here)

- ...or were you okay with the-amount-of-hit-piece-you-though

To sort of steelman a defence here: shouldn't we be glad that Shakeel is publicly expressing the views he actually holds? To my understanding, he doesn't like how rationalists behave in this area and so has said so, both on Twitter and on the forum.

Perhaps you might have preferred he did it differently, but it seems like he could have done it much worse and given it's a thing he actually believes, it seems better that he said it than that he didn't.

(I'm not sure I fully endorse this, nor do I endorse Shakeel's position in general, but I'm glad he's said it on the forum.)

In the follow-up tweet you say: "Glad to see the press picking [this story] up (though wish they made the rationalist/EA distinction clearer!)"

So far as I've found, you've made no comments indicating that you disagree with the problematic methodology of the piece, and two comments saying you were "delighted" and "glad" with parts of it. I think my quote is representative. I've updated my comment for clarity.

Nonetheless: how would you prefer to be quoted?

EDIT: Shakeel posted a comment pointing to a tweet of his "mistakes" in the post, and I was ...

Mm, for example, I think using the word "fag" in conversation is somewhat past the line; I don't see why that kind of epithet would need to be used at Manifest, and hope that I would have spoken out against that kind of behavior if I had witnessed it. (I'm naturally not a very confrontational person, fwiw).

I don't remember any instances or interactions throughout Manifest that I witnessed which got close to the line; it's possible it didn't happen in front of me, because of my status as an organizer, but I think this was reflective of the vast majority of ...

Man, yesterday this was at +20 karma and now it's at -20. There seems to be a massive diurnal effect in how the votes on the forum swing.

I think both of those karma values are kind of extreme, and so find myself flipping my vote around. But I wish I could leave an anchor vote like "if the vote diverges from value X, change my vote to point it back toward X".
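A hypothetical sketch of that anchor-vote rule, assuming a single vote of fixed weight. This is not an existing forum feature: the `anchor_vote` name, the `weight` parameter, and the anchor value of 0 are illustrative assumptions (real forum votes are weighted by the voter's karma).

```python
def anchor_vote(score_without_mine: int, anchor: int, weight: int = 1) -> int:
    """Hypothetical rule: return the vote that nudges the score back toward `anchor`.

    `score_without_mine` is the karma excluding my own vote, so my vote
    never chases its own contribution and can't oscillate against itself.
    """
    if score_without_mine < anchor:
        return weight   # score fell below the anchor: upvote
    if score_without_mine > anchor:
        return -weight  # score rose above the anchor: downvote
    return 0            # exactly at the anchor: abstain


# The +20 / -20 swing described above, with an assumed anchor of 0.
for score in (20, -20):
    print(score, anchor_vote(score, anchor=0))
```

Run at each check-in, this prints `20 -1` and then `-20 1`: the vote flips automatically instead of by hand.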

I think the really key thing here is the bait-and-switch at play.

Insofar as "controversial" means "heated discussion of subject x", let's call that "x-controversial".

Now the article generates heated discussion because of "being a hit piece", and so is "hit-piece-controversial". However, there's then also heated discussion of racism on the forum, call that "racism-controversial".

If we then unpack the argument made by Garrison above, it reads as "It is fair and accurate to label Manifest racism-controversial, because of a piece of reporting that ...

To clarify:

1. Claiming that Manifest is controversial because of the Guardian reporting: I'll argue against this pretty strongly.

2. Claiming that Manifest is controversial because of an independent set of good-faith accounts from EA Forum members: more legit, and I can see the case (though I personally disagree).

Very importantly, Garrison's comment was arguing using 1, not 2.

To perhaps help clarify the discourse, I'll leave a comment below where people can react to signal "I think the argument for controversy from the Guardian article is invalid; but I do think Manifest should be labeled controversial for other arguments that I think are valid"

The definition of “controversial” is “giving rise or likely to give rise to controversy or public disagreement”. The definition of “controversy” is “prolonged public disagreement or heated discussion”. This unusually active thread is, quite clearly, an example of “prolonged public disagreement or heated discussion”.

I think if the only thing claiming controversy were the article, it might make sense to call that a fabricated/false claim by an outside journalist. But given this post, the fact that many people either disapprove of or want to avoid Manifest, and the fact that Austin writes about consciously deciding to invite people they thought were edgy, I think it's actually just a reasonable description.

And there's a disanalogy there. Racism is about someone's beliefs and behaviors, and I can't change someone else's with a label. But controversy means peop...

I think controversial is a totally fair and accurate description of the event given that it was the subject of a very critical story from a major newspaper, which then generated lots of heated commentary online.

No, this argument is importantly invalid.

- It was not a "critical story". It was a hit piece engineered to cause reputational damage. This distinction really matters. (For people who wanted more receipts than my above comment about the adversarial intent, the journalist behind the article now also has sent a cryptic message eerily similar

What would you suggest as an alternative title? I don't feel very strongly about that particular choice of word and would be happy to change the title.

I considered changing the title to "My experience with racism at Manifest 2024", but that feels like it might invite low quality discussion and would probably be bad.

(Side note regarding the karma system and this comment being at like -30: I think the right response to this comment is "upvote, because it concretely and clearly answers the question, and then if you also disagree, use the 'disagree' react button". It's not a bad contribution to the discourse, and bad contributions are what I think the downvote button should be used for.)

(Edit: the comment is at +26 now. Karma here really is a rollercoaster, lol.)

Thanks for the reply, it feels like you're engaging in good faith and I really appreciate that!

Brief notes --

- The word "controversy": Thanks. I think the issue with some of these media things is that they feed off of themselves. Something becomes a controversy merely because everyone believes it's controversial; even though it really might not have to be. (For a longer explanation of this phenomenon, search for "gaffe" here)

- People you met: I believe you that you met people who were into HBD. I saw at least one comment in Manifest discord last year tha

I agree that framing is a bit intense, but noting that:

- He mentions "I’ve long expressed my disgust at how Lightcone/Manifold indulge abhorrent ideas and people, both while I was at CEA and after."

- The opinions are sometimes really just wrappers around imperative claims ("I think that... you ought to X")

- He also appears to support the journalistic methodology of the Guardian piece. That piece, of course, is not expressing opinions; it is adversarially designed to cause reputational damage.

I've seen Shakeel's comments in other places before, and I used t...

For those not on Twitter, note that the above commenter seems to be pursuing an active campaign against Manifold and Lightcone. See these two twitter threads [1], [2].

Quotes include being "glad to see" the Guardian running their piece and being "delighted" with the part on Brian Chau, "a big chunk of the rationalist community is just straight up racist", and "the entire 'truth seeking' approach is often a thinly veiled disguise for indulging in racism."

Yeah. I am aware of the story. (I was in fact the person who made this site, together with my colleague Ben.) Updated my comment for clarity.

(For people who don't know all the details: Scott didn't just voluntarily doxx himself. He only did it in a kind of judo-move response to the New York Times informing him they were going to proceed with doxxing him, against his repeatedly strongly expressed wishes.)

So, I downvoted this post, and wanted to explain why.

First, though, I'd like to acknowledge that Manifest sure seems by far the most keen to invite "edgy" speakers out of any Lighthaven guests. Some of them seem like genuinely curious academics with interests bound to get them into trouble (like Steve Hsu), whereas others seem to be edgy for the sake of edginess, in a way that often makes me cringe (like Richard Hanania last year). It seems totally fair to discuss what's up with that speaker choice.

However, the way you engage in that discussio...

I think controversial is a totally fair and accurate description of the event given that it was the subject of a very critical story from a major newspaper, which then generated lots of heated commentary online.

And just as a data point, there is a much larger divide between EAs and rationalists in NYC (where I've been for 6+ years), and I think this has made the EA community here more welcoming to types of people that the Bay has struggled with. I've also heard of so many people who have really negative impressions of EA based on their experiences in...

Thanks for your comment! I think most of these issues stem from the fact that I am not a very good writer or communicator, and because I tried to be funny at the same time. I hope you can cut me some slack, like you said. Rest assured I didn't write this post as a bad-faith hit piece, but as a collection of grievances that expand upon some of the core claims The Guardian article made. I am quite a conflict-averse person, so doing this in the first place is pretty nerve-wracking, and I'm sure I made a bunch of mistakes or framed things in a sub-optimal ...

they're happy to collaborate with parts of the community that shares enough of their worldview/assumptions

I work at Lightcone and with Lighthaven, and I'm mostly happy to engage in positive-sum economic trade with anyone regardless of worldview :) I'm hoping we can rent space to orgs both in the community and beyond, simply as a good trade that helps us both, regardless of whether we agree on other matters.

The worldview alignment mostly affects where we'd spend our limited ability to subsidize events or run them below cost!

To really make this update, I'd want some more bins than the ones Nuno provides. That is, there could be an "extremely more successful than expected" bin, and all that matters is whether you manage to get any grant into that bin.

(For example, I think Roam got a grant in 2018-2019, and they might fall in that bin, though I haven't thought a lot about it.)

Counterpoint: yes, Facebook has lots of public image issues. As a result, we have good evidence that they're an org that's unusually resistant to such problems!

They've been having scandals since they were founded. And in spite of all the things you mention, their market cap has almost doubled since the bottom of the Cambridge Analytica fall-out.

They're also one of the world's most valuable companies, and operate in a sector (software) that on an inside view seems well poised to do well in future (unlike, say, Berkshire Hathaway, ...

This was really interesting to forecast! Here's my prediction, and my thought process is below. I decomposed this into several questions:

- Will OpenAI commercialize the API?

- 94% – this was the intention behind releasing the API, although the potential backlash adds some uncertainty [1]

- When will OpenAI commercialize the API? (~August/September)

- They released the API in June and indicated a 2 month beta, so it would begin generating revenue in August/September [2]

- Will the API reach $100M revenue? (90%)

- Eliezer is willing to bet there’ll be 1B i

I'm also posting a bounty for suggesting good candidates: $1000 for successful leads on a new project manager; $100 for leads on a top 5 candidate

DETAILS

I will pay you $1000 if you:

- Send us the name of a person…

- …who we did not already have on our list…

- …who we contacted because of your recommendation...

- ...who ends up taking on the role

I will pay you $100 if the person ends up among the top 5 candidates (by our evaluation), but does not take the role (given the other above constraints).

There’s no requirement for you to ...
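The payout conditions above can be written as a small decision function. This is an illustrative sketch only: the `Lead` fields and the `bounty_payout` name are hypothetical, and it reads "given the other above constraints" as meaning the $100 tier also requires the person to be new to our list and contacted because of your recommendation.

```python
from dataclasses import dataclass


@dataclass
class Lead:
    # Hypothetical fields mirroring the bounty conditions.
    new_to_us: bool                      # not already on our list
    contacted_because_of_referral: bool  # we reached out due to your recommendation
    took_role: bool                      # ended up taking on the role
    top_five: bool                       # among our top 5 candidates by our evaluation


def bounty_payout(lead: Lead) -> int:
    """Return the bounty in dollars for a single referred person."""
    # Both tiers share these preconditions.
    if not (lead.new_to_us and lead.contacted_because_of_referral):
        return 0
    if lead.took_role:
        return 1000  # a hire pays $1000, not $1000 + $100
    if lead.top_five:
        return 100   # top 5 but did not take the role
    return 0


print(bounty_payout(Lead(True, True, False, True)))
```

The example prints `100`: a new, referral-driven lead who made the top 5 but didn't take the role.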

In addition, I'll mention:

- Foretold is tracking ~20 questions and is open to anyone adding their own, but doesn't have very many predictions.

- In addition to the one you mentioned, Metaculus is tracking a handful of other questions and has a substantial number of predictions.

- The Johns Hopkins disease prediction project lists 3 questions. You have to sign up to view them. (I also think you can't see the crowd average before you've made your prediction.)

Here's a list of public forecasting platforms where participants are tracking the situation:

Foretold is tracking ~20 questions and is open to anyone adding their own, but doesn't have very many predictions.

Metaculus is tracking a handful of questions and has a substantial number of predictions.

The Johns Hopkins disease prediction project lists 3 questions. You have to sign up to view them. (I also think you can't see the crowd average before you've made your prediction.)

This set-up does seem like it could be exploitable in an adversarial manner... but my impression from reading the poll results is that they're weak evidence against that actually being a failure mode, since it doesn't seem to have happened.

I didn't notice any attempts to frame a particular person multiple times. The cases where there was repeated criticism of some orgs seemed to plausibly come from different accounts, since they often offered different reasons for the criticism or seemed stylistically different.

Moreover, if asked beforehand ...

If there were a way to do this with those opinions laundered out, then I wouldn't have a problem.

I interpret [1] you here as saying "if you press the button of 'make people search for all their offensive and socially disapproved beliefs, and collect the responses in a single place' you will inevitably have a bad time. There are complex reasons lots of beliefs have evolved to be socially punished, and tearing down those fences might be really terrible. Even worse, there are externalities such that one person saying something crazy is goi...

Why do you think this is better than encouraging people to join foretold.io as individuals? Do you think that we are lacking an institution or platform which helps individuals to get up to speed and interested in forecasting (so that they are good enough that foretold.io provides a positive experience)?

I'm not sure if the group should fully run the tournaments, as opposed to just training a local team, or having the group leader stay in some contact with tournament organisers.

Though I have an intuition that some support from a local group might make ...

A while back, habryka and I put up a bounty for people to compile a systematic list of social movements and their fates, with some interesting results. You can find it here.

How would this be an "internal practice"? The only way this would work would be to have people publicly post their earn addresses.

"Internal" in the sense of being primarily intended to solve internal coordination problems and primarily used in messaging within the community.

I think you underrate the cost of weirdness.

You gave a particular example of a causal pathway by which weirdness leads to bad stuff, but it doesn't really cause me to change my mind because I was already aware of it as a failure mode. What makes you think I u...

It's not clear to me that we are in a mess.

Well, that's why I'm posting this -- to get some data and find out :)

(I guess the title turned a few people off, though.)

In hindsight, I should have made the intended use-cases clearer in the post. I optimised for shipping it fast rather than not at all, but that had its costs.

I wrote this basically entirely because of problems I've encountered myself.

For example, I’ve spent this year trying to build an AI forecasting community, and faced the awkward problem ...

This is super helpful, thanks (and that's a really awesome list of email hygiene tips, I've saved it).

I wonder whether educating and encouraging good email hygiene could be an easier solution (at least initially).

I think it would improve things on the margin, and also has a much smaller risk of landing us in a worse equilibrium, so it seems robustly good for people to do.

Still, I'm not super excited because if you believe that the initial mess is a coordination problem, the solution is not for individuals to put in lots of effort to be h...

It's not clear to me that we are in a mess. The only actual example you gave was a spammy corporate newsletter, which seems irrelevant.

This might look as follows: Lots of people write to senior researchers asking for feedback on papers or ideas, yet they’re mostly crackpots or uninteresting, so most stuff is not worth reading. A promising young researcher without many connections would want their feedback (and the senior researcher would want to give it!), but it simply takes too much effort to figure out that the paper is promising, so it never gets...

On the topic of weirdness: I expect that if what I'm pointing to is a real problem, and paid emails would help the situation, then the benefits from becoming more effective at coordinating internally would massively outweigh reputational risks from increased weirdness.

I find it somewhat hard to elucidate the reasons I believe this (though could try if you'd want me to), but some hand-wavy examples are Paul Graham's thoughts that it's almost always a mistake for startups to worry about competitors as opposed to focusing on building a go...

I think the way to answer the question is: "given the distribution of equilibria we expect following this change, what are the expected costs and benefits, and how does that compare with the costs and benefits under the current equilibrium?" (as well as considering strategic heuristics like avoiding irreversible actions and unilateralist action.)

I don't update much on your comment since it feels like it's just pointing out a bunch of costs under a particular new equilibrium, without engaging enough with how likely this is or what the be...

This was crossposted to LessWrong, replacing all the mentions of "EA" with "rationality", mutatis mutandis.

(Instead of making all comments in both places, I'll continue the discussion over at LessWrong: https://www.lesswrong.com/posts/i5pccofToYepythEw/against-aschenbrenner-how-situational-awareness-constructs-a#Hview8GCnX7w4XSre)