Michael Townsend🔸

Bio

Program Associate on Open Phil's Global Catastrophic Risks Capacity Building team.

🔸 GWWC Pledger


It seems pretty hard to argue with the idea that:

- You're more informed about what you're giving up if you take the 10% Pledge after having worked for 1–5 years,

- and it's generally a good thing to be informed before you make life-long commitments!

But even still, I think being an undergrad at university is a pretty great time to take the 10% Pledge. Curious what you/others think of the arguments here.

One thought is: why should the default be to keep 100% of your income? The world seems on fire, money seems like it can do a lot of good, and the people we're talking about pledging are likely pretty rich. I think in almost all cases, if you're among the richest few percent of the world, it's the right thing to do to give 10% (or more) of your income to effective charities. And I think that, even while you're an undergrad, there's a good chance you can know with very high confidence you're going to end up being among the richest few percent — assuming you're not there already.

An analogy could be raising children as vegetarians/vegans. I think this is a totally justified thing to do, and I personally wish I was raised vegetarian/vegan, so that I never craved meat. Some think that it's not fair to impose this dietary restriction on a child who can't make an informed choice, and it'd be better to only suggest they stop eating meat after they know what it tastes like. But given that eating meat is wrong (and it's also an imposition on the child to make them eat meat before they know that it is wrong!) I don't think being an omnivore should be the default.

There's admittedly an important disanalogy here: the parent isn't making a lifelong commitment on behalf of the child. But I think it still gets at something. At least from my own experience, I feel like I benefited a lot from taking the 10% Pledge while at uni. If I hadn't, I think there's a good chance my commitment to my values could have drifted, and on top of that, every time I did give, it'd have felt like a big, voluntary/optional cost. Whereas now, it feels like a default, and I don't really know what it's like to do things another way (something I value!).

That said, I think it's pretty bad if anyone — but perhaps especially undergrads/people who are less sure what they're signing up to — feels like they were pressured to make such a big commitment. And in general, it's a rough situation if someone regrets it. But I've previously seen claims that undergrads pledging is bad, and should be discouraged, which seems like a step too far, and is more what I had in mind when I wrote this (sorry if that's not really making contact with your own views, Neel!).

Just speaking for myself, I'd guess those would be the cruxes, though I don't personally see easy fixes. I also worry that you could err on the side of being too cautious, by potentially adding warning labels that give people an overly negative impression compared to the underlying reality. I'm curious if there are examples where you think GWWC could strike a better balance.

I think this might be symptomatic of a broader challenge for effective giving for GCR, which is that most of the canonical arguments for focusing on cost-effectiveness involve GHW-specific examples that don't clearly generalize to the GCR space. But I don't think that indicates you shouldn't give to GCR, or care about cost-effectiveness in the GCR space. From a very plausible worldview (or at least, the worldview I have!), the GCR-focused funding opportunities are the most impactful funding opportunities available. It's just that the kind of reasoning underlying those recommendations/evaluations is quite different.

(I no longer work at GWWC, but wrote the reports on the LTFF/ECF, and was involved in the first round of evaluations more generally.)

In general, I think GWWC's goal here is "to support donors in having the highest expected impact given their worldview", which can come apart from supporting donors to give to the most well-researched/vetted funding opportunities. For instance, if you have a longtermist worldview, or perhaps take AI x-risk very seriously, then I'd guess you'd still want to give to the LTFF/ECF even if you thought the quality of their evaluations was lower than GiveWell's.

Some of this is discussed in "Why and how GWWC evaluates evaluators" in the limitations section:

Finally, the quality of our recommendations is highly dependent on the quality of the charity evaluation field in a cause area, and hence inconsistent across cause areas. For example, the state of charity evaluation in animal welfare is less advanced than that in global health and wellbeing, so our evaluations and the resulting recommendations in animal welfare are necessarily lower-confidence than those in global health and wellbeing.

And also in each of the individual reports, e.g. from the ACE MG report:

As such, our bar for relying on an evaluator depends on the existence and quality of other donation options we have evaluated in the same cause area.

- In cause areas where we currently rely on one or more evaluators that have passed our bar in a previous evaluation, any new evaluations we do will attempt to compare the quality of the evaluator’s marginal grantmaking and/or charity recommendations to those of the evaluator(s) we already rely on in that cause area.

- For worldviews and associated cause areas where we don’t have existing evaluators we rely on, we expect evaluators to meet the bar of plausibly recommending giving opportunities that are among the best options for their stated worldview, compared to any other opportunity easily accessible to donors.

First just wanted to say that this:

In my first year after taking the pledge, I gave away 20% of my income. However I had been able to save and invest much of my disposable income from my relatively well paid career before taking the pledge and so had built up strong financial security for myself and my family. As a result, I increased my donations over time and since 2019, have given away 75% of my income.

...is really inspiring :).

I'm interested in knowing more about how Allan decides where to donate. For example:

I currently split my donations between the Longview Philanthropy Emerging Challenges Fund and the Long Term Future Fund- I believe in giving to funds and letting experts with much more knowledge than me identify the best donation opportunities.

How did Allan arrive at this decision, and how confident does he feel in it? Also, how connected does Allan feel with other EtG'ers who are giving similar amounts based on a similar worldview?

Just on this point:

Relatedly, it feels like this is not what the username field is for. If I'm interacting with someone on some topic unrelated to my advocacy it feels intrusive and uncooperative to be bringing it into the conversation

I think this argument might have a lot of power among folks who tend to think of social norms in quite explicit/analytical terms, and who put a lot of emphasis on being cooperative. But I suspect relatively few people will see this as uncooperative/intrusive, because the pin and the idea it's advocating are pretty non-offensive.

Luke, thank you for everything you've done for GWWC and the world.

I don’t think many people get to meet someone with such extraordinary levels of care, both for those far away in space/time, and loved ones nearby. While I was working at GWWC, Luke’s most common reason to take a day off was to help someone move house. Luke, your kindness, integrity and commitment are contagious — even with you no longer at the organization, those virtues will stay with GWWC in large part because of how you demonstrated them.

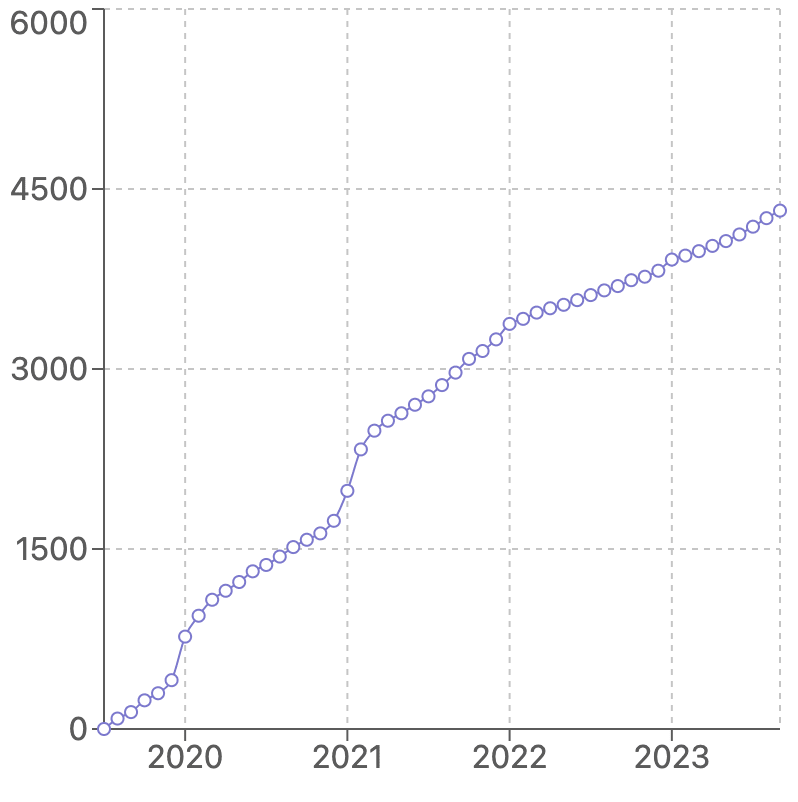

Here’s a graph of new 10% Pledgers since Luke joined GWWC.[1]

[1] Courtesy of Claude… though my critical feedback for it is that it makes it look like 2024 hasn’t happened yet, and that Luke joined in 2019. Both false!

Hi Vasco, not all organisations gave permission to have their names shared, but it includes many of the fundraising organisations on this list.

CEA: now EA-adjacent!