All of RyanCarey's Comments + Replies

Any updates on this, now that a couple of years have passed? Based on the website, I guess you decided not to hire a chair in the end? Also, was there only $750k granted in 2025?

Another relevant comment:

Overall, a nice system card for Opus 4! It's a strange choice to contract Apollo to evaluate sabotage, get feedback that "[early snapshot] schemes and deceives at such high rates that we advise against deploying this model...." and then not re-contract Apollo for final evals.

I think we should keep our eye on the most important role that online EA (and adjacent) platforms have played historically. Over the last 20 years, there have always been one or two key locations for otherwise isolated EAs and utilitarians to discover like-minded people online, get feedback on their ideas, and then become researchers or real-life contributors. Originally, it was (various incarnations of) Felicifia, and then the EA Forum. The rationalist community benefited in similar ways from the Extropians mailing list, SL4, Overcoming Bias and LessWrong. The sheer ...

How is EAIF performing in the value proposition that it provides to funding applicants, such as the speed of decisions, responsiveness to applicants' questions, and applicants' reported experiences? Historically, your sister fund treated applicants pretty badly, and some were really turned off by the experience.

I guess a lot of these faulty ideas come from the role of morality as a system of rules for putting up boundaries around acceptable behaviour, and for apportioning blameworthiness more so than praiseworthiness. Just as the legal system usually gives individuals freedom so long as they're not doing harm, our moral system mostly speaks to harms (rather than benefits) from actions (rather than inaction). By extension, the basis of the badness of these harms has to be a violation of "rights" (things that people deserve not to have done to them). Insofar ...

Yeah, insofar as we accept biased norms of that sort, it's really important to recognize that they are merely heuristics. Reifying (or, as Scott Alexander calls it, "crystallizing") such heuristics into foundational moral principles risks a lot of harm.

(This is one of the themes I'm hoping to hammer home to philosophers in my next book. Besides deontic constraints, risk aversion offers another nice example.)

Another relevant dimension is that the forum (and Groups) are the most targeted at EAs, so they will be most sensitive to fluctuations in the size of the EA community, whereas 80k will be the least sensitive, and Events will be somewhere in between.

Given this and the sharp decline in applications to events, it seems like the issue is really a decrease in the size of, or enthusiasm within, the EA community, rather than anything specific to the forum.

I think the core issue is that the lottery wins you government dollars, which you can't actually spend freely. Government dollars are simply worth less, to Pablo, than Pablo's personal dollars. One way to see this is that if Pablo could spend the government dollars on the other moonshot opportunities, then it would be fine that he's losing his own money.

So we should stipulate that after calculating abstract dollar values, you have to convert them, by some exchange rate, to personal dollars. The exchange rate simply depends on how much better the opportunit...

It sounds like you would prefer the rationalist community prevent its members from taking taboo views on social issues? But in my view, an important characteristic of the rationalist community, perhaps its most fundamental, is that it's a place where people can re-evaluate the common wisdom, with a measure of independence from societal pressure. If you want the rationalist community (or any community) to maintain that character, you need to support the right of people to express views that you regard as repulsive, not just the views that you like. This could be different if the views were an incitement to violence, but proposing a hypothesis for socio-economic differences isn't that.

Well, it's complicated. I think in theory these things should be open to discussion (see my point on moral philosophy). But now suppose, hypothetically, that there were incontrovertible scientific evidence that Group A is less moral or capable than Group B. We should still absolutely champion the view that wanting to ship Group A into camps and exterminate them is barbaric and vile, and that instead the humane and ethical thing to do is help Group A compensate for their issues and flourish to the best of their capabilities (after all, we generally hold this v...

In my view, what's going on is largely these two things:

[rationalists etc] are well to the left of the median citizens, but they are to the right of [typical journalists and academics]

Of course. And:

biodeterminism... these groups are very, very right-wing on... eugenics, biological race and gender differences etc.-but on everything else they are centre-left.

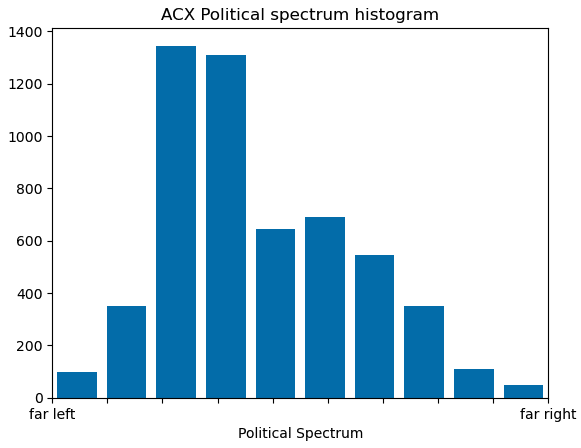

Yes, ACX readers do believe that genes influence a lot of life outcomes, and favour reproductive technologies like embryo selection, which are right-coded views. These views are actually not restr...

This was just a "where do you rate yourself from 1-10" type question, but you can see more of the questions and data here.

I think the trend you describe is mostly an issue with "progressives", i.e. "leftists", rather than an issue for all those left of centre. And the rationalists don't actually lean right in my experience. They average out as more like anti-woke and centrist. The distribution in the 2024 ACX survey below has perhaps a bit more centre-left and a bit less centre and centre-right than the rationalists at large, but not by much, in my estimation.

There is one caveat: if someone acting on behalf of an EA organisation truly did something wrong which contributed to this fraud, then obviously we need to investigate that. But I am not aware of any evidence to suggest that happened.

I tend to think EA did. Back in September 2023, I argued:

...EA contributed to a vast financial fraud, through its:

- People. SBF was the best-known EA, and one of the earliest 1%. FTX’s leadership was mostly EAs. FTXFF was overwhelmingly run by EAs, including EA’s main leader, and another intellectual leader of EA.

- R

This is who I thought would be responsible too, along with the CEO of CEA, to whom they report (and those working for the FTX Future Fund, although their conflicts of interest mean they can't give an unbiased evaluation). But since the FTX catastrophe, the community health team has apparently broadened its mandate to include "epistemic health" and "Special Projects", rather than narrowing it to focus just on catastrophic risks to the community, which would seem to make EA less resilient in one regard than it was before.

Of course I'm not necessarily saying th...

Surely one obvious person with this responsibility was Nick Beckstead, who became President of the FTX Foundation in November 2021. That was the key period where EA partnered with FTX. Beckstead had long experience in grantmaking, credibility, and presumably incentive/ability to do due diligence. Seems clear to me from these podcasts that MacAskill (and to a lesser extent the more junior employees who joined later) deferred to Beckstead.

In summarising Why They Do It, Will says that, usually, most fraudsters aren't just "bad apples" or doing "cost-benefit analysis" on their risk of being punished. Rather, they fail to "conceptualise what they're doing as fraud". And that may well be true on average, but we know quite a lot about the details of this case, which I believe point us in a different direction.

In this case, the other defendants have said they knew what they were doing was wrong: that they were misappropriating customers' assets and investing them. That weighs somewhat against ...

(This comment is basically just voicing agreement with points raised in Ryan’s and David’s comments above.)

One of the things that stood out to me about the episode was the argument[1] that working on good governance and working on reducing the influence of dangerous actors are mutually exclusive strategies, and that the former is much more tractable and important than the latter.

Most “good governance” research to date also seems to focus on system-level interventions,[2] while interventions aimed at reducing the impacts of individuals...

Quote: (and clearly they calculated incorrectly if they did)

I am less confident that, if an amoral person applied cost-benefit analysis properly here, it would lead to "no fraud" as opposed to "safer amounts of fraud." The risk of getting busted from less extreme or less risky fraud would seem considerably less.

Hypothetically, say SBF misused customer funds to buy stocks and bonds, and limited the amount he misused to 40 percent of customer assets. He'd need a catastrophic stock/bond market crash, plus almost all depositors wanting out, to be unable to hon...
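To make the arithmetic of this hypothetical concrete, here is a minimal Python sketch of a stylised balance sheet. It assumes (a simplification not stated in the comment) that the untouched deposits sit in cash and that the misused share can be sold at its marked value after a given market drawdown; it ignores fees, illiquidity and fire-sale effects. The function name is hypothetical.

```python
def coverable_withdrawal_fraction(misused_fraction, drawdown):
    """Share of customer deposits a stylised exchange could still pay out.

    Hypothetical simplification: (1 - misused_fraction) of deposits is held
    as cash; the misused share sits in stocks/bonds that have lost `drawdown`
    of their value and can be sold at that marked price.
    """
    if not (0 <= misused_fraction <= 1 and 0 <= drawdown <= 1):
        raise ValueError("fractions must lie in [0, 1]")
    cash = 1 - misused_fraction
    investments = misused_fraction * (1 - drawdown)
    return cash + investments


# 40% misused, then a 50% market crash: withdrawals up to 80% can be honoured.
print(round(coverable_withdrawal_fraction(0.4, 0.5), 2))  # 0.8
```

Under these assumptions, even a total wipeout of the invested share leaves 60% of deposits covered, which is why the scenario needs both a severe crash and a near-total run.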

Great comment.

Will says that, usually, most fraudsters aren't just "bad apples" or doing "cost-benefit analysis" on their risk of being punished. Rather, they fail to "conceptualise what they're doing as fraud".

I agree with your analysis, but I think Will also sets up a false dichotomy. One's inability to conceptualize or realize that one's actions are wrong is itself a sign of being a bad apple. To simplify a bit, at one end of the "high integrity to really bad" continuum, you have morally scrupulous people who constantly wond...

I think you can get closer to dissolving this problem by considering why you're assigning credit. Often, we're assigning some kind of finite financial reward.

Imagine that a group of n people have all jointly created $1 of value in the world, and that if any one of them did not participate, there would be $0 of value. Clearly, we can't give $1 to all of them, because then we would be paying $n to reward an event that only created $1 of value, which is inefficient. If, however, only the first guy (i=1) is an "agent" that responds to incenti...
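A minimal Python sketch of this example, assuming the stated all-or-nothing value function: it credits each person with their counterfactual impact (the value lost if they alone dropped out) and shows that these credits sum to $n even though only $1 was created. The function name is illustrative, not from the comment.

```python
def counterfactual_credit(n):
    """Credit each of n contributors with the value lost if they alone dropped out.

    Value function from the example: $1 is created only if all n people
    participate; any smaller group creates $0.
    """
    def value(participants):
        return 1.0 if len(participants) == n else 0.0

    everyone = frozenset(range(n))
    total = value(everyone)
    return [total - value(everyone - {i}) for i in range(n)]


credits = counterfactual_credit(5)
print(credits)       # [1.0, 1.0, 1.0, 1.0, 1.0]
print(sum(credits))  # 5.0, paid out for $1 of value actually created
```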

Hi Polkashell,

There are indeed questionable people in EA, as in all communities. EA may be worse in some ways, because of its utilitarian bent, and because many of the best EAs have left the community in the last couple of years.

I think it's common in EA for people to:

- have high hopes for EA and have them dashed when their preferred project is defunded, when a scandal breaks, and so on.

- burn out, after they give a lot of effort to a project.

What can make such events more traumatic is if EA has become the source of their livelihood, meaning, f...

Julia tells me "I would say I listed it as a possible project rather than calling for it exactly."

It actually was not just neutrally listed as a "possible" project, because it was the fourth bullet point under "Projects and programs we’d like to see" here.

It may not be worth becoming a research lead under many worldviews.

I'm with you on almost all of your essay, regarding the advantages of a PhD, and the need for more research leads in AIS, but I would raise another kind of issue - there are not very many career options for a research lead in AIS at present. After a PhD, you could pursue:

- Big RFPs. But most RFPs from large funders have a narrow focus area - currently it tends to be prosaic ML, safety, and mechanistic interpretability. And having to submit to grantmakers' research direction somewhat def

Thanks for engaging with my criticism in a positive way.

Regarding how timely the data ought to be, I don't think live data is necessary at all - it would be sufficient in my view to post updated information every year or two.

I don't think "applied in the last 30 days" is quite the right reference class, however, because, by definition, the averages will ignore all applications that have been waiting for over one month. I think the most useful kind of statistics would:

- Restrict to applications from n to n+m months ago, where n>=3

- Make a note of what percent
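A rough Python sketch of the first bullet, under stated assumptions: a month is treated as 30 days, each application is a hypothetical `(applied_on, decided_on)` pair with `decided_on = None` if still pending, and the sample data is made up. Reporting the share still undecided is an illustrative addition aimed at the concern above (slow cases dropping out of the average), not a reconstruction of the truncated second bullet.

```python
from datetime import date, timedelta
from statistics import mean, median

DAYS_PER_MONTH = 30  # simplifying assumption: treat every month as 30 days


def turnaround_stats(applications, today, n=3, m=3):
    """Summarise decision times for applications submitted n to n+m months ago.

    applications: list of (applied_on, decided_on) pairs, where decided_on is
    None for applications that are still waiting for a decision.
    """
    if n < 3:
        raise ValueError("n should be at least 3 months")
    newest = today - timedelta(days=n * DAYS_PER_MONTH)
    oldest = today - timedelta(days=(n + m) * DAYS_PER_MONTH)
    cohort = [(a, d) for a, d in applications if oldest <= a <= newest]
    waits = [(d - a).days for a, d in cohort if d is not None]
    undecided = len(cohort) - len(waits)
    return {
        "cohort_size": len(cohort),
        "mean_days": mean(waits) if waits else None,
        "median_days": median(waits) if waits else None,
        # Unlike a "last 30 days" average, slow cases stay visible here.
        "pct_undecided": 100 * undecided / len(cohort) if cohort else None,
    }


apps = [  # made-up example data
    (date(2023, 8, 1), date(2023, 8, 15)),   # decided in 14 days
    (date(2023, 9, 1), date(2023, 10, 1)),   # decided in 30 days
    (date(2023, 9, 10), None),               # still waiting
    (date(2023, 12, 1), date(2023, 12, 5)),  # too recent for the window
]
print(turnaround_stats(apps, today=date(2024, 1, 1)))
```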

I had a similar experience with LTFF: a four-month wait (the grant decision timelines on the website were uncalibrated) and unresponsiveness to email. I know a couple of people who had similar problems. I also found it pretty "disrespectful".

It's hard to understand a) why they wouldn't list the empirical grant timelines on their website, and b) why the timelines would have to be so long.

I think it could be good to put these numbers on our site. I liked your past suggestion of having live data, though it's a bit technically challenging to implement; the obvious MVP (as you point out) is to have a bunch of stats on our site. I'll make a note to add some stats (though maintaining this kind of information can be quite costly, so I don't want to commit to doing this).

In the meantime, here are a few numbers that I quickly put together (across all of our funds).

Grant decision turnaround times (mean, median):

- applied in the last 30 days = 14 d

There is an "EA Hotel", which is decently-sized, very intensely EA, and very cheap.

Occasionally it makes sense for people to accept very low cost-of-living situations. But a person's impact is usually a lot higher than their salary. Suppose that a person's salary is x, their impact in SF is 10x, and that impact is 1.1 times higher than it would be elsewhere, due to proximity to funders and AI companies. Then you would have to cut your costs by roughly 90% of your salary to make it worthwhile to live elsewhere. Otherwise, you would essentially be stepping over dollars to pick up dimes.
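The break-even figure can be checked with a short Python sketch. It assumes, as in the example, that impact in SF is `impact_multiple` times salary and is `sf_multiplier` times what the same person would achieve elsewhere; the function and parameter names are hypothetical.

```python
def break_even_saving(salary, impact_multiple=10.0, sf_multiplier=1.1):
    """Cost savings, as a fraction of salary, needed to justify leaving SF.

    Assumes impact in SF is impact_multiple * salary, and that it is
    sf_multiplier times what the same person would achieve elsewhere.
    """
    impact_in_sf = impact_multiple * salary
    impact_elsewhere = impact_in_sf / sf_multiplier
    return (impact_in_sf - impact_elsewhere) / salary


print(round(break_even_saving(100_000), 3))  # 0.909
```

Salary cancels out, so the break-even saving is `impact_multiple * (1 - 1/sf_multiplier)` of salary, about 91% here; a larger impact multiple or SF advantage pushes it above 100%.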

Of course, there are some theoretical reasons for growing fast. But theory only gets you so far on this issue. Rather, this question depends on whether growing EA is currently promising (I lean against) compared to other projects one could grow. Even if EA looks like the right thing to build, you need to talk to people who have seen EA grow and contract at various rates over the last 15 years, to understand which modes of growth have been healthier and have contributed to real gains in capacity, rather than just an increase in raw numbers. In my experience, one ...

Yes, they were involved in the first, small, iteration of EAG, but their contributions were small compared to the human capital that they consumed. More importantly, they were a high-demand group that caused a lot of people serious psychological damage. For many, it has taken years to recover a sense of normality. They staged a partial takeover of some major EA institutions. They also gaslit the EA community about what they were doing, which confused and distracted decent-sized subsections of the EA community for years.

I watched The Master a couple of mont...

Interesting point, but why do these people think that climate change is going to cause likely extinction? Again, it's because their thinking is politics-first. Their side of politics is warning of a likely "climate catastrophe", so they have to make that catastrophe as bad as possible - existential.

I think that disagreement about the size of the risks is part of the equation. But it's missing what is, for at least a few of the prominent critics, the main element: people like Timnit, Kate Crawford, and Meredith Whittaker are bought into leftie ideologies focused on things like "bias", "prejudice", and "disproportionate disadvantage". So they see AI as primarily an instrument of oppression. The idea of existential risk cuts against the oppression/justice narrative, in that it could kill everyone equally. So they have to oppose it.

Obviously this is not wha...

Hmm, OK. Back when I met Ilya, about 2018, he was radiating excitement that his next idea would create AGI, and didn't seem sensitive to safety worries. I also thought it was "common knowledge" that his interest in safety increased substantially between 2018 and 2022, and that's why I was unsurprised to see him in charge of superalignment.

Re Elon-Zillis, all I'm saying is that it looked to Sam like the seat would belong to someone loyal to him at the time the seat was created.

You may well be right about D'Angelo and the others.

- The main thing that I doubt is that Sam knew at the time that he was gifting the board to doomers. Ilya was a loyalist and non-doomer when appointed. Elon was I guess some mix of doomer and loyalist at the start. Given how AIS worries generally increased in SV circles over time, more likely than not some of D'Angelo, Hoffman, and Hurd moved toward the "doomer" pole over time.

Re 2: It's plausible, but I'm not sure that this is true. Points against:

- Reid Hoffman was reported as being specifically pushed out by Altman: https://www.semafor.com/article/11/19/2023/reid-hoffman-was-privately-unhappy-about-leaving-openais-board

- Will Hurd is plausibly quite concerned about AI Risk[1]. It's hard to know for sure because his campaign website is framed in the language of US-China competition (and has unfortunate-by-my-lights suggestions like "Equip the Military and Intelligence Community with Advanced AI"), but I think a lot of the pr

Causal Foundations is probably 4-8 full-timers, depending on how you count the small-to-medium slices of time from various PhD students. Several of our 2023 outputs seem comparably important to the deception paper:

- Towards Causal Foundations of Safe AGI, The Alignment Forum - the summary of everything we're doing.

- Characterising Decision Theories with Mechanised Causal Graphs, arXiv - the most formal treatment yet of TDT and UDT, together with CDT and EDT in a shared framework.

- Human Control: Definitions and Algorithms, UAI - a paper arguing that corrig

2 - I'm thinking more of the "community of people concerned about AI safety" than EA.

1,3,4- I agree there's uncertainty, disagreement and nuance, but I think if NYT's (summarised) or Nathan's version of events is correct (and they do seem to me to make more sense than other existing accounts), then the board look somewhat like "good guys", albeit ones that overplayed their hand, whereas Sam looks somewhat "bad", and I'd bet that over time, more reasonable people will come around to such a view.

It's a disappointing outcome - it currently seems that OpenAI is no more tied to its nonprofit goals than before. A wedge has been driven between the AI safety community and OpenAI staff, and to an extent, Silicon Valley generally.

But in this fiasco, we at least were the good guys! The OpenAI CEO shouldn't control its nonprofit board, or compromise the independence of its members, who were doing broadly the right thing by trying to do research and perform oversight. We have much to learn.

Hey Ryan :)

I definitely agree that this situation is disappointing, that there is a wedge between the AI safety community and Silicon Valley mainstream, and that we have much to learn.

However, I would push back on the phrasing “we are at least the good guys” for several reasons. Apologies if this seems nitpicky or uncharitable 😅 It just caught my attention, and I hoped to start a dialogue.

- The statement suggests we have a much clearer picture of the situation and factors at play than I believe anyone currently has (as of 22 Nov 2023)

- The “we” phrasing seem

Yeah I think EA just neglects the downside of career whiplash a bit. Another instance is how EA orgs sometimes offer internships where only a tiny fraction of interns will get a job, or hire and then quickly fire staff. In a more ideal world, EA orgs would value rejected & fired applicants much more highly than non-EA orgs, and so low-hit-rate internships, and rapid firing would be much less common in EA than outside.

It looks like, on net, people disagree with my take in the original post.

I just disagreed with the OP because it's a false dichotomy; we could just agree with the true things that activists believe, and not the false ones, and not go based on vibes. We desire to believe that mech-interp is mere safety-washing iff it is, and so on.

On the meta-level, anonymously sharing negative psychoanalyses of people you're debating seems like very poor behaviour.

Now, I'm a huge fan of anonymity. Sometimes, one must criticise some vindictive organisation, or political orthodoxy, and anonymity is needed to avoid unjust social consequences.

In other cases, anonymity is inessential. One wants to debate in an aggressive style, while avoiding the just social consequences of doing so. When anonymous users misbehave, we think worse of anonymous users in general. If people always write anonymously, the...

I'm sorry, but it's not an "overconfident criticism" to accuse FTX of investing stolen money, when this is something that 2-3 of the leaders of FTX have already pled guilty to doing.

This interaction is interesting, but I wasn't aware of it (I've only reread a fraction of Hutch's messages since knowing his identity) so to the extent that your hypothesis involves me having had some psychological reaction to it, it's not credible.

Moreover, these psychoanalyses don't ring true. I'm in a good headspace, giving FTX hardly any attention. Of course, I am not...

Creditors are expected by Manifold Markets to receive only 40c on each dollar that was invested on the platform (I didn't notice this info in the post when I previously viewed it). And we do know why there is money missing: FTX stole it and invested it in their hedge fund, which gambled it away.

There's also a fairly robust market for (at least larger) real-money claims against FTX, with prices around 35-40 cents on the dollar. I'd expect recovery to be somewhat higher in nominal dollars, because it may take some time for distributions to occur and that is presumably priced into the market price. (Anyone with a risk appetite for buying large FTX claims probably thinks their expected rate of return on their next-best investment choice is fairly high, implying a fairly high discount rate is being applied here.)
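The discounting point can be illustrated with a small Python sketch. It treats the claim price as the present value of a single future payout discounted at the buyer's required annual return; the 15% rate and two-year horizon below are made-up inputs for illustration, not figures from the comment, and the function name is hypothetical.

```python
def implied_nominal_recovery(price, annual_discount_rate, years_to_payout):
    """Nominal cents-on-the-dollar a buyer must expect in order to pay `price` today.

    Treats the claim price as the present value of one future payout,
    discounted at the buyer's required annual rate of return.
    """
    return price * (1 + annual_discount_rate) ** years_to_payout


# Hypothetical: a 40c claim, a buyer requiring 15%/yr, payout in 2 years.
print(round(implied_nominal_recovery(0.40, 0.15, 2), 3))  # 0.529
```

The higher the buyer's required return or the longer the expected delay, the further the expected nominal recovery sits above the traded price.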

Yeah, the cost of cheap shared housing is something like $20k/yr of 2026 dollars, whereas your impact would be worth a lot more than that, either because you are making hundreds of thousands of post-tax dollars per year, or because you're foregoing those potential earnings to do important research or activism. Van-living is usually penny-wise, but pound-foolish.