First, I want to say that this is not a post about how you should give. This is a post about spreading EA values in the non-EA community. It's about how even if you can't spread every EA value to every person, everyone can do good better. Without further ado...

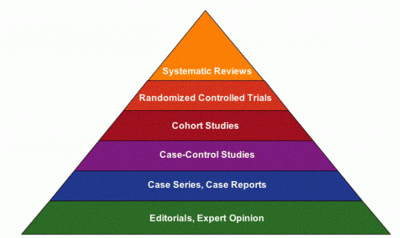

Cost-effective giving relies on evidence

If you're here, you know that a lot of people give to charities without doing research or considering impact data. Maybe they give based on "My friend runs it," or "My sister was affected by this issue." Maybe before you were an EA, this was also how you gave.

But no longer! Now you give to the charities with the Highest Cost-Effectiveness, not just in your community but In The World. And you know their cost-effectiveness because the charity collected lots of Data in the form of Numbers.

If you're here, I doubt you have a large portion of your budget directed toward unquantified grassroots efforts in your community. But there's a good chance you've talked to someone outside of EA who does. (If you haven't, your community-building might be stuck in a bubble.) I bet you've wondered how you can possibly spread EA ideas to someone with that focus. I'm here to tell you that you can.

At my last non-profit, I gave the communications coordinator a copy of Doing Good Better. "This is cool stuff," she said, "but we work with a lot of people in Indigenous communities who don't do quantitative monitoring and evaluation. They want to give within their communities. But does it make sense to talk to them about effective giving at all?"

Yes.

The best evidence about grassroots charities might not be quantitative

No matter where they are, small communities dominated by grassroots initiatives have a couple of problems when it comes to quantitative data.

- Collecting quantitative data on your impact takes time, human resources, an understanding of methodology, and money. Small, poor, or rural communities don't always have these resources.

- Under the right conditions, you might have good reasons to trust expert testimony more than quantitative data.

What reasons are those? Expert testimony is a form of evidence with pros and cons, just like quantitative data. In ideal conditions, I won't tell you it's a better form of evidence...

But what if you're trying to compare among a group of initiatives with a much longer and richer history of informal observation than written/recorded data? What if you're comparing among a group of initiatives with a lot of intangible effects that couldn't be included in a model unless you had a lot of resources to spend on modelling?

Then, I think the expert testimony of a long-time community leader who has been watching the initiatives in your community for 70 years, and has talked to the people who have been affected by every initiative that is being run in that community, is a form of evidence you should use. It's evidence that's a hell of a lot more valuable than "My friend runs it," or "My sister was affected by this issue." Community leaders can tell you what intervention is helping the most people better than you can surmise on your own.

Community leaders in small, old, tight-knit communities (whether it's Indigenous communities in Canada like my colleague interfaces with, or rural communities in the US, or villages in Uganda like Anthony Kalulu supports) have not only their own lifetime of experience to draw from, but also the experience passed down from generations of community leaders before them, perhaps for centuries or millennia. If you ask them what works, they could give you a better, more reliable answer than a quantitative model -- if the only quantitative model they have access to has but a few months' worth of data collected under questionable conditions.

So if you're talking to someone who doesn't think they can consider effective giving when they support their community because of a lack of quantitative data, tell them this: non-quantitative evidence is evidence. It can tell you more about the cost-effectiveness within a group of grassroots organizations than not using evidence at all. And that can help you direct your giving to the most effective grassroots initiatives in your community!

Is this EA enough?

Look, not everyone is an EA. Not everyone has bought into every EA principle. And I'm not going to force them to. If someone can adopt the idea of caring about cost-effectiveness, even if they only apply this to their community and don't adopt the idea of giving to the very most in-need people in the world, that's an idea worth spreading.

It's an idea that as EAs, we should spread. When we are doing community building, we are not just trying to get people to identify as EAs. We are trying to get them to adopt one or more principles that help them do good better with their money and time.

I am not asking you to start giving to grassroots organizations in place of organizations with lots of monitoring and evaluation behind them.

But when you're talking to someone who wants to focus on supporting their community, don't cast judgment on them for not immediately adopting a global lens and every other EA value. And don't exclude them from the effective giving conversation just because their community doesn't have data-driven projects. The wisdom of their community leaders can be a powerful kind of evidence for them to look to so they can increase the effectiveness of their giving.

Strong upvote. I love this, and agree with the central thesis. In general I agree with taking whatever clear opportunities arise to increase the good done in the world, even if it isn't our primary thing.

Unfortunately, where quantitative models are available, even very poor ones, I find them (with great uncertainty) more convincing than community leader opinion.

"Community leaders in small, old, tight-knit communities (whether it's Indigenous communities in Canada like my colleague interfaces with, or rural communities in the US, or villages in Uganda like Anthony Kalulu supports) have not only their own lifetime of experience to draw from, but also the experience passed down from generations of community leaders before them, perhaps for centuries or millennia. If you ask them what works, they could give you a better, more reliable answer than a quantitative model -- if the only quantitative model they have access to has but a few months' worth of data collected under questionable conditions."

My experience (unfortunately) in Uganda doesn't corroborate this. I agree it's possible that they "could" give you a better answer than a quantitative model, but I don't think they usually do. It hurts me a little to confess that BOL Fermi-ish quantitative models (where possible), done by experienced people with expertise in the field, seem in my limited experience usually better than the thoughts of an experienced community leader.

But your general point still stands: influencing non-EA people, usually with non-quantitative data, to give and focus on better local causes could have great impact, often with little effort. There's also the chance of swinging people slowly towards more mainstream EA causes with this lighter-touch approach.

(Can't say enough how much I appreciate it when people take my words of uncertainty like "could" literally!) Indeed, in most situations I can think of, I'd prefer a quantitative model. Especially by an experienced expert! Would that it were always available. Thanks for your comment!