My primary aim in this post is to present two basic models of the development and future of “intelligence”, and to highlight the differences between these models. I believe that people’s beliefs about the future of AI and “AI takeoff scenarios” may in large part depend on which of these two simple models they favor most strongly, and hence it seems worth making these models more explicit, so that we can better evaluate and critique them.

Among the two models I present, I myself happen to consider one of them significantly more plausible, and I will outline some of the reasons why I believe that.

The models I present may feel painfully basic, but I think it can be helpful to visit these most basic issues, as it seems to me that much disagreement springs from there.

“AI skepticism”?

It might be tempting to view the discussion of the contrasting models below as a clash between the “AI priority” camp and the “AI skepticism” camp. But I think this would be inaccurate. Neither of the two models I present implies that we should be unconcerned about AI, or indeed that avoiding catastrophic AI outcomes should not be a top priority. Where the models will tend to disagree is more when it comes to what kinds of AI outcomes are most likely, as well as how we can best address risks of bad AI outcomes. (More on this below.)

Two contrasting definitions of “intelligence”

Before outlining the two models of the development and future of “intelligence”, it is worth first specifying two distinct definitions of “intelligence”. These definitions are important, as the two contrasting models that I outline below see the relationship between these definitions of intelligence in very different ways.

The two definitions are the following:

Intelligence 1: Individual cognitive abilities.

Intelligence 2: The ability to achieve a wide range of goals.

The first definition is arguably the common-sense definition of “intelligence”, and is often associated with constructs such as IQ and the g factor. The second definition is more abstract, and is inspired by attempts to provide a broad definition of “intelligence” (see e.g. Legg & Hutter, 2007).

At first glance, the difference between these two definitions may not be all that clear. After all, individual cognitive abilities can surely be classified as “abilities to achieve a wide range of goals”, meaning that Intelligence 1 can be seen as a subset of Intelligence 2. This seems fairly uncontroversial to say, and both of the models outlined below would agree with this claim.

Where substantive disagreement begins to enter the picture is when we explore the reverse relation. Is Intelligence 2 likewise a subset of Intelligence 1? In other words, are the two notions of “intelligence” virtually identical?

This is hardly the case. After all, abilities such as constructing a large building, or sending a spaceship to the moon, are not purely a product of individual cognitive abilities, even if cognitive abilities play crucial parts in such achievements.

The distance between Intelligence 1 and Intelligence 2 — or rather, how small or large of a subset Intelligence 1 is within Intelligence 2 — is a key point of disagreement between the two models outlined below, as will hopefully become clear shortly.

Two contrasting models of the development and future of “intelligence”

Simplified models at opposite ends of a spectrum

The two models I present below are extremely simple and coarse-grained, but I still think they capture some key aspects of how people tend to diverge in their thinking about the development and future of “intelligence”.

The models I present exist at opposite ends of a spectrum, where this spectrum can be seen as representing contrasting positions on the following issues:

- How large of a subset is Intelligence 1 within Intelligence 2? (Model 1: “Very large” vs. Model 2: “Rather modest”).

- How much of a bottleneck are individual human cognitive abilities to economic and technological growth? (Model 1: “By far the main bottleneck” vs. Model 2: “A significant bottleneck among many others”).

- How suddenly will the most growth-relevant human abilities be surpassed by machines? (Model 1: “Quite suddenly, perhaps in months or weeks” vs. Model 2: “Quite gradually, in many decades or even centuries”).

These three issues are not strictly identical, but they are nevertheless closely related, in that beliefs that lie at one end of the spectrum on one of these issues will tend to push beliefs toward the corresponding end of the spectrum on the other two issues.

Model 1

Model 1 says that individual human cognitive abilities are by far the main driver of, and bottleneck to, economic and technological progress. It can perhaps best be described with a few illustrations.

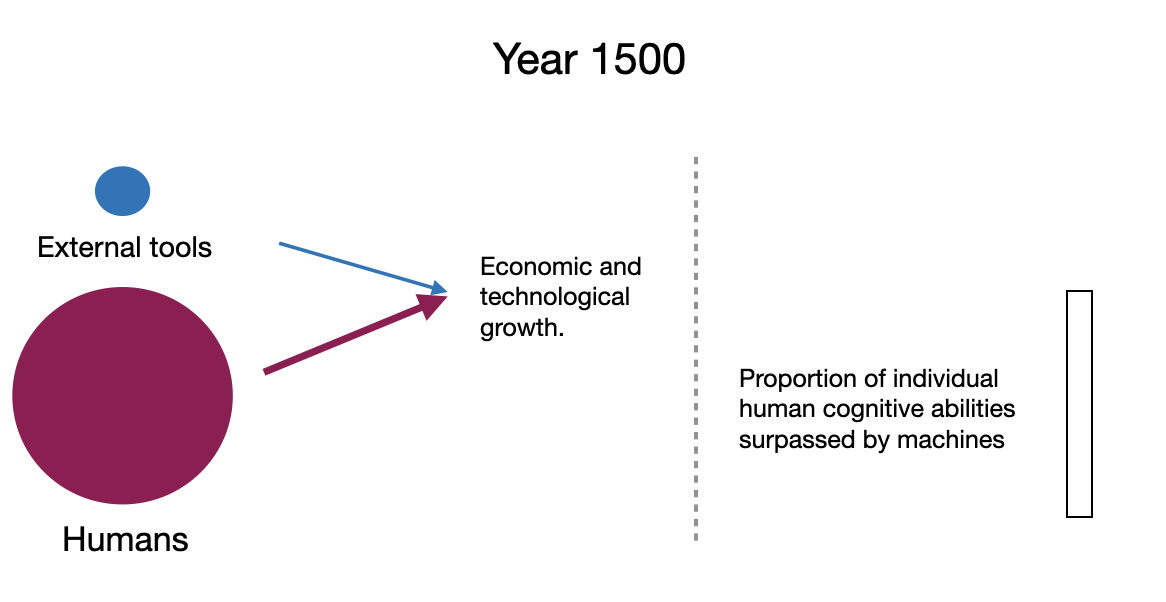

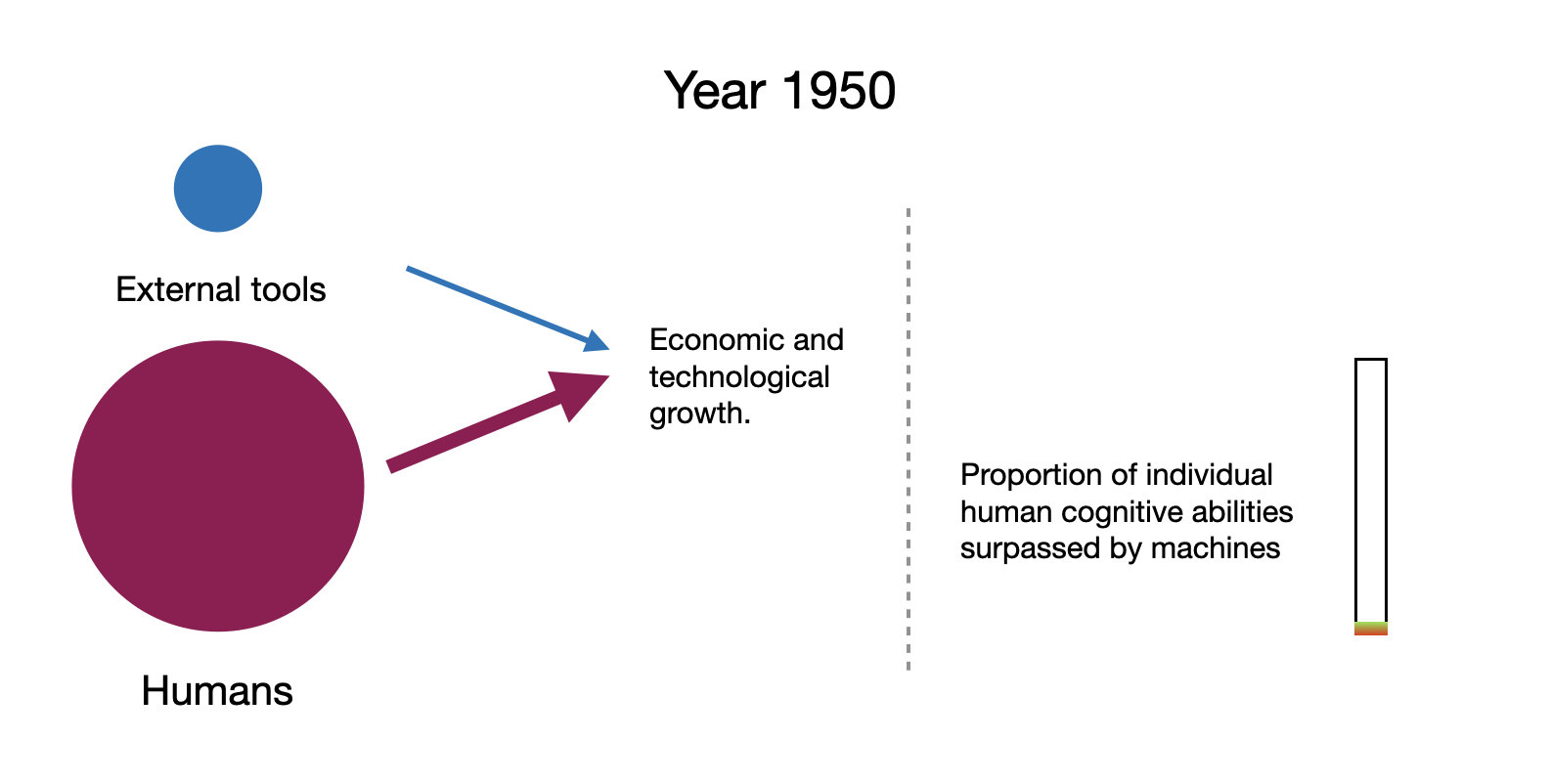

The following figures are meant to illustrate how Model 1 sees the respective contributions of humanity and humanity’s external tools to economic and technological progress over time. That is, the figures are meant to depict how much of a driver humans are in creating technological and economic growth compared to how much of a driver our external tools and technologies are. (The illustrations are not to scale in any sense.)

The bar to the right tracks how large a proportion of human cognitive abilities has been surpassed by machines at the time in question, since this is the key measure to keep track of for Model 1.

In 20.000 BC, we have relatively few tools and virtually no technological or economic progress (meant to be illustrated by the thin arrows). Machines do not surpass individual human cognitive abilities in any domain.

In 1500, we have more tools and far more humans — thousands of times more humans — and we have more technological and economic growth (though the global GDP per capita is still only estimated to be 50 percent higher than in 20.000 BC). Machines still do not surpass individual human cognitive abilities in any domain.

In 1950, we have considerably more tools and humans (more than five times as many humans as in 1500). We also have considerably more growth and wealth — global GDP per capita is estimated to be more than ten times as high as in 1500. Machines are beginning to surpass human cognitive abilities in a narrow range of domains, such as in calculations of the decimals of pi.

In 2020, we again have more tools, including advanced AI programs that surpass or are close to surpassing humans in many domains, e.g. AlphaZero, AlphaStar, and GPT-3. The human population has roughly tripled since 1950, and global GDP is more than ten times higher than in 1950.

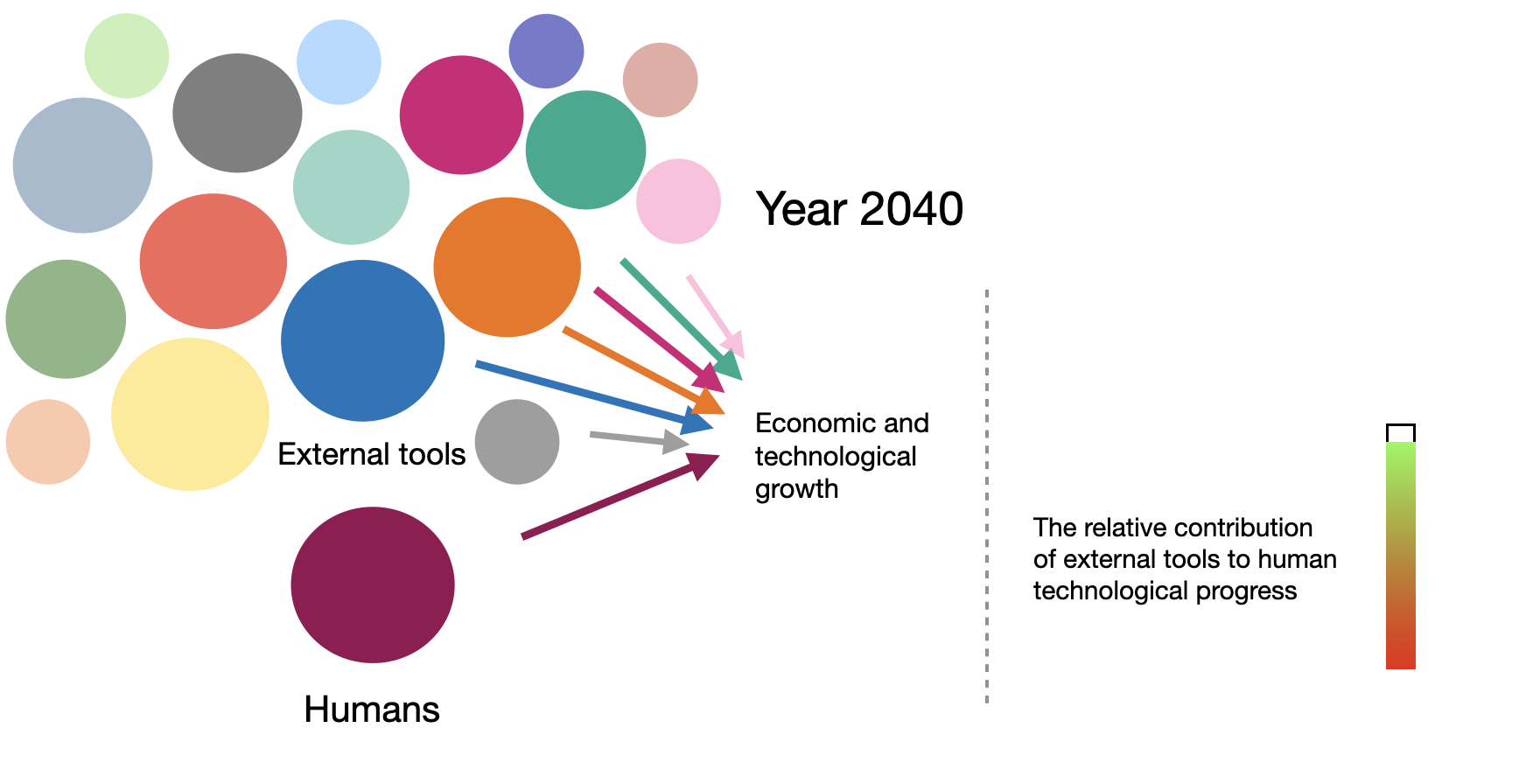

In 2040 (or within a few decades from now; the exact year is not important), Model 1 predicts that virtually all human cognitive abilities have been surpassed by AI systems. Importantly, and most distinctively, Model 1 predicts that once AI systems surpass humans on a sufficient set of cognitive abilities, human cognitive abilities will no longer be the main limiting factor to technological progress. The limiting factor will then be the AI systems themselves, which are continually improving themselves in ever more competent ways, in effect doubling the economy on a timescale of months or weeks, if not faster. The picture we started with has thus been reversed, and humans, if they are still around, are now but a tiny speck compared to the mushrooming Jupiter of AI.

Model 2

Model 2 disagrees with Model 1’s claim that individual human cognitive abilities are by far the main driver of, and bottleneck to, economic and technological progress. According to Model 2, there are many bottlenecks to economic and technological progress, of which human cognitive abilities are but one — an important one, to be sure, but not necessarily one that is more important than others.

Broadly speaking, Model 2 focuses more on Intelligence 2 (the ability to achieve goals in general) than on Intelligence 1 (individual human cognitive abilities), and it sees a large difference between the two.

Since Model 2 does not see progress as being chiefly bottlenecked by Intelligence 1, it is also less interested in tracking the extent to which machines surpass humans along the dimension of Intelligence 1. Instead, Model 2 is more interested in tracking how well our machines and tools do in terms of the broader notion of Intelligence 2. In particular, Model 2 considers it more important to track the relative contribution of our external tools to humanity’s ability to achieve goals in general, including the goal of creating technological progress.

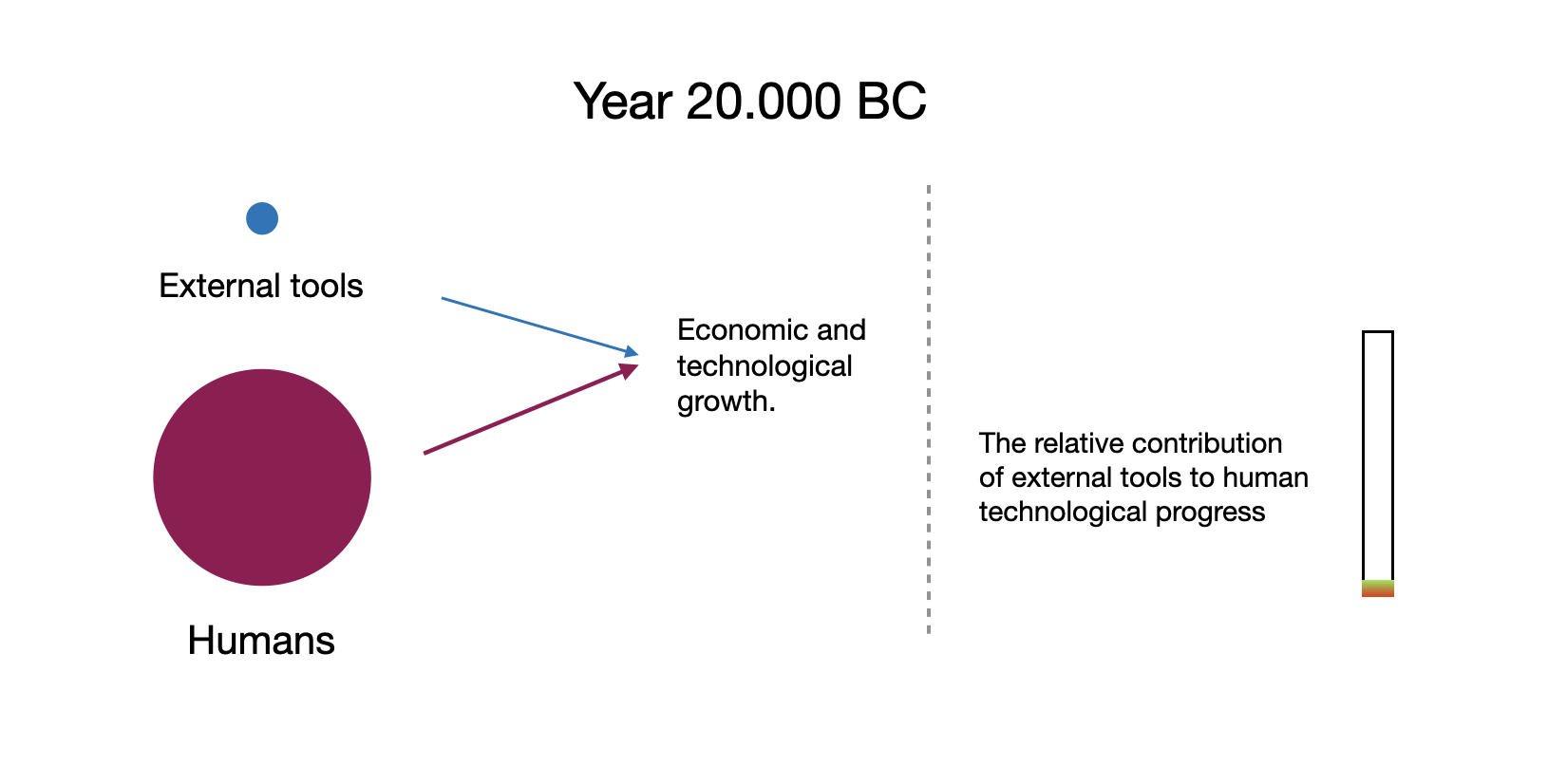

Thus, the following figures are meant to illustrate how Model 2 sees the respective contributions of humanity and external human tools to economic and technological progress over time. And the bar to the right now tracks the relative contribution of external tools to humanity’s technological progress.

As we can see, Model 2 is largely in agreement with Model 1 in the year 20.000 BC. The only difference is that Model 2 highlights how, in the very limited growth that occurred back then, humanity’s external tools already played a meaningful role. (Model 1 would obviously not disagree that there were contributions of this kind, but it sees these contributions as much less important than the question that is all-important for Model 1, namely how well our external tools match human cognitive abilities.)

In 1500, the differences between Model 1 and Model 2 begin to emerge. Model 2 includes many distinct classes of external tools, as it sees each of them as a significant bottleneck to progress. (Model 2 sees these bottlenecks as being more significant than does Model 1, and Model 2 therefore represents them more prominently.)

Examples of these tools include scientific instruments, tools for food harvesting, tools for mining, etc. Model 2 highlights the complex interplay of these many different classes of tools, and how the existing set of tools in large part determines what new tools can be built — hence the large relative contribution in the progress bar.

Furthermore, Model 2 stresses the point that the latest, most advanced technologies are, already at this stage, crucial components in the creation of the next generation of technology. There is thus already significant recursive self-improvement at this stage, only it is a recursive self-improvement that occurs gradually at the level of the larger set of tools, rather than at the level of a single tool that directly improves itself.

In 1950, we see a continuation of the preceding trend: our set of external tools has been expanded further, and has become an even greater factor in economic and technological growth. Unlike in 1500, humanity has now made inventions such as computers, telephones, and combustion engines, which in turn unlock new avenues of innovation.

Humans gradually begin to emerge as a node in a network of factors (a network of tools, broadly construed) that are driving technological growth. Humans are, of course, a critically important node in this network, yet the same is increasingly true of the other nodes in the network. For example, if we had instantly removed combustion engines or telecommunication from the world of 1950, economic and technological growth would have ground to a halt, and indeed turned negative, and it could not have resumed until these technologies (or their analogues) had been reinvented and widely redeployed.

In 2020, our interconnected web of tools has grown even larger, and our external tools likewise play a still greater role in the creation of new technologies. Humans now use computers and other machines on a vast scale, including when they create the next generation of technology. Machines thus effectively do a large share of the work in tech development — in the design of new technologies, in calculations of optimal solutions to technical problems, in transportation, in manufacturing, etc.

Of course, machines still do not perform this work wholly independently (even as many manufacturing systems are now practically fully automated). But this is a symmetric point, in that humans by no means perform the work independently either. Both humans and machines are necessary nodes in the network of tools that drive technological growth. And the core point on which Model 1 and Model 2 disagree is how large of a node — i.e. how large of a contributor and bottleneck — humans are compared to other nodes in this network. In 2020, Model 2 sees humans as a considerably more modest node in this network compared to Model 1 (even if Model 2 may still see humans as the single most significant node).

In 2040 (again, the exact year is not important), Model 2 predicts a very different outcome compared to Model 1. Where Model 1 predicts a growth explosion, Model 2 predicts roughly continuous technological progress along existing trend lines.

The reason for this divergence is that — unlike Model 1 — Model 2 does not see human cognitive abilities as the overriding bottleneck to technological progress, and hence it does not expect future progress in surpassing human cognitive abilities to be a master key that will unlock the floodgates to explosive technological growth.

To reiterate, Model 2 does expect continued growth, partly as a result of further progress in software that enables machines to surpass human cognitive abilities in ever more domains. But in terms of growth rates, Model 2 expects this future growth to largely resemble the technological growth that we have seen in past decades, in which machines have already been gradually surpassing humans in an ever wider range of tasks.

Model 2 sees many bottlenecks to technological growth (e.g. economic and technological bottlenecks in manufacturing and energy harvesting), and it expects these many bottlenecks to continue to exist — and indeed to become increasingly significant — if or when AI systems surpass 80, 90, or even 100 percent of human cognitive abilities.

Contrasting narratives centered on contrasting notions of “intelligence”

Broadly speaking, the two models outlined above can be seen as representing two different narratives of the development of “intelligence”. Model 1 is essentially the story of how machines came to surpass humans in terms of their individual cognitive abilities (i.e. “Intelligence 1”, the chief driver of growth according to Model 1) and thereby rapidly took off on their own.

Model 2, in contrast, is essentially the story of how humans created an ever larger and more intricate set of tools that in turn expanded technological civilization’s ability to achieve a wide range of goals — i.e. “Intelligence 2” — including the goal of creating further economic and technological growth. It is a story that sees the ability to create technological progress as not being chiefly determined by Intelligence 1, but as instead being determined and constrained by many factors of similar significance, such as the capabilities of specialized hardware tools found across a wide range of domains.

Examples of views that are loosely approximated by these models

Of course, neither of the models described above is a perfect illustration of anyone’s views. But my impression is that these models nevertheless do approximate two contrasting views that one can find in discussions and projections of the future of AI.

Examples of people whose views mostly seem to resemble Model 1 include I. J. Good, Ray Solomonoff, Vernor Vinge, Eliezer Yudkowsky, Nick Bostrom and Anna Salamon. These authors all appear to have argued that explosive technological growth is likely to occur once machines can surpass human cognitive abilities in a sufficient range of tasks — that is, an “intelligence explosion”.

In contrast, people whose views mostly seem to resemble Model 2 include Ramez Naam, Max More, Theodore Modis, Tim Tyler, Bryan Caplan, and François Chollet.[1]

There are also views that are more difficult to categorize, such as those of Robin Hanson and Eric Drexler. Hanson and Drexler have both argued that an intelligence explosion is unlikely, at least in the sense of a single recursively self-improving AI system that ignites a growth explosion; they argue that growth in AI capabilities is more likely to be distributed among a broader economy of AI systems. In these respects, their views resemble Model 2. Yet their views resemble Model 1 in that they still believe that AI progress is quite likely to result in explosively fast growth, even if the nature of this AI progress will mostly resemble Model 2 in terms of consisting of a larger conglomerate of AI systems and industries that co-develop.

Why I consider Model 2 most plausible

What follows is an overview of some of the reasons why I consider Model 2 most plausible.[2]

A picture akin to Model 2 is arguably what we have observed historically

When looking at the history of economic and technological growth, it seems to me that Model 2 better describes the pattern that we observe. For example, in terms of individual cognitive abilities — or rather, cognitive talents[3] — humans today do not seem to have significantly bigger or more advanced brains than did humans 20.000 years ago. In fact, some groups of humans who lived more than 20.000 years ago may have had brains that were 15-20 percent larger than the average human brain of today.

In other words, our individual cognitive abilities do not seem to have increased all that much over the last thousands of years, whereas our collective abilities and our cultural and technological tools have undergone a drastic evolution, especially in the last few centuries. Hence, it seems that the key bottlenecks that we have overcome in the last 20.000 years — from our stable ~no-growth condition to our current state of technological advancement — have been unlocked by our collection of external tools and our collective cultural development. It is in large part by overcoming bottlenecks in these domains that humanity has created the economic and technological growth that we have seen in recent millennia (see also Muthukrishna & Henrich, 2016). This recent cultural and technological development is arguably what most distinguishes humans from chimpanzees, more so than our individual cognitive abilities in isolation (Henrich, 2015, ch. 2; Vinding, 2016, ch. 2; 2020; Grace, 2022).

Superhuman software capabilities increasingly advance technological development — yet growth rates appear to be declining

Model 1 emphasizes that computers could rapidly become not just a little better than humans, but far better than humans across virtually all cognitive domains. This would be a momentous event, according to Model 1, since computers themselves would then be driving scientific and technological progress at an unprecedented pace.

Model 2, in contrast, tends to emphasize how computers have already been superhuman in various domains for decades — and not just slightly superhuman, but vastly and increasingly superhuman (e.g. in multiplying astronomical numbers and in memory storage). Additionally, Model 2 highlights how superhuman software capabilities have already been pushing the frontier of scientific and technological development for decades, and how they continue to do so today.

For instance, supercomputers already help advance cutting-edge mathematics (see e.g. experimental mathematics and computer-assisted proofs), and they are likewise among the main tools pushing physics forward, along with software in general. A recent example is how bootstrapping methods run on supercomputers help to clarify the conditions that our physical theories (e.g. of gravity) must satisfy — what physicist Claudia de Rham called “one of the most exciting research developments at the moment” in fundamental physics.

Compared to Model 1, Model 2 tends to see the gains from progress in software as being more uniformly distributed over time, as something that is reaped and translated into further technological progress in a rather gradual manner starting already decades ago. Model 2 expects a continuation of this trend, and hence it sees little reason to predict the kind of growth explosion that is predicted by Model 1.[4]

The respective predictions that Model 1 and Model 2 make about what we should observe in our current condition are thus another reason why I consider Model 2 more plausible. For if Model 1 sees human cognitive abilities as the key bottleneck to technological growth, a bottleneck whose unwinding would effectively give rise to a growth explosion, and if computers increasingly surpass human cognitive abilities and increasingly sit at the forefront of technological development, then Model 1 would seem to predict that growth rates should now be steadily rising.[5]

Yet what we observe is arguably the opposite. Economic and technological growth rates appear to be steadily decreasing, across various measures, despite superhuman cognitive abilities — and software in general — playing an increasingly large role in economic and technological development. This weakly suggests that progress in creating and employing superhuman software capabilities has diminishing returns in terms of its effects on economic and technological growth rates. Model 2 fits better with this putative observation than does Model 1.[6]

Declining growth rates

The observation that growth rates appear to be declining across a wide range of measures deserves further elaboration, as it constitutes a key part of the evidence against Model 1, in my view. Below are some specific examples. None of these examples represent strong evidence against a future growth explosion in themselves; they each merely count as weak evidence against it. But in combination, I think these observations do give us considerable reason to be skeptical.[7]

Global economic growth

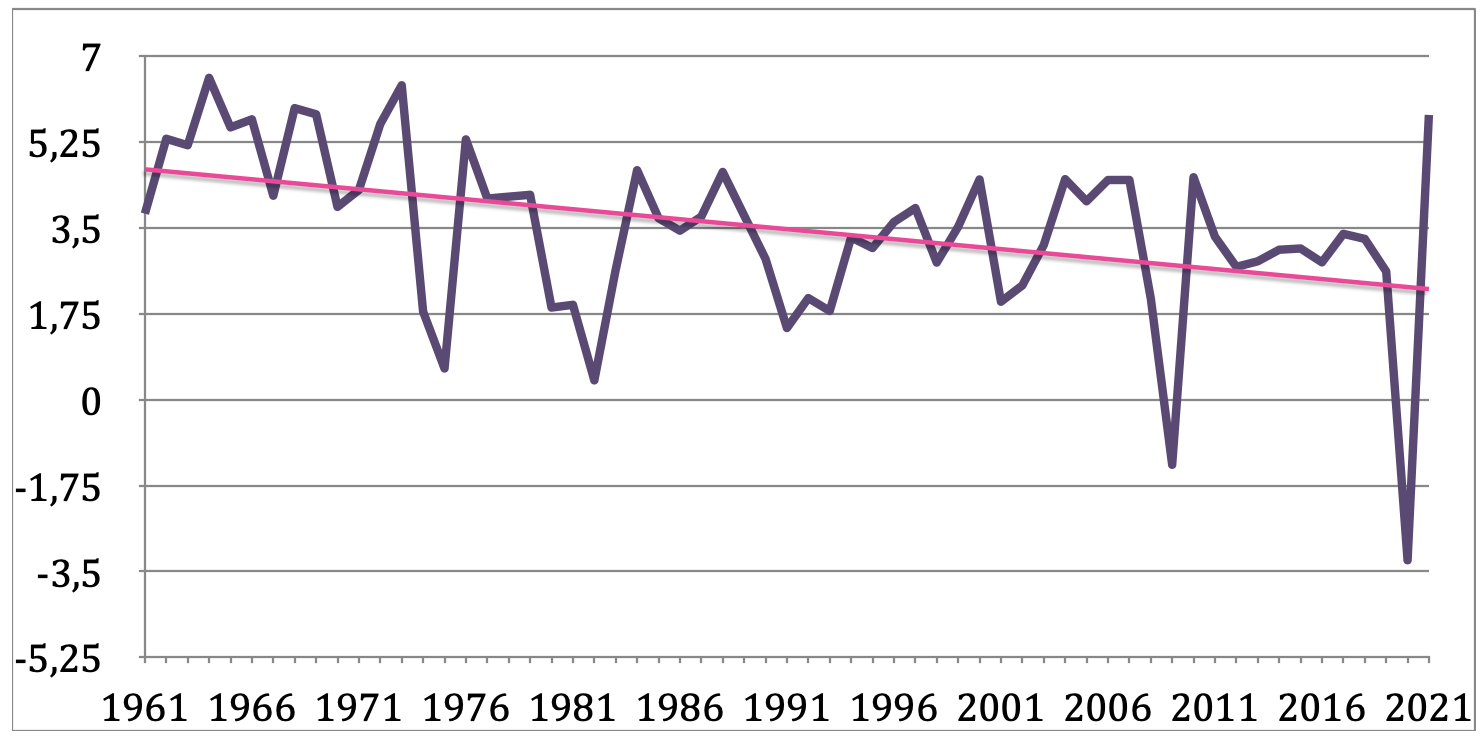

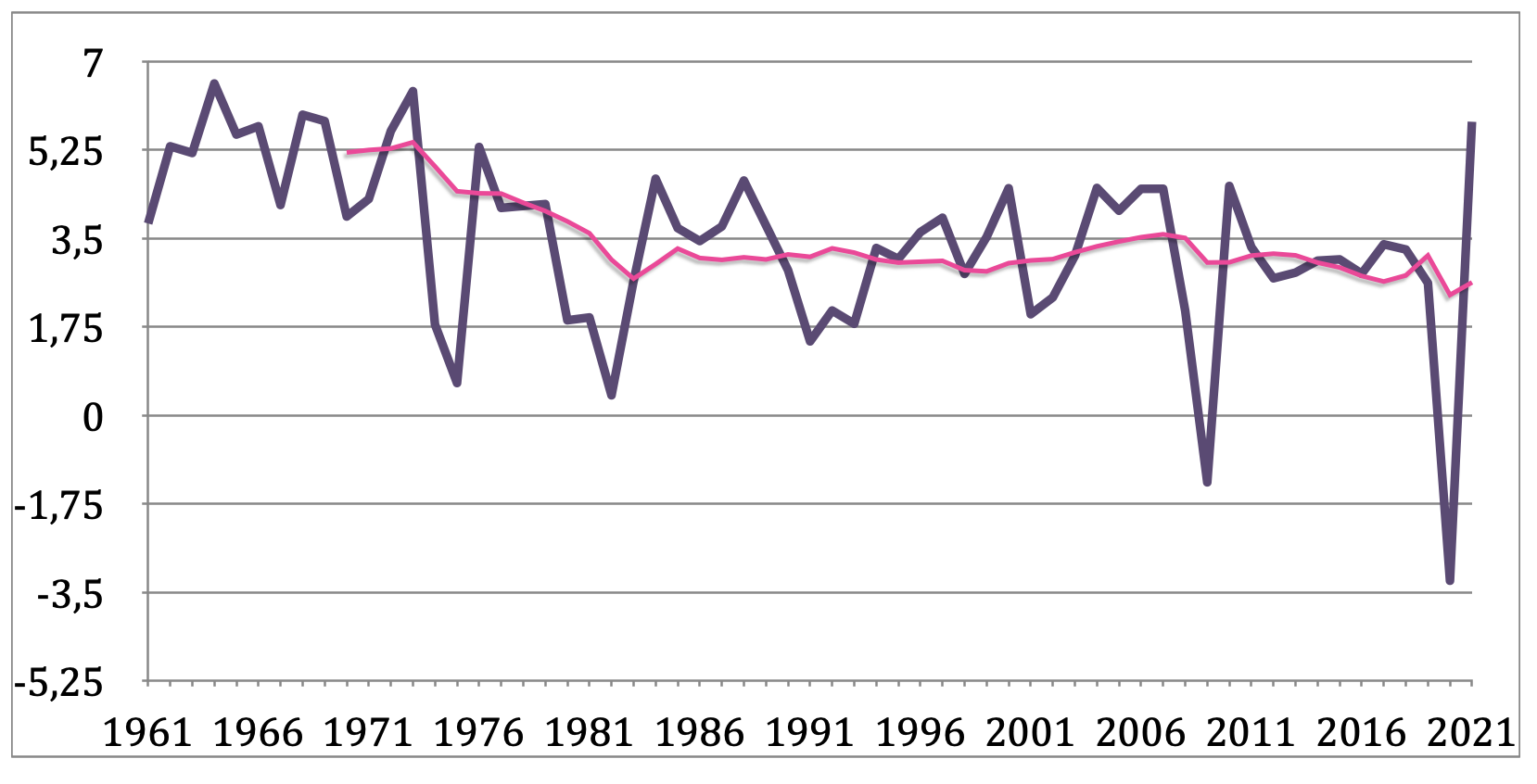

The growth rate of the global economy has, in terms of its underlying trend, seen a steady decline since 1960.

In the 1960s, the average growth rate of the global economy was above 5 percent, and it has since gradually declined such that, in the 2010s, the average growth rate was just around 3 percent (excluding the recession year of 2020, and including the post-recession recovery year of 2010).[8]

The following graphs show the growth rates of the world economy from 1961 to 2021 (purple), along with a linear fit and a 10-year moving average, respectively (pink).[9]

Linear fit:[10]

10-year moving average:
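For readers who want to reproduce this kind of trend line on their own data, here is a minimal sketch of the two smoothing methods named above: an ordinary least-squares linear fit and a 10-year moving average. The function names and the toy series are illustrative assumptions. The toy numbers are not real growth data, and the original graphs may have been produced differently (for instance with a centered rather than a trailing window).

```python
from statistics import mean


def linear_fit(xs, ys):
    """Return (slope, intercept) of the ordinary least-squares line through the points."""
    x_bar, y_bar = mean(xs), mean(ys)
    sxx = sum((x - x_bar) ** 2 for x in xs)
    sxy = sum((x - x_bar) * (y - y_bar) for x, y in zip(xs, ys))
    slope = sxy / sxx
    return slope, y_bar - slope * x_bar


def moving_average(values, window=10):
    """Trailing moving average; the first output covers the first full window."""
    return [mean(values[i - window:i]) for i in range(window, len(values) + 1)]


# Toy series (NOT real data): a growth rate falling linearly from 5.0 to 3.0 percent.
years = list(range(1961, 2022))
toy_growth = [5.0 - 2.0 * (y - 1961) / 60 for y in years]

slope, intercept = linear_fit(years, toy_growth)
smoothed = moving_average(toy_growth, window=10)
print(f"slope per year: {slope:.4f}")
print(f"first and last 10-year averages: {smoothed[0]:.2f}, {smoothed[-1]:.2f}")
```

A negative slope in the fit and a falling moving average are two views of the same underlying decline; the moving average additionally shows whether the decline is steady or concentrated in particular decades.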

It is likewise worth noting that most economists do not appear to expect a future growth explosion.

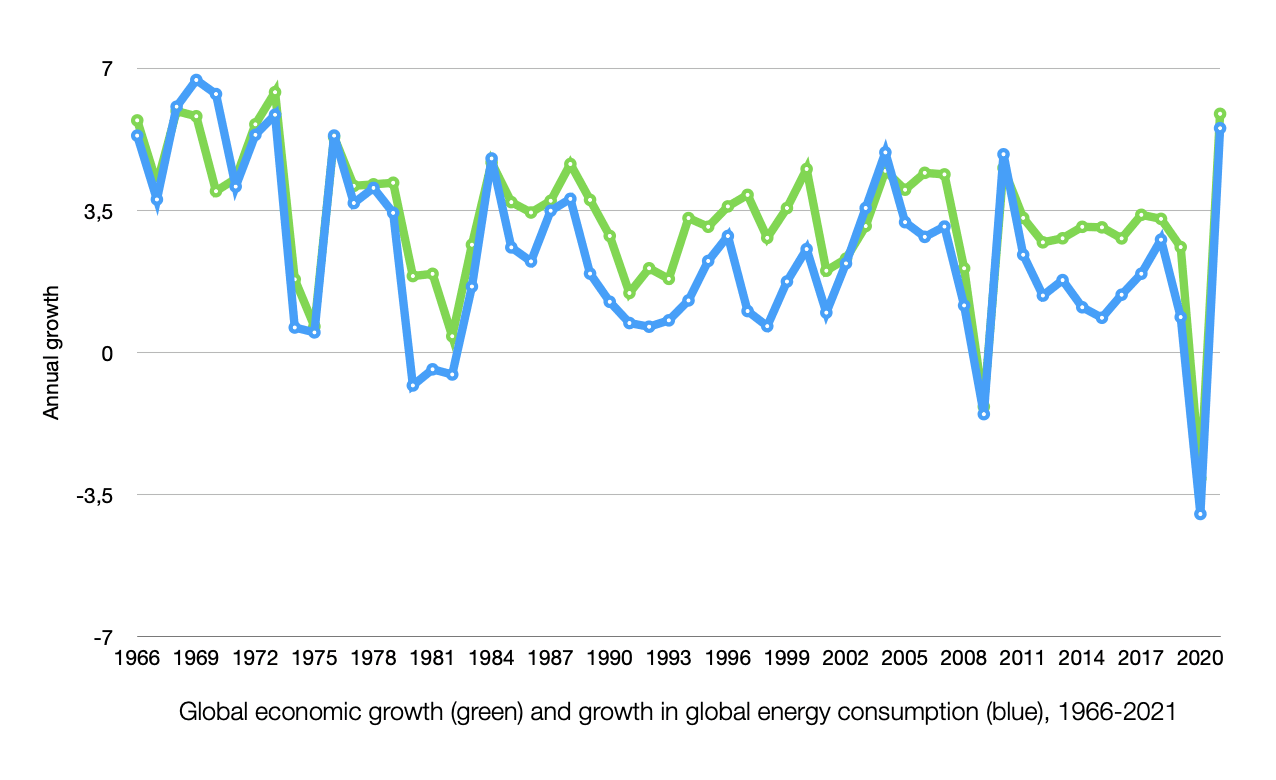

Moreover, as can be seen below, there is a striking similarity between growth in the global economy and growth in global energy consumption, which suggests that we are unlikely to see explosive economic growth without also seeing a growth explosion in energy production. Yet historical trends give us little reason to expect such an explosion, since growth in energy production has likewise been declining in recent decades.

In particular, given how critical energy is to economic activity, it seems unlikely that rapid progress in software will lead to explosive economic growth on its own. That is, explosive growth in energy production would most likely require explosive hardware growth — even if only in terms of scaling up the deployment of existing forms of energy harvesting technologies — and it is unclear why future progress in software should be expected to give rise to such a hardware explosion (cf. Smil, 2010, p. 4).

The fastest supercomputers and other hardware measures

A similar trend of decline can be observed in the performance development of the top 500 supercomputers worldwide, except the decline started occurring sometime around 2010-2015. As the graph below illustrates, the performance of the fastest supercomputer is now a full order of magnitude below what one would have expected based on an extrapolation of the trend that we saw in 1993-2013.
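The comparison described here, extrapolating an exponential trend and measuring how far a later observation falls short of it, can be sketched as follows. The performance figures are invented placeholders, chosen only so that the arithmetic is easy to follow; they are not TOP500 measurements, and the helper names are hypothetical.

```python
import math


def fit_log_trend(years, values):
    """Least-squares line through (year, log10(value)); returns (slope, intercept)."""
    logs = [math.log10(v) for v in values]
    n = len(years)
    x_bar = sum(years) / n
    y_bar = sum(logs) / n
    sxx = sum((x - x_bar) ** 2 for x in years)
    sxy = sum((x - x_bar) * (y - y_bar) for x, y in zip(years, logs))
    slope = sxy / sxx
    return slope, y_bar - slope * x_bar


def shortfall_in_orders_of_magnitude(slope, intercept, year, observed):
    """How many powers of ten the observed value lies below the extrapolated trend."""
    expected_log = slope * year + intercept
    return expected_log - math.log10(observed)


# Placeholder numbers (NOT real data): performance grows tenfold every four years
# during the fit window, and a later observation falls short of that trend.
fit_years = [1993, 1997, 2001, 2005, 2009, 2013]
fit_values = [1e0, 1e1, 1e2, 1e3, 1e4, 1e5]
slope, intercept = fit_log_trend(fit_years, fit_values)

gap = shortfall_in_orders_of_magnitude(slope, intercept, year=2021, observed=1e6)
print(f"trend: {slope:.2f} orders of magnitude per year")
print(f"shortfall in 2021: {gap:.2f} orders of magnitude")
```

Fitting in log space is what makes an exponential trend a straight line, so a shortfall of 1.0 in this sketch corresponds to a value ten times lower than the extrapolation.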

This decline is arguably what one would expect given the predicted end of Moore’s law, projected to hit the ceiling around 2025. In particular, in approaching the end of Moore’s law, we should arguably expect progress to steadily decline as we get closer to the ceiling, rather than expecting a sudden slowdown that only kicks in once we reach 2025 (or thereabouts).

Progress on other measures has also slowed down significantly, such as processor clock speeds, the cost of hard drive storage (cf. Kryder’s law), and computations per joule (cf. Koomey’s law).

These declining trends all count somewhat against projections of imminent explosive growth driven by computers. After all, hardware growth and software growth seem to have gone in lockstep so far, with greater computation being a key driver of software progress. This tentatively suggests that the observed slowing trends in hardware will lead to similar trends in software. (Similar considerations apply to AI progress in particular, cf. Appendix B.)

Many key technologies only have modest room for further growth

It appears that many technologies cannot be improved much further due to physical limits. Moore’s law mentioned above is a good example: we have strong reasons to think that we cannot keep on shrinking transistors much further, as we are approaching the theoretical limit. In the ultimate limit, we cannot make silicon transistors smaller than one atom, and limits are expected to be reached before that, due to quantum tunneling.

Physicist Tom Murphy gives additional examples in the domain of other machines and energy harvesting technologies: “Electric motors, pumps, battery charging, hydroelectric power, electricity transmission — among many other things — operate at near perfect efficiency (often around 90%).”

This means that many of our key technologies can no longer be made significantly more efficient, which in turn implies that, at least in many domains, sheer scaling is now pretty much the only way to see further growth (as opposed to scaling combined with efficiency gains).[11]

This relates to one of the main theses explored by Robert J. Gordon in The Rise and Fall of American Growth, namely that many of the key innovations that happened in the period 1870 to 1970 — the period in which human civilization saw the highest growth rates in history — were in some sense zero-to-one innovations that cannot be repeated (e.g. various efficiency gains and light-speed communication).[12]

To briefly zoom out and make a more general point, I believe that Model 2 is right in its strong emphasis on the multifaceted nature of growth. As a famous economics essay explains, nobody knows how to make a pencil — it involves work and tools across countless industries. And this point is even more true of the manufacture of more advanced technologies. There are countless processes and tools involved, and improvements in a wide range of this set of tools — like what we saw around the 1960s, according to Gordon — will tend to lead to greater growth. Conversely, when an increasing number of these key tools can no longer be improved much further, such that only a comparatively limited set of tools in our collective toolbox see significant improvements, the growth of the whole system will likewise tend to be more modest, as it is partly constrained by the components that are no longer improving much.[13]

In other words, this picture suggests that the various areas in which we are now approaching the theoretical ceilings will be, if they are not indeed already, considerable bottlenecks to growth.
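One way to make this bottleneck intuition concrete is an Amdahl's-law-style calculation, borrowed from computer architecture. This is an analogy introduced here for illustration, not a model taken from the sources cited above: it assumes that some fraction of an overall production process can still be sped up by a given factor, while the remainder cannot be improved at all. The function name and the example shares are hypothetical.

```python
def overall_speedup(improvable_fraction, component_speedup):
    """Amdahl's law: speedup of a whole process when only part of it gets faster.

    improvable_fraction: share of the total effort that can still be improved (0..1).
    component_speedup:   factor by which that share is sped up (>= 1).
    """
    if not 0.0 <= improvable_fraction <= 1.0:
        raise ValueError("improvable_fraction must lie between 0 and 1")
    if component_speedup < 1.0:
        raise ValueError("component_speedup must be at least 1")
    fixed = 1.0 - improvable_fraction
    return 1.0 / (fixed + improvable_fraction / component_speedup)


# Hypothetical shares, chosen only to illustrate the shape of the effect.
for fraction in (0.9, 0.5, 0.2):
    modest = overall_speedup(fraction, 10)
    extreme = overall_speedup(fraction, 1_000_000)
    print(f"improvable share {fraction:.0%}: 10x part -> {modest:.2f}x overall, "
          f"1,000,000x part -> {extreme:.2f}x overall")
```

Under these assumptions, the overall gain is capped at 1 / (1 - improvable_fraction) no matter how large the improvement in the improvable part: with a 50 percent share, the whole process never becomes more than twice as fast.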

An unprecedented decline in economic growth rates across doublings

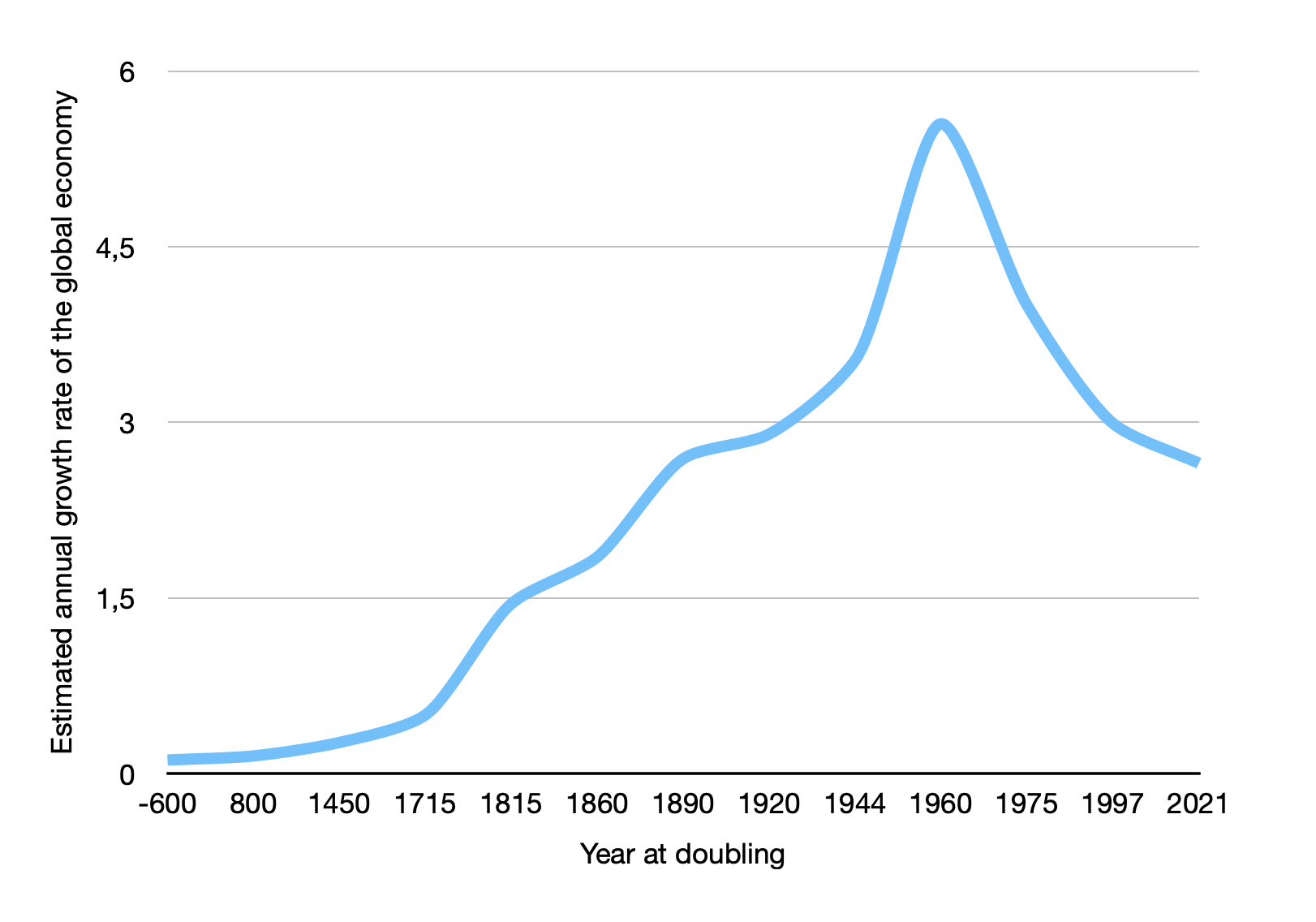

A final observation that I wish to draw attention to is that the decline in economic growth rates that we have witnessed in recent decades is in some sense unprecedented in the entire history of human growth over the last million years.

The world economy has seen roughly three doublings since 1960. And as noted above, the average growth rate today is considerably lower than it was in 1960 — around 3 percent today versus an average annual growth rate above 5.5 percent in the period 1950-1970 (DeLong, 1998, “Total World Real GDP”).[14]

This decline in growth rates across doublings is unprecedented in the history of human economic growth. Throughout economic history, three doublings of the global economy have resulted in a clear increase in the growth rate of the economy.[15] For example, the economy doubled roughly three times from 600 BC to 1715, the latter being just before the Industrial Revolution, where growth rates rose to unprecedented levels (DeLong, 1998, “Total World Real GDP”).[16]
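The doubling arithmetic used here is easy to check. The sketch below converts between growth rates, doubling times, and numbers of doublings. The function names are illustrative, the 60-year span is a round-number stand-in for “since 1960”, and the 2,315-year span is an approximation of the period from 600 BC to 1715.

```python
import math


def doubling_time(annual_growth_rate):
    """Years needed to double at a constant annual growth rate (0.03 means 3 percent)."""
    return math.log(2) / math.log(1 + annual_growth_rate)


def implied_growth_rate(doublings, years):
    """Constant annual growth rate that yields the given number of doublings."""
    return 2 ** (doublings / years) - 1


# Doubling times at the two growth rates mentioned in the text.
print(f"3.0 percent: doubles every {doubling_time(0.030):.1f} years")
print(f"5.5 percent: doubles every {doubling_time(0.055):.1f} years")

# Average growth implied by three doublings over each period (spans are approximate).
print(f"three doublings in 60 years:   {implied_growth_rate(3, 60):.2%} per year")
print(f"three doublings in 2315 years: {implied_growth_rate(3, 2315):.2%} per year")
```

Three doublings in six decades correspond to an average rate of roughly 3.5 percent per year, whereas three doublings spread over some 2,300 years correspond to an average of roughly 0.09 percent per year. These are averages only; they say nothing about how the growth rate moved within each period.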

To be clear, I am not saying that this observation is in itself strong evidence against a future growth explosion. But it does serve as counter-evidence to a claim that is sometimes made in favor of a future growth explosion, namely that the history of economic growth suggests that we are headed for a new, even faster growth mode (see e.g. Hanson, 2000; note that Hanson likewise mostly bases his claims on DeLong’s estimates).

The unprecedented growth decline that we have seen over the past three doublings may be taken as weak inductive evidence that we are generally headed for slower rather than faster growth, and that we might now find ourselves past a long era of increasing growth rates (see also Modis, 2012, pp. 20-22).

This wouldn’t be extremely surprising, given that we have good reasons to think that current growth rates can at most continue for a couple of thousand years, and perhaps only a few hundred years. For example, estimates suggest that a continuation of current growth rates in information processing would hit ultimate theoretical limits in less than 250 years. Hence, if future economic growth will be strongly tied to growth in computation, we have some reason to think that current economic growth rates cannot be sustained for much longer than a couple of centuries either.
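The reasoning behind such limits is simple compounding: at a constant growth rate, any finite amount of headroom is used up in a number of years that grows only logarithmically with the size of the headroom. A minimal sketch, in which the headroom factors are hypothetical placeholders rather than the estimates referred to above, and the 3 percent rate is simply the approximate current economic growth rate mentioned earlier:

```python
import math


def years_until_limit(headroom_factor, annual_growth_rate):
    """Years of constant growth before a quantity has multiplied by headroom_factor."""
    return math.log(headroom_factor) / math.log(1 + annual_growth_rate)


# Hypothetical headroom factors: even enormous headroom is exhausted fairly quickly.
for exponent in (3, 6, 12, 24):
    years = years_until_limit(10 ** exponent, 0.03)
    print(f"10^{exponent} headroom at 3 percent growth: about {years:.0f} years")
```

Each additional factor of a thousand in headroom buys only about 230 more years at 3 percent growth, which is why estimates of ultimate limits tend to land within centuries or a few millennia rather than much further out.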

In light of such strikingly imminent limits — whether they lie a few thousand or a few hundred years ahead — it would not be that surprising if we are already now witnessing a gradual slowdown in growth rates. After all, as mentioned earlier, we should arguably expect a gradual decline in growth rates to set in a good while before we hit ultimate limits.[17]

Again, none of the considerations I have outlined above are in themselves strong reasons to doubt a future growth explosion. But I find that they do begin to paint a fairly compelling picture when put together.[18]

Objections

Below are a couple of counterpoints that may be raised in favor of Model 1, along with my comments on them.

Some versions of Model 1 are consistent with the evidence

There are versions of Model 1 that do not predict gradually rising growth rates when just any set of human cognitive abilities gets surpassed, yet which do predict explosive growth rates once AI systems exceed humans in the right set of cognitive skills. Specifically, there may well be a certain set of cognitive skills relevant to growth such that, once AI systems surpass humans along those dimensions, we will see explosive growth. These skills may include strategic planning or the ability to write code.

I think it is worth taking this hypothesis seriously and carefully scrutinizing its plausibility. The following are some of the reasons why I am skeptical.

Probably the main reason I consider this picture unlikely is that it still seems to overlook the multitude of bottlenecks that work against explosive growth. In particular, it still appears to ignore the increasingly challenging constraints found in the realm of hardware and hardware production. It is difficult to see how greatly improving a given set of cognitive abilities would vastly improve the process of hardware production, since hardware production in large part seems bottlenecked by the sophistication of our hardware itself, not just information.

To be sure, improvements in domains such as strategic planning and coding may produce considerable gains. Yet would these gains be enough not only to get us back to something that resembles the growth in computational efficiency, memory, processing speed, and performance that we saw more than a decade ago, but also to exceed those growth rates by a considerable margin?

I think we have good reasons to doubt that, and it is worth reiterating that the reasons to be skeptical are partly a matter of hard physical limits, many of which we have practically already reached or are close to reaching (as mentioned above).[19]

It is also worth asking why progress in surpassing a particular set of human cognitive abilities, such as strategic planning, should give rise to explosive growth, when vastly surpassing other human cognitive abilities — e.g. in various calculation tasks since around 1950 — has not resulted in explosive growth (even as it has helped power recent growth).

After all, it is not clear why future progress in surpassing human cognitive abilities should boost growth rates more than did past progress of this kind. For example, one could argue that past progress likely reaped the low-hanging fruit, particularly in the domains where computers have the clearest advantages compared to humans, and that software progress is now facing diminishing returns in terms of how much it will boost growth rates going forward. Again, this is arguably consistent with the patterns that we currently observe, with the growth rates of the global economy and growth in computer hardware steadily declining.

In other words, expectations of greater increases in growth rates from future progress compared to past progress seem to call for an explanation, especially since past trends and our current trajectory can seem to suggest the opposite.

We should chiefly focus on Model 1 regardless

The objection here is that, for any set of plausible credences in Model 1 and Model 2, we should still give far more priority to scenarios that conform to Model 1, as they seem far more consequential and worrying.

First, Model 2 also implies many serious risks, and it is not clear that these are significantly less consequential.

After all, near-term growth rates do not seem critical to the size of the future. If one assumes an eventual colonization wave that occurs near the speed of light, there is virtually no difference between whether such a wave is approached through something like 3 percent annual growth or whether it is approached at the fastest pace allowed by the laws of physics. These two scenarios would likely only differ by a few hundred — or at most a few thousand — years in terms of when they start expanding at the speed of light (cf. Krauss, 2011; Vinding, 2017a). That difference becomes proportionally minuscule as time progresses, implying that a slight difference in the quality of the respective colonization waves would be far more consequential than their respective starting times.

Second, it seems that many specific risks that one might worry about in the context of Model 1 would have analogues in Model 2, including risks of lock-in scenarios.

Third, one could argue that we have greater reason to focus mostly on Model 2 scenarios. For example, one could argue that Model 2 and its basic predictions seem significantly more plausible (as I have tried to do above). Additionally, one may argue that we have a better chance of having a beneficial impact under Model 2 — e.g. because the effects of interventions might generally be more predictable in Model 2 scenarios, and because such scenarios give us more time to understand and implement genuinely helpful interventions. Likewise, one might think that Model 2 implies a significantly lower risk of accidentally making things much worse. For instance, it seems plausible that Model 1 scenarios come with a significantly greater risk of unwittingly creating suffering on a vast scale, which may be an additional reason to focus more on Model 2 scenarios (cf. Trammell’s “Which World Gets Saved” and Tomasik’s “Astronomical suffering from slightly misaligned artificial intelligence”).

Fourth, even if we were to grant that Model 1 scenarios deserve greater priority, one could still argue that such scenarios are currently overprioritized among people concerned about creating beneficial AI outcomes in the long term, and hence that it would be better to give greater priority to Model 2 scenarios on the current margin. For example, one might think that the current focus is roughly 80/20 in favor of Model 1 (among people working to create beneficial AI outcomes), whereas it should ideally only be, say, 60/40 in favor of Model 1.

Lastly, I think it is worth flagging how priority-related assumptions of the kind expressed in the objection above can potentially distort our views and priorities. For one, such assumptions about how some scenarios and worldviews deserve far more priority than others might be risky in that they may reduce our motivation to carefully explore which outcomes are in fact most likely. Moreover, to the extent that we do seriously explore which outcomes are most likely, there is a risk that priority-related assumptions of this kind will exert an undue influence on our descriptive picture — e.g. if we are inclined to downplay a line of argument for priority-related reasons, we might confuse ourselves as to whether we also disagree for purely descriptive reasons. It may be difficult to keep these classes of reasons separate in our minds, and to not let priority-related reasons pollute our descriptive worldview.

Worrying, too, is the empirically documented tendency of “belief digitization”, namely the tendency to wholly dismiss a less plausible hypothesis when making inferences and decisions, even when one acknowledges that the hypothesis in question still deserves considerable weight. This tendency could lead us to assign unduly low credences to scenarios that might not seem most likely or most worrisome, yet which still deserve considerable weight and priority (in line with the previous point about the possibility that we underprioritize Model 2 scenarios on the current margin, even if we believe that Model 1 scenarios deserve the most weight in absolute terms).

Potential implications for altruistic priorities

In closing, I will briefly outline what I see as some of the main implications of updating more toward Model 2 compared to Model 1 (if one were to make such an update).

There is obviously much uncertainty as to what the exact implications would be, but it seems that a greater credence in Model 2 likely pushes toward:

- Comparatively less focus on individual AI systems.

    - Individual AI systems in themselves are less likely to be the critical determinants of future outcomes on Model 2. This is not to say that the design of individual AI systems is unimportant on Model 2, but merely that it becomes less important.

- A greater focus on improving institutions and other “broader” factors, e.g. norms and values.

    - This may be the most promising lever with which to steer civilization in better directions in scenarios with more distributed growth and power, as it has the potential to simultaneously influence a vast number of actors, creating better equilibria for how they cooperate and make decisions.

    - Note that a focus on institutions would not necessarily just mean a focus on AI governance in particular, but also better political institutions and practices in a broader sense.

- More research on what could go wrong in Model 2 scenarios.

    - For example, what are the most worrying lock-in dynamics that might occur in these scenarios, and how might they come about? How can they best be prevented?

Updating more toward Model 2 would by no means change everything, but it would still imply significant changes in altruistic priorities.

Acknowledgments

Thanks to Tobias Baumann for suggesting that I write this post. I am also grateful to the people who gave feedback on these ideas at a recent retreat and in writing.

Appendix A: Critical questions regarding the models

It seems worth briefly reviewing a couple of critical points and questions that one might raise in response to the two basic models reviewed in this post.

Just qualitative models?

One critique might be that the two models presented here are merely qualitative, and hence they are less useful than proper quantitative models of future growth. I would make two points in response to this sentiment.

First, it seems that qualitative models are a good place to start if we want to think straight about a given issue. In particular, if we want to make sure that the more elaborate models we construct are plausible, we have good reason to first make sure that we base these models on plausible assumptions about the nature of technological growth, especially as far as the key drivers and bottlenecks are concerned.

This brings me to the second point, which is that quantitative models are only as good as the underlying assumptions on which they rest. A qualitative model can thus be more useful than a quantitative model, if the qualitative model better reflects reality in terms of the core assumptions and predictions it makes. That being said, it would, of course, be ideal to have quantitative models that do rest on accurate assumptions.

The two models presented here are not meant to provide precise quantitative predictions (even as they do provide some rough predictions), but rather to illustrate an underlying source of disagreement in a simple and intuitive way.

How do the models presented here relate to the economic models presented in Aghion et al., 2019?

The models presented by Aghion et al. relate quite closely to the models presented in this post. The first model they present is an automation model in which a set of tasks that are difficult to automate serve as a bottleneck to more rapid economic growth — a model that seems to describe recent growth trends reasonably well (Aghion et al., 2019, sec. 9.2).

The authors then proceed to describe various models that are similar to Model 1, in which growth becomes ever faster due to greater automation or self-improving AI (Aghion et al., 2019, sec. 9.4). Yet it is worth noting that the authors do not claim that any of these models are plausible. Indeed, these abstract models are in some sense trivially implausible, at least in the limit, since they either imply that economic output becomes infinite in finite time, or that growth rates will increase exponentially without bounds.

Furthermore, concerning the equations that the authors use to formalize an intelligence explosion, they note that there are reasons to believe that those equations “would not be the correct specifications”, and they go on to discuss these reasons, “which can broadly be characterized as ‘bottlenecks’ that AI cannot resolve” (Aghion et al., 2019, p. 258). These reasons relate to Model 2, in that Model 2 can be seen as an intuitive illustration of some of the counterpoints that Aghion et al. raise against the plausibility of automation/AI-driven explosive growth (in Section 9.4.2).

Overall, Aghion et al. do not appear to take a strong stance against a future growth explosion driven by AI, but my impression is that they are tentatively skeptical.

How do the points made in favor of Model 2 in this post relate to the conclusions drawn in Davidson 2021?

Davidson concludes that it is plausible (>10 percent likely) that ‘explosive growth’, by which he means >30 percent annual global economic growth, will occur before 2100. Yet it is worth noting that 30 percent annual growth is far closer to current growth rates than to the growth rates found in an economy that doubles on the order of months, weeks, or shorter. For example, an economy that doubles every other month would see 6300 percent annual growth(!), whereas an economy that doubles every week would see more than 4 × 10^17 (i.e. >400 quadrillion) percent annual growth(!!).
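The conversion from doubling time to annual growth used here is plain compounding, and it can be checked with a few lines of Python. This is only a minimal sketch: the helper name `annual_growth_percent` is hypothetical, and a year is assumed to contain exactly 12 months or 52 weeks.

```python
import math


def annual_growth_percent(doublings_per_year: float) -> float:
    """Annual growth, in percent, of an economy that doubles this many times per year."""
    growth_factor = 2.0 ** doublings_per_year
    return (growth_factor - 1.0) * 100.0


# Doubling every other month: 6 doublings per year (assumes a 12-month year).
every_other_month = annual_growth_percent(6)

# Doubling every week: 52 doublings per year (assumes a 52-week year).
every_week = annual_growth_percent(52)

# For comparison: the doubling time, in years, implied by 30 percent annual growth.
doubling_time_at_30_percent = math.log(2) / math.log(1.30)

print(f"doubling every other month: {every_other_month:,.0f} percent per year")
print(f"doubling every week: {every_week:.2e} percent per year")
print(f"30 percent annual growth: doubling every {doubling_time_at_30_percent:.2f} years")
```

On these assumptions, 30 percent annual growth corresponds to a doubling time of roughly two and a half years, which is the sense in which it sits much closer to current growth rates than to an economy that doubles within months or weeks.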

So we should be clear that Davidson is evaluating a much milder growth explosion than the extreme growth explosions typically implied by Model 1, or by Robin Hanson’s projections (Hanson, 2000; 2016). And assigning >10 percent probability to >30 percent annual growth is obviously far less radical than is assigning >10 percent probability to >6300 percent annual growth, let alone to >400 quadrillion percent annual growth. Worth noting, too, is that Davidson seems to assign an even higher probability to a growth slowdown: “I place at least 25% probability on the global economy only doubling every ~50 years by 2100”; “my central estimate is not stable, but it is currently around 40%”. This seems broadly consistent with the arguments I have presented in favor of Model 2.

Appendix B: Peak AI growth occurring roughly now? Or in the past? Declining growth rates in the compute of AI models

[This appendix was added March 27, 2023; latest updates made Sep., 2024.]

We have seen a lot of progress in AI over the last decade, which renders it natural to expect that we will see similar relative progress in the next ten years.

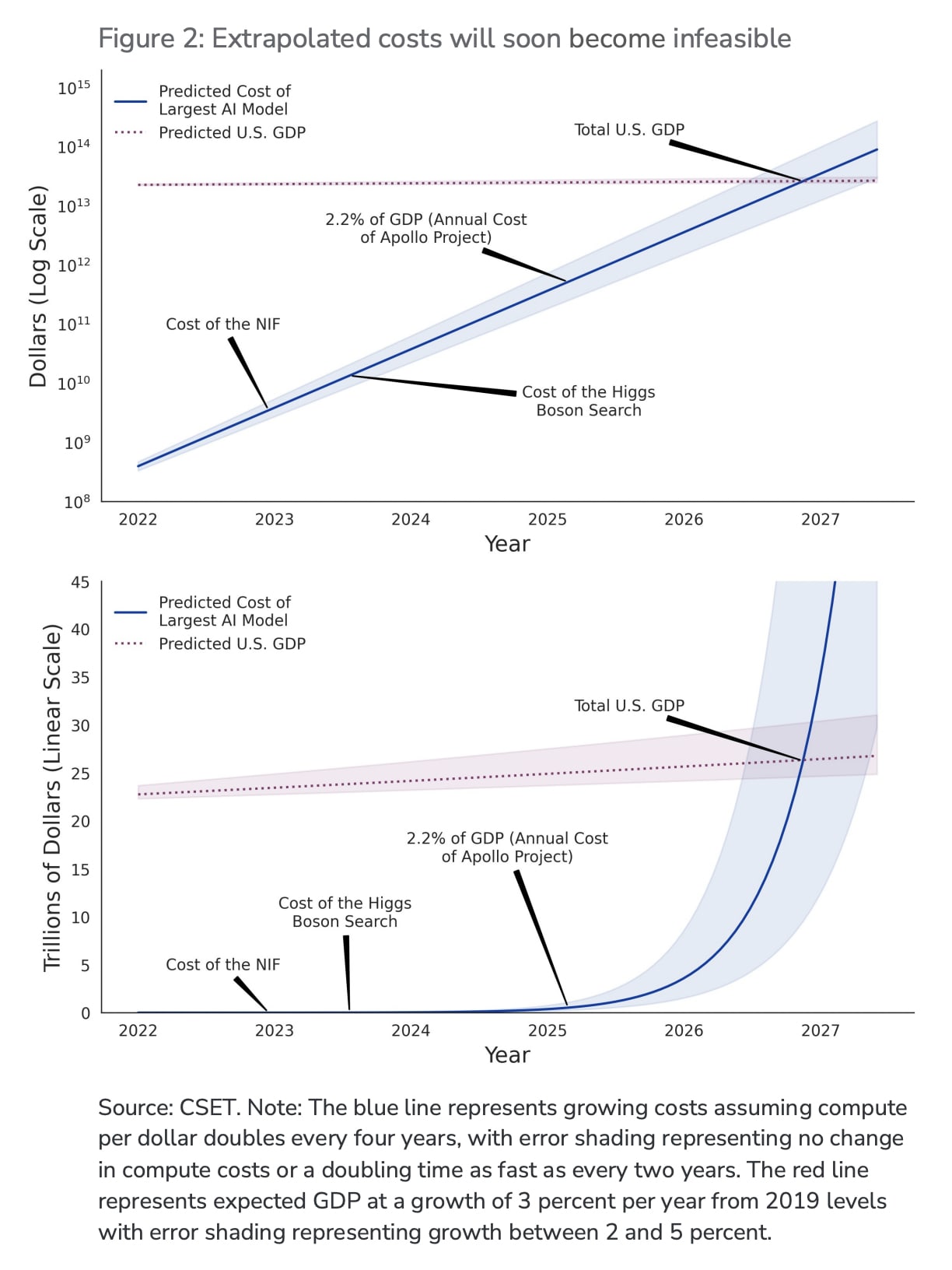

Yet there are reasons to think that the high rate of progress we have seen in AI over the past decade cannot be sustained for that long. For instance, according to Lohn & Musser (2022), an extrapolation of the growth of the largest AI models suggests that the average growth rate since around 2012[20] is unlikely to be sustainable beyond 2026, as they estimate that the computing costs would exceed total US GDP:

Perhaps the main objection to this line of reasoning is that total GDP will also start to increase rapidly, and/or that hardware progress will enable computing costs to remain low enough to sustain past rates of progress. This is a possibility, though I would argue that it is unlikely (<10 percent likely) that economic growth rates will see such an explosion — i.e. that either US or global GDP will more than double by 2027 — for the reasons reviewed above.

The same goes for the claim about hardware progress. Indeed, the estimates provided by Lohn & Musser already take the potential for significant hardware progress into account — cf. the blue shading to the right, which seems optimistic about future computing costs in light of recent hardware trends (see also Lohn & Musser, 2022, p. 11, p. 14).[21]

Of course, as noted above in the context of Moore's law, we should not expect to hit a ceiling abruptly, but instead expect growth rates to decline steadily as we approach a limiting constraint (e.g. computational costs). In particular, we should not expect the growth rate of the size of the largest models to undergo a uniquely sudden slowdown three years from now. Rather, we should expect growth rates to continue to gradually decline, as they seemingly already have since around 2018.[22]

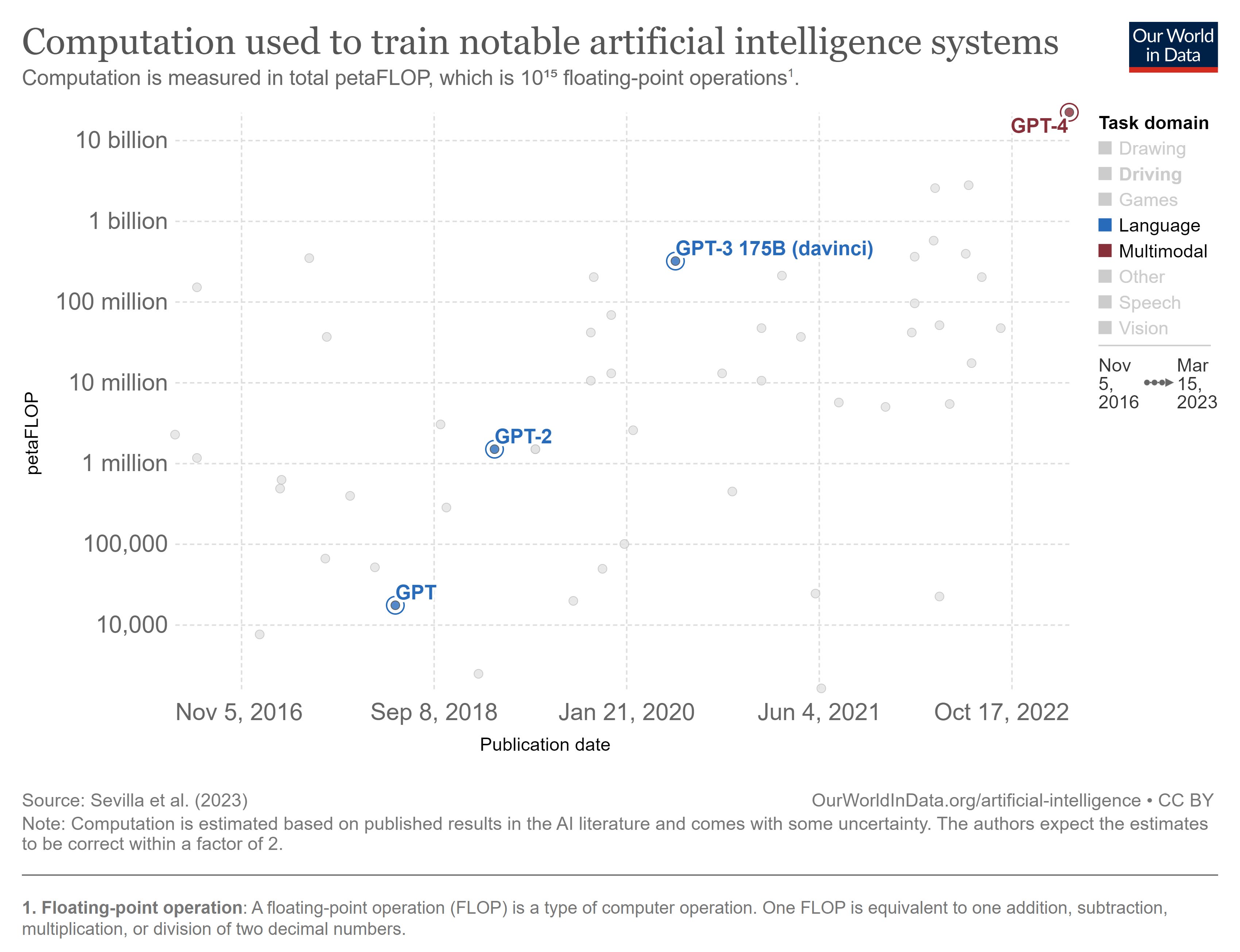

This gradual slowdown in growth rates also seems apparent in the compute trends of different generations of GPTs from OpenAI, as seen below.

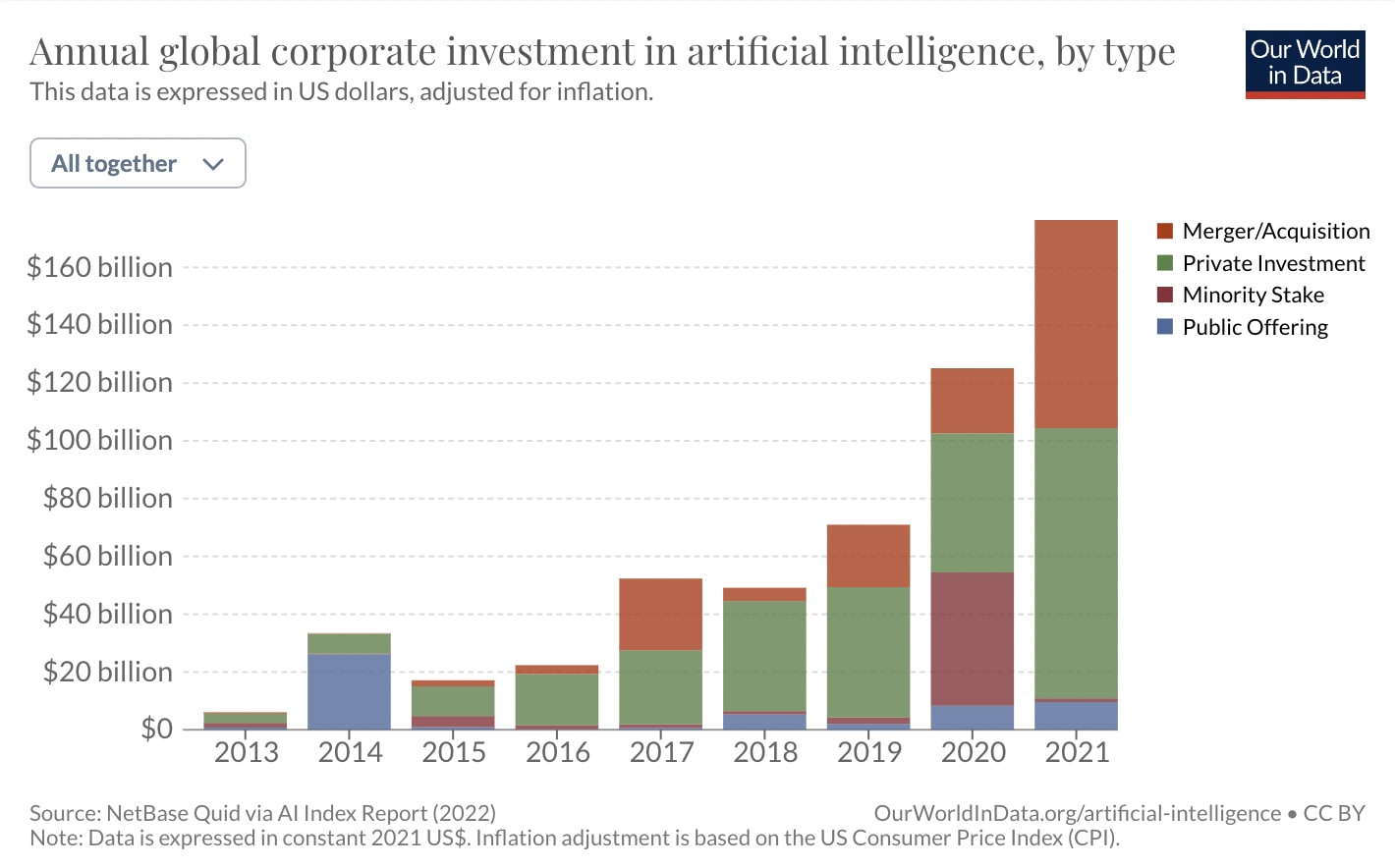

And note that these declining trends have occurred despite increasing investments into AI:

Another reason to expect that current rates of progress in AI cannot be sustained for long is that the training of large AI models seems to be increasingly bottlenecked by memory and communication bandwidth — what is referred to as the “memory wall”. In particular, while the compute and parameter sizes of large language models have been growing rapidly in recent years (roughly at a rate of 750x and 240x every two years, respectively), the growth in memory capacity and bandwidth has been much slower (roughly 1.4x to 2.0x every two years). Some researchers have argued that this differential growth rate makes memory an ever more significant bottleneck over time (see also Bergal et al., 2020).
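To get a feel for how quickly such a differential compounds, one can grow the two quantities side by side. The sketch below is purely illustrative: it takes the rough two-year multipliers quoted above at face value, assumes that they stay constant, uses the most favorable memory figure (2.0x), and relies on hypothetical helper names such as `relative_gap`.

```python
# Rough growth multipliers per two years, taken at face value from the text above.
COMPUTE_FACTOR = 750.0  # training compute of large language models
PARAMS_FACTOR = 240.0   # parameter counts of large language models
MEMORY_FACTOR = 2.0     # upper end of the 1.4x-2.0x range for memory capacity and bandwidth


def growth_over(years: float, factor_per_two_years: float) -> float:
    """Cumulative growth multiple after `years`, assuming a constant two-year factor."""
    return factor_per_two_years ** (years / 2.0)


def relative_gap(years: float, demand_factor: float, supply_factor: float = MEMORY_FACTOR) -> float:
    """How many times more the demand side has grown than memory after `years`."""
    return growth_over(years, demand_factor) / growth_over(years, supply_factor)


for years in (2, 4, 6):
    print(
        f"after {years} years: compute/memory gap x{relative_gap(years, COMPUTE_FACTOR):,.0f}, "
        f"parameters/memory gap x{relative_gap(years, PARAMS_FACTOR):,.0f}"
    )
```

Even with the most favorable memory figure, the gap widens by a factor of 120 to 375 every two years under these assumptions, which illustrates why a constant differential of this size cannot persist for long without memory becoming the binding constraint.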

Similarly, there are reasons to think that training data is yet another critical bottleneck to the capabilities of AI models, and one that is also getting increasingly significant. In particular, training data is now — at least in some respects — a greater bottleneck to the improvement of large AI models than is model size per se. And extrapolations of recent trends in the amount of data used to train AI models suggest that AI training runs will soon be using practically all the high-quality language data that is available, “likely before 2026” (Villalobos et al., 2022, p. 1). Language models are typically trained on high-quality language data, yet the total stock of such data is less than a single order of magnitude larger than the largest datasets that have already been used to train AI models, implying that current AI models are surprisingly close to using all of our existing high-quality data (Villalobos et al., 2022, p. 1). (See also Buckman, 2022; 2023.)

The three reasons reviewed above — the economic constraints to growth in AI compute and model sizes, the memory wall, and the training data bottleneck — each independently suggest that the relative growth of the capabilities of large AI models is likely to slow down within the next few years. Of course, there are also considerations that point in the opposite direction — e.g. perhaps economic growth could indeed explode within the next few years, perhaps engineers will find ways to get around the memory wall, and perhaps AI can generate vast amounts of high-quality language data on its own. Such possibilities cannot be ruled out. But the considerations reviewed above still represent substantial reasons to believe that the relative rates of progress that we have seen in AI recently — i.e. over the last decade — are unlikely to be sustained for long.[23]

References

Aaronson, S. (2008). The limits of quantum. Scientific American, March 2008, pp. 62-69.

Aghion, P. et al. (2019). Artificial Intelligence and Economic Growth. In Agrawal, A. et al. (eds.), The Economics of Artificial Intelligence: An Agenda. The University of Chicago Press.

Bergal, A. et al. (2020). Trends in DRAM price per gigabyte.

Buckman, J. (2022). Recursively Self-Improving AI Is Already Here.

Buckman, J. (2023). We Aren't Close To Creating A Rapidly Self-Improving AI.

Cottier, B. (2023). Trends in the dollar training cost of machine learning systems.

Cowen, T. (2011). The Great Stagnation: How America Ate All the Low-Hanging Fruit of Modern History, Got Sick, and Will (Eventually) Feel Better. Dutton Adult.

Davidson, T. (2021). Could Advanced AI Drive Explosive Economic Growth?

DeLong, B. (1998). Estimating World GDP, One Million B.C. - Present.

Gordon, R. (2012). Is U.S. Economic Growth Over? Faltering Innovation Confronts the Six Headwinds.

Gordon, R. (2016). The Rise and Fall of American Growth: The U.S. Standard of Living since the Civil War. Princeton University Press.

Grace, K. (2022). Counterarguments to the basic AI x-risk case.

Hanson, R. (2000). Long-Term Growth As A Sequence of Exponential Modes.

Hanson, R. (2009). Limits To Growth.

Hanson, R. (2016). The Age of Em: Work, Love and Life When Robots Rule the Earth. Oxford University Press.

Henrich, J. (2015). The Secret of Our Success: How Culture Is Driving Human Evolution, Domesticating Our Species, and Making Us Smarter. Princeton University Press.

Hobbhahn, M. & Besiroglu, T. (2022). Trends in GPU price-performance.

Jones, C. (2021). The past and future of economic growth: a semi-endogenous perspective.

Jones, C. (2022). The Past and Future of Economic Growth: A Semi-Endogenous Perspective. Annu. Rev. Econ., 14, pp. 125-152.

Krauss, L. (2011). The Future of Life in the Universe.

Krauss, L. & Starkman, G. (2004). Universal Limits on Computation.

Legg, S. & Hutter, M. (2007). Universal Intelligence: A Definition of Machine Intelligence.

Lohn, A. & Musser, M. (2022). How Much Longer Can Computing Power Drive Artificial Intelligence Progress?

Modis, T. (2012). Why the Singularity Cannot Happen. In Eden, A. et al. (eds.), Singularity Hypotheses: A Scientific and Philosophical Assessment. Springer.

Murphy, T. (2011). Can Economic Growth Last?

Muthukrishna, M. & Henrich, J. (2016). Innovation in the collective brain. Philosophical Transactions of the Royal Society B: Biological Sciences, 371: 20150192.

Smil, V. (2010). Energy Innovation as a Process: Lessons from LNG.

Sotala, K. (2017). Disjunctive AI scenarios: Individual or collective takeoff?

Tomasik, B. (2018). Astronomical suffering from slightly misaligned artificial intelligence.

Trammell, P. (2018). Which World Gets Saved.

Villalobos, P. et al. (2022). Will we run out of ML data? Evidence from projecting dataset size trends.

Vinding, M. (2016/2020). Reflections on Intelligence.

Vinding, M. (2017a/2020). The future of growth: Near-zero growth rates.

Vinding, M. (2017b/2022). A Contra AI FOOM Reading List.

Vinding, M. (2020). Chimps, Humans, and AI: A Deceptive Analogy.

Vinding, M. (2021). Some reasons not to expect a growth explosion.

Vinding, M. (2022). What does a future dominated by AI imply?

- ^

Other examples of people whose views conform reasonably well to Model 2 may be found in the following Contra AI FOOM Reading List. However, as I note shortly in the text, the views of some people on this list also conform well to Model 1 in some respects.

- ^

I have also argued for the plausibility of this model in my book Reflections on Intelligence (2016). For a brief summary and review of the book, see Sotala, 2017.

- ^

Cognitive abilities are strongly tied to culture, so it seems worth distinguishing cognitive talents vs. abilities (e.g. capacity for learning mathematics vs. actually having learned mathematics thanks to a surrounding culture that could teach it).

- ^

Likewise, Model 2 is more skeptical as to whether it is helpful to talk about “a point” at which humanity achieves AGI, as opposed to there being a continued, decade-long process of AI systems surpassing humans in ever more cognitive domains, where many of the most important superhuman cognitive abilities may be achieved early in that process, e.g. superhuman calculation and memory abilities that serve critical functions in the development of technology.

- ^

This would at least be the prediction of Model 1 in its most straightforward version; I discuss an alternative view below.

- ^

Note that diminishing returns in terms of growth rates need not imply diminishing returns in terms of absolute growth. For example, an economy whose growth rate steadily declines from above 5 percent to around 3 percent would obviously see diminishing growth rates, i.e. it would grow sub-exponentially, but the size of the economy may still be growing superlinearly, since absolute growth can increase despite decreasing growth rates (as the development of the global economy since the 1960s illustrates).

- ^

Some additional observations are presented in Vinding, 2021.

- ^

- ^

Note that the trend shown below extends further back than the 1960s, as the average annual growth rate in the 1950s was likewise above 5 percent.

- ^

The linear regression is obviously not a good fit (), but it still helps give some sense of the overall direction of economic growth rates over the last 60 years.

- ^

But can’t we still just scale our way to massive growth? This might be possible in theory, but a problem is that hitting limits in many kinds of efficiency gains and hardware progress (as described in the previous section) also means that further scaling, e.g. in terms of getting more computing power, will become increasingly expensive compared to what we are used to. Efficiency gains appear to have been an important driver of economic growth to date (see e.g. Gordon, 2012), and hence as efficiency gains begin to decline, we should not only expect to get less relative growth for the money that we invest into further growth, but we should arguably also expect the relative growth of our investment capital itself to begin to decline (compared to what we have been used to). So simply scaling up at unprecedented rates may be a lot easier said than done, as the costs may be prohibitive.

- ^

A similar thesis is defended in Cowen, 2011. Both Gordon’s and Cowen’s books focus on American growth, yet they still capture much of the global picture, since the US economy accounted for roughly 40 percent of the global economy in 1960, and hence it drove much of the global growth in that period.

Charles Jones likewise argues that most of the growth that the US economy has seen since the 1950s has been due to zero-to-one improvements that cannot be repeated, e.g. misallocations of resources that cannot be corrected much further. A smaller fraction of growth, around 20 percent, has been due to an increase in population, which is the only factor in the growth model presented by Jones that could keep on driving growth in the long term (Jones, 2022).

- ^

- ^

The graph below is based on estimates of global GDP over time from The World Bank (in the period 1960-2021) and from DeLong, 1998 (prior to 1960). The graph draws a smoothed-out line between the estimated growth rate at the respective years listed along the x-axis, between which the world economy has seen a full doubling.

- ^

That is, in terms of the underlying trendline, disregarding short-lasting recessions.

- ^

Estimates of global GDP in historical and prehistoric times are surely far from perfectly reliable. But a closer look at these estimates renders it unlikely that the estimates are so unreliable that it would turn out to be false that three doublings have historically led to consistently higher growth rates (prior to 1960), in terms of the average growth trend across doublings. The main reason is that it is clear that, prior to the modern age, it took a very long time to double the economy three times — so long that global civilization appears to have increased its ability to grow the economy considerably in the meantime.

Specifically, at any point prior to 1960 in DeLong’s estimates (under “Total World Real GDP”), one can try to work three doublings ahead and then see whether it is plausible that the underlying growth rate should be lower than at the starting point. A further test might be to compare the time it took for another doubling to occur from the three-doublings-later point relative to how long a single doubling took at the starting point.

- ^

Note also how declining or relatively stable growth rates need not imply that absolute growth will be modest compared to today. For example, if we imagine that economic growth were to continue at roughly the current pace (~3 percent per year) for another 200 years, then the 3 percent growth occurring in a single year 200 years from now would, in absolute terms, be greater than 11 times our entire economy today. (I should note that I do not believe that the most likely scenario is that growth will remain at around 3 percent in the coming centuries, but rather that growth rates will continue to gradually decline along the downtrend we have seen since the 1960s, as also projected by this review of long-term GDP projections and this PWC report.)

- ^

- ^

As for the possibility that quantum computers might overcome these constraints, physicist Scott Aaronson argues that quantum computers could solve certain problems much faster, but they would likely only do slightly better than conventional computers on most tasks.

- ^

Or more precisely: what they take to be the average growth rate of the compute demands of the largest AI models from around 2012 to 2020, cf. Lohn & Musser, 2022, p. 5.

- ^

At first glance, the data reviewed in Hobbhahn & Besiroglu (2022) seems to render it plausible that compute per dollar might double every 2-3 years going forward. But note that their projections are based on data from around 2005 to 2020, which appears to cover two different growth modes: one that occurred roughly around 2005 to 2010, and a considerably slower one that occurred around 2010 to 2020. When we look at this more recent trend in compute costs (cf. Lohn & Musser, 2022, p. 11), and combine it with the underlying hardware trends reviewed above, a two-year doubling time looks like an optimistic projection.

- ^

Cottier (2023) projects a slower and more realistic increase in the costs of AI models than do Lohn & Musser (2022), as Cottier bases his projections on more recent trends that already display much slower growth rates compared to those of 2012-2018. In other words, Lohn & Musser's extrapolation is really an exercise in extrapolating a past average growth rate that does not reflect more recent, and considerably slower, growth rates.

- ^

Again, as hinted in fn 17, it is important to distinguish relative growth from absolute growth. To say that future relative rates of progress are unlikely to match those of the past decade is consistent with thinking that progress will continue to be significant in absolute terms, including in terms of its absolute economic impact.
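The distinction between relative and absolute growth drawn in these footnotes can be checked with some simple arithmetic. The following is a minimal sketch under simplifying assumptions: the economy is normalized to 1.0 at the starting point, the 3 percent rate is held exactly constant for 200 years in the first scenario, and the second scenario lets the annual rate fall linearly from 5 to 3 percent over a hypothetical 60-year span. The function names are illustrative only.

```python
def single_year_growth_after(years: int, rate: float = 0.03) -> float:
    """Absolute growth in the year that follows `years` years of constant growth,
    measured in units of the starting economy (normalized to 1.0)."""
    size = (1.0 + rate) ** years
    return size * rate


def absolute_increments(start_rate: float = 0.05, end_rate: float = 0.03, years: int = 60) -> list[float]:
    """Yearly absolute growth of an economy whose growth rate declines linearly
    from `start_rate` to `end_rate` (an assumed, stylized trajectory)."""
    size = 1.0
    increments = []
    for t in range(years):
        rate = start_rate + (end_rate - start_rate) * t / (years - 1)
        increment = size * rate
        increments.append(increment)
        size += increment
    return increments


# Scenario 1: constant 3 percent growth for 200 years.
growth_in_year_200 = single_year_growth_after(200)
print(f"growth in a single year after 200 years: {growth_in_year_200:.2f}x the starting economy")

# Scenario 2: the growth rate falls, yet each year's absolute growth keeps rising.
increments = absolute_increments()
always_rising = all(later > earlier for earlier, later in zip(increments, increments[1:]))
print(f"absolute growth rises every year despite a falling rate: {always_rising}")
```

In the first scenario, the single-year increment comes out a bit above 11 times the starting economy. In the second, the economy grows sub-exponentially while its absolute yearly growth still increases throughout, under the stylized trajectory assumed here.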

What do you think about the perspective of "Model 2, but there's still explosive growth"? In particular, depending on what exactly you mean by cognitive abilities, I think it's pretty plausible to believe (1) cognitive abilities are a rather modest part of ability to achieve goals, (2) individual human cognitive abilities are a significant bottleneck among many others to economic and technological growth, (3) the most growth-relevant human abilities will be surpassed by machines quite continuously (not over millennia, but there isn't any one "big discrete jump") and (4) there will be explosive growth. (1)-(3) are your main examples of Model 2 predictions, while (4) says there's explosive growth anyway.

Some readings on this perspective:

In particular I think most of your arguments for Model 2 also apply to this perspective. The one exception is the observation that growth rates are declining, though this perspective would likely argue that this is because of the demographic transition, which breaks the positive feedback loop driving hyperbolic growth. (Not sure about the last part, haven't thought it through in detail.)

I wrote earlier that I might write a more elaborate comment, which I'll attempt now. The following are some comments on the pieces that you linked to.

I disagree with this series in a number of places. For example, in the post "This Can't Go On", it says the following in the context of an airplane metaphor for our condition:

As argued above, in terms of economic growth rates, we're in fact not accelerating, but instead seeing an unprecedented growth decline across doublings. Additionally, the piece, and the series as a whole, seems to neglect the possibility that growth rates might simply continue to decline very gradually, as they have over the last 5-6 decades.[1]

Relatedly, I would question some of the "growth-exploding" implications that the series implicitly assumes would follow from PASTA ("AI systems that can essentially automate all of the human activities needed to speed up scientific and technological advancement"). I've tried to explain some of my reservations here (more on this below).

This post relates to the PASTA point above; I guess the following is the key passage in this context:

I think this is by far the most plausible conjecture as to how growth rates could explode in the future. But I still think there are good reasons to be skeptical of such an explosion. For one, an AI-driven explosion of this kind would most likely involve a corresponding explosion in hardware (e.g. for reasons gestured at here and here), and there are both theoretical and empirical reasons to doubt that we will see such an explosion.

Indeed, growth rates are declining across various hardware metrics, and yet here we are not just talking about growth rates in hardware remaining at familiar levels, but presumably about an explosion in these growth rates. That seems to go against the empirical trends that we are observing in hardware, and against the impending end of Moore's law that we can predict with reasonable confidence based on theory.

Relatedly, there is the point that explosive economic growth likely would imply an explosion in energy production, but it is likewise doubtful whether progress in software/computation could unleash such an explosion (as argued here). More generally, even if we made a lot of progress in both computer software and hardware, it seems likely that growth would become constrained by other factors to a degree that precludes an economic growth explosion (Bryan Caplan makes similar points in response to Hanson's Age of Em; see point 9 here; see also Aghion et al., 2019, p. 260).

Moreover, as mentioned in an earlier comment, it is worth noting that the decline in growth rates that we have seen since the 1960s is not only due to decreasing population growth, as there are also other factors that have contributed, such as certain growth potentials that have been exhausted, and the consequent decline in innovations per capita.

One thing to notice about this model is that it conflicts with the historic decline in growth rates. In particular, the model is an ever worse fit with the observed growth rates of the last four decades. Roodman himself notes that his model has shortcomings in this regard:

(Perhaps also see Tom Davidson's reflections on Roodman's model.)

In other words, the model is not a good fit with the last 2-3 doublings of the world economy, and this is somewhat obscured when the model is evaluated across standard calendar time rather than across doublings; when looking at the model through the former lens, its recent mispredictions seem minor, but when seen across doublings — arguably the more relevant perspective — they become rather significant.

Given the historical data, it seems that a considerably better fit would be a model that predicts roughly symmetric growth rates around a peak growth rate found ca. 1960, as can be gleaned here (similar points are made in Modis, 2012, pp. 20-22). Such a model may be theoretically grounded in the expectation that there will be a point where innovations become increasingly harder to find. One would expect such a "peak growth rate" to occur at some point a priori, given the finite potential for growth (implying that the addition of a limiting factor that represents the finite nature of growth-inducing innovations would be a natural extension to Roodman's model). And empirical data then tentatively suggests that this peak lies in the past. (But again, peak growth rates being in the past is still compatible with increasing growth in absolute terms.)

I think my main comments on this model are captured above.

Thanks again for engaging, and feel free to comment on any points you disagree with. :)

E.g. when it says: "We're either going to speed up even more, or come to a stop, or something else weird is going to happen." This seems to overlook the kind of future that I alluded to above, which would amount to staying at roughly the same pace, yet continuing to slow down growth rates very gradually. This is arguably not a particularly "weird" or "wild" prospect. (It's also worth noting that the "come to a stop" would occur faster in the "speed up even more" scenario, as it would imply that we'll hit ultimate limits earlier; slower growth would imply that non-trivial growth rates end later.)

Thanks for this, it's helpful. I do agree that the decline in growth rates is significant evidence for your view.

I disagree with your other arguments:

I don't have a strong take on whether we'll see an explosion in hardware efficiency; it's plausible to me that there won't be much change there (and also plausible that there will be significant advances, e.g. getting 3D chips to work -- I just don't know much about this area).

But the central thing I imagine in an AI-driven explosion is an explosion in the amount of hardware (i.e. way more factories producing chips, way more mining operations getting the raw materials, etc), and an explosion in software efficiency (see e.g. here and here). So it just doesn't seem to matter that much if we're at the limits of hardware efficiency.

I realize that I said the opposite in a previous comment thread, but right now it feels consistent with explosive growth to say that innovations per capita are going to decline; indeed I agree with Scott Alexander that it's hard to imagine it being any other way. The feedback loop for explosive growth is output -> people / AI -> ideas -> output, and a core part of that feedback loop is about increasing the "population".

(Though the fact that I said the opposite in a previous comment thread suggests I should really delve into the math to check my understanding.)

(The rest of this is nitpicky details.)

Incidentally, the graphs you show for the decline in innovations per capita start dropping around 1900 (I think, I am guessing for the one that has "% of people" as its x-axis), which is pretty different from the 1960s.

Also, I'm a bit skeptical of the graph showing a 5x drop. It's based off of an analysis of a book written by Asimov in 1993 presenting a history of science and discovery. I'm pretty worried that this will tend to disadvantage the latest years, because (1) there may have been discoveries that weren't recognized as "meeting the bar", since their importance hadn't yet been understood, (2) Asimov might not have been aware of the most recent discoveries (though this point could also go the other way), and (3) as time goes on, discoveries become more and more diverse (there are way more fields) and hard to understand without expertise, and so Asimov might not have noticed them.

Regarding explosive growth in the amount of hardware: I meant to include the scale aspect as well when speaking of a hardware explosion. I tried to outline one of the main reasons I'm skeptical of such an 'explosion via scaling' here. In short, in the absence of massive efficiency gains, it seems even less likely that we will see a scale-up explosion in the future.

That's right, but that's consistent with the per capita drop in innovation being a significant part of the reason why growth rates gradually declined since the 1960s. I didn't mean to deny that total population size has played a crucial role, as it obviously has and does. But if innovations per capita continue to decline, then even a significant increase in effective population size in the future may not be enough to cause a growth explosion. For example, if the number of employed robots continues to grow at current rates (roughly 12 percent per year), and if future robots eventually come to be the relevant economic population, then declining rates of innovation/economic productivity per capita would mean that the total economic growth rate still doesn't exceed 12 percent. I realize that you likely expect robot populations to grow much faster in such a future, but I still don't see what would drive such explosive growth in hardware (even if, in fact especially if, it primarily involves scaling-based growth).
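To make the arithmetic behind the 12 percent ceiling explicit, here is a minimal sketch. It assumes (my simplification, not something stated above) that total output is simply the robot population times output per robot, and that "declining productivity per capita" means output per robot is flat or falling. The function name and the per-capita values are hypothetical; only the 12 percent figure comes from the comment.

```python
def total_growth_rate(population_growth: float, per_capita_growth: float) -> float:
    """Growth rate of total output, assuming output = population * output per capita."""
    return (1 + population_growth) * (1 + per_capita_growth) - 1


robot_growth = 0.12  # robot population growth quoted in the comment above

# Hypothetical per-capita productivity changes (flat, slightly falling, falling faster).
for per_capita in (0.0, -0.01, -0.03):
    total = total_growth_rate(robot_growth, per_capita)
    print(f"per-capita change {per_capita:+.2f} -> total growth {total:.4f}")
```

Under these assumptions total growth equals 12 percent only when output per robot is flat, and falls below it whenever output per robot declines. If per-capita productivity instead grew, the ceiling would not hold, which is the crux of the disagreement.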

That makes sense.

On the other hand, it's perhaps worth noting that individual human thinking was increasingly extended by computers after ca. 1950, and yet the rate of innovation per capita still declined. So in that sense, the decline in progress could be seen as being somewhat understated by the graphs, in that the rate of innovation per dollar/scientific instrument/computation/etc. has declined considerably more.

I am confused by your argument against scaling.

My understanding of the scale-up argument is:

In some sense I agree with you that you have to see efficiency improvements, but the efficiency improvements are things like "you can create new skilled robots in days, compared to the previous SOTA of 20 years". So if you accept (3), then I think you are already accepting massive efficiency improvements.

I don't see why current robot growth rates are relevant. When you have two different technologies A and B where A works better now, but B is getting better faster than A, then there will predictably be a big jump in the use of B once it exceeds A, and extrapolating the growth rates of B before it exceeds A is going to predictably mislead you.

(For example, I'd guess that in 1975, you would have done better thinking about how / when the personal computer would overtake other office productivity technologies, perhaps based on Moore's law, rather than trying to extrapolate the growth rate of personal computers. Indeed, according to a random website I just found, it looks like the growth rate accelerated till the EDIT: 1980s, though it's hard to tell from the graph.)

(To be clear, this argument doesn't necessarily get you to "transformative impact on growth comparable to the industrial revolution", I'd guess you do need to talk about innovations to get that conclusion. But I'm just not seeing why you don't expect a ton of scaling even if innovations are rarer, unless you deny (3), but it mostly seems like you don't deny (3).)

I agree with premise 3. Where I disagree more comes down to the scope of premise 1.

This relates to the diverse class of contributors and bottlenecks to growth under Model 2. So even though it's true to say that humans are currently "the state-of-the-art at various tasks relevant to growth", it's also true to say that computers and robots are currently "the state-of-the-art at various tasks relevant to growth". Indeed, machines/external tools have been (part of) the state-of-the-art at some tasks for millennia (e.g. in harvesting), and computers and robots in particular have been the state-of-the-art at various tasks relevant to growth for decades (e.g. in technical calculations and manufacturing). And the proportion of tasks at which machines have been driving growth has been gradually increasing (the pictures of Model 2 were an attempt to illustrate this perspective). Yet despite superhuman machines (i.e. machines that are superhuman within specific tasks) playing an increasing role in pushing growth over the past decades, economic growth rates not only failed to increase, but decreased almost by a factor of 2. That is, robots/machines have already been replacing humans at the growth frontier across various tasks in the way described in premises 1-4, yet we still haven't seen growth increase. So a key question is why we should expect future growth/displacement of this kind to be different. Will it be less gradual? If so, why?

In short, my view is that humans have become an ever smaller part of the combined set of tools pushing growth forward — such that we're in various senses already a minority force, e.g. in terms of the lifting of heavy objects, performing lengthy math calculations, manufacturing chips, etc. — and I expect this process to gradually continue. I don't expect a critical point at which growth rates suddenly explode because the machines themselves are already doing such a large share of the heavy lifting, and an increasing proportion of our key bottlenecks to growth are (already) their bottlenecks to faster growth (which must again be distinguished from claims about absolute room for growth; there may be plenty of potential for growth in various domains without there being an extremely fast way to realize that potential).

Not if B is gradually getting better than A at specific growth-relevant tasks, and if B is getting produced and employed roughly in proportion to how fast it is getting better than A at those specific tasks. In that case, familiar rates of improvement (of B over A) could imply familiar growth rates in the production and employment of B in the future.

Just to be clear, in one sense I do expect to see a ton of scaling compared to today; I just don't expect scaling growth rates to explode, such that we see a doubling in a year or faster. In a future robot population that is much larger than the current one, consistent 12 percent annual growth would still amount to producing more robots in a single year than had been produced throughout all history 20 years earlier.
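The "more robots in a single year than in all history 20 years earlier" claim can be checked with a few lines. This is a rough sketch that assumes constant compound growth of the robot stock and no scrappage (so the stock equals cumulative production); the function name is hypothetical.

```python
def single_year_vs_history(growth_rate: float, lag_years: int) -> tuple[float, float]:
    """Return (robots added in the latest year, total stock lag_years earlier).

    Both values are fractions of the current stock, assuming constant
    compound growth and no scrappage.
    """
    added_this_year = 1 - 1 / (1 + growth_rate)
    stock_back_then = 1 / (1 + growth_rate) ** lag_years
    return added_this_year, stock_back_then


added, earlier = single_year_vs_history(0.12, 20)
print(f"added in latest year: {added:.4f} of current stock")
print(f"entire stock 20 years earlier: {earlier:.4f} of current stock")
```

At 12 percent growth the latest year's additions come to roughly 10.7 percent of the current stock, while the entire stock 20 years earlier was roughly 10.4 percent of it, so the claim holds, though only narrowly at exactly a 20-year lag.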

I don't disagree with any of the above (which is why I emphasized that I don't think the scaling argument is sufficient to justify a growth explosion). I'm confused why you think the rate of growth of robots is at all relevant, when (general-purpose) robotics seem mostly like a research technology right now. It feels kind of like looking at the current rate of growth of fusion plants as a prediction of the rate of growth of fusion plants after the point where fusion is cheaper than other sources of energy.

(If you were talking about the rate of growth of machines in general I'd find that more relevant.)

By "I am confused by your argument against scaling", I thought you meant the argument I made here, since that was the main argument I made regarding scaling; the example with robots wasn't really central.

I'm also a bit confused, because I read your arguments above as being arguments in favor of explosive economic growth rates from hardware scaling and increasing software efficiency. So I'm not sure whether you believe that the factors mentioned in your comment above are sufficient for causing explosive economic growth. Moreover, I don't yet understand why you believe that hardware scaling would come to grow at much higher rates than it has in the past.

If we assume innovations decline, then it is primarily because future AI and robots will be able to automate far more tasks than current AI and robots (and we will get them quickly, not slowly).

Imagine that currently technology A that automates area X gains capabilities at a rate of 5% per year, which ends up leading to a growth rate of 10% per year.

Imagine technology B that also aims to automate area X gains capabilities at a rate of 20% per year, but is currently behind technology A.

Generally, at the point when B exceeds A, I'd expect growth rates of X-automating technologies to grow from 10% to >20% (though not necessarily immediately, it can take time to build the capacity for that growth).

For AI, the area X is "cognitive labor", technology A is "the current suite of productivity tools", and technology B is "AI".

For robots, the area X is "physical labor", technology A is "classical robotics", and technology B is "robotics based on foundation models".
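As a rough illustration of the A/B scenario above, the following sketch computes when technology B would catch up with technology A under constant compound improvement. The 5% and 20% rates are the ones from the example; the starting gap (B at one tenth of A's capability) is purely hypothetical, as is the function name. The sketch only covers capability levels; it says nothing about how quickly adoption growth would then move from 10% to above 20%.

```python
import math


def years_until_crossover(a_level: float, b_level: float,
                          a_rate: float, b_rate: float) -> float:
    """Years until B's capability matches A's, assuming constant compound growth."""
    if b_level >= a_level:
        return 0.0  # B is already at or ahead of A
    if b_rate <= a_rate:
        return math.inf  # B never catches up
    return math.log(a_level / b_level) / math.log((1 + b_rate) / (1 + a_rate))


# Hypothetical starting gap: B currently has 10% of A's capability.
years = years_until_crossover(a_level=1.0, b_level=0.1, a_rate=0.05, b_rate=0.20)
print(f"B catches up with A after about {years:.1f} years")
```

The point of the sketch is that the crossover date depends on the current gap and the difference in improvement rates, neither of which can be read off from B's present deployment numbers.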