I'm sure this is a very unpopular take, but I feel obliged to share it: I find the "pausing AI development is impossible" arguments extremely parallel to the "economic degrowth in rich countries is impossible" arguments, and the worst consequences for humanity (and their probabilities) of not doing either are not too dissimilar. I find it baffling (and epistemically bad) how differently these debates are treated within EA.

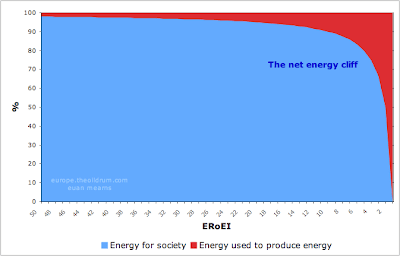

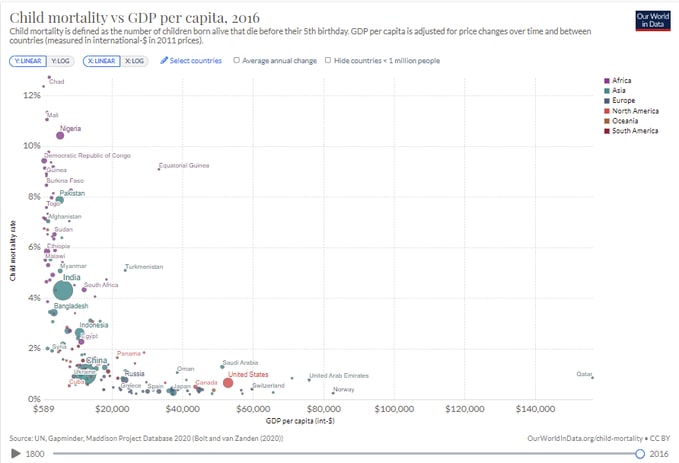

Although parallel arguments can be made for and against both, EA has dismissed the possibility of degrowing the economy in rich countries without engaging with the arguments. Note that degrowthers have good reasons to believe that continued economic growth would lead to ecological collapse, which could be considered an existential risk: although it would clearly not lead to the extinction of humanity, it may very well permanently and drastically curtail its potential. The EA community has not addressed these reasons; it has just argued that economic growth is good and that degrowth in rich countries is impossible anyway. Sound familiar? "AI development is good, and stopping it is impossible anyway."

I have had this impression for a long time, and I would have liked to elaborate on it in a proper post, but I don't have the time. I'm probably not the only one with this impression, so I would ask readers to argue and debate below. Especially if you disagree, explain why or upvote a comment that roughly reflects your view rather than downvoting. Downvoting controversial views only hides them rather than confronting them.

[Additions:

I want to make clear that I find the term "degrowth" misleading, and that many people in that movement use terms like a-growth, post-growth, or growth agnosticism.

I want to thank the users who have engaged and will engage in the discussion! This was the main objective of the post, thanks.]

[Addition 2:

I think this tweet (and Holly's repost) makes the comparison even clearer.]

In my mind there are 2 main differences:

I will say, if I thought p(doom | climate) > 10%, with climate timelines of 12 years, then I would be in favor of degrowth policies that seemed likely to reduce this risk. I just think that in reality, the situation is very different from this.

The issue is not only climate change, here. We are in dangerous territory for most of the planetary boundaries.

One of the points is that EAs do not seem to engage with the large, close-to-existential risks that degrowthers and the like have in mind. It is true that they have not fleshed out to what extent these risks are existential, but that is because the risks are large enough to be worth worrying about regardless. See "'Is this risk actually existential?' may be less important than we think."

I like your second point. But still, even if it...