Summary

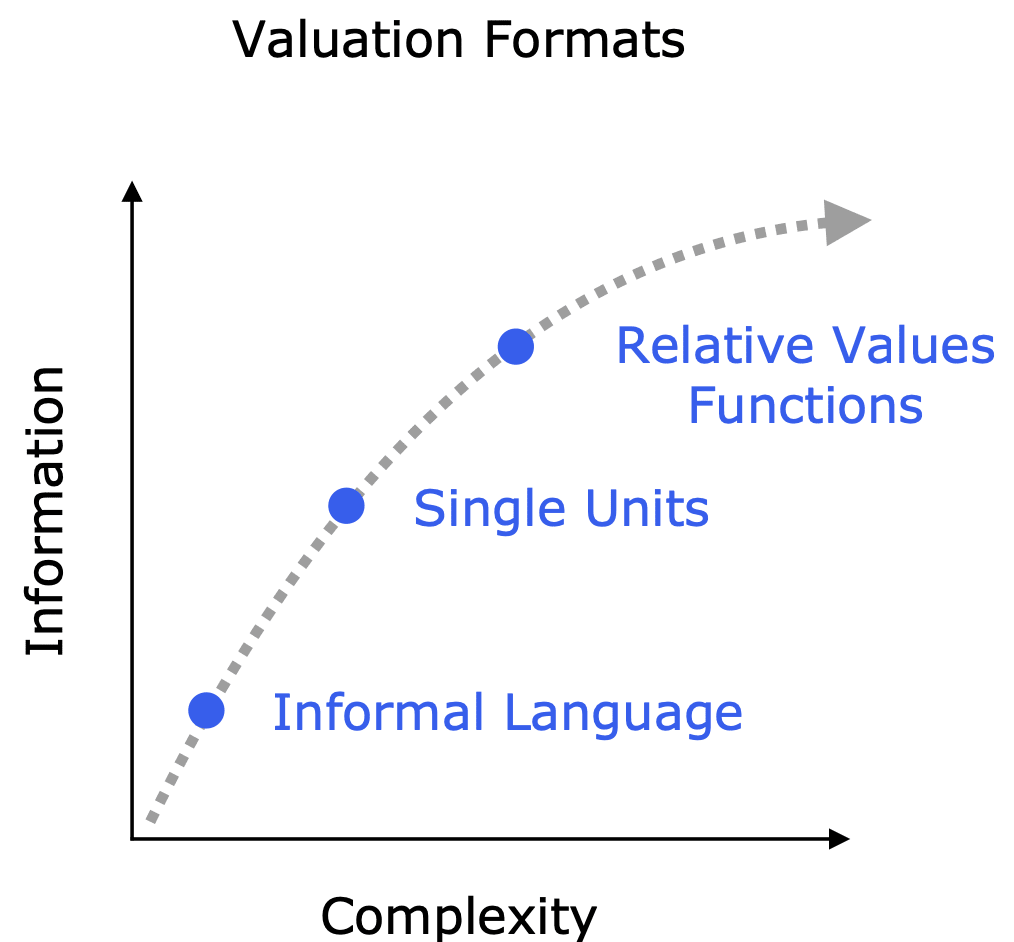

Quantifying value in a meaningful way is one of the most important yet challenging tasks for improving decision-making. Traditional approaches rely on standardized value units, but these falter when options differ widely or lack an obvious shared metric. We propose an alternative called relative value functions, which use programming functions to estimate the relationships between items' values rather than absolute quantities. This method captures detailed information about correlations and uncertainties that standardized value units miss. More specifically, we put forward value ratio formats in univariate and multivariate forms.

Relative value functions ultimately shine where single value units struggle: valuing diverse items in situations with high uncertainty. Their flexibility and elegance make them a good fit for collective estimation and forecasting, and particularly well-suited to ambitious, large-scale valuation, like estimating large utility functions.

While promising, relative value functions also pose challenges. They require specialized knowledge to develop and understand, and will require new forms of software infrastructure. Visualization techniques are needed to make their insights accessible, and training resources must be created to build modeling expertise.

Writing programmatic relative value functions can be much easier than one might expect, given the right tools. We show some examples using Squiggle, a programming language for estimation.

We at QURI are currently building software to make relative value estimation usable, and we expect to share some of this shortly. We also encourage others to try other setups.

If we aim to eventually generate estimates of things like:

- The total value of all effective altruist projects;

- The value of 100,000 potential personal and organizational interventions; or

- The value of each political bill under consideration in the United States;

then the use of relative value assessments may be crucial.

Presentation & Demo

I recently gave a presentation on relative values, as part of a longer presentation on our work at QURI. It includes a short walk-through of an experimental app we're working on to express these values.

The Relative Values part of the presentation runs from 22:25 to 35:59.

This post gives a much more thorough description of this work than the presentation does, but the example in the presentation may make the rest of this post easier to follow.

Challenges with Estimating Value with Standard Units

The standard way to measure the value of items is to come up with standardized units and measure the items in terms of these units.

- Many health benefits are estimated in QALYs or DALYs

- Consumer benefit has been measured in willingness to pay

- Longtermist interventions have occasionally been measured in “Basis Points”, Microdooms and Microtopias

- Risky activities can be measured in Micromorts

- COVID activities have been measured in MicroCOVIDs

Let’s call these sorts of units “value units” as they are meant as approximations or proxies of value. Most of these (QALYs, Basis Points, Micromorts) can more formally be called summary measures, but we’ll stick to the term unit for simplicity.

These sorts of units can be very useful, but they’re still infrequently used.

- QALYs and DALYs don’t have many trusted, aggregated tables. Often there are specific estimates in specific research papers, but there aren’t many long aggregated tables for public use.

- There are very few tables of personal intervention value estimates, like the net benefit of life choices.

- Very few business decisions are made with reference to clear units of value. For example, “Which candidate should we hire?”, “Should we implement X feature?”, “Which marketing campaign should we go with?”, etc.

- Longtermist interventions are very rarely estimated in clear units. Perhaps partly because of this, there is fairly little quantified longtermist valuation.

If explicitly estimating value is useful, why is it so unusual?

We think one reason is that these "standard unit valuations" often miss out on a lot of critical information. They don't capture correlations or joint distributions between items. This deficiency leads to major compromises and awkward situations, causing human modelers to either give up or generate poor results.

We’ll go through two thought experiments to demonstrate.

Thought Experiment #1: Comparing Research Documents

Let’s imagine using units to evaluate all scientific research. Where would we start? We could try to come up with some measure like, “a quality-adjusted research paper”. But papers about global health are very different from those on AI transparency. And papers are very different from books, presentations, or Tweets.

There’s an intense tradeoff between generality and complexity. On one hand, we could try to evaluate everything in terms of a few units, but then most of the estimates would be extremely uncertain. If we estimate everything in terms of “microdoom equivalents”, then our global health measures will be almost meaningless.

Alternatively, we could try to use 100+ units, with a huge rule book of which things can be evaluated in terms of which units. Subsequently, a distinct system would be required to convert these estimations into standard units for comparisons between clusters. Determining the optimal units prior to conducting the estimation is challenging, and altering the selected units after estimation would entail significant costs. Nuño outlined one related approach here.

So on the one hand, we could use one unit and get poor estimates. On the other, we could use a bunch of units but then need to enforce a lengthy, slow-moving, and still suboptimal ontology.

Below is a simple diagram to help demonstrate this decision process.

Thought Experiment #2: Dollars, in QALYs

How valuable is it for an average human to be given $1, in terms of a unit like QALYs? It’s very uncertain. First, people’s incomes vary a lot. Second, even when just referencing a specific person, it’s really tough to say how money converts to QALYs.

Let’s assume that we use distributions for estimation.

Suppose we estimate that $1 for an “average person” equates to a rough range of 0.001 to 100 (90% CI) “quality-adjusted life minutes”.

Is $5 better than $1? Not obviously, according to such a unit.

Our estimate for $5 might be “0.005 to 500”. However, without any information on the correlation between these two distributions, it is unclear how the two estimates relate to each other.

Readers might conclude that we are unsure whether $5 is more valuable than $1. If they treated the two distributions as uncorrelated, they might think there’s roughly a ⅓ chance that the $1 has a higher value.
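As a rough check of the one-in-three figure, here is a minimal Monte Carlo sketch in Python (standard library only; Python is our choice here, not something the post uses). It assumes each range is a lognormal with that 90% interval, which is how Squiggle interprets `lo to hi` for positive bounds. Both versions give $5 exactly the same marginal range (0.005 to 500). They differ only in whether the $5 value is an independent draw or the same draw as $1, scaled by 5.

```python
import math
import random
from statistics import NormalDist

Z95 = NormalDist().inv_cdf(0.95)  # ~1.645, so [lo, hi] is a 90% interval


def lognormal_from_ci(lo, hi, rng):
    """Draw one sample from a lognormal whose 90% interval is [lo, hi]."""
    mu = (math.log(lo) + math.log(hi)) / 2
    sigma = (math.log(hi) - math.log(lo)) / (2 * Z95)
    return rng.lognormvariate(mu, sigma)


def prob_one_dollar_wins(correlated, n=50_000, seed=0):
    """Fraction of samples in which $1 is judged more valuable than $5."""
    rng = random.Random(seed)
    wins = 0
    for _ in range(n):
        v1 = lognormal_from_ci(0.001, 100, rng)  # value of $1
        if correlated:
            v5 = 5 * v1  # $5 tied to the same draw as $1
        else:
            v5 = lognormal_from_ci(0.005, 500, rng)  # independent draw
        wins += v1 > v5
    return wins / n


print(prob_one_dollar_wins(correlated=False))  # about 0.37
print(prob_one_dollar_wins(correlated=True))   # 0.0
```

Nothing about either marginal range changes between the two runs. Only the joint structure does, and that alone decides whether $1 ever looks better than $5.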

Lacking proper correlations, we cannot perform even simple arithmetic operations. Basic procedures like addition and division would yield distributions with significantly wider tails than what is accurate. (See Appendix 1 for an example)

This is not an isolated example. In practice, correlations are commonly found between interventions. Some research papers may be clearly more valuable than others, despite both being highly uncertain in terms of shared value units. The absence of correlation data can make certain decisions appear much more uncertain than they truly are.

Takeaways

We’ve discussed estimating value in standard units. This works for homogeneous items with highly relevant known units but breaks down if either the items are heterogeneous or if there’s no appropriate known unit.

Another option is to provide a set of units, with conversion factors between them. This allows for the evaluation of more heterogeneous items but introduces substantial complexity.

| | Can value similar items? | Can value dissimilar items? | Imposed Structure |
|---|---|---|---|
| Single Unit | Yes, if unit exists. | Very poorly | Low. Just the choice of unit. |
| Set of Units | Yes, if unit exists. | Yes, if all elements fit well with units. | High. Many units, each for different sets of items. |

As demonstrated in this table, both approaches have severe downsides.

Relative Values, with Value Ratios

Introduction

One solution to the downside of standard units is relative values: comparing items’ values directly to each other.

To be more specific, we suggest using the ratios of values of items, or value ratios. As with all ratios, these are unitless.

In practice, this works by having human modelers provide estimates for the ratios of every possible pair of items. This could be done by hand, but programming functions would make it scalable to large combinations of items. Storing estimates with programming code is unusual, but powerful.

Minor Aside:

Value ratios are actually a generalization of using standard value units. With standard units, valuations are estimates in the format v(item) / v(unit). With value ratios, by contrast, modelers could generate results for the ratio of any pair of items. This can of course include standard units, but it’s not limited to them.

It’s important to use probability distributions instead of point values when dealing with heterogeneous and uncertain estimates. Different comparisons will have wildly different uncertainty ranges, and it’s important to represent this.

In the rest of this section, we’ll go over a concrete example, and then discuss the costs and benefits of this approach.

Comparison Tables

Say we want to compare the value of the following items:

- $1

- $5

- A 0.001% chance of death

- A 0.005% chance of death

Instead of creating one unit, let’s make a table of ratio comparisons.

Let’s imagine that we think that $5 is roughly (4.8 to 5) times as valuable as $1, and a 0.005% chance of death is roughly (4.9 to 5) times as bad as a 0.001% chance. Say we have some unit conversion, death_to_dollars, to convert a 0.001% chance of death to dollars. death_to_dollars is probably some very uncertain distribution, like (10 to 100,000).

Here’s a table where each cell is an estimate of the value of the row item divided by the value of the column item. The top-right triangle is just the reciprocal of the bottom-left triangle, so it is not fully shown. The diagonal entries are all exactly a point mass at 1, as each is an item's value divided by itself.

| | $1 | $5 | 0.001% chance of death | 0.005% chance of death |
|---|---|---|---|---|
| $1 | 1 | 1/(4.8 to 5) | -1/death_to_dollars | … |
| $5 | 4.8 to 5 | 1 | … | … |
| 0.001% chance of death | -1*death_to_dollars | -1*death_to_dollars/(4.8 to 5) | 1 | … |
| 0.005% chance of death | -1*(4.9 to 5)*death_to_dollars | -1*(4.9 to 5)*death_to_dollars/(4.8 to 5) | 4.9 to 5 | 1 |
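A table like this can be generated mechanically. The following is a hypothetical Python sketch (standard library only, not QURI's tooling) using the ranges from the text: (4.8 to 5), (4.9 to 5), and death_to_dollars as (10 to 100,000), each treated as a lognormal 90% interval. It draws shared samples once, computes every item's value per sample, and divides sample by sample, so each cell keeps the correlations between items.

```python
import math
import random
import statistics
from statistics import NormalDist

Z95 = NormalDist().inv_cdf(0.95)


def ci(lo, hi, n, rng):
    """n samples from a lognormal with 90% interval [lo, hi] (like Squiggle's `lo to hi`)."""
    mu = (math.log(lo) + math.log(hi)) / 2
    sigma = (math.log(hi) - math.log(lo)) / (2 * Z95)
    return [rng.lognormvariate(mu, sigma) for _ in range(n)]


N = 10_000
rng = random.Random(42)
k = ci(4.8, 5, N, rng)                      # $5 relative to $1
m = ci(4.9, 5, N, rng)                      # 0.005% death chance relative to 0.001%
death_to_dollars = ci(10, 100_000, N, rng)  # dollar cost of a 0.001% death chance

# Value of each item in every joint sample; all items reuse the same draws.
values = {
    "$1": [1.0] * N,
    "$5": k,
    "0.001% death": [-d for d in death_to_dollars],
    "0.005% death": [-mi * d for mi, d in zip(m, death_to_dollars)],
}


def ratio(row, col):
    """Samples of value(row) / value(col), one per joint draw."""
    return [r / c for r, c in zip(values[row], values[col])]


table = {(r, c): ratio(r, c) for r in values for c in values}

for r in values:
    print(r.ljust(14), [round(statistics.median(table[(r, c)]), 3) for c in values])
```

Each printed row lists medians of value(row) / value(column). The full sample lists in `table` carry the rest of each distribution: the two death-risk items stay within a tight ratio of each other even though either one is very uncertain in dollar terms.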

It might be rare for someone to want to know the value of $5 in terms of “0.001% chance of death”, but in this setup, viewers can access that if they want it. When they do, they’ll see that it’s very uncertain. But the close relationships between $1 and $5, and between a 0.001% and a 0.005% chance of death, remain clearly visible.

It would now be straightforward to add more items to this list, such as:

- 1 Micromort (a one-in-a-million chance of death)

- The risk of death from a certain risky activity, like BASE jumping.

- The total of both the health and financial costs of BASE jumping.

- $1,000.00

Note that if we were to convert everything into “one unit”, that would effectively be one single row of the grid. If we were to try to use a few units, that would force us to split our list up into clusters. Items like “The total of both the health and financial costs of BASE jumping” might not fit well into any one cluster. But with the full grid, we don’t have these disadvantages.

Programming Functions

Manually constructing these tables is needlessly time-consuming. Coding simplifies this process. Most of the task above involves estimating three variables and reapplying them appropriately, which software handles easily.

The key function we need is one with approximately the following type definition:

fn(id1, id2) => distribution

Such a function can easily generate the full table if desired, but often we just need a small subset of it.

Writing such functions doesn’t have to be very laborious. The key parts can be just a simple list of relationships. Here's some example pseudocode.

dollar1 = 1 // The value of $1. This is the unit, so we're marking as 1

dollar5 = dollar1 * (4.7 to 5) // The value of $5. Assuming (steeply) diminishing marginal returns

costOfp001DeathChance = 10 to 10k // Cost of a 0.001% chance of death, in dollars

chanceOfDeath001 = -1 * costOfp001DeathChance * dollar1 // Cost of a 0.001% chance of death

chanceOfDeath005 = chanceOfDeath001 * (4.7 to 5) // Cost of a 0.005% chance of death

... boilerplate code ...

We give a simple working example using Squiggle in Appendix 2.

"Functions as Estimates" are more generally a powerful mechanism to embed complicated beliefs. We believe this form deserves more attention, and intend to write more about them specifically in future posts. Relative value functions illustrate some of the possibilities of such an approach.

Specific Formats

There are multiple specific options in how to represent value ratios using probability distributions. Here are a few, each with a slightly different programming type definition.

Value Ratio (Univariate)

fn(id1, id2) => distribution

A single probability distribution meant to represent the quotient of two valuations. This is similar to the ratio form X/Y. Existing valuations that use standard units often use univariate value ratios.

The univariate format is very useful for presentation (i.e. showing to users). Even in more advanced cases, the X/Y univariate format will often be shown.

One important hindrance is that this format can behave poorly if the value of either compared item has probability mass at negative values, zero, or infinity. In those situations, bivariate or multivariate approaches are preferred.
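To illustrate why probability mass near zero is a problem, this Python sketch (hypothetical numbers) divides a known value of 2 by a value that is normally distributed around 1 with standard deviation 1, so it has some mass near and below zero. The resulting univariate ratio has extreme magnitudes and a sizeable share of negative samples, even though neither input is unusual on its own.

```python
import random

rng = random.Random(3)
N = 10_000
numerator = 2.0  # value of item Y, assumed known exactly (hypothetical)
denominator = [rng.gauss(1.0, 1.0) for _ in range(N)]  # value of item X; can be ~0 or negative

# Univariate X/Y-style ratio, one per sample.
ratios = [numerator / x for x in denominator]

largest_magnitude = max(abs(r) for r in ratios)
share_negative = sum(r < 0 for r in ratios) / N

print(f"largest |ratio|: {largest_magnitude:.0f}")
print(f"share of negative ratios: {share_negative:.2f}")  # close to P(X < 0), about 0.16
```

A bivariate representation would keep the two well-behaved inputs as a pair instead of collapsing them into this heavy-tailed quotient.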

Value Ratio (Bivariate)

fn(id1, id2) => (distribution, distribution)

A joint density of two probability distributions, meant to represent the ratio of two valuations. This is similar to the ratio form X:Y. The representation is scale-invariant: for example, the ratio “5:8” is equivalent to the ratio “50:80”.

One downside of the bivariate approach is that it assumes the modelers broadly agree on which items have positive value and which have negative value. With univariate distributions, this distinction is left ambiguous, which can be preferable in some situations.

For example, say two modelers are comparing two clean meat interventions. One modeler suspects that better clean meat work actually leads to net global harm, because of its net impact on insects. The modelers agree on the ratio of these two interventions, but one thinks they are both negative, while the other expects them to be positive. In this case, their univariate distributions could be very similar, but their bivariate distributions will be approximately sign-flipped versions of each other.
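A toy version of the clean meat example in Python, with hypothetical numbers: two modelers agree that intervention B is three times intervention A in magnitude, but disagree on the sign. Their univariate ratio samples come out identical, while their bivariate samples are mirror images through zero.

```python
import random

rng = random.Random(11)
N = 1_000

# Shared beliefs about magnitude (hypothetical): A is somewhere in 1..2, B is 3x A.
magnitude_a = [rng.uniform(1, 2) for _ in range(N)]

# Modeler 1 thinks both interventions are net positive; modeler 2 thinks both are net negative.
modeler1 = [(a, 3 * a) for a in magnitude_a]
modeler2 = [(-a, -3 * a) for a in magnitude_a]


def univariate(pairs):
    """X/Y style: one ratio B/A per sample; a shared sign cancels out."""
    return [b / a for a, b in pairs]


def mean_pair(pairs):
    """Summarize a bivariate X:Y sample by the mean of each component."""
    n = len(pairs)
    return (sum(a for a, _ in pairs) / n, sum(b for _, b in pairs) / n)


print(univariate(modeler1) == univariate(modeler2))  # True: identical B/A ratios
print(mean_pair(modeler1), mean_pair(modeler2))      # same magnitudes, opposite signs
```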

Value Ratio (Multivariate)

fn([id]) => [distribution]

A joint density of more than two probability distributions, representing the ratios of multiple valuations.

Depending on how value ratio programming functions are written, it could either be trivial to go from univariate outputs to multivariate outputs, or it might be very difficult.

Full multivariate distributions clearly have more information than the univariate or bivariate approaches, but it would take additional work to make good use of this information.

Advantages

High information storage capacity

The primary advantage of relative value formats is their ability to hold more information than simple lists of values in basic units. As stated above, a single unit would correspond to just a single row in a full value ratio comparison table; the rest is additional information.

In real-world decision-making, choices often involve comparisons between similar options. Whether it's a business comparing offerings from two appliance vendors or a donor choosing from a pool of researchers in a comparable field, these decisions can often be understood as relative values between similar options. The extra information that relative value formats provide mostly concerns comparisons between similar items, so it is useful in exactly these situations. This precision can guide decision-makers towards locally optimal decisions, which is a significant advantage.

Ease of getting started

One of the major drawbacks of units is the frequent lack of an appropriate unit. An estimator might be ready to estimate the value of several items, but if they don’t know of a great unit to use for comparison, they might easily give up.

In contrast, with relative values, estimators just need to grasp some reasonable definition of value. After that, they can do the majority of the work by estimating the values of different projects against each other. If a great unit later becomes popular, it would be easy to add that unit to their list of estimated items.

Arguably, units like QALYs should be treated as presentation methods rather than fundamental information storage mechanisms. Estimators shouldn’t need to get the units right upfront, before doing the majority of their work; it should be easy to add conversions later to whatever formats or units viewers find most useful.

Composability

Multiple relative value functions, each covering mostly different sets of items, can be combined approximately, provided their item sets overlap somewhat. This stitching may not be as accurate as a full function, but in many instances the approximation could be accurate enough to be useful.

Thus, different parties can independently focus on items they find interesting, and their work can still be integrated into a larger system.

If attempted with units, this would necessitate sets of units, which would impose a lot of overhead and coordination.
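Stitching could look something like the following Python sketch, with hypothetical items and ranges. Two teams each publish a ratio function over their own items, and both cover a shared `anchor` item, so value(x) / value(y) is approximated as [value(x) / value(anchor)] × [value(anchor) / value(y)]. The sketch pairs the two models' samples as if they were independent; that is the approximation, since any correlation across the two models is lost.

```python
import math
import random
import statistics
from statistics import NormalDist

Z95 = NormalDist().inv_cdf(0.95)
N = 5_000
rng = random.Random(7)


def ci(lo, hi):
    """N samples from a lognormal with 90% interval [lo, hi]."""
    mu = (math.log(lo) + math.log(hi)) / 2
    sigma = (math.log(hi) - math.log(lo)) / (2 * Z95)
    return [rng.lognormvariate(mu, sigma) for _ in range(N)]


def make_ratio_fn(values):
    """Turn {item: samples} into fn(id1, id2) -> samples of value(id1) / value(id2)."""
    def fn(id1, id2):
        return [x / y for x, y in zip(values[id1], values[id2])]
    return fn


# Hypothetical model from team A, in units of project_a; "anchor" is the shared item.
fn_a = make_ratio_fn({"project_a": [1.0] * N, "anchor": ci(2, 20)})
# Hypothetical model from team B, in units of the same anchor.
fn_b = make_ratio_fn({"anchor": [1.0] * N, "project_b": ci(0.5, 3)})


def stitch(fn_left, fn_right, anchor):
    """Approximate fn(id1, id2) for id1 known to fn_left and id2 known to fn_right
    by chaining through an anchor item both cover. Samples from the two models
    are paired as if independent, so cross-model correlations are ignored."""
    def fn(id1, id2):
        return [left * right for left, right in zip(fn_left(id1, anchor), fn_right(anchor, id2))]
    return fn


combined = stitch(fn_b, fn_a, "anchor")
print(statistics.median(combined("project_b", "project_a")))
```

Neither team had to agree on a unit in advance; they only needed one item in common.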

Resiliency to different opinions

Differences in opinion often result in only minor alterations in relative values. For example, two people may disagree on the value of climate change work versus nuclear risk work, but they are likely to agree on many of the relative values between specific climate change projects and between specific nuclear risk projects. In an extreme example, two people might disagree on whether a set of things is harmful or beneficial, but still agree on many of the ratios.

Estimations using sets of units would be similarly robust if the clusters were very well-chosen (“climate change projects” vs. “nuclear risk projects”), but again, this would be a lot of work to get right.

Costs

Relative value functions as we describe them are very novel, so the upfront costs in particular will be high. The marginal work of writing the functions will also likely require specialized labor.

- We need infrastructure for storing and visualizing these functions.

- Writing these functions takes knowledge of the right programming tools.

- Complex relative value estimation can require large models, which can get very complicated.

- Both estimators and audiences will need to become sophisticated in writing and understanding them.

It’s possible that with the advent of AI code generation, these costs can go down substantially.

Limitations

Relative value assessments are an extension of cost-benefit analysis, so they share its limitations. You can think of relative value functions as enabling many simple, auto-generated cost-benefit analyses.

Requirement of clear counterfactuals

Cost-benefit analysis requires interventions with well-understood counterfactual scenarios. It works best with clear binary decisions. Estimating the net cost-benefit of electing one Presidential candidate over another, or relative to a probable outcome, is feasible, yet one can’t evaluate a Presidential candidate's value in isolation. Similarly, the marginal utility of consuming a particular food item can only be understood relative to a specific alternative scenario.

Narrow definitions, particularly about notions of value

Cost-benefit analyses typically use incredibly narrow notions of value. There’s a very large set of ways to define and estimate value. Specific cost-benefit analyses typically either choose one or choose a very limited subset.

For example, the following two estimates could be wildly different: the relative value of a party versus a microCOVID using utility as the specification of value, and the same comparison using a microCOVID itself as the specification of value.

Future Directions

We can take the basic ideas of relative values and value ratios, and imagine even more ambitious efforts.

1. Passing in parameters

Instead of each passed-in relative value function parameter being a string representing a single item, we could allow for algebraic data types with continuous variables.

Imagine something like:

fn(ExtraIncome({value: Pounds(52), personIncome: Pounds(57k)}), ChanceOfDeath({amountInPercent: 12.3, personAge: 45}))

This function call requests a comparison between £52 of extra income for a person with an income of £57,000, and a 12.3% chance of death for a person who is 45 years old.

These input types can become arbitrarily detailed.

This sort of additional complexity would, of course, require more work for estimators, but the function API could be fairly straightforward.

Functions can also provide estimates for multiple specifications of value. For example, a function could estimate the value of an intervention as judged by either a US Republican voter or a US Democratic voter.
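One way such a parameterized API might look in Python, using frozen dataclasses as the algebraic data types. The value model inside is deliberately crude and entirely hypothetical: log utility for income, and a placeholder value per remaining life-year. The constants `REFERENCE_INCOME`, `VALUE_PER_LIFE_YEAR`, and `LIFE_EXPECTANCY` are made-up placeholders, not estimates. The function also returns point values for brevity, where a real version would return distributions.

```python
import math
from dataclasses import dataclass

# Hypothetical placeholder constants, for illustration only (not estimates).
REFERENCE_INCOME = 30_000     # pounds/year; values are "pounds to a reference earner"
VALUE_PER_LIFE_YEAR = 50_000  # pounds-equivalent per remaining life-year
LIFE_EXPECTANCY = 80          # years


@dataclass(frozen=True)
class ExtraIncome:
    value: float          # pounds received
    person_income: float  # recipient's annual income, in pounds


@dataclass(frozen=True)
class ChanceOfDeath:
    amount_in_percent: float
    person_age: float


def value(item):
    """Crude point value of an item, in reference-earner pounds (hypothetical model)."""
    if isinstance(item, ExtraIncome):
        # Log utility: the same sum is worth less to a richer person.
        return REFERENCE_INCOME * math.log1p(item.value / item.person_income)
    if isinstance(item, ChanceOfDeath):
        years_lost = max(LIFE_EXPECTANCY - item.person_age, 0)
        return -(item.amount_in_percent / 100) * years_lost * VALUE_PER_LIFE_YEAR
    raise TypeError(f"unsupported item: {item!r}")


def fn(item1, item2):
    """Relative value of item1 versus item2."""
    return value(item1) / value(item2)


print(fn(ExtraIncome(value=52, person_income=57_000),
         ChanceOfDeath(amount_in_percent=12.3, person_age=45)))
```

The call site stays as simple as the string-based version; all the extra detail lives in the input types and in `value`.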

2. Handling combinations of items

Instead of taking in a set of specific items, functions can accept a set of lists of items.

For example,

fn([project1, project2, project7], [project5, project6])

Each input would represent the counterfactual value of the complete set of items. This matters because there is frequently significant overlap between items.

In the above example, a user wants to calculate the ratio of value between the total of projects [1, 2, and 7], compared to that of projects [5 and 6]. If the modeler believed that projects 1 and 7 were completely replaceable with each other, then this would need to be taken into account.

Shapley values are one way of dealing with item value overlap, but programming functions like above would strictly represent more information. It would be easy to calculate Shapley values using complete functions, but there's not enough information to go the other direction.

It would be difficult to provide very high-resolution estimates for this type definition. However, in many cases, simple approximations might still be good enough to be useful.
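A small Python sketch of the set-valued version, with hypothetical standalone values and the assumption from the text that projects 1 and 7 fully substitute for each other. The characteristic function `v` takes a set of projects, `fn` compares two sets, and Shapley values are derived from `v` by averaging marginal contributions over all orderings. The reverse is not possible: the five Shapley numbers alone do not reveal that 1 and 7 are substitutes.

```python
import math
from itertools import permutations

# Hypothetical standalone values for each project.
STANDALONE = {1: 10.0, 2: 4.0, 5: 6.0, 6: 3.0, 7: 10.0}


def v(projects):
    """Counterfactual value of a set of projects. Projects 1 and 7 are
    assumed to be perfect substitutes, so only the larger one counts."""
    s = set(projects)
    total = sum(STANDALONE[p] for p in s - {1, 7})
    total += max((STANDALONE[p] for p in s & {1, 7}), default=0.0)
    return total


def fn(set_a, set_b):
    """Value of everything in set_a relative to everything in set_b."""
    return v(set_a) / v(set_b)


def shapley(players):
    """Exact Shapley values by enumerating every ordering (fine for a few players)."""
    shares = {p: 0.0 for p in players}
    orderings = list(permutations(players))
    for order in orderings:
        so_far = set()
        for p in order:
            shares[p] += v(so_far | {p}) - v(so_far)
            so_far.add(p)
    return {p: share / len(orderings) for p, share in shares.items()}


print(fn([1, 2, 7], [5, 6]))  # 14/9, about 1.56, not the naive (10 + 4 + 10) / 9
print(shapley(list(STANDALONE)))
```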

3. Forecasting

Value ratio functions could be neatly aggregated and scored, like other forecasting formats. There just needs to be some resolution mechanism for the scoring. This would likely require some panel to make subjective judgments.
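One possible aggregation and scoring scheme, sketched in Python with hypothetical forecasts and a hypothetical panel resolution. Each forecaster's value ratio for one pair of items is modeled as a lognormal from a 90% interval. Forecasts are pooled as an equal-weight mixture, and each forecast is scored with the log score of the resolved ratio on a log scale. This is one common choice among several; geometric pooling or other proper scoring rules would also work.

```python
import math
from statistics import NormalDist

Z95 = NormalDist().inv_cdf(0.95)


def log_ratio_forecast(lo, hi):
    """Normal over ln(ratio) whose 90% interval maps to [lo, hi]."""
    mu = (math.log(lo) + math.log(hi)) / 2
    sigma = (math.log(hi) - math.log(lo)) / (2 * Z95)
    return NormalDist(mu, sigma)


def log_score(forecasts, resolved_ratio):
    """Log density of the resolved ratio (on the log scale) under an
    equal-weight mixture of the forecasts. Higher is better."""
    x = math.log(resolved_ratio)
    return math.log(sum(f.pdf(x) for f in forecasts) / len(forecasts))


# Hypothetical forecasts of value(project A) / value(project B).
forecasts = {
    "forecaster_1": log_ratio_forecast(2, 20),
    "forecaster_2": log_ratio_forecast(5, 50),
    "forecaster_3": log_ratio_forecast(0.5, 100),
}
resolved = 8.0  # hypothetical panel judgment

individual = {name: log_score([f], resolved) for name, f in forecasts.items()}
pooled = log_score(list(forecasts.values()), resolved)
print(individual)
print(pooled)
```

Because a panel would only resolve a narrow subset of pairs, the same scoring would be applied to whichever item pairs the panel actually judges.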

4. Alternative input formats, for modelers

Functions are valuable for storage, but that doesn’t mean that all modelers need to directly write functions. There could be custom user interfaces that elicit estimates from people in other ways, and convert those into value ratio functions. There’s likely a lot of room for creativity in this area.

5. LLM Generation

With the proliferation of large language models, it seems feasible that such models could either assist in writing value ratio functions, or could do all of the work. In theory, it might be possible to use LLMs to approximate very large value ratio functions using existing online data, and then help use those for decision-making. This sort of format could be useful for clearly specifying value estimates otherwise stored in neural networks.

Tradeoffs and Conclusion

We can now expand the previous trade-off table to include relative values. Relative value formats do very well on the categories in the previous table, but have the new downside of requiring programming and infrastructure.

| | Def | How well can this value similar items? | How well can this value dissimilar items? | Imposed structure | Needs estimators to produce code? | Handles zeros and negative numbers |
|---|---|---|---|---|---|---|
| Single Unit | (id) => v(id) / v(unit) | Good, if unit exists | Bad | Low. Just the choice of unit. | No | Yes |
| Set of Units | (id) => v(id) / v(unit_i) | Good, if unit exists | Good, if all elements are covered by units | High. Many units, each for different sets of elements. | No | Yes |
| Value Ratios, Univariate | (id1, id2) => v(id1) / v(id2) | Very Good | Very Good | None | Yes | No |
| Value Ratios, Multivariate | (id1, id2) => [v(id1), v(id2)] | Very Good | Very Good | None | Yes | Yes |

Later on we can go further, with even more complicated formats. But we think that relative value functions are a good tradeoff to aim for at this point, for groups interested in large-scale heterogeneous valuations.

Further Content

The following is background content that might be useful to some readers. We moved this to the end of this piece to highlight that it is optional.

Relationships to QURI’s previous work

Our work at QURI has involved developing ideas related to value estimation and forecasting.

In Amplifying generalist research via forecasting, a forecasting platform was used to predict generic evaluations by an expert. Similarly, forecasters can use value ratio functions as their direct predictions, with resolutions provided by experts or community panels evaluating a narrow subset of value combinations.

In Valuing research works by eliciting comparisons from EA researchers and An experiment eliciting relative estimates for Open Philanthropy’s 2018 AI safety grants, we elicited estimates of the value of effective altruist projects by asking people to give probability distributions representing the ratio of value between projects. The main goal of using ratios there was easier elicitation, not presentation. Here we focus on functions that generate relative values as a fundamental format, not as a tool for elicitation.

Five steps for quantifying speculative interventions described a workflow for using a set of units to value interventions and using conversion factors to compare interventions of different clusters. Relative values are an alternative to this approach.

Much of our recent work has been on Squiggle, a programming language meant to make estimation, including relative estimates, easy. We’re currently developing some tooling specifically for relative value estimation using Squiggle.

Related Topics

Relative Values in Finance

In financial valuation, relative values serve as an alternative to intrinsic values. These valuations are generally employed to compare a business or investment to a small group of similar alternatives.

The widespread popularity of relative valuations in finance highlights the potential utility of relative values more broadly. However, it is important to note that relative values in finance are typically applied within narrowly defined clusters, which contrasts with the extensive scope we propose when using relative value functions.

Discrete Choice Models

Discrete choice models, or qualitative choice models, serve as valuable tools for extracting preferences between different alternatives. These models have been extensively studied within the field of economics.

Typically, discrete choice models focus on eliciting preferences between a relatively small set of options from individuals who may not have engaged in extensive reflection on their preferences.

Relative value functions, in contrast, are meant primarily as a way to express one’s estimates of value. This can be the value to a collective, not an individual. For example, an estimator could build a relative value function meant to estimate what “a committee of random members of group X would believe”. We could easily imagine discrete choice models being used to inform and complement these estimates, but relative value functions would provide a different set of information.

Combinatorial Prediction Markets

Combinatorial Prediction Markets have been suggested as one useful tool for scaling prediction questions. One approach to representing complex joint distributions for these formats is by using Bayesian Networks.

Arbitrary functions are more general than Bayesian Networks, and come with different tradeoffs.

Utility Functions

Many value unit tables can be regarded as utility functions. As utility functions, they would be partial (not accounting for all possible outcomes), explicit (as opposed to theoretical), and approximated.

The distinction between value and utility itself is often ambiguous, with different fields using different terminology. In some cases, the words are interchangeable.

It is plausible that numerous reasonable value tables would satisfy the completeness and transitivity axioms of the Von Neumann-Morgenstern (VNM) utility theorem. If decision-makers were to employ value unit tables while considering expected values, these tables would frequently correspond to VNM utility functions.

All this to say, the techniques and limitations in this document apply to both explicit utility functions and other kinds of value formats.

Appendices

Appendix 1: Dollar Uncorrelated Example

If we treated these as uncorrelated and used the ranges (0.005 to 500) and (0.001 to 100), then simple subtraction and division would give:

value($5) - value($1) -> -60 to 110 // from calculation

value($5) / value($1) -> 0.0015 to 17,000 // from calculation

But what we really want, if they were properly correlated, is:

value($5) - value($1) -> 0.004 to 400

value($5) / value($1) -> 5

See this Squiggle Playground for an (approximate) example.
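The same comparison can be approximated in Python (standard library only) as a rough stand-in for the Playground. It assumes each range is a lognormal with that 90% interval and reports the 5th and 95th percentiles of each result. Exact numbers will vary a little with the random seed.

```python
import math
import random
from statistics import NormalDist, quantiles

Z95 = NormalDist().inv_cdf(0.95)
N = 50_000
rng = random.Random(0)


def sample_ci(lo, hi):
    """One draw from a lognormal whose 90% interval is [lo, hi]."""
    mu = (math.log(lo) + math.log(hi)) / 2
    sigma = (math.log(hi) - math.log(lo)) / (2 * Z95)
    return rng.lognormvariate(mu, sigma)


def ci90(xs):
    """5th and 95th percentiles of a list of samples."""
    cuts = quantiles(xs, n=20)  # 19 cut points at 5%, 10%, ..., 95%
    return cuts[0], cuts[-1]


v1 = [sample_ci(0.001, 100) for _ in range(N)]
v5_uncorrelated = [sample_ci(0.005, 500) for _ in range(N)]
v5_correlated = [5 * x for x in v1]  # same marginal range, tied to v1

for label, v5 in [("uncorrelated", v5_uncorrelated), ("correlated", v5_correlated)]:
    diff = [b - a for a, b in zip(v1, v5)]
    ratio = [b / a for a, b in zip(v1, v5)]
    print(label, "difference:", ci90(diff), "ratio:", ci90(ratio))
```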

Appendix 2: Programmatic Relative Values Functions

Let’s consider functions that produce value ratios. We are going to be doing this with Squiggle, a language for probabilistic estimation.

We want a function that takes two strings denoting two items, and returns their relative values:

fn(id, id) -> Distribution

To reach a function of that type, we start by putting the conversion factors of our previous table into code:

dollar1 = 1

dollar5 = dollar1 * (4.7 to 5)

costOfp001DeathChance = 10 to 10k // Cost of a 0.001% chance of death, in dollars

valueChanceOfDeath001 = -1 * costOfp001DeathChance * dollar1

valueChanceOfDeath005 = valueChanceOfDeath001 * (4.7 to 5)

We now want to make two changes to this:

- Make sure we have a function that takes in two strings, and returns the corresponding variables. We do this by making a simple dictionary with the IDs as the keys, and the variables as the corresponding values. (Here, we use the same names between them.)

- Make sure that all reused variables are stored as Sample Sets. This ensures that repeated values get divided out correctly instead of being treated as independent draws.

ss(d) = SampleSet.fromDist(d) // Makes sure we use Sample Sets, which is important for correlations

dollar1 = 1 // The value of $1. This is the unit, so we're marking as 1

dollar5 = ss(dollar1 * (4.7 to 5)) // The value of $5. Assuming (steeply) diminishing marginal returns

costOfp001DeathChance = ss(10 to 10k) // Cost of a 0.001% chance of death, in dollars

chanceOfDeath001 = ss(-1 * costOfp001DeathChance * dollar1) // Cost of a 0.001% chance of death

chanceOfDeath005 = chanceOfDeath001 * (4.7 to 5) // Cost of a 0.005% chance of death

items = {

dollar1: dollar1,

dollar5: dollar5,

chanceOfDeath001: chanceOfDeath001,

chanceOfDeath005: chanceOfDeath005

}

fn(item1, item2) = items[item1] / items[item2]

//fn("chanceOfDeath005", "chanceOfDeath001")

That’s it. This function can now generate the full value ratio comparison table. Instead of the 4x4 comparisons a written table would need, we need only about 4 lines of code (plus boilerplate and helper functions).

As one might expect, these functions can get significantly more complicated at scale. The setup above calculates every listed variable, which will be computationally impractical for functions that estimate large sets of items.
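One way around this, sketched in Python under our own assumptions rather than as a description of Squiggle or QURI's tools: store each item as a recipe that requests its dependencies by name, and evaluate only what a given call needs. A per-call cache makes every item that is used twice (like `dollar1`) reuse the same samples, which plays the role that `SampleSet.fromDist` plays above in keeping correlations intact. The ranges mirror the Squiggle example.

```python
import math
import random
import statistics
from statistics import NormalDist

Z95 = NormalDist().inv_cdf(0.95)
N = 5_000


def ci(lo, hi, rng):
    """N samples from a lognormal with 90% interval [lo, hi] (like `lo to hi`)."""
    mu = (math.log(lo) + math.log(hi)) / 2
    sigma = (math.log(hi) - math.log(lo)) / (2 * Z95)
    return [rng.lognormvariate(mu, sigma) for _ in range(N)]


# Each recipe receives `get` (to request other items by name) and an RNG.
RECIPES = {
    "dollar1": lambda get, rng: [1.0] * N,
    "dollar5": lambda get, rng: [d * k for d, k in zip(get("dollar1"), ci(4.7, 5, rng))],
    "costOfp001DeathChance": lambda get, rng: ci(10, 10_000, rng),
    "chanceOfDeath001": lambda get, rng: [
        -c * d for c, d in zip(get("costOfp001DeathChance"), get("dollar1"))
    ],
    "chanceOfDeath005": lambda get, rng: [
        x * k for x, k in zip(get("chanceOfDeath001"), ci(4.7, 5, rng))
    ],
}


def relative_value(id1, id2, seed=0):
    """Samples of value(id1) / value(id2), plus the list of items actually evaluated."""
    rng = random.Random(seed)
    cache, evaluated = {}, []

    def get(name):
        if name not in cache:  # evaluate each item at most once per call
            cache[name] = RECIPES[name](get, rng)
            evaluated.append(name)
        return cache[name]

    return [a / b for a, b in zip(get(id1), get(id2))], evaluated


ratio, evaluated = relative_value("dollar5", "dollar1")
print(evaluated)  # ['dollar1', 'dollar5']; the death-related items are never computed
print(statistics.median(ratio))
```

Each call now costs only as much as the items it touches, at the price of recomputing shared dependencies across separate calls.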

Acknowledgements

This is a project of the Quantified Uncertainty Research Institute. Thanks to Nuño Sempere and Willem Sleegers for comments and suggestions. Also, thanks to Anthropic's Claude, which was useful for rewriting certain sections of this and brainstorming terminology.
