Can GPT-3 predict real world events? To answer this question I had GPT-3 predict the likelihood for every binary question ever resolved on Metaculus.

Predicting whether an event is likely or unlikely to occur often boils down to using common sense. It doesn't take a genius to figure out that "Will the sun explode tomorrow?" should get a low probability. Not all questions are that easy, but for many questions common sense can take us surprisingly far.

Experimental setup

Through their API I downloaded every binary question posed on Metaculus.

I then filtered them down to only the non-ambiguously resolved questions, resulting in this list of 788 questions.
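A minimal sketch of this filtering step. It assumes each downloaded question is a dict with a `resolution` field that is `1` for yes, `0` for no, `-1` for ambiguous, and `None` while still open. Those field names and encodings are assumptions for illustration, not taken from the Metaculus API documentation or from my actual code.

```python
def keep_unambiguous(questions):
    """Keep only questions that resolved clearly yes (1) or no (0)."""
    return [q for q in questions if q.get("resolution") in (0, 1)]


# Hypothetical records standing in for the downloaded questions.
sample = [
    {"title": "Resolved yes", "resolution": 1},
    {"title": "Resolved no", "resolution": 0},
    {"title": "Ambiguous", "resolution": -1},
    {"title": "Still open", "resolution": None},
]

resolved = keep_unambiguous(sample)
print([q["title"] for q in resolved])
```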

For these questions the community's Mean Squared Error was 0.19, a good deal better than random!
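To make "random" concrete, here is a small sketch of the score, assuming MSE means the average squared difference between the forecast probability and the 0/1 outcome. The outcomes below are made up for illustration. Always answering 50% scores exactly 0.25, while drawing each forecast uniformly between 0% and 100% averages out to about 1/3.

```python
import random


def mean_squared_error(predictions, outcomes):
    """Average squared gap between forecast probabilities and 0/1 outcomes."""
    return sum((p - o) ** 2 for p, o in zip(predictions, outcomes)) / len(outcomes)


# Made-up outcomes purely for illustration (1 = happened, 0 = did not).
outcomes = [1, 0, 0, 1, 0, 1, 0, 0]

# Always answering 50% scores exactly 0.25, whatever the outcomes are.
always_half = mean_squared_error([0.5] * len(outcomes), outcomes)

# Uniformly random forecasts average out to about 1/3 over many trials.
rng = random.Random(0)
trials = 20_000
random_guess = sum(
    mean_squared_error([rng.random() for _ in outcomes], outcomes)
    for _ in range(trials)
) / trials

print(always_half, round(random_guess, 2))
```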

Prompt engineering

GPT's performance is notoriously dependent on the prompt it is given.

- I primarily measured the quality of a prompt by the percentage of legible predictions it produced.

- Predictions were made using the most powerful DaVinci engine.

The best performing prompt was optimized for brevity and did not include the question's full description.

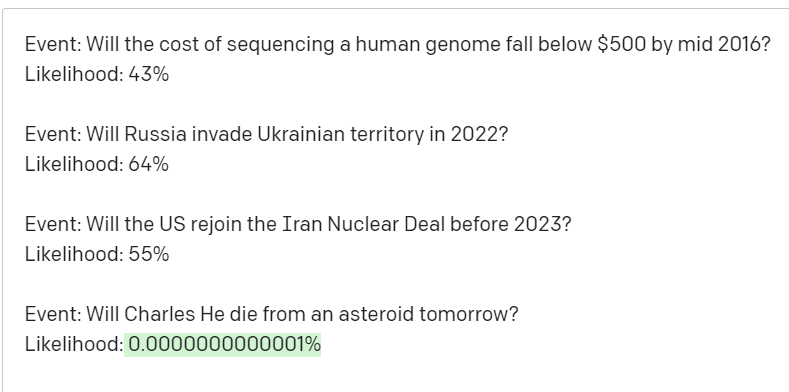

A very knowledgable and epistemically modest analyst gives the following events a likelihood of occuring:

Event: Will the cost of sequencing a human genome fall below $500 by mid 2016?

Likelihood: 43%

Event: Will Russia invade Ukrainian territory in 2022?

Likelihood: 64%

Event: Will the US rejoin the Iran Nuclear Deal before 2023?

Likelihood: 55%

Event: <Question to be predicted>

Likelihood: <GPT-3 insertion>
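The prompt above can be assembled programmatically along these lines. This is a sketch, not the code from the repository: the names `HEADER`, `EXAMPLES` and `build_prompt` are hypothetical, and the exact line breaks and spacing are assumptions.

```python
# Wording copied verbatim from the prompt above, spelling included.
HEADER = (
    "A very knowledgable and epistemically modest analyst gives the "
    "following events a likelihood of occuring:"
)

# The three worked examples shown in the prompt above.
EXAMPLES = [
    ("Will the cost of sequencing a human genome fall below $500 by mid 2016?", 43),
    ("Will Russia invade Ukrainian territory in 2022?", 64),
    ("Will the US rejoin the Iran Nuclear Deal before 2023?", 55),
]


def build_prompt(question):
    """Assemble the few-shot prompt, ending where the model should continue."""
    lines = [HEADER, ""]
    for event, likelihood in EXAMPLES:
        lines.append(f"Event: {event}")
        lines.append(f"Likelihood: {likelihood}%")
    # The question to be predicted goes last, with the likelihood left open.
    lines.append(f"Event: {question}")
    lines.append("Likelihood:")
    return "\n".join(lines)


prompt = build_prompt("Will the sun explode tomorrow?")
print(prompt)
```

Ending the prompt on an open "Likelihood:" line is what invites the model to fill in a percentage as its next tokens.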

I tried many variations: different introductions, different questions, different probabilities, including or excluding question descriptions, and so on.

Of the 786 questions, the best performing prompt made legible predictions for 770. For the remaining 16 questions GPT mostly just wrote "\n".
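One way to decide whether a completion is "legible" is to look for a percentage in it. The sketch below is an assumption about what such a check could look like, not the check used in the repository; `parse_likelihood` is a hypothetical helper.

```python
import re


def parse_likelihood(completion):
    """Return a probability in [0, 1], or None if no legible percentage is found."""
    # Up to three digits, optional decimals, then a percent sign.
    # The lookbehind stops the match from starting in the middle of a number.
    match = re.search(r"(?<![\d.])(\d{1,3}(?:\.\d+)?)\s*%", completion)
    if match is None:
        return None
    value = float(match.group(1))
    if value > 100:
        return None
    return value / 100


print(parse_likelihood(" 64%"))   # a legible prediction
print(parse_likelihood("\n"))     # an empty completion is not legible
```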

If you want to try your own prompt or reproduce the results, the code to do so can be found in this GitHub repository.

Results

GPT-3's MSE was 0.33, which is about what you'd expect if you were to guess completely at random. This was surprising to me!

Why isn't GPT better?

Going into this, I was confident GPT would do better than random. After all, many of the questions it was asked to predict resolved before GPT-3 was even trained. There are probably some questions it knows the answer to and still somehow gets wrong!

It seems to me that GPT-3 is struggling to translate beliefs into probabilities. Even if it understands that the sun exploding tomorrow is unlikely, it doesn't know how to express that as a numeric probability. I'm unsure whether this is an inherent limitation of GPT-3 or whether it's just the prompt that is confusing it.

I wonder if predicting using expressions such as "Likely" | "Uncertain" | "Unlikely", and interpreting these as 75% | 50% | 25% respectively, could produce results better than random, as GPT wouldn't have to struggle with translating its beliefs into numeric probabilities. Unfortunately, running GPT-3's best engine on 800 questions would be yet another hour and $20 I'm reluctant to spend, so for now that will remain a mystery.
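The verbal mapping proposed here could be sketched as follows. This is untested against GPT-3 and the helper name `verbal_to_probability` is hypothetical; only the three labels and their percentages come from the paragraph above.

```python
# The three labels and values proposed above.
VERBAL_TO_PROBABILITY = {"likely": 0.75, "uncertain": 0.50, "unlikely": 0.25}


def verbal_to_probability(completion):
    """Map a one-word verbal forecast to a probability, or None if unrecognised."""
    words = completion.strip().split()
    if not words:
        return None
    # Only the first word counts; trailing punctuation and case are ignored.
    return VERBAL_TO_PROBABILITY.get(words[0].strip(".,!").lower())


print(verbal_to_probability(" Unlikely.\n"))
```

The resulting probabilities can be scored with the same MSE as the numeric predictions, so the two approaches stay comparable.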

It may be that even oracle AIs will be dangerous. Fortunately, GPT-3 is far from an oracle!

(Crossposted from LessWrong: https://www.lesswrong.com/posts/c3cQgBN3v2Cxpe2kc/getting-gpt-3-to-predict-metaculus-questions)

I don't know much about AI or machine learning, but as you say, I think some of the reason for your results is that the language model of GPT-3 doesn't have a great "connection" between the "real world" "latent information" in your questions, and the probabilities you want. This deficiency is sort of what you're suggesting in your post, and I think you're right.

I think another major reason is sort of the prompt design or "mindset of use" of GPT-3.

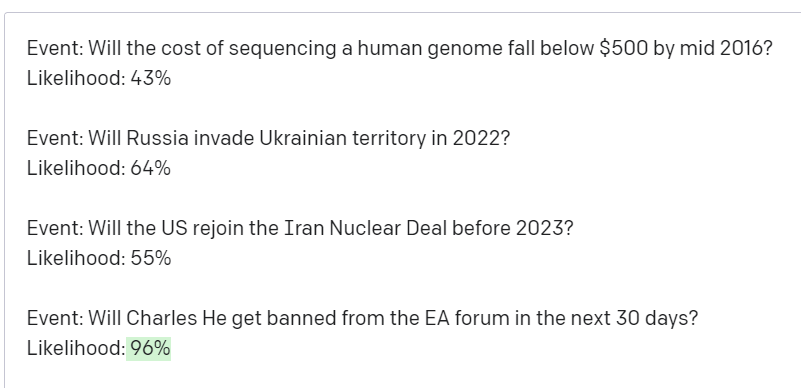

I guess I would say it's useful to see GPT-3 as a "paid actor": it is "acting", trying to generate text that rationalizes a certain framing.

It sort of tries to figure out from your prompt whether it is writing a blog post, writing a joke, or working within a starker, more dramatic framing.

Once you get it into this "mindset", you actually get meaningful completions.

Examples:

Completion 1:

This is not a joke, the above is an actual completion.

Completion 2:

I am not joking; again, the above is an actual completion. I am not sure how it is so accurate, since GPT-3's training info is cut off at 2019.

See the parameters below:

Also, here are several comments on your prompt that you might have thought of before:

- As you noted, your first "long" prompt is long and in this case, this impedes GPT-3's performance. In my own words, I would say that makes it harder for GPT to "construct" the framing involved. https://github.com/MperorM/gpt3-metaculus/blob/main/gpt_prompt.py

- For your shorter prompt you ended up using, I think you might get different results by changing the questions to be similar to the domain, or to "cover more domain space", or "loosen up the probability space".
