This is mostly addressing people who care a lot about improving the long-term future and helping life continue for a long time, and who might be tempted to call themselves “longtermist.”

There have been discussions about how “effective altruist” shouldn’t be an identity and some defense of EA-as-identity. (I also think I’ve seen similar discussions about “longtermists” but don’t remember where.) In general, there has been a lot of good content on the effect of identities on truth-seeking conversation (see Scout Mindset or “Keep Your Identity Small”[1]).

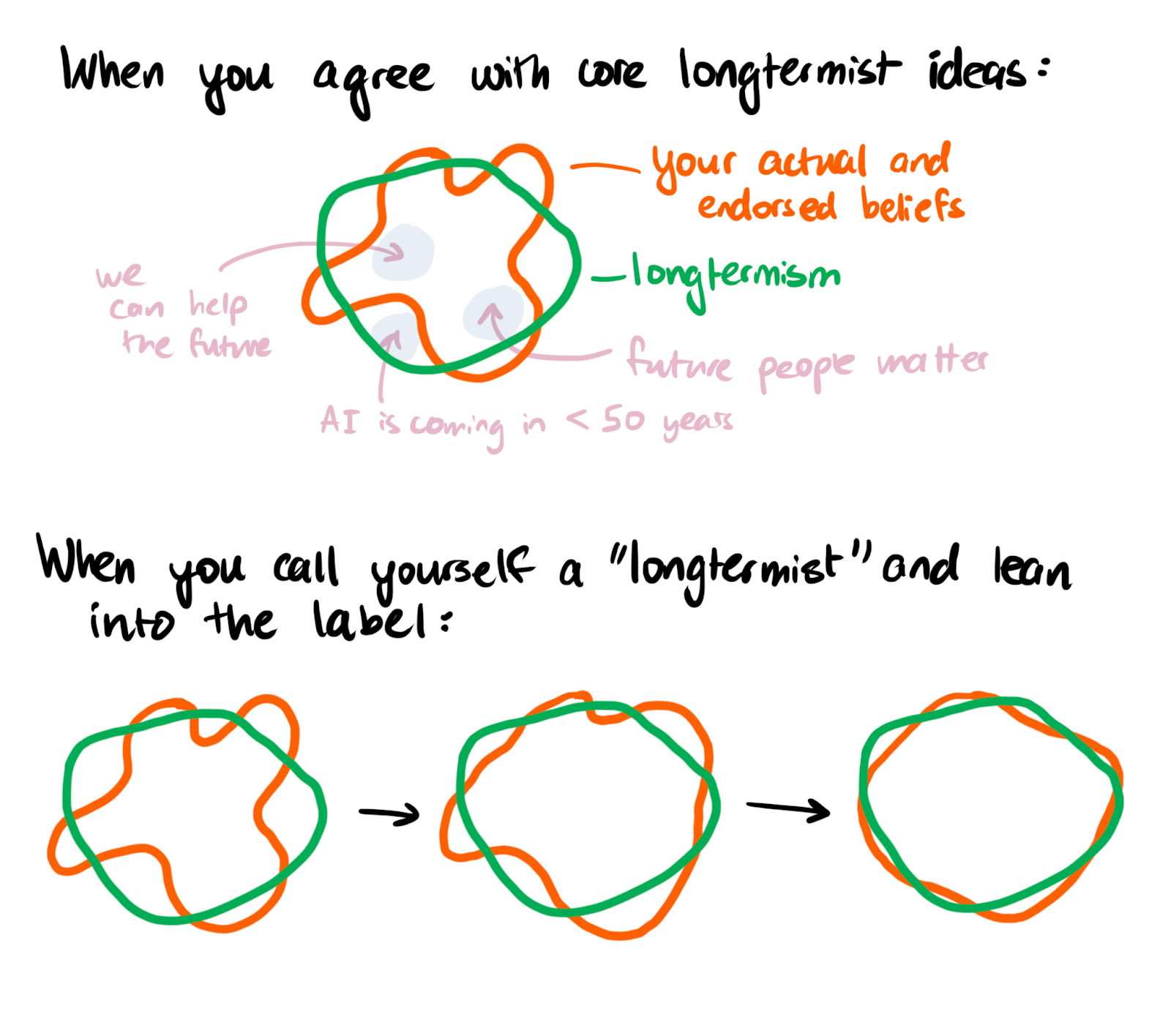

But are there actual harms of identifying as a “longtermist”? I describe two in this post: it can make it harder to change your mind based on new information, and it can make your conversations and beliefs more confused by adding aspects of the group identity that you’d otherwise not have adopted as part of your individual identity.

(0) What is “longtermism”?

When people say “I’m a longtermist,” they mean something like: “I subscribe to the moral-philosophical theory of longtermism.”

So, what is “longtermism?” The definition given in various places is “the view that positively influencing the long-term future is a key moral priority of our time.” In practice, this often relies on certain moral and empirical beliefs about the world:

1. Believing that future beings are morally relevant: this belief is needed in order to argue that we should put resources to helping them if we can, even if those efforts trade off against resources that could go to beings alive today (a moral belief)

2. Not putting a significant “pure temporal discount rate” on the moral value of future beings (a person in 1000 years is “worth” basically as much as a person today) (a moral belief)

3. Believing that the future is big: that the value of the future can be enormous, and thus the scope of the issue is huge (an empirical belief)

4. And thinking that it’s actually possible to help future beings in non-negligible ways in expectation[2] (an empirical belief)

It’s worth pointing out that most people (even those who wouldn’t call themselves “longtermist”) care about future beings. Some people disagree on (2) or (3). And lots of people in effective altruism who disagree with longtermism, I think, disagree primarily with (4): the feasibility of predictably helping the future.

Importantly, I think that while your position on 1-2 can be significantly dependent on strongly felt beliefs,[3] 3-4 are more based on facts about the world: empirical data and arguments that can be refuted.[4]

(1) Calling yourself “longtermist” bakes empirical or refutable claims into an identity, making it harder to course-correct if you later find out you’re wrong.

Rewind to 2012 and pretend that you’re an American who’s realized that, if you want to improve the lives of (current) people, you should probably be aiming your efforts at helping people in poorer countries, not the US. You’d probably not call yourself a "poor-country-ist."[5] Instead, you might go for “effective altruist”[6] and treat the conclusion that the most effective interventions are aimed at people in developing countries as an empirical conclusion given certain assumptions (like impartiality over geographic location). If it turns out that the new most effective intervention would be to help Americans, you can pivot to that without sacrificing or fighting your identity.[7]

Some of the earliest and most influential proponents of longtermism agree that longtermism is not the for-sure-correct approach/belief/philosophy.

For instance, Holden Karnofsky writes:

There are arguments that our ethical decisions should be dominated by concern for ensuring that as many people as possible will someday get to exist. I really go back and forth on how much I buy these arguments, but I'm definitely somewhere between 10% convinced and 50% convinced. So ... say I'm "20% convinced" of some view that says preventing human extinction is the overwhelmingly most important consideration for at least some dimensions of ethics (like where to donate), and "80% convinced" of some more common-sense view that says I should focus on some cause unrelated to human extinction.

I think you should give yourself the freedom to discover later that some or all of the arguments for longtermism were wrong.

As Eric Neyman puts it when discussing neoliberalism as an identity [bold mine]:

In a recent Twitter thread, I drew a distinction between effective altruism and neoliberalism. In my mind, effective altruism is a question (“What are the best ways to improve the world?”), while neoliberalism is an answer (to a related question: “What public policies would most improve the world?”). Identities centered around questions seem epistemically safer than those centered around answers. If you identify as someone who pursues the answer to a question, you won’t be attached to a particular answer. If you identify with an answer, that identity may be a barrier to changing your mind in the face of evidence.

(2) The identity framing adds a bunch of group-belief baggage

Instead of saying something like, “together with such-and-such assumptions, the arguments for longtermism, as described in XYZ, imply YZX conclusions,” the group identity framing leads us to make statements like “longtermists believe that we should set up prediction markets, the ideal state of the world is 10-to-the-many brains in vats, AI will kill us all, etc.”

Similarly, when you don the “longtermist” hat, you might feel tempted to pick up beliefs you don’t totally buy, without fully understanding why this is happening.

It’s possible and valid to believe that future people matter, that we should make sure that humanity survives, and that this century is particularly influential — but not that AI is the biggest risk (maybe you think bio or some other thing is the greatest risk). That view should get discussed and tested on its merits but might get pushed out of your brain if you call yourself a “longtermist” and let the identity and the group beliefs form your conclusions for you.[8]

P.S.

A note of caution: the categories were made for man, not man for the categories.

I’m not saying that there’s no such thing as a group of people we might want to describe with the term “longtermists.” I think this group probably exists and the term “longtermist” can be useful for pointing at something real.

I just don’t really think that these people should want to call themselves “longtermist,” cementing the descriptive term for a vaguely defined group of people as a personal or individual identity.

Some things you can say instead of “I’m a ‘longtermist,’” depending on what is actually true:

- I think the arguments for longtermism are right.

- I think that positively influencing the long-term future is a key moral priority of our time.

  - (This and #1 are the closest to being synonymous with or a literal translation of “I am a longtermist.”)

- I try to do good chiefly by helping the long-run future.

  - Or: “I believe that most of my predictable positive impact on the world is via my improvements of the long-run future.”

  - (This is also a decently close translation of “I am a longtermist.”)

- I don’t want humanity to go extinct (because there’s so much potential) and am trying to make the chances of that happening this century smaller.

- I think this century might be particularly important, and I think in expectation, most of my impact comes from the world in which that’s true, so I’m going to act as if it is.

- I think existential risks are real, and given the scope of the problem, think we should put most of our efforts into fighting existential risks. (And I think the biggest existential risk is the possibility of engineered pandemics.)

  - See also: "Long-Termism" vs. "Existential Risk"

- I believe that future beings matter, and I’m working on figuring out whether there’s anything we can or should do to help them. I’ll get back to you when I figure it out.

Thanks to Jonathan Michel and Stephen Clare for leaving comments on a draft! I'm writing in a personal capacity.

[1]
“Keep Your Identity Small” argues that when a position on a certain question becomes part of your identity, arguments about topics related to that question become less productive. The examples used are politics, religion, etc. Short excerpt: “For example, the question of the relative merits of programming languages often degenerates into a religious war, because so many programmers identify as X programmers or Y programmers. This sometimes leads people to conclude the question must be unanswerable—that all languages are equally good. Obviously that's false: anything else people make can be well or badly designed; why should this be uniquely impossible for programming languages? And indeed, you can have a fruitful discussion about the relative merits of programming languages, so long as you exclude people who respond from identity.”

[2]
I’ll have a post about this soon. (Committing publicly right now!)

[3]
This is disputable, but not crucial to the argument, and I think I’m presenting the more widely held view.

[4]
How chaotic is the world, really? How unique is this century? What’s the base rate of extinction?

[5]
(I acknowledge that I’m putting very little effort into producing good names.)

[6]
(or reject identity labels like this entirely…)

[7]
Note that this reasoning applies to other worldviews, too — even some that are widespread as identities.

One example is veganism. If you’re vegan for consequentialist reasons, then your reasoning for being vegan is almost certainly dependent on empirical beliefs which may turn out to be false. (Although perhaps the reasoning behind veganism is less speculative and more tested than that behind longtermism — I’m not sure.) If the reasoning might be wrong, it might be better to avoid having veganism as part of your identity so that you can course-correct later.

The analogy with veganism also brings up further discussion points.

One is that I think there are real benefits to calling yourself “a vegan.” For instance, if you believe that adhering to a vegan diet is important, having veganism as part of your identity can be a helpful accountability nudge. (This sort of benefit is discussed in Eric’s post, which I link to later in the body of the post.) It can also help get you social support. But I still think the harms that I mention in this document apply to veganism.

Similarly, there are probably benefits to calling yourself “a longtermist” beyond ease and speed.

A different discussion could center around the politicization of veganism, whether that’s related to its quality as a decently popular identity, and whether that has any implications for longtermism.

[8]
Stephen describes related considerations in a comment.

This all seems reasonable. But here's why I'm going to continue thinking of myself as a longtermist.

(1) I generally agree, but at some point you become sufficiently certain in a set of beliefs, and they are sufficiently relevant to your decisions, that it's useful to cache them. I happen to feel quite certain in each of the four beliefs you mention in (0), such that spending time questioning them generally has little expected value. But if given reason to doubt one, of course my identity "longtermist" shouldn't interfere, and I think I'm sufficiently epistemically virtuous that I'd update.

(2) I agree that we should beware of "group-belief baggage." Yesterday I told some EA friends that I strongly identify as an EA and with the EA project while being fundamentally agnostic on the EA movement/community. If someone identifies as an EA, or longtermist, or some other identity not exclusively determined by beliefs, I think they should be epistemically cautious. But I think it is possible to have such an identity while maintaining caution.

Put another way, I think your argument proves too much: it's not clear what distinguishes "longtermist" from almost any other identity that implicates beliefs, so an implication of your argument seems to be that you shouldn't adopt any such identity. And whatever conditions could make it reasonable for a person to adopt an identity, I think those conditions hold for me and longtermism.

(And when I think "I am a longtermist," I roughly mean "I think the long-term future is a really big deal, both independent of my actions and in terms of what I should do, and I aspire to act accordingly.")

This seems like a useful distinction, which puts words to something on the back of my mind, thanks

Yes, the former are basically normative while the latter is largely empirical and I think it's useful to separate them. (And Lizka does something similar in this post.)

Thanks for this comment!

I think you're right that I'm proving too much with the broad argument, and I like your reframing of the arguments I'm making as things to be wary of. I'm still uncomfortable with longtermism-as-identity, though --- possibly because I'm less certain of the four beliefs in (0).

I'd be interested in drawing the boundaries more carefully and trying to see when a worldview (that is dependent on empirical knowledge) can safely(ish) become an identity without messing too much with my ability to reason clearly.

+1

Also, I think this all depends on the person in addition to the identity: both what someone believes and their epistemic/psychological characteristics are relevant to whether they should identify as [whatever]. So I would certainly believe you that you shouldn't currently identify as a longtermist, and I might be convinced that a significant number of self-identified longtermists shouldn't so identify, but I highly doubt that nobody should.

Isn't this also true of "Effective Altruist"? And I feel like from my epistemic vantage point, "longtermist" bakes in many fewer assumptions than "Effective Altruist". I feel like there are just a lot of convergent reasons to care about the future, and the case for it seems more robust to me than the case for "you have to try to do the most good", and a lot of the hidden assumptions in EA.

I think a position of "yeah, agree, I also think people shouldn't call themselves EAs or rationalists or etc." is pretty reasonable and quite defensible, but I feel a bit confused about what your actual stance is here, given the things you write in this post.

What empirical claims are baked into EA?

I think in practice, EA is now an answer to the question of how to do the most good, and the answer is “randomista development, animal welfare, extreme pandemic mitigation and AI alignment”. This has a bunch of empirical claims baked into it.

I see EA as the question of how to do the most good; we come up with answers, but they could change. It's the question that's fundamental.

But in practice, I don’t think we come up with answers anymore.

Some people came up with a set of answers, enough of us agree with this set and they’ve been the same answers for long enough that they’re an important part of EA identities, even if they’re less important than the question of how to do the most good.

So I think the relevant empirical claims are baked in to identifying as an EA.

This is sort of getting into the thick EA vs thin EA idea that Ben Todd discussed once, but practically I think almost everyone who identifies as an EA mostly agrees with these areas being amongst top priorities. If you disagree too strongly you would probably not feel like part of the EA movement.

I think some EAs would consider work on other areas like space governance and improving institutional decision-making highly impactful. And some might say that randomista development and animal welfare are less impactful than work on x-risks, even though the community has focussed on them for a long time.

I call myself an EA. Others call me an EA. I don't believe all of these "answer[s] to the question of how to do the most good." In fact, I know several EAs, and I don't think any of them believe all of these answers.

I really think EA is fundamentally cause-neutral, and that an EA could still be an EA even if all of their particular beliefs about how to do good changed.

Hmm.

I also identify as an EA and disagree to some extent with EA answers on cause prioritisation, but my disagreement is mostly about the extent to which they’re priorities compared to other things, and my disagreement isn’t too strong.

But it seems very unlikely for someone to continue to identify as an EA if they strongly disagree with all of these answers, which is why I think, in practice, these answers are part of the EA identity now (although I think we should try to change this, if possible).

Do you know an individual who identifies as an EA and strongly disagrees with all of these areas being priorities?

Not right now. (But if I met someone who disagreed with each of these causes, I wouldn't think that they couldn't be an EA.)

Fair point, thanks!

I think it's probably not great to have "effective altruist" as an identity either (I largely agree with Jonas's post and the others I linked), although I disagree with the case you're making for this.

I think that my case against EA-as-identity would be more on the (2) side, to use the framing of your post. Yours seems to be from (1), and based (partly) on the claim that "EA" requires the assumption that "you have to try to do the most good" (which I think is false). (I also think you're pointing to the least falsifiable of the assumptions/cruxes I listed for longtermism.)

In practice, I probably slip more with "effective altruist" than I do with "longtermist," and call people "EAs" more (including myself, in my head). This post is largely me thinking through what I should do --- rather than explaining to readers how they should emulate me.

One thing I'm curious about - how do you effectively communicate the concept of EA without identifying as an effective altruist?

I really like your drawings in section 2 -- conveys the idea surprisingly succinctly

Related: Effective Altruism is a Question (not an ideology)

I feel like many (most?) of the "-ist"-like descriptors that apply to me are dependent on empirical/refutable claims. For example, I'm also an atheist -- but that view would potentially be quite easy to disprove with the right evidence.

Indeed, I think it's just very common for people who hold a certain empirical view to be called an X-ist. Maybe that's somewhat bad for their epistemics, but I think this piece goes too far in suggesting that I have to think something is "for-sure-correct" before "-ist" descriptors apply.

Separately, I think identifying as a rationalist and effective altruist is good for my epistemics. Part of being a good EA is having careful epistemics, updating based on evidence, being impartially compassionate, etc. Part of being a rationalist is to be aware of and willing to correct for my cognitive biases. When someone challenges me on one of these points, my professed identity gives me a strong incentive to behave well that I wouldn't otherwise have. (To be fair, I'm less sure this applies to "longtermist", which I think has much less pro-epistemic baggage than EA.)

Benjamin Todd makes some similar points here.

Thank you for this piece, Lizka!

To what extent do you agree with the following?

Also, "Effective Altruism" and neartermist causes like global health are usually more accessible / easier for ordinary people first learning about EA to understand. As Effective Altruism attracts more attention from media and mainstream culture, we should probably try to stick to the friendly, approachable "Effective Altruism" branding in order to build good impressions with the public, rather than the sometimes alien-seeming and technocratic "longtermism".

I think this part of the definition is weird. We already have the flexible ITC framework for allocating resources across causes, but now we're switching to this binary decision rule of "is there at least one nonnegligible way to help the future"? This seems like a step down in sophistication. Why don't we use a continuous measure of how tractable it is to help future beings? That is, why don't we just use the ITC framework?