TL;DR: Even assuming that longtermist causes are by far the most important, 80,000 hours might still be better off devoting more of their space to near-termist causes, in order to grow the EA community, increase the number of people working on long-term causes, and improve EA optics.

Background

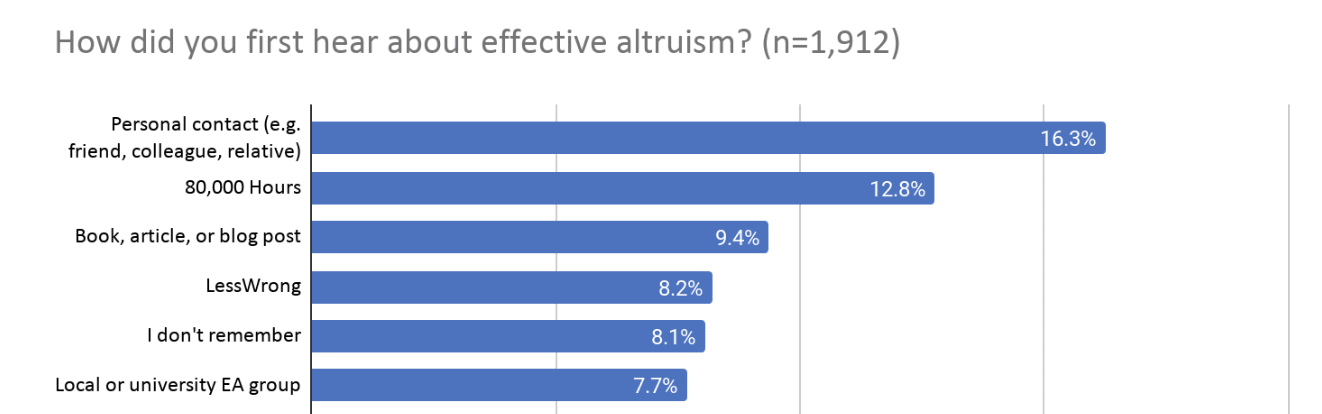

80,000 hours is a front page to EA, presenting the movement/idea/question to the world. For many people, their website is an introduction to effective altruism (link), and it might be the most important internet-based introduction to effective altruism, even if this is not their main purpose.

As I see it, 80,000 hours functions practically as a long-termist career platform, or as their staff put it, a platform to "help people have careers with a lot of social impact". I’m happy that they have updated their site to make this long-termist focus a little more clear, but I think there is an argument that perhaps 20%-30% of their content should be near-term focused, and that this content should be more visible. The arguments I present have probably been discussed ad nauseam within 80,000 hours leadership and EA leadership in general, and nothing I present is novel. Despite that, I haven’t seen these arguments clearly laid out in one place (I might have missed it), so here goes.

Assumptions

For the purposes of this discussion, I’m going to make these 3 assumptions. They aren’t necessarily true and I don’t necessarily agree with them, but they make my job easier and argument cleaner.

- Long termist causes are by far the most important, and where the vast majority of EAs should focus their work.

- Most of the general public are more attracted to near-termist EA than long-termist EA

- 80,000 hours is an important "frontpage" and "gateway" to EA

Why 80,000 hours might want to focus more on near-term causes

1. Near termist focus as a pathway to more future long termist workers

More focus on near termist careers might paradoxically produce more long termist workers in the long run. Many people are drawn to the clear and more palatable idea that we should devote our lives to doing the most good for humans and animals alive right now (assumption 2). However, after further thought and engagement they may change their focus to longtermism. I’m not sure we have stats on this, but I’ve encountered many forum members who seem to have followed this life pattern. By failing to appeal to the many who are attracted to near-termist EA now, we could miss out on significant numbers of long termist workers in a few years' time.

2. Near termist causes are a more attractive EA “front door”

If 80,000 hours is a “front door” to Effective Altruism, then it is important to make sure as many people enter the door as possible. Although 80,000 hours make it clear this isn’t one of their main intentions, there is huge benefit to maximizing community growth.

3. Some people will only ever want/be able to work on near-term causes.

There are not-unreasonable people who might struggle with the tenets/tractability of long-termism, or be too far along the road-of-life to move from medicine to machine learning, or just don’t want to engage with long termism for whatever reason. They may miss out on a counterfactually fruitful living-your-best-EA-life, because when they clicked on the 80,000 hours website they didn’t manage to scroll down 5 pages to cause areas no. 19 and 20 - the first 2 near-term cause areas, “Factory farming” and “Easily preventable or treatable illness”. These are listed below well established and clearly tractable cause areas such as “risks from atomically precise manufacturing” and “space governance” [1].

There may be many people who will never want to, or be able to, change careers to the highest impact long-term causes, but who could change from their current work to a much higher impact near-termist cause if 80,000 hours had more (or more obvious) near-term content.

4. Optics – Show the world that we EAs do really care about currently-alive-beings

I’m not saying that long termist EAs don’t care about people right now, but the current 80,000 hours webpage (and approach) could give a newcomer or media person this impression. Assuming optics are important (many disagree), an 80,000 hours page with a bit more emphasis on near-term content could show that long termists do deeply care about current issues, while clearly prioritising the long term future.

Arguments for the status quo

These are not necessarily the best arguments, just arguments I came up with right now that push against my earlier arguments. I don't find it easy to argue against myself. If my earlier arguments don’t hold water at all, then these are unnecessary.

Epistemic integrity

There is an element of disingenuousness in pushing near term causes now primarily to achieve the goal of generating more long termist workers later on. 80,000 hours should be as straightforward and upfront as possible.

80,000 hours’ purpose is to maximise work impact, not grow the EA community

Although 80,000 hours happens to be an EA front door, this isn’t their primary purpose so they shouldn’t have to bend their content to optimise for this as well, even if it might be EV positive in the long run.

Long term problems are so many orders of magnitude more important that there should be no watering down or distraction of the core message.

80,000 hours should keep their focus crystal clear. If near termist causes receive even a little more airtime, people who might counterfactually have worked on longtermist causes might instead choose more attractive, but lower impact near term paths.

High uncertainty and obvious bias

I’m very uncertain about the strength of my arguments - I've written this because these may be important questions for consideration and discussion. Full disclosure: I’m mostly a near termist myself, so I’m sure that bias comes through in the post ;).

And a cheapshot signoff

I leave you with a slightly-off-topic signoff. Picking someone who changed their career from “Global Health” to “Biosecurity” as one of the “stories we are especially proud of” seems a bit unnecessary. Surely as their gold standard “career change” pin-up story, they could find a higher EV career change. Someone who was previously planning to work for big pharma perhaps?

Hi Nick —

Thanks for the thoughtful post! As you said, we’ve thought about these kinds of questions a lot at 80k. Striking the right balance of content on our site, and prioritising what kinds of content we should work on next, are really tricky tasks, and there’s certainly reasonable disagreement to be had about the trade-offs.

We’re not currently planning to focus on neartermist content for the website, but:

So I think we’re probably covering a lot (though not all) of the benefits we can get by being pretty “big tent” while also trying to (1) not mislead people about the cause areas we think are most pressing (a reason you touch on above) and (2) focus our efforts where they'll be as useful as possible. On the latter point: to be honest, much of this is ultimately about capacity constraints. For example, we think that we could do more to make our site helpful and appealing to mid-career people (it can be off-putting for them currently due to a vibe of treating the reader like a 'blank slate'), and my current guess is that our marginal resources would be better put there in the near future vs. doing more neartermist content.

(I work at 80,000 Hours but on the 1on1 side rather than website.) Thanks for writing out your thoughts so clearly and thoroughly Nick. Thanks also for thinking about the issue from both sides - I think you’ve done a good job of capturing reasons against the changes you suggest. The main one I’d add is that having a lot more research and conversations about lots of different areas would need a very substantial increase in capacity.

I’m always sad to hear about taking away the impression that 80,000 Hours doesn’t care about helping present sentient creatures. I think the hardest thing about effective altruism to me is having to prioritise some problems over others when there are so many different sources of suffering in the world. Sometimes the thing that feels most painful to me is the readily avoidable suffering that I’m not doing anything about personally, like malaria. Sometimes it’s the suffering humans cause each other that it feels like we should be able to avoid causing each other, like cutting apart families on the US border. Sometimes it’s suffering that particularly resonates with me, like the lack of adequate health care for pregnancy complications and losses. I so much wish we were in a world where we could solve all of these, rather than needing to triage.

I’m glad that Probably Good exists to try out a different approach from us, and add capacity more generally to the space of people trying to figure out how to use their career to help the world most. You’re right that Probably Good currently has far lower reach than 80,000 Hours. But it’s far earlier in its journey than 80,000 Hours is, and is ramping up pretty swiftly.

Thanks Michelle - great to see 80,000 hours staff respond!

"I’m always sad to hear about taking away the impression that 80,000 Hours doesn’t care about helping present sentient creatures." - This is a bit of a side issue to the points I raised, but I think any passer by could easily get this impression from your website. I don't think its mainly about the prioritisation issue, just slightly cold and calculated presentation. A bit more warmth, kindness and acknowledgement of the pains of prioritisation could make the website feel more compassionate.

Even just some of this sentiment from your fantastic comment here, displayed on the website in some prominent places, could go a long way to demonstrating that 80,000 hours cares about current people:

"I think the hardest thing about effective altruism to me is having to prioritise some problems over others when there are so many different sources of suffering in the world. Sometimes the thing that feels most painful to me is the readily avoidable suffering that I’m not doing anything about personally, like malaria. Sometimes it’s the suffering humans cause each other that it feels like we should be able to avoid causing each other, like cutting apart families on the US border. Sometimes it’s suffering that particularly resonates with me, like the lack of adequate health care for pregnancy complications and losses. I so much wish we were in a world where we could solve all of these, rather than needing to triage."

Flagging quickly that ProbablyGood seems to have moved into this niche. Unsure exactly how their strategy differs from 80k hours but their career profiles do seem more animals and global health focused

I think they’re funded by similar sources to 80k https://probablygood.org/career-profiles/

Thanks Geoffry, yes I agree that ProbablyGood have now basically taken over the niche of high EV near-termist career advice and are a great resource. As a doctor, I think their content on medicine as a career is outstanding

There are a couple of reasons though (from above) why 80,000 hours might still be better off having 20-30% near-termist content despite there being a great alternative.

1. Perhaps the argument I'm most interested in is whether 80,000 hours might produce more long-termist workers in the long term by having more of a short term focus.

2. ProbablyGood will probably never be a "front page" of EA. I would imagine that 80,000 hours has 100-1000x the traffic of ProbablyGood, and I doubt many people first contact EA through ProbablyGood (happy to be corrected here though).

On your last point, if you believe that the EV from a "effective neartermism -> effective longtermism" career change is greater than a "somewhat harmful career -> effective neartermism" career change, then the downside of using a "somewhat harmful career -> effective longtermism" example is that people might think the "stopped doing harm" part is more important than the "focused on longtermism" part.

More generally, I think your "arguments for the status quo" seem right to me! I think it's great that you're thinking clearly about the considerations on both sides, and my guess is that you and I would just weight these considerations differently.

Thanks Isaac for the encouragement on the validity of the "arguments for the status quo". I'm not really sure how I weight the considerations to be honest, I'm more raising the questions for discussion.

Yes that's a fair point about the story at the end. I hadn't considered that the "stopped doing harm" part might make my example confusing. Maybe then I would prefer a "went from being a doctor" to "focused on longtermism" example, because otherwise it feels like a bit of a kick in the teeth to a decent chunk of the EA community who have decided that global health is a great thing to devote your life to ;).

Makes sense. To be clear, I think global health is very important, and I think it's a great thing to devote one's life to! I don't think it should be underestimated how big a difference you can make improving the world now, and I admire people who focus on making that happen. It just happens that I'm concerned the future might be an even higher priority, one that many people could be in a good position to address.

As one data point: I was interested in global health from a young age, and found 80K during med school in 2019, which led to opportunities in biosecurity research, and now I'm a researcher on global catastrophic risks. I'm really glad I've made this transition! However, it's possible that I would have not applied to 80K (and not gone down this path) if I had gotten the impression they weren't interested in near-termist causes.

Looking back at my 80K 1on1 application materials, I can see I was aware that 80K thought global health was less neglected than biosecurity, and I was considering bio as a career (though perhaps only with 20-30% credence compared to global health). If I'd been aware at the time just how longtermist 80K is, I think there's a 20-40% chance I would have not applied.

I think (name) is a great example of having a lot of impact, but I agree that an example shifting from global health is maybe unnecessarily dismissive. I don't think the tobacco thing is good - surely any remotely moral career advisor would advise moving away from that. Ideally a reader who shifted from a neutral or only very-mildly-good career to a great career would be better (as they do for their other examples). I'd guess 80K know some great examples? Maybe someone working exclusively on rich-country health or pharma who moved into bio-risk?

Thanks for your example - a 20-40% chance you wouldn't have applied is quite high. And I do think that if anyone (EA or otherwise) looked through the 80,000 hours website, they would probably get the impression that 80,000 hours weren't interested at all in near-termist causes.

Also I think you've nailed it with the "ideal" example career-shift here.

"Ideally a reader who shifted from a neutral or only very-mildly-good career to a great career would be better (as they do for their other examples). I'd guess 80K know some great examples? Maybe someone working exclusively on rich-country health or pharma who moved into bio-risk?"

Strongly agree. I definitely would like to see more content on neartermist causes/careers. But importantly, I would like to see this content contributed by authors who hold neartermist views and can do those topics justice. Whilst I am appreciative of 80,000 Hours and GWWC attempting to accommodate longtermism-skeptics with some neartermist content, their neartermist content feels condescending because it doesn't properly showcase the perspectives of Effective Altruists who are skeptical of longtermist framings.

I also personally worry 80,000 Hours is seen as the "official EA cause prioritisation" resource and this:

Having more neartermist content will help with this, but I also would like to see 80,000 Hours host content from authors with clashing views. E.g., 80,000 Hours makes a very forceful case that Climate Change is not a material X-Risk, and I would like to see disagreeing writers critique that view on their site.

I also think you hit the nail on the head about many readers being unreceptive to longtermism for concerns like tractability, and that is entirely valid for them.

Thanks Mohammed - yes as a mostly-near-termist myself I agree with many of your concerns as well. For this post though I was making an argument which assumes longtermist causes are by far the most important, but like you say there are plenty of other arguments that can be made if we don't necessarily assume that.

I agree that as 80,000 hours is so influential and a "front door" to Effective Altruism, the site could mislead readers into thinking there is an official "EA position" on the best careers and causes - although 80,000 hours do say they are "part of the EA community" rather than actively claiming a leadership role.

And I really like the idea of having some discourse and disagreement on the site itself, that feels like a genuine and "EA" style way to go about things.

And thinking about your "condescending" comment, as well as the career example I listed, the "Problems many of our readers prioritise" heading could easily be interpreted as condescension as well. I don't think 80,000 hours are trying to be condescending, but it is easy to interpret that way.

Plenty of Christians would love more neartermist career content (and would be unlikely to engage with 80k as it's currently branded). So over the past year a group of Christian EAs created an advisory for this, under the direction of EA for Christians

See www.christiansforimpact.org

Losing some parsimony, perhaps 80,000 Hours could allow users to toggle between a "short-termist" and "longtermist" ranking of top career paths? The cost of switching rankings seems low enough that ~anyone who's inclined to work on a longtermist cause area under the status-quo would still do so with this change.

Thanks I really like this idea and hadn't thought about it myself. Obviously there are still potential negative tradeoffs as discussed.

If this is de-railing the convo here feel free to ignore, but what do you mean concretely by the distinction between "near-termist" and "long-termist" cause areas here? Back in spring 2022 when some pretty senior (though not decision-critical) politicians were making calls for NATO to establish a no-fly zone over Ukraine, preventing Nuclear Catastrophe seemed pretty "near-termist" to me?

I also suspect many if not most AIXR people in the Bay think that AI Alignment is a pretty "near-term" concern for them. Similarly, concerns about Shrimp Welfare have only been focused on the here-and-now effects and not the 'long run digital shrimp' strawshrimp I sometimes see on social media.

Is near-term/long-term thinking actually capturing a clean cause area distinction? Or is it just a 'vibe' distinction? I think your clearest definition is:

But to me that's a philosophical debate, or perspective, right? Because of 80K's top 5 list, I could easily see individuals in each area making arguments that it is a near-term cause.

To be clear, I actually think I agree with a lot of what you say so I don't want to come off as arguing the opposite case. But when I see these arguments about near v long termism or old v new EA or bednets v scifi EA, it just doesn't seem to "carve nature at its joints" as the saying goes, and often leads to confusion as people argue about different things while using the same words.

Thanks those are some fair points. I think I am just using language that others use, so there is some shared understanding, even though it carries with it a lot of fuzziness like you say.

Maybe we can think of better language than "near term" or "long term" framing or just be more precise.

Open Phil had this issue - they now use 'Global Health & Wellbeing' and 'Global Catastrophic Risks', which I think captures the substantive focus of each.

You're assuming that the EV of switches from global health to biosecurity is lower than the EV of switching from something else to biosecurity. Even though global health is better than most cause areas, this could be false in practice for at least two reasons

Hey Thomas - I think Isaac was making a related point above as well.

Yes you're right that under my assumptions the potential EV of a switch from global health to biosecurity could well be higher than that of switching from something else. Like you said, especially if their global health work is bad, or if they are doing less effective things.

On the flipside someone could also be very, very good at global health work with a lot of specific expertise, passion and career capital, which they might not carry into their biosecurity job. This could at the very least narrow the EV gap between the 2 careers.

Agree and thanks for writing this up Nick!

I like 80k's recent shift to pushing its career guide again (what initially brought me into EA - the writing is so good!) and focusing on skill sets.