This is the full text of a post from "The Obsolete Newsletter," a Substack that I write about the intersection of capitalism, geopolitics, and artificial intelligence. I'm a freelance journalist and the author of a forthcoming book called Obsolete: Power, Profit, and the Race to Build Machine Superintelligence. Consider subscribing to stay up to date with my work.

[MAY 17, 2025 UPDATE: This piece cited a paper by Aidan Toner-Rodgers that has since been retracted by MIT, after it "conducted an internal, confidential review and concluded that the paper should be withdrawn from public discourse."]

Depending on who you follow, you might think AI is hitting a wall or that it's moving faster than ever.

I was open to the former and even predicted back in June that the jump to the next generation of language models, like GPT-5, would disappoint. But I now think the evidence points toward progress continuing and maybe even accelerating.

This is primarily thanks to the advent of new "reasoning" models like OpenAI's o-series and DeepSeek, a Chinese open-weight model that is nipping at the heels of the American frontier. In essence, these models spend more time and compute on inference, "thinking" about harder prompts, instead of just spitting out an answer.

In my June prediction, I wrote that "We haven't seen anything more than marginal improvements in the year+ since GPT-4." But I now think I was wrong.

Instead, there's a widening gap between AI's public face and its true capabilities. I wrote about this in a TIME Ideas essay that was published yesterday. I hit on many of its key points here, but there's a lot more in the full piece, and I encourage you to check it out!

AI progress isn't stalling — it's becoming increasingly illegible

I argued that while everyday users still encounter chatbots that can't count the "Rs" in "strawberry" and the media declares an AI slowdown, behind the scenes, AI is rapidly advancing in technical domains that may end up turbo-charging everything else.

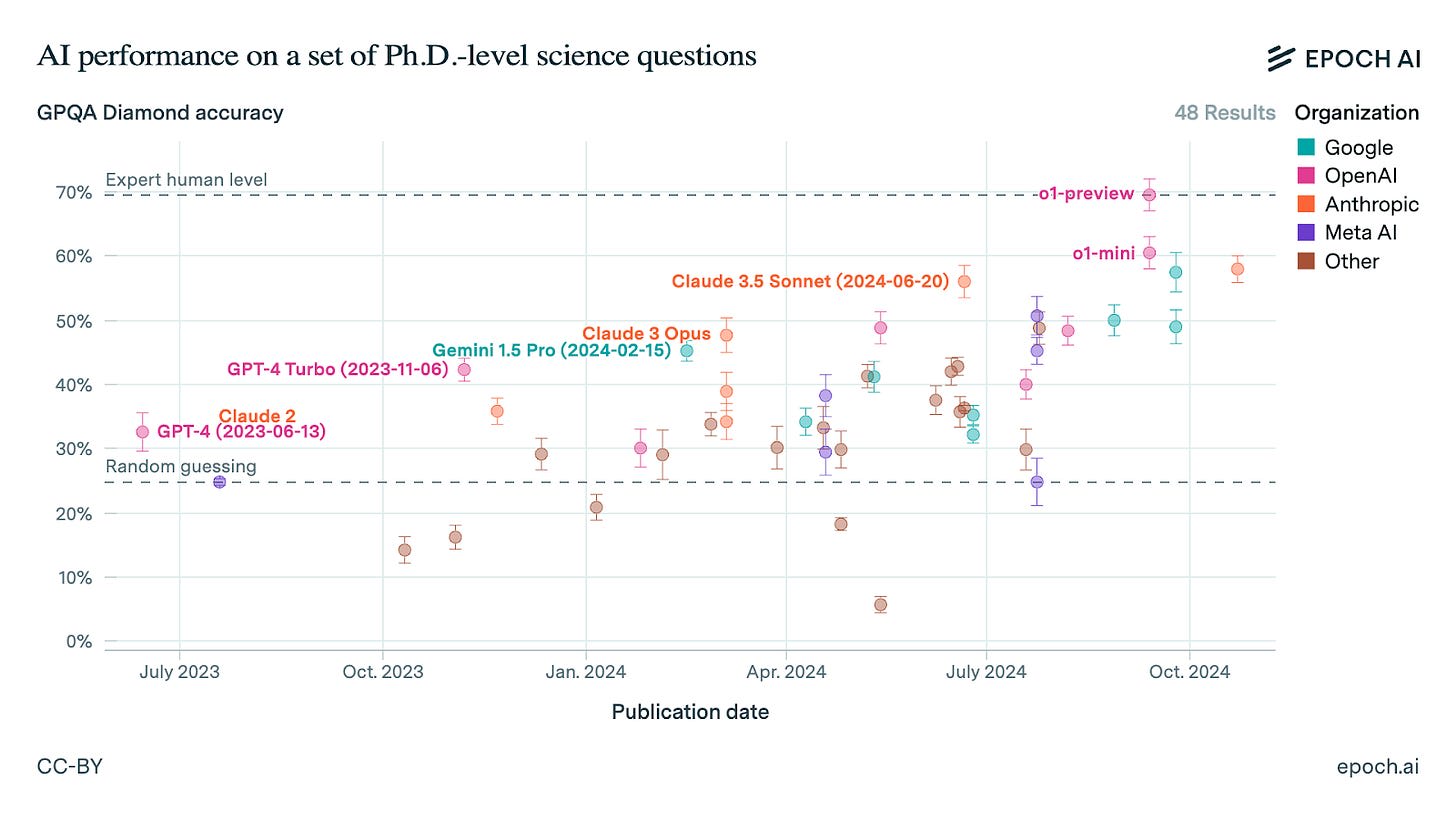

For example, in ~1 year, AI went from barely beating random chance to surpassing experts on PhD-level science questions. OpenAI says that its latest model, o3, now beats human experts in their own field by nearly 20%.

However, as I wrote in TIME:

the vast majority of people won't notice this kind of improvement because they aren't doing graduate-level science work. But it will be a huge deal if AI starts meaningfully accelerating research and development in scientific fields, and there is some evidence that such an acceleration is already happening. A groundbreaking paper by Aidan Toner-Rodgers at MIT recently found that material scientists assisted by AI systems "discover 44% more materials, resulting in a 39% increase in patent filings and a 17% rise in downstream product innovation." Still, 82% of scientists report that the AI tools reduced their job satisfaction, mainly citing "skill underutilization and reduced creativity."

Source: Epoch AI

In just months, models went from 2% to 25% on possibly the hardest AI math benchmark in existence.

And perhaps most significantly, AI systems are getting way better at programming. From the TIME essay:

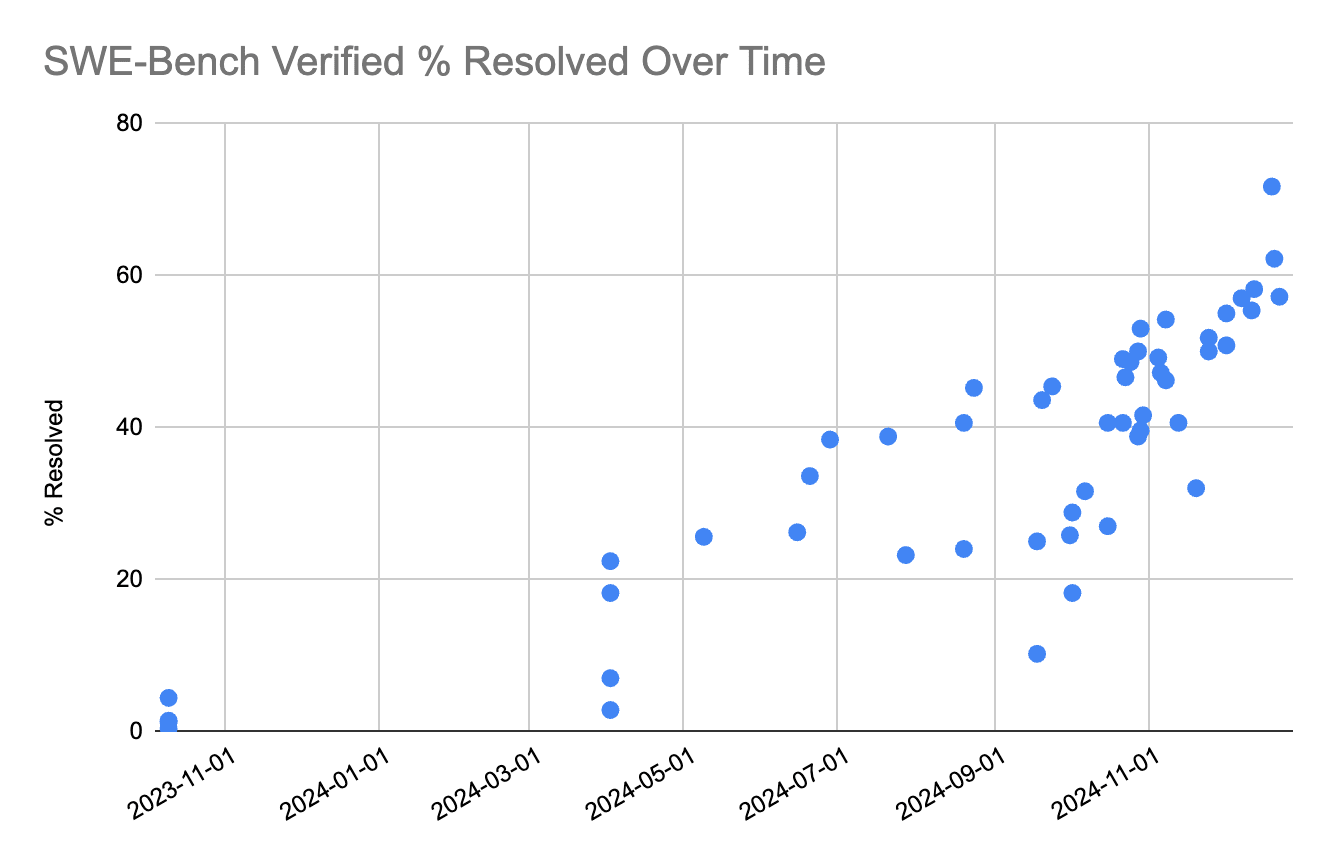

In an attempt to provide more realistic tests of AI programming capabilities, researchers developed SWE-Bench, a benchmark that evaluates how well AI agents can fix actual open problems in popular open-source software. The top score on the verified benchmark a year ago was 4.4%. The top score today is closer to 72%, achieved by OpenAI's o3 model.

Sources: SWE-Bench, OpenAI, chart by Garrison Lovely

There have been similarly dramatic improvements on other benchmarks for programming, math, and machine learning research. But unless you follow the industry closely, it's very hard to figure out what this actually means.

Here's an attempt to spell that out from the TIME essay:

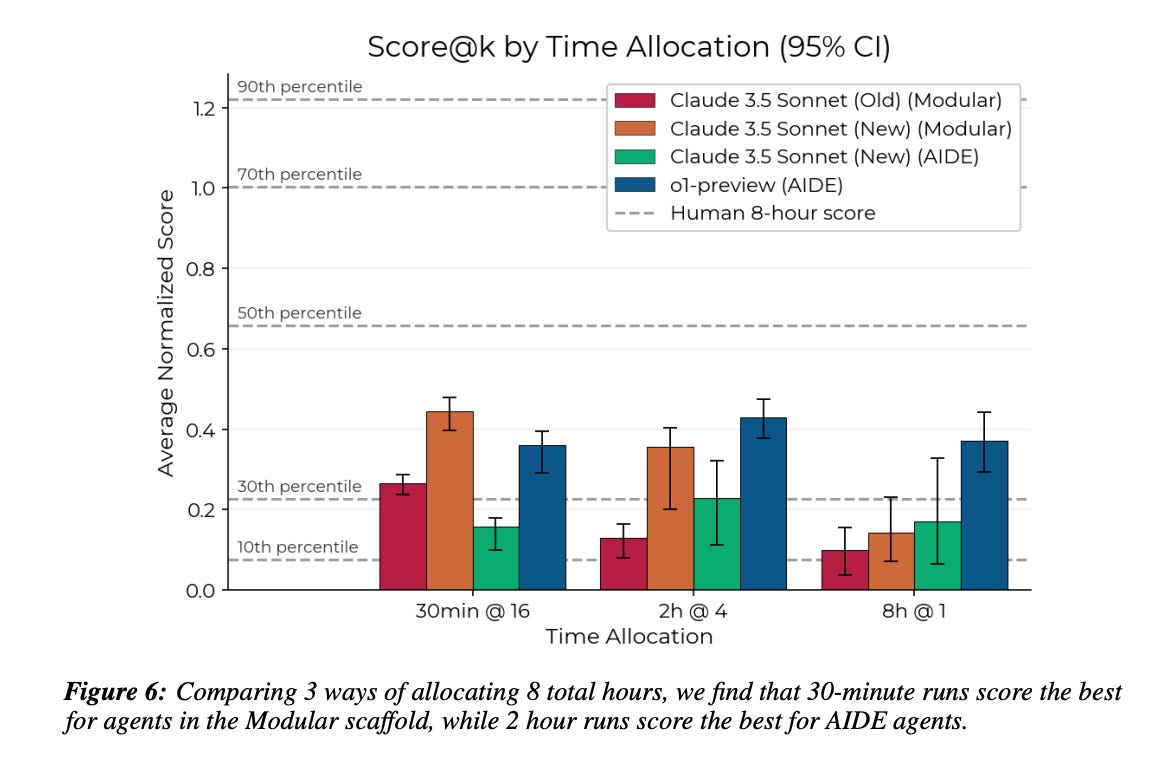

Perhaps the best head-to-head matchup of elite engineers and AI agents was published in November by METR, a leading AI evaluations group. The researchers created novel, realistic, challenging, and unconventional machine learning tasks to compare human experts and AI agents. While the AI agents beat human experts at two hours of equivalent work, the median engineer won at longer time scales.

But even at eight hours, the best AI agents still managed to beat well over one-third of the human experts. The METR researchers emphasized that there was a "relatively limited effort to set up AI agents to succeed at the tasks, and we strongly expect better elicitation to result in much better performance on these tasks." They also highlighted how much cheaper the AI agents were than their human counterparts.

(I'd expect OpenAI's latest model, o3, to do significantly better on the METR evaluation based on its other scores.)

Source: METR

But I can't put the significance of this research better than METR researcher Chris Painter did. He asks us to imagine a dashboard monitoring various AI risks — from bioweapons to political manipulation. If any one capability starts rising, we can work to mitigate it. But what happens when the dashboard shows progress in AI's ability to improve itself? Chris writes:

If AI systems are approaching the point where they can improve themselves, quickly teach themselves new capabilities, how can you trust any of the other panels on your dashboard? If this one capability starts to come online, who can say what comes next?

No one wakes up every morning excited to build an AI system that's explicitly excellent at causing massive harm, but the tech industry has automating AI R&D squarely on its roadmap. This capability could be a crucial inflection point for humanity, and potentially destabilizing.

For more on how this kind of recursive self-improvement could occur and what might happen next, check out this section of my Jacobin cover story.

Smarter models can scheme better

Meanwhile, there's a bunch of new research finding that smarter models are more capable of scheming, deception, sabotage, etc.

In TIME, I spelled out my fear that this mostly invisible progress will leave us dangerously unprepared for what's coming. I worried that politicians and the public will ignore this AI progress, because they can't see the improvements first-hand. All the while, AI companies will continue to advance toward their goal of automating AI research, bootstrapping the automation of everything else.

Right now, the industry is mostly self-regulating, and, at least in the US, that looks unlikely to change anytime soon — unless there's some kind of "warning shot" that motivates action.

Of course, there may be no warning shots, or we may ignore them. Given that many of the leading figures in the field say "no one currently knows how to reliably align AI behavior with complex values," this is cause for serious concern. And the stakes are high, as I wrote in TIME:

The worst-case scenario is that AI systems become scary powerful but no warning shots are fired (or heeded) before a system permanently escapes human control and acts decisively against us.

o3 and the media

I had already written the first draft of the TIME essay when o3 was announced by OpenAI on December 20th. My timeline was freaking out about the shocking gains made on many of these same extremely tough technical benchmarks.

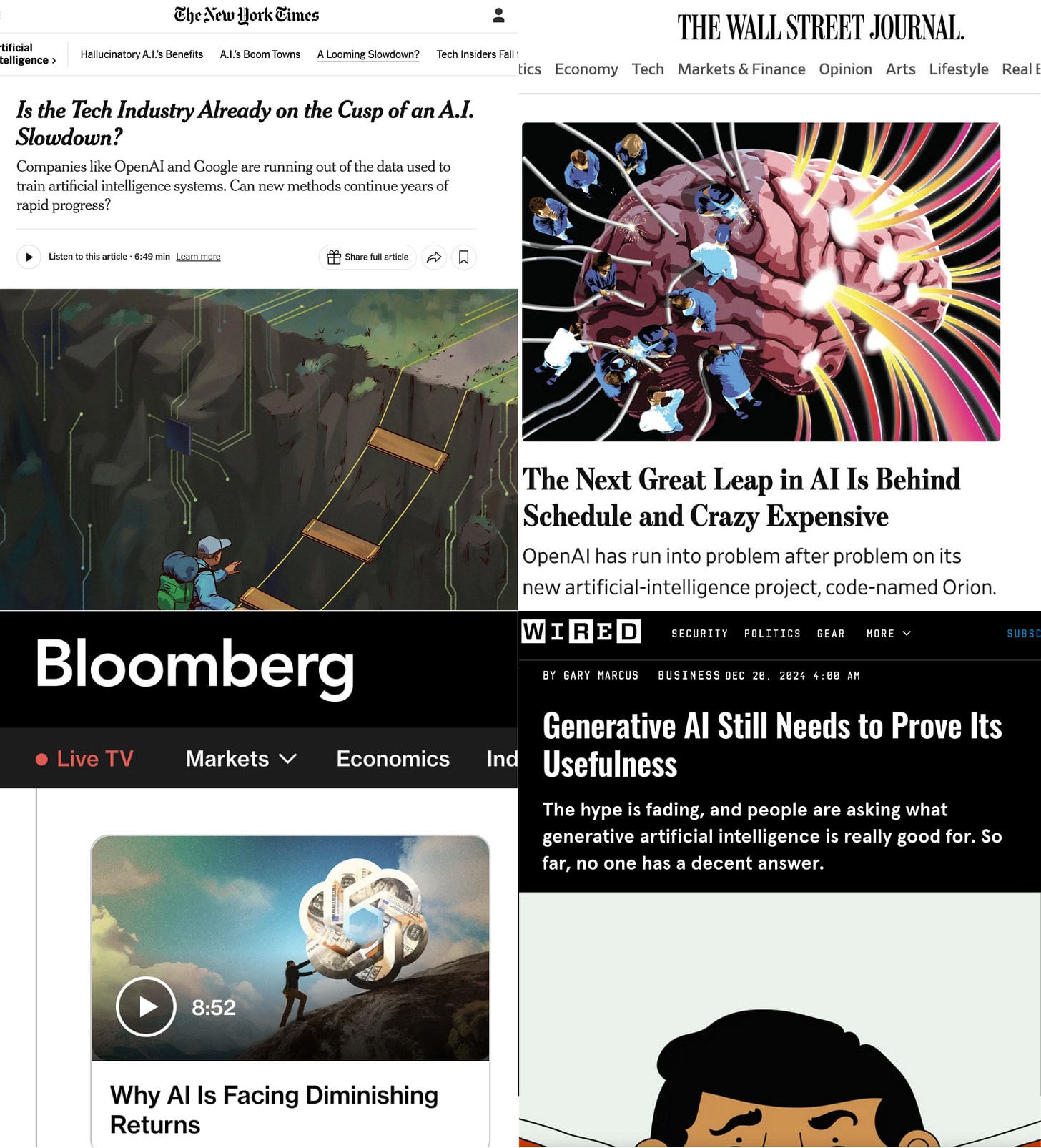

I thought, 'oh man, I need to rework this essay because of how much o3 undermines the thesis that AI progress is stalling out!' But the mainstream media dramatically under-covered the announcement, with most big news sites making no mention of it at all.

In fact, the day after the o3 announcement you could find headlines in the NYT, WIRED, WSJ, and Bloomberg suggesting AI progress was slowing down!

This is not a knock on these individual stories, which contain important reporting, analysis, causes for skepticism, etc. But collectively, the mainstream media is painting a misleading picture of the state of AI that makes it more likely we'll be unprepared for what's coming. (Shakeel Hashim of Transformer had a great, relevant piece on journalism and AGI for Nieman Lab in December.)

Just as one deep learning paradigm might be stalling out, a new one is emerging and iterating faster than ever. There were almost 3 years between GPT-3 and 4, but o3 was announced just ~3.5 months after its predecessor, with huge benchmark gains. There are many reasons this pace might not continue, but to say AI is slowing down seems premature at best.

The gap between AI's public face and its true capabilities is widening by the month. The real question isn't whether AI is hitting a wall — it's whether we'll see what's coming before it's too late.

If you enjoyed this post, please subscribe to The Obsolete Newsletter.

MIT just put up a notice that they've "conducted an internal, confidential review and concluded that the paper should be withdrawn from public discourse".

Yeah, I asked my editor at TIME about adding an update. Will edit this piece as well.

Quick point, but I think this title is overstating things. "Is AI Hitting a Wall or Moving Faster Than Ever?" That sounds like it's presupposing that the answer is extreme, when the truth is almost always somewhere in between.

I've seen a lot of media pieces use this naming convention ("Edward Snowden: Hero or Villain?"), and I generally recommend against it going forward.

Hi Garrison,

Do you have any thoughts on the median AI expert thinking full automation of tasks or occupations is 20% likely by 2048, and 80% likely by 2103? I am open to betting 10 k$ against short timelines.

Executive summary: While public perception and media coverage suggest AI progress is slowing, evidence shows rapid advancement in technical capabilities, particularly in reasoning and research tasks, creating a dangerous gap between AI's apparent and actual capabilities.

Key points:

This comment was auto-generated by the EA Forum Team. Feel free to point out issues with this summary by replying to the comment, and contact us if you have feedback.