Introduction

As Chief Strategy Officer at Rethink Priorities, I am sometimes asked to share information with those interested in supporting or collaborating with us, or just wanting to learn more about us, so that they can understand the nature of our work, our motivations, and some of our past projects. This post intends to provide an accessible high-level overview, including some key uncertainties and considerations that help drive our approach, along with some select examples of our work, which overall may serve as a useful reference.

Fundamentally, Rethink Priorities (RP) works to do good at scale, but we’re not committed to advancing any particular type of good or limited to a specific approach.

In this piece, we will briefly lay out some of the uncertainties people face when trying to do good, which are some of the motivating factors for our approach. Key uncertainties include (a) limited initial evidence, (b) challenges in measuring progress, and (c) difficulty determining the "right" goal in the first place. Next, we highlight several key considerations for our work, including skepticism, transparency, collaborating with key decision-makers to offer practical guidance, and supporting the development of emerging fields. In doing all that, we will also highlight several examples of projects and some initial results:

- Our Worldview Investigations Team has developed frameworks (such as the Cross-Cause Effectiveness Model and the Moral Parliament Tool) to help donors align their giving with their values and better acknowledge the deep uncertainties involved in such decisions.

- Our Global Health and Development Department’s modeling and reviews (e.g., on lead exposure) have helped to redirect millions of dollars of grants to more cost-effective options.

- Our Moral Weight Project wrestled with the difficult question of how to compare welfare across different species—a task that is arguably as philosophically challenging as it is practically important.

- Our Animal Welfare Department has sought to understand the capacity for sentience among invertebrates, such as insects and shrimps, and promote their wellbeing. As these were nascent (sub)fields, we have had the opportunity to make meaningful contributions.

Note: This piece is meant to be an accessible overview, so it is not comprehensive. For instance, it does not cover all of our work, which spans a variety of additional areas and approaches, including surveys and data analysis, global catastrophic risks, and fiscally sponsoring promising initiatives.

On the uncertainties

One of the things that motivates me daily to work at RP is the sheer amount of uncertainty around the best way of doing good. RP exists to help address these uncertainties, but we don’t claim to have all the answers. There are still many, many unanswered questions: How much should we invest in solving a particular problem? Which approach should we adopt? How can we be sure we're actually making a positive difference?

When your aim is making money, it’s relatively straightforward to check if you’re succeeding. Doing good isn’t like that. What might for-profit businesses look like if we didn’t know what it meant to make money and there were no direct metrics like the size of your bank account or share price? This scenario presents a rough approximation of the reality that we in the non-profit world face. It is extremely difficult to decide how to measure progress on goals like improving wellbeing, satisfying preferences, or ensuring justice.

Even beyond the challenge of gathering evidence or assigning probabilities to claims of effectiveness, it’s difficult to know whether we’re pursuing the "right" goal in the first place—or whether we should focus on just one goal or many.

All of this fundamental uncertainty is a double-edged sword: it is daunting that there are so many unknowns, and yet, it is highly motivating that there are so many important questions to investigate and so much work still to be done. At RP, we are constantly refining our approach. We are not married to particular approaches or methods. Put simply, our mission is to do good (ideally large amounts), but not some specific kind of good.

Our approach applied: Some examples of impact

How RP embraces uncertainty

We’re skeptics, open to revising our methods as we learn more. Our focus is not just on deepening our own understanding, but on helping others to navigate their uncertainties. We aim to emulate GiveWell’s high levels of skepticism and transparency toward investigating global health and development interventions, and apply that gold standard across cause areas.

To this end, our Worldview Investigations Team developed free and publicly available tools that rigorously quantify the value of different courses of action, taking into account multiple decision theories. These cause prioritization tools help philanthropists to model uncertainty and better visualize how different assumptions, moral views, and decision-making procedures might affect their choices.

Example 1: Aligning donors’ decisions with their values while accounting for moral uncertainty

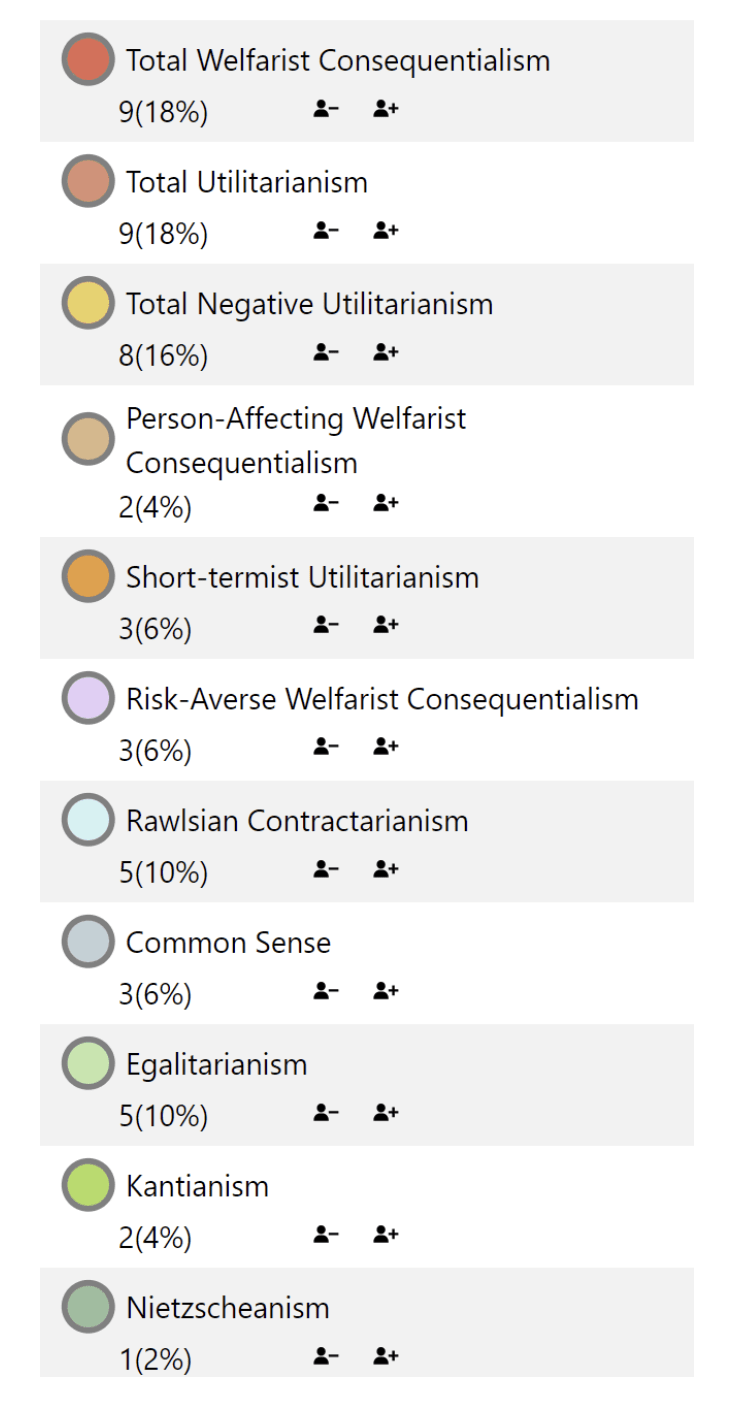

The Moral Parliament Tool models different worldviews by using delegates to represent a set of normative commitments, including first-order moral theories, values, and attitudes toward risk. It works by allowing users to:

- Input their confidence in various worldviews.

- Explore methods for reaching decisions about charitable giving.

The tool models ways to approach resource allocation decisions under normative uncertainty. It shows how different philosophies and decision-making approaches affect philanthropic choices (see the accompanying image for reference).
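To make the idea concrete, here is a minimal sketch of how credences in worldviews could feed into two different allocation methods. It is an illustration only, not a description of how the Moral Parliament Tool is implemented: the worldview names, project names, scores, and both functions are hypothetical assumptions introduced for this example.

```python
from typing import Dict

# Hypothetical inputs: a user's credence in each worldview (summing to 1) and
# each worldview's score for each project. These numbers are made up for
# illustration and do not come from RP's tool.
CREDENCES: Dict[str, float] = {
    "worldview_a": 0.5,
    "worldview_b": 0.3,
    "worldview_c": 0.2,
}
SCORES: Dict[str, Dict[str, float]] = {
    "worldview_a": {"project_x": 10.0, "project_y": 4.0, "project_z": 1.0},
    "worldview_b": {"project_x": 2.0, "project_y": 9.0, "project_z": 3.0},
    "worldview_c": {"project_x": 1.0, "project_y": 2.0, "project_z": 8.0},
}


def proportional_allocation(
    credences: Dict[str, float],
    scores: Dict[str, Dict[str, float]],
    budget: float,
) -> Dict[str, float]:
    """Each worldview controls a budget share equal to its credence and
    spends that whole share on its own top-scored project."""
    projects = list(next(iter(scores.values())))
    allocation = {project: 0.0 for project in projects}
    for worldview, credence in credences.items():
        favourite = max(scores[worldview], key=scores[worldview].get)
        allocation[favourite] += credence * budget
    return allocation


def expected_value_allocation(
    credences: Dict[str, float],
    scores: Dict[str, Dict[str, float]],
    budget: float,
) -> Dict[str, float]:
    """Give the whole budget to the project with the highest
    credence-weighted average score."""
    projects = list(next(iter(scores.values())))
    expected = {
        project: sum(credences[w] * scores[w][project] for w in credences)
        for project in projects
    }
    best = max(expected, key=expected.get)
    return {project: (budget if project == best else 0.0) for project in projects}


if __name__ == "__main__":
    print(proportional_allocation(CREDENCES, SCORES, 100.0))
    print(expected_value_allocation(CREDENCES, SCORES, 100.0))
```

With these made-up numbers, the proportional method splits the budget across all three projects, while the expected-value method puts everything into `project_x`. The same credences can therefore lead to quite different giving decisions depending on the decision procedure chosen.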

Our team is now looking to further develop this tool and its Portfolio Builder tool for use with a broader set of donors.

How RP works with decision-makers

RP also provides value by turning high-quality research on important issues into practical guidance, which we work to share with key stakeholders. Our stakeholders include non-profit organizations, government agencies, policymakers, fellow research institutes, non-profit entrepreneurs, foundations, individual philanthropists, and other decision-makers. Wherever possible, we seek stakeholders’ input on our project planning, seek their feedback on research, and continue engagement with them beyond the publication of the work.

Below we will highlight two examples: one from our work on global health and development and the other from our worldview investigations.

Example 2: Partnerships and outcomes of our global health and development work

Traditionally, foundations in the global health and development space fund projects based on preferences toward a particular region or type of intervention (they could be described as local optimizers). In contrast, we believe in allocating resources based on cost-effectiveness (which could be described as acting as global optimizers). GiveWell and Open Philanthropy are examples of funders who work as global optimizers. While most of our Global Health and Development Department’s work comes from commissioned projects from such funders, we also work with our networks to broaden the impact of this research and influence other actors.

Recent outcomes from our partnership-building efforts include:

- RP’s investigations into health risks from lead exposure influenced an $8M grant toward an intervention that the team believes is a highly cost-effective way to prevent and reduce the effects of lead exposure.

- A member of our network shared our research findings with an individual philanthropist who subsequently redirected tens of millions of dollars in funding to a more impactful field.

- We had the opportunity to present RP’s investigation into the value of research in influencing actors at different levels of cost-effectiveness at a private event. A major foundation that advises individual donors requested additional information, and shared that this presentation sparked internal discussions about their advising and a nine-figure grantmaking fund.

Example 3: Broadening moral circles

Decision-makers interested in animal welfare face difficult decisions when allocating their resources. Determining how much to focus on different species entails making judgments about the overall quality of life that different species can experience (i.e. their capacity for welfare). Historically, animal welfare donors have had to rely on theoretical philosophical or scientific considerations or even their gut instincts, with limited practical guidance on how to approach cross-species grant decisions.

To address this issue, we conducted a rigorous investigation that resulted in a model that foundations—or even governments—can directly apply when making decisions.

This Moral Weight Project culminated in a welfare range table and a series of influential research posts. Oxford University Press will also publish an upcoming book on the research edited by RP’s Bob Fischer. The Moral Weight Project’s findings have generated significant discussion in academic and non-profit circles. For example, Animal Charity Evaluators are now integrating elements of the work into their evaluation criteria. We are also in active conversations with stakeholders in governmental bodies in the US and in the Netherlands about how they can incorporate this model into their work.

Read more about our moral weight work, our tools and surveys.

How RP supports field building

One of the ways in which RP creates impact is by helping to develop fields or subfields for causes that seem pressing, but have historically been overlooked. This work may entail: searching for niches where new research could lead to large-scale impact, conducting initial research, rallying partners, incubating or supporting new projects in a field, and building the talent pipeline by, for example, offering fellowships.

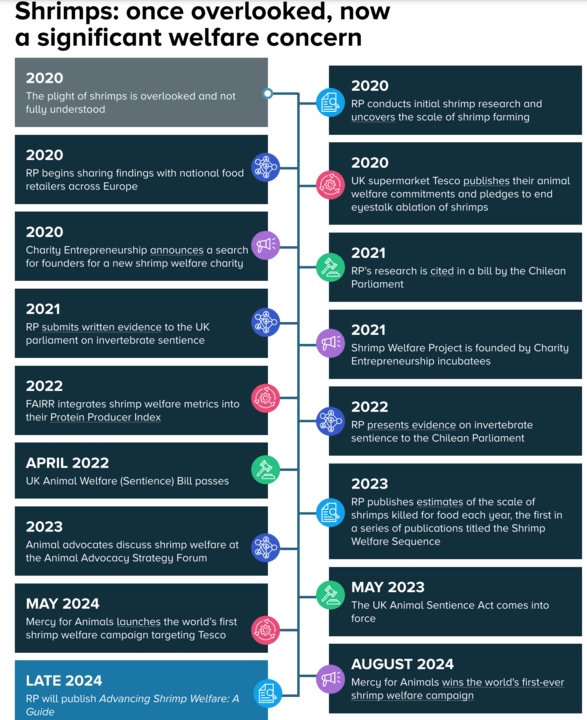

One example of this type of work is our early research on the sentience of invertebrate animals, which led us to advance the subfields of insect welfare and shrimp welfare.

Example 4: Escalating the importance of shrimp welfare within the animal advocacy field

After investigating the evidence that some invertebrates may be sentient, our Animal Welfare Department identified a critical gap in knowledge regarding their welfare. We conducted extensive research to better understand the scale of the issue and found that, at the time of the research, shrimp production affected more individuals alive than insect farming, fish captures, or the farming of any other vertebrates for human consumption. The team also investigated the major welfare threats these animals face. Their findings continue to help bring much-needed clarity, enabling advocates and grantmakers to prioritize welfare issues and tackle the primary sources of suffering for farmed shrimps.

RP’s research opened up new impact pathways, influencing work on policy change, corporate commitments, and strategic shifts within the animal welfare community, alongside legitimizing shrimp welfare as an important concern. See the accompanying graphic for more on how our shrimp welfare work contributed to impact in policy, legislation, non-profit entrepreneurship, and industry over time.

Read more about our shrimp welfare work, as well as our efforts to advance farmed animal welfare policy across the EU.

Reflections and looking forward

Through our diverse body of work in different areas, we seek to decrease the uncertainties that people face when trying to improve the world. Moreover, we work to catalyze action on outstanding opportunities by collaborating with decision-makers to help them be more effective and even develop new subfields. In this sense, Rethink Priorities is a think-and-do tank.

Reflecting on our work, we think that many of our self-generated project ideas, some of which we financed using RP’s unrestricted funding, have been our most innovative or important work, resulting in some of the greatest impact over the years. Key examples of self-funded work include invertebrate sentience, moral weights and welfare ranges, the cross-cause model, and the Causes and Uncertainty: Rethinking Value in Expectation (CURVE) sequence. We have learned many lessons from this work, and remain open to ways in which we can improve. Overall, we believe that RP is currently in a position to keep exploring new avenues for impact as long as we have flexible support to seize opportunities.

We invite interested readers to learn more about RP via our research database and to stay updated on new work by subscribing to our newsletter. Also, please feel free to email us any of your questions or feedback!

Acknowledgments

Rethink Priorities is a think-and-do tank dedicated to informing decisions made by high-impact organizations and funders across various cause areas. This post is authored by Kieran Greig. Thanks to Marcus A. Davis, Daniela Waldhorn, John Firth, David Moss, Janique Behman, Hannah Tookey, Whitney Childs, and Henri Thunberg for having made significant contributions leading up to this text. Sherry Yang is to be credited for graphic design. Special thanks go to Rachel Norman for substantial editing.
