Life is a response to being

Part II

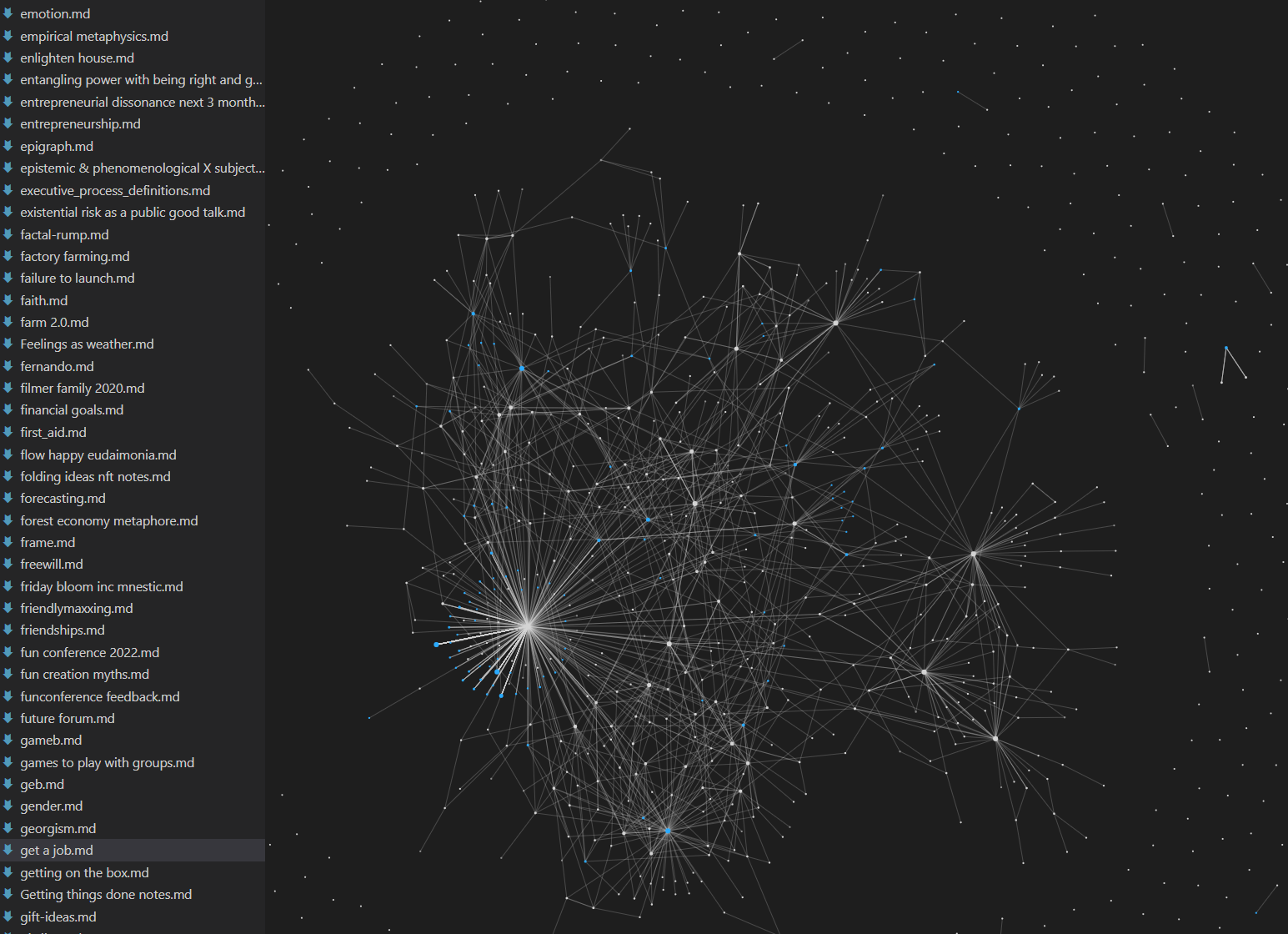

Over the course of my twenties, I'd accumulated thousands of hastily jotted notes. Thoughts that had grown in the gaps between work and play, which I'd managed to save before they were lost off the cliff of forgetting.

Thoughts like, "I'm starting to suspect it's possible to be robustly happy on purpose..." or "Inadequate tools for consensus keeps coming up as a kind of problem behind all problems I care about".

Upon arrival, I didn't fully understand why I'd felt so strongly I had to go to a cabin in the woods to be alone for a month. But I knew it had something to do with these notes. They were all the errant Frisbees my mind had wanted to chase when it should have been working, or shopping, or trying to sleep. Thoughts that couldn't be fully explored for lack of space.

I spent my entire first week in the cabin working through each text file A to Z. From amsterdam-trip-reflections.txt all the way through to xmas-gifts-2021.txt.

Many felt irrelevant to who I was now.

Others felt revelatory.

I began a fresh note (threads.txt) to tease out the myriad themes, inquiries, and projects tangled across these vast tracts of text. From ~500 text files I pulled ~200 "threads", and wrote the title of each down in pen on an index card.

Everything I'd ever been passionate about in the last 7 years. Anytime a book, or a podcast, or someone's tipsy rant landed in my brain and flowered into violent spirals of thought. It was all here, finally down in one place.

Personal and collective flourishing, how we could better make sense of our shared condition, why is community so scarce if we all crave it, escaping the meaning crisis, x-risk, governance, prediction markets, crypto, effective altruism, dating, what's up with the free energy principle, memetics, sense-making, dungeons and dragons, gender, origins of life, suffering, living in new york, progress studies, dance, game b, virtue, vocation, factory farming, intentional community, the qualia research institute, charter cities apollo longevity vTaiwan attachment the meta crisis powerlifting axiology circling functional programming awakening biotech aesthetics metamorphosis *mind explodes*

For days I couldn't bear to look at it. I played video games, went for walks, and sat in the woods filling my copy of Walden with underlines (feeling enormously grateful to the EAs I met passing through Berlin, who insisted I buy this weird American book).

Eventually, I felt brave enough to sit down with some tea and frown at it all. Slowly, over the course of a morning, the reality of my situation began to sink in.

For eons, matter and information and who knows what else had been up to something incomprehensibly complicated.

And now, a ways into the whole affair, somehow, apparently, I'm here.

I'm looking out from behind these eyes.

I have these hands.

And now it's my turn to be alive.

To face the same question posed to every ancestor who came before me...

What the actual fuck?

What's going on?

How could it be that this is happening?

How is it that I am??

Who authorised this?

Is it good?

Am I meant to respond to this in some way!?

Every time I'd had some space to slow down a little, this was the question looming over my shoulder. The question that forever makes me feel I need more time, more space, more room to step all the way back. To ask:

How do I want to respond?

How do I want to respond to being human? To waking up a couple billion years into whatever it is that's going on with the fact that reality is unfolding moment to moment, and just plain refuses to stop even though it's obviously absurd.

I realised this is why I'd felt so strongly I needed a month of space just to stop and think. Why I'd spent a week filling a kitchen table with chopped-up index cards and bad handwriting.

That I am, and must respond to that fact, is a profoundly vexing puzzle. It was in the context of that puzzle that these threads made sense. They were hints.

This hint came from that drive in 2019, listening to Dave Chalmers explain The Hard Problem of Consciousness. This one here from the book Red Plenty, that one there from a conversation in Mexico. Every time, there's this feeling...

I've no idea what's going on with the fact that I'm alive, but there's something about this idea that seems relevant in some way to what's going on... relevant to the puzzle of what a response commensurate to existing could possibly entail.

Beyond any particular project, or goal, or career trajectory, this is what I want most for my life. To have responded productively to reality as it was presented to me.

I started calling this project I was undertaking "Apollo". After the god of light, logic, and long journeys into the unknown (spawning, obviously, apollo.txt).

Undertaking your own Apollo project is a personal long reflection. It's a response to forgetting, to the cheeky puzzle of existence staring us in the face every second of every day, and to all the hints we chance upon as we engage with reality.

It's a radical act of sensemaking by which you attempt to terraform the landscape over which your time flows. Trying to bend the course of your days just a little more towards your best guess as to what might really matter.

My purpose in going to Walden Pond was not to live cheaply nor to live dearly there, but to transact some private business with the fewest obstacles; to be hindered from accomplishing which for want of a little common sense, a little enterprise and business talent, appeared not so sad as foolish.

- Walden

Apollo is going out to where there's no light so as to spy the north star, so you may return home with some manner of almanac to guide your way.

This sequence is my own.

Coming up next: Space

I'm so happy you're writing down this story! Looking forward to reading the whole sequence :)

Thanks for following along! <3