This is the introduction of an in-progress report called Strategic Perspectives on Long-term AI Governance (see sequence).

- Aim: to contribute to greater strategic clarity, consensus, and coherence in the Long-term AI Governance field, by disentangling current and possible approaches, and providing insight into their assumptions and cruxes. Practically, the aim is for this to enable a more informed discussion about what near-term actions we can or should pursue to ensure the beneficial development and impact of Transformative AI in the long-term.

- Epistemic status: attempted theory-building, with all the strengths and pitfalls of map-drawing. Draws on years of research in the space and >2 years of focused conversations on this topic, but views are tentative, presented in order to enable further discussion.

- Status: This is a project that I will be producing over the coming months. The breadth of topics and perspectives covered means that some sections are hastily sketched: as I continue developing the report, I welcome feedback and commentary. I am grateful to the many people who have already given feedback and encouragement.

Primer

Tl;dr: look at this Airtable.

The Long-term AI Governance community aims to shape the development and deployment of advanced AI--whether understood as Transformative AI (TAI) or as Artificial General Intelligence (AGI)--in beneficial ways.

However, there is currently a lack of strategic clarity, with disagreement over relevant background assumptions, what actions to take in the near-term, the strengths and risks of each approach, and where different approaches might strengthen or trade off against one another.

This sequence will explore 15 different Strategic Perspectives on long-term AI governance, examining their distinct:

- assumptions about key strategic parameters that shape transformative AI, in terms of technical landscape and governance landscape;

- theory of victory and rough impact story;

- internal tensions, cruxes, disagreements or tradeoffs within the perspective;

- historical analogies and counter-examples;

- recommended actions, including intermediate goals and concrete near-term interventions;

- suitability, in terms of outside-view strengths and drawbacks.

Project Roadmap

(Links to be added as entries come out)

- I introduce and sketch this sequence (you are here);

- I give the background and rationale for this project, by discussing some of the challenges faced by the Long-term AI Governance field, comparing strategic clarity, consensus, and coherence, and mapping how other work has contributed to questions around strategic clarity;

- I frame the goals and scope of this project--what it does, and does not aim to do;

- I sketch the components of a strategic perspective (i.e. the space of arguments or cruxes along which perspectives can differ or disagree);

- I set out 15 distinct Strategic Perspectives in Long-term AI Governance, clustering different views on the challenges of AI governance (see below);

- I reflect on the distribution of these perspectives in the existing Long-term AI Governance community; and discuss four approaches to navigating between them;

- In four Appendices, I cover:

- (1). Definitions of key terms around advanced AI, AI governance, long-term(ist) AI governance, theories of impact, and strategic perspectives;

- (2). A review of existing work in long-term AI governance, and how this project relates to it;

- (3). Scope, limits and risks of the project;

- (4). A taxonomy of relevant strategic parameters in the technical and governance landscapes around TAI, and possible assumptions across them.

Summary of perspectives

| Perspective | In (oversimplified) slogan form |
|---|---|
| Exploratory | We remain too uncertain to meaningfully or safely act; conduct high-quality research to achieve strategic clarity and guide actions |
| Pivotal Engineering | Prepare for a one-shot technical ‘final exam’ to align the first AGI; followed by a pivotal act to mitigate risks from any unsafe systems |
| Prosaic Engineering | Develop and refine alignment tools in existing systems, disseminate them to the world, and promote AI lab risk mitigation |
| Partisan | Pick a champion to support in the race, to help them develop TAI/AGI first in a safe way, and/or in the service of good values |
| Coalitional | Create a joint TAI/AGI program to support, to avert races and share benefits |
| Anticipatory | Regulate by establishing forward-looking policies today, which are explicitly tailored to future TAI |
| Path-setting | Regulate by establishing policies and principles for today's AI, which set good precedent to govern future TAI |
| Adaptation-enabling | Regulate by ensuring flexibility of any AI governance institutions established in the near-term, to avoid suboptimal lock-in and enable their future adaptation to governing TAI |
| Network-building | Nurture a large, talented and influential community, and prepare to advise key TAI decision-makers at a future 'crunch time' |
| Environment-shaping | Improve civilizational competence, specific institutions, norms, regulatory target surface, cooperativeness, or tools, to indirectly improve conditions for later good TAI decisions |
| Containing | Coordinate to ensure TAI/AGI is delayed or never built |
| System-changing | Pursue fundamental changes or realignment in the world as precondition to any good outcomes |
| Skeptical | Just wait-and-see, because TAI is not possible, long-term impacts should not take ethical priority, and/or the future is too uncertain to be reliably shaped |
| Prioritarian | Other existential risks are far more certain, pressing or actionable, and should gain priority |
| ‘Perspective X’ | [something entirely different, that I am not thinking of] |

Table 1: strategic perspectives, in slogan form

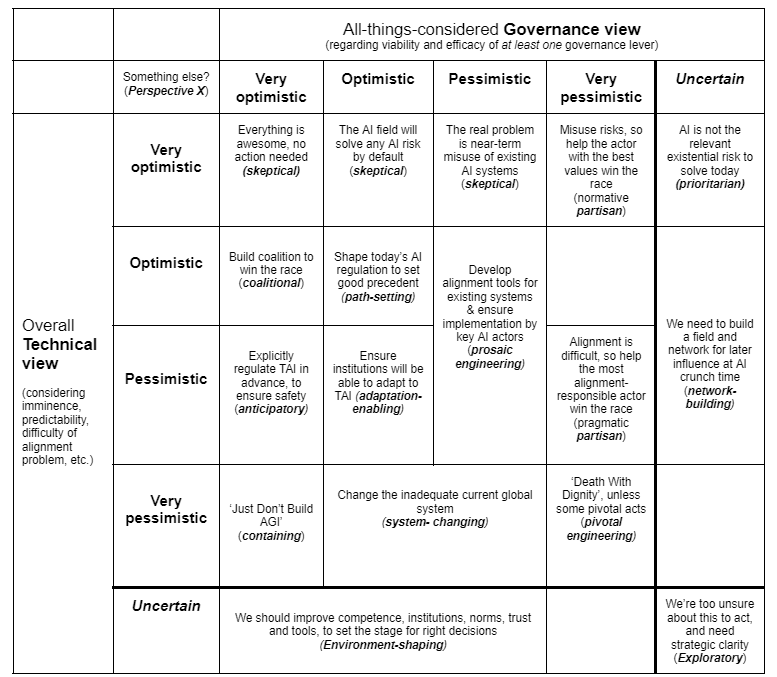

Each of these perspectives can be understood in their own terms: but it is also valuable to compare and contrast them with one another. For instance, we can (imperfectly) sketch different perspectives' positions in terms of their overall optimism or pessimism around the technical and governance parameters of long-term AI governance (see Table 2).

Table 2: oversimplified mapping of strategic perspectives, by overall Technical and Governance views

Acknowledgements

This research has been mainly supported through work within CSER’s AI-FAR team, and by the Legal Priorities Project, especially the 2021 Summer Research Fellowship. It also owes a lot to conversations with many people across this space.

For conversations that have helped shape my thinking on this topic over many years, I am grateful to Cecil Abungu, Ashwin Acharya, Michael Aird, Nora Amman, Markus Anderljung, Shahar Avin, Joslyn Barnhart, Seth Baum, Haydn Belfield, John Bliss, Miles Brundage, Chris Byrd, Nathan Calvin, Sam Clarke, Di Cooke, Carla Zoe Cremer, Allan Dafoe, Noemi Dreksler, Carrick Flynn, Seán Ó hÉigeartaigh, Samuel Hilton, Tom Hobson, Shin-Shin Hua, Luke Kemp, Seb Krier, David Krueger, Martina Kunz, Jade Leung, John-Clark Levin, Alex Lintz, Kayla Matteucci, Nicolas Moës, Ian David Moss, Neel Nanda, Michael Page, Ted Parson, Carina Prunkl, Jonas Schuett, Konrad Seifert, Rumtin Sepasspour, Toby Shevlane, Jesse Shulman, Charlotte Siegmann, Maxime Stauffer, Charlotte Stix, Robert Trager, Jess Whittlestone, Christoph Winter, Misha Yagudin, and many others. This project does not necessarily represent their views.

Furthermore, for specific input on (sections of) this report, I thank Cecil Abungu, Shahar Avin, Haydn Belfield, Jess Bland, Sam Clarke, Noemi Dreksler, Seán Ó hÉigeartaigh, Caroline Jeanmaire, Christina Korsgaard, Gavin Leech, and Eli Lifland. Again, this analysis does not necessarily represent their views; and any remaining errors are my own.

Looking back at this post, I realize that I originally approached it by asking myself "which perspectives are true," but a more useful approach is to try to inhabit each perspective and within the perspective generate ideas, considerations, interventions, affordances, etc.

I'm curious where the plan "convey an accurate assessment of misalignment risks to everyone, expect that they act sensibly based on that, which leads to low x-risk" fits here.

(I'm not saying I endorse this plan.)

Probably "environment-shaping", but I imagine future posts will discuss each perspective in more detail.

(apologies for very delayed reply)

Broadly, I'd see this as:

Good post!

I appreciate that these are the perspectives' "(oversimplified) slogan form," but still, I identify with 10 of these perspectives, and I strongly believe that this is basically correct. There are several different kinds of ways to make the future go better, and we should do all of them, pursuing whatever opportunities arise and adapting based on changing facts (e.g., about polarity) and possibilities (e.g., for coordination). So I'm skeptical of thinking in terms of these perspectives; I would think in terms of ways-to-make-the-future-go-better. A quick list corresponding directly to your perspectives:

We should more or less do all of these!

Agree on aggregate it's good for a collection of people to pursue many different strategies, but would you personally/individually weight all of these equally? If so, maybe you're just uncertain? My guess is that you don't weight all of these equally. Maybe another framing is to put probabilities on each and then dedicate the appropriate proportion of resources accordingly. This is a very top down approach though and in reality people will do what they will! I guess it seems hard to span more than two beliefs next to each other on any axis as an individual to me. And when I look at my work and my beliefs personally, that checks out.

Sorry more like a finite budget and proportions, not probabilities.

Sure, of course. I just don’t think that looks like adopting a particular perspective.

Thanks for these points! I like the rephrasing of it as 'levers' or pathways, those are also good.

A downside of the term 'strategic perspective' is certainly that it implies that you need to 'pick one', that a categorical choice needs to be made amongst them. However:

- it is clearly possible to combine and work across a number of these perspectives simultaneously, so they're not mutually exclusive in terms of interventions;

- in fact, under existing uncertainty over TAI timelines and governance conditions (i.e. parameters), it is probably preferable to pursue such a portfolio approach, rather than adopt any one perspective as the 'consensus one'.

I do agree that the 'Perspectives' framing may be too suggestive of an exclusive, coherent position that people in this space must take, when what I mean is more a loosely coherent cluster of views.

--

@tamgent "it seems hard to span more than two beliefs next to each other on any axis as an individual to me" could you clarify what you meant by this?

Which are you?

To some extent, I'd prefer not yet to anchor people too much, before finishing the entire sequence. I'll aim to circle around later and reflect more deeply on my own commitments. In fact, one reason why I'm doing this project is that I notice I have rather large uncertainties over these different theories myself, and want to think through their assumptions and tradeoffs.

Still, while going into more detail on it later, I think it's fair that I provide some disclaimers about my own preferences, for those who wish to know them before going in:

[preferences below break]

... ... ... ...

TLDR: my current (weakly held) perspective is something like '(a) as default, pursue a portfolio approach consisting of interventions from Exploratory, Prosaic Engineering, Path-setting, Adaptation-enabling, Network-building, and Environment-shaping perspectives; (b) under extremely short timelines and reasonably good alignment chances, switch to Anticipatory and Pivotal Engineering; (c) under extremely low alignment success probability, switch to Containing'.

This seems grounded in a set of predispositions / biases / heuristics that are something like:

Given I've quite a lot of uncertainty about key (technical and governance) parameters, I'm hesitant to commit to any one perspective and prefer portfolio approaches.

- That means I lean towards strategic perspectives that are more information-providing (Exploratory), more robustly compatible with, and supportive of, many others (Network-building, Environment-shaping), and/or more option-preserving and flexible (Adaptation-enabling);

- conversely, for these reasons I may have less affinity for perspectives that potentially recommend far-reaching, hard-to-reverse actions under limited information conditions (Pivotal Engineering, Containing, Anticipatory);

My academic and research background (governance; international law) probably gives me a bias towards the more explicitly 'regulatory' perspectives (Anticipatory, Path-setting, Adaptation-enabling), especially in their multilateral version (Coalitional); and a bias against perspectives that are more exclusively focused on the technical side alone (e.g. both Engineering perspectives), pursue more unilateral actions (Pivotal Engineering, Partisan), or which seek to completely break or go beyond existing systems (System-changing).

There are some perspectives (Adaptation-enabling, Containing) that have remained relatively underexplored within our community. While I personally am not yet convinced that there's enough ground to adopt these as major pillars for direct action, from an Exploratory meta-perspective I am eager to see these options studied in more detail.

I am aware that under very short timelines, many of these perspectives fall away or begin looking less actionable.

[ED: I probably ended up being more explicit here than I intended to; I'd be happy to discuss these predispositions, but also would prefer to keep discussion of specific approaches concentrated in the perspective-specific posts (coming soon).]

Hi @MMMaas, will you be continuing this sequence? I found it helpful and was looking forward to the next few posts, but it seems like you stopped after the second one.

I will! Though likely in the form of a long-form report that's still in draft; I'm planning to write it out in the next months. Can share a (very rough) working draft if you PM me.

A potentially useful subsection for each perspective could be: evidence that should change your mind about how plausible this perspective is (including things you might observe over the coming years/decades). This would be kinda like the future-looking version of the "historical analogies" subsection.

That's a great suggestion, I will aim to add that for each!

This seems great! I really like the list of perspectives, it gave me good labels for some rough concepts I had floating around, and listed plenty of approaches I hadn't given much thought. Two bits of feedback:

Excited for the rest of this sequence :)

Thanks for the catch on the table, I've corrected it!

And yeah, there's a lot of drawbacks to the table format -- and a scatterplot would be much better (though unfortunately I'm not so good with editing tools, would appreciate recommendations for any). In the meantime, I'll add in your disclaimer for the table.

I'm aiming to restart posting on the sequence later this month, would appreciate feedback and comments.