This is the introduction of an in-progress report called Strategic Perspectives on Long-term AI Governance (see sequence).

- Aim: to contribute to greater strategic clarity, consensus, and coherence in the Long-term AI Governance field, by disentangling current and possible approaches, and providing insight into their assumptions and cruxes. Practically, the aim is for this to enable a more informed discussion about what near-term actions we can or should pursue to ensure the beneficial development and impact of Transformative AI in the long term.

- Epistemic status: attempted theory-building, with all the strengths and pitfalls of map-drawing. Draws on years of research in the space and >2 years of focused conversations on this topic, but views are tentative and are presented in order to enable further discussion.

- Status: This is a project that I will be producing over the coming months. The breadth of topics and perspectives covered means that some sections are hastily sketched; as I continue developing the report, I welcome feedback and commentary. I am grateful to the many people who have already given feedback and encouragement.

Primer

Tl;dr: look at this Airtable.

The Long-term AI Governance community aims to shape the development and deployment of advanced AI--whether understood as Transformative AI (TAI) or as Artificial General Intelligence (AGI)--in beneficial ways.

However, there is currently a lack of strategic clarity, with disagreement over relevant background assumptions, over what actions to take in the near term, over the strengths and risks of each approach, and over where different approaches might strengthen or trade off against one another.

This sequence will explore 15 different Strategic Perspectives on long-term AI governance, setting out each perspective's distinct:

- assumptions about key strategic parameters that shape transformative AI, in terms of technical landscape and governance landscape;

- theory of victory and rough impact story;

- internal tensions, cruxes, disagreements or tradeoffs within the perspective;

- historical analogies and counter-examples;

- recommended actions, including intermediate goals and concrete near-term interventions;

- suitability, in terms of outside-view strengths and drawbacks.

Project Roadmap

(Links to be added as entries come out)

- I introduce and sketch this sequence (you are here);

- I give the background and rationale for this project by discussing some of the challenges faced by the Long-term AI Governance field, comparing strategic clarity, consensus, and coherence, and mapping how other work has contributed to questions around strategic clarity;

- I frame the goals and scope of this project--what it does and does not aim to do;

- I sketch the components of a strategic perspective (i.e. the space of arguments or cruxes along which perspectives can differ or disagree);

- I set out 15 distinct Strategic Perspectives in Long-term AI Governance, clustering different views on the challenges of AI governance (see below);

- I reflect on the distribution of these perspectives in the existing Long-term AI Governance community, and discuss four approaches to navigating between them;

- In four Appendices, I cover:

  - (1) definitions of key terms around advanced AI, AI governance, long-term(ist) AI governance, theories of impact, and strategic perspectives;

  - (2) a review of existing work in long-term AI governance, and how this project relates to it;

  - (3) the scope, limits, and risks of the project;

  - (4) a taxonomy of relevant strategic parameters in the technical and governance landscapes around TAI, and possible assumptions across them.

Summary of perspectives

| Perspective | In (oversimplified) slogan form |
|---|---|
| Exploratory | We remain too uncertain to meaningfully or safely act; conduct high-quality research to achieve strategic clarity and guide actions |
| Pivotal Engineering | Prepare for a one-shot technical ‘final exam’ to align the first AGI, followed by a pivotal act to mitigate risks from any unsafe systems |
| Prosaic Engineering | Develop and refine alignment tools in existing systems, disseminate them to the world, and promote AI lab risk mitigation |
| Partisan | Pick a champion to support in the race, to help them develop TAI/AGI first in a safe way, and/or in the service of good values |
| Coalitional | Create a joint TAI/AGI program to support, to avert races and share benefits |
| Anticipatory | Regulate by establishing forward-looking policies today, which are explicitly tailored to future TAI |
| Path-setting | Regulate by establishing policies and principles for today's AI, which set good precedent to govern future TAI |
| Adaptation-enabling | Regulate by ensuring flexibility of any AI governance institutions established in the near term, to avoid suboptimal lock-in and enable their future adaptation to governing TAI |
| Network-building | Nurture a large, talented and influential community, and prepare to advise key TAI decision-makers at a future ‘crunch time’ |
| Environment-shaping | Improve civilizational competence, specific institutions, norms, regulatory target surface, cooperativeness, or tools, to indirectly improve conditions for later good TAI decisions |
| Containing | Coordinate to ensure TAI/AGI is delayed or never built |
| System-changing | Pursue fundamental changes or realignment in the world as precondition to any good outcomes |
| Skeptical | Just wait and see, because TAI is not possible, long-term impacts should not take ethical priority, and/or the future is too uncertain to be reliably shaped |
| Prioritarian | Other existential risks are far more certain, pressing or actionable, and should gain priority |
| ‘Perspective X’ | [something entirely different, that I am not thinking of] |

Table 1: strategic perspectives, in slogan form

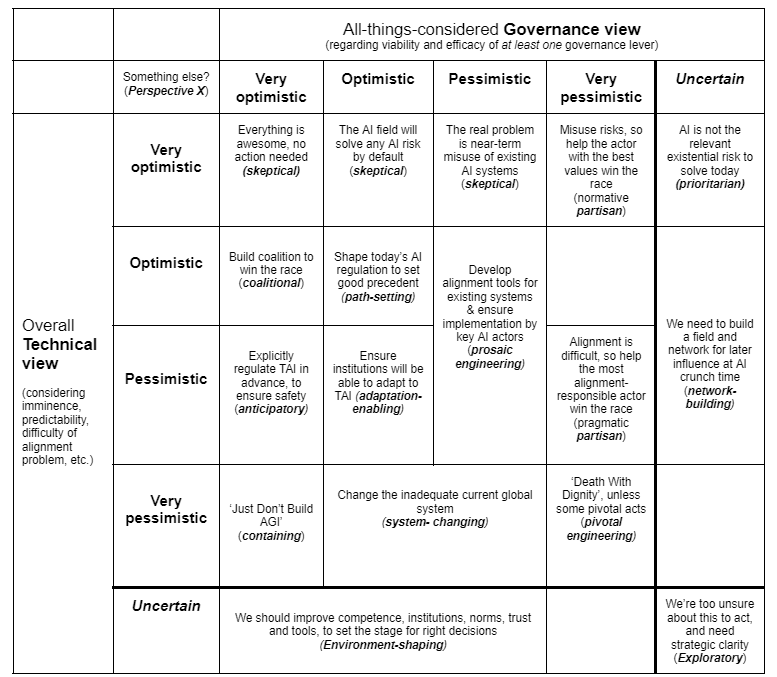

Each of these perspectives can be understood on its own terms, but it is also valuable to compare and contrast them with one another. For instance, we can (imperfectly) sketch different perspectives' positions in terms of their overall optimism or pessimism around the technical and governance parameters of long-term AI governance (see Table 2).

Table 2: oversimplified mapping of strategic perspectives, by overall Technical and Governance views

Acknowledgements

This research has been mainly supported through work within CSER’s AI-FAR team, and by the Legal Priorities Project, especially the 2021 Summer Research Fellowship. It also owes a lot to conversations with many people across this space.

For conversations that have helped shape my thinking on this topic over many years, I am grateful to Cecil Abungu, Ashwin Acharya, Michael Aird, Nora Amman, Markus Anderljung, Shahar Avin, Joslyn Barnhart, Seth Baum, Haydn Belfield, John Bliss, Miles Brundage, Chris Byrd, Nathan Calvin, Sam Clarke, Di Cooke, Carla Zoe Cremer, Allan Dafoe, Noemi Dreksler, Carrick Flynn, Seán Ó hÉigeartaigh, Samuel Hilton, Tom Hobson, Shin-Shin Hua, Luke Kemp, Seb Krier, David Krueger, Martina Kunz, Jade Leung, John-Clark Levin, Alex Lintz, Kayla Matteucci, Nicolas Moës, Ian David Moss, Neel Nanda, Michael Page, Ted Parson, Carina Prunkl, Jonas Schuett, Konrad Seifert, Rumtin Sepasspour, Toby Shevlane, Jesse Shulman, Charlotte Siegmann, Maxime Stauffer, Charlotte Stix, Robert Trager, Jess Whittlestone, Christoph Winter, Misha Yagudin, and many others. This project does not necessarily represent their views.

Furthermore, for specific input on (sections of) this report, I thank Cecil Abungu, Shahar Avin, Haydn Belfield, Jess Bland, Sam Clarke, Noemi Dreksler, Seán Ó hÉigeartaigh, Caroline Jeanmaire, Christina Korsgaard, Gavin Leech, and Eli Lifland. Again, this analysis does not necessarily represent their views, and any remaining errors are my own.