Knowing the shape of future (longterm) value appears to be important for deciding which interventions would most effectively increase it. For example, if future value is roughly binary, the increase in its expected value is directly proportional to the decrease in the likelihood/severity of the worst outcomes, in which case existential risk reduction seems particularly useful[1]. On the other hand, if value is roughly uniform, focussing on multiple types of trajectory changes would arguably make more sense[2].
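A minimal worked version of the binary case follows. It assumes, for illustration only, that future value $V$ is either $0$ or its maximum $V_{\max}$, and it writes $p$ for the probability of the bad outcome (these symbols are introduced here and are not from the original):

$$\mathbb{E}[V] = (1 - p)\,V_{\max} \quad\Rightarrow\quad \Delta\mathbb{E}[V] = -\Delta p\,V_{\max}$$

Every unit of reduction in $p$ raises expected value by the same amount, and interventions that try to improve the good outcome have no room to act, because it already equals $V_{\max}$.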

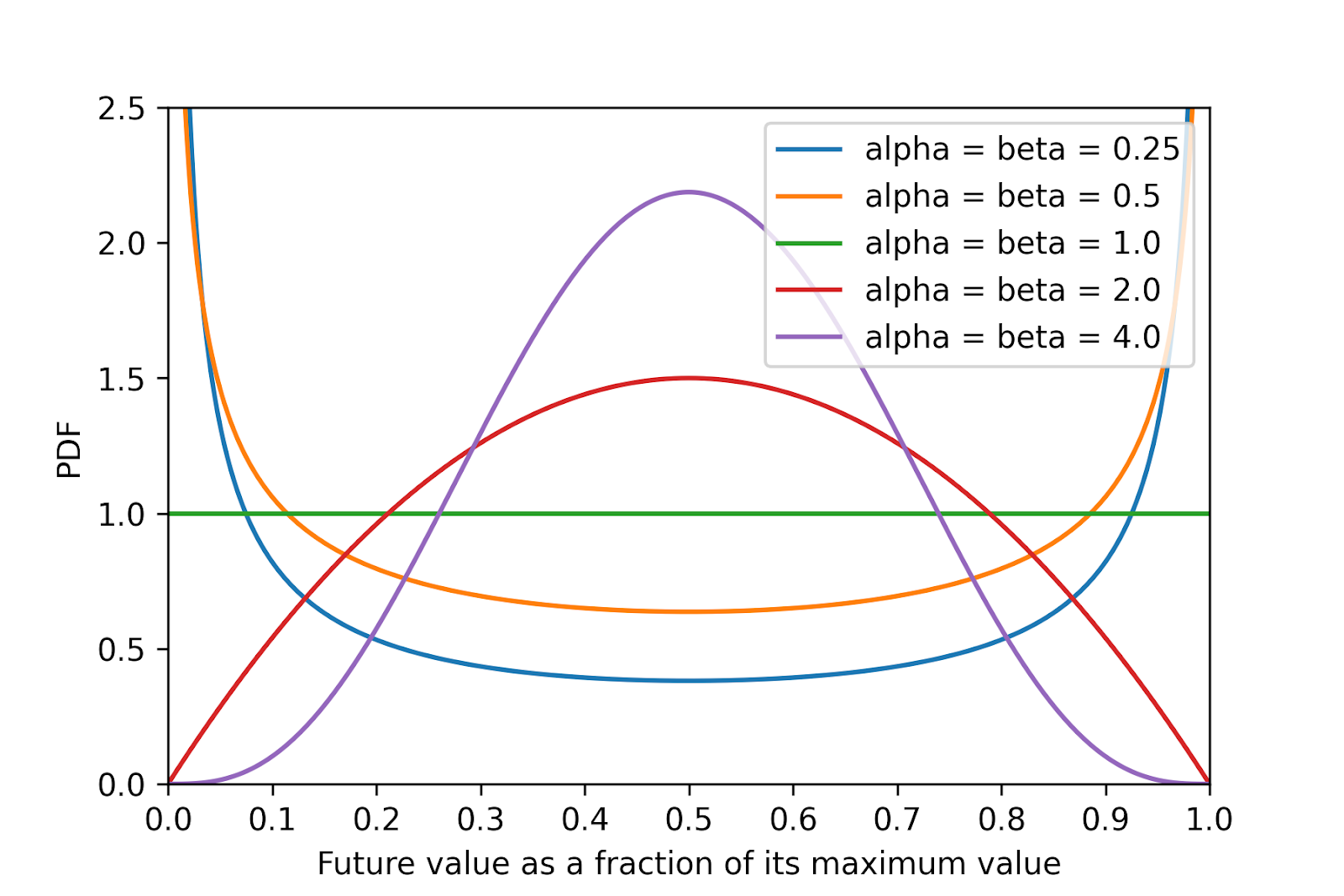

So I wonder what the shape of future value is. To illustrate the question, I have plotted in the figure below the probability density function (PDF) of various beta distributions representing future value as a fraction of its maximum value[3].

For simplicity, I have assumed future value cannot be negative. The mean is 0.5 for all distributions, which is Toby Ord's guess for the total existential risk given in The Precipice[4], and implies the distribution parameters alpha and beta have the same value[5]. As this common value tends to 0, the probability mass concentrates near 0 and 1, so future value becomes more binary (alpha = beta = 1 corresponds to the uniform distribution).
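A rough numerical check of how "binary" each symmetric beta distribution is, offered as a sketch rather than a reproduction of the Colab: it computes the probability that value lands within 0.1 of either extreme (0 or 1). The 0.1/0.9 cut-offs and the function names `beta_pdf` and `middle_mass` are illustrative assumptions. Only the middle interval [0.1, 0.9] is integrated, because the density is finite there even when alpha < 1, and it uses Simpson's rule from the Python standard library.

```python
import math


def beta_pdf(x: float, a: float, b: float) -> float:
    """Density of Beta(a, b) at x in (0, 1), computed in log space for stability."""
    log_norm = math.lgamma(a + b) - math.lgamma(a) - math.lgamma(b)
    return math.exp(log_norm + (a - 1) * math.log(x) + (b - 1) * math.log(1 - x))


def middle_mass(a: float, lo: float = 0.1, hi: float = 0.9, n: int = 2000) -> float:
    """P(lo <= X <= hi) for a symmetric Beta(a, a), via composite Simpson's rule.

    The density's singularities (for a < 1) sit at 0 and 1, outside [lo, hi],
    so the integrand is smooth here. n must be even.
    """
    h = (hi - lo) / n
    total = beta_pdf(lo, a, a) + beta_pdf(hi, a, a)
    for i in range(1, n):
        weight = 4 if i % 2 else 2  # Simpson weights: 4 for odd, 2 for even nodes
        total += weight * beta_pdf(lo + i * h, a, a)
    return total * h / 3


for a in (10.0, 1.0, 0.1, 0.01):
    tail = 1 - middle_mass(a)  # probability within 0.1 of either extreme
    print(f"alpha = beta = {a:>5}: P(value < 0.1 or value > 0.9) = {tail:.3f}")
```

For alpha = beta = 1 (the uniform case) the printed share is 0.200. It is close to 0 for large alpha and approaches 1 as alpha approaches 0, which is the sense in which the distribution becomes more binary.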

[1]

Existential risk was originally defined in Bostrom 2002 as:

One where an adverse outcome would either annihilate Earth-originating intelligent life or permanently and drastically curtail its potential.

[2]

That said, trajectory changes also encompass existential risk reduction.

[3]

The calculations are in this Colab.

[4]

If forced to guess, I’d say there is something like a one in two chance that humanity avoids every existential catastrophe and eventually fulfills its potential: achieving something close to the best future open to us.

[5]

According to Wikipedia, the expected value of a beta distribution is $\alpha/(\alpha + \beta)$, which equals 0.5 for $\alpha = \beta$.
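The standard beta variance formula, specialised to $\alpha = \beta$, makes the "more binary as the parameters tend to 0" claim concrete. This is a supplementary derivation, not part of the original calculations:

$$\operatorname{Var}[X] = \frac{\alpha\beta}{(\alpha+\beta)^2(\alpha+\beta+1)} \;\overset{\alpha=\beta}{=}\; \frac{\alpha^2}{4\alpha^2(2\alpha+1)} = \frac{1}{4(2\alpha+1)}$$

This equals $1/12$ for $\alpha = 1$ (the uniform distribution) and tends to $1/4$ as $\alpha \to 0$. That limit is the variance of a fair coin flip between 0 and 1, the largest variance any distribution on $[0, 1]$ can have.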

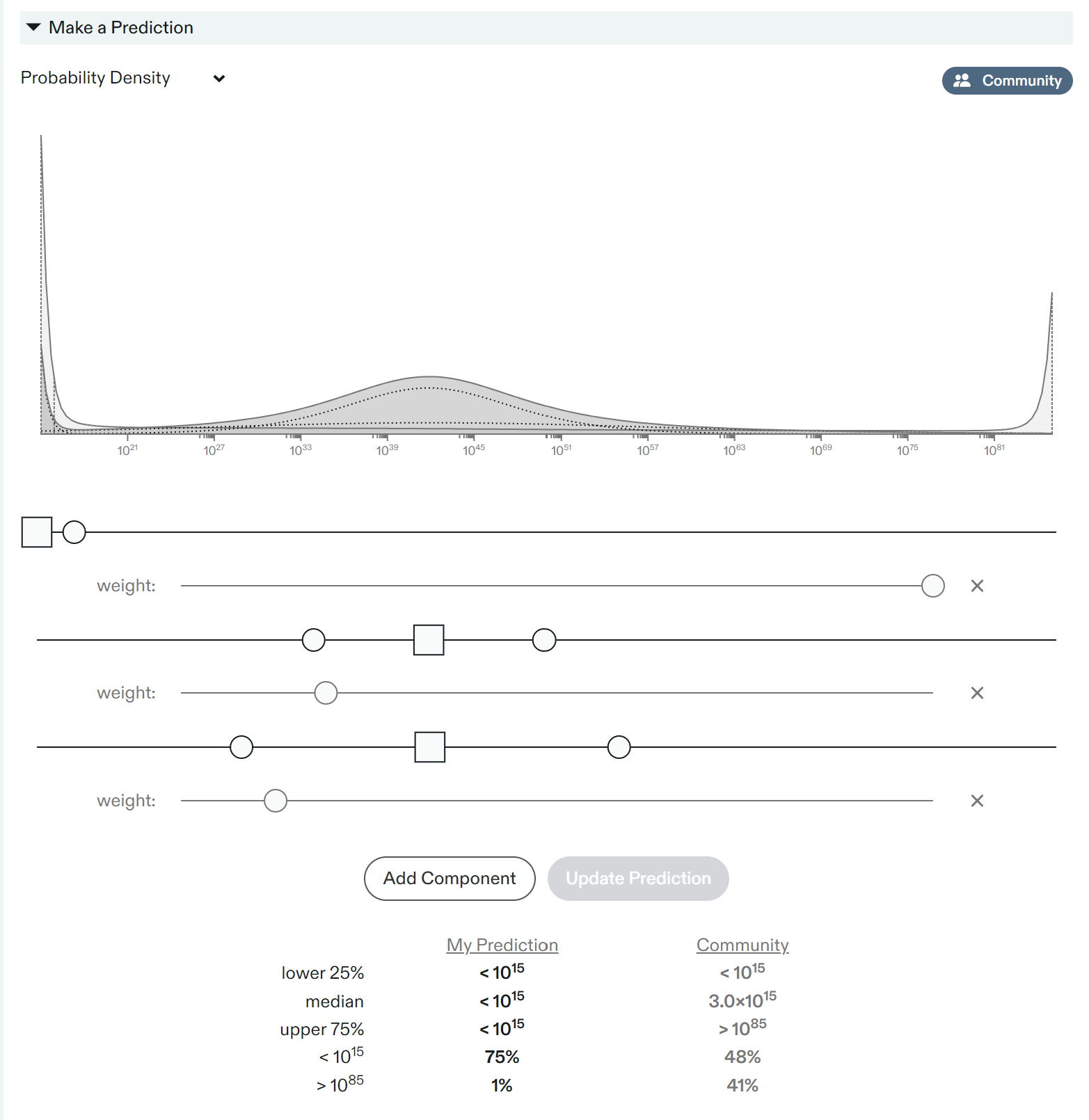

Slightly pedantic note, but shouldn't the Metaculus GWP question be phrased in terms of the gross world product (GWP) in our lightcone? We can't reach most of the universe, so unless I'm misunderstanding, this would become a question about aliens and other things that are completely unrelated to, or out of the control of, humans.

I'm also somewhat confused about what money even means when you have complete control of all the matter in the universe. Is the idea to translate our levels of production into what they would be valued at today? Do people today value billions of sentient digital minds? Not saying this isn't useful to think about, just trying to wrap my head around it.

Thanks for sharing!

In theory, it seems possible for future value to span many orders of magnitude without being binary. For example, one could use a lognormal/loguniform distribution with a median close to $10^{15}$ and a 95th percentile around $10^{42}$. Even if one thinks there is a hard upper bound, one could select a truncated lognormal/loguniform (though I guess not on Metaculus).
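A minimal sketch of the lognormal case, assuming value is lognormal so that log10(value) is normally distributed. It backs out the spread from the median ($10^{15}$) and 95th percentile ($10^{42}$) quoted above, then shows quantiles with and without truncation. The cap of $10^{45}$ and the helper names are hypothetical, chosen only for illustration. The loguniform option is not covered. Only `statistics.NormalDist` from the standard library is used.

```python
from statistics import NormalDist

# z-score of the 95th percentile of a standard normal (about 1.645)
Z95 = NormalDist().inv_cdf(0.95)


def log10_normal_params(log10_median: float, log10_p95: float) -> tuple[float, float]:
    """Return (mu, sigma) such that log10(value) ~ Normal(mu, sigma).

    For a normal distribution the median equals the mean, and the 95th
    percentile lies Z95 standard deviations above it.
    """
    sigma = (log10_p95 - log10_median) / Z95
    return log10_median, sigma


def truncated_log10_quantile(q: float, mu: float, sigma: float, log10_cap: float) -> float:
    """q-quantile of log10(value) when the lognormal is truncated above at 10**log10_cap."""
    dist = NormalDist(mu, sigma)
    # Rescale q to the probability mass below the cap, then invert the normal CDF.
    return dist.inv_cdf(q * dist.cdf(log10_cap))


mu, sigma = log10_normal_params(15, 42)
print(f"sigma = {sigma:.1f} orders of magnitude")
for q in (0.05, 0.25, 0.5, 0.75, 0.95):
    untruncated = NormalDist(mu, sigma).inv_cdf(q)
    capped = truncated_log10_quantile(q, mu, sigma, log10_cap=45)  # hypothetical cap
    print(f"q = {q:.2f}: 10^{untruncated:.1f} untruncated, 10^{capped:.1f} with cap")
```

The quantiles are spread smoothly over tens of orders of magnitude rather than piling up at two points, which is the sense in which such a distribution is wide but not binary. Truncating only rescales the probability mass below the cap.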