Randomized controlled trials (RCTs) have some drawbacks. For many important questions, like the causes of the Industrial Revolution, a randomized trial is impossible. For many others, RCTs are expensive and cumbersome, leading to small sample sizes or experimental designs that precisely answer irrelevant questions. Still, when RCTs with large sample sizes and generalizable designs are possible, their advantages justify deference to their results even when observational evidence disagrees. This is the case with the four trials in this post. They each have hundreds to tens of thousands of participants and budgets big enough to test treatments that are relevant to the real world.

The largest RCT in this group, run by Harvard economists and the charity RIP Medical Debt, tests the effects of medical debt cancellation. They relieved $169 million of debt for 83,401 people over two years, from 2018 to 2020. Medical debt has extremely low recovery rates, so the $169 million face value cost only $2 or $3 million to relieve, but this is still a large treatment size. The researchers followed up with the recipients of this debt relief through several surveys tracking their mental, physical, and financial health.

There are two other elements which make the evidence from this trial compelling. First, their analyses are pre-registered. This means they submitted the list of regressions they would run before they got the data back from their survey. This is important because it prevents them from putting inconvenient results in the file drawer and is a check against running 100 extra tests where the null hypothesis is true and reporting the 5 that happen to have p < .05. They also ran an expert survey of economists and scientists who predicted the results so we can quantify exactly how much of a narrative violation these results are.
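The multiple-testing arithmetic behind that worry can be sketched in a few lines. This is a minimal illustration, not anything from the trial itself: it assumes the 100 tests are independent and that the null hypothesis is true in every one of them, and the function names are made up for the example.

```python
def expected_false_positives(n_tests: int, alpha: float = 0.05) -> float:
    """Expected number of tests with p < alpha when every null is true."""
    return n_tests * alpha


def prob_at_least_one_false_positive(n_tests: int, alpha: float = 0.05) -> float:
    """Chance that at least one test has p < alpha.

    Assumes the tests are independent, which real regressions on the
    same survey data usually are not.
    """
    return 1.0 - (1.0 - alpha) ** n_tests


if __name__ == "__main__":
    n = 100
    print(f"Expected false positives in {n} null tests: "
          f"{expected_false_positives(n):.1f}")
    print(f"Probability of at least one: "
          f"{prob_at_least_one_false_positive(n):.3f}")
```

Under these assumptions, a researcher who runs 100 unregistered tests is close to guaranteed to have something "significant" to report, which is why committing to the list of regressions in advance matters.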

So what did this trial find?

> First, we find no impact of debt relief on credit access, utilization, and financial distress on average. Second, we estimate that debt relief causes a moderate but statistically significant reduction in payment of existing medical bills. Third, we find no effect of medical debt relief on mental health on average, with detrimental effects for some groups in pre-registered heterogeneity analysis. [emphasis added]

So wiping out thousands of dollars of medical debt per person had precise null effects on just about anything positive, made recipients less likely to pay off other debt, and was weakly associated with negative mental health changes.

This is very different from the large positive effects on financial stability and mental health that the surveyed experts expected:

> The median expert predicted a 7.0 percentage point reduction in depression … expert survey respondents similarly predict increased healthcare access, reduced borrowing, and less cutting back on spending. Taken together, 75.6% of respondents report that medical debt is at least a moderately valuable use of charity resources (68.8% of academics and 78.3% of non-profit staff) and 51.1% think it is very valuable or extremely valuable

The next RCT tests a UBI of $1,000 a month over three years with 1,000 treated participants and 2,000 controls. This is a smaller sample than in the medical debt trial, but the researchers tracked participants for longer and collected much more detailed data on everything from career outcomes to financial health to daily time use, tracked via a custom app. An extra $1,000 a month is also a larger income increase than cancelling even several thousand dollars of medical debt. Among the low-income participants the study targeted, this extra cash increased monthly household income by 40% on average. This trial also had a pre-registered design and an expert survey.

Again, they find precisely estimated null effects on many things that experts thought UBI would improve, including health, career prospects, and investments in education. After the first year, even measures closely connected to income, like food insecurity, didn’t differ between the treated and control groups. The treated group worked a lot less: household income excluding the transfers decreased by about 20 cents for every dollar received. They filled this extra time with more leisure but not much else.

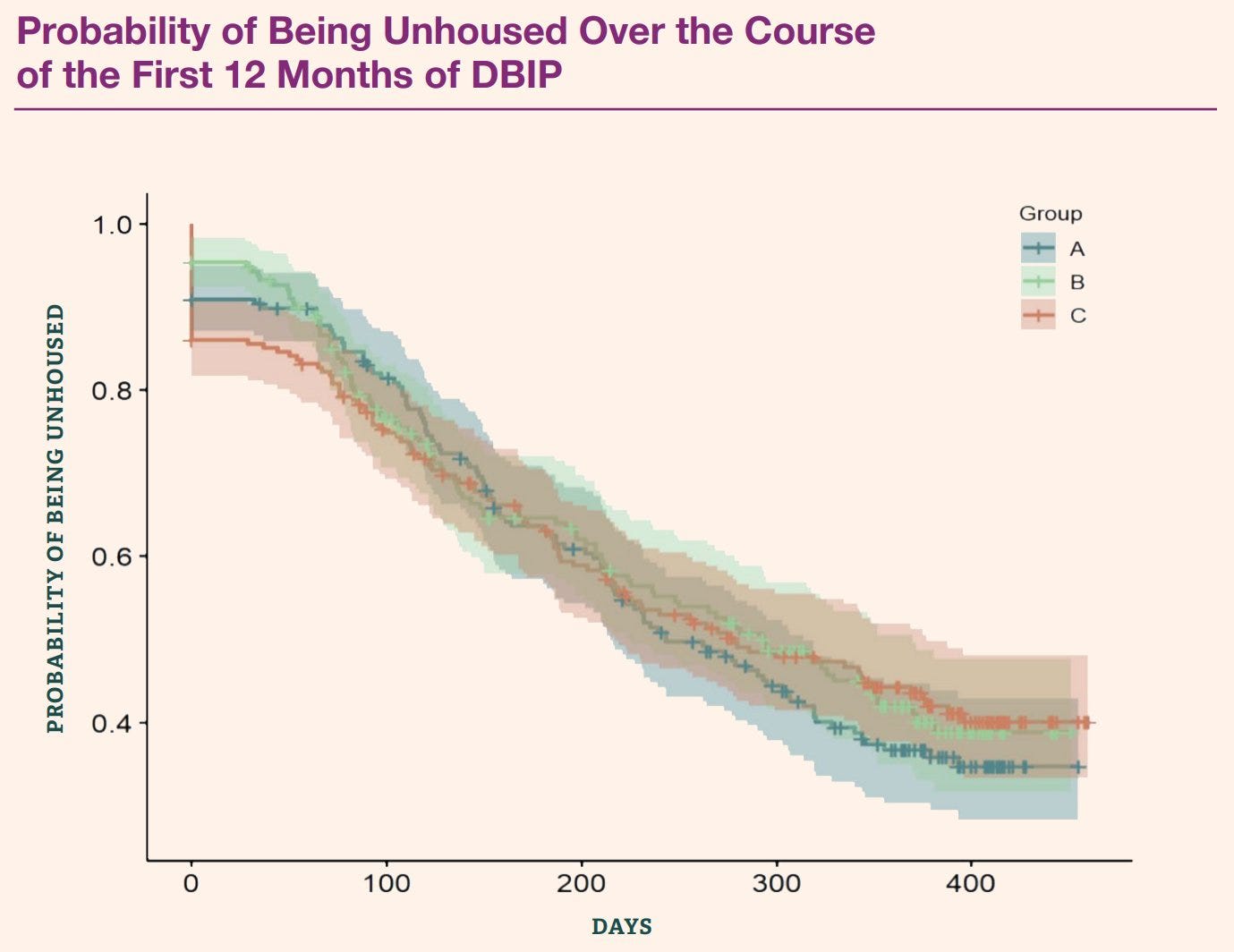

The Denver Basic Income Project also tested a UBI of $1,000 a month but enrolled only homeless people in both the treatment and control groups. The treated participants went from an unhoused rate of ~90% to ~35% over the 12 months following the start of the trial. That sounds impressive until you compare it to the control group, which went from 88% to 40%. The control group improved slightly less, but with groups of only about 800, a difference this size is well within what we'd expect from random variation around an average recovery rate that is the same in the treated and control groups.
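A rough way to check a comparison like this is a two-proportion z-test on the end-of-trial unhoused rates. The sketch below is not the study's own analysis. It plugs in the approximate rates quoted above (~35% treated, ~40% control), and because the exact number of people in each arm isn't given here, it assumes a hypothetical 400 per arm, reading "about 800" as the combined total. The conclusion is sensitive to that assumption, so treat the output as illustrative only.

```python
import math


def two_proportion_z_test(p1: float, n1: int, p2: float, n2: int):
    """Two-sided z-test for a difference between two proportions.

    Uses the pooled standard error under the null hypothesis that
    both groups share the same underlying rate.
    """
    pooled = (p1 * n1 + p2 * n2) / (n1 + n2)
    se = math.sqrt(pooled * (1.0 - pooled) * (1.0 / n1 + 1.0 / n2))
    z = (p1 - p2) / se
    # Two-sided p-value from the standard normal distribution.
    p_value = math.erfc(abs(z) / math.sqrt(2.0))
    return z, p_value


if __name__ == "__main__":
    # Hypothetical arm sizes: the 400/400 split is an assumption.
    z, p_value = two_proportion_z_test(p1=0.35, n1=400, p2=0.40, n2=400)
    print(f"z = {z:.2f}, two-sided p = {p_value:.3f}")
```

With these assumed inputs the difference does not clear the conventional p < .05 threshold. Larger arms with the same rates would shrink the standard error, so the real group sizes matter.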

This null result is clearly not what the creators of this project were hoping for, but I do think this study produces interesting results on homelessness. It reveals a clear statistical separation between what Matt Yglesias calls America's two homelessness problems. The first type is transitory, caused mostly by economic conditions and housing constraints. This is the group that exits homelessness in both the treatment and control groups. This type of homelessness is not very visible, as many of the people experiencing it still hold down jobs and maintain other social relationships. This type of homelessness would be smoothed by a cheap housing market with lots of vacancies.

The second homelessness problem is the people who stay homeless after a year, $12,000 extra bucks or not. The study didn't track substance use, but this type of persistent homelessness is more often driven by addiction and mental illness than by unemployment. This type of homelessness is more visible on the streets of American cities like San Francisco and Philadelphia. It is loud, scary, dangerous, and not responsive to economic interventions like this basic income.

The final RCT is an older one, from MIT economist John Horton. It's technically the largest RCT on this list by sample size, though the monetary size isn't as large. This trial experimentally rolled out a minimum wage requirement in an online labor market with a sample of 160,000 job postings. Unlike the previous three trials, this RCT is a narrative violation not because it finds null effects (that’s the prevailing narrative among minimum wage supporters). Instead, its results exactly match the predictions of standard economic theory:

(1) the wages of hired workers increases,

(2) at a sufficiently high minimum wage, the probability of hiring goes down,

(3) hours-worked decreases at much lower levels of the minimum wage, and

(4) the size of the reductions in hours-worked can be parsimoniously explained in part by the substantial substitution of higher productivity workers for lower productivity workers.

The decades-long empirical debate over the employment effects of the minimum wage rages on within economics. Observational causal inference is difficult to get right and often easy to manipulate in a desired direction. This paper alone isn't a final answer, but it fits with the predictions of theory and the average of empirical findings. This confluence of results should carry more weight in discrediting observational research with the opposite sign than it seems to in economics.

RCTs aren't always the right tool and they certainly aren't infallible. But these four trials have large sample sizes, solid designs, and policy-relevant treatments. They all convincingly answer questions that have been the focus of debate in empirical economics for decades. If economists were honest about their commitment to expertise and evidence, these studies would massively shift the direction of research on these questions and nearly discredit any prognostications based on lower-quality observational research that contradicts them. For the three newer studies, there is still time to see if this happens. Unfortunately, the results of these RCTs are politically inconvenient for many economists. For Horton's minimum wage paper, we can see that this political inconvenience has motivated many economists to carry on with confounded observational work that contradicts the gold standard of experimental evidence on the topic.

I would not say this is true of the medical debt RCT. I think it tells us very little about situations where people have more normal types of debt and actually expect to repay it
