Summary

Understanding how people first encounter EA and what helps them get involved in EA is important for understanding how to optimize recruitment. With the Meta Coordination Forum survey suggesting that it would be ideal for the EA community to grow rapidly, this is of high importance. To meet the recommended targets, some of these routes into EA would need to scale very dramatically over the next year.

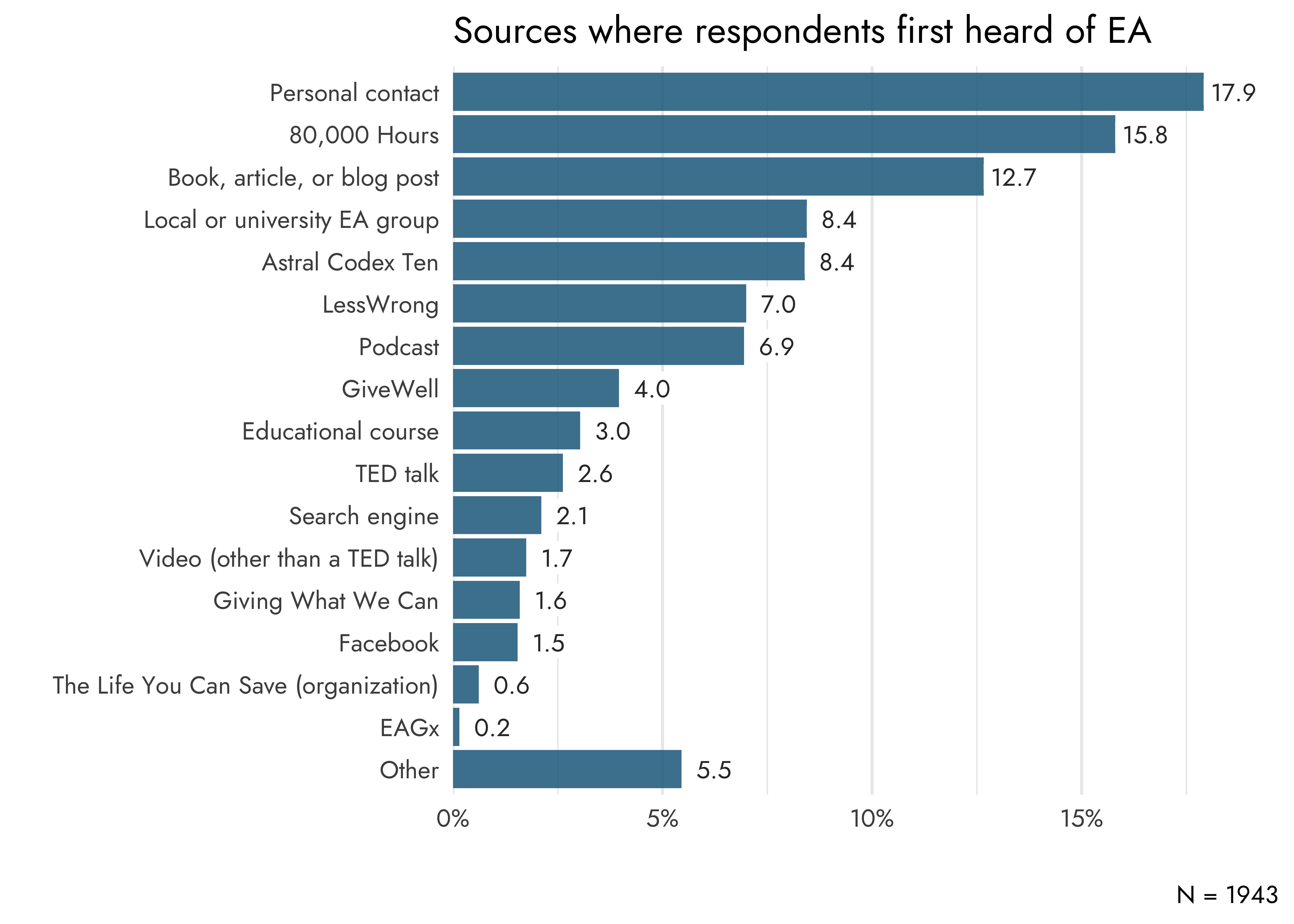

- Personal contacts (17.9%) and 80,000 Hours (15.8%) remain the largest sources of people first hearing about EA

- 80,000 Hours continues to increase in importance

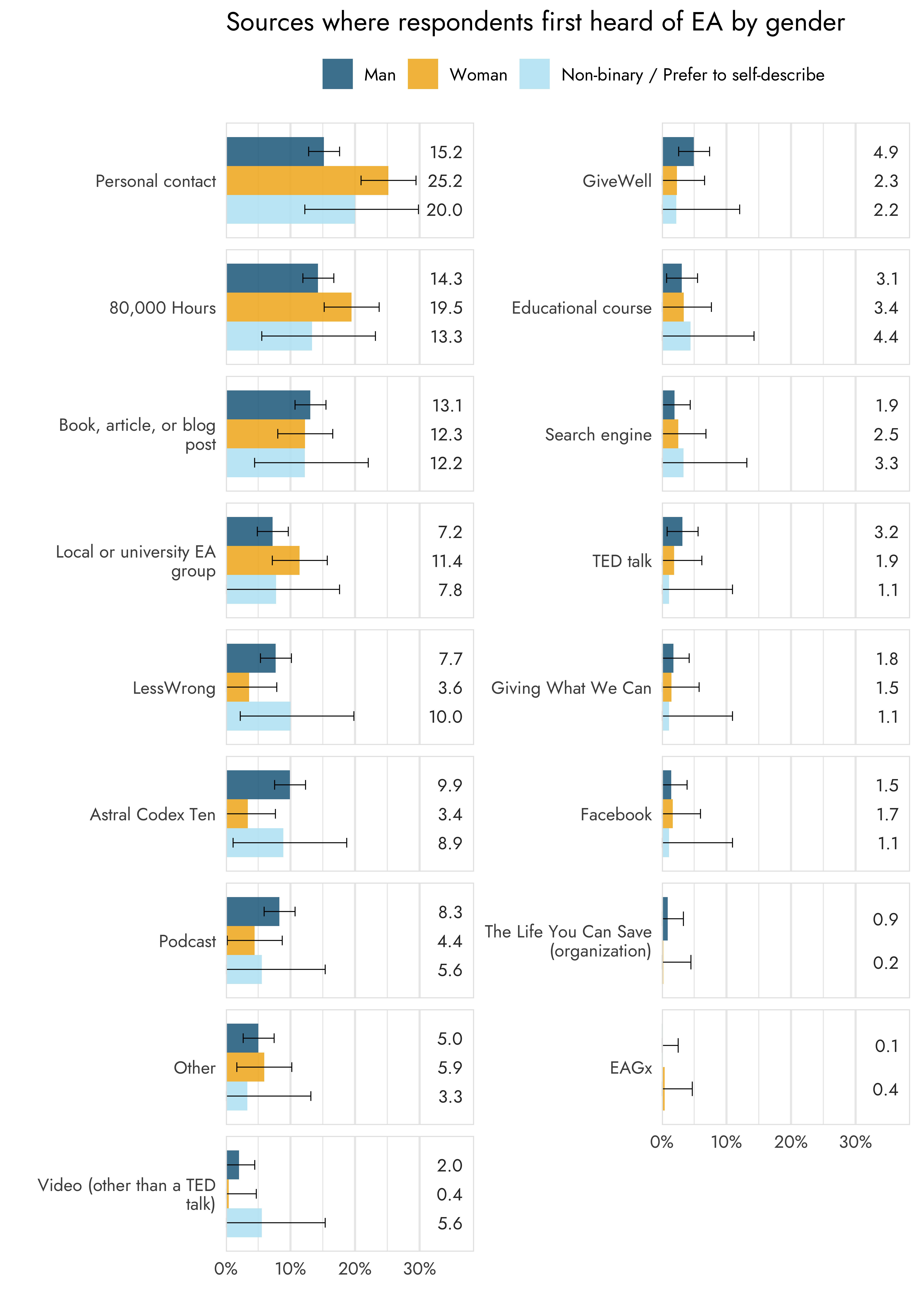

- Personal contacts were particularly important for women first hearing about EA (25.2% vs. 15.2% for men)

- 80,000 Hours was particularly important for non-White people first hearing about EA (22.4% vs. 13.4%)

- Highly engaged EAs disproportionately first learned about EA through personal contacts (19.9% vs. 15.6%) and EA groups (10.1% vs. 6.4%)

- 80,000 Hours and personal contacts were also the factors most commonly cited as important for helping respondents get involved in EA, in roughly similar proportions as in 2022

- Women were particularly likely to cite personal contacts as important for them getting involved (52.2% vs. 43.3% for men)

- Non-White respondents were more likely to select 80,000 Hours (65% vs. 58.4%), the EA Forum (25.4% vs. 16.1%), the online EA community (13.5% vs. 7.4%), and EA Virtual Programs (12% vs. 5.8%)

- Personal contacts were particularly important for highly engaged EAs

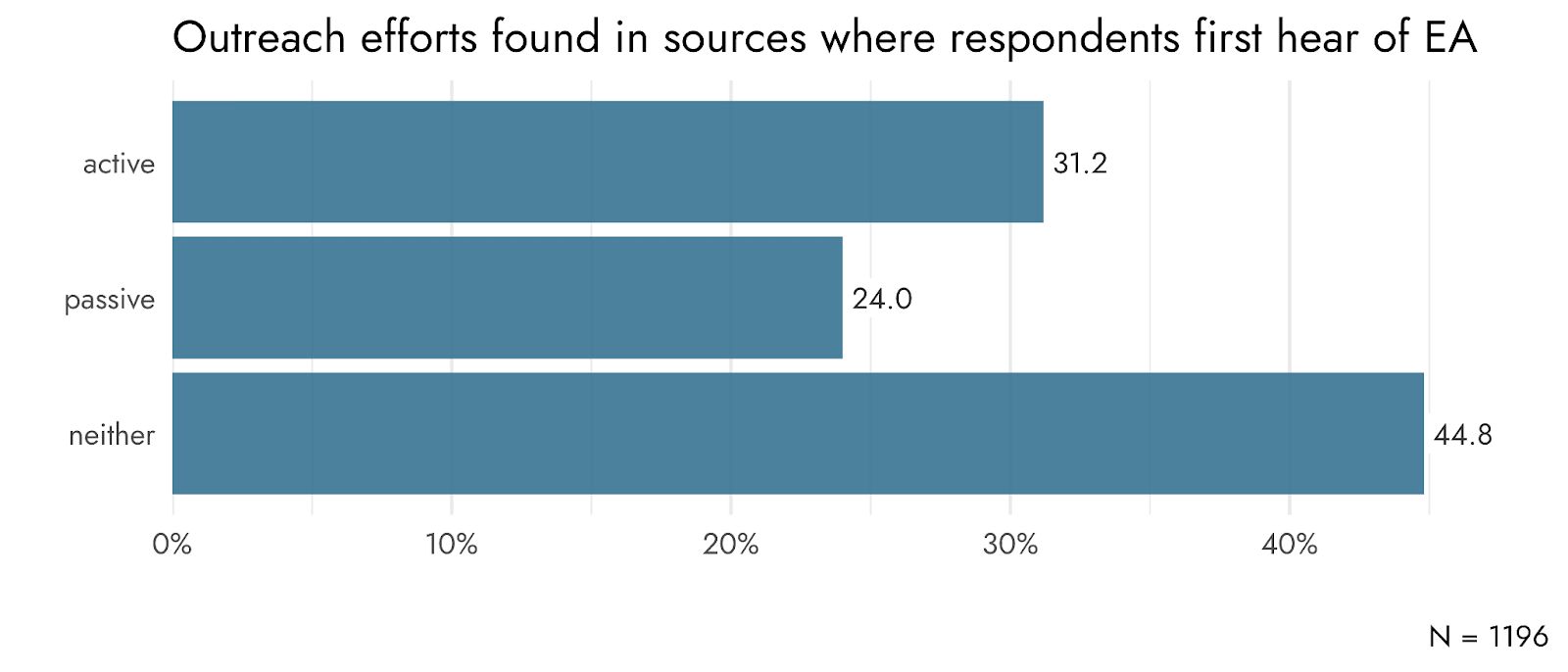

- We coded people’s responses to see whether they first heard about EA due to EA outreach. Just over half of cases (55.2%) involved some form of outreach (31.2% active outreach efforts such as someone seeing an advertisement; 24% passive outreach, such as someone independently finding a book or article created by EAs), and 44.8% involved neither.

Introduction

In this post we report on the results from the 2024 EA Survey about how people get involved in EA. This is the second post about the 2024 EA Survey. The first post, about the demographics in the EA community, can be found here. As usual, there are several caveats about interpreting the results that should be kept in mind, such as those related to how likely the results are to be representative of the EA community as a whole. For more on this, please see the introduction of the previous post.

In this post we will report on results about where people first heard about EA and factors important for getting involved, as well as differences between various groups (e.g., gender, racial/ethnic identity, and engagement level) on these questions. Some of the questions were open ended, meaning that there is rich information about some of the topics such as where people first heard of EA. We coded these responses to extract additional information we think is of broad interest to the EA community and decision makers. Note that, as in the previous post, you can request additional analyses for us to conduct if this is helpful for your work.

You can also check out the posts from 2018, 2020, and 2022, on this topic, although the current post can be read as a standalone as we’ll also compare how results have changed over time.

Where do people first hear about EA?

Respondents were asked where they first heard of EA. They could pick a single option from a list of options such as ‘Personal contact’ and ‘Local or university EA group’, including ‘Other’ and ‘I don’t remember’. In the case of ‘Other’, respondents could write down where they first heard of EA. These responses were checked and re-assigned to one of the given categories where possible (64/170 responses).

The most frequent sources were ‘Personal contact’ (17.9%), 80,000 Hours (15.8%), and a ‘Book, article, or blog post’ (12.7%), as can be seen in the graph below.

Note that the different sources are not wholly independent of each other. For example, some respondents who reported first hearing of EA via a personal contact also mentioned that their personal contact referred them to another source such as 80,000 Hours or GiveWell, which are themselves among the categories we provided. Additionally, Astral Codex Ten is a blog and could also be considered a ‘Book, article, or blog post’. The categories should therefore mainly be interpreted as what respondents considered the primary source where they first heard of EA.

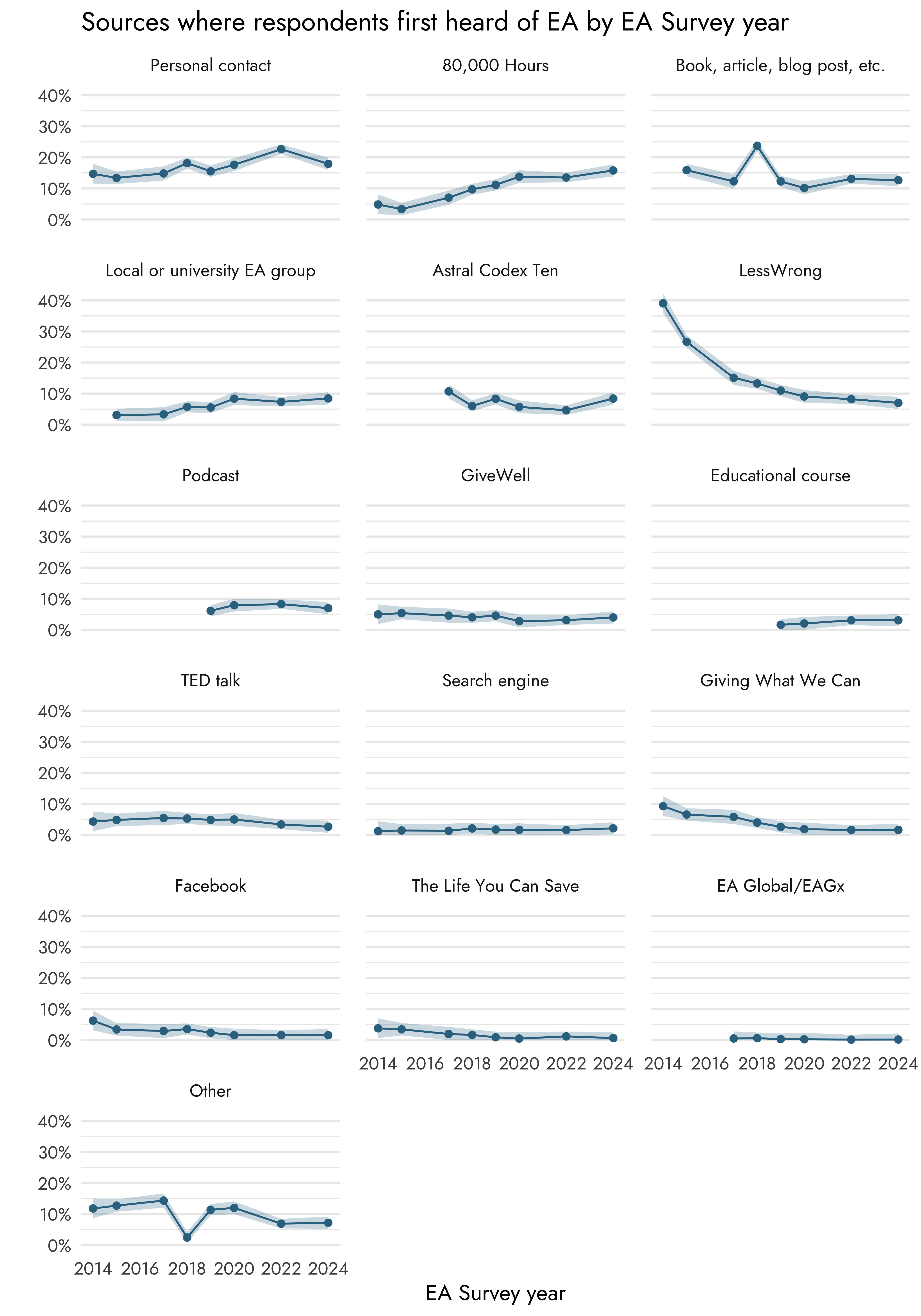

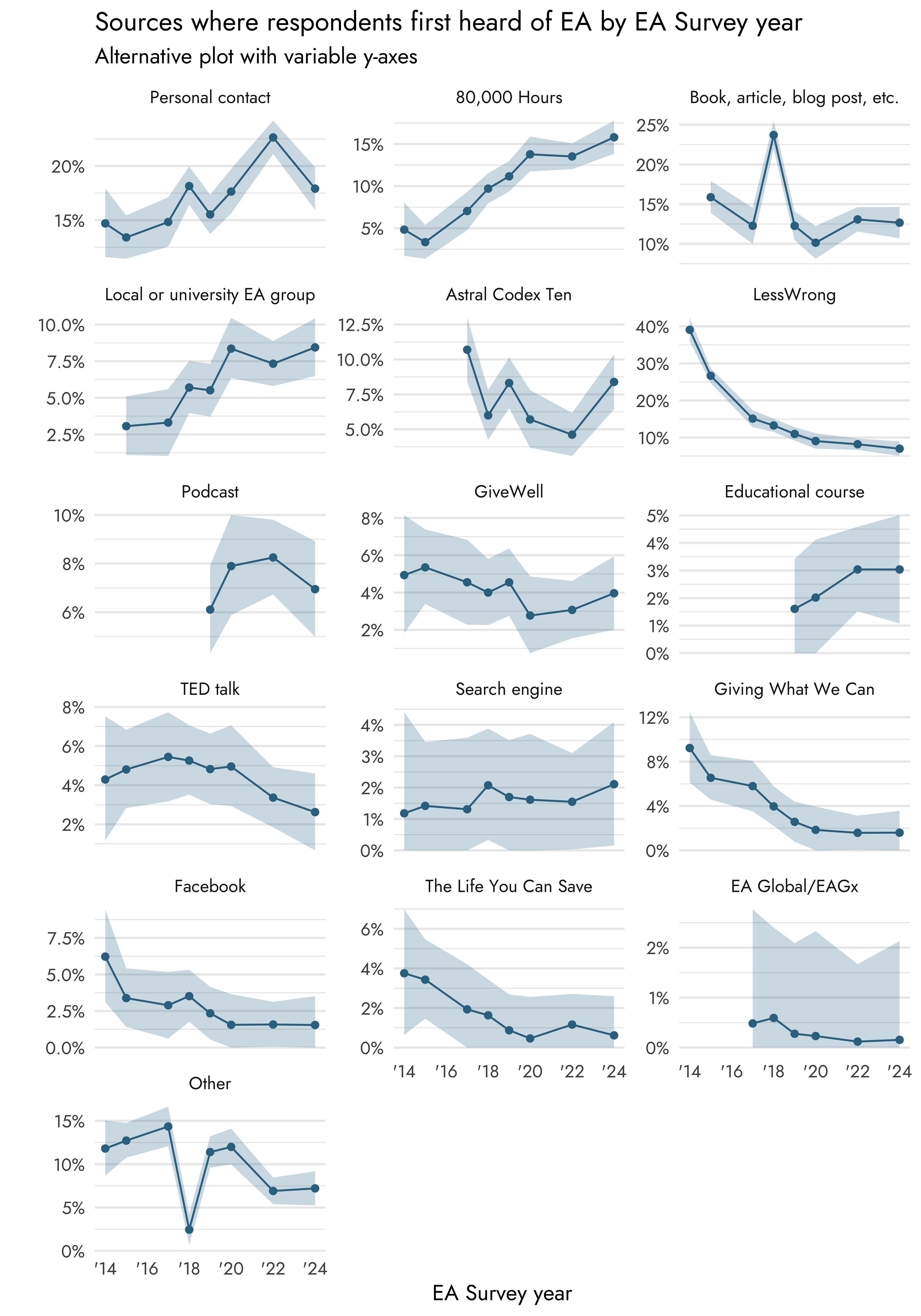

The question of where respondents first heard of EA was first asked in 2014. Since then, the question has been asked in every EA Survey, although not always with the exact same sources. Some were removed (e.g., ‘Animal Charity Evaluators’), added (e.g., ‘Podcast’), or combined (e.g., ‘Friend’ into ‘Personal contact’). Nevertheless, by standardizing the categories we can conduct a year-over-year comparison to see which sources have gotten more or less important as sources where people first hear of EA.

As observed previously, 80,000 Hours has become relatively more important as a source where people first hear of EA. In 2014 and 2015, about 3-5% of respondents selected 80,000 Hours, while now almost 16% of the respondents do. This makes it the fastest-growing source of where people first hear of EA among the respondents.

Local or university EA groups have also become more important since 2015, from 3% to ~8%, although they have not become more or less common in recent years. We also saw a relative growth for several years in personal contacts (from ~15% to ~22%), although we see a relative decrease between 2022 and 2024 (to 17.9%).

The most notable change is in LessWrong, which has for years shown a decrease in relative importance, going from being the most important source of introducing respondents at 39.1% in 2014 to one of only single digits. Other sources also show decreases in relative importance since the early years, such as Giving What We Can, Facebook, and The Life You Can Save (organization).

Last time we also noted decreases for GiveWell and Astral Codex Ten. Both sources now show an increase compared to 2022, with Astral Codex Ten showing a relatively large increase from 4.4% to 8.4% (also see Appendix 1 for an alternative graph that uses a variable y-axis to more clearly show the changes from year to year within each source).

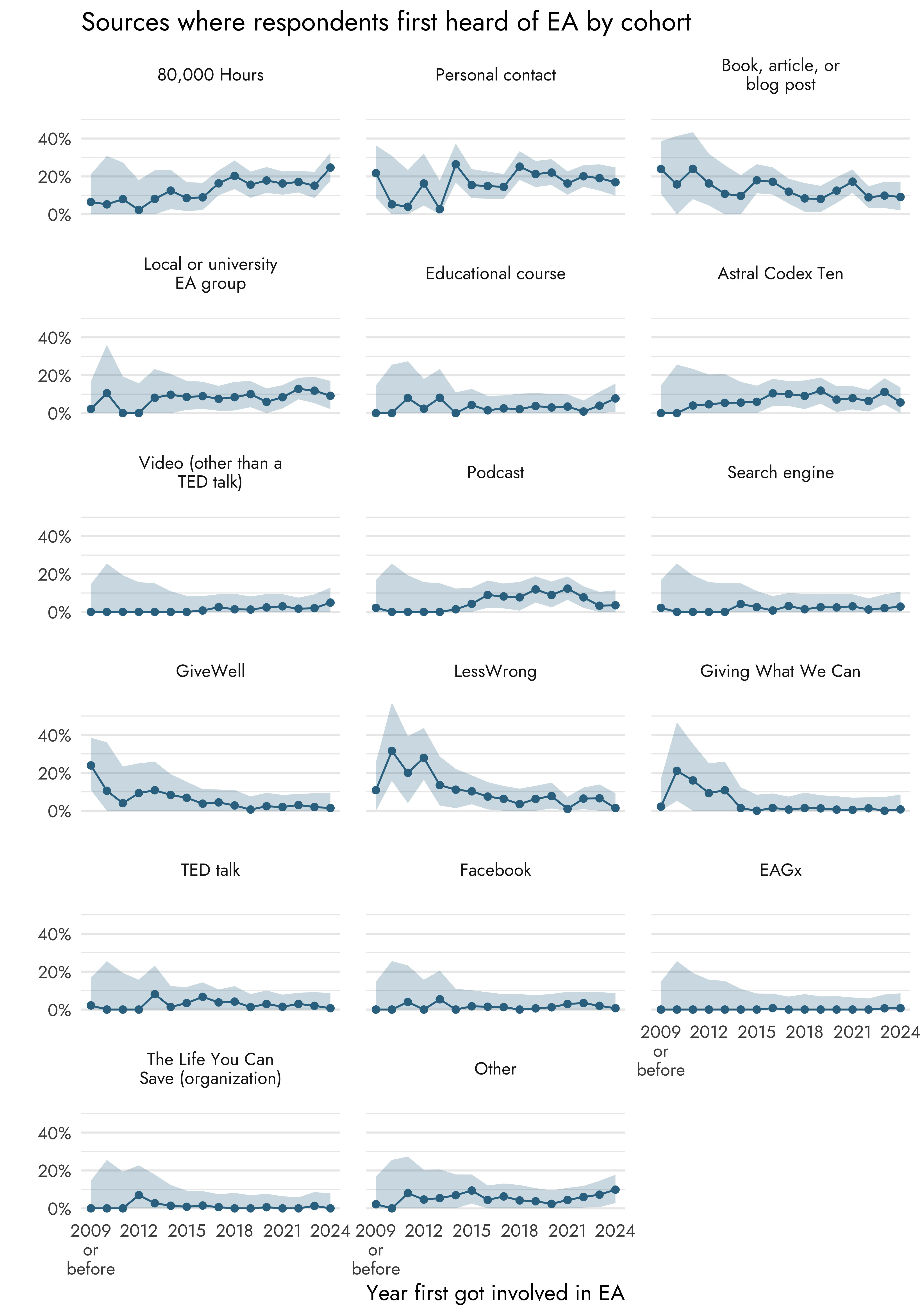

Some of these patterns can also be observed when looking at where different cohorts (when respondents first got involved in EA) first heard of EA.

We see that more recent cohorts are more likely to have heard of EA via 80,000 Hours, with the highest proportion found in the most recent cohort (24.6%).

GiveWell, LessWrong, and Giving What We Can appear to have been important sources among older cohorts. The category ‘Book, article, or blog post’ shows a similar pattern, although this category is still somewhat important as a source of information among those in the most recent cohort (9.15%).

Podcasts seem to have been relatively more important as a source in cohorts from 2015 to 2022, with a drop in more recent cohorts.

Personal contact

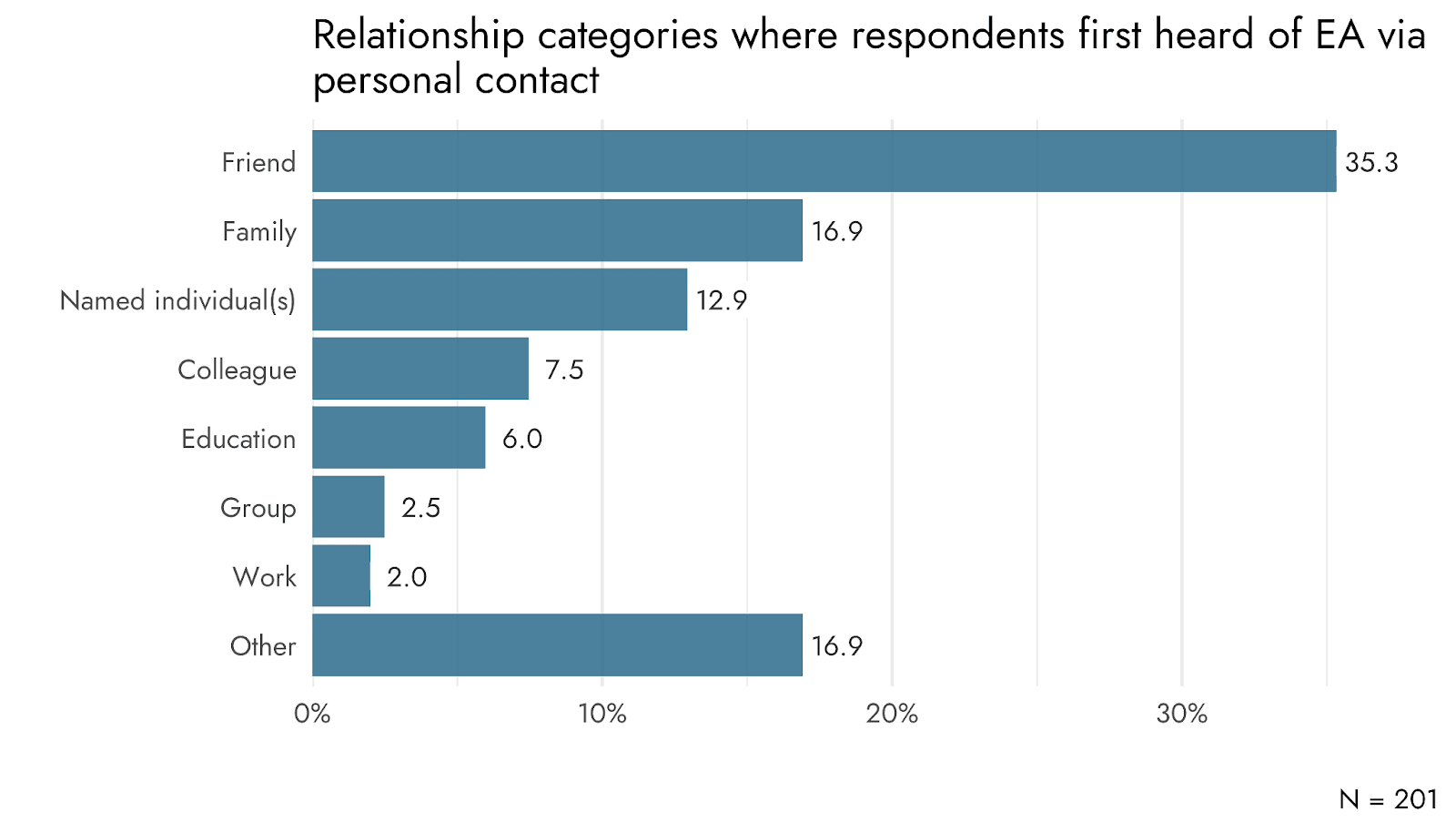

A total of 201 respondents who first heard of EA via personal contact elaborated on where exactly they first heard of EA. We categorized their responses in several categories such as a friend or family member (including their partner). We also included a category for individuals who were specifically named, which were usually well-known people in the EA community.

The most common relationship categories were a friend (35.3%), a family member (16.9%), and ‘Other’ (16.9%), followed by named individuals (12.9%).

Books, articles, and blog posts

Authors

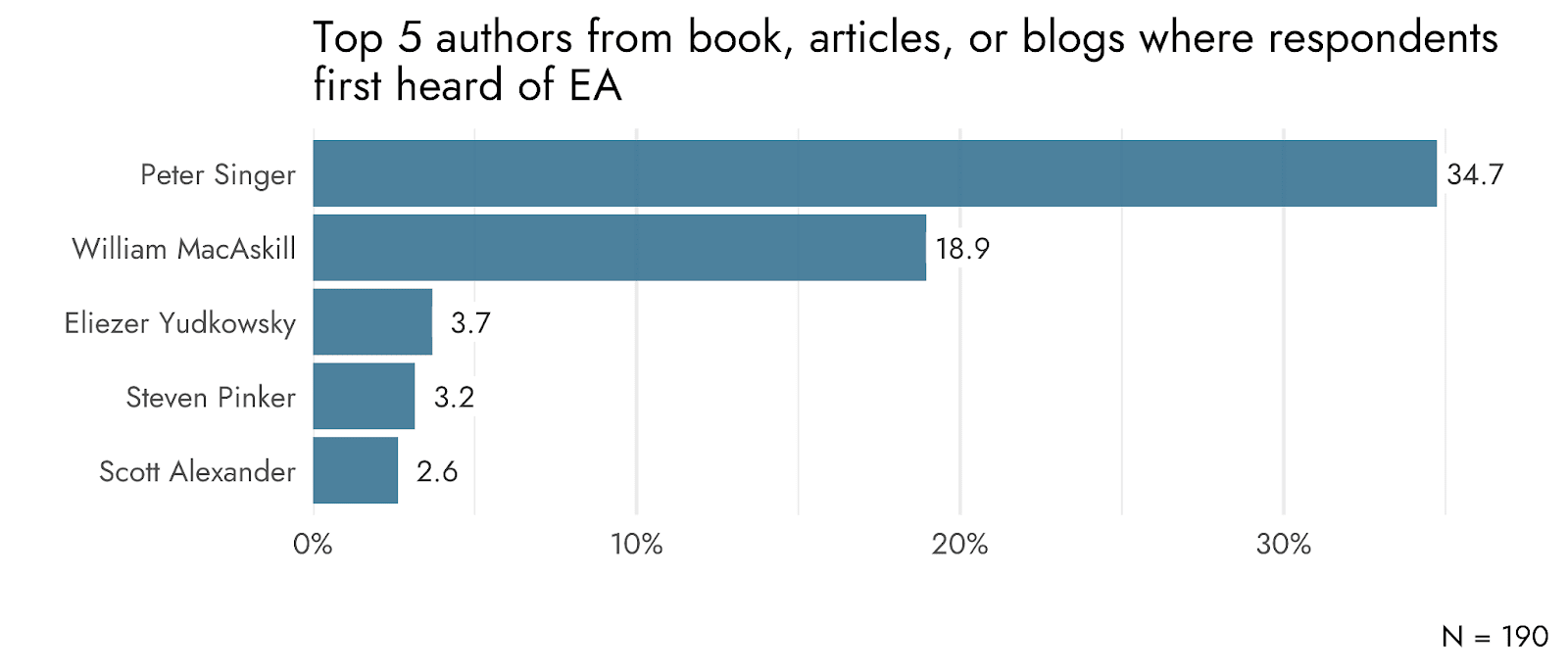

A total of 220 respondents provided additional information about the book, article, or blog post that introduced them to EA. They did not always provide the title of the book, article, or blog post, but they often mentioned the author. Below you can find the top 5 most frequently mentioned authors.

As you can see, Peter Singer (34.7%) and William MacAskill (18.9%) were mentioned most often, followed by Eliezer Yudkowsky (3.7%), Steven Pinker (3.2%), and Scott Alexander (2.6%).

As the next section will show, most of these authors were frequently named due to their popular books, as well as several popular articles. Scott Alexander, in contrast, is mostly known for his blog Astral Codex Ten (previously called Slate Star Codex). The relatively low number of times that Scott Alexander is mentioned is because Astral Codex Ten was also a separate category that respondents could select.

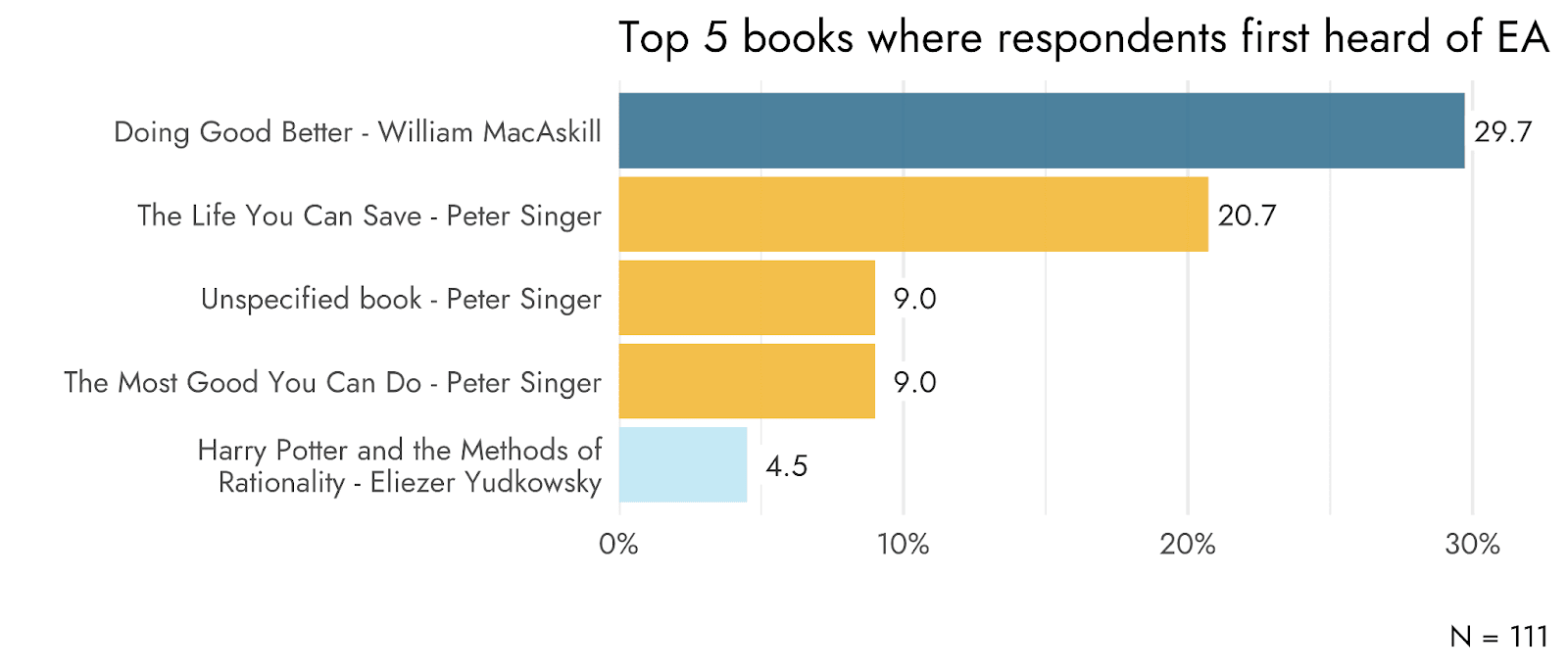

Books

Among the respondents who said they first heard of EA via a book, article, or blog post, 111 respondents specifically mentioned first hearing of EA via a book. The most commonly mentioned books were Doing Good Better by William MacAskill (29.7%), The Life You Can Save (20.7%) and The Most Good You Can Do (9%) by Peter Singer, and Harry Potter and the Methods of Rationality by Eliezer Yudkowsky (HPMOR; 4.5%). Respondents also sometimes mentioned that it was a book by Peter Singer that introduced them to EA, but they did not mention the title (‘Unspecified’, 9%).

It should also be noted that HPMOR was cited more frequently than reported here because it was also often mentioned among those who cited LessWrong (a separate category from books, articles, or blog posts) as where they first heard of EA. Additionally, books were also sometimes mentioned as an additional source of where the respondent first heard of EA.

Articles

Of the 65 respondents who specifically mentioned that they first heard of EA via an article, the most common article was Famine, Affluence, and Morality by Peter Singer (n = 6), followed by articles about FTX/Sam Bankman-Fried by unspecified authors (n = 3), The Reluctant Prophet of Effective Altruism by Gideon Lewis-Kraus from the New Yorker (n = 3), and additional unspecified articles.

Blogs

Finally, 24 respondents mentioned first hearing of EA via a blog. The most commonly mentioned blogs were Marginal Revolution by Tyler Cowen and Alex Tabarrok (n = 4), The Unit of Caring by Kelsey Piper (n = 3), and Thing of Things by Ozy Brennan (n = 3).

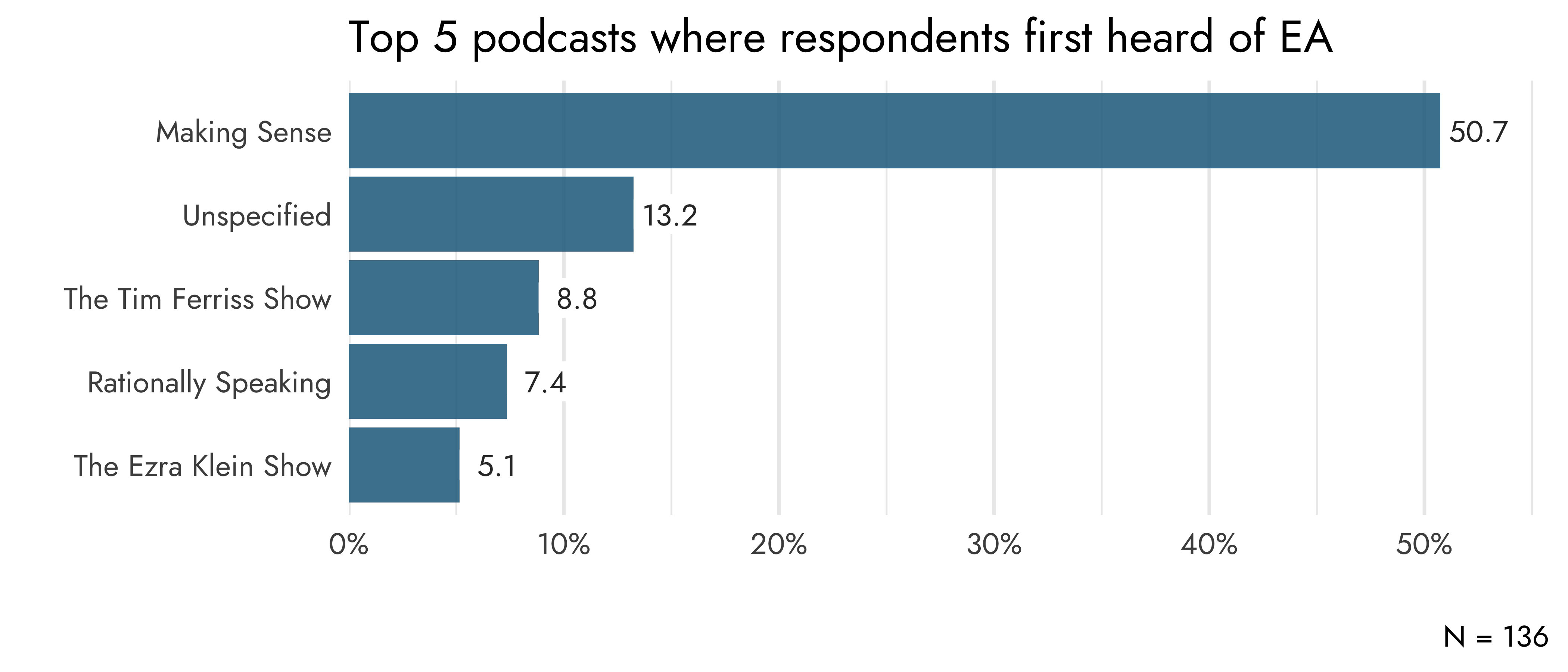

Podcasts

A total of 136 respondents said they first heard of EA via a podcast. By far, the most frequently mentioned podcast was Making Sense by Sam Harris (50.7%), followed by respondents not mentioning the exact podcast (‘Unspecified’; 13.2%). These were followed by The Tim Ferriss Show (8.8%), Rationally Speaking (7.4%), and the Ezra Klein Show (5.1%).

Group differences

Gender

In terms of gender differences, we see that women are more likely to report that they first heard of EA via a personal contact (25.5% vs. 15.2%), 80,000 Hours (19.5% vs. 14.3%), and a local or university EA group (11.4% vs. 7.2%) compared to men. Men, on the other hand, are more likely to report having first heard of EA via Astral Codex Ten (9.9% vs. 3.4%), LessWrong (7.7% vs. 3.6%), a podcast (8.3% vs. 4.4%), GiveWell (4.9% vs. 2.3%), and a video (other than a TED talk; 2% vs. 0.4%). Of these, the largest difference is that for personal contact, followed by Astral Codex Ten and 80,000 Hours.

Only one statistically significant difference was observed involving the group of respondents who identified as non-binary or who preferred to self-describe. Compared to women, they were more likely to have heard of EA via a video (other than a TED talk; 5.6% vs. 0.4%). Note, however, that the lack of other statistically significant differences may stem from the small sample size of this group rather than from the absence of other potentially relevant differences.

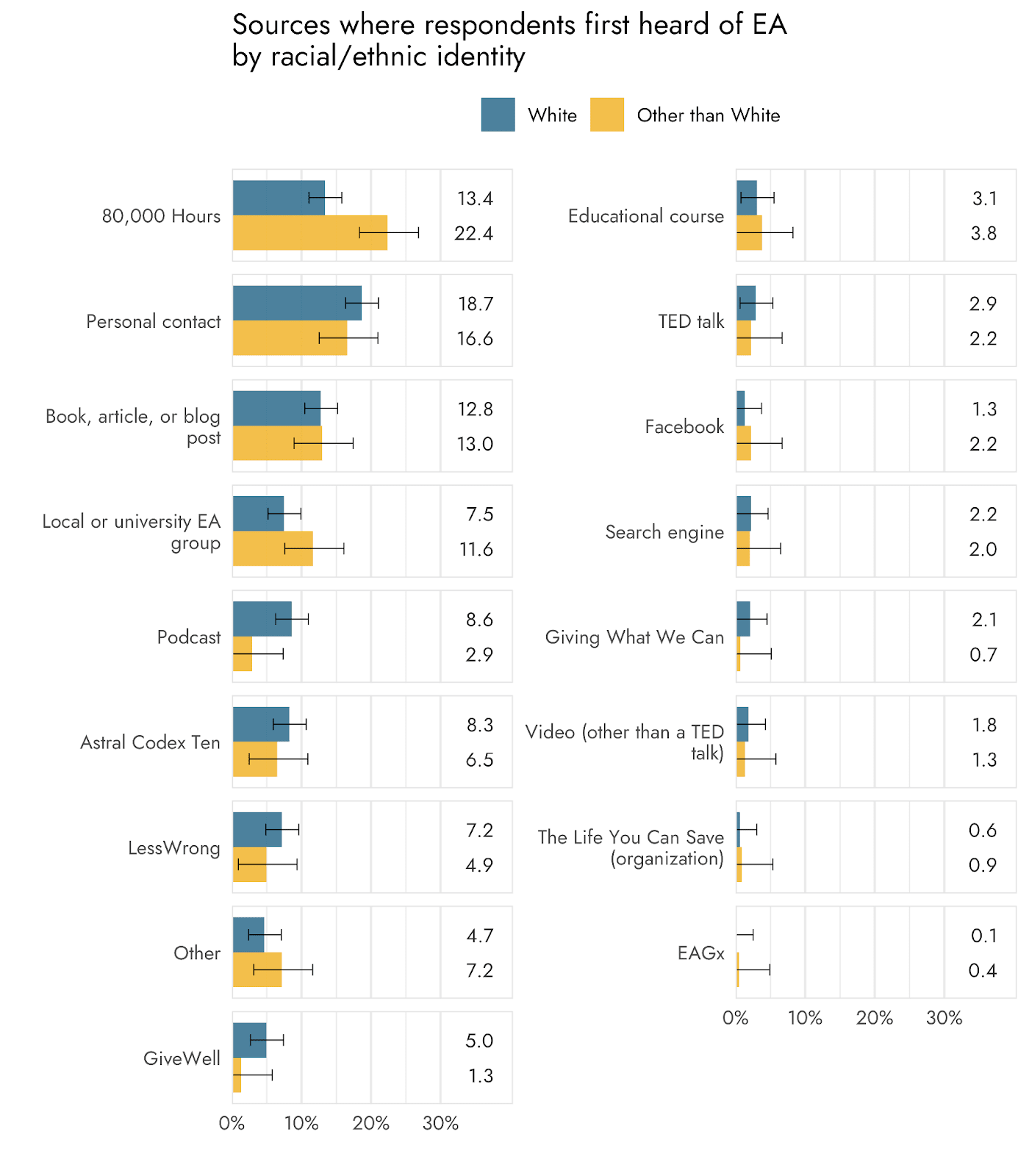

Racial/ethnic identity

In terms of racial/ethnic identity, we observe that non-White respondents are more likely to have heard of EA via 80,000 Hours (22.4% vs. 13.4%) and via a local or university EA group (11.6% vs. 7.5%). In contrast, White respondents are more likely to have heard of EA via a podcast (8.6% vs. 2.9%) and GiveWell (5% vs. 1.3%). The largest of these differences is the one for 80,000 Hours.

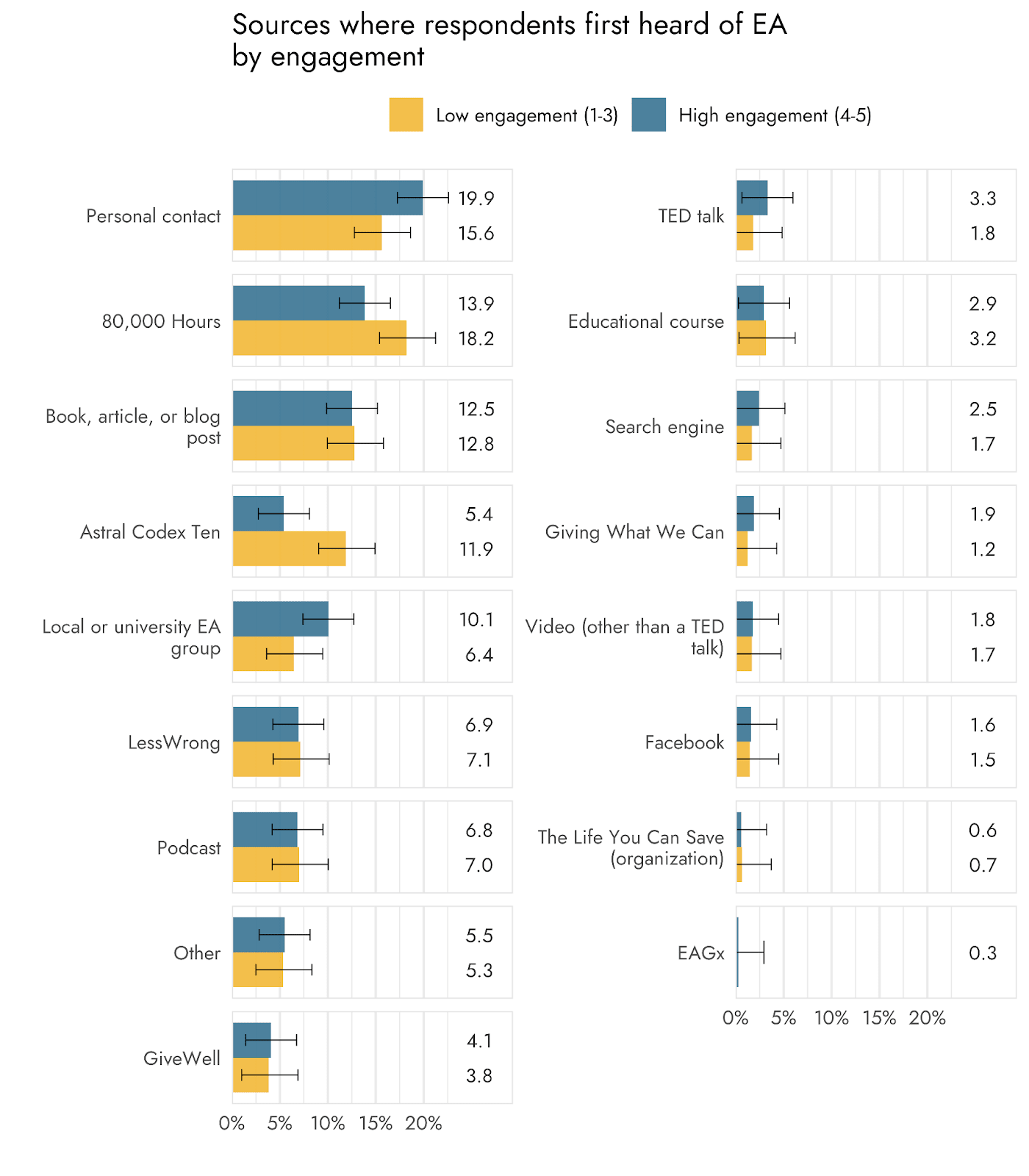

Engagement

We also observed several differences between respondents low in EA engagement and those high in EA engagement[1]. Those low in engagement were more likely to report first hearing of EA via Astral Codex Ten (11.9% vs. 5.4%) and 80,000 Hours (18.2% vs. 13.9%). Those high in engagement were more likely to report a personal contact (19.9% vs. 15.6%), a local or university EA group (10.1% vs. 6.4%), and a TED talk (3.3% vs. 1.8%). Of these, the difference was largest for Astral Codex Ten, followed by 80,000 Hours, and a personal contact.

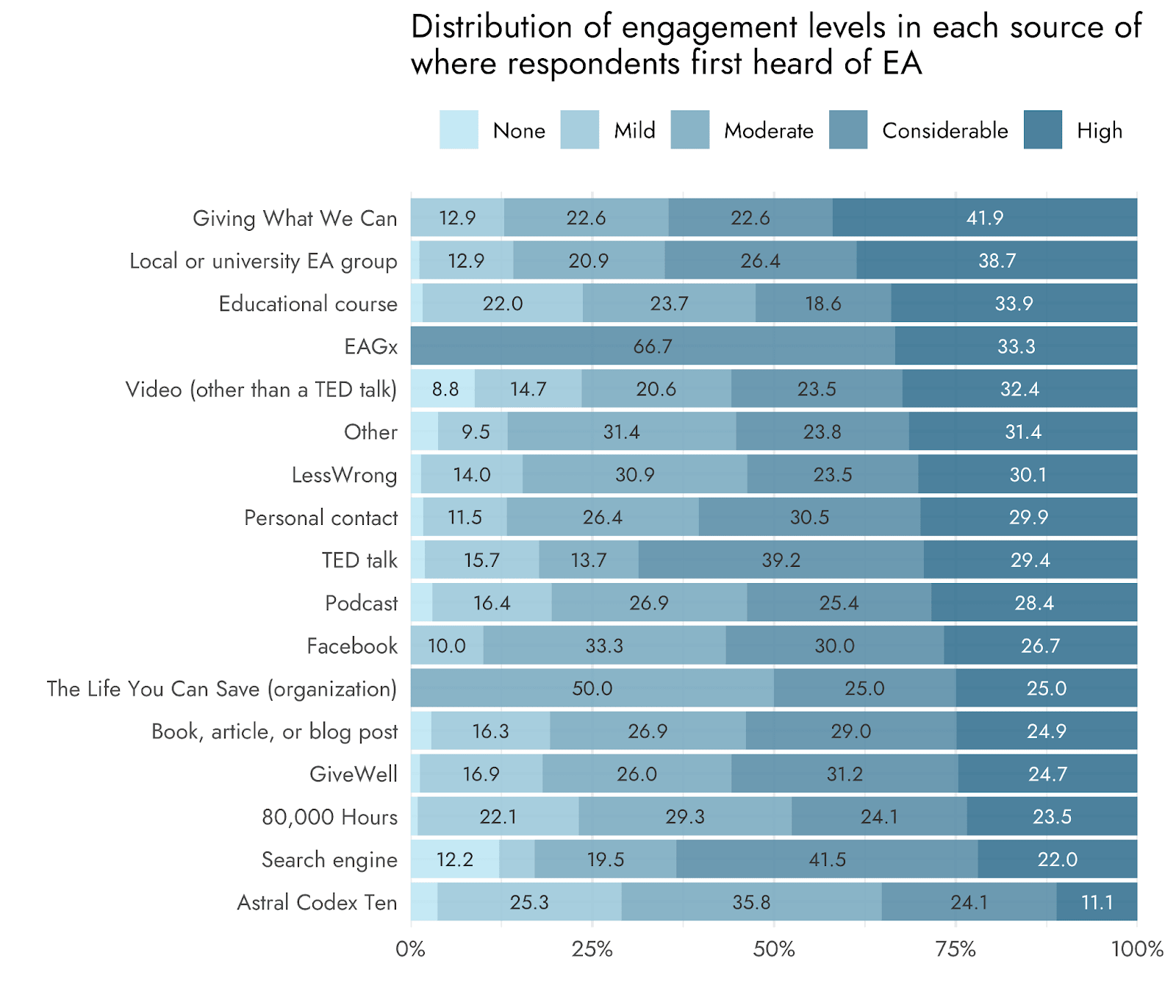

In the appendix we also provided an additional graph showing how each engagement level is distributed within each source.

The effect of outreach

We also coded open responses to assess whether the person first heard about EA due to EA outreach.

We distinguished between 'active outreach' and 'passive outreach' efforts. Active outreach was defined as deliberate initiatives directly promoting EA, such as advertisements, EA groups, and presentations or podcasts by prominent figures in the EA community. Passive outreach, by contrast, refers to people encountering resources that EAs had created, but which were not used in a direct promotional effort, such as people independently searching for “effective careers” and finding an EA resource. Other cases were classified as involving neither kind of outreach, such as people hearing about EA from a friend[2] or reading about EA in a non-EA news article.

Naturally, there are some grey areas between these categories, and some subjectivity and uncertainty in the coding. For example, someone might independently encounter a book or online article due to behind-the-scenes efforts to promote the book, and it is often a matter of degree how far individual cases result from active or passive outreach. This is particularly so given that many comments mentioned a combination of different factors (e.g., a friend refers a person to 80,000 Hours), and sometimes provided ambiguous or limited details. Nevertheless, we think that these provide useful rough classifications.

We coded a total of 1196 responses and could identify an active outreach effort in 373 of the responses (31.2%) and a passive outreach effort in 287 (24%). This suggests that slightly over half of cases of people encountering EA resulted from some form of outreach, and that slightly over half of those cases involved active outreach. Just under half of people recruited to EA, however, did not first hear about EA as the result of any kind of EA outreach (though outreach may have helped them get involved later in their EA journey).
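The percentages in the paragraph above follow directly from the reported counts. The short sketch below re-derives them; the variable and function names are our own illustrative choices, and only the three counts (1196, 373, 287) come from the survey.

```python
# Counts of coded open responses, as reported in the post.
total_coded = 1196
active = 373
passive = 287


def pct(part, whole):
    """Return part/whole as a percentage, rounded to one decimal place."""
    return round(100 * part / whole, 1)


any_outreach = active + passive        # responses involving either kind of outreach
neither = total_coded - any_outreach   # responses involving no EA outreach

shares = {
    "active": pct(active, total_coded),
    "passive": pct(passive, total_coded),
    "any_outreach": pct(any_outreach, total_coded),
    "neither": pct(neither, total_coded),
    # Active outreach as a share of all outreach cases, not of all responses.
    "active_share_of_outreach": pct(active, any_outreach),
}

for label, value in shares.items():
    print(f"{label}: {value}%")
```

Note that the last figure uses a different denominator (outreach cases only), which is what "slightly over half of those cases" refers to.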

Factors Important for Getting Involved

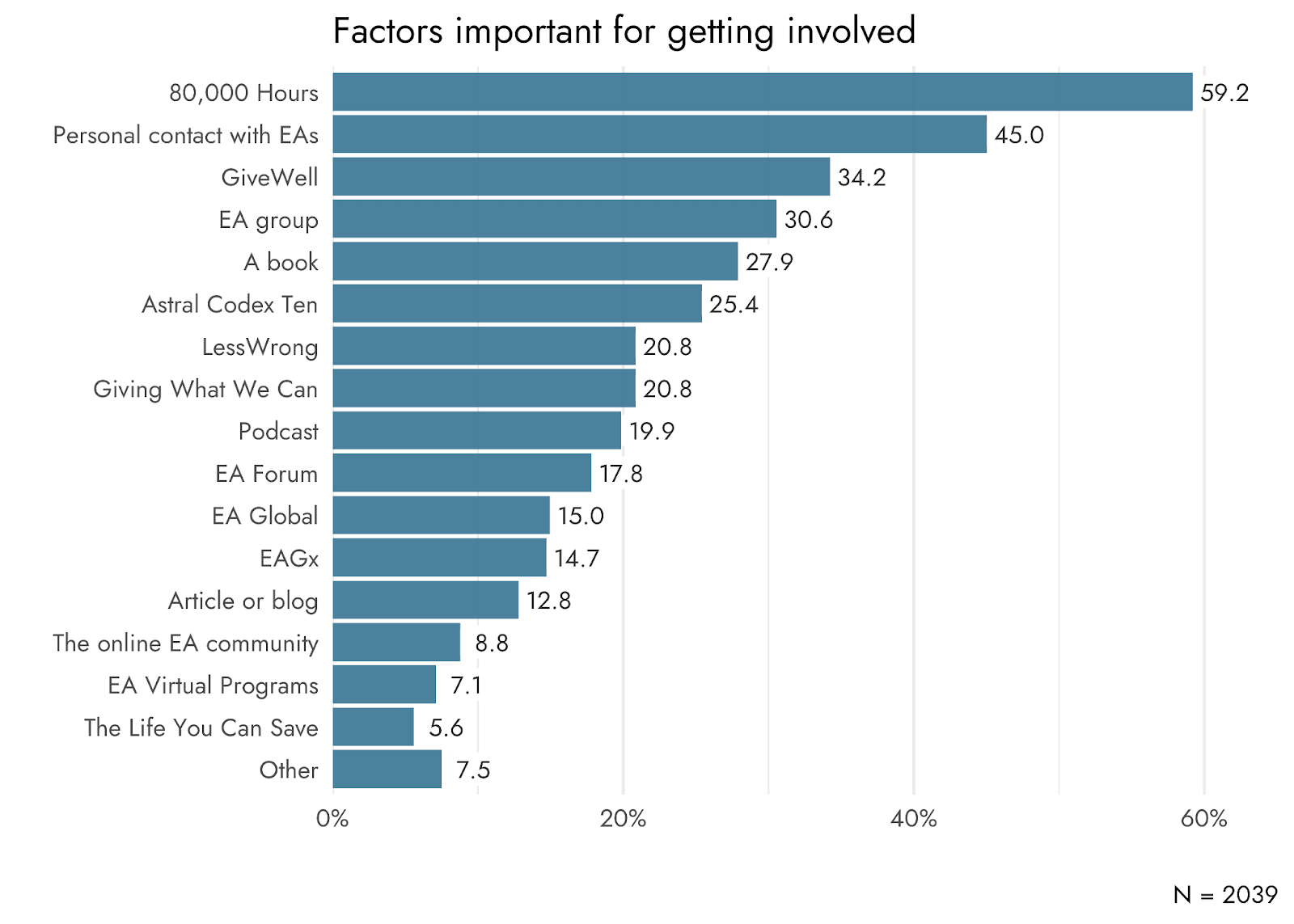

In addition to asking about where respondents first heard of EA, we also asked them which of several factors have been important for them getting involved in EA, such as 80,000 Hours, a book, or the EA Forum. They were asked to select all that apply, so they could select more than one factor.

Among those who selected one of the factors, the average number of picks was 3.73, with a median of 3.

80,000 Hours was the most commonly reported factor important for getting involved (59.2%), followed by personal contact with EAs (45%), and GiveWell (34.2%).

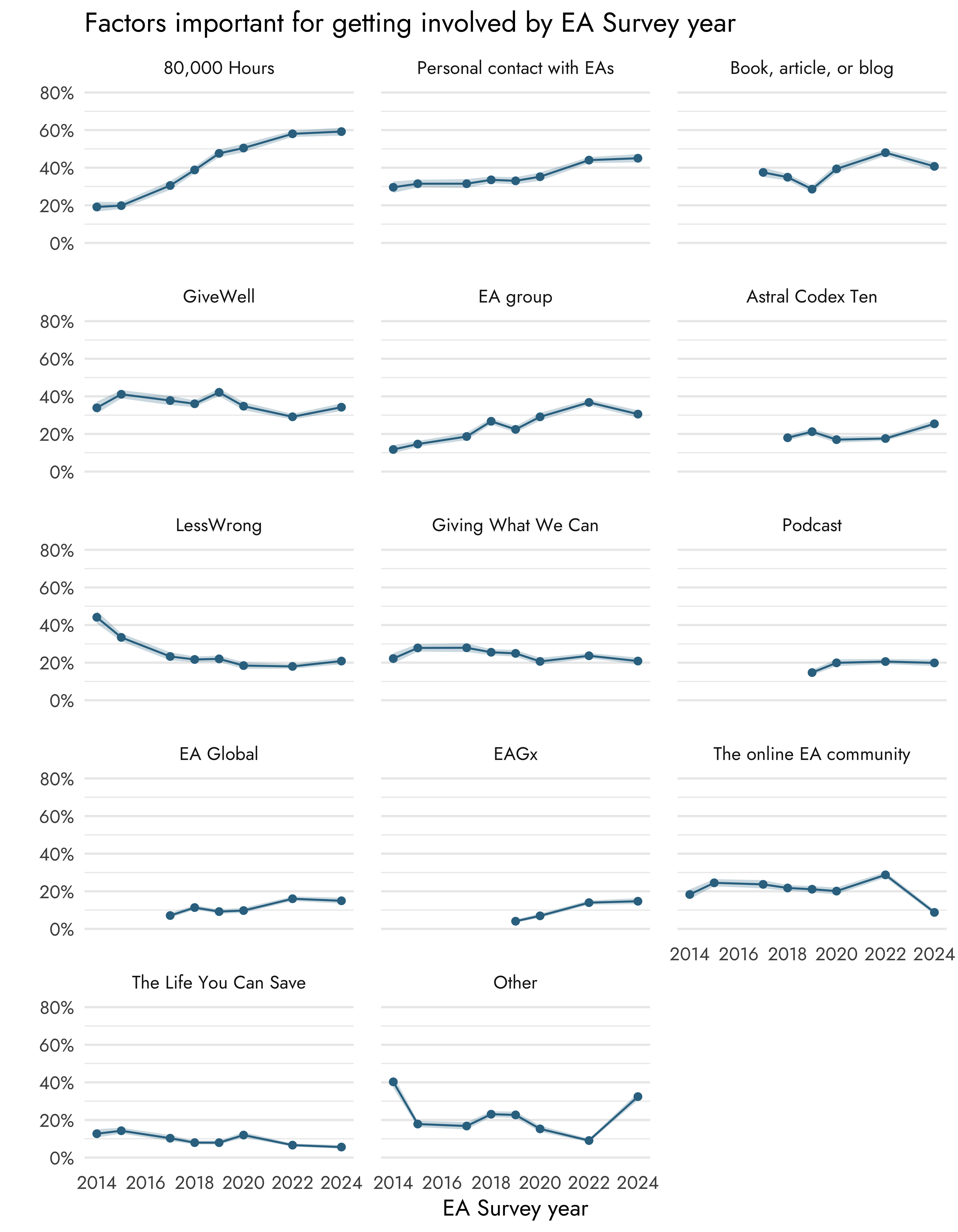

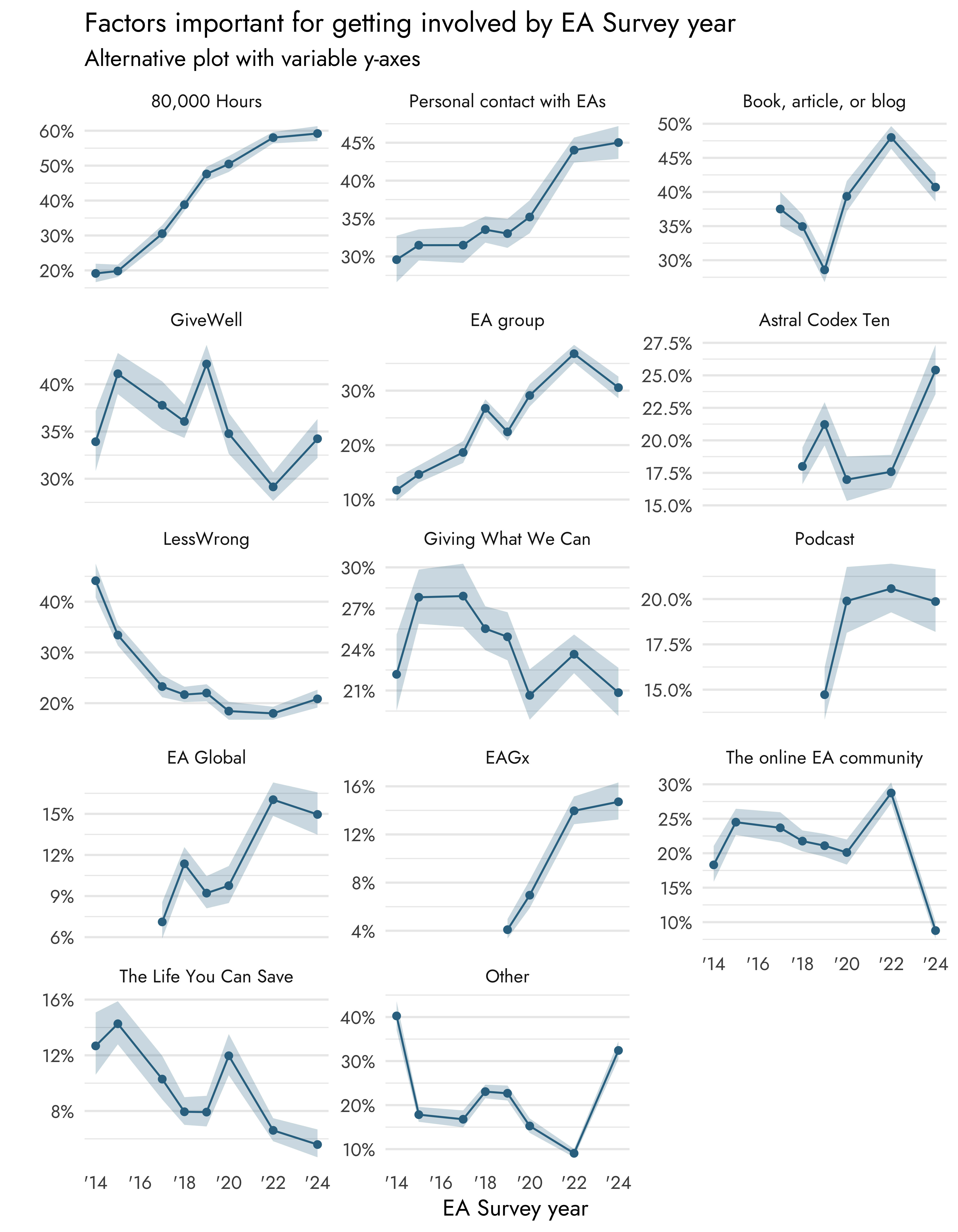

The question was first asked in 2014, although sometimes with a slightly different framing or with different factors to choose from. After standardizing these factors, we can track how some of the factors have changed over time.

As with the question about how respondents first hear of EA, 80,000 Hours has increased over time as a source important for getting involved, going from ~20% in 2014 and 2015 to 59.2% in 2024. This is by far the factor with the strongest increase over time. There has also been an increase in personal contacts with EAs, going from ~30% in 2014-2020 to 45% in 2024, so this increase mostly occurred in the last several years. Similarly, EA groups have also increased, from ~10% in 2014 to 30.6% in 2024, and EAGx as well, going from ~4% in 2019 to almost 15% in 2024.

There also appears to have been an increase for EA Global, although this is mostly due to a single jump between 2020 and 2022, going from ~10% to ~15%.

In contrast, LessWrong was found to become less important for getting involved, starting at 44.1% in 2014 and dropping to around 20% since 2017.

There is also a noticeable drop for the factor ‘The online EA community’ from 2022 to 2024, but this is likely because we included ‘The EA Forum’ for the first time in 2024, which respondents likely included in the online EA community in previous surveys.

Smaller changes were found for other factors, which can be seen in an alternative graph in the appendix. Most of these changes were fairly small at around 5%.

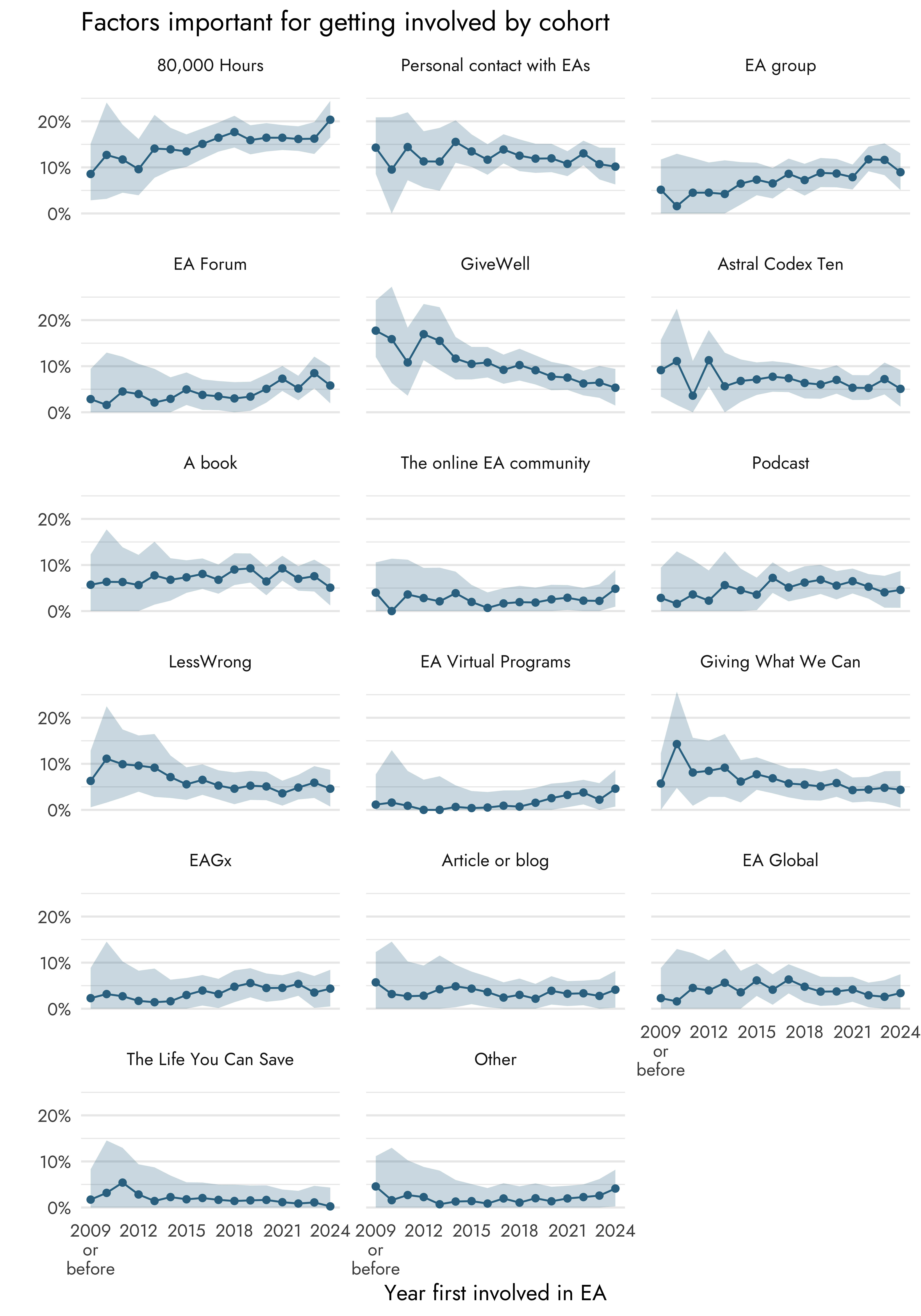

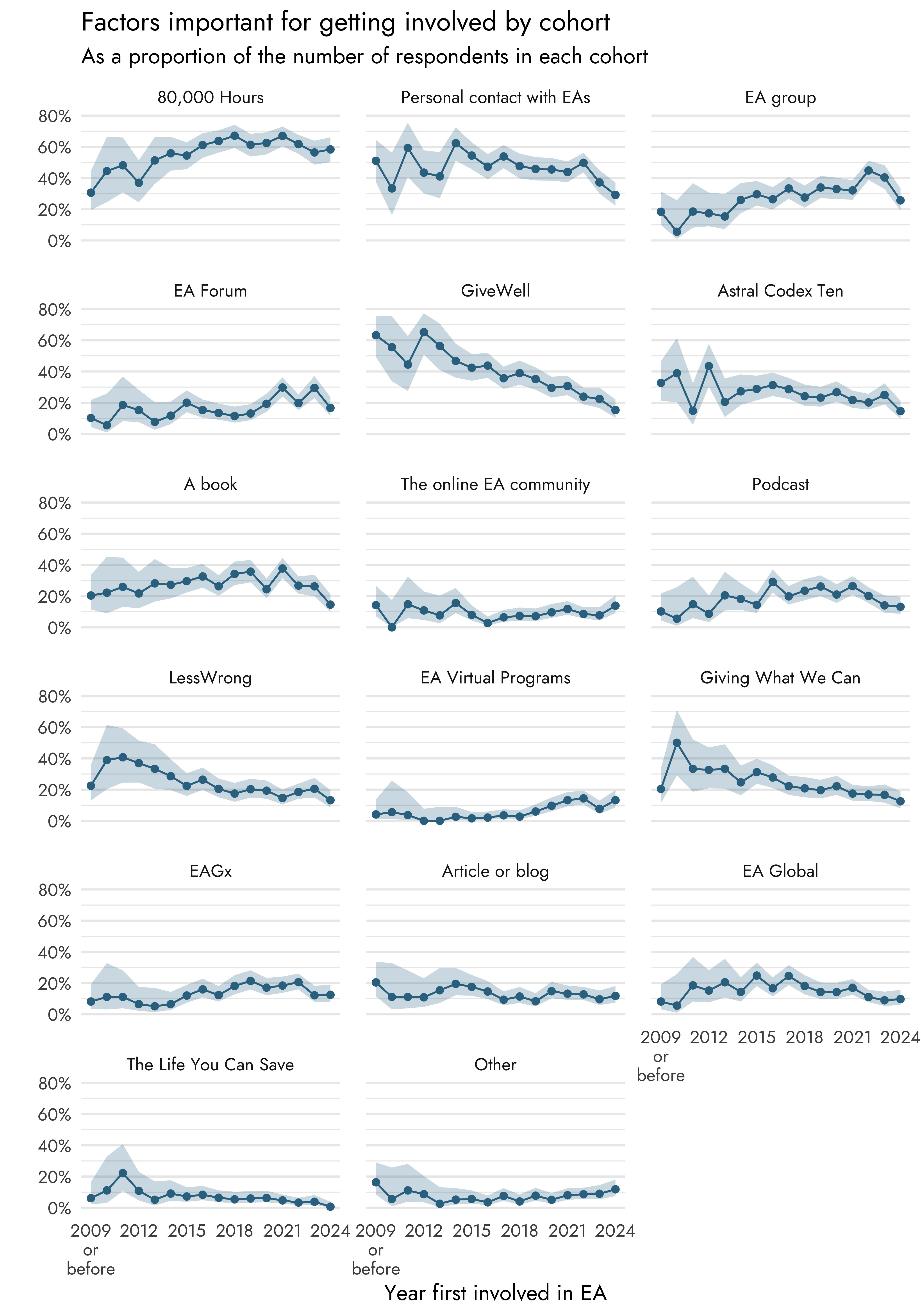

We also looked at cohort effects for factors important for getting involved in EA. Note that because newer cohorts have encountered fewer factors, we calculated the percentage as the percentage of the total number of factors selected by each cohort, as opposed to the percentage of the number of respondents in each cohort (see the Appendix for a graph showing the percentages as divided by the number of respondents in each cohort).
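To make the difference between the two denominators concrete, here is a minimal sketch using made-up toy data (the respondents, cohorts, and selections below are hypothetical and are not taken from the survey). It computes each factor's share either of all selections made within a cohort or of the respondents in that cohort.

```python
from collections import Counter

# Hypothetical toy data: one record per respondent.
responses = [
    {"cohort": "2014", "factors": ["GiveWell", "LessWrong", "80,000 Hours", "Book"]},
    {"cohort": "2014", "factors": ["GiveWell", "Personal contact"]},
    {"cohort": "2024", "factors": ["80,000 Hours"]},
    {"cohort": "2024", "factors": ["80,000 Hours", "EA Forum"]},
]


def factor_shares(responses, cohort, per="selections"):
    """Percentage for each factor within one cohort.

    per="selections":  denominator is the total number of factors selected
                       by the cohort (the approach described above).
    per="respondents": denominator is the number of respondents in the cohort
                       (the appendix-style alternative).
    """
    rows = [r for r in responses if r["cohort"] == cohort]
    counts = Counter(f for r in rows for f in r["factors"])
    if per == "selections":
        denom = sum(counts.values())
    elif per == "respondents":
        denom = len(rows)
    else:
        raise ValueError("per must be 'selections' or 'respondents'")
    return {f: round(100 * n / denom, 1) for f, n in counts.items()}


print(factor_shares(responses, "2014", per="selections"))
print(factor_shares(responses, "2014", per="respondents"))
```

In this toy example, the older cohort selected more factors per person, so dividing by respondents inflates every factor for that cohort, while dividing by selections keeps the shares within each cohort summing to 100%.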

Just like we observed last time, we see fewer cohort effects for factors important for getting involved. We do again see that 80,000 Hours is more important for getting involved among recent cohorts compared to older cohorts, with ~15% of respondents in the more recent cohorts selecting 80,000 Hours and ~10% in the older cohorts. To a lesser extent, we also see that the EA Forum and EA groups are seen as more important among recent cohorts compared to older cohorts, although it should be noted that the confidence intervals for the oldest cohorts are wide due to the low sample sizes in those cohorts.

In contrast, we see that older cohorts are more likely to report GiveWell as an important source for getting involved compared to recent cohorts, from ~15% in the oldest cohorts to 5.3% in the youngest cohort. LessWrong also appears to be a more important factor among older cohorts compared to the newer cohorts, as is the case with Giving What We Can, although the same warning applies regarding the confidence intervals in older cohorts.

Group differences

Gender

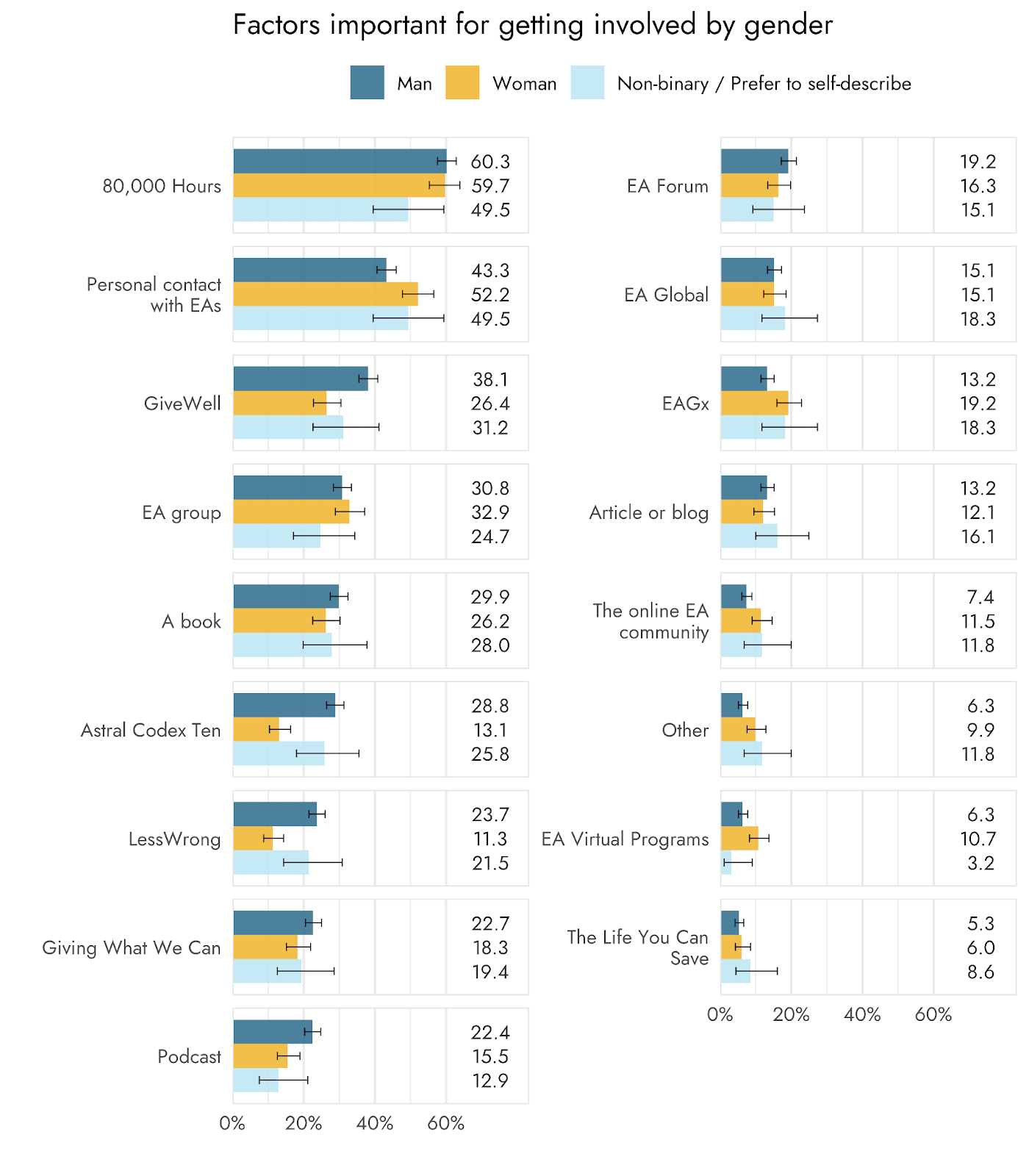

We observed several, mostly small, differences between the gender categories in which factors were important for getting involved.

Women were more likely to report personal contact with EAs as an important factor (52.2%) compared to men (43.3%). They were also more likely, compared to men, to report EAGx (19.2% vs. 13.2%) as important, as well as online contexts, such as the online EA community (11.5% vs. 7.4%) and EA Virtual Programs (10.7% vs. 6.3% for men and 3.2% for non-binary respondents and those who preferred to self-describe), although not the EA Forum (16.3% vs. 19.2% for men and 15.1% for non-binary respondents and those who preferred to self-describe).

Women were less likely to report both Astral Codex Ten (13.1%) as well as LessWrong (11.3%) as an important factor, compared to men (28.8% and 23.7%, respectively) and non-binary respondents and respondents who preferred to self-describe (25.8% and 21.5%, respectively).

Men were more likely to report that GiveWell was an important factor, compared to women (38.1% vs 26.4%). There was also a small, but statistically significant difference for Giving What We Can, with men being slightly more likely to report it as an important factor compared to women (22.7% vs. 18.3%).

Non-binary respondents and respondents who preferred to self-describe were less likely to report 80,000 Hours as an important factor, compared to men (49.5% vs. 60.3%).

Finally, men were more likely to report podcasts as an important factor (22.4%) compared to both women (18.3%) and non-binary respondents and respondents who preferred to self-describe (12.9%).

Note that this section contains relatively many comparisons and that some of the statistically significant differences were very close to our cut-off point for statistical significance (5%). With more stringent criteria, some of the differences would no longer be statistically significant.

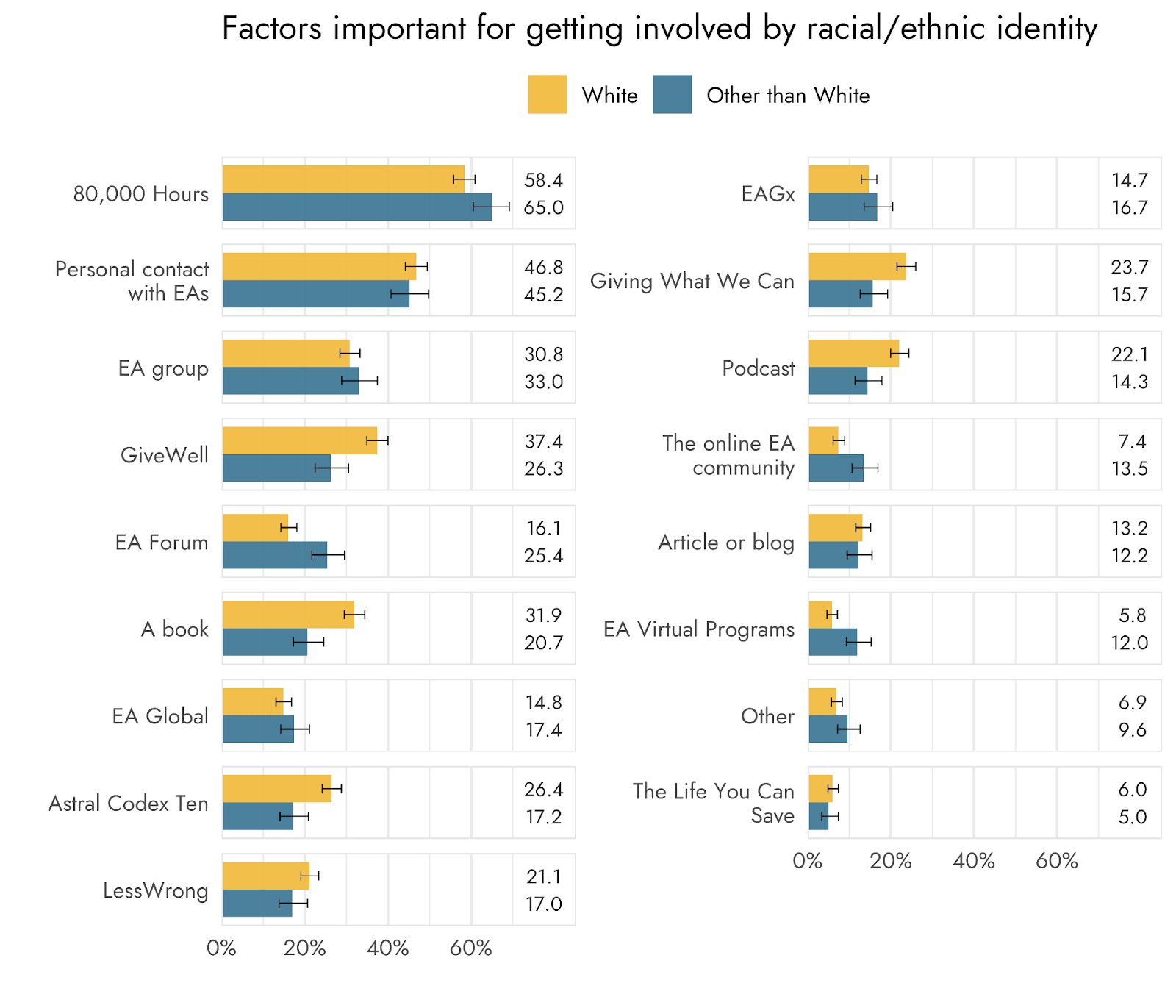

Racial/ethnic identity

There were also several differences between White and non-White respondents regarding which factors were important for getting involved.

Compared to non-White respondents, White respondents were more likely to select GiveWell (37.4% vs. 26.3%), a book (31.9% vs. 20.7%), Astral Codex Ten (26.4% vs. 17.2%), LessWrong (21.1% vs. 17%), Giving What We Can (23.7% vs. 15.7%), and podcasts (22.1% vs. 14.3%).

In contrast, non-White respondents were more likely to select 80,000 Hours (65% vs. 58.4%), the EA Forum (25.4% vs. 16.1%), the online EA community (13.5% vs. 7.4%), and EA Virtual Programs (12% vs. 5.8%).

Engagement

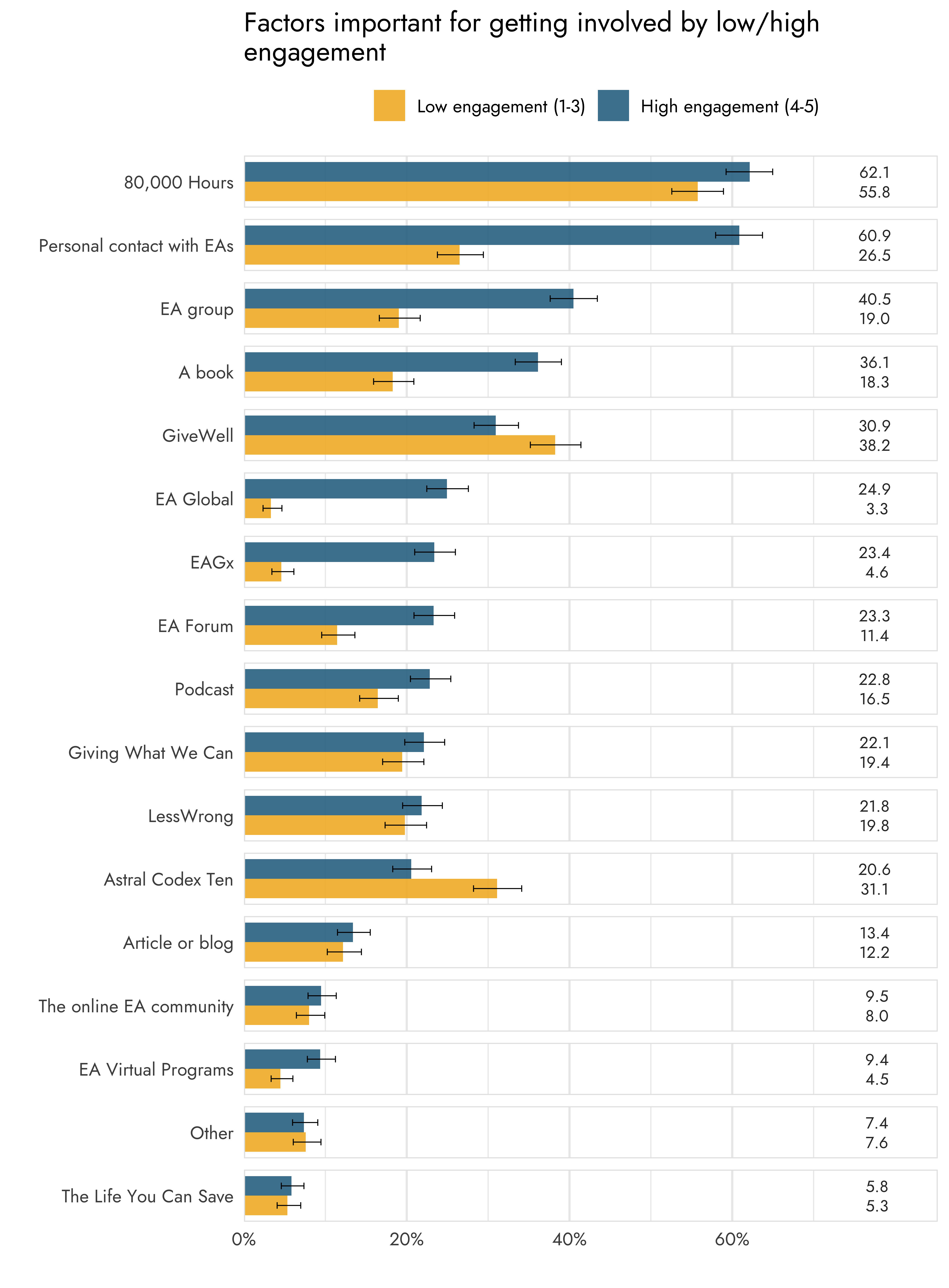

Multiple differences, some of them quite sizable, were found between less engaged and highly engaged respondents on factors important for getting involved.

Highly engaged respondents were more likely to select 80,000 Hours (62.1% vs. 55.8%), personal contact with EAs (60.9% vs. 26.5%), an EA group (40.5% vs. 19%), a book (36.1% vs. 18.3%), EA Global (24.9% vs. 3.3%), EAGx (23.4% vs. 4.6%), EA Forum (23.3% vs. 11.4%), a podcast (22.8% vs. 16.5%), and EA Virtual Programs (9.4% vs. 4.5%).

Less engaged respondents, on the other hand, were more likely to select GiveWell (38.2% vs. 30.9%) and Astral Codex Ten (31.% vs. 20.6%).

Referrer Robustness Checks

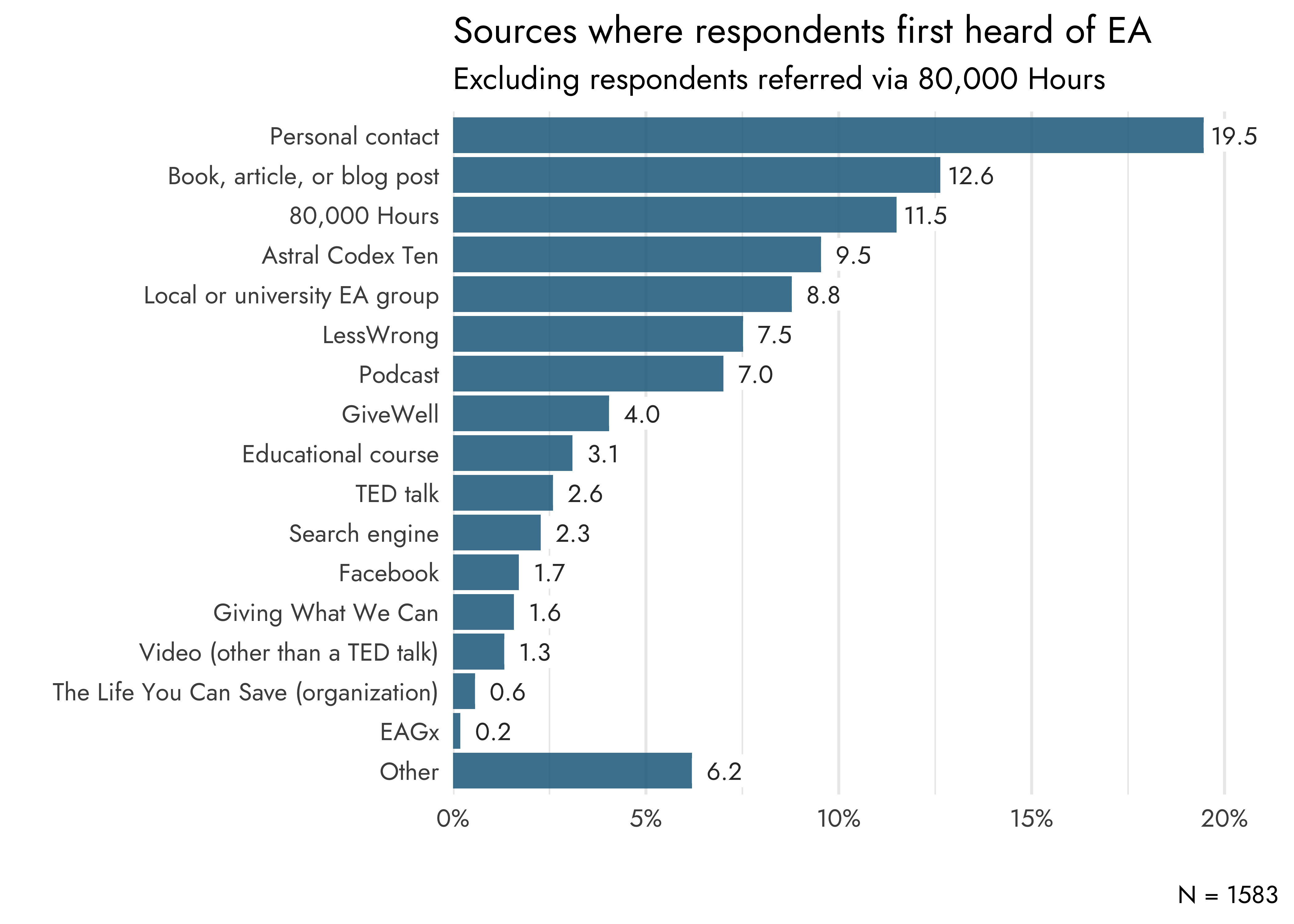

This year we again include a robustness check to see how the results change if we exclude respondents who were referred to the survey from specific referral sources, such as 80,000 Hours or Astral Codex Ten.

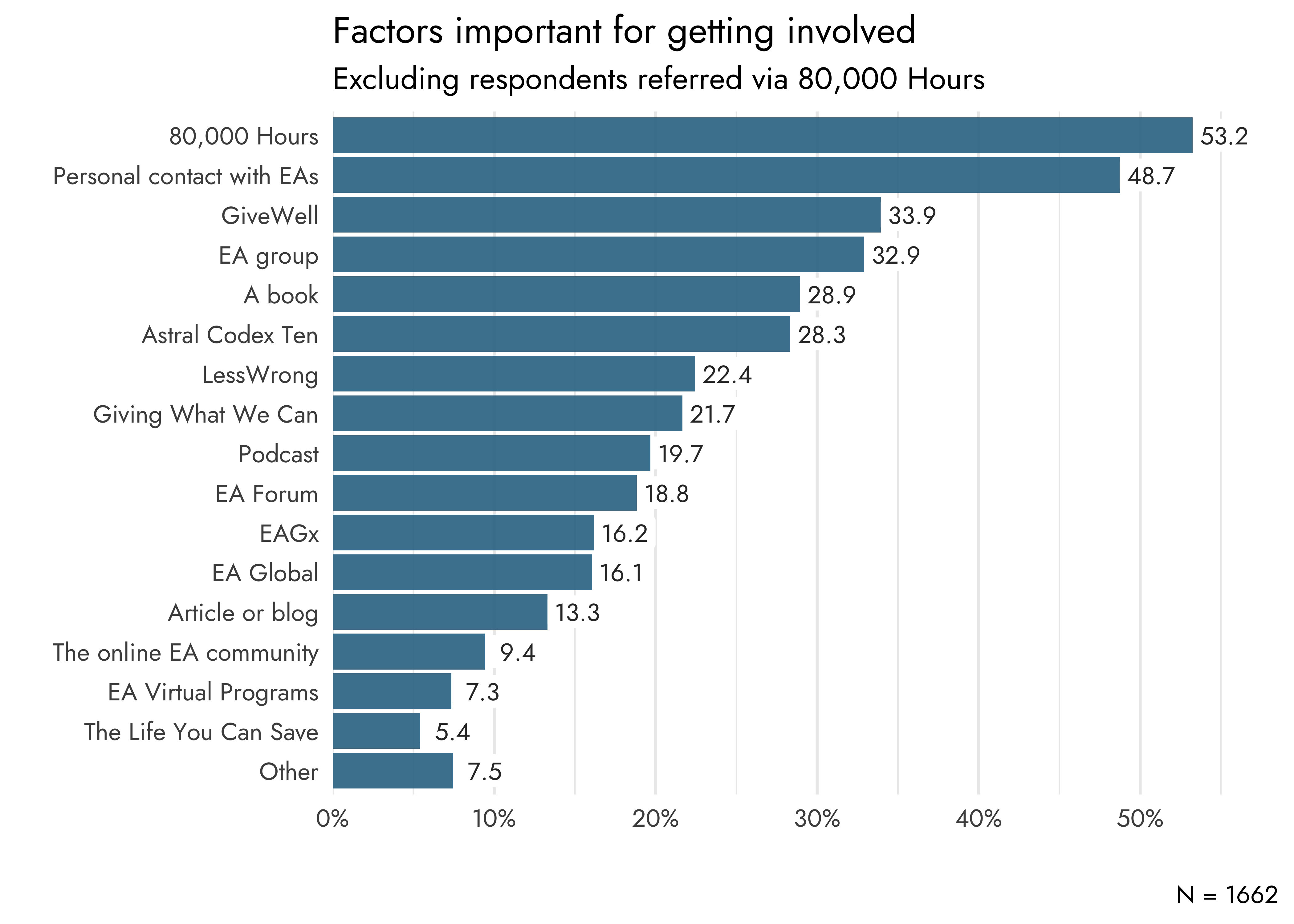

Previously, in 2020, we found that excluding respondents who were referred from 80,000 Hours had some impact on the results, reducing the percentage of respondents who first heard of EA via 80,000 Hours from 12.8% to around 8% and reducing the percentage of respondents selecting 80,000 Hours as important for getting involved from around 50% to around 45%. We find similar decreases this year (see Appendix 5). The percentage of respondents who first heard of EA via 80,000 Hours went down from 15.8% to 11.5% and the percentage selecting 80,000 Hours as important for getting involved went down from 59.2% to 53.2%. These changes are modest: they affect the ranking of where most respondents first heard of EA (moving 80,000 Hours from 2nd to 3rd place), but not the ranking of the factors most often cited as important for getting involved. Importantly, the percentages for sources other than 80,000 Hours are less affected by excluding these respondents.

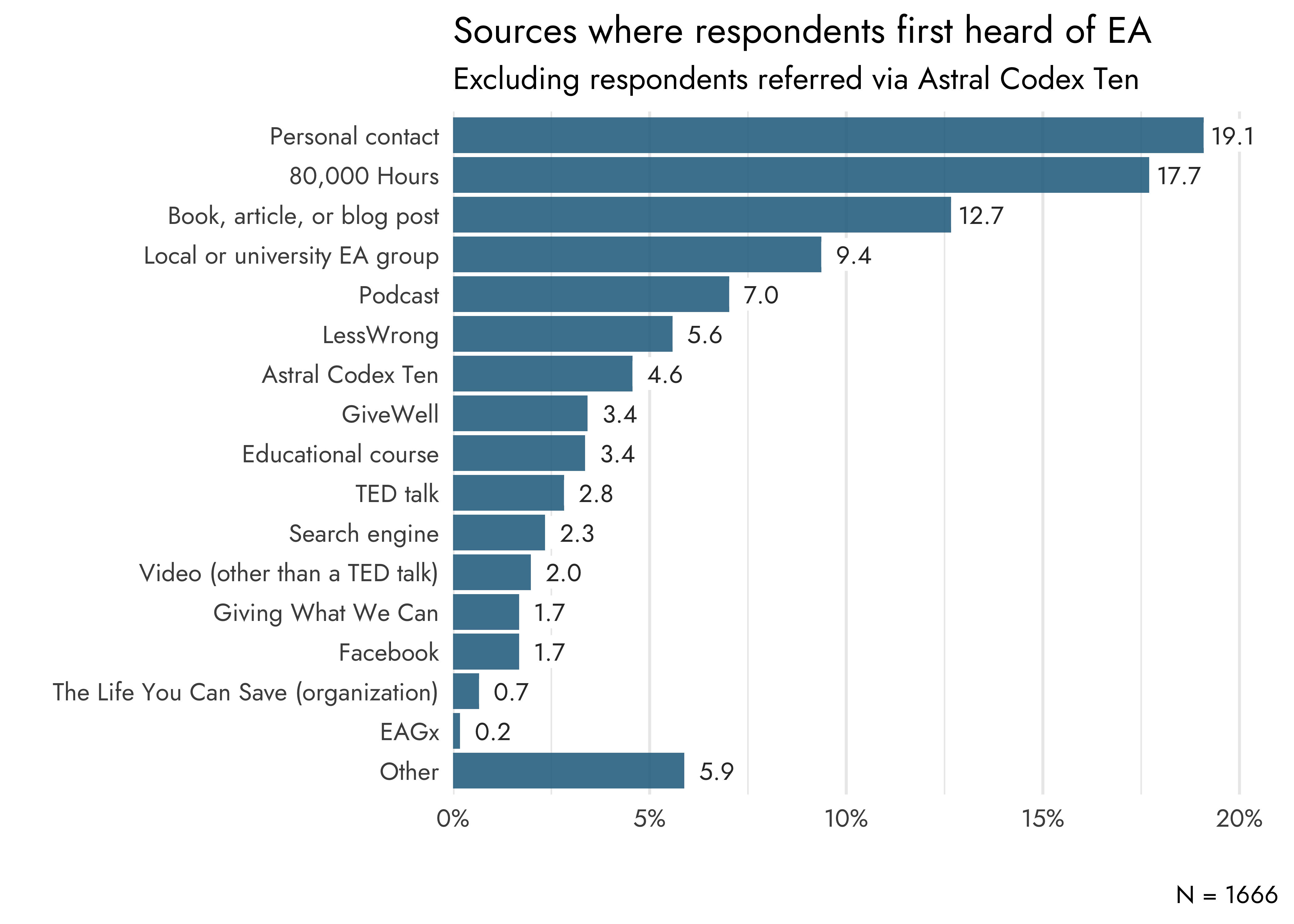

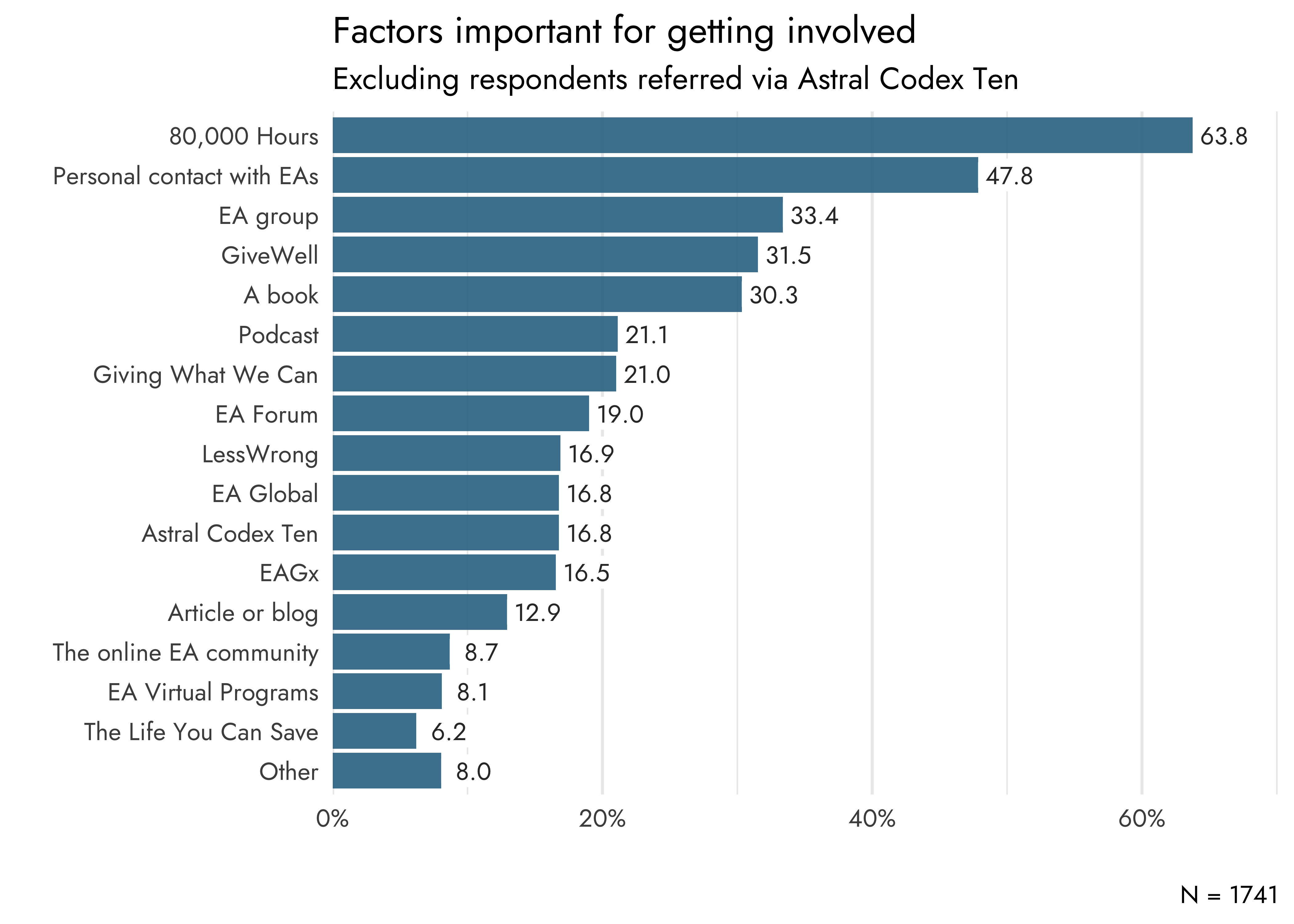

We also performed a robustness check excluding respondents referred to the survey from Astral Codex Ten, since it was the third most popular referral source (14.6%), after being emailed the survey directly (29.4%) and 80,000 Hours (18.5%). Excluding these respondents reduced the percentage of respondents who first heard of EA via Astral Codex Ten from 8.4% to 4.6%, a decrease similar in absolute size to the one observed when excluding respondents from 80,000 Hours. The percentage of respondents selecting Astral Codex Ten as important for getting involved decreased from 25.4% to 16.8%, which is a more sizable decrease than the decrease in 80,000 Hours after excluding respondents referred from 80,000 Hours. Excluding respondents from Astral Codex Ten also has a larger impact on sources other than Astral Codex Ten, as seen, for example, with GiveWell (going from 34.2% to 31.5%) and EA groups (going from 30.6% to 33.4%). The overall ranking, however, remains largely similar, with only minor changes.
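The comparisons in the two robustness checks can be laid out side by side. The sketch below uses only the before/after percentages quoted above; the dictionary keys and the `drop` helper are our own illustrative names. It reports each decrease both in percentage points and relative to the starting value.

```python
# (before, after) percentages quoted in the robustness checks above.
checks = {
    "80k_first_heard": (15.8, 11.5),  # excluding 80,000 Hours referrals
    "80k_involved": (59.2, 53.2),
    "acx_first_heard": (8.4, 4.6),    # excluding Astral Codex Ten referrals
    "acx_involved": (25.4, 16.8),
}


def drop(before, after):
    """Return (percentage-point drop, relative drop in %), one decimal each."""
    points = before - after
    return round(points, 1), round(100 * points / before, 1)


drops = {name: drop(*values) for name, values in checks.items()}

for name, (points, relative) in drops.items():
    print(f"{name}: -{points} points ({relative}% of the original share)")
```

On these figures the two "first heard" decreases are close in absolute terms (4.3 vs. 3.8 percentage points), while the Astral Codex Ten decreases are larger in relative terms because they start from smaller shares.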

This post was written by David Moss and Willem Sleegers. We thank all of the survey respondents for taking the time to complete the survey, as well as Laura Duffy and Oscar Delaney for their feedback on this post.

Rethink Priorities is a think-and-do tank dedicated to informing decisions made by high-impact organizations and funders across various cause areas. We invite you to explore our research database and stay updated on new work by subscribing to our newsletter.

Appendix

Appendix 1: Alternative year-over-year comparison of where respondents first heard of EA

Appendix 2: Composition of engagement levels within each source of where respondents first heard of EA

Appendix 3: Alternative year-over-year comparison of factors important for getting involved in EA

Appendix 4: Factors important for getting involved in EA by cohort (as a proportion of the number of respondents in each cohort)

Appendix 5: Referral exclusions

[1] Engagement was assessed with the following question: "How engaged do you consider yourself to be with effective altruism and the effective altruism community?"

Respondents could pick one of the following options:

- No engagement: I’ve heard of effective altruism, but do not engage with effective altruism content or ideas at all.

- Mild engagement: I’ve engaged with a few articles, videos, podcasts, discussions, and/or events on effective altruism (e.g., reading Doing Good Better or spending ~5 hours on the website of 80,000 Hours).

- Moderate engagement: I’ve engaged with multiple articles, videos, podcasts, discussions, and/or events on effective altruism (e.g., subscribing to the 80,000 Hours podcast or attending regular events at a local group). I sometimes consider the principles of effective altruism when I make decisions about my career or charitable donations.

- Considerable engagement: I’ve engaged extensively with effective altruism content (e.g., attending an EA Global conference, applying for career coaching, or organizing an EA meetup). I often consider the principles of effective altruism when I make decisions about my career or charitable donations.

- High engagement: I make heavy use of the principles of effective altruism when I make decisions about my career or charitable donations. I am heavily involved in the effective altruism community, perhaps helping to lead an EA group full time or working at an EA-aligned organization.

Low engagement was defined as picking one of the first three options and high engagement as picking either option 4 or 5.

[2] People might reasonably think of them telling their friends about EA as a form of outreach on their part. But, for our purposes here, we have classified this as not being a form of EA outreach, but just as representing the background passive growth of EA through social networks.

Thanks as always for this valuable data!

Given 80k is a large and growing source of people hearing about and getting involved in EA, some people reading this might be worried that 80k will stop contributing to EA's growth, given our new strategic focus on helping people work on safely navigating the transition to a world with AGI.

tl;dr I don't think it will stop, and might continue as before, though it's possible it will be reduced some.

More:

I am not sure whether 80k's contribution to building EA in terms of sheer numbers of people getting involved is likely to go down due to this focus vs. what it would otherwise be if we simply continued to scale our programmes as they currently are without this change in direction.

My personal guess at this time is that it will reduce at least slightly.

Why would it?

However, I do expect us to continue to significantly contribute to building EA – and we might even continue to do so at a similar level vs. before. This is for a few reasons:

This will probably lead directly and indirectly to a big chunk of our audience continuing to get involved in EA due to engaging with us. This is valuable according to our new focus, because we think that getting involved in EA is often useful for being able to contribute positively to things going well with AGI.

To be clear, we also think EA growing is valuable for other reasons (we still think other cause areas matter, of course!). But it's actually never been an organisational target[1] of ours to build EA (or at least it hasn't since I joined the org 5 years ago); growing EA has always been something we cause as a side effect of helping people pursue high impact careers (because, as above, we've long thought that getting involved in EA is one useful step for pursuing a high impact career!)

Note on all the above: the implications of our new strategic focus for our programmes are still being worked out, so it's possible that some of this will change.

Also relevant: FAQ on the relationship between 80k & EA (from 2023 but I still agree with it)

[1] Except to the extent that helping people into careers building EA constitutes helping them pursue a high impact career - & it is one of many ways of doing that (along with all the other careers we recommend on the site, plus others). We do also sometimes use our impact on the growth of EA as one proxy for our total impact, because the data is available, and we think it's often a useful step to having an impactful career, & it's quite hard to gather data on people we've helped pursue high impact careers more directly.

Thanks Arden!

I also agree that prima facie this strategic shift might seem worrying given that 80K has been the powerhouse of EA movement growth for many years.

That said, I share your view that growth via 80K might reduce less than one would naively expect. In addition to the reasons you give above, another consideration is our finding that a large percentage of people get into EA via 'passive' outreach (e.g. someone googles "ethical career" and finds the 80K website; for 80K specifically, about 50% of recruitment was 'passive'), rather than active outreach, and it seems plausible that much of that could continue even after 80K's strategic shift.

As noted elsewhere, we plan to research this empirically. Fwiw, my guess is that broader EA messaging would be better (on average and when comparing the best messaging from each) at recruiting people to high levels of engagement in EA (this might differ when looking to recruit people directly into AI related roles), though with a lot of variance within both classes of message.

I'm not sure the "passive" finding should be that reassuring.

I'm imagining someone googling "ethical career" 2 years from now and finding 80k, noticing that almost every recent article, podcast, and promoted job is based around AI, and concluding that EA is just an AI thing now. If they have no interest in AI based careers (either through interest or skillset), they'll just move on to somewhere else. Maybe they would have been a really good fit for an animal advocacy org, but if their first impressions don't tell them that animal advocacy is still a large part of EA they aren't gonna know.

It could also be bad even for AI safety: There are plenty of people here who were initially skeptical of AI x-risk, but joined the movement because they liked the malaria nets stuff. Then over time and exposure they decided that the AI risk arguments made more sense than they initially thought, and started switching over. In hypothetical future 80k, where malaria nets are de-emphasised, that person may bounce off the movement instantly.

I definitely agree that would eventually become the case (eventually all the older non-AI articles will become out of date). I'm less sure it will be a big factor 2 years from now (though it depends on exactly how articles are arranged on the website and so how salient it is that the non-AI articles are old).

I also think this is true in general (I don't have a strong view about the net balance in the case of 80K's outreach specifically).

Previous analyses we conducted suggested that over half of Longtermists (~60%) previously prioritised a different cause and that this is consistent across time.

You can see the overall self-reported flows (in 2019) here.

@titotal I'm curious whether or to what extent we substantively disagree, so I'd be interested in what specific numbers you'd anticipate, if you'd be interested in sharing.

I don't say strictly 0% only because I think there's always the possibility for a few unusual cases, e.g. someone is googling how to do good and happens across an old post about EAG or their inactive local group.

Over half of longtermists starting on something else is kind of insane. Although given the current landscape, I suspect many of those, if they entered now, would have entered directly into longtermism. Looking forward to seeing the data unfold!

Thanks, that's a useful reply, with your points 1 and 2 being quite reassuring.

Your no. 4 seems very optimistic. A narrower focus seems unlikely to increase interest over the whole spectrum of seekers coming to the site, when the default is 80k being the front page of the EA internet for all comers. The number of AI-interested people getting hooked increasing by more than the fallout for all other areas seems pretty unlikely.

And I can't really see a world where older people would be more attracted to a site which focuses on an emerging and largely young person's issue.

Thanks for sharing this. It was interesting to read.

I wonder if you wouldn’t mind sharing the rubric for EA involvement. What constitutes a highly engaged EA?

We used the same rubric as in previous analyses (e.g., see here), but it makes sense to repeat it so I also added a footnote.

1 post and 2 comments on the forum ;) @yanni kyriacos

This seems to be out of context?

Just a joke sorry for the confusion!

It’s odd to me that people say they “heard about EA” at EA Global. How’d they hear about EA Global, then? 🤔

Anecdote: I'm one of those people -- I would say I'd barely heard of EA / basically didn't know what it was before a friend who already knew of it suggested I come to an EA Global (I think at the time one got a free t-shirt for referring friends). We were both philosophy students and I studied ethics, so I think he thought I might be interested even though we'd never talked about EA.

I definitely agree that this seems unusual (and our results show that it is!). Still, it seems reasonable that a very small number of people might stumble across an EAGx first, as in Arden's anecdote below.

It seems like, from the chart in the appendix, that more active outreach sources produce higher-engagement EAs. Is this actually true, or does it reflect a confounder (such as age)? If true, it seems very surprising; I would have expected that people who sought out EA on their own would be the most engaged, because they want something from EA specifically. Maybe this has something to do with how engagement was measured (i.e. it seems high on sources that active outreach tries to get people to do, like contact with the EA community, rather than EA-endorsed behaviors like charitable donations)

Thanks for raising this interesting point!

If we look directly at the association between active outreach and levels of engagement, we see no strong relationship (aside from within the very small "No engagement with EA" category).

I agree that it's intuitively plausible, as you say, that people who actively sought out EA might be expected to be more inclined to high engagement.

But I think that whether the person encounters EA through active outreach (influencing selection) may often be in tension with whether the person encounters EA through a high-touch form of engagement (leading to different probability of continued engagement). For example, EA Groups are quite active outreach (ex hypothesi, leading to lower selection for EA inclination), but higher touch (potentially leading to people being more likely to get engaged), whereas a book is more passive outreach (ex hypothesi, leading to higher selection for EA inclination), but lower touch (and so may be less likely to facilitate engagement)[1].

There may be other differences between these particular two examples of course. For example, EA Groups and EA books might select in different ways.

Thank you for this analysis! While insightful, I found myself a bit puzzled. Are introductory fellowships and other courses run by local EA groups considered under "educational courses" or does that mean things like philosophy classes, where EA might be mentioned as a concept?

I am not sure what the primary outreach tools of other EA groups are, but for EA Estonia it is promoting our introductory courses/fellowships. This post made me wonder which category the course participants would choose as their first exposure to EA: would it be Facebook (since we promote the course there), articles/blogs/80k Hours/LessWrong (as reading these is part of the course), local EA groups (since the national group runs the course), educational courses (as mentioned above) or friends (about 1/3 of participants find the course via a friend's recommendation)?

The lack of mention of courses, aside from specifically EA Virtual Programs, makes me wonder if other EA groups take a different approach to attracting new members. Based on my rough estimates, >80% of our members found EA through our courses or became involved because of them. I would have expected to see “a course/fellowship run by a local EA group” as an option under "Factors important for getting involved."

Did anyone mention a course under the "Other" section? Were there any survey questions about this? If not, would you consider adding one to the next survey? As a community builder, I would find it very useful to see to what degree these fellowships and other courses play a role in engaging people, or whether we should consider other approaches. Alternatively, would it be possible to ask for country-specific data analysis?

There are two different things to consider here:

In most cases where someone joins EA through an activity run by their EA group, they'll select EA Group. I went through references to "group" in the comments of those who did not select EA Group: most (23/36) were not referring to an EA Group, 3 referred to a friend referring them to a group, 2 referred to seeing an ad for a group, 5 mentioned something else first introducing them to EA but a group being useful for them beginning to seriously engage, and the rest were miscellaneous/uncategorisable. I also went through the comments of those who did select EA Group, and 9/111 (8%) mentioned a fellowship. You can see a more detailed analysis of comments by those who selected EA Group in 2020 here, where 7% mentioned a fellowship, but a larger number mentioned an event or freshers' fair.

For Estonia, there are not very clear differences (p=0.023). This is from n=37 for Estonia, so we should not be very confident about the differences.
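For anyone wanting to sanity-check the comment tallies quoted above for the EA Group question, here is a minimal sketch in Python. The counts are taken from the comment; the category labels, variable names, and the choice of a Wilson interval are my own assumptions for illustration, not the method used in the survey analysis.

```python
from math import sqrt

# Counts quoted in the comment: respondents who mentioned "group"
# in free text but did NOT select EA Group (36 in total).
# The dictionary keys are illustrative labels, not survey categories.
non_selectors_total = 36
non_selectors = {
    "not referring to an EA group": 23,
    "friend referred them to a group": 3,
    "saw an ad for a group": 2,
    "introduced elsewhere, group helped later": 5,
}
# "The rest" in the comment is whatever remains after the named categories.
miscellaneous = non_selectors_total - sum(non_selectors.values())

# Respondents who DID select EA Group and mentioned a fellowship.
fellowship_mentions, selectors_total = 9, 111
share = fellowship_mentions / selectors_total


def wilson_interval(successes, n, z=1.96):
    """Approximate 95% Wilson score interval for a proportion (assumed method)."""
    p = successes / n
    denom = 1 + z**2 / n
    centre = (p + z**2 / (2 * n)) / denom
    half_width = z * sqrt(p * (1 - p) / n + z**2 / (4 * n**2)) / denom
    return centre - half_width, centre + half_width


low, high = wilson_interval(fellowship_mentions, selectors_total)
print(f"miscellaneous/uncategorisable: {miscellaneous}")
print(f"fellowship share: {share:.1%} (approx. 95% interval {low:.1%} to {high:.1%})")
```

With only 9 mentions out of 111 comments, the interval is wide, which is one reason to treat the fellowship share as a rough indication rather than a precise estimate.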