Epistemic status: I wrote this six months ago, but I never felt satisfied with it, so I kind of forgot about it. At this point, I'll just release it. It is similar to Chris Olah's article on research debt, but it has a different perspective on presenting the mechanisms.

(The examples in this post are not meant to be serious proposals.)

This post is meant to bring forward the causal mechanisms of clearer communication and the potential of “Distillations”. Distillation here will be defined as the process of re-organising existing information and ideas into a new perspective. The post is partly meant to increase the clarity of what a distillation might be, and partly meant to give some more insight into how it may speed up research.

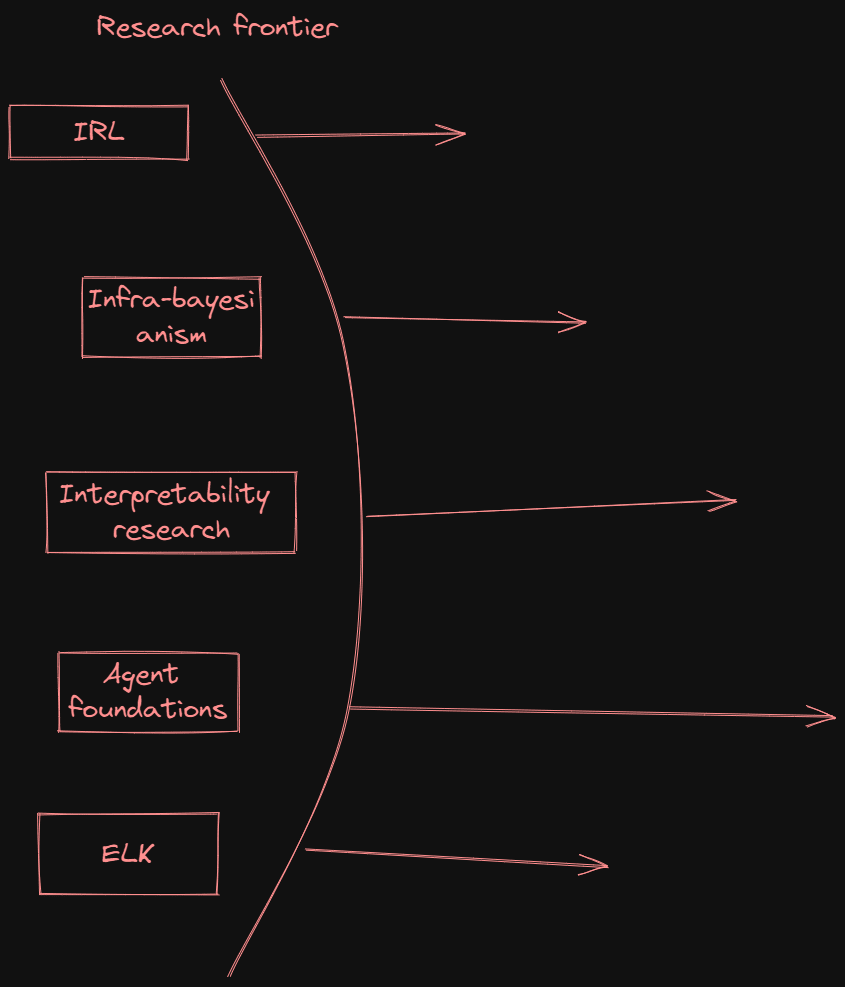

A model of analogous idea generation in AI safety

This is a simple model of the forefront of research in the AI safety field:

The frontier moves forward as different agendas make progress in their own domains. However, just as on a battlefront, communication is very important: if we don’t use the progress made in other domains, we might waste a lot of time duplicating work.

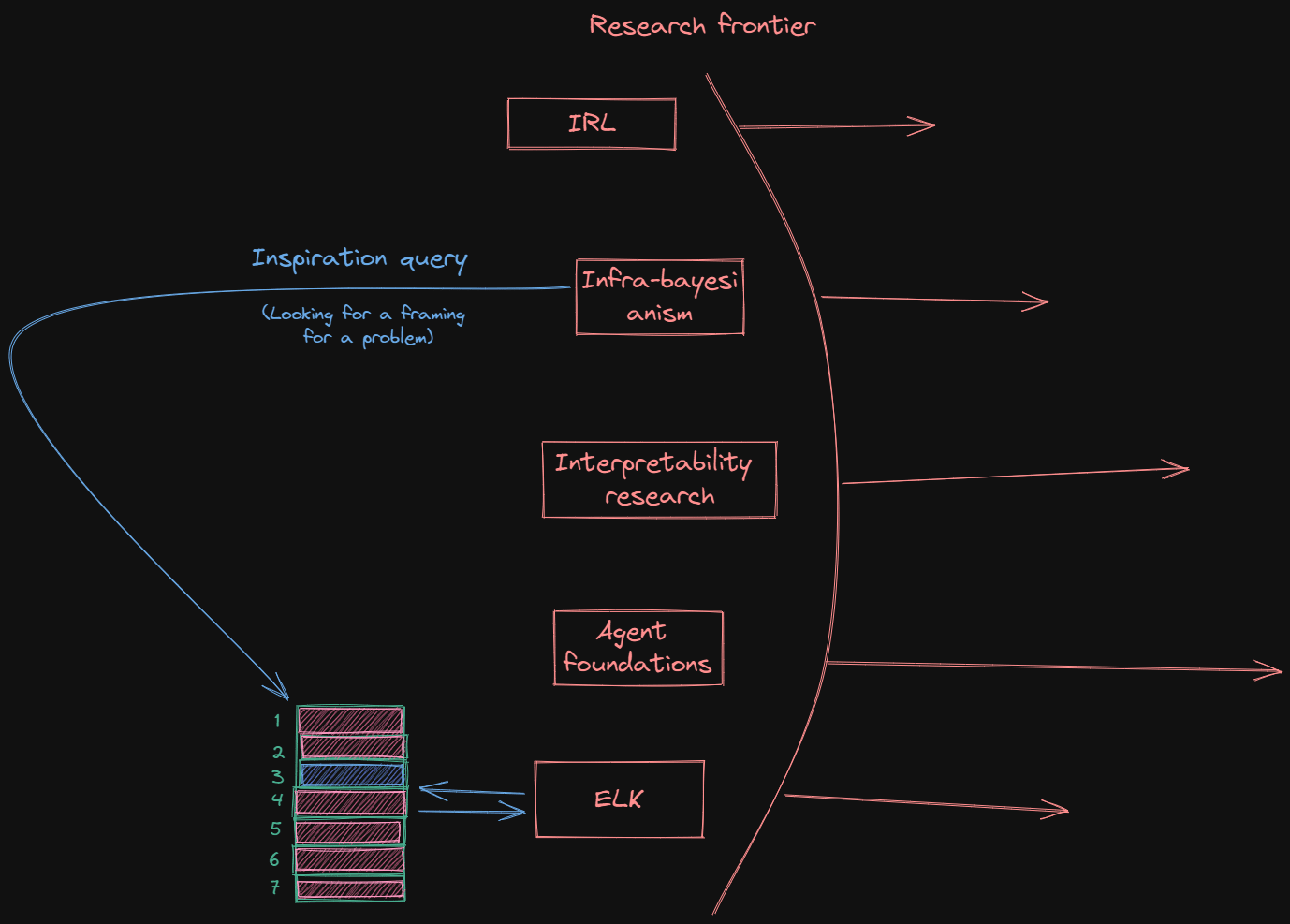

For example, suppose we're working on infrabayesianism and trying to solve a complicated problem regarding ontology verification (not sure if this is an actual thing being worked on in infrabayesianism). We then try to take inspiration from ELK, as a lot of work on ontologies has been done there. This might look something like this:

Figure 2: Progress in alignment

In our fictional example, ELK has a research stack of 7 different perspectives. The red blocks are perspectives that don’t shed any light on the problem, whilst the blue lens helps with progress on it. A visualization of this is the following:

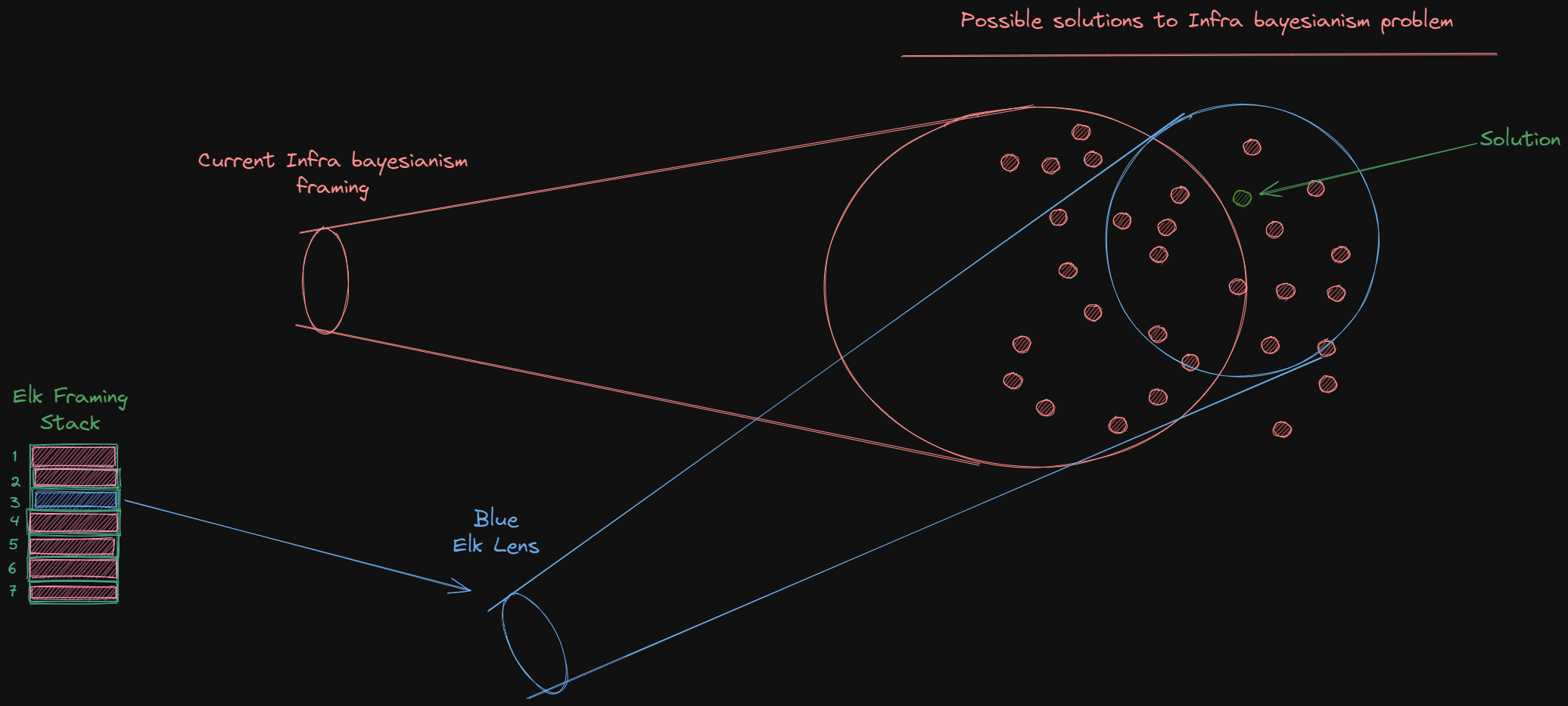

Figure 3: Very simplified perspectives on a problem

Here we can see the old perspective, the old framing of the problem, in orange. In our fictional example, the current orange infrabayesian frame might sound something like “This is a one-to-many problem where we have to apply causal inference to back-chain; to formalise this, we use causal scrubbing with Knightian uncertainty.”

Whilst the new blue approach from the ELK direction might sound something like “If we have a neural network that could spot whenever a matrix describing the original NN internals is changed too much, then we could theoretically spot distribution shifts.”

The framing of the problem now points towards using completely different mathematics and might now lead to a possible solution with the toolkit of infrabayesianism.

This depends on whether you can find a framing that makes the problem easier to solve. In this model, you do this by looking at similar problems that have been solved before and trying to extrapolate their methodology to your current problem. Knowing where to look for information is what I believe people call research taste. The faster you can retrieve information, the more perspectives you can take. This is where distillation comes in.

How distillation increases analogous idea generation

The following model is what I believe creates the most value when it comes to distillations:

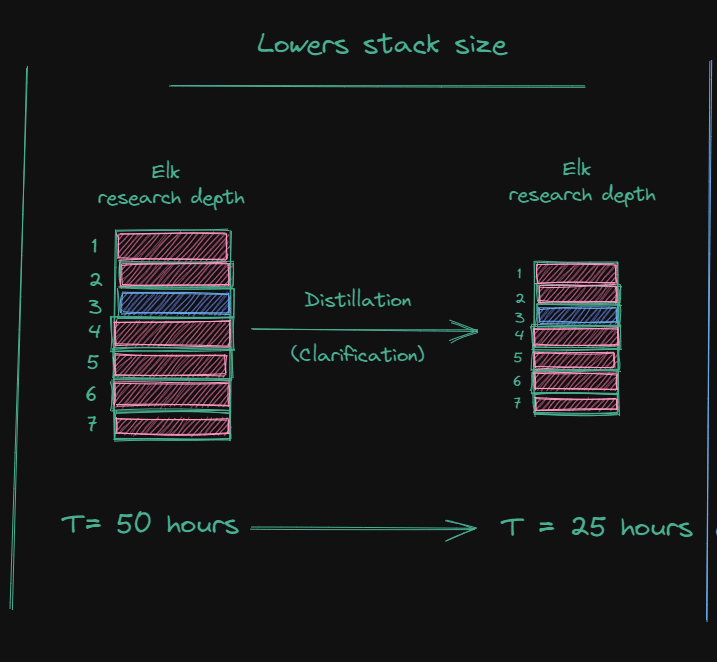

Through the process of summarisation and clarification, distillation lowers the stack size or the research depth of an area:

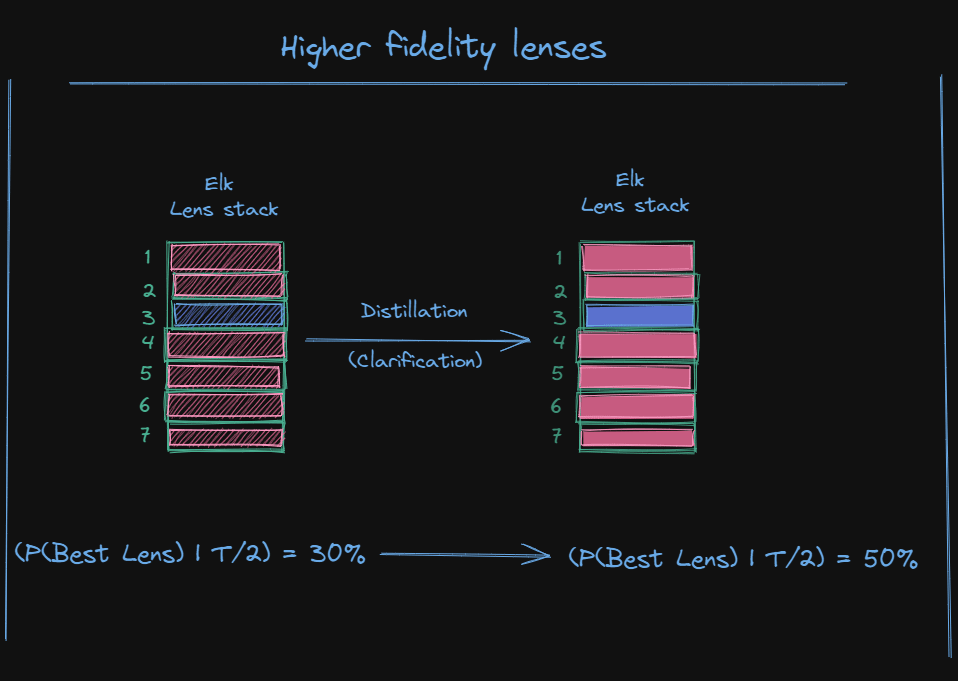

Through the process of clarification, distillation makes it clearer in what ways different lenses can be used and more obvious what they’re useful for:

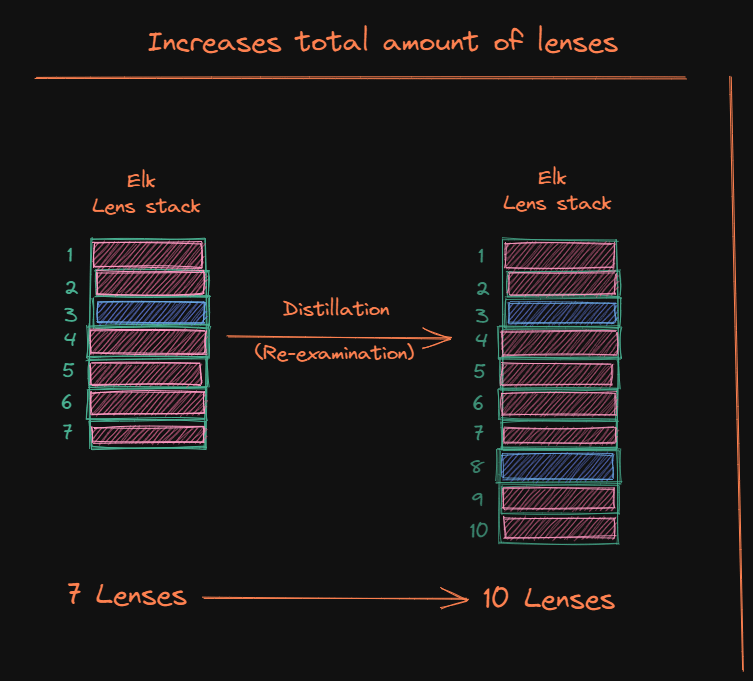

Through re-examination, distillation can generate new lenses from which you can view problems. I will go through the mechanisms behind each of these models below.

How distillation can lower research depth

Distillation allows for more straightforward perspectives on the research people are doing, as the material is specifically rewritten to be easier to understand. An example of this is how much easier it is to understand general relativity now compared to when Einstein first came up with the model. We have analogies that give great intuitions for how spacetime is curved, and today even a 13-year-old can understand the intuitive parts of the theory.

An example closer to the AI safety field is AGISF and how it has simplified how people get introduced to the field. Instead of having to read scattered blog posts and papers to learn about alignment, we now have a streamlined cohort where you can talk about the content with others and learn in a linear way.

How distillation can clarify lenses

This type of distillation is closer to research than summarization is, as it is about deconfusing existing concepts and research questions. An example of doing this is asking, “What exactly are we asking when we wonder whether a research agenda generalises past a sharp left turn?”

An example of this work happening in the past is Thomas Larsen and Eli Lifland’s post going through what different organisations in alignment are working on. It doesn’t necessarily deconfuse the specific terminology that we use, but it gives a map of the different areas of research, which also makes it clearer what lenses different people use. They have deconfused the general lens of the alignment field.

Having shared nomenclature and definitions of problems or understanding of disagreements makes the debate a lot more productive and ensures that we don’t talk past each other.

How distillation can lead to new lenses

The process of lens formation is pretty similar to the process of clarifying lenses, as it involves looking at what work people have done before and thinking of new ways to re-examine it. This essentially takes John Wentworth’s framing exercise and turns the dial up to the max.

In practice, this could look like taking a research agenda in deep learning theory, such as infinite-width neural networks and the linear Taylor approximation of their dynamics, and then applying it to ELK. Could this approach yield a way of ontology verification that works in a particular set of cases?
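For concreteness, the linearisation mentioned above is usually written as a first-order Taylor expansion of the network output around its initial parameters. This is only a sketch to fix notation: the symbols $f$ (the network), $x$ (an input), $\theta_0$ (parameters at initialisation) and $\theta_t$ (parameters at training step $t$) are assumptions introduced here, not notation from ELK or from any specific agenda.

$$
f(x; \theta_t) \approx f(x; \theta_0) + \nabla_\theta f(x; \theta_0)^\top (\theta_t - \theta_0)
$$

In the infinite-width literature, this approximation is argued to become increasingly accurate during training as the network gets wider, under suitable assumptions about parameterisation and learning rate. The right-hand side is linear in $\theta_t$, which is what might make the linearised network an easier object to reason about than the original one.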

The end product of the re-examination distillation is usually a set of open questions, which in this example might be something like the following:

Can we generate a set of axioms that make a linearisation of the original neural network verifiable?

What parameters don’t approach 0 in the Taylor expansion of the neural network?

Is there a way to approximate these?

If we assume some things about neural circuits, can we identify something that is similar to an attractor that only changes under distributional shifts in the original ELK network?

These questions can then be further expanded by clarification of the lens, making the new lenses clearer and more succinct.

What is the actual difference from doing research?

This type of work lacks the continuation and formalisation of the research ideas. It doesn’t say anything about the answers to the questions that you ask; instead, it looks for the right question to ask, which seems very important when doing science.

Consequences of Distillation

Distillation has multiplicative benefits for the field of alignment. It reduces duplicated work, speeds up finding the right question to ask, and makes it easier for new people to get into the field.

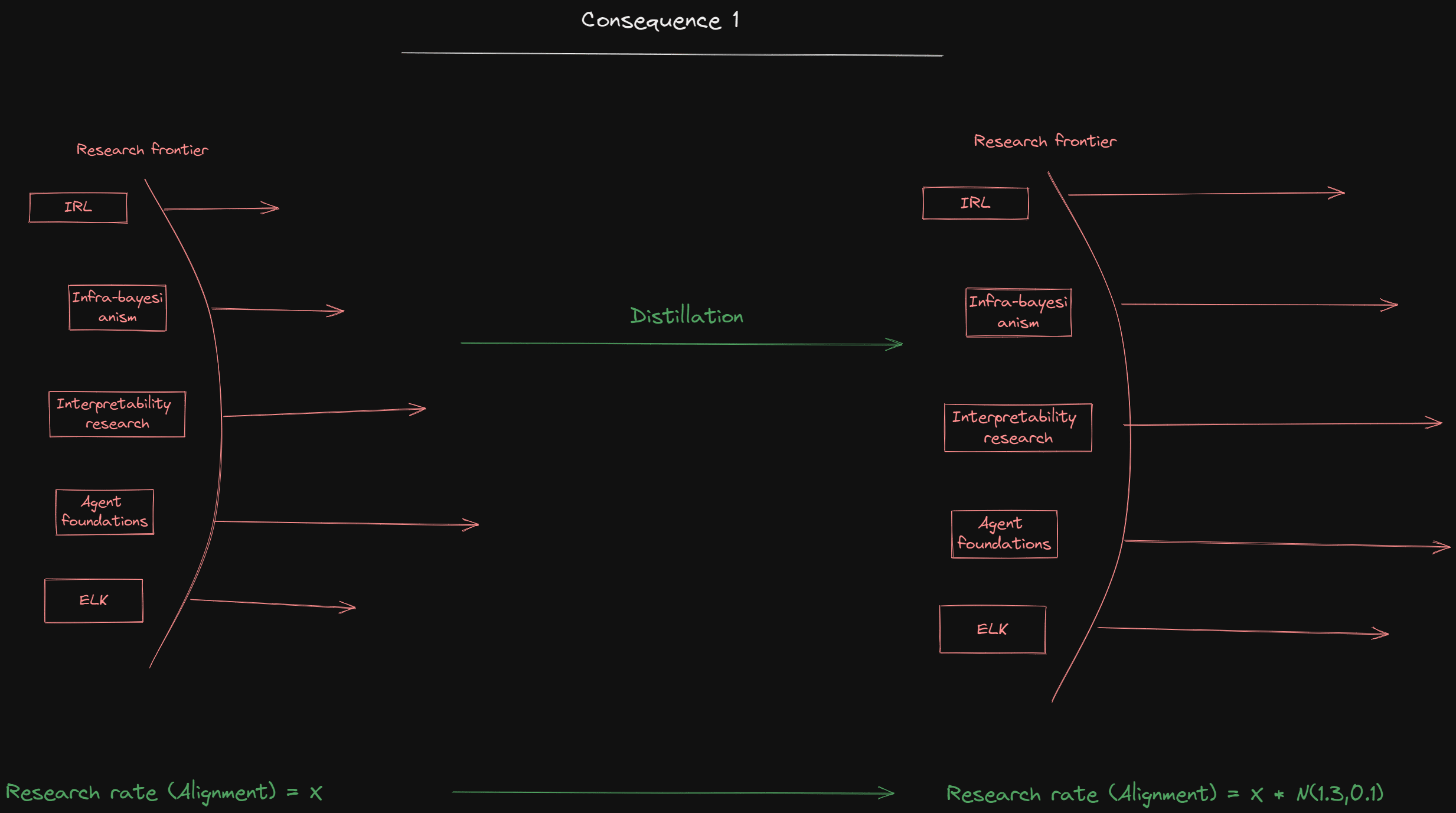

Consequence 1: Increased research speed

Figure 5: Distillation increases the speed of research (I have shallow confidence in that probability distribution btw)

This happens due to the increased availability of new lenses and the retrieval speed increase that follows.

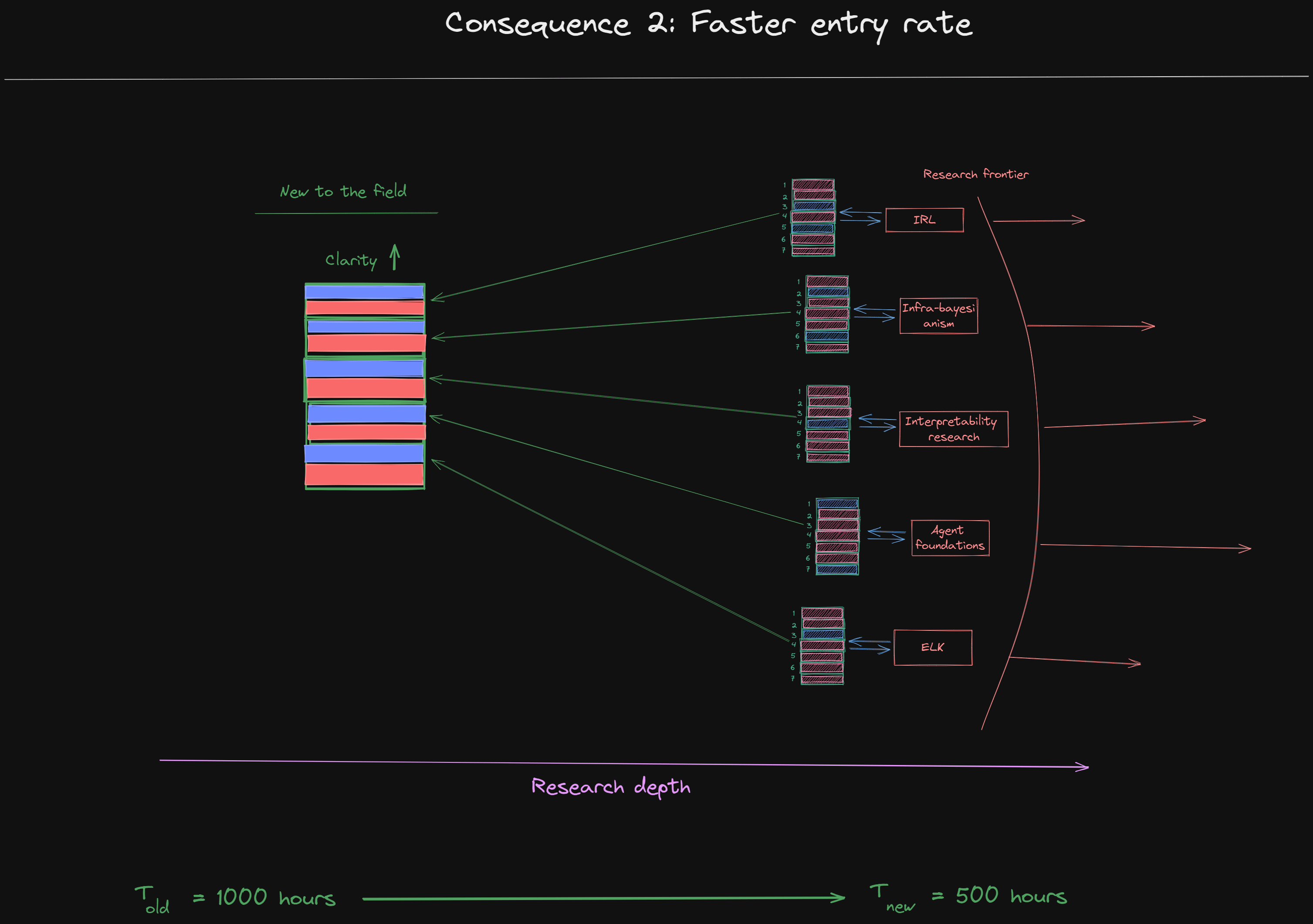

Consequence 2: Faster entry rate

Another consequence is that the entry rate to the field becomes higher, as people don’t have to spend as much time going through confusing explanations.

Figure 6: How distillation decreases the entry requirements

This will increase the speed of research in the field, as we will have researchers ready to do research much earlier than today.

Conclusion

There are multiple underlying mechanisms through which distillation is helpful for any field of research: it lowers research depth, clarifies existing lenses, and generates new ones.

Being a distiller is like building roads and infrastructure for the Romans; you might not be Julius Caesar, but without you, there would be no roads to get the latest news to the frontiers. There would also be fewer recruits, and the army's mobility would be lower. So, for the motherland, start distilling. Invictus!

Hi Jonas,

I thought this to be a really clear explainer, thanks! I found the visuals pretty useful.

Glad to hear it!