Let’s reflect on where we’ve updated our views, and how we could improve our epistemics to avoid making the same mistakes again!

How to play:

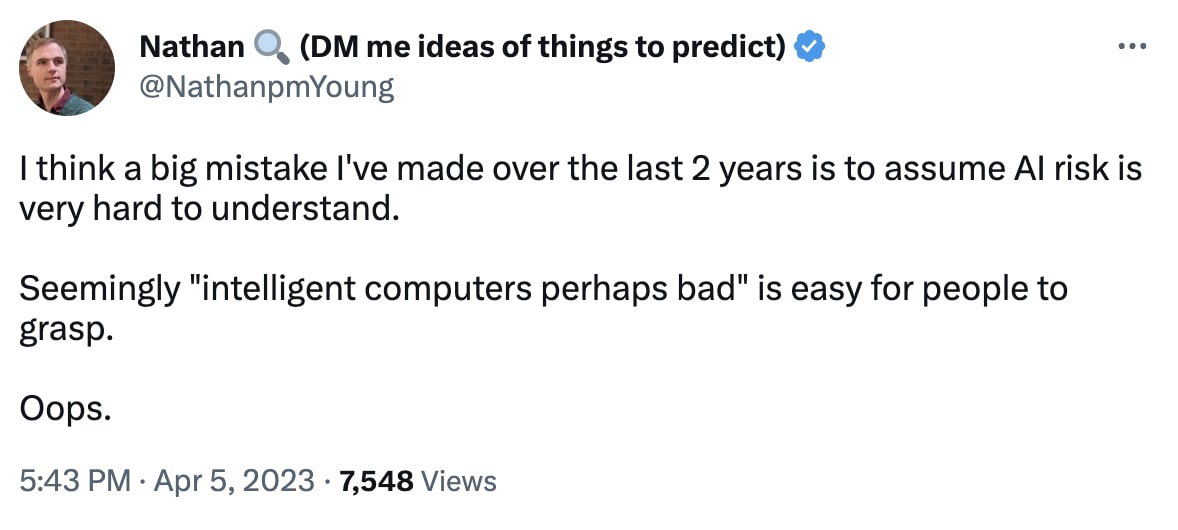

- Search: Look through your past comments and posts to find a claim you made that you’ve since updated on and no longer endorse. (Or think of a view you’ve updated on that you didn’t post here.)

- Share: Add a comment to this post, quoting/describing the original claim you made. Explain why you don’t believe it any more or why you think you made the mistake you made

  - What mental motion were you missing?

  - Was there something you were trying to protect?

- Reflect: Reply to your own comment or anyone else’s, reflecting about how we can avoid making these mistakes in the future, and giving tips about what's worked for you

Ground rules:

- Be supportive: It is commendable to publicly share when you’ve changed your views!

- Be constructive: Focus on specific actions we can take to avoid the mistake next time

- Stay on-topic: This is not the place to discuss whether the original claim was right or wrong; for that, you can reply to the comment in its original context. (Though stating your view in the context of giving thoughts on people’s updates might make sense.)

Let’s have fun with this and reflect on how we can improve our epistemics!

Inspired by jacobjacob’s “100 ways to light a candle” babble challenge.

There’s also the consideration that alignment still seems really hard to me. I’ve watched the space for 8 years, and alignment hasn’t come to look any less hard to me over that time span. If something is about to be solved or probably easier than expected, 8 years should be enough to see a bit of a development from confusion to clarity, from overwhelm to research agenda, etc. Quite to the contrary, there is much more resignation going around now, so alignment is probably a bigger problem than the average PhD thesis. (Then again the resignation may be the result of shortening timelines rather than anything about the problem.)

I’m not quite sure in which direction that consideration pushes, but I think it makes s-risks a bit less likely again? Ideally we’d just solve alignment, but failing that, it could mean (1) we’re unrepresentatively dumb and the problem is easy or (2) the problem is hard. Option 1 would suck because now alignment is in the hands of all the random AIs that won’t want to align their successors with human values. S-risk now depends on how many AIs there are and how slow the last stretch to superintelligence will be. Option 2 is a bit better because AIs also won’t solve it, so that the future is in the hands of AIs who don’t mind value drift or failed to anticipate it. That sounds bad at first, but it’s a bit of a lesser evil, I think, because there’s no reason why that would happen bunched up for several AIs at one point in time. So the takeoff would have to be very slow for it to still be multipolar.

So yeah, a bunch more worry here, but at least the factors are not all pushing in the same direction.